1. Cause of the conflict

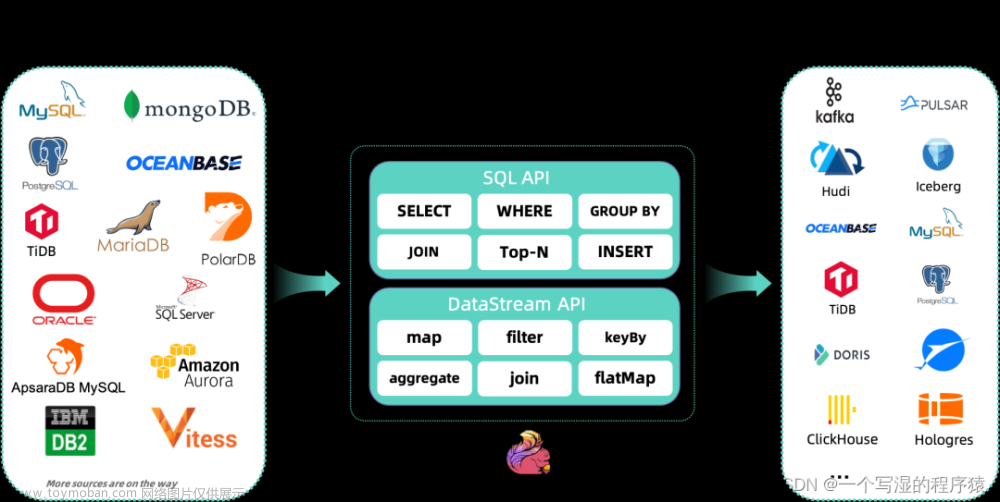

With Flink CDC 2.2.0, the job fails because the ThreadFactoryBuilder class cannot be found:

java.lang.NoClassDefFoundError: org/apache/flink/shaded/guava18/com/google/common/util/concurrent/ThreadFactoryBuilder

This happens because Flink CDC 2.2.0 depends on flink-shaded-guava version 18.0-13.0:

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-shaded-guava</artifactId>

<version>18.0-13.0</version>

</dependency>

Flink 1.14.4, on the other hand, uses flink-shaded-guava version 30.1.1-jre-14.0:

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-shaded-guava</artifactId>

<version>30.1.1-jre-14.0</version>

</dependency>

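In the project, version 30.1.1-jre-14.0 is the one that actually gets used, so the classes from version 18.0-13.0, which live under the org.apache.flink.shaded.guava18 package named in the error, are not on the classpath, and the NoClassDefFoundError above is thrown.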
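One way to confirm which shaded guava version a jar actually provides is to look at the package names in its class listing. The sketch below is not part of the original write-up: `shaded_guava_versions` is a hypothetical helper name, the jar path in the usage comment is a placeholder, and it assumes the listing comes from a tool such as `unzip -l` or `jar tf`.

```shell
# Print the distinct shaded guava package versions (guava18, guava30, ...)
# that appear in a jar's class listing read from stdin.
shaded_guava_versions() {
  # Keep only the shaded package prefix, de-duplicate, then strip the path.
  grep -o 'org/apache/flink/shaded/guava[0-9]*' | sort -u | sed 's#.*/##'
}

# Usage (the jar path is a placeholder, not a real location):
#   unzip -l /path/to/some.jar | shaded_guava_versions
```

If the connector references guava18 but the listing of the jars on the classpath only shows guava30, that matches the mismatch described above.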

With the cause identified, we can work out a fix.

2. Solution

We need to compile Flink CDC from source ourselves. The steps are as follows:

- Download the source archive and extract it:

[root@bigdata001 ~]# wget https://github.com/ververica/flink-cdc-connectors/archive/refs/tags/release-2.2.0.tar.gz

[root@bigdata001 ~]#

[root@bigdata001 ~]# tar -zxvf release-2.2.0.tar.gz

[root@bigdata001 ~]#

[root@bigdata001 ~]# cd flink-cdc-connectors-release-2.2.0/

[root@bigdata001 flink-cdc-connectors-release-2.2.0]#

- Change the guava version in pom.xml; after the change it reads:

[root@bigdata001 flink-cdc-connectors-release-2.2.0]# cat pom.xml

...... (part omitted) ......

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-shaded-guava</artifactId>

<version>30.1.1-jre-14.0</version>

</dependency>

...... (part omitted) ......

[root@bigdata001 flink-cdc-connectors-release-2.2.0]#

-

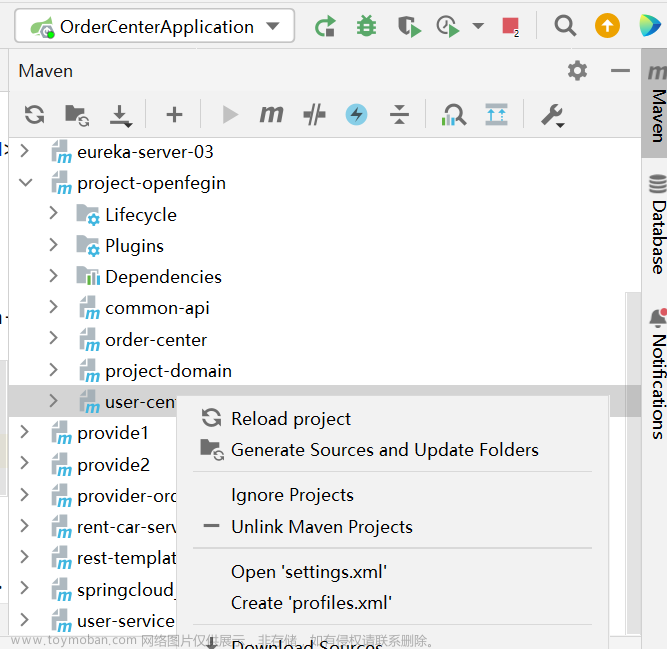

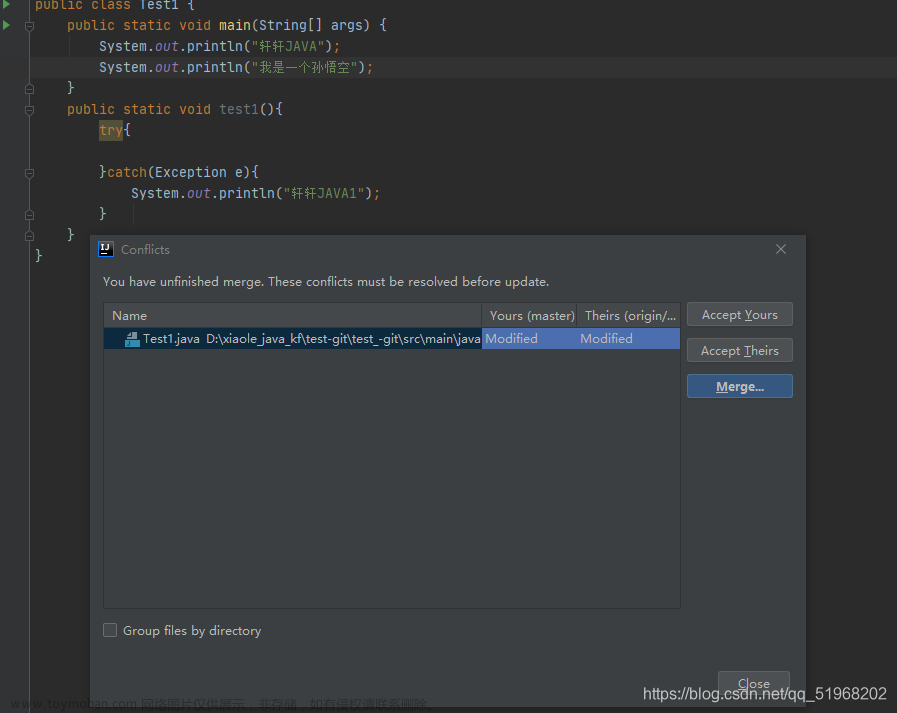

修改源码:将flink-cdc-connectors-release-2.2.0目录拷贝到本地,用IDEA打开,按Ctrl + Shift + R将所有guava18替换成guava30,然后替换Centos7上的flink-cdc-connectors-release-2.2.0目录

- Compile the source:

[root@bigdata001 flink-cdc-connectors-release-2.2.0]# mvn clean install -Dmaven.test.skip=true

[INFO] Scanning for projects...

[INFO] ------------------------------------------------------------------------

[INFO] Reactor Build Order:

[INFO]

[INFO] flink-cdc-connectors [pom]

[INFO] flink-connector-debezium [jar]

[INFO] flink-cdc-base [jar]

[INFO] flink-connector-test-util [jar]

[INFO] flink-connector-mysql-cdc [jar]

[INFO] flink-connector-postgres-cdc [jar]

[INFO] flink-connector-oracle-cdc [jar]

[INFO] flink-connector-mongodb-cdc [jar]

[INFO] flink-connector-oceanbase-cdc [jar]

[INFO] flink-connector-sqlserver-cdc [jar]

[INFO] flink-connector-tidb-cdc [jar]

[INFO] flink-sql-connector-mysql-cdc [jar]

[INFO] flink-sql-connector-postgres-cdc [jar]

[INFO] flink-sql-connector-mongodb-cdc [jar]

[INFO] flink-sql-connector-oracle-cdc [jar]

[INFO] flink-sql-connector-oceanbase-cdc [jar]

[INFO] flink-sql-connector-sqlserver-cdc [jar]

[INFO] flink-sql-connector-tidb-cdc [jar]

[INFO] flink-cdc-e2e-tests [jar]

[INFO]

[INFO] -----------------< com.ververica:flink-cdc-connectors >-----------------

[INFO] Building flink-cdc-connectors 2.2.0 [1/19]

...... (part omitted) ......

[INFO] ------------------------------------------------------------------------

[INFO] Reactor Summary for flink-cdc-connectors 2.2.0:

[INFO]

[INFO] flink-cdc-connectors ............................... SUCCESS [ 2.666 s]

[INFO] flink-connector-debezium ........................... SUCCESS [ 4.044 s]

[INFO] flink-cdc-base ..................................... SUCCESS [ 2.823 s]

[INFO] flink-connector-test-util .......................... SUCCESS [ 1.013 s]

[INFO] flink-connector-mysql-cdc .......................... SUCCESS [ 3.430 s]

[INFO] flink-connector-postgres-cdc ....................... SUCCESS [ 1.242 s]

[INFO] flink-connector-oracle-cdc ......................... SUCCESS [ 1.192 s]

[INFO] flink-connector-mongodb-cdc ........................ SUCCESS [ 1.806 s]

[INFO] flink-connector-oceanbase-cdc ...................... SUCCESS [ 1.285 s]

[INFO] flink-connector-sqlserver-cdc ...................... SUCCESS [ 0.747 s]

[INFO] flink-connector-tidb-cdc ........................... SUCCESS [ 36.752 s]

[INFO] flink-sql-connector-mysql-cdc ...................... SUCCESS [ 8.316 s]

[INFO] flink-sql-connector-postgres-cdc ................... SUCCESS [ 5.348 s]

[INFO] flink-sql-connector-mongodb-cdc .................... SUCCESS [ 5.176 s]

[INFO] flink-sql-connector-oracle-cdc ..................... SUCCESS [ 7.375 s]

[INFO] flink-sql-connector-oceanbase-cdc .................. SUCCESS [ 5.118 s]

[INFO] flink-sql-connector-sqlserver-cdc .................. SUCCESS [ 4.721 s]

[INFO] flink-sql-connector-tidb-cdc ....................... SUCCESS [ 18.166 s]

[INFO] flink-cdc-e2e-tests ................................ SUCCESS [ 2.886 s]

[INFO] ------------------------------------------------------------------------

[INFO] BUILD SUCCESS

[INFO] ------------------------------------------------------------------------

[INFO] Total time: 01:54 min

[INFO] Finished at: 2022-06-09T09:08:57+08:00

[INFO] ------------------------------------------------------------------------

[root@bigdata001 flink-cdc-connectors-release-2.2.0]#

- Copy flink-sql-connector-mysql-cdc/target/flink-sql-connector-mysql-cdc-2.2.0.jar to your local machine and reference it from the project, and comment out the flink-connector-mysql-cdc dependency in the project's original pom.xml. The project can then be run locally.

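- If you would rather not rely on a self-built artifact everywhere, use the self-compiled flink-sql-connector-mysql-cdc-2.2.0.jar only for local development, and use the jar published by the Flink CDC project on GitHub in the production environment.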
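The guava18 to guava30 replacement done in IDEA in the steps above could also be scripted directly on the build host, which avoids copying the directory back and forth. This is only a sketch and not the procedure the steps above actually used: `replace_guava18` is a hypothetical helper name, and it assumes GNU grep and GNU sed (as shipped with CentOS 7). It only rewrites the `guava18` string; the flink-shaded-guava version in pom.xml still has to be changed as described earlier.

```shell
# Replace every occurrence of "guava18" with "guava30" in the text files
# under the given directory. Assumes GNU grep and GNU sed.
replace_guava18() {
  local src_dir="$1"
  # -r: recurse, -l: print only the names of matching files, -I: skip binaries
  grep -rlI 'guava18' "$src_dir" | while IFS= read -r file; do
    sed -i 's/guava18/guava30/g' "$file"
  done
}

# Usage, run from the directory that contains the unpacked source:
#   replace_guava18 flink-cdc-connectors-release-2.2.0
#   grep -rn 'guava18' flink-cdc-connectors-release-2.2.0   # should print nothing
```

Running the final `grep` before `mvn clean install` is a cheap check that no guava18 reference was missed.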

Article source: https://www.toymoban.com/news/detail-401247.html