K8s Management System Project [Jenkins Pipeline K8s Environment – Application Deployment]

![K8s management system (Go): Jenkins Pipeline deployment](https://imgs.yssmx.com/Uploads/2023/04/401963-1.png)

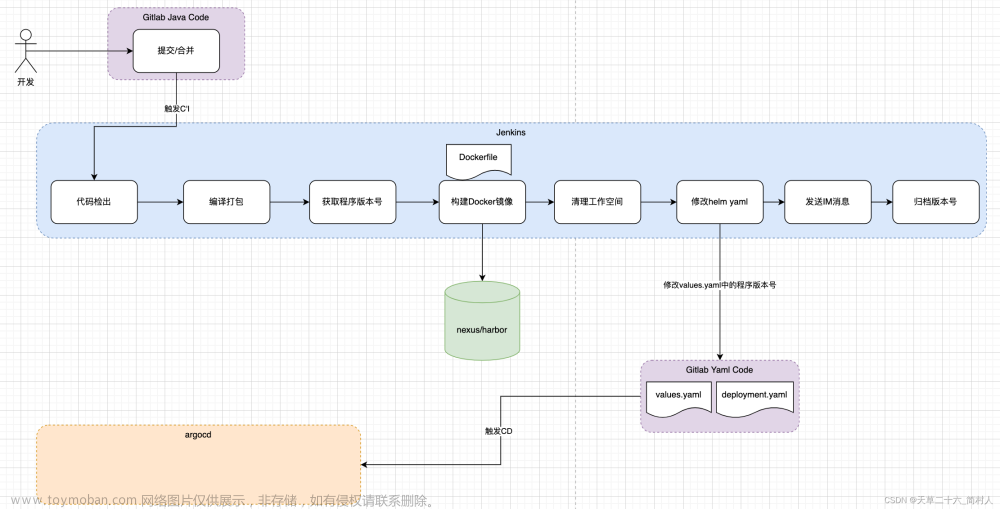

1. k8s-plantform-api-Pipeline

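In practice, the front end and the back end are often written by different people. Once the API is finished it usually changes little, while the web part changes frequently, so the API and the web front end are built by two separate pipelines.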

1.1 Git Repository

- `docker`: files used to build the image
- `k8s-plantform-api`: the API source code
- `Jenkinsfile`: the pipeline definition
- `yaml`: manifests that create the k8s-plantform-api resources in Kubernetes

![k8s-plantform-api Git repository layout](https://imgs.yssmx.com/Uploads/2023/04/401963-2.png)

1.1.1 docker Directory

1.1.1.1 Dockerfile

```dockerfile
# Base image
FROM centos:7.9.2009
#FROM harbor.intra.com/k8s-dashboard/api:v1

# Maintainer
LABEL maintainer="qiuqin <13917099322@139.com>"

# Go module settings, visible to every later RUN step and at runtime
ENV GO111MODULE=on \
    GOPROXY=https://goproxy.cn

# Copy the application. The pipeline copies k8s-plantform-api to docker/k8s-plantform
# and builds with docker/ as the context, so the source path is relative to docker/.
# Paths outside the build context (../) cannot be copied.
COPY k8s-plantform/ /data/k8s-plantform/

# Switch yum to the Aliyun mirror, install Go and download the modules
RUN rm -f /etc/yum.repos.d/*.repo && \
    curl -o /etc/yum.repos.d/CentOS-Base.repo https://mirrors.aliyun.com/repo/Centos-7.repo && \
    rpm --import https://mirror.go-repo.io/centos/RPM-GPG-KEY-GO-REPO && \
    curl -s -o /etc/yum.repos.d/go-repo.repo https://mirror.go-repo.io/centos/go-repo.repo && \
    yum install -y go && \
    cd /data/k8s-plantform && \
    go mod tidy

WORKDIR /data/k8s-plantform

COPY start.sh /data/k8s-plantform/
RUN chmod +x /data/k8s-plantform/start.sh

# Expose the API port
EXPOSE 9091

# Start the application
CMD ["/data/k8s-plantform/start.sh"]
```

1.1.1.2 start.sh

```bash
#!/bin/bash
# Go module settings for this process
export GO111MODULE=on
export GOPROXY=https://goproxy.cn

CONF=/data/k8s-plantform/config/config.go

# Substitute the values provided by the ConfigMap into config.go; unset values are skipped.
# '|' is the sed delimiter so values containing '/' are safe; values containing
# '|', '&' or '\' would still need escaping.
[ -n "${Db_Ip}" ]   && sed -i "s|Db_Ip|${Db_Ip}|g"     "$CONF"
[ -n "${Db_Port}" ] && sed -i "s|Db_Port|${Db_Port}|g" "$CONF"
[ -n "${Db_User}" ] && sed -i "s|Db_User|${Db_User}|g" "$CONF"
[ -n "${Db_Pass}" ] && sed -i "s|Db_Pass|${Db_Pass}|g" "$CONF"

cd /data/k8s-plantform
# exec so the Go process receives the container's stop signals
exec go run main.go
```
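The substitution above splices each value into a sed expression, so a value containing the sed delimiter, `&` or `\` breaks the command or is silently mangled. As a sketch only (not part of the original project), the same placeholder replacement can be done with Go's `strings.NewReplacer`, which treats values literally and replaces in a single pass. The placeholder names come from `config.go`; skipping unset variables mirrors the `[ -n ... ] &&` guards.

```go
package main

import (
	"fmt"
	"os"
	"strings"
)

// placeholders are the tokens written into config.go; each is also the name
// of the environment variable (from the ConfigMap) that replaces it.
var placeholders = []string{"Db_Ip", "Db_Port", "Db_User", "Db_Pass"}

// render replaces every placeholder whose variable is set and non-empty.
// Unset placeholders are left untouched, like the guards in start.sh.
func render(src string, lookup func(string) (string, bool)) string {
	var pairs []string
	for _, name := range placeholders {
		if v, ok := lookup(name); ok && v != "" {
			pairs = append(pairs, name, v)
		}
	}
	if len(pairs) == 0 {
		return src
	}
	// Values are inserted literally: '/', '&' or '\' need no sed-style escaping.
	// A '"' in a value would still break the Go string literal itself.
	return strings.NewReplacer(pairs...).Replace(src)
}

func main() {
	src := "DbHost = \"Db_Ip\"\nDbPort = Db_Port\nDbPwd = \"Db_Pass\""
	fmt.Println(render(src, os.LookupEnv))
}
```

Because the replacer makes one pass over the input, a value that happens to contain another placeholder name is not substituted a second time, which sequential sed calls cannot guarantee.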

1.1.2 k8s-plantform-api Directory

1.1.2.1 config.go

The database settings are passed into the container through a ConfigMap and substituted into this file by start.sh.

k8s-plantform-api/config/config.go

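```go
package config

import "time"

const (
	// Listen address
	ListenAddr = "0.0.0.0:9091"

	// Path of the kubeconfig file
	Kubeconfig = "config/config"

	// Number of lines shown when tailing pod logs
	PodLogTailLine = 2000

	// Login username and password
	AdminUser = "admin"
	AdminPwd  = "123456"

	// Database settings. Db_Ip, Db_Port, Db_User and Db_Pass are placeholders
	// that start.sh replaces with values from the ConfigMap. Db_Port is unquoted
	// so it becomes an integer constant after substitution; until then this
	// file does not compile.
	DbType = "mysql"
	DbHost = "Db_Ip"
	DbPort = Db_Port
	DbName = "k8s_dashboard"
	DbUser = "Db_User"
	DbPwd  = "Db_Pass"

	// Whether to print MySQL debug SQL logs
	LogMode = false

	// Connection pool settings
	MaxIdleConns = 10               // maximum idle connections
	MaxOpenConns = 100              // maximum open connections
	MaxLifeTime  = 30 * time.Second // maximum connection lifetime
)
```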

1.1.3 Jenkinsfile

```groovy
pipeline {
    agent any

    environment {
        Harbor_Addr = '192.168.31.104'
        Username = 'admin'
        Passwd = 'root123'
        Port = '9091'
    }

    stages {
        stage('Clone code') {
            steps {
                checkout([$class: 'GitSCM', branches: [[name: '*/main']], extensions: [], userRemoteConfigs: [[credentialsId: '3c67dc4c-db8a-4c78-8278-19cf9eca88ce', url: 'http://192.168.31.199/root/k8s-plantform-api.git']]])
            }
        }

        stage('SonarQube') {
            steps {
                // Property names are case-sensitive: sonar.projectName, not sonar.projectname
                sh '/var/jenkins_home/sonar-scanner/bin/sonar-scanner -Dsonar.projectName=${JOB_NAME} -Dsonar.projectKey=${JOB_NAME} -Dsonar.sources=./ -Dsonar.login=cdd09d5224d8623e4931bcf433274ae996c746f3'
            }
        }

        stage('Build image and push to Harbor') {
            steps {
                // Remove the copy from a previous build so cp does not nest directories.
                // \cp bypasses any cp alias; the shell expands the environment block values.
                // The image must be tagged with the same tag it was built with.
                sh '''rm -rf docker/k8s-plantform
                \\cp -R k8s-plantform-api docker/k8s-plantform
                docker build -t k8sapi:${BUILD_TIMESTAMP} docker/
                echo "${Passwd}" | docker login -u "${Username}" --password-stdin ${Harbor_Addr}
                docker tag k8sapi:${BUILD_TIMESTAMP} ${Harbor_Addr}/k8s-dashboard/k8sapi:${BUILD_TIMESTAMP}
                docker push ${Harbor_Addr}/k8s-dashboard/k8sapi:${BUILD_TIMESTAMP}'''
            }
        }

        stage('Push yaml to k8s') {
            steps {
                sshPublisher(publishers: [sshPublisherDesc(configName: 'k8sapi-192.168.31.41', transfers: [sshTransfer(cleanRemote: false, excludes: '', execCommand: '', execTimeout: 120000, flatten: false, makeEmptyDirs: false, noDefaultExcludes: false, patternSeparator: '[, ]+', remoteDirectory: '', remoteDirectorySDF: false, removePrefix: '', sourceFiles: 'yaml/api*.yaml')], usePromotionTimestamp: false, useWorkspaceInPromotion: false, verbose: false)])
            }
        }

        stage('Deploy on k8s') {
            steps {
                sh 'ssh 192.168.31.41 "sed -i \'s/Tag/\$BUILD_TIMESTAMP/g\' /opt/k8sapi/yaml/api-deployment.yaml && kubectl apply -f /opt/k8sapi/yaml/"'
            }
        }
    }
}
```
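Every stage that names the image relies on `BUILD_TIMESTAMP` (typically provided by the Build Timestamp plugin) being a valid Docker tag. A tag may contain at most 128 characters from letters, digits, `_`, `.` and `-`, and must not start with `.` or `-`, so the timestamp pattern configured in Jenkins must not produce spaces or colons (the pattern used here is not shown). The Go sketch below checks a candidate tag and assembles the full Harbor reference; the registry and project come from the Jenkinsfile, while the function name `imageRef` is hypothetical.

```go
package main

import (
	"fmt"
	"regexp"
)

// tagPattern follows the tag grammar of the Docker/OCI image reference:
// one word character followed by up to 127 word characters, '.' or '-'.
var tagPattern = regexp.MustCompile(`^[A-Za-z0-9_][A-Za-z0-9_.-]{0,127}$`)

// imageRef builds registry/project/name:tag and rejects tags that
// `docker tag` would refuse, such as "2023-04-01 10:00:00".
func imageRef(registry, project, name, tag string) (string, error) {
	if !tagPattern.MatchString(tag) {
		return "", fmt.Errorf("invalid image tag %q", tag)
	}
	return fmt.Sprintf("%s/%s/%s:%s", registry, project, name, tag), nil
}

func main() {
	ref, err := imageRef("192.168.31.104", "k8s-dashboard", "k8sapi", "20230401-1000")
	if err != nil {
		fmt.Println(err)
		return
	}
	fmt.Println(ref)
}
```

Running such a check (or simply choosing a pattern like `yyyyMMdd-HHmm`) before `docker build` makes a bad timestamp format fail early with a clear message.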

1.1.4 yaml

Same configuration as in the earlier post, K8s Management System Project 36 [K8s Environment – Application Deployment]; omitted here.
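The manifests are not shown, but the deploy stage depends on them containing the literal placeholder `Tag`, and `sed 's/Tag/.../g'` replaces that string anywhere in the file, including any label or name that happens to contain it. A narrower approach, sketched in Go under the assumption that the placeholder only appears as the tag of an `image:` field (the function name `setImageTag` is hypothetical), rewrites just those lines:

```go
package main

import (
	"fmt"
	"regexp"
	"strings"
)

// imageLine matches "image: <repository>:Tag" (optionally "- image: ..."),
// capturing everything before the placeholder so indentation is preserved.
// [ \t] is used instead of \s so a match never spans a line break.
var imageLine = regexp.MustCompile(`(?m)^([ \t]*(?:-[ \t]*)?image:[ \t]*\S+):Tag[ \t]*$`)

// setImageTag replaces the Tag placeholder on image lines only; other
// occurrences of "Tag" in the manifest are left alone.
func setImageTag(manifest, tag string) string {
	// Escape '$' because ReplaceAllString expands ${1}-style references.
	return imageLine.ReplaceAllString(manifest, "${1}:"+strings.ReplaceAll(tag, "$", "$$"))
}

func main() {
	manifest := "    image: 192.168.31.104/k8s-dashboard/k8sapi:Tag\n"
	fmt.Print(setImageTag(manifest, "20230401-1000"))
}
```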

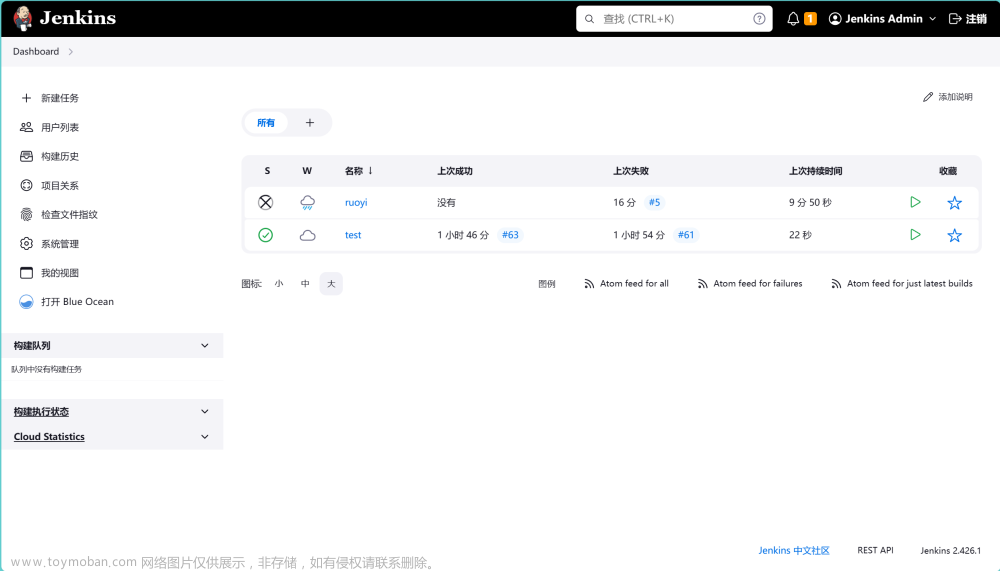

1.2 Jenkins Pipeline

1.2.1 Pipeline Settings

![k8s-plantform-api pipeline settings](https://imgs.yssmx.com/Uploads/2023/04/401963-3.png)

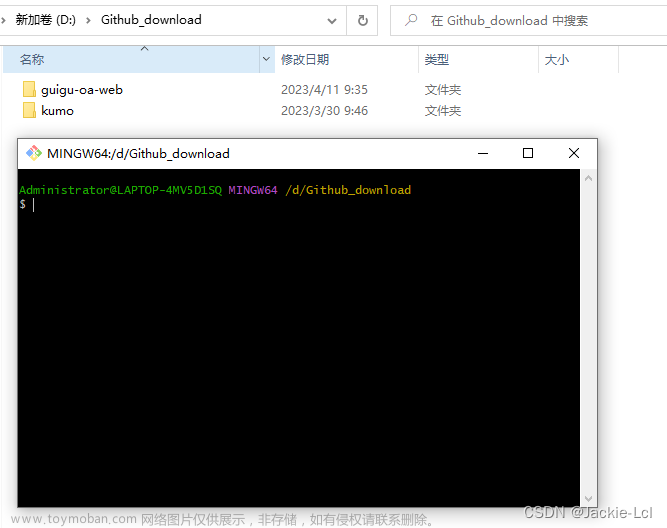

1.2.2 Jenkins Working Directory Configuration

[Manage Jenkins] --> [Configure System]

![Jenkins system configuration for k8s-plantform-api](https://imgs.yssmx.com/Uploads/2023/04/401963-4.png)

1.2.3 Build Complete

![k8s-plantform-api build result](https://imgs.yssmx.com/Uploads/2023/04/401963-5.png)

1.3 API Test

![API test result](https://imgs.yssmx.com/Uploads/2023/04/401963-6.png)

The API deployment is now complete.

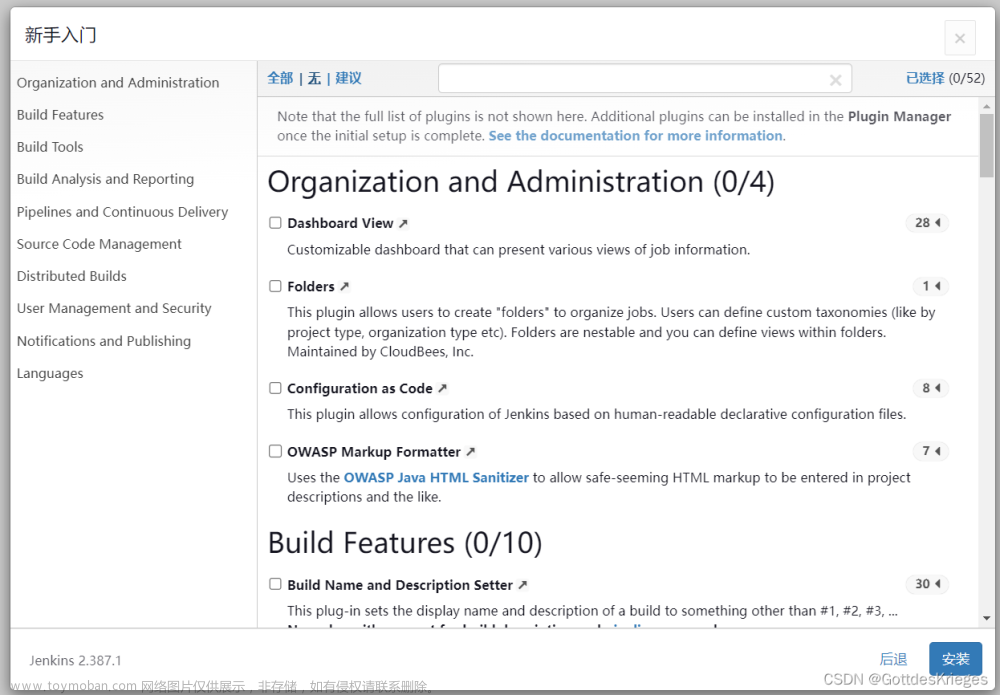

2. k8s-plantform-web-Pipeline

2.1 Git Repository

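- `docker`: files used to build the image
- `k8s-plantform-web`: the web front-end source code
- `Jenkinsfile`: the pipeline definition
- `yaml`: manifests that create the k8s-plantform-web resources in Kubernetes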

2.1.1 docker Directory

2.1.1.1 Dockerfile

```dockerfile
# Base image
#FROM centos:7.9.2009
FROM 192.168.31.104/baseimages/base_centos:7.9.2009

# Maintainer
LABEL maintainer="qiuqin <13917099322@139.com>"

# Node.js location; ENV keeps it on PATH for later RUN steps and at runtime
ENV NODE_HOME=/usr/local/node
ENV PATH=$NODE_HOME/bin:$PATH

# Copy the application. COPY/ADD of a directory copies only its contents,
# so the target directory name must be given explicitly.
COPY k8s-plantform-web/ /data/k8s-plantform-web/

# Configure the yum repository
#RUN cd /etc/yum.repos.d && \
#    rm -f *.repo
#ADD Centos.repo /etc/yum.repos.d/Centos.repo
#RUN yum install gcc gcc-c++ wget -y

# Install Node.js 16.15.0 (pinned path; latest-v16.x no longer contains this
# version) and the project's npm dependencies
RUN cd /data && \
    wget https://registry.npmmirror.com/-/binary/node/v16.15.0/node-v16.15.0-linux-x64.tar.gz && \
    tar xf node-v16.15.0-linux-x64.tar.gz && \
    ln -sf /data/node-v16.15.0-linux-x64 /usr/local/node && \
    ln -sf /data/node-v16.15.0-linux-x64/bin/npm /usr/bin/npm && \
    rm -f node-v16.15.0-linux-x64.tar.gz && \
    cd /data/k8s-plantform-web/ && \
    npm install

WORKDIR /data/k8s-plantform-web

COPY start.sh /data/k8s-plantform-web/
RUN chmod +x /data/k8s-plantform-web/start.sh

# Expose the web port
EXPOSE 9090

# Start the application
CMD ["/data/k8s-plantform-web/start.sh"]
```

2.1.1.2 start.sh

```bash
#!/bin/bash
export NODE_HOME=/usr/local/node
export PATH=$NODE_HOME/bin:$PATH

cd /data/k8s-plantform-web/
# exec so npm receives the container's stop signals
exec npm run serve
```

2.1.2 k8s-plantform-web

2.1.2.1 vue.config.js

k8s-plantform-web/vue.config.js

```javascript
const { defineConfig } = require('@vue/cli-service')

module.exports = defineConfig({
  devServer: {
    host: '0.0.0.0', // listen address
    port: 9090, // listen port
    open: true, // open a browser after startup
    allowedHosts: [
      'web.k8s.intra.com',
      '.intra.com'
    ],
  },
  // Disable lint checks during development
  lintOnSave: false,
})
```
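`allowedHosts` is webpack-dev-server's protection against DNS rebinding: a request whose `Host` header is not allowed is answered with "Invalid Host header". An entry starting with a dot, such as `.intra.com`, matches that domain and all of its subdomains, so it already covers `web.k8s.intra.com`. The Go function below only illustrates that matching rule; it is not webpack-dev-server's code, which also handles cases left out here (for example localhost and IP addresses).

```go
package main

import (
	"fmt"
	"net"
	"strings"
)

// hostAllowed reports whether a Host header matches an allowedHosts list.
// An entry with a leading "." matches the domain itself and every subdomain.
func hostAllowed(hostHeader string, allowed []string) bool {
	host := hostHeader
	if h, _, err := net.SplitHostPort(hostHeader); err == nil {
		host = h // strip ":port"
	}
	host = strings.ToLower(host)
	for _, entry := range allowed {
		entry = strings.ToLower(entry)
		if strings.HasPrefix(entry, ".") {
			if host == entry[1:] || strings.HasSuffix(host, entry) {
				return true
			}
		} else if host == entry {
			return true
		}
	}
	return false
}

func main() {
	allowed := []string{"web.k8s.intra.com", ".intra.com"}
	for _, h := range []string{"web.k8s.intra.com", "api.k8s.intra.com:80", "example.com"} {
		fmt.Println(h, hostAllowed(h, allowed))
	}
}
```

Matching on the suffix `.intra.com` rather than `intra.com` is what keeps a look-alike domain such as `evilintra.com` out.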

2.1.2.2 Config.js

Here the URLs point at the API's Ingress address (api.k8s.intra.com).

k8s-plantform-web/src/views/common/Config.js

```javascript
export default {
  // Backend API endpoints
  loginAuth: 'http://api.k8s.intra.com/api/login',
  k8sWorkflowCreate: 'http://api.k8s.intra.com/api/k8s/workflow/create',
  k8sWorkflowDetail: 'http://api.k8s.intra.com/api/k8s/workflow/detail',
  k8sWorkflowList: 'http://api.k8s.intra.com/api/k8s/workflows',
  k8sWorkflowDel: 'http://api.k8s.intra.com/api/k8s/workflow/del',
  k8sDeploymentList: 'http://api.k8s.intra.com/api/k8s/deployments',
  k8sDeploymentDetail: 'http://api.k8s.intra.com/api/k8s/deployment/detail',
  k8sDeploymentUpdate: 'http://api.k8s.intra.com/api/k8s/deployment/update',
  k8sDeploymentScale: 'http://api.k8s.intra.com/api/k8s/deployment/scale',
  k8sDeploymentRestart: 'http://api.k8s.intra.com/api/k8s/deployment/restart',
  k8sDeploymentDel: 'http://api.k8s.intra.com/api/k8s/deployment/del',
  k8sDeploymentCreate: 'http://api.k8s.intra.com/api/k8s/deployment/create',
  k8sDeploymentNumNp: 'http://api.k8s.intra.com/api/k8s/deployment/numnp',
  k8sPodList: 'http://api.k8s.intra.com/api/k8s/pods',
  k8sPodDetail: 'http://api.k8s.intra.com/api/k8s/pod/detail',
  k8sPodUpdate: 'http://api.k8s.intra.com/api/k8s/pod/update',
  k8sPodDel: 'http://api.k8s.intra.com/api/k8s/pod/del',
  k8sPodContainer: 'http://api.k8s.intra.com/api/k8s/pod/container',
  k8sPodLog: 'http://api.k8s.intra.com/api/k8s/pod/log',
  k8sPodNumNp: 'http://api.k8s.intra.com/api/k8s/pod/numnp',
  k8sDaemonSetList: 'http://api.k8s.intra.com/api/k8s/daemonsets',
  k8sDaemonSetDetail: 'http://api.k8s.intra.com/api/k8s/daemonset/detail',
  k8sDaemonSetUpdate: 'http://api.k8s.intra.com/api/k8s/daemonset/update',
  k8sDaemonSetDel: 'http://api.k8s.intra.com/api/k8s/daemonset/del',
  k8sStatefulSetList: 'http://api.k8s.intra.com/api/k8s/statefulsets',
  k8sStatefulSetDetail: 'http://api.k8s.intra.com/api/k8s/statefulset/detail',
  k8sStatefulSetUpdate: 'http://api.k8s.intra.com/api/k8s/statefulset/update',
  k8sStatefulSetDel: 'http://api.k8s.intra.com/api/k8s/statefulset/del',
  k8sServiceList: 'http://api.k8s.intra.com/api/k8s/services',
  k8sServiceDetail: 'http://api.k8s.intra.com/api/k8s/service/detail',
  k8sServiceUpdate: 'http://api.k8s.intra.com/api/k8s/service/update',
  k8sServiceDel: 'http://api.k8s.intra.com/api/k8s/service/del',
  k8sServiceCreate: 'http://api.k8s.intra.com/api/k8s/service/create',
  k8sIngressList: 'http://api.k8s.intra.com/api/k8s/ingresses',
  k8sIngressDetail: 'http://api.k8s.intra.com/api/k8s/ingress/detail',
  k8sIngressUpdate: 'http://api.k8s.intra.com/api/k8s/ingress/update',
  k8sIngressDel: 'http://api.k8s.intra.com/api/k8s/ingress/del',
  k8sIngressCreate: 'http://api.k8s.intra.com/api/k8s/ingress/create',
  k8sConfigMapList: 'http://api.k8s.intra.com/api/k8s/configmaps',
  k8sConfigMapDetail: 'http://api.k8s.intra.com/api/k8s/configmap/detail',
  k8sConfigMapUpdate: 'http://api.k8s.intra.com/api/k8s/configmap/update',
  k8sConfigMapDel: 'http://api.k8s.intra.com/api/k8s/configmap/del',
  k8sSecretList: 'http://api.k8s.intra.com/api/k8s/secrets',
  k8sSecretDetail: 'http://api.k8s.intra.com/api/k8s/secret/detail',
  k8sSecretUpdate: 'http://api.k8s.intra.com/api/k8s/secret/update',
  k8sSecretDel: 'http://api.k8s.intra.com/api/k8s/secret/del',
  k8sPvcList: 'http://api.k8s.intra.com/api/k8s/pvcs',
  k8sPvcDetail: 'http://api.k8s.intra.com/api/k8s/pvc/detail',
  k8sPvcUpdate: 'http://api.k8s.intra.com/api/k8s/pvc/update',
  k8sPvcDel: 'http://api.k8s.intra.com/api/k8s/pvc/del',
  k8sNodeList: 'http://api.k8s.intra.com/api/k8s/nodes',
  k8sNodeDetail: 'http://api.k8s.intra.com/api/k8s/node/detail',
  k8sNamespaceList: 'http://api.k8s.intra.com/api/k8s/namespaces',
  k8sNamespaceDetail: 'http://api.k8s.intra.com/api/k8s/namespace/detail',
  k8sNamespaceDel: 'http://api.k8s.intra.com/api/k8s/namespace/del',
  k8sPvList: 'http://api.k8s.intra.com/api/k8s/pvs',
  k8sPvDetail: 'http://api.k8s.intra.com/api/k8s/pv/detail',
  // Web terminal WebSocket; unlike the entries above, this still points at localhost
  k8sTerminalWs: 'ws://localhost:8081/ws',

  // Code editor options
  cmOptions: {
    mode: 'text/yaml', // language / syntax mode
    theme: 'idea', // theme
    lineNumbers: true, // show line numbers
    smartIndent: true, // smart indentation
    indentUnit: 4, // smart indent width of 4 spaces
    styleActiveLine: true, // highlight the selected line
    matchBrackets: true, // highlight the matching bracket when the cursor is next to one
    readOnly: false,
    lineWrapping: true // wrap long lines
  }
}
```
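Every endpoint above shares the prefix `http://api.k8s.intra.com/api`, so moving the front end to a different Ingress host means editing dozens of strings. One way to keep that to a single value is to derive the endpoints from a base URL. The front end is JavaScript, so the Go sketch below only illustrates the technique; the helper name `buildEndpoints` is hypothetical, and `url.JoinPath` requires Go 1.19 or later.

```go
package main

import (
	"fmt"
	"net/url"
)

// buildEndpoints maps each key to base joined with its relative path,
// so changing the Ingress host only requires changing base.
func buildEndpoints(base string, paths map[string]string) (map[string]string, error) {
	out := make(map[string]string, len(paths))
	for key, p := range paths {
		u, err := url.JoinPath(base, p)
		if err != nil {
			return nil, err
		}
		out[key] = u
	}
	return out, nil
}

func main() {
	eps, err := buildEndpoints("http://api.k8s.intra.com/api", map[string]string{
		"loginAuth":  "login",
		"k8sPodList": "k8s/pods",
	})
	if err != nil {
		fmt.Println(err)
		return
	}
	for key, u := range eps {
		fmt.Println(key, u)
	}
}
```

`url.JoinPath` normalizes slashes, so a base written with or without a trailing `/` produces the same endpoint.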

2.1.3 Jenkinsfile

```groovy
pipeline {
    agent any

    environment {
        Harbor_Addr = '192.168.31.104'
        Username = 'admin'
        Passwd = 'root123'
        Port = '9090'
    }

    stages {
        stage('Clone code') {
            steps {
                checkout([$class: 'GitSCM', branches: [[name: '*/main']], extensions: [], userRemoteConfigs: [[credentialsId: '3c67dc4c-db8a-4c78-8278-19cf9eca88ce', url: 'http://192.168.31.199/root/k8s-plantform-web.git']]])
            }
        }

        stage('Build image and push to Harbor') {
            steps {
                // Remove the copy from a previous build so cp does not nest directories.
                // \cp bypasses any cp alias; the shell expands the environment block values.
                sh '''rm -rf docker/k8s-plantform-web
                \\cp -R k8s-plantform-web docker/k8s-plantform-web
                docker build -t k8sweb:${BUILD_TIMESTAMP} docker/
                echo "${Passwd}" | docker login -u "${Username}" --password-stdin ${Harbor_Addr}
                docker tag k8sweb:${BUILD_TIMESTAMP} ${Harbor_Addr}/k8s-dashboard/k8sweb:${BUILD_TIMESTAMP}
                docker push ${Harbor_Addr}/k8s-dashboard/k8sweb:${BUILD_TIMESTAMP}'''
            }
        }

        stage('Push yaml to k8s') {
            steps {
                sshPublisher(publishers: [sshPublisherDesc(configName: 'k8sweb-192.168.31.41', transfers: [sshTransfer(cleanRemote: false, excludes: '', execCommand: '', execTimeout: 120000, flatten: false, makeEmptyDirs: false, noDefaultExcludes: false, patternSeparator: '[, ]+', remoteDirectory: '', remoteDirectorySDF: false, removePrefix: '', sourceFiles: 'yaml/web*.yaml')], usePromotionTimestamp: false, useWorkspaceInPromotion: false, verbose: false)])
            }
        }

        stage('Deploy on k8s') {
            steps {
                sh 'ssh 192.168.31.41 "sed -i \'s/Tag/\$BUILD_TIMESTAMP/g\' /opt/k8sweb/yaml/web-deployment.yaml && kubectl apply -f /opt/k8sweb/yaml/"'
            }
        }
    }
}
```
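The deploy stage passes its remote command through three layers of quoting (Groovy, the local shell and the remote shell), which is why it needs `\'` and `\$`. When such a command is generated by a tool rather than written by hand, a common technique is POSIX single-quote escaping: wrap each argument in single quotes and turn every embedded `'` into `'\''`. The Go sketch below applies it to build the same sed-and-apply command; the function names are hypothetical and the tag is assumed to be already validated.

```go
package main

import (
	"fmt"
	"strings"
)

// shellQuote wraps s in single quotes for a POSIX shell; an embedded
// single quote becomes '\'' (close quote, escaped quote, reopen quote).
func shellQuote(s string) string {
	return "'" + strings.ReplaceAll(s, "'", `'\''`) + "'"
}

// shellJoin quotes every argument and joins them with spaces.
func shellJoin(args ...string) string {
	quoted := make([]string, len(args))
	for i, a := range args {
		quoted[i] = shellQuote(a)
	}
	return strings.Join(quoted, " ")
}

func main() {
	tag := "20230401-1000"
	dir := "/opt/k8sweb/yaml"
	remote := shellJoin("sed", "-i", "s/Tag/"+tag+"/g", dir+"/web-deployment.yaml") +
		" && " + shellJoin("kubectl", "apply", "-f", dir+"/")
	// The result is passed to ssh as one argument, e.g. exec.Command("ssh", host, remote),
	// so no local shell interprets it and only the remote shell parses the quotes.
	fmt.Println(remote)
}
```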

2.1.4 yaml

Same configuration as in the earlier post, K8s Management System Project 36 [K8s Environment – Application Deployment]; omitted here.

2.2 Jenkins Pipeline

2.2.1 Pipeline Settings

![k8s-plantform-web pipeline settings](https://imgs.yssmx.com/Uploads/2023/04/401963-7.png)

2.2.2 Jenkins Working Directory Configuration

[Manage Jenkins] --> [Configure System]

![Jenkins system configuration for k8s-plantform-web](https://imgs.yssmx.com/Uploads/2023/04/401963-8.png)

2.2.3 Build Complete

![k8s-plantform-web build result](https://imgs.yssmx.com/Uploads/2023/04/401963-9.png)

2.3 Web Test

2.3.1 Login Page

![Login page](https://imgs.yssmx.com/Uploads/2023/04/401963-10.png)

2.3.2 Main Feature Tests

2.3.2.1 Cluster Status

![Cluster status page](https://imgs.yssmx.com/Uploads/2023/04/401963-11.png)

2.3.2.2 Pods

![Pods page](https://imgs.yssmx.com/Uploads/2023/04/401963-12.png)


2.3.2.3 Service

![Service page](https://imgs.yssmx.com/Uploads/2023/04/401963-13.png)

Article source: https://www.toymoban.com/news/detail-401963.html
