【Pnet prototype network】

# Convert dimensions (move axis 0 to the end, e.g. (C, H, W) -> (H, W, C))

p = np.array(file)

p = np.transpose(p, (1, 2, 0))
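A minimal sketch of the axis reordering above, assuming the input is a channel-first array of shape (C, H, W). The array `chw` is made up for illustration.

```python
import numpy as np

# Hypothetical channel-first array: 3 channels, 4 rows, 5 columns.
chw = np.arange(3 * 4 * 5).reshape(3, 4, 5)

# axes=(1, 2, 0) puts the old axis 1 first, axis 2 second, and axis 0 last,
# so (C, H, W) becomes (H, W, C).
hwc = np.transpose(chw, (1, 2, 0))

print(chw.shape, hwc.shape)
# The element at channel 1, row 2, column 3 is found at [2, 3, 1] afterwards.
print(chw[1, 2, 3] == hwc[2, 3, 1])
```

np.transpose returns a view, so no pixel data is copied; only the index order changes.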

# Using reshape (-1 lets NumPy infer the number of 512 x 512 slices)

img = np.reshape(img,(-1,512,512))
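A short sketch of how the -1 placeholder behaves, assuming a flat buffer that holds two 512 x 512 slices. The arrays here are invented for the example.

```python
import numpy as np

# Hypothetical flat buffer holding two 512 x 512 slices.
flat = np.zeros(2 * 512 * 512, dtype=np.uint8)

# -1 asks NumPy to infer that dimension from the total element count.
stack = np.reshape(flat, (-1, 512, 512))
print(stack.shape)

# reshape raises ValueError when the element count is not a multiple of 512 * 512.
try:
    np.reshape(np.zeros(1000), (-1, 512, 512))
    failed = False
except ValueError as err:
    failed = True
    print("reshape failed:", err)
```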

# This returns img as an array (its size plus the channel count)

K = Read_img2array('bad-128.tif')[5]

print(K.shape, K.dtype)

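# Create an array, store the image in it, and display it

img=np.zeros(shape=(100,100,4))

img=K  # note: this rebinds img to K, so the zeros array above is discarded

# Display the image

plt.imshow(img)

plt.show()

【Gray-level stretching】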
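A small numeric illustration of the stretching formula used in this section, g = 255 / (B - A) * (f - A). The helper name `stretch`, the 2 x 3 array `f`, and the guard for a constant image (B == A) are additions for this sketch only.

```python
import numpy as np

def stretch(f):
    """Linearly map the range [min(f), max(f)] onto [0, 255]."""
    f = np.asarray(f, dtype=np.float64)
    a, b = f.min(), f.max()
    if b == a:  # constant image: nothing to stretch, avoid dividing by zero
        return np.zeros(f.shape, dtype=np.uint8)
    # + 0.5 before truncation rounds to the nearest integer
    return (255.0 / (b - a) * (f - a) + 0.5).astype(np.uint8)

f = np.array([[50, 75, 100],
              [125, 150, 150]], dtype=np.uint8)
g = stretch(f)
print(g)
print(g.min(), g.max())
```

The darkest input value maps to 0 and the brightest to 255, which is why an image that already spans 0 to 255 comes out unchanged.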

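# Gray-level stretching

def grey_scale(image):

    """

    Gray-level stretching

    Definition: gray-level stretching, also called contrast stretching, is a simple linear point operation.

    Purpose: expand the image histogram so that it fills the whole gray-level range.

    Formula:

    g(x,y) = 255 / (B - A) * [f(x,y) - A],

    where A = min[f(x,y)] is the lowest gray level and B = max[f(x,y)] is the highest gray level;

    f(x,y) is the input image and g(x,y) is the output image.

    Limitation: if the image already has minimum A = 0 and maximum B = 255, the output is essentially unchanged.

    """

    # Assumes image is in RGB order; use cv2.COLOR_BGR2GRAY for images loaded with cv2.imread

    img_gray = cv2.cvtColor(image, cv2.COLOR_RGB2GRAY)

    # Lowest and highest gray levels, as Python ints

    A = int(img_gray.min())

    B = int(img_gray.max())

    print('A = %d,B = %d' % (A, B))

    if B == A:  # constant image: avoid dividing by zero

        return img_gray

    # Subtract in float to avoid uint8 wrap-around; + 0.5 rounds to the nearest integer

    output = np.uint8(255 / (B - A) * (img_gray.astype(np.float64) - A) + 0.5)

    return output

【Linear interpolation on a matrix】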

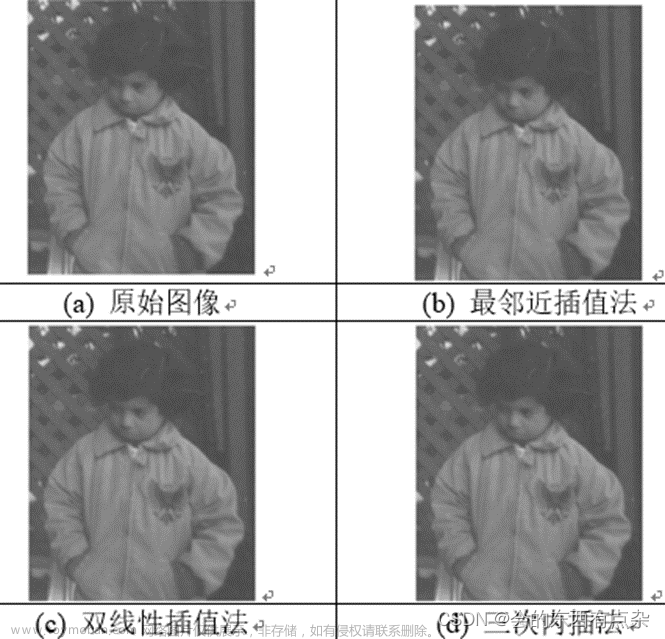
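For reference, a minimal sketch of the bilinear weighting for a single sample point, assuming `a` is the fractional offset along x and `b` the fractional offset along y. The helper name `bilinear_sample` and the 2 x 2 test patch are illustrative only.

```python
import numpy as np

def bilinear_sample(img, y, x):
    """Sample a 2-D array at the fractional position (y, x)."""
    h, w = img.shape
    y0, x0 = int(np.floor(y)), int(np.floor(x))
    y1, x1 = min(y0 + 1, h - 1), min(x0 + 1, w - 1)  # clamp at the border
    a, b = x - x0, y - y0
    # Each neighbour is weighted by its closeness to the sample point.
    return ((1 - a) * (1 - b) * img[y0, x0] +
            a * (1 - b) * img[y0, x1] +
            (1 - a) * b * img[y1, x0] +
            a * b * img[y1, x1])

patch = np.array([[0.0, 10.0],
                  [20.0, 30.0]])
top_left = bilinear_sample(patch, 0.0, 0.0)   # exactly the top-left pixel
centre = bilinear_sample(patch, 0.5, 0.5)     # mean of the four pixels
print(top_left, centre)
```

With zero offsets the result must equal the top-left neighbour, which is a quick sanity check for the order of the weights.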

def bilinear(org_img, org_shape, dst_shape):

    # print(org_shape)

    # Create the output array: rows, columns, and 3 channels

    dst_img = np.zeros(shape=(dst_shape[0], dst_shape[1], 3))

    dst_h, dst_w = dst_shape

    org_h, org_w = org_shape

    for i in range(dst_h):

        for j in range(dst_w):

            # Position of the output pixel in source coordinates

            src_x = j * float(org_w / dst_w)

            src_y = i * float(org_h / dst_h)

            src_x_int = j * org_w // dst_w

            src_y_int = i * org_h // dst_h

            # Fractional offsets: a along x, b along y

            a = src_x - src_x_int

            b = src_y - src_y_int

            # On the last row or column there is no right or lower neighbour, so copy the nearest pixel

            if src_x_int + 1 == org_w or src_y_int + 1 == org_h:

                dst_img[i, j, :] = org_img[src_y_int, src_x_int, :]

                continue

            # print(src_x_int, src_y_int)

            # The nearer a neighbour is, the larger its weight (a = b = 0 returns the top-left pixel)

            dst_img[i, j, :] = ((1. - a) * (1. - b) * org_img[src_y_int, src_x_int, :] +

                                a * (1. - b) * org_img[src_y_int, src_x_int + 1, :] +

                                (1. - a) * b * org_img[src_y_int + 1, src_x_int, :] +

                                a * b * org_img[src_y_int + 1, src_x_int + 1, :])

    return dst_img

【The ToTensor() function】

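ToTensor() converts a numpy.ndarray or PIL image of shape (H, W, C) into a tensor of shape (C, H, W). For a uint8 ndarray (or a PIL image in a common mode such as RGB or L) it also scales every value to [0, 1] by dividing by 255; in current torchvision versions, arrays of other dtypes such as float32 are returned without scaling. See the following code:

import numpy as np

import torch

import torchvision.transforms as transforms

# Define the transform; transforms.Compose chains several transforms together

transform1 = transforms.Compose([transforms.ToTensor()]) # scales to [0, 1] by dividing by 255

# Define an array

d1 = [1,2,3,4,5,6]

d2 = [4,5,6,7,8,9]

d3 = [7,8,9,10,11,14]

d4 = [11,12,13,14,15,15]

d5 = [d1,d2,d3,d4]

d = np.array([d5,d5,d5],dtype=np.uint8) # uint8, so that ToTensor divides by 255

d_t = np.transpose(d,(1,2,0)) # transpose to an image-like shape (H, W, C) as input to the transform

# Check the shapes of d and d_t

print('d.shape: ',d.shape, '\n', 'd_t.shape: ', d_t.shape)

# Output

d.shape: (3, 4, 6)

d_t.shape: (4, 6, 3)

d_t_trans = transform1(d_t) # normalize with the transform

# Manual normalization; these two steps mirror the torchvision source code

d_t_temp = torch.from_numpy(d_t.transpose((2,0,1)))

d_t_trans_man = d_t_temp.float().div(255)

print(d_t_trans.equal(d_t_trans_man))

# Output

True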
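The dtype dependence of the scaling is easy to overlook, so here is a NumPy stand-in for the arithmetic. It is a sketch of the documented behaviour (uint8 input is divided by 255, other dtypes pass through), not torchvision's code, and the name `to_tensor_like` is hypothetical.

```python
import numpy as np

def to_tensor_like(pic):
    """NumPy stand-in for the ToTensor arithmetic on an (H, W, C) array."""
    chw = np.ascontiguousarray(pic.transpose(2, 0, 1))  # (H, W, C) -> (C, H, W)
    if pic.dtype == np.uint8:
        return chw.astype(np.float32) / 255.0           # only uint8 input is scaled
    return chw                                          # other dtypes pass through

img_u8 = np.array([[[0, 128, 255]]], dtype=np.uint8)    # shape (1, 1, 3)
img_f32 = img_u8.astype(np.float32)

out_u8 = to_tensor_like(img_u8)
out_f32 = to_tensor_like(img_f32)
print(out_u8.shape, out_u8.min(), out_u8.max())
print(out_f32.max())
```

If the data is stored as float32 in the 0 to 255 range, it has to be divided by 255 by hand (or cast to uint8 first) before relying on the [0, 1] range.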

【The transforms.Normalize() normalization function】

Adding transforms.Normalize() to transforms.Compose([transforms.ToTensor()]), as in transforms.Compose([transforms.ToTensor(),transforms.Normalize(std=(0.5,0.5,0.5),mean=(0.5,0.5,0.5))]), first scales the input to [0, 1] and then applies "(x-mean)/std" to each channel. With mean = std = 0.5 this maps every element into [-1, 1].

transform2=transforms.Compose([transforms.ToTensor(),transforms.Normalize(std=(0.5,0.5,0.5),mean=(0.5,0.5,0.5))])

# After scaling to [0, 1], (x-mean)/std maps the data to [-1, 1]; values above the mean become positive, values below it negative

d_t_trans_2 = transform2(d_t) # d_t must be a uint8 array for ToTensor to divide by 255

d_t_temp1 = torch.from_numpy(d_t.transpose((2,0,1)))

d_t_temp2 = d_t_temp1.float().div(255)

d_t_trans_man2 = d_t_temp2.sub_(0.5).div_(0.5)

print(d_t_trans_2.equal(d_t_trans_man2))

# Output

True
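The "(x-mean)/std" step and its inverse can be checked in plain NumPy. This is a sketch of the arithmetic only; the array `chw` is made up, and the per-channel mean and std are reshaped to (C, 1, 1) so that they broadcast over height and width.

```python
import numpy as np

# Hypothetical (C, H, W) data already scaled to [0, 1].
chw = np.linspace(0.0, 1.0, 3 * 2 * 2, dtype=np.float32).reshape(3, 2, 2)

mean = np.array([0.5, 0.5, 0.5], dtype=np.float32).reshape(3, 1, 1)
std = np.array([0.5, 0.5, 0.5], dtype=np.float32).reshape(3, 1, 1)

normalized = (chw - mean) / std        # now in [-1, 1]
restored = normalized * std + mean     # inverse, e.g. before displaying an image

print(normalized.min(), normalized.max())
print(np.allclose(chw, restored))
```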

# Convert single-channel images to three-channel RGB

'''

Single channel -> three channels

'''

import os

import cv2

import numpy as np

import PIL.Image as Image

# os.environ['CUDA_VISIBLE_DEVICES'] = '2'

img_path = r'E:\zp\data\tri\dataset\val\22'

save_img_path = r'E:\zp\data\tri\dataset1_3channel\val\22'

for img_name in os.listdir(img_path):

    image = Image.open(img_path + '\\' + img_name)

    if len(image.split()) == 1: # check the number of channels

        print(len(image.split()))

        print(img_path + '\\' +img_name)

        img = cv2.imread(img_path + '\\' +img_name) # cv2.imread returns a 3-channel BGR array by default

        gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)

        img2 = np.zeros_like(img)

        # Copy the gray values into all three channels

        img2[:, :, 0] = gray

        img2[:, :, 1] = gray

        img2[:, :, 2] = gray

        cv2.imwrite(save_img_path + '\\' +img_name, img2)

        #image = Image.open(save_img_path +'\\' + img_name)

        #print(len(image.split()))

    else:

        # Already multi-channel: save unchanged

        image.save(save_img_path +'\\' + img_name)

'''

Single channel -> three channels (alternative using NumPy only)

'''

# img_src = np.expand_dims(img_src, axis=2)

# img_src = np.concatenate((img_src, img_src, img_src), axis=-1)
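A runnable sketch of the NumPy variant in the two commented lines, assuming a 2-D (H, W) grayscale array. The array `gray` is invented for the example, and np.stack is shown as an equivalent shortcut.

```python
import numpy as np

gray = np.arange(6, dtype=np.uint8).reshape(2, 3)   # hypothetical (H, W) image

# Variant from the commented lines: add a channel axis, then concatenate.
rgb_a = np.expand_dims(gray, axis=2)
rgb_a = np.concatenate((rgb_a, rgb_a, rgb_a), axis=-1)

# Equivalent shortcut: stack three copies along a new last axis.
rgb_b = np.stack((gray, gray, gray), axis=-1)

print(rgb_a.shape, np.array_equal(rgb_a, rgb_b))
```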

# Convert three-channel tif images to jpg

import os,sys

import cv2

import numpy as np

from skimage import io # use skimage's io module to read tif images

def tif_jpg_transform(file_path_name, bgr_savepath_name):

    img = io.imread(file_path_name) # read the image; skimage returns channels in RGB order

    img = img / img.max() # scale so that no value exceeds 1

    img = img * 255 # scale to the 0..255 range, which fits in uint8

    img = img.astype(np.uint8) # cast to 8-bit unsigned integers

    # img = np.array([img,img,img])

    # img = img.transpose(1,2,0)

    print(img.shape) # print the image size and channel count

    r = img[:, :, 0] # red channel

    g = img[:, :, 1] # green channel

    b = img[:, :, 2] # blue channel

    bgr = cv2.merge([b, g, r]) # reorder to BGR, which cv2.imwrite expects

    cv2.imwrite(bgr_savepath_name, bgr) # save the image

tif_file_path = r'E:\zp\data\tri\dataset1_3channel\val\22' # folder containing the tif images

tif_fileList = os.listdir(tif_file_path)

for tif_file in tif_fileList:

    file_path_name = tif_file_path + '/' + tif_file

    jpg_path = r'E:\zp\data\tri\dataset2_jpg\val\22' + '/' + tif_file.split('.')[0] + '.jpg' # save path of the .jpg image

    tif_jpg_transform(file_path_name, jpg_path)
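The scaling step is where 16-bit tif data usually goes wrong, so here is a standalone sketch of it. The helper name `to_uint8`, the zero-image guard, and the sample values are assumptions for illustration, and rounding is used instead of plain truncation.

```python
import numpy as np

def to_uint8(img):
    """Scale an array to 0..255 by its maximum and round to uint8."""
    img = img.astype(np.float64)
    peak = img.max()
    if peak <= 0:  # all-zero image: avoid dividing by zero
        return np.zeros(img.shape, dtype=np.uint8)
    scaled = np.clip(img / peak * 255.0, 0, 255)
    return np.rint(scaled).astype(np.uint8)

img16 = np.array([[0, 1000],
                  [30000, 60000]], dtype=np.uint16)   # made-up 16-bit data
img8 = to_uint8(img16)
print(img8)
```

Scaling by the per-image maximum changes the relative brightness between images; a fixed divisor such as 65535 would preserve it, at the cost of darker output for low-signal images.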

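【Splitting and combining arrays】

The main ways to combine arrays are:

1. Horizontal stacking: np.hstack((arr1,arr2)) or np.concatenate((arr1,arr2),axis=1)

2. Vertical stacking: np.vstack((arr1,arr2)) or np.concatenate((arr1,arr2),axis=0)

3. Depth stacking: np.dstack((arr1,arr2))

4. Column stacking: np.column_stack((arr1,arr2))

5. Row stacking: np.row_stack((arr1,arr2)) (an alias of np.vstack, deprecated in recent NumPy releases)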
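A quick check of the stacking functions listed above on two made-up 2 x 2 arrays. Note that each function takes the arrays as a single tuple argument.

```python
import numpy as np

a = np.array([[1, 2], [3, 4]])
b = np.array([[5, 6], [7, 8]])

h = np.hstack((a, b))   # shape (2, 4), same as concatenate(..., axis=1)
v = np.vstack((a, b))   # shape (4, 2), same as concatenate(..., axis=0)
d = np.dstack((a, b))   # shape (2, 2, 2), stacked along a new third axis

print(h.shape, v.shape, d.shape)
print(np.array_equal(h, np.concatenate((a, b), axis=1)))

# Splitting reverses stacking: hsplit cuts h back into the two inputs.
left, right = np.hsplit(h, 2)
print(np.array_equal(left, a) and np.array_equal(right, b))
```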

The main ways to split arrays are:

1. Horizontal split: np.split(arr,n,axis=1) or np.hsplit(arr,n)

2. Vertical split: np.split(arr,n,axis=0) or np.vsplit(arr,n)

3. Depth split: np.dsplit(arr,n)

# Dataset handling and packaging with TensorDataset, DataLoader, and Dataset:

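A TensorDataset or a custom Dataset is passed to a DataLoader, which handles batching and shuffling. TensorDataset works much like Python's zip function: it pairs each data sample with its label.

import torch

from torch.utils.data import Dataset, TensorDataset, DataLoader

a = torch.tensor([[1, 2, 3], [4, 5, 6], [7, 8, 9], [1, 2, 3], [4, 5, 6], [7, 8, 9], [1, 2, 3], [4, 5, 6], [7, 8, 9], [1, 2, 3], [4, 5, 6], [7, 8, 9]]) # a is our dataset; in practice it holds images converted from NumPy arrays to tensors

b = torch.tensor([44, 55, 66, 44, 55, 66, 44, 55, 66, 44, 55, 66])

# b holds the label of each data sample

train_ids = TensorDataset(a, b)

# Pack the samples and labels into a dataset

for x_train, y_label in train_ids:

    print(x_train, y_label)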

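# Custom Dataset: it must define the __len__ and __getitem__ methods

WINDOW_SIZE = 8

class Covid19Dataset(Dataset): # define a dataset; dfdiff is a pandas DataFrame assumed to be defined elsewhere

    def __len__(self):

        return len(dfdiff) - WINDOW_SIZE # total number of samples in the dataset

    def __getitem__(self,i):

        x = dfdiff.loc[i:i+WINDOW_SIZE-1,:] # .loc slicing is inclusive, so this is WINDOW_SIZE rows

        feature = torch.tensor(x.values)

        y = dfdiff.loc[i+WINDOW_SIZE,:]

        label = torch.tensor(y.values) # this defines the form of the i-th sample

        return (feature,label) # return the i-th sample: feature is the input window, label is the target

ds_train = Covid19Dataset()

# The dataset is small, so all training data can go into a single batch to improve performance

dl_train = DataLoader(ds_train,batch_size = 38)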
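Because `dfdiff` is not shown here, the following self-contained sketch uses a made-up tensor in its place to show the same sliding-window idea. The class name `WindowDataset` and the data are hypothetical; only `Dataset` and `DataLoader` come from torch.utils.data.

```python
import torch
from torch.utils.data import Dataset, DataLoader

WINDOW_SIZE = 8

class WindowDataset(Dataset):
    """Sliding windows over a (T, F) tensor; the label is the row after each window."""

    def __init__(self, series):
        self.series = series

    def __len__(self):
        return len(self.series) - WINDOW_SIZE

    def __getitem__(self, i):
        feature = self.series[i:i + WINDOW_SIZE]   # rows i .. i + WINDOW_SIZE - 1
        label = self.series[i + WINDOW_SIZE]       # the row right after the window
        return feature, label

series = torch.arange(20 * 3, dtype=torch.float32).reshape(20, 3)  # made-up data
ds = WindowDataset(series)
feature, label = ds[0]
print(len(ds), feature.shape, label.shape)

batch_x, batch_y = next(iter(DataLoader(ds, batch_size=4)))
print(batch_x.shape, batch_y.shape)
```

Passing the data through `__init__` rather than reading a global keeps the dataset reusable for a validation split.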

# DataLoader: wraps the dataset so that batches need not be split by hand

train_loader = DataLoader(dataset=train_ids, batch_size=4, shuffle=True) # shuffle=True randomizes the sample order

for i, data in enumerate(train_loader, 1): # enumerate yields an index and the data (samples and labels); the argument 1 starts the numbering at 1

    x_data, label = data

    print(' batch:{0} x_data:{1} label: {2}'.format(i, x_data, label))
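A compact sketch of how batch sizes work out when the sample count is not a multiple of batch_size. The tensors are made up; `drop_last` is a standard DataLoader parameter.

```python
import torch
from torch.utils.data import TensorDataset, DataLoader

features = torch.arange(36).reshape(12, 3)   # 12 made-up samples, 3 values each
labels = torch.arange(12)                    # one label per sample

dataset = TensorDataset(features, labels)
x0, y0 = dataset[0]                          # indexing returns a (sample, label) tuple
print(len(dataset), x0, y0)

loader = DataLoader(dataset, batch_size=5, shuffle=False)
sizes = [x.shape[0] for x, _ in loader]
print(sizes)                                 # the last batch holds the remainder

loader_full = DataLoader(dataset, batch_size=5, drop_last=True)
print(len(loader_full))                      # the incomplete final batch is dropped
```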

# Using the DataLoader directly inside the training function

import datetime

import pandas as pd

# train_step and valid_step are assumed to be defined elsewhere; each returns a (loss, metric) pair

def train_model(model,epochs,dl_train,dl_valid,log_step_freq):

    metric_name = model.metric_name

    dfhistory = pd.DataFrame(columns = ["epoch","loss",metric_name,"val_loss","val_"+metric_name])

    print("Start Training...")

    nowtime = datetime.datetime.now().strftime('%Y-%m-%d %H:%M:%S')

    print("=========="*8 + "%s"%nowtime)

    for epoch in range(1,epochs+1):

        # 1. Training loop -------------------------------------------------

        loss_sum = 0.0

        metric_sum = 0.0

        step = 1

        for step, (features,labels) in enumerate(dl_train, 1):

            loss,metric = train_step(model,features,labels)

            # Print batch-level logs

            loss_sum += loss

            metric_sum += metric

            if step%log_step_freq == 0:

                print(("[step = %d] loss: %.3f, "+metric_name+": %.3f") %

                      (step, loss_sum/step, metric_sum/step))

        # 2. Validation loop -------------------------------------------------

        val_loss_sum = 0.0

        val_metric_sum = 0.0

        val_step = 1

        for val_step, (features,labels) in enumerate(dl_valid, 1):

            val_loss,val_metric = valid_step(model,features,labels)

            val_loss_sum += val_loss

            val_metric_sum += val_metric

        # 3. Record logs -------------------------------------------------

        info = (epoch, loss_sum/step, metric_sum/step,

                val_loss_sum/val_step, val_metric_sum/val_step)

        dfhistory.loc[epoch-1] = info

        # Print epoch-level logs

        print(("\nEPOCH = %d, loss = %.3f,"+ metric_name +

               " = %.3f, val_loss = %.3f, "+"val_"+ metric_name+" = %.3f")

              %info)

        nowtime = datetime.datetime.now().strftime('%Y-%m-%d %H:%M:%S')

        print("\n"+"=========="*8 + "%s"%nowtime)

    print('Finished Training...')

    return dfhistory
