Python crawler: scraping gaokao (college entrance exam) admission score data

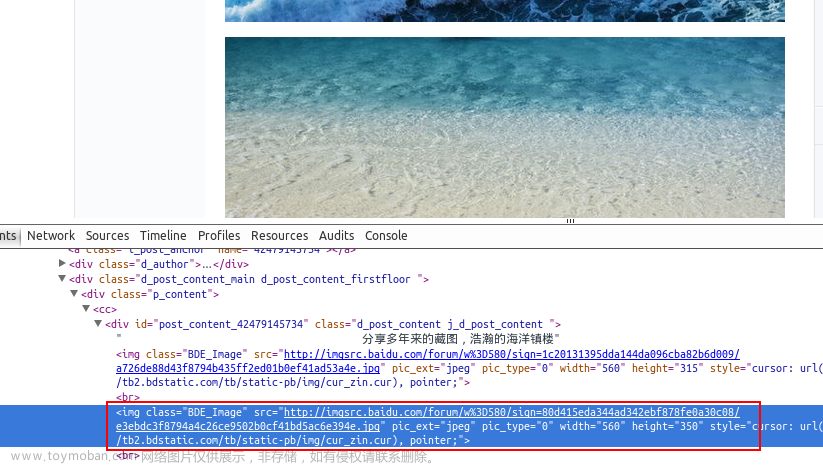

Web page

https://gkcx.eol.cn/school/search

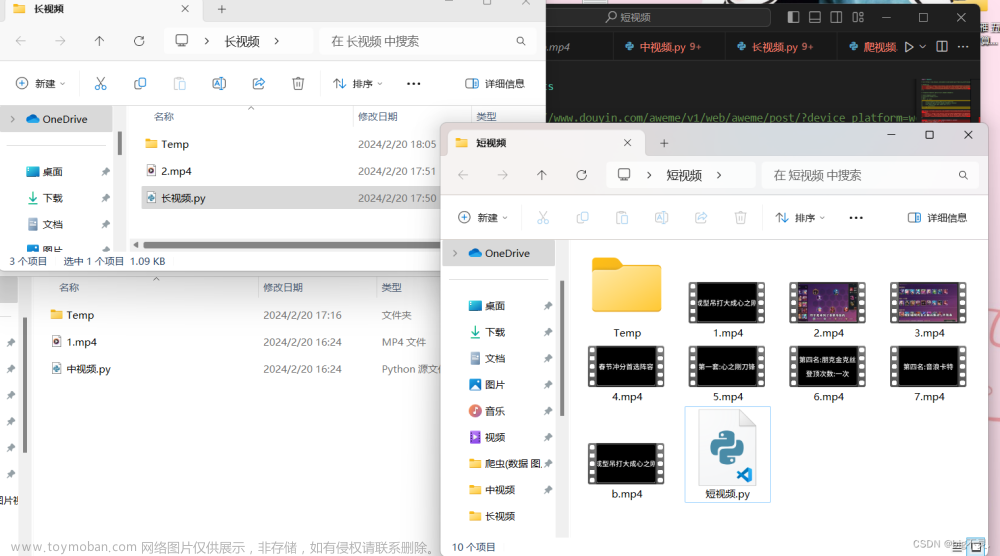

The complete dataset is a little over 1 GB.

API endpoint for scraping university information

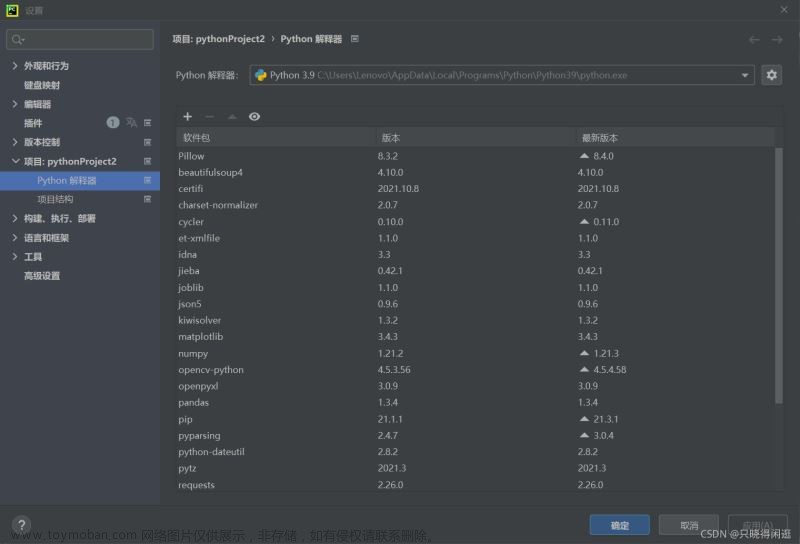

    import json

    import requests_html

    sess = requests_html.HTMLSession()
    url = "https://api.eol.cn/gkcx/api/"
    data = {
        "access_token": "",
        "admissions": "",
        "central": "",
        "department": "",
        "dual_class": "",
        "f211": "",
        "f985": "",
        "is_dual_class": "",
        "keyword": "",
        "page": 8,
        "province_id": "",
        "request_type": 1,
        "school_type": "",
        "size": 20,
        "sort": "view_total",
        "type": "",
        "uri": "apigkcx/api/school/hotlists"
    }

    res = sess.post(url, data)
    js = res.json()
    print(type(js))  # <class 'dict'>

    # js['data']['item'] is parsed JSON, not a string, so f.write() would
    # raise a TypeError. Serialize it with json.dump() instead.
    with open('./school.json', mode='w') as f:
        json.dump(js['data']['item'], f)

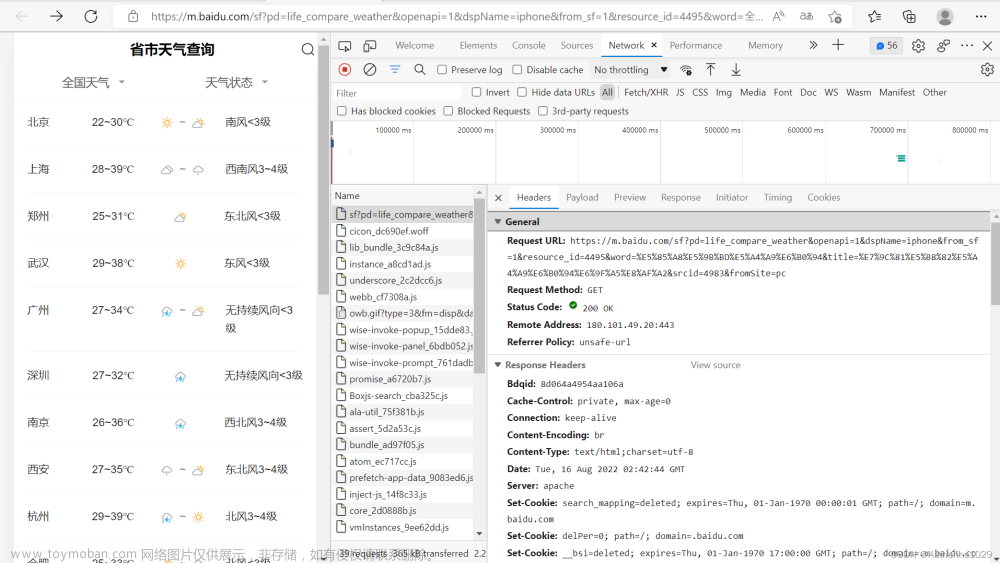

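Province information: to find this request, open a page that has a province drop-down selector and inspect the requests it makes.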

    def get_province():
        url = "https://static-data.eol.cn/www/config/detial/1.json"
        res = sess.get(url)
        js = res.json()
        print(js)
        with open('./province.json', mode='w') as f:
            json.dump(js, f)

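The API limits how much data a single request can return, so request every page first, then merge the school records and export them to an xlsx file.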
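The paging idea can be sketched independently of the network call. This is a minimal illustration, not the author's code: `fetch_all_pages` and `fake_fetch_page` are hypothetical names, and stopping on the first empty page assumes the API returns an empty list past the last page, which has not been verified here. The fake fetcher stands in for the real `sess.post(...)` request.

```python
from typing import Callable, Dict, List


def fetch_all_pages(fetch_page: Callable[[int], List[Dict]],
                    max_pages: int = 300) -> List[Dict]:
    """Collect records page by page until a page is empty or max_pages is hit."""
    records: List[Dict] = []
    for page_no in range(max_pages):
        items = fetch_page(page_no)
        if not items:
            # Assumption: an empty page means there is nothing left to fetch.
            break
        records.extend(items)
    return records


def fake_fetch_page(page_no: int) -> List[Dict]:
    """Stand-in for the real request: 45 made-up schools, served 20 per page."""
    fake_schools = [{"school_id": i, "name": f"school-{i}"} for i in range(45)]
    start = page_no * 20
    return fake_schools[start:start + 20]


all_schools = fetch_all_pages(fake_fetch_page)
print(len(all_schools))
```

To use it against the real endpoint, `fetch_page` would wrap the POST request and return `js['data']['item']` for the given page number.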

Complete code

    import glob
    import json
    import os

    import pandas as pd
    import requests_html

    sess = requests_html.HTMLSession()


    def get_school():
        """Download the school list page by page into ./school/."""
        url = "https://api.eol.cn/gkcx/api/"
        os.makedirs('./school', exist_ok=True)  # the output directory must exist
        for pageNo in range(300):
            data = {
                "access_token": "",
                "admissions": "",
                "central": "",
                "department": "",
                "dual_class": "",
                "f211": "",
                "f985": "",
                "is_dual_class": "",
                "keyword": "",
                "page": pageNo,
                "province_id": "",
                "request_type": 1,
                "school_type": "",
                "size": 20,
                "sort": "view_total",
                "type": "",
                "uri": "apigkcx/api/school/hotlists"
            }
            res = sess.post(url, data)
            js = res.json()
            # merge_school() below expects each page to be a list of school records
            print(type(js['data']['item']))
            with open(f'./school/school_{pageNo}.json', mode='w') as f:
                json.dump(js['data']['item'], f)


    def get_province():
        """Download the province list into ./province.json."""
        url = "https://static-data.eol.cn/www/config/detial/1.json"
        res = sess.get(url)
        js = res.json()
        print(js)
        with open('./province.json', mode='w') as f:
            json.dump(js, f)


    def show_province():
        with open('./province.json') as f:
            data = json.load(f)
        print(data)


    def merge_school():
        """Merge all downloaded pages into one JSON file and one xlsx file."""
        all_school = []
        for path in glob.glob('./school/*'):
            with open(path) as f:
                data = json.load(f)
            all_school += data
        print(len(all_school))  # 2855

        # Dump the list itself. Calling json.dumps() first and then json.dump()
        # double-encodes the data, so json.load() would return a string.
        os.makedirs('./json', exist_ok=True)
        with open('./json/school.json', mode='w') as f:
            json.dump(all_school, f)

        # Build the table directly from the list of records.
        df = pd.DataFrame(all_school)
        df.to_excel('./school.xlsx')


    if __name__ == '__main__':
        # get_school()
        # get_province()
        merge_school()
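When pages are merged this way, the same school could end up in the list twice, for example if two page numbers happen to return overlapping results. Whether that actually happens with this API is an assumption, not something confirmed above. A minimal sketch of removing duplicates by `school_id` before exporting (`dedupe_schools` is a hypothetical helper, and the sample records are made up):

```python
from typing import Dict, List


def dedupe_schools(schools: List[Dict], key: str = "school_id") -> List[Dict]:
    """Keep the first record for each key value, preserving the original order."""
    seen = set()
    unique = []
    for school in schools:
        school_key = school.get(key)
        if school_key in seen:
            continue
        seen.add(school_key)
        unique.append(school)
    return unique


# Made-up sample data; real records carry many more fields.
sample = [
    {"school_id": 1, "name": "A"},
    {"school_id": 2, "name": "B"},
    {"school_id": 1, "name": "A"},
]
unique_sample = dedupe_schools(sample)
print(len(unique_sample))
```

Comparing the length before and after is a quick way to check whether the downloaded pages overlapped at all.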

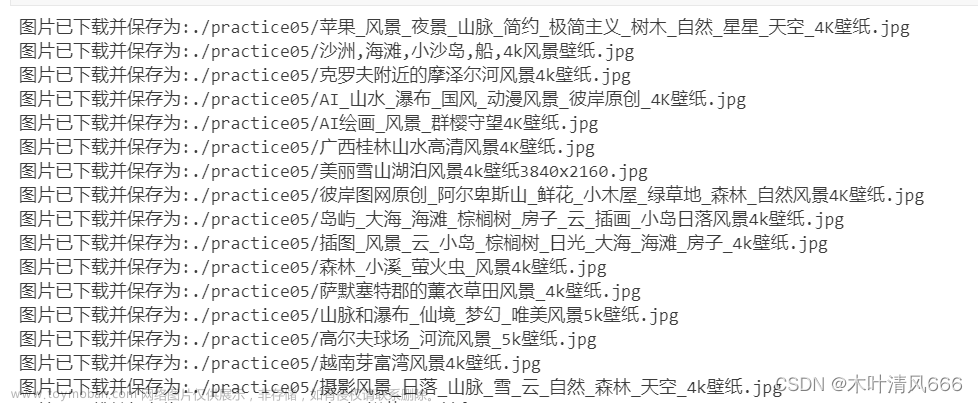

Query each school's admission scores for every year and every province

    import json
    import os
    import random
    import time
    from multiprocessing import Pool

    import requests

    years = range(2014, 2018 + 1)  # years to query
    # Pages requested per query (pages 0 and 1). The original note says
    # about 3 pages is usually enough.
    pages = range(2)
    types = [
        {'code': 1, 'name': '理科'},  # science track
        {'code': 2, 'name': '文科'},  # liberal arts track
    ]

    # Note: get_province() in the previous script writes ./province.json,
    # so move or copy that file into ./json/ before running this one.
    with open('./json/province.json') as f:
        provinces = json.load(f)
    with open('./json/school.json') as f:
        schools = json.load(f)

    headers = {
        'Accept': 'application/json, text/plain, */*',
        'Content-Type': 'application/json;charset=UTF-8',
        'Origin': 'https://gkcx.eol.cn',
        'Referer': 'https://gkcx.eol.cn/school/332',
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/75.0.3770.100 Safari/537.36',
    }


    def get_score(school):
        """Fetch one school's scores for every track, province and year."""
        path = f"./score/{school['name']}.json"
        if os.path.exists(path):
            print(school['name'], 'exists')
            return

        os.makedirs('./score', exist_ok=True)  # the output directory must exist
        sess = requests.Session()
        url = "https://api.eol.cn/gkcx/api/"
        score = {}
        for kind in types:  # renamed from `type` to avoid shadowing the builtin
            score[kind['name']] = {}
            for province in provinces:
                score[kind['name']][province['name']] = {}
                for year in years:
                    all_data = []
                    try:
                        for page in pages:
                            data = {
                                "access_token": "",
                                "local_province_id": province['code'],  # student's province
                                "local_type_id": kind['code'],  # track: 1 = science, 2 = liberal arts
                                "page": page,  # page number
                                "school_id": school['school_id'],  # school id
                                "size": 20,
                                "uri": "apidata/api/gk/score/special",
                                "year": year  # year
                            }
                            # time.sleep(random.random() * 0.1)
                            js = sess.post(url, data, headers=headers).json()
                            all_data += js['data']['item']
                    except Exception as e:
                        print(e)
                    score[kind['name']][province['name']][str(year)] = all_data

        with open(path, mode='w') as f:
            json.dump(score, f)
        print(school['name'], 'finished')


    def start():
        for school in schools[:10]:
            print(school['name'], 'start')
            get_score(school)


    def thread_start():
        # Despite the name, this uses a process pool.
        # Too many workers and the server starts rejecting requests.
        p = Pool(4)
        for school in schools:
            print(school['name'], 'start')
            p.apply_async(get_score, args=(school,))
        p.close()  # close the pool; no new tasks are accepted
        p.join()  # block the main process until the pool's workers finish


    def main():
        # start()
        thread_start()


    if __name__ == '__main__':
        main()
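Each school's output file nests the results as track, then province, then year, then a list of records, which is awkward to load into a table. The sketch below shows one possible way to flatten that structure into rows. `flatten_scores` is a hypothetical helper, and the record fields `major` and `min_score` in the sample are placeholders, since the real field names returned by the API are not shown above.

```python
from typing import Dict, List


def flatten_scores(school_name: str, score: Dict) -> List[Dict]:
    """Turn score[track][province][year] -> [records] into a flat list of rows."""
    rows = []
    for type_name, by_province in score.items():
        for province_name, by_year in by_province.items():
            for year, items in by_year.items():
                for item in items:
                    # Context columns are written last, so they win if a record
                    # happens to carry a field with the same name.
                    rows.append({
                        **item,
                        "school": school_name,
                        "type": type_name,
                        "province": province_name,
                        "year": year,
                    })
    return rows


# Made-up sample in the same nested shape that get_score() writes.
sample_score = {
    "理科": {
        "北京": {
            "2017": [{"major": "X", "min_score": 600}],
            "2018": [],
        },
    },
}
rows = flatten_scores("example school", sample_score)
print(rows)
```

The resulting list of dicts can be passed to `pandas.DataFrame(rows)` for filtering or for export with `to_excel`, in the same way as the merged school list.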
