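0. Overall model structure:

YOLOv3 can be viewed as three parts: Darknet53, FPN, and Yolo Head.

Darknet53 is the backbone of YOLOv3 and extracts image features. It produces three feature layers that are used to build the rest of the network. These three layers are called "effective feature layers".

The FPN takes the three effective feature layers from the backbone, extracts further features and fuses them. The goal is to combine feature information from different scales.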

Yolo Head is YOLOv3's classifier and regressor. Darknet53 and the FPN together produce three enhanced feature layers. For a 416×416 input, their shapes are (52,52,128), (26,26,256) and (13,13,512).
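The sketch below shows roughly what the Yolo Head produces from these three layers. It assumes the setup described in the YOLOv3 paper:

- 3 anchors per scale;
- each anchor predicts 4 box offsets, 1 objectness score and `num_classes` class scores;
- strides of 8, 16 and 32.

`yolo_head_shapes` is a hypothetical helper for illustration, not part of any library.

```python
def yolo_head_shapes(input_size=416, num_classes=80, anchors_per_scale=3):
    """Return the (H, W, C) output shape of the Yolo Head at each of the three scales.

    Assumption: strides 8, 16, 32 and anchors_per_scale * (4 + 1 + num_classes) channels.
    """
    channels = anchors_per_scale * (4 + 1 + num_classes)
    return [(input_size // s, input_size // s, channels) for s in (8, 16, 32)]


if __name__ == "__main__":
    # With the 80 COCO classes each scale has 3 * (4 + 1 + 80) = 255 channels
    for shape in yolo_head_shapes():
        print(shape)
```

The spatial sizes 52, 26 and 13 match the three feature layers listed above. Only the channel dimension changes, and it depends on the number of classes.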

1. Structure analysis:

1.1: Backbone:

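The backbone of YOLOv3 is Darknet53, which is built from residual blocks (Residual).

The main branch of each residual block is one 1x1 conv followed by one 3x3 conv. The shortcut branch does no processing: the output of the main branch is added directly to the block input carried by the shortcut.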
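The sketch below traces one residual block on an (H, W, C) shape. It shows why the element-wise add works: the 1x1 conv halves the channels (as in `residual_block(conv, 64, 32, 64)` in the example code below), and the 3x3 conv restores them. It also counts the conv weights, assuming no bias because BatchNormalization follows each conv. `residual_block_trace` is a hypothetical name.

```python
def residual_block_trace(shape):
    """Trace one Darknet53 residual block on an (H, W, C) input shape.

    Returns the main-branch shapes and the weight counts of its two convs
    (no bias terms, since each conv is followed by BatchNormalization).
    """
    h, w, c = shape
    hidden = c // 2
    after_1x1 = (h, w, hidden)          # 1x1 conv halves the channel count
    after_3x3 = (h, w, c)               # 3x3 'same' conv restores it, so shapes match the shortcut
    weights = (1 * 1 * c * hidden, 3 * 3 * hidden * c)
    return [after_1x1, after_3x3], weights


if __name__ == "__main__":
    print(residual_block_trace((208, 208, 64)))
```

For a (208, 208, 64) input, this gives weight counts of 2048 and 18432. These match `conv2d_2` and `conv2d_3` in the model summary later in this section.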

As shown in the figure, there are 5 residual stages. The "xN" after each stage means that the stage contains N residual blocks.
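The repeat counts `[1, 2, 8, 8, 4]` and the stage widths below are taken from the `range(...)` loops and filter counts in the example code later in this section. Given those counts, the number of conv layers and the output shape of each stage follow directly. The helper names are hypothetical.

```python
# Residual blocks per stage (the "xN" in the figure) and output channels per stage,
# taken from the darknet53() example code
STAGE_REPEATS = [1, 2, 8, 8, 4]
STAGE_CHANNELS = [64, 128, 256, 512, 1024]


def count_backbone_convs(repeats=STAGE_REPEATS):
    """1 stem conv, plus per stage: 1 stride-2 downsampling conv and 2 convs per residual block."""
    return 1 + sum(1 + 2 * n for n in repeats)


def stage_outputs(input_size=416, channels=STAGE_CHANNELS):
    """(H, W, C) after each stage; every stage starts with a stride-2 conv that halves H and W."""
    outputs = []
    size = input_size
    for c in channels:
        size //= 2
        outputs.append((size, size, c))
    return outputs


if __name__ == "__main__":
    print(count_backbone_convs())   # conv layers in the backbone as built below
    print(stage_outputs())
```

The last three stage outputs (52×52×256, 26×26×512, 13×13×1024) are the three effective feature layers, returned as `route_1`, `route_2` and `route_3` in the example code.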

In Darknet53, every DarknetConv2D is immediately followed by BatchNormalization and a LeakyReLU activation.
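Here is a scalar sketch of what BN + LeakyReLU compute at inference time for one conv output value. It makes two assumptions:

- the slope is 0.1, as in `tf.nn.leaky_relu(conv, alpha=0.1)` in the example code;
- the epsilon is 1e-3, the Keras default for `BatchNormalization`.

All function names are hypothetical.

```python
import math


def batch_norm(x, mean, var, gamma=1.0, beta=0.0, eps=1e-3):
    """Inference-time BatchNormalization of one value using the moving mean and variance."""
    return gamma * (x - mean) / math.sqrt(var + eps) + beta


def leaky_relu(x, alpha=0.1):
    """LeakyReLU: identity for x >= 0, slope alpha for negative inputs."""
    return x if x >= 0 else alpha * x


def darknet_bn_leaky(conv_output, mean, var, gamma=1.0, beta=0.0):
    """The BN -> LeakyReLU pair applied after every DarknetConv2D."""
    return leaky_relu(batch_norm(conv_output, mean, var, gamma, beta))


if __name__ == "__main__":
    print(darknet_bn_leaky(1.0, mean=3.0, var=4.0))   # roughly -0.1
```

Because BN's `beta` already acts as a per-channel offset, the example code creates the conv without a bias when BN is used (`use_bias=not bn`).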

Pooling is dropped. Downsampling is done through the convolution stride instead: in this network, convolutions with stride 2 perform the downsampling.
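The example code below implements each stride-2 conv as two steps:

1. `ZeroPadding2D(((1, 0), (1, 0)))` pads one row on top and one column on the left.
2. A 3×3 conv with `padding='valid'` and stride 2 follows.

The sketch applies the standard conv output-size formula, floor((n + pad - k) / s) + 1, to that setup. The function names are hypothetical.

```python
def downsample_size(size, kernel=3, stride=2, pad_before=1, pad_after=0):
    """Output size of a 'valid' conv after asymmetric zero padding (1 before, 0 after)."""
    return (size + pad_before + pad_after - kernel) // stride + 1


def trace_downsampling(size=416, times=5):
    """Spatial size after each of the five stride-2 convs in Darknet53."""
    sizes = [size]
    for _ in range(times):
        size = downsample_size(size)
        sizes.append(size)
    return sizes


if __name__ == "__main__":
    print(trace_downsampling())
```

This reproduces the summary below: 416 is padded to 417 and then reduced to 208, and so on down to 13.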

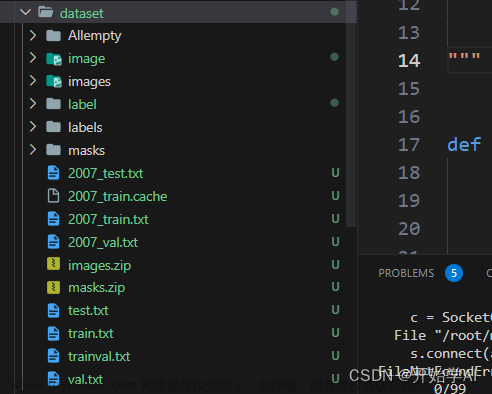

Example code:

```python
# Written for tf.keras in TensorFlow 2.x (Keras 2), where tf.nn ops applied to symbolic
# tensors become TFOpLambda layers, as in the summary below.
import tensorflow as tf
import tensorflow.keras as keras

# filters_shape: (kernel_size_h, kernel_size_w, input_channels, filter_count)
# Only filters_shape[0] (square kernel) and filters_shape[-1] are passed to Conv2D.


def mish(x):
    # Mish activation x * tanh(softplus(x)); only used when activate_type == "mish"
    return x * tf.math.tanh(tf.math.softplus(x))


def convolutional(input_layer, filters_shape, downsample=False, activate=True, bn=True, activate_type='leaky'):
    if downsample:
        # Pad one pixel on top and left, then a 3x3 'valid' conv with stride 2 halves H and W
        input_layer = tf.keras.layers.ZeroPadding2D(((1, 0), (1, 0)))(input_layer)
        padding = 'valid'
        strides = 2
    else:
        strides = 1
        padding = 'same'

    conv = tf.keras.layers.Conv2D(filters=filters_shape[-1], kernel_size=filters_shape[0], strides=strides,
                                  padding=padding, use_bias=not bn,  # BN supplies the offset (beta)
                                  kernel_regularizer=tf.keras.regularizers.l2(0.0005),
                                  kernel_initializer=tf.random_normal_initializer(stddev=0.01),
                                  bias_initializer=tf.constant_initializer(0.))(input_layer)
    if bn:
        conv = tf.keras.layers.BatchNormalization()(conv)
    if activate:
        if activate_type == "leaky":
            conv = tf.nn.leaky_relu(conv, alpha=0.1)
        elif activate_type == "mish":
            conv = mish(conv)
    return conv


def residual_block(input_layer, input_channel, filter_num1, filter_num2, activate_type='leaky'):
    short_cut = input_layer
    # Main branch: 1x1 conv reduces the channels, 3x3 conv restores them
    conv = convolutional(input_layer, filters_shape=(1, 1, input_channel, filter_num1), activate_type=activate_type)
    conv = convolutional(conv, filters_shape=(3, 3, filter_num1, filter_num2), activate_type=activate_type)
    # Shortcut is left untouched and added element-wise to the main branch
    residual_output = short_cut + conv
    return residual_output


def darknet53(input_layer):
    input_data = convolutional(input_layer, (3, 3, 3, 32))
    input_data = convolutional(input_data, (3, 3, 32, 64), downsample=True)      # 208x208x64
    for i in range(1):
        input_data = residual_block(input_data, 64, 32, 64)

    input_data = convolutional(input_data, (3, 3, 64, 128), downsample=True)     # 104x104x128
    for i in range(2):
        input_data = residual_block(input_data, 128, 64, 128)

    input_data = convolutional(input_data, (3, 3, 128, 256), downsample=True)    # 52x52x256
    for i in range(8):
        input_data = residual_block(input_data, 256, 128, 256)
    route_1 = input_data

    input_data = convolutional(input_data, (3, 3, 256, 512), downsample=True)    # 26x26x512
    for i in range(8):
        input_data = residual_block(input_data, 512, 256, 512)
    route_2 = input_data

    input_data = convolutional(input_data, (3, 3, 512, 1024), downsample=True)   # 13x13x1024
    for i in range(4):
        input_data = residual_block(input_data, 1024, 512, 1024)
    route_3 = input_data

    return route_1, route_2, route_3


def main():
    # Define the model input
    input_layer = tf.keras.layers.Input([416, 416, 3])
    route_1, route_2, route_3 = darknet53(input_layer)

    # Create the model; its outputs are the three effective feature layers
    model = keras.Model(inputs=input_layer, outputs=[route_1, route_2, route_3], name="Yolov3 Model")
    model.summary()

    # Run a random image through the backbone and print the feature-layer shapes
    X = tf.random.uniform([1, 416, 416, 3])
    for out in model(X):
        print(out.shape)


if __name__ == "__main__":
    main()
```

The output of model.summary() is shown below. It contains 52 convolutional layers (conv2d through conv2d_51).

```text
Model: "Yolov3 Model"
__________________________________________________________________________________________________
Layer (type)                                 Output Shape            Param #    Connected to
==================================================================================================
input_1 (InputLayer)                         [(None, 416, 416, 3)]   0          []
conv2d (Conv2D)                              (None, 416, 416, 32)    864        ['input_1[0][0]']
batch_normalization (BatchNormalization)     (None, 416, 416, 32)    128        ['conv2d[0][0]']
tf.nn.leaky_relu (TFOpLambda)                (None, 416, 416, 32)    0          ['batch_normalization[0][0]']
zero_padding2d (ZeroPadding2D)               (None, 417, 417, 32)    0          ['tf.nn.leaky_relu[0][0]']
conv2d_1 (Conv2D)                            (None, 208, 208, 64)    18432      ['zero_padding2d[0][0]']
batch_normalization_1 (BatchNormalization)   (None, 208, 208, 64)    256        ['conv2d_1[0][0]']
tf.nn.leaky_relu_1 (TFOpLambda)              (None, 208, 208, 64)    0          ['batch_normalization_1[0][0]']
conv2d_2 (Conv2D)                            (None, 208, 208, 32)    2048       ['tf.nn.leaky_relu_1[0][0]']
batch_normalization_2 (BatchNormalization)   (None, 208, 208, 32)    128        ['conv2d_2[0][0]']
tf.nn.leaky_relu_2 (TFOpLambda)              (None, 208, 208, 32)    0          ['batch_normalization_2[0][0]']
conv2d_3 (Conv2D)                            (None, 208, 208, 64)    18432      ['tf.nn.leaky_relu_2[0][0]']
batch_normalization_3 (BatchNormalization)   (None, 208, 208, 64)    256        ['conv2d_3[0][0]']
tf.nn.leaky_relu_3 (TFOpLambda)              (None, 208, 208, 64)    0          ['batch_normalization_3[0][0]']
tf.__operators__.add (TFOpLambda)            (None, 208, 208, 64)    0          ['tf.nn.leaky_relu_1[0][0]', 'tf.nn.leaky_relu_3[0][0]']
zero_padding2d_1 (ZeroPadding2D)             (None, 209, 209, 64)    0          ['tf.__operators__.add[0][0]']
conv2d_4 (Conv2D)                            (None, 104, 104, 128)   73728      ['zero_padding2d_1[0][0]']
batch_normalization_4 (BatchNormalization)   (None, 104, 104, 128)   512        ['conv2d_4[0][0]']
tf.nn.leaky_relu_4 (TFOpLambda)              (None, 104, 104, 128)   0          ['batch_normalization_4[0][0]']
conv2d_5 (Conv2D)                            (None, 104, 104, 64)    8192       ['tf.nn.leaky_relu_4[0][0]']
batch_normalization_5 (BatchNormalization)   (None, 104, 104, 64)    256        ['conv2d_5[0][0]']
tf.nn.leaky_relu_5 (TFOpLambda)              (None, 104, 104, 64)    0          ['batch_normalization_5[0][0]']
conv2d_6 (Conv2D)                            (None, 104, 104, 128)   73728      ['tf.nn.leaky_relu_5[0][0]']
batch_normalization_6 (BatchNormalization)   (None, 104, 104, 128)   512        ['conv2d_6[0][0]']
tf.nn.leaky_relu_6 (TFOpLambda)              (None, 104, 104, 128)   0          ['batch_normalization_6[0][0]']
tf.__operators__.add_1 (TFOpLambda)          (None, 104, 104, 128)   0          ['tf.nn.leaky_relu_4[0][0]', 'tf.nn.leaky_relu_6[0][0]']
conv2d_7 (Conv2D)                            (None, 104, 104, 64)    8192       ['tf.__operators__.add_1[0][0]']
batch_normalization_7 (BatchNormalization)   (None, 104, 104, 64)    256        ['conv2d_7[0][0]']
tf.nn.leaky_relu_7 (TFOpLambda)              (None, 104, 104, 64)    0          ['batch_normalization_7[0][0]']
conv2d_8 (Conv2D)                            (None, 104, 104, 128)   73728      ['tf.nn.leaky_relu_7[0][0]']
batch_normalization_8 (BatchNormalization)   (None, 104, 104, 128)   512        ['conv2d_8[0][0]']
tf.nn.leaky_relu_8 (TFOpLambda)              (None, 104, 104, 128)   0          ['batch_normalization_8[0][0]']
tf.__operators__.add_2 (TFOpLambda)          (None, 104, 104, 128)   0          ['tf.__operators__.add_1[0][0]', 'tf.nn.leaky_relu_8[0][0]']
zero_padding2d_2 (ZeroPadding2D)             (None, 105, 105, 128)   0          ['tf.__operators__.add_2[0][0]']
conv2d_9 (Conv2D)                            (None, 52, 52, 256)     294912     ['zero_padding2d_2[0][0]']
batch_normalization_9 (BatchNormalization)   (None, 52, 52, 256)     1024       ['conv2d_9[0][0]']
tf.nn.leaky_relu_9 (TFOpLambda)              (None, 52, 52, 256)     0          ['batch_normalization_9[0][0]']
conv2d_10 (Conv2D)                           (None, 52, 52, 128)     32768      ['tf.nn.leaky_relu_9[0][0]']
batch_normalization_10 (BatchNormalization)  (None, 52, 52, 128)     512        ['conv2d_10[0][0]']
tf.nn.leaky_relu_10 (TFOpLambda)             (None, 52, 52, 128)     0          ['batch_normalization_10[0][0]']
conv2d_11 (Conv2D)                           (None, 52, 52, 256)     294912     ['tf.nn.leaky_relu_10[0][0]']
batch_normalization_11 (BatchNormalization)  (None, 52, 52, 256)     1024       ['conv2d_11[0][0]']
tf.nn.leaky_relu_11 (TFOpLambda)             (None, 52, 52, 256)     0          ['batch_normalization_11[0][0]']
tf.__operators__.add_3 (TFOpLambda)          (None, 52, 52, 256)     0          ['tf.nn.leaky_relu_9[0][0]', 'tf.nn.leaky_relu_11[0][0]']
conv2d_12 (Conv2D)                           (None, 52, 52, 128)     32768      ['tf.__operators__.add_3[0][0]']
batch_normalization_12 (BatchNormalization)  (None, 52, 52, 128)     512        ['conv2d_12[0][0]']
tf.nn.leaky_relu_12 (TFOpLambda)             (None, 52, 52, 128)     0          ['batch_normalization_12[0][0]']
conv2d_13 (Conv2D)                           (None, 52, 52, 256)     294912     ['tf.nn.leaky_relu_12[0][0]']
batch_normalization_13 (BatchNormalization)  (None, 52, 52, 256)     1024       ['conv2d_13[0][0]']
tf.nn.leaky_relu_13 (TFOpLambda)             (None, 52, 52, 256)     0          ['batch_normalization_13[0][0]']
tf.__operators__.add_4 (TFOpLambda)          (None, 52, 52, 256)     0          ['tf.__operators__.add_3[0][0]', 'tf.nn.leaky_relu_13[0][0]']
conv2d_14 (Conv2D)                           (None, 52, 52, 128)     32768      ['tf.__operators__.add_4[0][0]']
batch_normalization_14 (BatchNormalization)  (None, 52, 52, 128)     512        ['conv2d_14[0][0]']
tf.nn.leaky_relu_14 (TFOpLambda)             (None, 52, 52, 128)     0          ['batch_normalization_14[0][0]']
conv2d_15 (Conv2D)                           (None, 52, 52, 256)     294912     ['tf.nn.leaky_relu_14[0][0]']
batch_normalization_15 (BatchNormalization)  (None, 52, 52, 256)     1024       ['conv2d_15[0][0]']
tf.nn.leaky_relu_15 (TFOpLambda)             (None, 52, 52, 256)     0          ['batch_normalization_15[0][0]']
tf.__operators__.add_5 (TFOpLambda)          (None, 52, 52, 256)     0          ['tf.__operators__.add_4[0][0]', 'tf.nn.leaky_relu_15[0][0]']
conv2d_16 (Conv2D)                           (None, 52, 52, 128)     32768      ['tf.__operators__.add_5[0][0]']
batch_normalization_16 (BatchNormalization)  (None, 52, 52, 128)     512        ['conv2d_16[0][0]']
tf.nn.leaky_relu_16 (TFOpLambda)             (None, 52, 52, 128)     0          ['batch_normalization_16[0][0]']
conv2d_17 (Conv2D)                           (None, 52, 52, 256)     294912     ['tf.nn.leaky_relu_16[0][0]']
batch_normalization_17 (BatchNormalization)  (None, 52, 52, 256)     1024       ['conv2d_17[0][0]']
tf.nn.leaky_relu_17 (TFOpLambda)             (None, 52, 52, 256)     0          ['batch_normalization_17[0][0]']
tf.__operators__.add_6 (TFOpLambda)          (None, 52, 52, 256)     0          ['tf.__operators__.add_5[0][0]', 'tf.nn.leaky_relu_17[0][0]']
conv2d_18 (Conv2D)                           (None, 52, 52, 128)     32768      ['tf.__operators__.add_6[0][0]']
batch_normalization_18 (BatchNormalization)  (None, 52, 52, 128)     512        ['conv2d_18[0][0]']
tf.nn.leaky_relu_18 (TFOpLambda)             (None, 52, 52, 128)     0          ['batch_normalization_18[0][0]']
conv2d_19 (Conv2D)                           (None, 52, 52, 256)     294912     ['tf.nn.leaky_relu_18[0][0]']
batch_normalization_19 (BatchNormalization)  (None, 52, 52, 256)     1024       ['conv2d_19[0][0]']
tf.nn.leaky_relu_19 (TFOpLambda)             (None, 52, 52, 256)     0          ['batch_normalization_19[0][0]']
tf.__operators__.add_7 (TFOpLambda)          (None, 52, 52, 256)     0          ['tf.__operators__.add_6[0][0]', 'tf.nn.leaky_relu_19[0][0]']
conv2d_20 (Conv2D)                           (None, 52, 52, 128)     32768      ['tf.__operators__.add_7[0][0]']
batch_normalization_20 (BatchNormalization)  (None, 52, 52, 128)     512        ['conv2d_20[0][0]']
tf.nn.leaky_relu_20 (TFOpLambda)             (None, 52, 52, 128)     0          ['batch_normalization_20[0][0]']
conv2d_21 (Conv2D)                           (None, 52, 52, 256)     294912     ['tf.nn.leaky_relu_20[0][0]']
batch_normalization_21 (BatchNormalization)  (None, 52, 52, 256)     1024       ['conv2d_21[0][0]']
tf.nn.leaky_relu_21 (TFOpLambda)             (None, 52, 52, 256)     0          ['batch_normalization_21[0][0]']
tf.__operators__.add_8 (TFOpLambda)          (None, 52, 52, 256)     0          ['tf.__operators__.add_7[0][0]', 'tf.nn.leaky_relu_21[0][0]']
conv2d_22 (Conv2D)                           (None, 52, 52, 128)     32768      ['tf.__operators__.add_8[0][0]']
batch_normalization_22 (BatchNormalization)  (None, 52, 52, 128)     512        ['conv2d_22[0][0]']
tf.nn.leaky_relu_22 (TFOpLambda)             (None, 52, 52, 128)     0          ['batch_normalization_22[0][0]']
conv2d_23 (Conv2D)                           (None, 52, 52, 256)     294912     ['tf.nn.leaky_relu_22[0][0]']
batch_normalization_23 (BatchNormalization)  (None, 52, 52, 256)     1024       ['conv2d_23[0][0]']
tf.nn.leaky_relu_23 (TFOpLambda)             (None, 52, 52, 256)     0          ['batch_normalization_23[0][0]']
tf.__operators__.add_9 (TFOpLambda)          (None, 52, 52, 256)     0          ['tf.__operators__.add_8[0][0]', 'tf.nn.leaky_relu_23[0][0]']
conv2d_24 (Conv2D)                           (None, 52, 52, 128)     32768      ['tf.__operators__.add_9[0][0]']
batch_normalization_24 (BatchNormalization)  (None, 52, 52, 128)     512        ['conv2d_24[0][0]']
tf.nn.leaky_relu_24 (TFOpLambda)             (None, 52, 52, 128)     0          ['batch_normalization_24[0][0]']
conv2d_25 (Conv2D)                           (None, 52, 52, 256)     294912     ['tf.nn.leaky_relu_24[0][0]']
batch_normalization_25 (BatchNormalization)  (None, 52, 52, 256)     1024       ['conv2d_25[0][0]']
tf.nn.leaky_relu_25 (TFOpLambda)             (None, 52, 52, 256)     0          ['batch_normalization_25[0][0]']
tf.__operators__.add_10 (TFOpLambda)         (None, 52, 52, 256)     0          ['tf.__operators__.add_9[0][0]', 'tf.nn.leaky_relu_25[0][0]']
zero_padding2d_3 (ZeroPadding2D)             (None, 53, 53, 256)     0          ['tf.__operators__.add_10[0][0]']
conv2d_26 (Conv2D)                           (None, 26, 26, 512)     1179648    ['zero_padding2d_3[0][0]']
batch_normalization_26 (BatchNormalization)  (None, 26, 26, 512)     2048       ['conv2d_26[0][0]']
tf.nn.leaky_relu_26 (TFOpLambda)             (None, 26, 26, 512)     0          ['batch_normalization_26[0][0]']
conv2d_27 (Conv2D)                           (None, 26, 26, 256)     131072     ['tf.nn.leaky_relu_26[0][0]']
batch_normalization_27 (BatchNormalization)  (None, 26, 26, 256)     1024       ['conv2d_27[0][0]']
tf.nn.leaky_relu_27 (TFOpLambda)             (None, 26, 26, 256)     0          ['batch_normalization_27[0][0]']
conv2d_28 (Conv2D)                           (None, 26, 26, 512)     1179648    ['tf.nn.leaky_relu_27[0][0]']
batch_normalization_28 (BatchNormalization)  (None, 26, 26, 512)     2048       ['conv2d_28[0][0]']
tf.nn.leaky_relu_28 (TFOpLambda)             (None, 26, 26, 512)     0          ['batch_normalization_28[0][0]']
tf.__operators__.add_11 (TFOpLambda)         (None, 26, 26, 512)     0          ['tf.nn.leaky_relu_26[0][0]', 'tf.nn.leaky_relu_28[0][0]']
conv2d_29 (Conv2D)                           (None, 26, 26, 256)     131072     ['tf.__operators__.add_11[0][0]']
batch_normalization_29 (BatchNormalization)  (None, 26, 26, 256)     1024       ['conv2d_29[0][0]']
tf.nn.leaky_relu_29 (TFOpLambda)             (None, 26, 26, 256)     0          ['batch_normalization_29[0][0]']
conv2d_30 (Conv2D)                           (None, 26, 26, 512)     1179648    ['tf.nn.leaky_relu_29[0][0]']
batch_normalization_30 (BatchNormalization)  (None, 26, 26, 512)     2048       ['conv2d_30[0][0]']
tf.nn.leaky_relu_30 (TFOpLambda)             (None, 26, 26, 512)     0          ['batch_normalization_30[0][0]']
tf.__operators__.add_12 (TFOpLambda)         (None, 26, 26, 512)     0          ['tf.__operators__.add_11[0][0]', 'tf.nn.leaky_relu_30[0][0]']
conv2d_31 (Conv2D)                           (None, 26, 26, 256)     131072     ['tf.__operators__.add_12[0][0]']
batch_normalization_31 (BatchNormalization)  (None, 26, 26, 256)     1024       ['conv2d_31[0][0]']
tf.nn.leaky_relu_31 (TFOpLambda)             (None, 26, 26, 256)     0          ['batch_normalization_31[0][0]']
conv2d_32 (Conv2D)                           (None, 26, 26, 512)     1179648    ['tf.nn.leaky_relu_31[0][0]']
batch_normalization_32 (BatchNormalization)  (None, 26, 26, 512)     2048       ['conv2d_32[0][0]']
tf.nn.leaky_relu_32 (TFOpLambda)             (None, 26, 26, 512)     0          ['batch_normalization_32[0][0]']
tf.__operators__.add_13 (TFOpLambda)         (None, 26, 26, 512)     0          ['tf.__operators__.add_12[0][0]', 'tf.nn.leaky_relu_32[0][0]']
conv2d_33 (Conv2D)                           (None, 26, 26, 256)     131072     ['tf.__operators__.add_13[0][0]']
batch_normalization_33 (BatchNormalization)  (None, 26, 26, 256)     1024       ['conv2d_33[0][0]']
tf.nn.leaky_relu_33 (TFOpLambda)             (None, 26, 26, 256)     0          ['batch_normalization_33[0][0]']
conv2d_34 (Conv2D)                           (None, 26, 26, 512)     1179648    ['tf.nn.leaky_relu_33[0][0]']
batch_normalization_34 (BatchNormalization)  (None, 26, 26, 512)     2048       ['conv2d_34[0][0]']
tf.nn.leaky_relu_34 (TFOpLambda)             (None, 26, 26, 512)     0          ['batch_normalization_34[0][0]']
tf.__operators__.add_14 (TFOpLambda)         (None, 26, 26, 512)     0          ['tf.__operators__.add_13[0][0]', 'tf.nn.leaky_relu_34[0][0]']
conv2d_35 (Conv2D)                           (None, 26, 26, 256)     131072     ['tf.__operators__.add_14[0][0]']
batch_normalization_35 (BatchNormalization)  (None, 26, 26, 256)     1024       ['conv2d_35[0][0]']
tf.nn.leaky_relu_35 (TFOpLambda)             (None, 26, 26, 256)     0          ['batch_normalization_35[0][0]']
conv2d_36 (Conv2D)                           (None, 26, 26, 512)     1179648    ['tf.nn.leaky_relu_35[0][0]']
batch_normalization_36 (BatchNormalization)  (None, 26, 26, 512)     2048       ['conv2d_36[0][0]']
tf.nn.leaky_relu_36 (TFOpLambda)             (None, 26, 26, 512)     0          ['batch_normalization_36[0][0]']
tf.__operators__.add_15 (TFOpLambda)         (None, 26, 26, 512)     0          ['tf.__operators__.add_14[0][0]', 'tf.nn.leaky_relu_36[0][0]']
conv2d_37 (Conv2D)                           (None, 26, 26, 256)     131072     ['tf.__operators__.add_15[0][0]']
batch_normalization_37 (BatchNormalization)  (None, 26, 26, 256)     1024       ['conv2d_37[0][0]']
tf.nn.leaky_relu_37 (TFOpLambda)             (None, 26, 26, 256)     0          ['batch_normalization_37[0][0]']
conv2d_38 (Conv2D)                           (None, 26, 26, 512)     1179648    ['tf.nn.leaky_relu_37[0][0]']
batch_normalization_38 (BatchNormalization)  (None, 26, 26, 512)     2048       ['conv2d_38[0][0]']
tf.nn.leaky_relu_38 (TFOpLambda)             (None, 26, 26, 512)     0          ['batch_normalization_38[0][0]']
tf.__operators__.add_16 (TFOpLambda)         (None, 26, 26, 512)     0          ['tf.__operators__.add_15[0][0]', 'tf.nn.leaky_relu_38[0][0]']
conv2d_39 (Conv2D)                           (None, 26, 26, 256)     131072     ['tf.__operators__.add_16[0][0]']
batch_normalization_39 (BatchNormalization)  (None, 26, 26, 256)     1024       ['conv2d_39[0][0]']
tf.nn.leaky_relu_39 (TFOpLambda)             (None, 26, 26, 256)     0          ['batch_normalization_39[0][0]']
conv2d_40 (Conv2D)                           (None, 26, 26, 512)     1179648    ['tf.nn.leaky_relu_39[0][0]']
batch_normalization_40 (BatchNormalization)  (None, 26, 26, 512)     2048       ['conv2d_40[0][0]']
tf.nn.leaky_relu_40 (TFOpLambda)             (None, 26, 26, 512)     0          ['batch_normalization_40[0][0]']
tf.__operators__.add_17 (TFOpLambda)         (None, 26, 26, 512)     0          ['tf.__operators__.add_16[0][0]', 'tf.nn.leaky_relu_40[0][0]']
conv2d_41 (Conv2D)                           (None, 26, 26, 256)     131072     ['tf.__operators__.add_17[0][0]']
batch_normalization_41 (BatchNormalization)  (None, 26, 26, 256)     1024       ['conv2d_41[0][0]']
tf.nn.leaky_relu_41 (TFOpLambda)             (None, 26, 26, 256)     0          ['batch_normalization_41[0][0]']
conv2d_42 (Conv2D)                           (None, 26, 26, 512)     1179648    ['tf.nn.leaky_relu_41[0][0]']
batch_normalization_42 (BatchNormalization)  (None, 26, 26, 512)     2048       ['conv2d_42[0][0]']
tf.nn.leaky_relu_42 (TFOpLambda)             (None, 26, 26, 512)     0          ['batch_normalization_42[0][0]']
tf.__operators__.add_18 (TFOpLambda)         (None, 26, 26, 512)     0          ['tf.__operators__.add_17[0][0]', 'tf.nn.leaky_relu_42[0][0]']
zero_padding2d_4 (ZeroPadding2D)             (None, 27, 27, 512)     0          ['tf.__operators__.add_18[0][0]']
conv2d_43 (Conv2D)                           (None, 13, 13, 1024)    4718592    ['zero_padding2d_4[0][0]']
batch_normalization_43 (BatchNormalization)  (None, 13, 13, 1024)    4096       ['conv2d_43[0][0]']
tf.nn.leaky_relu_43 (TFOpLambda)             (None, 13, 13, 1024)    0          ['batch_normalization_43[0][0]']
conv2d_44 (Conv2D)                           (None, 13, 13, 512)     524288     ['tf.nn.leaky_relu_43[0][0]']
batch_normalization_44 (BatchNormalization)  (None, 13, 13, 512)     2048       ['conv2d_44[0][0]']
tf.nn.leaky_relu_44 (TFOpLambda)             (None, 13, 13, 512)     0          ['batch_normalization_44[0][0]']
conv2d_45 (Conv2D)                           (None, 13, 13, 1024)    4718592    ['tf.nn.leaky_relu_44[0][0]']
batch_normalization_45 (BatchNormalization)  (None, 13, 13, 1024)    4096       ['conv2d_45[0][0]']
tf.nn.leaky_relu_45 (TFOpLambda)             (None, 13, 13, 1024)    0          ['batch_normalization_45[0][0]']
tf.__operators__.add_19 (TFOpLambda)         (None, 13, 13, 1024)    0          ['tf.nn.leaky_relu_43[0][0]', 'tf.nn.leaky_relu_45[0][0]']
conv2d_46 (Conv2D)                           (None, 13, 13, 512)     524288     ['tf.__operators__.add_19[0][0]']
batch_normalization_46 (BatchNormalization)  (None, 13, 13, 512)     2048       ['conv2d_46[0][0]']
tf.nn.leaky_relu_46 (TFOpLambda)             (None, 13, 13, 512)     0          ['batch_normalization_46[0][0]']
conv2d_47 (Conv2D)                           (None, 13, 13, 1024)    4718592    ['tf.nn.leaky_relu_46[0][0]']
batch_normalization_47 (BatchNormalization)  (None, 13, 13, 1024)    4096       ['conv2d_47[0][0]']
tf.nn.leaky_relu_47 (TFOpLambda)             (None, 13, 13, 1024)    0          ['batch_normalization_47[0][0]']
tf.__operators__.add_20 (TFOpLambda)         (None, 13, 13, 1024)    0          ['tf.__operators__.add_19[0][0]', 'tf.nn.leaky_relu_47[0][0]']
conv2d_48 (Conv2D)                           (None, 13, 13, 512)     524288     ['tf.__operators__.add_20[0][0]']
batch_normalization_48 (BatchNormalization)  (None, 13, 13, 512)     2048       ['conv2d_48[0][0]']
tf.nn.leaky_relu_48 (TFOpLambda)             (None, 13, 13, 512)     0          ['batch_normalization_48[0][0]']
conv2d_49 (Conv2D)                           (None, 13, 13, 1024)    4718592    ['tf.nn.leaky_relu_48[0][0]']
batch_normalization_49 (BatchNormalization)  (None, 13, 13, 1024)    4096       ['conv2d_49[0][0]']
tf.nn.leaky_relu_49 (TFOpLambda)             (None, 13, 13, 1024)    0          ['batch_normalization_49[0][0]']
tf.__operators__.add_21 (TFOpLambda)         (None, 13, 13, 1024)    0          ['tf.__operators__.add_20[0][0]', 'tf.nn.leaky_relu_49[0][0]']
conv2d_50 (Conv2D)                           (None, 13, 13, 512)     524288     ['tf.__operators__.add_21[0][0]']
batch_normalization_50 (BatchNormalization)  (None, 13, 13, 512)     2048       ['conv2d_50[0][0]']
tf.nn.leaky_relu_50 (TFOpLambda)             (None, 13, 13, 512)     0          ['batch_normalization_50[0][0]']
conv2d_51 (Conv2D)                           (None, 13, 13, 1024)    4718592    ['tf.nn.leaky_relu_50[0][0]']
batch_normalization_51 (BatchNormalization)  (None, 13, 13, 1024)    4096       ['conv2d_51[0][0]']
tf.nn.leaky_relu_51 (TFOpLambda)             (None, 13, 13, 1024)    0          ['batch_normalization_51[0][0]']
tf.__operators__.add_22 (TFOpLambda)         (None, 13, 13, 1024)    0          ['tf.__operators__.add_21[0][0]', 'tf.nn.leaky_relu_51[0][0]']
==================================================================================================
Total params: 40,620,640
Trainable params: 40,584,928
Non-trainable params: 35,712
__________________________________________________________________________________________________
```
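The three totals at the bottom of the summary can be cross-checked by hand. The sketch below rebuilds the list of (kernel, in_channels, out_channels) for every conv from the `darknet53()` code. It then assumes:

- no conv bias, since `use_bias=not bn`;
- four BatchNormalization values per channel: trainable `gamma`/`beta` plus non-trainable moving mean/variance.

The function names are hypothetical.

```python
def darknet53_convs():
    """(kernel, in_channels, out_channels) for every conv, in the order darknet53() builds them."""
    convs = [(3, 3, 32)]                                   # stem conv
    in_c = 32
    for out_c, repeats in zip([64, 128, 256, 512, 1024], [1, 2, 8, 8, 4]):
        convs.append((3, in_c, out_c))                     # stride-2 downsampling conv
        for _ in range(repeats):
            convs.append((1, out_c, out_c // 2))           # residual 1x1 conv
            convs.append((3, out_c // 2, out_c))           # residual 3x3 conv
        in_c = out_c
    return convs


def count_params(convs):
    """Return (total, trainable, non_trainable) parameter counts."""
    conv_weights = sum(k * k * cin * cout for k, cin, cout in convs)   # no bias terms
    bn_channels = sum(cout for _, _, cout in convs)
    trainable = conv_weights + 2 * bn_channels       # BN gamma and beta
    non_trainable = 2 * bn_channels                  # BN moving mean and moving variance
    return trainable + non_trainable, trainable, non_trainable


if __name__ == "__main__":
    convs = darknet53_convs()
    print(len(convs), count_params(convs))
```

This gives 52 convs and (40620640, 40584928, 35712), matching the summary. The non-trainable parameters are exactly the BatchNormalization moving statistics.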

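1.2: FPN:

To improve accuracy on small objects, YOLOv3 uses an FPN-like scheme of upsampling and fusion, and performs detection on feature maps at several scales. Three scales are fused in the end: besides 13×13, the other two are 26×26 and 52×52.

concat: tensor concatenation. An intermediate Darknet layer is concatenated with the upsampled output of a later layer. Concatenation is different from the add used in the residual blocks. Concatenation extends the channel dimension of the tensor, whereas add simply sums element-wise and leaves the tensor's shape unchanged.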
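The sketch below illustrates the upsample-and-fuse step on shapes. `upsample2x` is nearest-neighbour 2× upsampling on one 2-D channel, similar in effect to Keras' `UpSampling2D(2)` with its default nearest interpolation. `fpn_merge` computes the shape after three steps: a 1×1 conv on the deeper map, 2× upsampling, and concatenation with the lateral backbone map.

The channel counts used below are assumptions based on common Keras ports of YOLOv3, not taken from this article:

- the 13×13 branch is reduced to 256 channels before upsampling;
- the 26×26 branch is reduced to 128 channels before upsampling.

Both helpers are hypothetical.

```python
def upsample2x(grid):
    """Nearest-neighbour 2x upsampling of a 2-D grid (a single channel)."""
    out = []
    for row in grid:
        wide = [v for v in row for _ in range(2)]   # repeat each value horizontally
        out.append(wide)
        out.append(list(wide))                       # repeat each row vertically
    return out


def fpn_merge(deep, lateral, reduced_channels):
    """Shape after: 1x1 conv on `deep` to `reduced_channels`, 2x upsample, concat with `lateral`.

    The channel count of `deep` is irrelevant because the 1x1 conv replaces it.
    """
    h, w, _ = deep
    lh, lw, lc = lateral
    if (2 * h, 2 * w) != (lh, lw):
        raise ValueError("upsampled map must match the lateral map spatially")
    return (lh, lw, reduced_channels + lc)


if __name__ == "__main__":
    print(upsample2x([[1, 2], [3, 4]]))
    print(fpn_merge((13, 13, 512), (26, 26, 512), 256))   # fuse with route_2
    print(fpn_merge((26, 26, 256), (52, 52, 256), 128))   # fuse with route_1
```

Under these assumptions, the 26×26 fusion has 768 channels and the 52×52 fusion has 384. Further convs then reduce them to the (26,26,256) and (52,52,128) layers quoted in section 0.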
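To make the add/concat difference concrete, the sketch below stores a tiny feature map as a nested list `t[h][w][c]`. The residual-style add keeps the shape. The FPN-style concat stacks the channels of two maps with the same height and width. The functions are hypothetical helpers, not a framework API.

```python
def shape(t):
    """(H, W, C) of a nested-list feature map t[h][w][c]."""
    return (len(t), len(t[0]), len(t[0][0]))


def add(a, b):
    """Residual-style add: element-wise sum, shape unchanged."""
    if shape(a) != shape(b):
        raise ValueError("add requires identical shapes")
    return [[[x + y for x, y in zip(pa, pb)] for pa, pb in zip(ra, rb)] for ra, rb in zip(a, b)]


def concat(a, b):
    """FPN-style concat on the channel axis: H and W must match, channel counts are summed."""
    if shape(a)[:2] != shape(b)[:2]:
        raise ValueError("concat requires identical H and W")
    return [[pa + pb for pa, pb in zip(ra, rb)] for ra, rb in zip(a, b)]


if __name__ == "__main__":
    a = [[[1, 2], [3, 4]]]        # shape (1, 2, 2)
    b = [[[10, 20], [30, 40]]]    # shape (1, 2, 2)
    print(shape(add(a, b)), shape(concat(a, b)))
```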

Source: https://www.toymoban.com/news/detail-408644.html
