
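A typical task = pre-training + architecture + application

In this article, we combine BERT with different architectures on top of it (a plain linear layer, RNN, CNN, RCNN and DPCNN) to solve a text classification task.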

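For text classification with basic model architectures, without BERT, see this article:

Chinese Text Classification: PyTorch Implementations of Basic Models - 影子, Zhihu https://zhuanlan.zhihu.com/p/577121058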

BERT

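The simplest way to classify text with BERT is to add a single fully connected layer on top of BERT's last layer. In the code below, that layer takes the pooled output, i.e. the [CLS] vector after BERT's pooler layer. A pre-trained BERT model directory contains:

- BERT model configuration: config.json

- Pre-trained BERT weights: pytorch_model.bin

- Vocabulary: vocab.txt. Although (multilingual) BERT can handle more than a hundred languages, it still needs a vocabulary file to recognize the characters, subwords, or words of the languages it supports. Each line holds one token, and the line number is that token's ID.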
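To make the vocabulary format concrete, here is a minimal pure-Python sketch of how a vocab.txt file maps tokens to IDs: one token per line, with its 0-based line number as the ID. `parse_vocab` and `load_vocab` are hypothetical helpers for illustration only; in the code below, `BertTokenizer.from_pretrained` reads the file for you.

```python
def parse_vocab(lines):
    """Map each token to its 0-based line index, following the vocab.txt layout."""
    vocab = {}
    for index, line in enumerate(lines):
        vocab[line.strip()] = index  # strip the trailing newline
    return vocab


def load_vocab(vocab_path):
    """Read a vocab.txt file from disk (hypothetical helper)."""
    with open(vocab_path, encoding='utf-8') as f:
        return parse_vocab(f)


# Usage on an in-memory sample instead of a real file:
sample = ["[PAD]\n", "[UNK]\n", "[CLS]\n", "[SEP]\n", "我\n"]
print(parse_vocab(sample)["[CLS]"])
```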

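Preparing the input format

We need to convert the input data into the format BERT expects. For each token, BERT's input representation is the sum of three embeddings:

- Token embeddings: the embedding of each token. The first token must be [CLS]; its final hidden state serves as the semantic representation of the whole text and is used for tasks such as text classification.

- Segment embeddings: these distinguish two sentences from each other. In question answering, for example, the question and the answer are fed in together, so the model needs a way to tell them apart. Since this task is single-text classification, there is only one sentence, and every token belongs to the same segment.

- Position embeddings: these encode the position of each token in the sequence.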
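The preparation steps above can be sketched in plain Python. `build_input` is a hypothetical helper, not the library's tokenizer: it prepends [CLS], pads short inputs with [PAD] (ID 0 in BERT vocabularies) or truncates long ones to `pad_size`, and builds the attention mask and all-zero segment IDs. Its first three outputs line up with `x[0]` (token IDs), `x[1]` (length) and `x[2]` (mask) as used by the models below. Standard BERT inputs also end with a [SEP] token; this sketch only prepends [CLS], as described above. The models call `self.bert` without `token_type_ids`, so BertModel fills in zero segment IDs itself; they are returned here only for illustration.

```python
def build_input(tokens, vocab, pad_size=32):
    """Convert tokens into (token_ids, seq_len, mask, segment_ids) of length pad_size."""
    tokens = ['[CLS]'] + tokens  # [CLS] must be the first token
    unk_id = vocab['[UNK]']
    token_ids = [vocab.get(t, unk_id) for t in tokens]
    seq_len = len(token_ids)
    if seq_len < pad_size:
        # short input: pad with [PAD] and mark the padding with 0 in the mask
        mask = [1] * seq_len + [0] * (pad_size - seq_len)
        token_ids = token_ids + [vocab['[PAD]']] * (pad_size - seq_len)
    else:
        # long input: truncate to pad_size
        mask = [1] * pad_size
        token_ids = token_ids[:pad_size]
        seq_len = pad_size
    segment_ids = [0] * pad_size  # single sentence: every position is segment 0
    return token_ids, seq_len, mask, segment_ids


vocab = {'[PAD]': 0, '[UNK]': 1, '[CLS]': 2, '我': 3, '爱': 4}
print(build_input(['我', '爱', '你'], vocab, pad_size=6))
```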

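Choosing the optimizer

We split all of the network's weight parameters into groups and pass the list of groups to the optimizer, so that it knows which parameters to optimize and how: biases and LayerNorm parameters are trained without weight decay, and all other weights use a weight decay of 0.01. The optimizer used here is BertAdam, which warms the learning rate up linearly over the first 5% of the t_total training steps.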

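```python
from pytorch_pretrained_bert.optimization import BertAdam

# model, config and train_iter are provided by the surrounding training code
param_optimizer = list(model.named_parameters())
# Parameters whose names contain any of these strings are excluded from weight decay
no_decay = ['bias', 'LayerNorm.bias', 'LayerNorm.weight']
optimizer_grouped_parameters = [
    {'params': [p for n, p in param_optimizer if not any(nd in n for nd in no_decay)], 'weight_decay': 0.01},
    {'params': [p for n, p in param_optimizer if any(nd in n for nd in no_decay)], 'weight_decay': 0.0}]
# optimizer = torch.optim.Adam(model.parameters(), lr=config.learning_rate)
optimizer = BertAdam(optimizer_grouped_parameters,
                     lr=config.learning_rate,
                     warmup=0.05,  # fraction of t_total used for linear warmup
                     t_total=len(train_iter) * config.num_epochs)  # total number of optimization steps
```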
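To see what the grouping and the warmup do, here is a pure-Python sketch. `split_by_decay` applies the same substring test as the list comprehensions above to a few illustrative parameter names, and `warmup_linear` computes the learning-rate multiplier at a given fraction of training. Both names are hypothetical. The schedule shape is an assumption: it follows the `WarmupLinearSchedule` of pytorch_pretrained_bert 0.6.x (linear rise to 1.0 over the warmup fraction, then linear decay to 0), and other releases of the library may compute the decay slightly differently.

```python
NO_DECAY = ('bias', 'LayerNorm.bias', 'LayerNorm.weight')


def split_by_decay(param_names, no_decay=NO_DECAY):
    """Split parameter names into (with weight decay, without weight decay)."""
    decay, skip = [], []
    for name in param_names:
        if any(nd in name for nd in no_decay):
            skip.append(name)
        else:
            decay.append(name)
    return decay, skip


def warmup_linear(progress, warmup=0.05):
    """Learning-rate multiplier at progress = step / t_total (assumed schedule shape)."""
    if progress < warmup:
        return progress / warmup  # linear warmup from 0 to 1
    return max((1.0 - progress) / (1.0 - warmup), 0.0)  # linear decay from 1 to 0


names = ['bert.encoder.layer.0.attention.self.query.weight',
         'bert.encoder.layer.0.attention.output.LayerNorm.weight',
         'fc.weight', 'fc.bias']
print(split_by_decay(names))
print([round(warmup_linear(p), 3) for p in (0.0, 0.025, 0.05, 0.5, 1.0)])
```

At step `s`, the effective learning rate would then be `config.learning_rate * warmup_linear(s / t_total)`.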

Building the models

Basic Config settings

```python
import torch
from pytorch_pretrained_bert import BertTokenizer


class Config(object):
    """Configuration parameters"""

    def __init__(self, dataset):
        self.model_name = 'bert'
        self.train_path = dataset + '/data/train.txt'  # training set
        self.dev_path = dataset + '/data/dev.txt'  # validation set
        self.test_path = dataset + '/data/test.txt'  # test set
        with open(dataset + '/data/class.txt', encoding='utf-8') as f:
            self.class_list = [x.strip() for x in f.readlines()]  # class names
        self.save_path = dataset + '/saved_dict/' + self.model_name + '.ckpt'  # trained model checkpoint
        self.device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')  # device
        self.require_improvement = 1000  # stop training early if there is no improvement for 1000 batches
        self.num_classes = len(self.class_list)  # number of classes
        self.num_epochs = 3  # number of epochs
        self.batch_size = 128  # mini-batch size
        self.pad_size = 32  # every sentence is padded (if short) or truncated (if long) to this length
        self.learning_rate = 5e-5  # learning rate
        self.bert_path = './bert_pretrain'  # directory holding config.json, pytorch_model.bin and vocab.txt
        self.tokenizer = BertTokenizer.from_pretrained(self.bert_path)  # tokenizer built from vocab.txt
        self.hidden_size = 768  # hidden size of BERT-base
```
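The `require_improvement` setting above implements early stopping. Here is a minimal sketch of that logic with a hypothetical function name, assuming the validation loss is checked after every batch; the actual training loop may evaluate less often.

```python
def find_early_stop(dev_losses, require_improvement=1000):
    """Return (stop_batch, best_loss); stop_batch is None if training never stops early.

    dev_losses[i] is the validation loss measured after batch i.
    """
    best_loss = float('inf')
    last_improve = 0  # batch index of the last improvement
    for total_batch, loss in enumerate(dev_losses):
        if loss < best_loss:
            best_loss = loss
            last_improve = total_batch  # a real loop would also save the checkpoint here
        if total_batch - last_improve > require_improvement:
            return total_batch, best_loss
    return None, best_loss


print(find_early_stop([1.0, 0.9, 0.8] + [0.85] * 10, require_improvement=5))
```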

Building the BERT model

```python
import torch.nn as nn
from pytorch_pretrained_bert import BertModel


class Model(nn.Module):

    def __init__(self, config):
        super(Model, self).__init__()
        self.bert = BertModel.from_pretrained(config.bert_path)  # BERT architecture + pre-trained weights
        for param in self.bert.parameters():
            param.requires_grad = True  # fine-tune all BERT parameters
        self.fc = nn.Linear(config.hidden_size, config.num_classes)

    def forward(self, x):
        # x = (context, seq_len, mask) with shapes [batch_size, seq_len], [batch_size], [batch_size, seq_len]
        context = x[0]  # token IDs of the input sentences
        mask = x[2]  # attention mask: 1 for real tokens, 0 for padding
        _, pooled = self.bert(context, attention_mask=mask, output_all_encoded_layers=False)  # pooled: [batch_size, hidden_size]
        out = self.fc(pooled)  # out: [batch_size, num_classes]
        return out
```
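`out` holds one raw score (logit) per class; the predicted class is the index of the largest score, which can be mapped back to a name through `config.class_list`. In PyTorch this is `out.argmax(dim=1)`. The pure-Python sketch below spells out the same step; the function name, class names and scores are made up for illustration.

```python
def predict_labels(logits, class_list):
    """Map each row of logits [batch_size][num_classes] to its highest-scoring class name."""
    predictions = []
    for row in logits:
        best = max(range(len(row)), key=lambda i: row[i])  # argmax over classes
        predictions.append(class_list[best])
    return predictions


print(predict_labels([[0.1, 2.0, -1.0], [3.0, 0.0, 0.5]], ['finance', 'sports', 'tech']))
```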

BERT + RNN

```python
import torch
import torch.nn as nn
from pytorch_pretrained_bert import BertModel, BertTokenizer


class Config(object):
    """Configuration parameters"""

    def __init__(self, dataset):
        self.model_name = 'bert_RNN'  # distinct name so checkpoints of different models do not overwrite each other
        self.train_path = dataset + '/data/train.txt'  # training set
        self.dev_path = dataset + '/data/dev.txt'  # validation set
        self.test_path = dataset + '/data/test.txt'  # test set
        with open(dataset + '/data/class.txt', encoding='utf-8') as f:
            self.class_list = [x.strip() for x in f.readlines()]  # class names
        self.save_path = dataset + '/saved_dict/' + self.model_name + '.ckpt'  # trained model checkpoint
        self.device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')  # device
        self.require_improvement = 1000  # stop training early if there is no improvement for 1000 batches
        self.num_classes = len(self.class_list)  # number of classes
        self.num_epochs = 3  # number of epochs
        self.batch_size = 128  # mini-batch size
        self.pad_size = 32  # every sentence is padded (if short) or truncated (if long) to this length
        self.learning_rate = 5e-5  # learning rate
        self.bert_path = './bert_pretrain'
        self.tokenizer = BertTokenizer.from_pretrained(self.bert_path)
        self.hidden_size = 768
        self.dropout = 0.1
        self.rnn_hidden = 768
        self.num_layers = 2


class Model(nn.Module):

    def __init__(self, config):
        super(Model, self).__init__()
        self.bert = BertModel.from_pretrained(config.bert_path)
        for param in self.bert.parameters():
            param.requires_grad = True
        self.lstm = nn.LSTM(config.hidden_size, config.rnn_hidden, config.num_layers,
                            bidirectional=True, batch_first=True, dropout=config.dropout)
        self.dropout = nn.Dropout(config.dropout)
        self.fc_rnn = nn.Linear(config.rnn_hidden * 2, config.num_classes)

    def forward(self, x):
        # x = (context, seq_len, mask) with shapes [batch_size, seq_len], [batch_size], [batch_size, seq_len]
        context = x[0]  # token IDs of the input sentences
        mask = x[2]  # attention mask: 0 marks padding positions
        encoder_out, text_cls = self.bert(context, attention_mask=mask, output_all_encoded_layers=False)
        # encoder_out: [batch_size, seq_len, hidden_size], text_cls: [batch_size, hidden_size]
        out, _ = self.lstm(encoder_out)  # out: [batch_size, seq_len, rnn_hidden * 2]
        out = self.dropout(out)
        # output at the last time step (a padding position for sentences shorter than pad_size)
        out = self.fc_rnn(out[:, -1, :])  # out: [batch_size, num_classes]
        return out
```
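Because short sentences are padded to `pad_size`, `out[:, -1, :]` often reads the LSTM output at a padding position, and for the backward direction of the bidirectional LSTM that position has seen only one (padding) token. A common alternative, which is not what the code above does, is to pick each sequence's last real token using the mask. The pure-Python sketch below assumes each mask is a run of 1s followed by 0s, as produced by padding; the function names are hypothetical. In PyTorch the same selection can be written as `out[torch.arange(out.size(0)), mask.sum(1) - 1]`.

```python
def last_valid_positions(masks):
    """Index of the last real token in each mask (1s followed by 0s)."""
    return [sum(mask) - 1 for mask in masks]


def gather_last_valid(outputs, masks):
    """outputs: [batch][seq_len][features] -> [batch][features] at the last real token."""
    return [seq[idx] for seq, idx in zip(outputs, last_valid_positions(masks))]


outputs = [[[1], [2], [3]], [[4], [5], [6]]]
print(gather_last_valid(outputs, [[1, 1, 0], [1, 1, 1]]))
```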

BERT + CNN

```python
import torch
import torch.nn as nn
import torch.nn.functional as F
from pytorch_pretrained_bert import BertModel, BertTokenizer


class Config(object):
    """Configuration parameters"""

    def __init__(self, dataset):
        self.model_name = 'bert_CNN'  # distinct name so checkpoints of different models do not overwrite each other
        self.train_path = dataset + '/data/train.txt'  # training set
        self.dev_path = dataset + '/data/dev.txt'  # validation set
        self.test_path = dataset + '/data/test.txt'  # test set
        with open(dataset + '/data/class.txt', encoding='utf-8') as f:
            self.class_list = [x.strip() for x in f.readlines()]  # class names
        self.save_path = dataset + '/saved_dict/' + self.model_name + '.ckpt'  # trained model checkpoint
        self.device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')  # device
        self.require_improvement = 1000  # stop training early if there is no improvement for 1000 batches
        self.num_classes = len(self.class_list)  # number of classes
        self.num_epochs = 3  # number of epochs
        self.batch_size = 128  # mini-batch size
        self.pad_size = 32  # every sentence is padded (if short) or truncated (if long) to this length
        self.learning_rate = 5e-5  # learning rate
        self.bert_path = './bert_pretrain'
        self.tokenizer = BertTokenizer.from_pretrained(self.bert_path)
        self.hidden_size = 768
        self.filter_sizes = (2, 3, 4)  # convolution kernel sizes
        self.num_filters = 256  # number of filters per kernel size (output channels)
        self.dropout = 0.1


class Model(nn.Module):

    def __init__(self, config):
        super(Model, self).__init__()
        self.bert = BertModel.from_pretrained(config.bert_path)
        for param in self.bert.parameters():
            param.requires_grad = True
        self.convs = nn.ModuleList(
            [nn.Conv2d(1, config.num_filters, (k, config.hidden_size)) for k in config.filter_sizes])
        self.dropout = nn.Dropout(config.dropout)
        self.fc_cnn = nn.Linear(config.num_filters * len(config.filter_sizes), config.num_classes)

    def conv_and_pool(self, x, conv):
        x = F.relu(conv(x)).squeeze(3)  # x: [batch_size, num_filters, seq_len-k+1]
        x = F.max_pool1d(x, x.size(2)).squeeze(2)  # max over time: [batch_size, num_filters]
        return x

    def forward(self, x):
        # x = (context, seq_len, mask) with shapes [batch_size, seq_len], [batch_size], [batch_size, seq_len]
        context = x[0]  # token IDs of the input sentences
        mask = x[2]  # attention mask: 0 marks padding positions
        encoder_out, text_cls = self.bert(context, attention_mask=mask, output_all_encoded_layers=False)
        # encoder_out: [batch_size, seq_len, hidden_size], text_cls: [batch_size, hidden_size]
        out = encoder_out.unsqueeze(1)  # out: [batch_size, 1, seq_len, hidden_size]
        out = torch.cat([self.conv_and_pool(out, conv) for conv in self.convs], 1)  # out: [batch_size, num_filters * len(filter_sizes)]
        out = self.dropout(out)
        out = self.fc_cnn(out)  # out: [batch_size, num_classes]
        return out
```
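The shape comments above follow from simple arithmetic: a Conv2d with kernel `(k, hidden_size)` and no padding produces a `[seq_len - k + 1, 1]` map per filter, max-over-time pooling keeps one value per filter, and the pooled outputs of all kernel sizes are concatenated. This hypothetical helper reproduces those shapes for the config values used here.

```python
def text_cnn_shapes(batch_size, seq_len, hidden_size, filter_sizes, num_filters):
    """Return ({k: conv output shape}, shape after pooling and concatenation)."""
    conv_shapes = {}
    for k in filter_sizes:
        # kernel (k, hidden_size), stride 1, no padding: height seq_len-k+1, width 1
        conv_shapes[k] = (batch_size, num_filters, seq_len - k + 1, hidden_size - hidden_size + 1)
    # max-over-time pooling leaves one value per filter; kernel sizes are concatenated
    concat = (batch_size, num_filters * len(filter_sizes))
    return conv_shapes, concat


print(text_cnn_shapes(128, 32, 768, (2, 3, 4), 256))
```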

BERT + RCNN

```python
import torch
import torch.nn as nn
import torch.nn.functional as F
from pytorch_pretrained_bert import BertModel, BertTokenizer


class Config(object):
    """Configuration parameters"""

    def __init__(self, dataset):
        self.model_name = 'bert_RCNN'  # distinct name so checkpoints of different models do not overwrite each other
        self.train_path = dataset + '/data/train.txt'  # training set
        self.dev_path = dataset + '/data/dev.txt'  # validation set
        self.test_path = dataset + '/data/test.txt'  # test set
        with open(dataset + '/data/class.txt', encoding='utf-8') as f:
            self.class_list = [x.strip() for x in f.readlines()]  # class names
        self.save_path = dataset + '/saved_dict/' + self.model_name + '.ckpt'  # trained model checkpoint
        self.device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')  # device
        self.require_improvement = 1000  # stop training early if there is no improvement for 1000 batches
        self.num_classes = len(self.class_list)  # number of classes
        self.num_epochs = 3  # number of epochs
        self.batch_size = 128  # mini-batch size
        self.pad_size = 32  # every sentence is padded (if short) or truncated (if long) to this length
        self.learning_rate = 5e-5  # learning rate
        self.bert_path = './bert_pretrain'
        self.tokenizer = BertTokenizer.from_pretrained(self.bert_path)
        self.hidden_size = 768
        self.dropout = 0.1
        self.rnn_hidden = 256
        self.num_layers = 2


class Model(nn.Module):

    def __init__(self, config):
        super(Model, self).__init__()
        self.bert = BertModel.from_pretrained(config.bert_path)
        for param in self.bert.parameters():
            param.requires_grad = True
        self.lstm = nn.LSTM(config.hidden_size, config.rnn_hidden, config.num_layers,
                            bidirectional=True, batch_first=True, dropout=config.dropout)
        self.maxpool = nn.MaxPool1d(config.pad_size)  # pools over the whole sequence; assumes seq_len == pad_size
        self.fc = nn.Linear(config.rnn_hidden * 2 + config.hidden_size, config.num_classes)

    def forward(self, x):
        # x = (context, seq_len, mask) with shapes [batch_size, seq_len], [batch_size], [batch_size, seq_len]
        context = x[0]  # token IDs of the input sentences
        mask = x[2]  # attention mask: 0 marks padding positions
        encoder_out, text_cls = self.bert(context, attention_mask=mask, output_all_encoded_layers=False)
        # encoder_out: [batch_size, seq_len, hidden_size], text_cls: [batch_size, hidden_size]
        out, _ = self.lstm(encoder_out)  # out: [batch_size, seq_len, rnn_hidden * 2]
        out = torch.cat((encoder_out, out), 2)  # out: [batch_size, seq_len, hidden_size + rnn_hidden * 2]
        out = F.relu(out)
        out = out.permute(0, 2, 1)  # out: [batch_size, hidden_size + rnn_hidden * 2, seq_len]
        out = self.maxpool(out).squeeze(2)  # out: [batch_size, hidden_size + rnn_hidden * 2]
        out = self.fc(out)  # out: [batch_size, num_classes]
        return out
```
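For a single example, the RCNN head concatenates BERT's output and the BiLSTM's output at every time step, applies ReLU, and max-pools over time, giving a vector of size `hidden_size + rnn_hidden * 2` (768 + 512 = 1280 with the config above). A pure-Python sketch of that feature extraction on tiny made-up numbers, using a hypothetical function name:

```python
def rcnn_features(bert_out, lstm_out):
    """Features for ONE example.

    bert_out: seq_len rows of hidden_size floats (BERT encoder output)
    lstm_out: seq_len rows of 2 * rnn_hidden floats (BiLSTM output)
    Returns hidden_size + 2 * rnn_hidden floats.
    """
    # concatenate along the feature axis at each time step (BERT first), then ReLU
    combined = [[max(v, 0.0) for v in b + l] for b, l in zip(bert_out, lstm_out)]
    # max-pool over the time axis: keep the largest value of each feature
    return [max(step[j] for step in combined) for j in range(len(combined[0]))]


print(rcnn_features([[1.0, -2.0], [0.5, 3.0]], [[-1.0], [2.0]]))
```

Note that `nn.MaxPool1d(config.pad_size)` only collapses the time axis to length 1 when every batch is padded to exactly `pad_size`.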

BERT + DPCNN

```python
import torch
import torch.nn as nn
import torch.nn.functional as F
from pytorch_pretrained_bert import BertModel, BertTokenizer


class Config(object):
    """Configuration parameters"""

    def __init__(self, dataset):
        self.model_name = 'bert_DPCNN'  # distinct name so checkpoints of different models do not overwrite each other
        self.train_path = dataset + '/data/train.txt'  # training set
        self.dev_path = dataset + '/data/dev.txt'  # validation set
        self.test_path = dataset + '/data/test.txt'  # test set
        with open(dataset + '/data/class.txt', encoding='utf-8') as f:
            self.class_list = [x.strip() for x in f.readlines()]  # class names
        self.save_path = dataset + '/saved_dict/' + self.model_name + '.ckpt'  # trained model checkpoint
        self.device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')  # device
        self.require_improvement = 1000  # stop training early if there is no improvement for 1000 batches
        self.num_classes = len(self.class_list)  # number of classes
        self.num_epochs = 3  # number of epochs
        self.batch_size = 128  # mini-batch size
        self.pad_size = 32  # every sentence is padded (if short) or truncated (if long) to this length
        self.learning_rate = 5e-5  # learning rate
        self.bert_path = './bert_pretrain'
        self.tokenizer = BertTokenizer.from_pretrained(self.bert_path)
        self.hidden_size = 768
        self.num_filters = 250  # number of convolution filters (channels)


class Model(nn.Module):

    def __init__(self, config):
        super(Model, self).__init__()
        self.bert = BertModel.from_pretrained(config.bert_path)
        for param in self.bert.parameters():
            param.requires_grad = True
        # self.fc = nn.Linear(config.hidden_size, config.num_classes)
        self.conv_region = nn.Conv2d(1, config.num_filters, (3, config.hidden_size), stride=1)
        self.conv = nn.Conv2d(config.num_filters, config.num_filters, (3, 1), stride=1)  # reused by every conv step
        self.max_pool = nn.MaxPool2d(kernel_size=(3, 1), stride=2)
        self.padding1 = nn.ZeroPad2d((0, 0, 1, 1))  # pad one row at the top and bottom
        self.padding2 = nn.ZeroPad2d((0, 0, 0, 1))  # pad one row at the bottom
        self.relu = nn.ReLU()
        self.fc = nn.Linear(config.num_filters, config.num_classes)

    def forward(self, x):
        context = x[0]  # token IDs of the input sentences
        mask = x[2]  # same size as context; padding positions are 0, e.g. [1, 1, 1, 1, 0, 0]
        encoder_out, text_cls = self.bert(context, attention_mask=mask, output_all_encoded_layers=False)
        # encoder_out: [batch_size, seq_len, hidden_size], text_cls: [batch_size, hidden_size]
        x = encoder_out.unsqueeze(1)  # x: [batch_size, 1, seq_len, hidden_size]
        x = self.conv_region(x)  # x: [batch_size, num_filters, seq_len-3+1, 1]
        x = self.padding1(x)  # x: [batch_size, num_filters, seq_len, 1]
        x = self.relu(x)
        x = self.conv(x)  # x: [batch_size, num_filters, seq_len-3+1, 1]
        x = self.padding1(x)  # x: [batch_size, num_filters, seq_len, 1]
        x = self.relu(x)
        x = self.conv(x)  # x: [batch_size, num_filters, seq_len-3+1, 1]
        while x.size()[2] > 2:
            x = self._block(x)  # each block halves the height; for seq_len=32: 30 -> 15 -> 7 -> 3 -> 1
        x = x.squeeze(3).squeeze(2)  # x: [batch_size, num_filters]; keeps the batch dim even if batch_size == 1
        x = self.fc(x)  # x: [batch_size, num_classes]
        return x

    def _block(self, x):
        x = self.padding2(x)
        px = self.max_pool(x)  # downsample: height h -> h // 2
        x = self.padding1(px)
        x = F.relu(x)
        x = self.conv(x)
        x = self.padding1(x)
        x = F.relu(x)
        x = self.conv(x)
        x = x + px  # shortcut (residual) connection
        return x
```
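DPCNN's sequence height follows a fixed pattern: the region convolution shortens it by 2, each `padding1` + `conv` pair keeps it unchanged, and each block (`padding2` followed by max pooling with kernel 3 and stride 2) halves it, rounding down. The hypothetical helper below traces the heights produced by `forward`. With `pad_size = 32` they go 30, 15, 7, 3, 1, so the final squeeze yields `[batch_size, num_filters]`; with some other pad sizes (12, for example) the loop stops at height 2 and the output would no longer match `self.fc`.

```python
def dpcnn_heights(seq_len):
    """Trace the height (sequence axis) of x through Model.forward."""
    h = seq_len - 3 + 1  # conv_region: kernel height 3, no padding
    h = h + 2 - 3 + 1    # padding1 (+1 top, +1 bottom), then conv: unchanged
    h = h + 2 - 3 + 1    # padding1, then conv again: unchanged
    heights = [h]
    while h > 2:
        h = (h + 1 - 3) // 2 + 1  # padding2 (+1 bottom), then MaxPool2d kernel 3, stride 2
        # inside _block, the two padding1 + conv pairs keep h unchanged, so the shortcut add works
        heights.append(h)
    return heights


print(dpcnn_heights(32))
print(dpcnn_heights(12))
```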

参考资料

使用BERT构建文本分类模型 - 知乎 (zhihu.com)

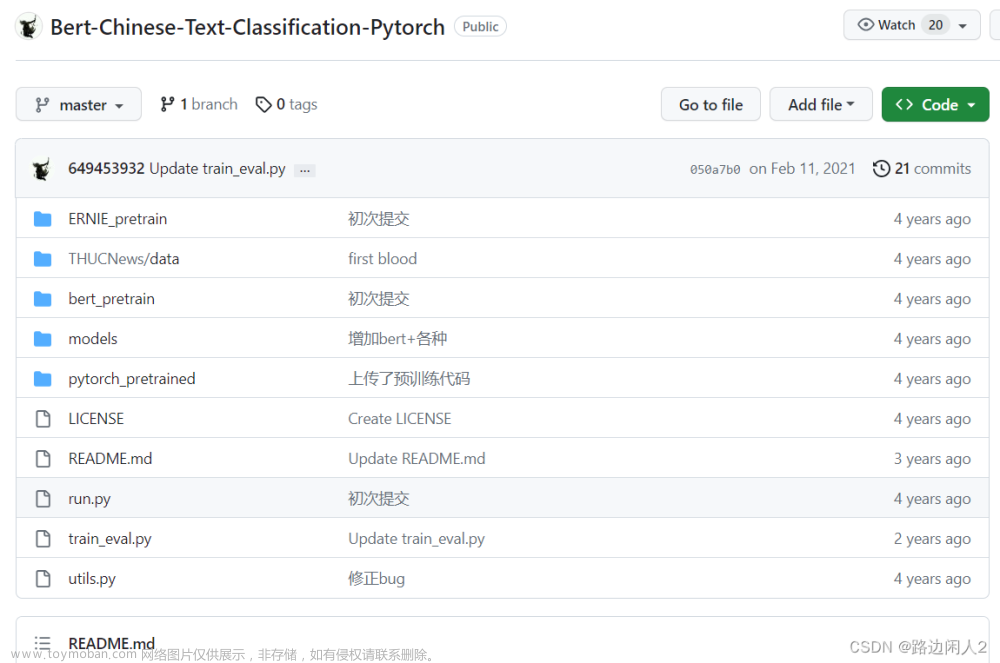

649453932/Bert-Chinese-Text-Classification-Pytorch: 使用Bert,ERNIE,进行中文文本分类 (github.com)文章来源:https://www.toymoban.com/news/detail-411989.html

李沐-动手深度学习文章来源地址https://www.toymoban.com/news/detail-411989.html
