Preface

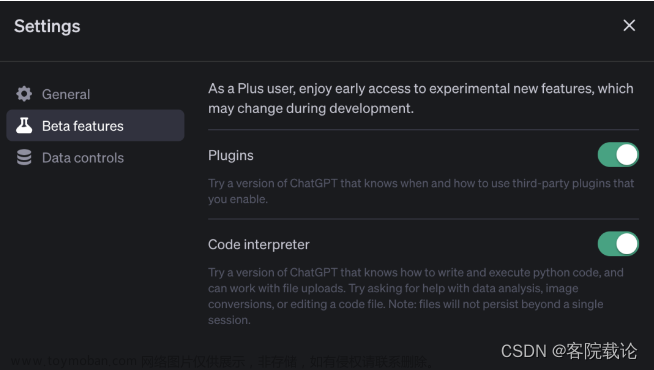

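ChatGPT is hugely popular, but how do we actually put the model to use? Just chat with it and have it answer questions?

How can we build on top of it? Can it, like New Bing, search for information and then reply based on what it finds? Can it even operate a robot for you by following your instructions?

LangChain's Agent module makes all of this possible. But how does an Agent use an LLM to reason and interact with the outside world? This article explains it by walking through the source code.

The source code below is from LangChain version 0.0.117, the latest release at the time of writing.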

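Use case

Application code

In this example, we give the agent a tool that searches Elasticsearch (ES), which gives the agent the ability to use an external tool. I also enabled verbose output, so the full reasoning process is visible.

The example exists only to illustrate the process; the answers it produces are not particularly accurate.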

```python
import os

os.environ["OPENAI_API_KEY"] = "xxxxxxxxxx"

import requests

from langchain.memory import ConversationBufferMemory
from langchain.chat_models import ChatOpenAI
# Import things that are needed generically
from langchain.agents import initialize_agent
from langchain.tools import BaseTool


class CustomSearchTool(BaseTool):
    name = "Search"
    description = "useful for when you need to answer questions about current events"

    def _run(self, query: str) -> str:
        """Use the tool."""
        auth = ("elastic", "xxxx")
        session = requests.Session()
        # Ignore proxy settings from the environment when talking to the local ES
        session.trust_env = False
        ret = session.get("http://127.0.0.1:9200/summary_section/_search", auth=auth, json={
            "query": {
                "multi_match": {
                    "query": query,
                    "fields": ["content", "title"]
                }
            },
            "size": 5
        })
        print(ret.text)
        if ret.status_code != 200 or ret.json().get("hits").get("total").get('value') == 0:
            return "No good search result found"
        else:
            retstr = ""
            for hit in ret.json().get("hits").get('hits'):
                # "标题" = title, "内容" = content
                retstr += f"标题: {hit.get('_source').get('title')} 内容: {hit.get('_source').get('content')}\n"
            return retstr

    async def _arun(self, query: str) -> str:
        """Use the tool asynchronously."""
        raise NotImplementedError("CustomSearchTool does not support async")


tools = [
    CustomSearchTool()
]

memory = ConversationBufferMemory(memory_key="chat_history", return_messages=True)
llm = ChatOpenAI(temperature=0)
agent_chain = initialize_agent(tools, llm, agent="chat-conversational-react-description", agent_kwargs={"verbose": True}, verbose=True, memory=memory)
agent_chain.agent.llm_chain.verbose = True
# "Which industry has better prospects: cleaning services or TMT?"
agent_chain.run(input="清洁和TMT行业哪个行业更利好")
```
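The string-building part of _run can be tried without a running Elasticsearch instance. The sketch below pulls it into a standalone function, format_hits (a hypothetical helper, not part of LangChain), and feeds it a hand-written dict shaped like an Elasticsearch _search response (hits.total.value and hits.hits[*]._source). That response shape is an assumption about the index used above; the HTTP status check stays in _run.

```python
from typing import Any, Dict


def format_hits(resp: Dict[str, Any]) -> str:
    """Turn an Elasticsearch-style _search response into the text the agent sees.

    Hypothetical helper mirroring the loop in CustomSearchTool._run; the
    response shape (hits.total.value, hits.hits[*]._source) is assumed.
    """
    hits = resp.get("hits", {})
    if hits.get("total", {}).get("value", 0) == 0:
        return "No good search result found"
    lines = []
    for hit in hits.get("hits", []):
        source = hit.get("_source", {})
        # Same "标题 (title) / 内容 (content)" layout as the tool above
        lines.append(f"标题: {source.get('title')} 内容: {source.get('content')}")
    # The original appends "\n" after every hit, including the last one
    return "\n".join(lines) + "\n"


fake_response = {
    "hits": {
        "total": {"value": 1},
        "hits": [{"_source": {"title": "T1", "content": "C1"}}],
    }
}
print(format_hits(fake_response))
```

Keeping the formatting in a pure function like this makes it easy to check what the LLM will actually receive as the observation.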

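Full output

Statements that trigger execution

I first exchanged two messages with it, to test the agent's memory:

```python
agent_chain.run("我是赖赖")  # "I am Lailai"
agent_chain.run("我是谁")    # "Who am I?"
```

Only then did I ask the industry question:

```python
# "Which industry has better prospects: cleaning services or TMT?"
agent_chain.run("清洁和TMT行业哪个行业更利好")
```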

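Below is the detailed log output for the industry question, with explanations.

Round 1 of reasoning

In the first round, my question, the tool descriptions, and the conversation history are assembled into a prompt and sent to OpenAI: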

````text
> Entering new AgentExecutor chain...

> Entering new LLMChain chain...
Prompt after formatting:
System: Assistant is a large language model trained by OpenAI.

Assistant is designed to be able to assist with a wide range of tasks, from answering simple questions to providing in-depth explanations and discussions on a wide range of topics. As a language model, Assistant is able to generate human-like text based on the input it receives, allowing it to engage in natural-sounding conversations and provide responses that are coherent and relevant to the topic at hand.

Assistant is constantly learning and improving, and its capabilities are constantly evolving. It is able to process and understand large amounts of text, and can use this knowledge to provide accurate and informative responses to a wide range of questions. Additionally, Assistant is able to generate its own text based on the input it receives, allowing it to engage in discussions and provide explanations and descriptions on a wide range of topics.

Overall, Assistant is a powerful system that can help with a wide range of tasks and provide valuable insights and information on a wide range of topics. Whether you need help with a specific question or just want to have a conversation about a particular topic, Assistant is here to assist.
Human: 我是赖赖
AI: 你好,赖赖!有什么我可以帮助你的吗?
Human: 我是谁?
AI: 你是赖赖,这是你在之前的提问中提到的。
Human: TOOLS
------
Assistant can ask the user to use tools to look up information that may be helpful in answering the users original question. The tools the human can use are:

> Search: useful for when you need to answer questions about current events

RESPONSE FORMAT INSTRUCTIONS
----------------------------

When responding to me please, please output a response in one of two formats:

**Option 1:**
Use this if you want the human to use a tool.
Markdown code snippet formatted in the following schema:

```json
{
"action": string \ The action to take. Must be one of Search
"action_input": string \ The input to the action
}
```

**Option #2:**
Use this if you want to respond directly to the human. Markdown code snippet formatted in the following schema:

```json
{
"action": "Final Answer",
"action_input": string \ You should put what you want to return to use here
}
```

USER'S INPUT
--------------------
Here is the user's input (remember to respond with a markdown code snippet of a json blob with a single action, and NOTHING else):

清洁和TMT行业哪个行业更利好
````

The LLM's output for this round is:

````text
> Finished chain.
```json
{
"action": "Search",
"action_input": "清洁和TMT行业哪个行业更利好"
}
```
````

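The agent then uses this action to call the tool directly and fetch data. The observation is the raw search result from the ES index (Chinese articles on TMT investing and on the growth of China's cleaning-services industry):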

```text
Observation: 标题: 5G时代下科技股该如何投资2 内容: 2022年TMT领域热点频出,如信创、数字经济以及引发全民关注的源宇宙等。同时A股震荡格局下,TMT行业细分赛道持续轮动表现可圈可点。2023年TMT行业的发展趋势如何?又有何投资机会?
标题: 中国清洁服务的增长逻辑 内容: 作为一个传统的劳动密集型的行业,清洁服务也会面临着因为人口老龄化而逐渐失去竞争力,清洁服务行业需要一个转型的契机,届时机械化、一体化、市场化,这三个方面将是解决问题的一个关键。用机械来代替人力,将有效的提高了清洁服务的一个工作效率,而一体化的作业模式将加强企业对于项目的管理以及作业的监管,提高一个清洁服务的一个工作效率,市场化将会让更多企业参与到竞争当中,提升清洁服务的企业的服务水平。随着这三大趋势的随着这三大趋势越来越明显,未来清洁服务行业竞争将会更愈发的激烈,如何做好更精细化的管理,以及应用更先进的一些机械化的设备,将大家大了行业的准入门槛,但是这也促进了整个行业的规范化以及技术的发展。
标题: 5G时代下科技股该如何投资 内容: 最后我们还是落到TMT的行业方面。
标题: 天融信 内容: 实际上应该说它应该目前来看,还真的没有说哪个行业是所以我们自己实际卖的来看,就卖了一个多亿来看,这些行业没有一个太大的特点。
```

Round 2 of reasoning

The results returned by the tool are inserted into the prompt:

````text
Thought:
> Entering new LLMChain chain...
Prompt after formatting:
System: Assistant is a large language model trained by OpenAI.

Assistant is designed to be able to assist with a wide range of tasks, from answering simple questions to providing in-depth explanations and discussions on a wide range of topics. As a language model, Assistant is able to generate human-like text based on the input it receives, allowing it to engage in natural-sounding conversations and provide responses that are coherent and relevant to the topic at hand.

Assistant is constantly learning and improving, and its capabilities are constantly evolving. It is able to process and understand large amounts of text, and can use this knowledge to provide accurate and informative responses to a wide range of questions. Additionally, Assistant is able to generate its own text based on the input it receives, allowing it to engage in discussions and provide explanations and descriptions on a wide range of topics.

Overall, Assistant is a powerful system that can help with a wide range of tasks and provide valuable insights and information on a wide range of topics. Whether you need help with a specific question or just want to have a conversation about a particular topic, Assistant is here to assist.
Human: 我是赖赖
AI: 你好,赖赖!有什么我可以帮助你的吗?
Human: 我是谁?
AI: 你是赖赖,这是你在之前的提问中提到的。
Human: TOOLS
------
Assistant can ask the user to use tools to look up information that may be helpful in answering the users original question. The tools the human can use are:

> Search: useful for when you need to answer questions about current events

RESPONSE FORMAT INSTRUCTIONS
----------------------------

When responding to me please, please output a response in one of two formats:

**Option 1:**
Use this if you want the human to use a tool.
Markdown code snippet formatted in the following schema:

```json
{
"action": string \ The action to take. Must be one of Search
"action_input": string \ The input to the action
}
```

**Option #2:**
Use this if you want to respond directly to the human. Markdown code snippet formatted in the following schema:

```json
{
"action": "Final Answer",
"action_input": string \ You should put what you want to return to use here
}
```

USER'S INPUT
--------------------
Here is the user's input (remember to respond with a markdown code snippet of a json blob with a single action, and NOTHING else):

清洁和TMT行业哪个行业更利好
AI: ```json
{
"action": "Search",
"action_input": "清洁和TMT行业哪个行业更利好"
}
```
Human: TOOL RESPONSE:
---------------------
标题: 5G时代下科技股该如何投资2 内容: 2022年TMT领域热点频出,如信创、数字经济以及引发全民关注的源宇宙等。同时A股震荡格局下,TMT行业细分赛道持续轮动表现可圈可点。2023年TMT行业的发展趋势如何?又有何投资机会?
标题: 中国清洁服务的增长逻辑 内容: 作为一个传统的劳动密集型的行业,清洁服务也会面临着因为人口老龄化而逐渐失去竞争力,清洁服务行业需要一个转型的契机,届时机械化、一体化、市场化,这三个方面将是解决问题的一个关键。用机械来代替人力,将有效的提高了清洁服务的一个工作效率,而一体化的作业模式将加强企业对于项目的管理以及作业的监管,提高一个清洁服务的一个工作效率,市场化将会让更多企业参与到竞争当中,提升清洁服务的企业的服务水平。随着这三大趋势的随着这三大趋势越来越明显,未来清洁服务行业竞争将会更愈发的激烈,如何做好更精细化的管理,以及应用更先进的一些机械化的设备,将大家大了行业的准入门槛,但是这也促进了整个行业的规范化以及技术的发展。
标题: 5G时代下科技股该如何投资 内容: 最后我们还是落到TMT的行业方面。
标题: 天融信 内容: 实际上应该说它应该目前来看,还真的没有说哪个行业是所以我们自己实际卖的来看,就卖了一个多亿来看,这些行业没有一个太大的特点。

USER'S INPUT
--------------------

Okay, so what is the response to my original question? If using information from tools, you must say it explicitly - I have forgotten all TOOL RESPONSES! Remember to respond with a markdown code snippet of a json blob with a single action, and NOTHING else.
````

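This time the result is a Final Answer, which is output directly. (The answer reads: "Based on the information provided, the TMT industry may have better development prospects in the future.")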

````text
> Finished chain.
```json
{
"action": "Final Answer",
"action_input": "根据提供的信息,TMT行业在未来可能会有更好的发展前景。"
}
```

> Finished chain.
Out[17]: '根据提供的信息,TMT行业在未来可能会有更好的发展前景。'
````

Source code analysis

Call stack and core logic

Since the example calls agent_chain.run directly, we start from how agent_chain is constructed.

Initializing the AgentExecutor

```python
def initialize_agent(
    tools: Sequence[BaseTool],
    llm: BaseLLM,
    agent: Optional[str] = None,
    callback_manager: Optional[BaseCallbackManager] = None,
    agent_path: Optional[str] = None,
    agent_kwargs: Optional[dict] = None,
    **kwargs: Any,
) -> AgentExecutor:
```

initialize_agent first produces an AgentExecutor.

Here it creates agent_obj (the Agent) and passes the tools in along with it:

```python
return AgentExecutor.from_agent_and_tools(
    agent=agent_obj,
    tools=tools,
    callback_manager=callback_manager,
    **kwargs,
)
```

Here callback_manager falls back to the default one, obtained from langchain.callbacks:

```python
def get_callback_manager() -> BaseCallbackManager:
    """Return the shared callback manager."""
    return SharedCallbackManager()
```
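SharedCallbackManager is meant to be one process-wide instance, so every call to get_callback_manager() hands back the same object. A minimal sketch of that singleton idea, assuming a __new__-based implementation guarded by a lock (LangChain's actual mechanism may differ); SharedManagerSketch and get_manager are hypothetical names.

```python
import threading


class SharedManagerSketch:
    """Hypothetical stand-in for a shared (singleton) callback manager."""

    _instance = None
    _lock = threading.Lock()

    def __new__(cls):
        # Create the instance only once, even if called from several threads
        with cls._lock:
            if cls._instance is None:
                cls._instance = super().__new__(cls)
                cls._instance.handlers = []
        return cls._instance


def get_manager() -> SharedManagerSketch:
    return SharedManagerSketch()


m1 = get_manager()
m1.handlers.append("stdout")
m2 = get_manager()
print(m1 is m2, m2.handlers)  # True ['stdout']
```

The practical consequence is that a handler registered anywhere (for example the stdout handler that prints the verbose logs above) is seen by every chain that uses the default manager.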

AgentExecutor run()

Call chain

AgentExecutor.run() calls __call__() (through self(...)):

```python
if args and not kwargs:
    if len(args) != 1:
        raise ValueError("`run` supports only one positional argument.")
    return self(args[0])[self.output_keys[0]]

if kwargs and not args:
    return self(kwargs)[self.output_keys[0]]
```
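The dispatch in run boils down to: accept exactly one positional argument or keyword arguments, call the chain, and pick out the single output key. A toy chain (the hypothetical EchoChain, not LangChain code) makes the behaviour concrete. The final raise for mixing both styles is an assumption, since the excerpt above stops before that branch, and the wrapping of a bare value into a dict stands in for prep_inputs.

```python
from typing import Any, Dict, List


class EchoChain:
    """Toy chain illustrating the run() -> __call__() -> _call() layering."""

    input_keys: List[str] = ["input"]
    output_keys: List[str] = ["output"]

    def _call(self, inputs: Dict[str, Any]) -> Dict[str, Any]:
        return {"output": f"echo: {inputs['input']}"}

    def __call__(self, inputs: Any) -> Dict[str, Any]:
        # A bare value is wrapped into a dict keyed by the single input key
        if not isinstance(inputs, dict):
            inputs = {self.input_keys[0]: inputs}
        outputs = self._call(inputs)
        return {**inputs, **outputs}

    def run(self, *args: Any, **kwargs: Any) -> str:
        if args and not kwargs:
            if len(args) != 1:
                raise ValueError("`run` supports only one positional argument.")
            return self(args[0])[self.output_keys[0]]
        if kwargs and not args:
            return self(kwargs)[self.output_keys[0]]
        # Assumed behaviour for mixed or empty arguments
        raise ValueError("pass either one positional argument or keyword arguments")


chain = EchoChain()
print(chain.run("hi"))        # echo: hi
print(chain.run(input="hi"))  # echo: hi
```

This is why agent_chain.run("我是赖赖") and agent_chain.run(input="...") in the example behave the same way.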

__call__() calls _call():

```python
inputs = self.prep_inputs(inputs)
self.callback_manager.on_chain_start(
    {"name": self.__class__.__name__},
    inputs,
    verbose=self.verbose,
)
try:
    outputs = self._call(inputs)
except (KeyboardInterrupt, Exception) as e:
    self.callback_manager.on_chain_error(e, verbose=self.verbose)
    raise e
self.callback_manager.on_chain_end(outputs, verbose=self.verbose)
return self.prep_outputs(inputs, outputs, return_only_outputs)
```
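The pattern in __call__ is: notify start, run _call, report any exception before re-raising it, then notify end and merge inputs with outputs. A self-contained sketch with a hypothetical RecordingCallbacks class that records events instead of printing them; call_with_callbacks is likewise a hypothetical name.

```python
from typing import Any, Callable, Dict, List


class RecordingCallbacks:
    """Hypothetical callback manager that records events instead of printing."""

    def __init__(self) -> None:
        self.events: List[str] = []

    def on_chain_start(self, name: str) -> None:
        self.events.append(f"start:{name}")

    def on_chain_end(self, outputs: Dict[str, Any]) -> None:
        self.events.append("end")

    def on_chain_error(self, error: BaseException) -> None:
        self.events.append(f"error:{type(error).__name__}")


def call_with_callbacks(name: str, body: Callable[[Dict[str, Any]], Dict[str, Any]],
                        inputs: Dict[str, Any], cb: RecordingCallbacks) -> Dict[str, Any]:
    cb.on_chain_start(name)
    try:
        outputs = body(inputs)
    except (KeyboardInterrupt, Exception) as e:
        # Report first, then re-raise so the caller still sees the failure
        cb.on_chain_error(e)
        raise
    cb.on_chain_end(outputs)
    return {**inputs, **outputs}


cb = RecordingCallbacks()
print(call_with_callbacks("Demo", lambda d: {"out": d["x"] * 2}, {"x": 21}, cb))
print(cb.events)  # ['start:Demo', 'end']
```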

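The core logic of AgentExecutor.run: _call()

_call() holds the core code behind AgentExecutor.run(). It loops, feeding the growing intermediate_steps back in on every iteration, until the agent returns an AgentFinish (or the iteration limit checked by _should_continue is reached).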

```python
def _call(self, inputs: Dict[str, str]) -> Dict[str, Any]:
    """Run text through and get agent response."""
    # Do any preparation necessary when receiving a new input.
    self.agent.prepare_for_new_call()
    # Construct a mapping of tool name to tool for easy lookup
    name_to_tool_map = {tool.name: tool for tool in self.tools}
    # We construct a mapping from each tool to a color, used for logging.
    color_mapping = get_color_mapping(
        [tool.name for tool in self.tools], excluded_colors=["green"]
    )
    intermediate_steps: List[Tuple[AgentAction, str]] = []
    # Let's start tracking the iterations the agent has gone through
    iterations = 0
    # We now enter the agent loop (until it returns something).
    while self._should_continue(iterations):
        next_step_output = self._take_next_step(
            name_to_tool_map, color_mapping, inputs, intermediate_steps
        )
        if isinstance(next_step_output, AgentFinish):
            return self._return(next_step_output, intermediate_steps)

        intermediate_steps.append(next_step_output)
        # See if tool should return directly
        tool_return = self._get_tool_return(next_step_output)
        if tool_return is not None:
            return self._return(tool_return, intermediate_steps)
        iterations += 1
    output = self.agent.return_stopped_response(
        self.early_stopping_method, intermediate_steps, **inputs
    )
    return self._return(output, intermediate_steps)
```

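Each entry in intermediate_steps is an (AgentAction, str) tuple, i.e. an action plus the observation it produced, where AgentAction is defined as: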

```python
class AgentAction(NamedTuple):
    """Agent's action to take."""

    tool: str
    tool_input: str
    log: str
```
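Stripped of callbacks and colours, _call is a bounded loop: plan, stop on a finish, otherwise run the tool and append (action, observation) to the history. The sketch below replays the two rounds from the log with a scripted planner instead of an LLM. Action, Finish, run_agent, the default of 15 iterations and the stop message are hypothetical simplifications, not LangChain's classes, and the return_direct shortcut is omitted.

```python
from typing import Callable, Dict, List, NamedTuple, Tuple, Union


class Action(NamedTuple):
    tool: str
    tool_input: str
    log: str


class Finish(NamedTuple):
    return_values: Dict[str, str]
    log: str


Step = Tuple[Action, str]
Planner = Callable[[List[Step], str], Union[Action, Finish]]


def run_agent(plan: Planner, tools: Dict[str, Callable[[str], str]],
              user_input: str, max_iterations: int = 15) -> Tuple[str, List[Step]]:
    intermediate_steps: List[Step] = []
    for _ in range(max_iterations):
        decision = plan(intermediate_steps, user_input)
        if isinstance(decision, Finish):
            return decision.return_values["output"], intermediate_steps
        observation = tools[decision.tool](decision.tool_input)
        intermediate_steps.append((decision, observation))
    # Hypothetical message; plays the role of return_stopped_response
    return "Agent stopped due to max iterations.", intermediate_steps


def scripted_plan(steps: List[Step], question: str) -> Union[Action, Finish]:
    # Round 1: no observations yet -> search; round 2: answer from the observation
    if not steps:
        return Action("Search", question, log='{"action": "Search", ...}')
    return Finish({"output": f"answer based on: {steps[-1][1]}"}, log="")


answer, steps = run_agent(scripted_plan, {"Search": lambda q: f"results for {q}"}, "TMT?")
print(answer)      # answer based on: results for TMT?
print(len(steps))  # 1
```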

The core of AgentExecutor._call(): _take_next_step

The call site in _call():

```python
next_step_output = self._take_next_step(
    name_to_tool_map, color_mapping, inputs, intermediate_steps
)
```

The method is defined as follows:

```python
def _take_next_step(
    self,
    name_to_tool_map: Dict[str, BaseTool],
    color_mapping: Dict[str, str],
    inputs: Dict[str, str],
    intermediate_steps: List[Tuple[AgentAction, str]],
) -> Union[AgentFinish, Tuple[AgentAction, str]]:
    """Take a single step in the thought-action-observation loop.

    Override this to take control of how the agent makes and acts on choices.
    """
    # Call the LLM to see what to do.
    output = self.agent.plan(intermediate_steps, **inputs)
    # If the tool chosen is the finishing tool, then we end and return.
    if isinstance(output, AgentFinish):
        return output
    self.callback_manager.on_agent_action(
        output, verbose=self.verbose, color="green"
    )
    # Otherwise we lookup the tool
    if output.tool in name_to_tool_map:
        tool = name_to_tool_map[output.tool]
        return_direct = tool.return_direct
        color = color_mapping[output.tool]
        llm_prefix = "" if return_direct else self.agent.llm_prefix
        # We then call the tool on the tool input to get an observation
        observation = tool.run(
            output.tool_input,
            verbose=self.verbose,
            color=color,
            llm_prefix=llm_prefix,
            observation_prefix=self.agent.observation_prefix,
        )
    else:
        observation = InvalidTool().run(
            output.tool,
            verbose=self.verbose,
            color=None,
            llm_prefix="",
            observation_prefix=self.agent.observation_prefix,
        )
    return output, observation
```

Through the call to self.agent.plan, this method finally hands the work over to the agent:

```python
output = self.agent.plan(intermediate_steps, **inputs)
```
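The tool dispatch at the end of _take_next_step is a dictionary lookup with a fallback: an unknown tool name does not crash the loop but produces an observation telling the LLM the tool is invalid, which the model then sees on the next round. A sketch with the hypothetical function dispatch_tool; the wording of the fallback message here is illustrative, not a quote of InvalidTool.

```python
from typing import Callable, Dict, Tuple


def dispatch_tool(tool_name: str, tool_input: str,
                  name_to_tool_map: Dict[str, Callable[[str], str]]) -> Tuple[str, str]:
    """Return (tool_name, observation), falling back to an 'invalid tool' observation."""
    if tool_name in name_to_tool_map:
        observation = name_to_tool_map[tool_name](tool_input)
    else:
        # Illustrative wording; the point is that the error becomes an observation
        observation = f"{tool_name} is not a valid tool, try another one."
    return tool_name, observation


tools = {"Search": lambda q: f"hits for {q}"}
print(dispatch_tool("Search", "TMT", tools))  # ('Search', 'hits for TMT')
print(dispatch_tool("Calculator", "1+1", tools))
```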

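The Agent plan method

The result of this method decides whether a tool gets called or a final answer is returned.

That result is exactly what we saw in the log above: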

```json
{
    "action": "Search",
    "action_input": "清洁和TMT行业哪个行业更利好"
}
```

```python
def plan(
    self, intermediate_steps: List[Tuple[AgentAction, str]], **kwargs: Any
) -> Union[AgentAction, AgentFinish]:
    """Given input, decided what to do.

    Args:
        intermediate_steps: Steps the LLM has taken to date,
            along with observations
        **kwargs: User inputs.

    Returns:
        Action specifying what tool to use.
    """
    full_inputs = self.get_full_inputs(intermediate_steps, **kwargs)
    action = self._get_next_action(full_inputs)
    if action.tool == self.finish_tool_name:
        return AgentFinish({"output": action.tool_input}, action.log)
    return action
```

The key call inside this method is _get_next_action(full_inputs).
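Apart from building the inputs, plan makes only one decision: if the parsed action names the finish tool ("Final Answer", as in Option #2 of the prompt), it wraps action_input into an AgentFinish; otherwise the action is passed through. A standalone sketch with hypothetical Action and Finish types and a hypothetical to_plan_result function:

```python
from typing import Dict, NamedTuple, Union

FINISH_TOOL_NAME = "Final Answer"  # matches Option #2 in the prompt


class Action(NamedTuple):
    tool: str
    tool_input: str
    log: str


class Finish(NamedTuple):
    return_values: Dict[str, str]
    log: str


def to_plan_result(action: Action) -> Union[Action, Finish]:
    if action.tool == FINISH_TOOL_NAME:
        # The answer text becomes the chain's "output" value
        return Finish({"output": action.tool_input}, action.log)
    return action


final = to_plan_result(Action("Final Answer", "TMT looks better", log="..."))
print(final.return_values["output"])  # TMT looks better
```

In other words, "Final Answer" is treated as a pseudo-tool, which lets one JSON schema cover both tool calls and final replies.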

The input to _get_next_action

The full_inputs built in plan are crucial: they are the core material from which the prompt is assembled.

```python
def get_full_inputs(
    self, intermediate_steps: List[Tuple[AgentAction, str]], **kwargs: Any
) -> Dict[str, Any]:
    """Create the full inputs for the LLMChain from intermediate steps."""
    thoughts = self._construct_scratchpad(intermediate_steps)
    new_inputs = {"agent_scratchpad": thoughts, "stop": self._stop}
    full_inputs = {**kwargs, **new_inputs}
    return full_inputs


def _construct_scratchpad(
    self, intermediate_steps: List[Tuple[AgentAction, str]]
) -> List[BaseMessage]:
    """Construct the scratchpad that lets the agent continue its thought process."""
    thoughts: List[BaseMessage] = []
    for action, observation in intermediate_steps:
        thoughts.append(AIMessage(content=action.log))
        human_message = HumanMessage(
            content=TEMPLATE_TOOL_RESPONSE.format(observation=observation)
        )
        thoughts.append(human_message)
    return thoughts
```

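full_inputs is a dict: the user inputs plus two extra keys, agent_scratchpad and stop. stop holds the stop sequence(s) derived from the "Observation:" prefix. It is not a template variable: prep_prompts (shown below) pulls it out and passes it to the LLM, so that generation halts before the model starts writing an observation of its own.

agent_scratchpad is a list of alternating AIMessage and HumanMessage objects.

The action.log inside each AIMessage is the LLM's raw reply, which follows this schema from the prompt: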

```json
{
    "action": string \ The action to take. Must be one of Search
    "action_input": string \ The input to the action
}
```

The HumanMessage is the observation formatted with TEMPLATE_TOOL_RESPONSE, which is defined as:

```python
TEMPLATE_TOOL_RESPONSE = """TOOL RESPONSE:
---------------------
{observation}

USER'S INPUT
--------------------

Okay, so what is the response to my original question? If using information from tools, you must say it explicitly - I have forgotten all TOOL RESPONSES! Remember to respond with a markdown code snippet of a json blob with a single action, and NOTHING else."""
```
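To see what _construct_scratchpad feeds back to the model, here is a dependency-free version that uses (role, content) tuples in place of LangChain's AIMessage/HumanMessage objects, and takes (llm_log, observation) pairs instead of (AgentAction, str); those substitutions are the simplification. Each step contributes two messages: the raw LLM reply, then the observation wrapped in the tool-response template, abbreviated here.

```python
from typing import List, Tuple

# Abbreviated copy of TEMPLATE_TOOL_RESPONSE (the closing instruction is shortened)
TOOL_RESPONSE_TEMPLATE = """TOOL RESPONSE:
---------------------
{observation}

USER'S INPUT
--------------------

Okay, so what is the response to my original question?"""


def construct_scratchpad(steps: List[Tuple[str, str]]) -> List[Tuple[str, str]]:
    """steps holds (llm_log, observation) pairs; returns alternating ai/human messages."""
    thoughts: List[Tuple[str, str]] = []
    for log, observation in steps:
        thoughts.append(("ai", log))
        thoughts.append(("human", TOOL_RESPONSE_TEMPLATE.format(observation=observation)))
    return thoughts


pad = construct_scratchpad([('{"action": "Search", "action_input": "TMT"}', "标题: ...")])
for role, content in pad:
    print(role, "|", content.splitlines()[0])
```

On the first round the scratchpad is empty, which is why the round-1 prompt in the log ends at the user's question, while the round-2 prompt ends with an "AI:" reply followed by a "Human: TOOL RESPONSE:" message.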

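The _get_next_action method

_get_next_action is the core function called by plan.

It simply calls the llm_chain's predict, so at this point control passes to the llm_chain. If the reply cannot be parsed into a tool and an input, it keeps asking the LLM to continue until it can: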

```python
def _get_next_action(self, full_inputs: Dict[str, str]) -> AgentAction:
    full_output = self.llm_chain.predict(**full_inputs)
    parsed_output = self._extract_tool_and_input(full_output)
    while parsed_output is None:
        full_output = self._fix_text(full_output)
        full_inputs["agent_scratchpad"] += full_output
        output = self.llm_chain.predict(**full_inputs)
        full_output += output
        parsed_output = self._extract_tool_and_input(full_output)
    return AgentAction(
        tool=parsed_output[0], tool_input=parsed_output[1], log=full_output
    )
```
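_extract_tool_and_input (not shown in this article) turns the raw reply into (tool, tool_input); for this agent the reply is a JSON blob wrapped in a markdown json code fence, as in the log above. The sketch below is a hypothetical parser written for illustration, not LangChain's AgentOutputParser: it strips an optional fence and reads the two keys with json.loads. Unlike the loop above, which expects None on failure, it simply raises on malformed output.

```python
import json
import re
from typing import Tuple

FENCE = "`" * 3  # a markdown code fence, built this way to keep the example readable


def parse_agent_reply(text: str) -> Tuple[str, str]:
    """Hypothetical parser: return (action, action_input) from a fenced JSON reply."""
    cleaned = text.strip()
    # Remove an opening fence (optionally tagged "json") and a closing fence, if present
    cleaned = re.sub("^" + FENCE + r"(?:json)?\s*", "", cleaned)
    cleaned = re.sub(r"\s*" + FENCE + "$", "", cleaned)
    data = json.loads(cleaned)
    return data["action"], data["action_input"]


reply = FENCE + 'json\n{\n"action": "Search",\n"action_input": "清洁和TMT行业哪个行业更利好"\n}\n' + FENCE
print(parse_agent_reply(reply))  # ('Search', '清洁和TMT行业哪个行业更利好')
```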

predict in LLMChain

```python
def predict(self, **kwargs: Any) -> str:
    """Format prompt with kwargs and pass to LLM.

    Args:
        **kwargs: Keys to pass to prompt template.

    Returns:
        Completion from LLM.

    Example:
        .. code-block:: python

            completion = llm.predict(adjective="funny")
    """
    return self(kwargs)[self.output_key]
```

The call chain here is __call__() followed by _call() (the standard Chain flow), and _call() in turn calls apply() (specific to LLMChain):

```python
def apply(self, input_list: List[Dict[str, Any]]) -> List[Dict[str, str]]:
    """Utilize the LLM generate method for speed gains."""
    response = self.generate(input_list)
    return self.create_outputs(response)
```

which then calls:

```python
def generate(self, input_list: List[Dict[str, Any]]) -> LLMResult:
    """Generate LLM result from inputs."""
    prompts, stop = self.prep_prompts(input_list)
    return self.llm.generate_prompt(prompts, stop)
```

generate simply hands the prompts (and the stop sequences) to the LLM.

The heart of generate is assembling the prompts, so prep_prompts is the key method:

```python
def prep_prompts(
    self, input_list: List[Dict[str, Any]]
) -> Tuple[List[PromptValue], Optional[List[str]]]:
    """Prepare prompts from inputs."""
    stop = None
    if "stop" in input_list[0]:
        stop = input_list[0]["stop"]
    prompts = []
    for inputs in input_list:
        selected_inputs = {k: inputs[k] for k in self.prompt.input_variables}
        prompt = self.prompt.format_prompt(**selected_inputs)
        _colored_text = get_colored_text(prompt.to_string(), "green")
        _text = "Prompt after formatting:\n" + _colored_text
        self.callback_manager.on_text(_text, end="\n", verbose=self.verbose)
        if "stop" in inputs and inputs["stop"] != stop:
            raise ValueError(
                "If `stop` is present in any inputs, should be present in all."
            )
        prompts.append(prompt)
    return prompts, stop
```
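The two jobs of prep_prompts are worth separating: keep only the keys the template declares, and lift stop out of the inputs so it travels to the LLM as a stop sequence instead of being rendered into the text. A sketch in which a plain str.format template stands in for LangChain's prompt objects (prep_prompts_sketch is a hypothetical name):

```python
from typing import Any, Dict, List, Optional, Tuple


def prep_prompts_sketch(template: str, input_variables: List[str],
                        input_list: List[Dict[str, Any]]) -> Tuple[List[str], Optional[List[str]]]:
    stop = input_list[0].get("stop")
    prompts = []
    for inputs in input_list:
        # Only declared variables reach the template; "stop" is filtered out here
        selected = {k: inputs[k] for k in input_variables}
        if "stop" in inputs and inputs["stop"] != stop:
            raise ValueError("If `stop` is present in any inputs, should be present in all.")
        prompts.append(template.format(**selected))
    return prompts, stop


prompts, stop = prep_prompts_sketch(
    "Question: {input}", ["input"],
    [{"input": "TMT?", "stop": ["\nObservation:"]}],
)
print(prompts, stop)
```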

The core of prep_prompts is format_prompt:

```python
def format_prompt(self, **kwargs: Any) -> PromptValue:
    kwargs = self._merge_partial_and_user_variables(**kwargs)
    result = []
    for message_template in self.messages:
        if isinstance(message_template, BaseMessage):
            result.extend([message_template])
        elif isinstance(message_template, BaseMessagePromptTemplate):
            rel_params = {
                k: v
                for k, v in kwargs.items()
                if k in message_template.input_variables
            }
            message = message_template.format_messages(**rel_params)
            result.extend(message)
        else:
            raise ValueError(f"Unexpected input: {message_template}")
    return ChatPromptValue(messages=result)
```
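format_prompt walks the message templates in order: fixed messages are copied, templates are filled with the variables they need, and placeholders such as chat_history and agent_scratchpad are expanded into whole lists of messages. A toy version with hypothetical classes (Template, Placeholder) and (role, text) tuples; unlike the original it passes all kwargs to each template and relies on str.format ignoring the unused ones.

```python
from dataclasses import dataclass
from typing import Any, List, Tuple, Union

Message = Tuple[str, str]  # (role, text)


@dataclass
class Template:
    role: str
    text: str

    def format_messages(self, **kwargs: Any) -> List[Message]:
        return [(self.role, self.text.format(**kwargs))]


@dataclass
class Placeholder:
    variable_name: str

    def format_messages(self, **kwargs: Any) -> List[Message]:
        # The variable already holds a list of messages; splice it in as-is
        return list(kwargs.get(self.variable_name, []))


def format_prompt(messages: List[Union[Template, Placeholder]], **kwargs: Any) -> List[Message]:
    result: List[Message] = []
    for m in messages:
        result.extend(m.format_messages(**kwargs))
    return result


msgs = format_prompt(
    [Template("system", "You are helpful."), Placeholder("chat_history"),
     Template("human", "{input}"), Placeholder("agent_scratchpad")],
    input="哪个行业更利好",
    chat_history=[("human", "我是赖赖"), ("ai", "你好")],
    agent_scratchpad=[],
)
for role, text in msgs:
    print(f"{role}: {text}")
```

The ordering (system, history, human question, scratchpad) is the same one create_prompt uses, which explains the layout of the prompts in the logs.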

So the crux is how the prompt is constructed, and for that we need to trace back to where it is built.

How the prompt is constructed

Construction path

AgentExecutor → Agent → LLMChain → LLM

Let's follow the path step by step.

initialize_agent, which builds the AgentExecutor, first creates the agent object:

```python
agent_obj = agent_cls.from_llm_and_tools(
    llm, tools, callback_manager=callback_manager, **agent_kwargs
)
```

The from_llm_and_tools method constructs the Agent, and the prompt is built here as well:

```python
@classmethod
def from_llm_and_tools(
    cls,
    llm: BaseLanguageModel,
    tools: Sequence[BaseTool],
    callback_manager: Optional[BaseCallbackManager] = None,
    system_message: str = PREFIX,
    human_message: str = SUFFIX,
    input_variables: Optional[List[str]] = None,
    output_parser: Optional[BaseOutputParser] = None,
    **kwargs: Any,
) -> Agent:
    """Construct an agent from an LLM and tools."""
    cls._validate_tools(tools)
    _output_parser = output_parser or AgentOutputParser()
    prompt = cls.create_prompt(
        tools,
        system_message=system_message,
        human_message=human_message,
        input_variables=input_variables,
        output_parser=_output_parser,
    )
    llm_chain = LLMChain(
        llm=llm,
        prompt=prompt,
        callback_manager=callback_manager,
    )
    tool_names = [tool.name for tool in tools]
    return cls(
        llm_chain=llm_chain,
        allowed_tools=tool_names,
        output_parser=_output_parser,
        **kwargs,
    )
```

This is where the LLMChain is constructed and the prompt is created:

```python
llm_chain = LLMChain(
    llm=llm,
    prompt=prompt,
    callback_manager=callback_manager,
)

prompt = cls.create_prompt(
    tools,
    system_message=system_message,
    human_message=human_message,
    input_variables=input_variables,
    output_parser=_output_parser,
)
```

With that, the LLMChain is fully constructed.

create_prompt builds the prompt from the templates the agent supplies:

```python
@classmethod
def create_prompt(
    cls,
    tools: Sequence[BaseTool],
    system_message: str = PREFIX,
    human_message: str = SUFFIX,
    input_variables: Optional[List[str]] = None,
    output_parser: Optional[BaseOutputParser] = None,
) -> BasePromptTemplate:
    tool_strings = "\n".join(
        [f"> {tool.name}: {tool.description}" for tool in tools]
    )
    tool_names = ", ".join([tool.name for tool in tools])
    _output_parser = output_parser or AgentOutputParser()
    format_instructions = human_message.format(
        format_instructions=_output_parser.get_format_instructions()
    )
    final_prompt = format_instructions.format(
        tool_names=tool_names, tools=tool_strings
    )
    if input_variables is None:
        input_variables = ["input", "chat_history", "agent_scratchpad"]
    messages = [
        SystemMessagePromptTemplate.from_template(system_message),
        MessagesPlaceholder(variable_name="chat_history"),
        HumanMessagePromptTemplate.from_template(final_prompt),
        MessagesPlaceholder(variable_name="agent_scratchpad"),
    ]
    return ChatPromptTemplate(input_variables=input_variables, messages=messages)
```

It builds the ChatPromptTemplate directly from these four message templates:

```python
messages = [
    SystemMessagePromptTemplate.from_template(system_message),
    MessagesPlaceholder(variable_name="chat_history"),
    HumanMessagePromptTemplate.from_template(final_prompt),
    MessagesPlaceholder(variable_name="agent_scratchpad"),
]
```
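The doubled and quadrupled braces in the human-message template only make sense once you count the formatting passes: create_prompt calls .format twice (first format_instructions, then tool_names and tools), and the chat prompt template fills {input} a third time. Each pass turns {{ into {. Judging from the human_message.format(format_instructions=...) call, the real SUFFIX presumably contains a {format_instructions} placeholder into which the response-format instructions are spliced, while the expanded SUFFIX shown further down has them inlined. The check below uses shortened stand-in templates (not the real strings) and assumes the third pass behaves like str.format.

```python
# Pass 1: SUFFIX.format(format_instructions=...)
# Pass 2: .format(tool_names=..., tools=...)
# Pass 3: the chat prompt template fills {input}
FORMAT_INSTRUCTIONS = 'Must be one of {tool_names}\n{{{{"action": "Final Answer"}}}}'
SUFFIX = "Tools:\n{{tools}}\n\n{format_instructions}\n\nInput: {{{{input}}}}"

# The instructions are inserted as a value, so their braces are untouched in pass 1
pass1 = SUFFIX.format(format_instructions=FORMAT_INSTRUCTIONS)
pass2 = pass1.format(tool_names="Search", tools="> Search: useful for ...")
pass3 = pass2.format(input="清洁和TMT行业哪个行业更利好")
print(pass3)
```

After the third pass the JSON example in the instructions is left with single braces, which is exactly what the model sees in the logged prompt.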

The ChatPromptTemplate, expanded

SystemMessage

```python
PREFIX = """Assistant is a large language model trained by OpenAI.

Assistant is designed to be able to assist with a wide range of tasks, from answering simple questions to providing in-depth explanations and discussions on a wide range of topics. As a language model, Assistant is able to generate human-like text based on the input it receives, allowing it to engage in natural-sounding conversations and provide responses that are coherent and relevant to the topic at hand.

Assistant is constantly learning and improving, and its capabilities are constantly evolving. It is able to process and understand large amounts of text, and can use this knowledge to provide accurate and informative responses to a wide range of questions. Additionally, Assistant is able to generate its own text based on the input it receives, allowing it to engage in discussions and provide explanations and descriptions on a wide range of topics.

Overall, Assistant is a powerful system that can help with a wide range of tasks and provide valuable insights and information on a wide range of topics. Whether you need help with a specific question or just want to have a conversation about a particular topic, Assistant is here to assist."""
```

chat_history

The chat history, managed by memory.

Example:

```text
Human: 我是赖赖
AI: 你好,赖赖!有什么我可以帮助你的吗?
Human: 我是谁?
AI: 你是赖赖,这是你在之前的提问中提到的。
```
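ConversationBufferMemory with return_messages=True keeps every exchange and hands the whole list back under memory_key ("chat_history" in the application code), which is why both earlier turns appear verbatim in every prompt. A hypothetical minimal buffer showing that behaviour (BufferMemorySketch is not LangChain's class, and its save_context takes plain strings rather than dicts):

```python
from typing import Dict, List, Tuple


class BufferMemorySketch:
    """Hypothetical stand-in for a message-buffer memory."""

    def __init__(self, memory_key: str = "chat_history") -> None:
        self.memory_key = memory_key
        self.messages: List[Tuple[str, str]] = []

    def load_memory_variables(self) -> Dict[str, List[Tuple[str, str]]]:
        # Return a copy so callers cannot mutate the stored history
        return {self.memory_key: list(self.messages)}

    def save_context(self, user_input: str, output: str) -> None:
        # Each run appends one human turn and one AI turn
        self.messages.append(("human", user_input))
        self.messages.append(("ai", output))


memory = BufferMemorySketch()
memory.save_context("我是赖赖", "你好,赖赖!有什么我可以帮助你的吗?")
memory.save_context("我是谁?", "你是赖赖,这是你在之前的提问中提到的。")
print(len(memory.load_memory_variables()["chat_history"]))  # 4
```

Because nothing is ever trimmed, a long conversation grows the prompt without bound; that is the trade-off of a plain buffer memory.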

HumanMessage

````python
SUFFIX = """TOOLS
------
Assistant can ask the user to use tools to look up information that may be helpful in answering the users original question. The tools the human can use are:

{{tools}}

RESPONSE FORMAT INSTRUCTIONS
----------------------------

When responding to me please, please output a response in one of two formats:

**Option 1:**
Use this if you want the human to use a tool.
Markdown code snippet formatted in the following schema:

```json
{{{{
"action": string \\ The action to take. Must be one of {tool_names}
"action_input": string \\ The input to the action
}}}}
```

**Option #2:**
Use this if you want to respond directly to the human. Markdown code snippet formatted in the following schema:

```json
{{{{
"action": "Final Answer",
"action_input": string \\ You should put what you want to return to use here
}}}}
```

USER'S INPUT
--------------------
Here is the user's input (remember to respond with a markdown code snippet of a json blob with a single action, and NOTHING else):

{{{{input}}}}"""
````

agent_scratchpad

This should look familiar from earlier. It has two parts:

AIMessage

The action.log inside the AIMessage is the model's raw reply, i.e. something of this shape:

```json
{
    "action": string,
    "action_input": string
}
```

human_message

This is the tool result, formatted with TEMPLATE_TOOL_RESPONSE:

```python
TEMPLATE_TOOL_RESPONSE = """TOOL RESPONSE:
---------------------
{observation}

USER'S INPUT
--------------------

Okay, so what is the response to my original question? If using information from tools, you must say it explicitly - I have forgotten all TOOL RESPONSES! Remember to respond with a markdown code snippet of a json blob with a single action, and NOTHING else."""
```

