Scala and Spark version compatibility: https://blog.csdn.net/qq_34319644/article/details/115555522

This setup uses JDK 1.8 + Spark 3.0 + Scala 2.12.

First, configure Scala 2.12:

Official download page: https://www.scala-lang.org/download/2.12.17.html

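Extract the archive:

    tar -zxf scala-2.12.17.tgz

Open ~/.bashrc (for example with vim ~/.bashrc) and add the two lines below. The SCALA_HOME value assumes the extracted directory has been moved or renamed to /home/xingmo/sdk/scala:

    export SCALA_HOME=/home/xingmo/sdk/scala
    export PATH=$PATH:$SCALA_HOME/bin

Reload the configuration:

    source ~/.bashrc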

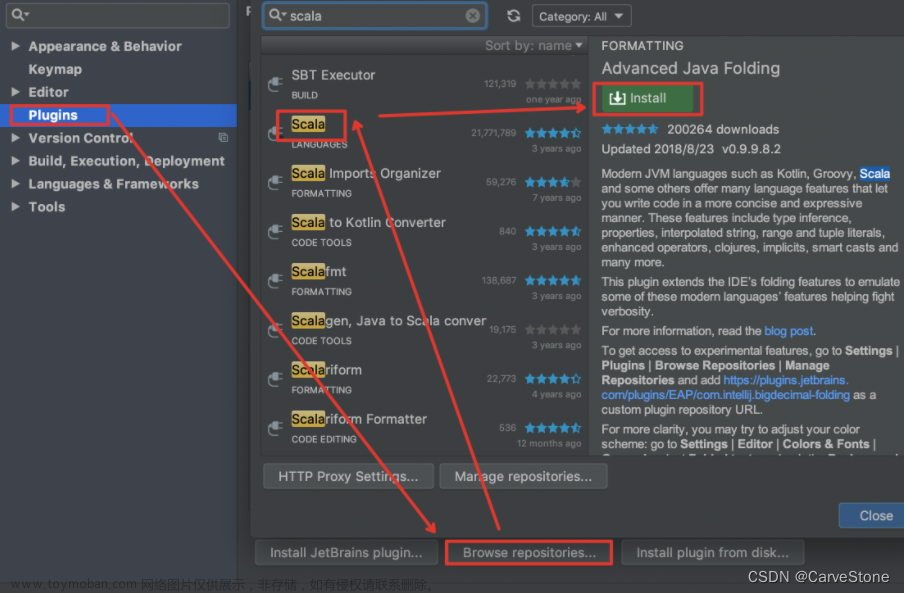
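As an optional sanity check of the environment configured above, the following minimal sketch prints the Scala library version and the SCALA_HOME variable visible to the running process. The object name `ScalaVersionCheck` is hypothetical and not part of the original setup; when the program is launched from IDEA, the environment may differ from the one in your shell.

```scala
object ScalaVersionCheck {
  // Version of the Scala library on the classpath, e.g. "2.12.17"
  def version: String = scala.util.Properties.versionNumberString

  def main(args: Array[String]): Unit = {
    println(s"Scala library version: $version")
    // SCALA_HOME is read from the process environment; it may be absent inside an IDE
    println(s"SCALA_HOME: ${sys.env.getOrElse("SCALA_HOME", "<not set>")}")
  }
}
```

For this setup the printed version should start with 2.12.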

Install the Scala plugin in IDEA: File -> Settings -> Plugins

Add the Scala SDK as a library: File -> Project Structure

Scala test:

    package spark.core

    object Test {
      def main(args: Array[String]): Unit = {
        println("Hello Spark")
      }
    }

Remember to switch the project's JDK version to 1.8:

For reference: https://blog.csdn.net/weixin_45490198/article/details/125119932
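To confirm which JDK the project actually runs on after switching, a small check like the one below can help. This is only a sketch: the object name `JdkCheck` is hypothetical, and it relies on the standard `java.specification.version` system property, which reports "1.8" on JDK 8 and values such as "11" or "17" on newer releases.

```scala
object JdkCheck {
  // "1.8" on JDK 8; newer JDKs report "9", "11", "17", ...
  def specVersion: String = System.getProperty("java.specification.version")

  // True only when the running JVM is a JDK 1.8 runtime
  def isJdk8: Boolean = specVersion == "1.8"

  def main(args: Array[String]): Unit =
    if (isJdk8) println(s"Running on JDK $specVersion")
    else println(s"Warning: running on JDK $specVersion, but this setup expects JDK 1.8")
}
```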


Maven dependency:

    <dependencies>
      <dependency>
        <groupId>org.apache.spark</groupId>
        <artifactId>spark-core_2.12</artifactId>
        <version>3.0.0</version>
      </dependency>
    </dependencies>
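The `_2.12` suffix in the artifactId is the Scala binary version (major.minor) and needs to match the Scala SDK configured in the project. The sketch below, using a hypothetical helper object `ArtifactSuffix`, derives the expected `spark-core` artifactId from a full Scala version string so the two can be compared.

```scala
object ArtifactSuffix {
  // "2.12.17" -> "2.12": keep only the major and minor components
  def binaryVersion(fullVersion: String): String =
    fullVersion.split('.').take(2).mkString(".")

  // Build the artifactId that corresponds to the given Scala version
  def sparkCoreArtifact(fullVersion: String): String =
    s"spark-core_${binaryVersion(fullVersion)}"

  def main(args: Array[String]): Unit = {
    // Uses the Scala library version found on the current classpath
    val running = scala.util.Properties.versionNumberString
    println(s"Expected artifactId: ${sparkCoreArtifact(running)}")
  }
}
```

If the printed artifactId differs from the one in pom.xml, the Scala SDK and the Spark dependency are probably built for different Scala versions.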

Configuration is complete. Test code:

    package spark.core.wc

    import org.apache.spark.{SparkConf, SparkContext}

    object Spark01_WordCount {
      def main(args: Array[String]): Unit = {
        // TODO: establish the connection to the Spark framework
        val sparkConf = new SparkConf().setMaster("local").setAppName("WordCount")
        val sc = new SparkContext(sparkConf)

        // TODO: run the business logic

        // TODO: close the connection
        sc.stop()
      }
    }
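The business-logic step in the test code is left as a TODO. One possible way to fill it in is sketched below, assuming the usual word-count pattern (split lines into words, pair each word with 1, sum per word). The object name `Spark02_WordCountSketch`, the helper `countWords`, and the in-memory input lines are illustrative assumptions, not part of the original article; a real job would more likely read input with `sc.textFile`.

```scala
import org.apache.spark.rdd.RDD
import org.apache.spark.{SparkConf, SparkContext}

object Spark02_WordCountSketch {

  // Split each line on whitespace, pair every word with 1, then sum the counts per word
  def countWords(lines: RDD[String]): RDD[(String, Int)] =
    lines
      .flatMap(_.split("\\s+"))
      .filter(_.nonEmpty)
      .map(word => (word, 1))
      .reduceByKey(_ + _)

  def main(args: Array[String]): Unit = {
    // Establish the connection to Spark in local mode
    val sparkConf = new SparkConf().setMaster("local").setAppName("WordCount")
    val sc = new SparkContext(sparkConf)
    try {
      // Hypothetical in-memory input used only for this sketch
      val lines = sc.parallelize(Seq("Hello Spark", "Hello Scala"))
      countWords(lines).collect().foreach { case (word, count) =>
        println(s"$word: $count")
      }
    } finally {
      // Close the connection even if the job fails
      sc.stop()
    }
  }
}
```

The order of the printed pairs is not guaranteed, since `reduceByKey` does not sort its output.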
