Paper: https://arxiv.org/abs/1910.03151

Code: https://github.com/BangguWu/ECANet

Paper title: ECA-Net: Efficient Channel Attention for Deep Convolutional Neural Networks

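Contents

Introduction

I. ECANet Structure

II. ECANet Code

III. Adding ECANet to a CNN as a Module

1. The CNN to modify

2. The statement that adds eca_block

3. Network code after adding eca_block (for example, after the second convolutional layer)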

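Introduction

ECANet improves on the SENet module. It proposes a local cross-channel interaction strategy without dimensionality reduction (the ECA module) and a method for adaptively selecting the 1D convolution kernel size, which together improve performance.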

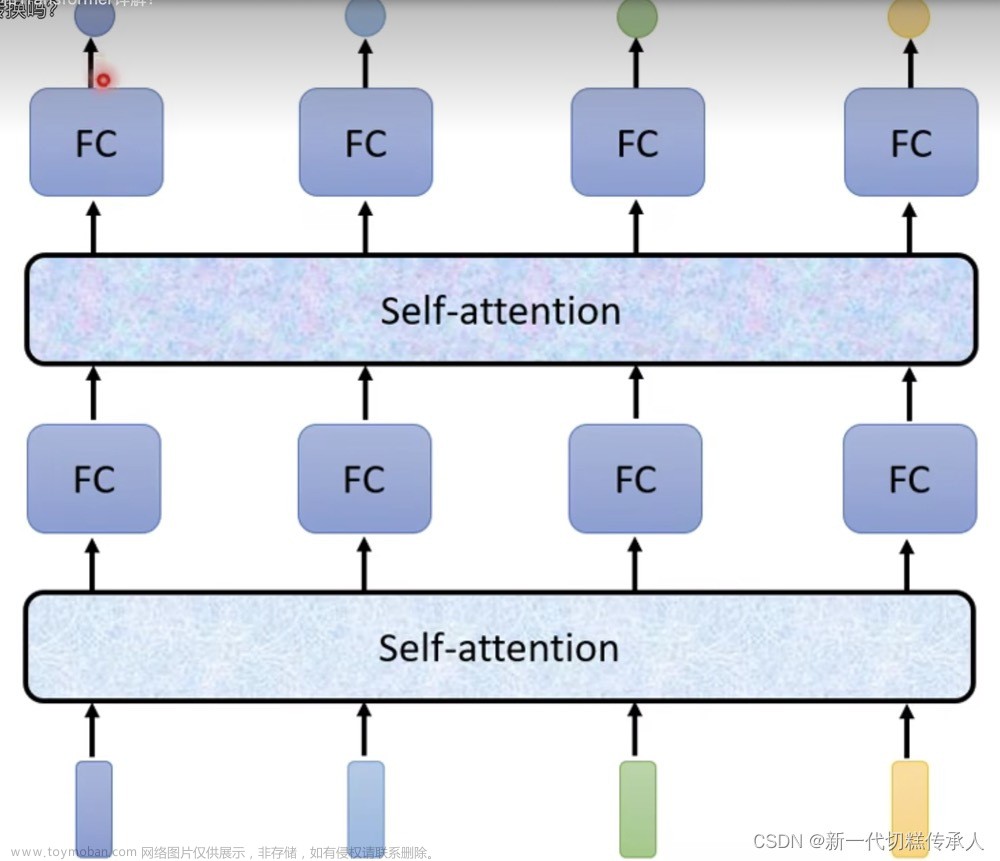

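Given the input features, an SE block first applies global average pooling to each channel independently. Two fully connected (FC) layers with a non-linearity follow, and a sigmoid function then produces the channel weights. The two FC layers are designed to capture non-linear cross-channel interaction, and they include dimensionality reduction to control model complexity. Although this strategy is widely used in later channel attention modules, the authors' empirical study shows that dimensionality reduction has a side effect on channel attention prediction, and that capturing dependencies across all channels is inefficient and unnecessary.

The paper therefore proposes an Efficient Channel Attention (ECA) module for deep CNNs, which avoids dimensionality reduction and captures cross-channel interaction efficiently.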
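To make the cost of the two FC layers concrete, the sketch below counts the learnable weights in an SE block and in an ECA module. It is only an illustration under stated assumptions: the reduction ratio of 16 is a commonly used SE setting that this post does not state, biases are ignored, the ECA kernel size of 5 is an example value, and both function names are hypothetical.

```python
def se_weight_count(channels, reduction=16):
    """Weights in the two FC layers of an SE block (biases ignored).

    reduction=16 is an assumed, commonly used setting.
    """
    hidden = channels // reduction
    # First FC: channels -> hidden, second FC: hidden -> channels
    return channels * hidden + hidden * channels


def eca_weight_count(kernel_size):
    """Weights in the single bias-free 1D convolution of an ECA module."""
    return kernel_size


for channels in (64, 256, 512):
    # kernel_size=5 is an example value, not derived here
    print(channels, se_weight_count(channels), eca_weight_count(5))
```

The SE count grows quadratically with the number of channels, while the ECA count depends only on the kernel size.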

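I. ECANet Structure

Compared with the SENet module, ECANet removes the fully connected layers after global average pooling and replaces them with a 1D convolution of kernel size k.

After channel-wise global average pooling without dimensionality reduction, ECANet uses a 1D convolution to implement cross-channel interaction, and the kernel size k is chosen adaptively as a function of the channel dimension.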
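The steps just described (pooling, 1D convolution across channels, sigmoid, rescaling) can be traced by hand on a tiny example. The following is a minimal pure-Python sketch, not the Keras implementation: the function name `eca_forward` is hypothetical, the feature map is a nested list of shape [length][channels], and the kernel weights are passed in by hand, whereas a real network would learn them.

```python
import math


def eca_forward(feature_map, kernel):
    """Apply ECA-style channel attention to a [length][channels] nested list.

    `kernel` holds the 1D convolution weights (odd length); they are
    supplied by hand here purely for illustration.
    """
    length = len(feature_map)
    channels = len(feature_map[0])
    # 1) Global average pooling: one descriptor per channel.
    pooled = [sum(row[c] for row in feature_map) / length for c in range(channels)]
    # 2) 1D convolution across neighbouring channels with zero ('same') padding.
    half = len(kernel) // 2
    mixed = []
    for c in range(channels):
        acc = 0.0
        for j, w in enumerate(kernel):
            idx = c + j - half
            if 0 <= idx < channels:
                acc += w * pooled[idx]
        mixed.append(acc)
    # 3) Sigmoid maps each value to a channel weight in (0, 1).
    weights = [1.0 / (1.0 + math.exp(-v)) for v in mixed]
    # 4) Rescale every position of each channel by that channel's weight.
    scaled = [[row[c] * weights[c] for c in range(channels)] for row in feature_map]
    return scaled, weights


feature_map = [[1.0, 2.0, 3.0, 4.0],
               [3.0, 2.0, 1.0, 0.0]]
scaled, weights = eca_forward(feature_map, kernel=[1.0, 1.0, 1.0])
print(weights)
```

Each channel weight depends only on the pooled values of that channel and its k - 1 nearest neighbours, which is the local cross-channel interaction the module relies on.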

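Given the channel dimension C, the kernel size k can be determined adaptively as:

$$k = \psi(C) = \left| \frac{\log_2 C}{\gamma} + \frac{b}{\gamma} \right|_{odd}$$

where $|t|_{odd}$ denotes the odd number nearest to $t$. The paper sets $\gamma = 2$ and $b = 1$, which are also the defaults in the code below.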
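As a quick worked example of the kernel-size rule, the sketch below evaluates it for a few channel counts. It follows the rounding used in this post's code (truncate (log2(C) + b) / gamma to an integer, then add 1 if the result is even) with gamma = 2 and b = 1; the function name `eca_kernel_size` is made up for this illustration.

```python
import math


def eca_kernel_size(channels, gamma=2, b=1):
    """Adaptive ECA kernel size: truncate, then move up to the next odd number."""
    t = int(abs((math.log2(channels) + b) / gamma))
    return t if t % 2 else t + 1


for channels in (16, 32, 64, 128, 256, 512):
    print(channels, eca_kernel_size(channels))
# 16 -> 3, 32 -> 3, 64 -> 3, 128 -> 5, 256 -> 5, 512 -> 5
```

The kernel grows slowly with the channel count, so wider layers let each channel interact with a few more neighbours.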

II. ECANet Code

I use it to process 1D signals, so the pooling in the network is 1D.

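import math

import tensorflow as tf
from tensorflow import keras
from tensorflow.keras import Model, layers


def eca_block(inputs, b=1, gama=2):
    # Number of channels in the input feature map, shape [None, L, C]
    in_channel = inputs.shape[-1]
    # Adaptive kernel size from the formula (log2 is exact for powers of two)
    kernel_size = int(abs((math.log2(in_channel) + b) / gama))
    # An odd kernel size is used as it is; an even one is bumped to the next odd number
    if kernel_size % 2 == 0:
        kernel_size = kernel_size + 1
    # [None, L, C] ==> [None, C]: global average pooling over the length axis
    x = layers.GlobalAveragePooling1D()(inputs)
    # [None, C] ==> [None, C, 1]
    x = layers.Reshape(target_shape=(in_channel, 1))(x)
    # [None, C, 1] ==> [None, C, 1]: 1D convolution across neighbouring channels
    x = layers.Conv1D(filters=1, kernel_size=kernel_size, padding='same', use_bias=False)(x)
    # Sigmoid activation gives one weight in (0, 1) per channel
    x = layers.Activation('sigmoid')(x)
    # [None, C, 1] ==> [None, 1, C] so the weights broadcast over the length axis
    # (a 2D feature map [None, H, W, C] would need (1, 1, C) instead)
    x = layers.Reshape((1, in_channel))(x)
    # Multiply the input by the channel weights
    outputs = layers.multiply([inputs, x])
    return outputs

III. Adding ECANet to a CNN as a Module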

1. The CNN to modify

import numpy as np
import matplotlib.pyplot as plt
from tensorflow.keras.layers import Conv1D, Dense, Dropout, BatchNormalization, MaxPooling1D, Activation, Flatten, Input
from tensorflow.keras.models import Model
from tensorflow.keras.callbacks import TensorBoard
from tensorflow.keras.regularizers import l2

import preprocess  # the author's own preprocessing module (not shown in this post)

# Hyperparameters, defined before the preprocessing call that uses them
batch_size = 128
epochs = 20
num_classes = 10
length = 2048
BatchNorm = True  # whether to use batch normalization
number = 1000  # number of samples per class
normal = True  # whether to standardize the data
rate = [0.7, 0.2, 0.1]  # train / validation / test split ratios

# Data path (placeholder, set it to your own data directory)
path = xxx

# Preprocess the data with preprocess
x_train, y_train, x_valid, y_valid, x_test, y_test = preprocess.prepro(d_path=path, length=length,
                                                                       number=number,
                                                                       normal=normal,
                                                                       rate=rate,
                                                                       enc=True, enc_step=28)
# Add a trailing channel axis: [N, length] ==> [N, length, 1]
x_train, x_valid, x_test = x_train[:, :, np.newaxis], x_valid[:, :, np.newaxis], x_test[:, :, np.newaxis]

input_shape = x_train.shape[1:]

# Define the input layer and fix the input dimensions
inputs = Input(shape=input_shape)

# Convolutional layer 1
x = Conv1D(filters=16, kernel_size=64, strides=16, padding='same', kernel_regularizer=l2(1e-4))(inputs)
x = BatchNormalization()(x)
x = Activation('relu')(x)
x = MaxPooling1D(pool_size=2)(x)

# Convolutional layer 2
x = Conv1D(filters=32, kernel_size=3, strides=1, padding='same', kernel_regularizer=l2(1e-4))(x)
x = BatchNormalization()(x)
x = Activation('relu')(x)
x = MaxPooling1D(pool_size=2)(x)

# Convolutional layer 3
x = Conv1D(filters=64, kernel_size=3, strides=1, padding='same', kernel_regularizer=l2(1e-4))(x)
x = BatchNormalization()(x)
x = Activation('relu')(x)
x = MaxPooling1D(pool_size=2)(x)

# Convolutional layer 4
x = Conv1D(filters=64, kernel_size=3, strides=1, padding='same', kernel_regularizer=l2(1e-4))(x)
x = BatchNormalization()(x)
x = Activation('relu')(x)
x = MaxPooling1D(pool_size=2)(x)

# Convolutional layer 5
x = Conv1D(filters=64, kernel_size=3, strides=1, padding='same', kernel_regularizer=l2(1e-4))(x)
x = BatchNormalization()(x)
x = Activation('relu')(x)
x = MaxPooling1D(pool_size=2)(x)

# Flatten before moving from convolutional to fully connected layers
x = Flatten()(x)

# Fully connected layer
x = Dense(units=100, activation='relu', kernel_regularizer=l2(1e-4))(x)

# Output layer
output = Dense(units=num_classes, activation='softmax', kernel_regularizer=l2(1e-4))(x)

model = Model(inputs=inputs, outputs=output)
model.compile(optimizer='Adam', loss='categorical_crossentropy',
              metrics=['accuracy'])
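To see what each layer of this network does to the tensor shape, the sketch below traces the (length, channels) pair through the five convolutional blocks using only arithmetic. It assumes that each preprocessed sample has 2048 points and one channel (the internals of `preprocess.prepro` are not shown in this post), that `padding='same'` yields ceil(length / stride), and that `MaxPooling1D` uses its default stride equal to `pool_size`; the helper names are hypothetical.

```python
import math


def conv1d_same_length(length, stride):
    """Output length of a Conv1D layer with padding='same'."""
    return math.ceil(length / stride)


def maxpool1d_length(length, pool_size=2):
    """Output length of MaxPooling1D with stride = pool_size and 'valid' padding."""
    return (length - pool_size) // pool_size + 1


# (filters, stride) of the five convolutional layers in the network above
conv_layers = [(16, 16), (32, 1), (64, 1), (64, 1), (64, 1)]

length, channels = 2048, 1  # assumed input shape per sample
shapes = []
for filters, stride in conv_layers:
    length = maxpool1d_length(conv1d_same_length(length, stride))
    channels = filters
    shapes.append((length, channels))
    print(f"after conv block {len(shapes)}: length={length}, channels={channels}")

flattened = length * channels
print("flattened size:", flattened)
```

Under these assumptions the final feature map is 4 x 64, so the first Dense layer receives 256 inputs.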

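2. The statement that adds eca_block

# eca_block: channel attention followed by a residual add,
# so each channel ends up scaled by (1 + its attention weight)
eca = eca_block(x)
x = layers.add([x, eca])

3. Network code after adding eca_block (for example, after the second convolutional layer)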

# Define the input layer and fix the input dimensions
inputs = Input(shape=input_shape)

# Convolutional layer 1
x = Conv1D(filters=16, kernel_size=64, strides=16, padding='same', kernel_regularizer=l2(1e-4))(inputs)
x = BatchNormalization()(x)
x = Activation('relu')(x)
x = MaxPooling1D(pool_size=2)(x)

# Convolutional layer 2
x = Conv1D(filters=32, kernel_size=3, strides=1, padding='same', kernel_regularizer=l2(1e-4))(x)
x = BatchNormalization()(x)
x = Activation('relu')(x)
x = MaxPooling1D(pool_size=2)(x)

# eca_block (layers comes from "from tensorflow.keras import layers" in section II)
eca = eca_block(x)
x = layers.add([x, eca])

# Convolutional layer 3
x = Conv1D(filters=64, kernel_size=3, strides=1, padding='same', kernel_regularizer=l2(1e-4))(x)
x = BatchNormalization()(x)
x = Activation('relu')(x)
x = MaxPooling1D(pool_size=2)(x)

# Convolutional layer 4
x = Conv1D(filters=64, kernel_size=3, strides=1, padding='same', kernel_regularizer=l2(1e-4))(x)
x = BatchNormalization()(x)
x = Activation('relu')(x)
x = MaxPooling1D(pool_size=2)(x)

# Convolutional layer 5
x = Conv1D(filters=64, kernel_size=3, strides=1, padding='same', kernel_regularizer=l2(1e-4))(x)
x = BatchNormalization()(x)
x = Activation('relu')(x)
x = MaxPooling1D(pool_size=2)(x)

# Flatten before moving from convolutional to fully connected layers
x = Flatten()(x)

# Fully connected layer
x = Dense(units=100, activation='relu', kernel_regularizer=l2(1e-4))(x)

# Output layer
output = Dense(units=num_classes, activation='softmax', kernel_regularizer=l2(1e-4))(x)

model = Model(inputs=inputs, outputs=output)
model.compile(optimizer='Adam', loss='categorical_crossentropy',
              metrics=['accuracy'])

The network is built with the Keras Model API and is used to process 1D signals.
