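Note: the Kubernetes version installed and tested here is fairly old.

Core k8s features

Self-healing

Service discovery and load balancing

Automated rollouts and rollbacks

Elastic scaling

Server environment preparation

See "k8s installation and deployment 1 - environment preparation"

Prepare 3 servers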

192.168.56.101 k8s-node1

192.168.56.102 k8s-node2

192.168.56.103 k8s-node3

# k8s-node1 acts as the master node

Linux configuration changes

# Set SELinux to permissive mode (effectively disabling it)

sudo setenforce 0

sudo sed -i 's/^SELINUX=enforcing$/SELINUX=permissive/' /etc/selinux/config

# Turn off swap; afterwards, free -m should show 0 for swap

swapoff -a

sed -ri 's/.*swap.*/#&/' /etc/fstab

# Allow iptables to see bridged traffic

cat <<EOF | sudo tee /etc/modules-load.d/k8s.conf

br_netfilter

EOF

cat <<EOF | sudo tee /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

EOF

# Apply the settings above

sudo sysctl --system
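Before moving on, it can help to confirm that the settings took effect. The following is a minimal sketch, not part of the original steps: the helper names `swap_is_off` and `sysctl_enabled` are made up for illustration, and the sketch assumes the standard Linux `/proc/swaps` layout (one header line followed by one line per active swap device).

```shell
#!/bin/sh
# Return 0 when the swaps table (default: /proc/swaps) lists no active swap
# device, i.e. it contains nothing besides the one-line header.
swap_is_off() {
    swaps_file="${1:-/proc/swaps}"
    # Count the non-empty lines that follow the header.
    active=$(awk 'NR > 1 && NF > 0 { n++ } END { print n + 0 }' "$swaps_file")
    [ "$active" -eq 0 ]
}

# Return 0 when the sysctl exposed at the path $1 is readable and set to 1.
sysctl_enabled() {
    [ -r "$1" ] && [ "$(cat "$1")" = "1" ]
}

# Example use on a node (commented out so the sketch has no side effects):
# swap_is_off || echo "swap is still on"
# sysctl_enabled /proc/sys/net/bridge/bridge-nf-call-iptables || echo "bridge-nf-call-iptables is not 1"
```

Both helpers only read files, so they are safe to run repeatedly on any of the three servers.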

Configure the Docker package repository

sudo yum install -y yum-utils

# Use the Aliyun mirror

sudo yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

Configure the Kubernetes package repository

cat <<EOF | sudo tee /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

exclude=kubelet kubeadm kubectl

EOF

安装etcd

在master节点安装配置etcd

yum install -y etcd

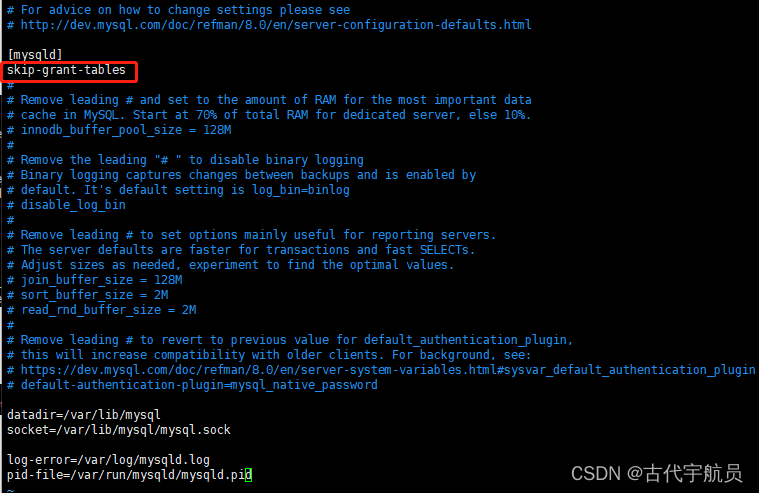

#修改配置

vi /etc/etcd/etcd.conf

ETCD_LISTEN_CLIENT_URLS="http://0.0.0.0:2379"

ETCD_ADVERTISE_CLIENT_URLS="http://192.168.56.101:2379"

systemctl start etcd

systemctl enable etcd

Install the k8s master

Install on the master node

yum install -y kubernetes-master.x86_64

# apiserver configuration

vi /etc/kubernetes/apiserver

KUBE_API_ADDRESS="--insecure-bind-address=0.0.0.0"

KUBE_API_PORT="--port=8080"

KUBELET_PORT="--kubelet-port=10250"

KUBE_ETCD_SERVERS="--etcd-servers=http://192.168.56.101:2379"

# Address and port of the master node

vi /etc/kubernetes/config

KUBE_MASTER="--master=http://192.168.56.101:8080"

# Start the services

systemctl start kube-apiserver.service

systemctl start kube-controller-manager.service

systemctl start kube-scheduler.service

systemctl enable kube-apiserver.service

systemctl enable kube-controller-manager.service

systemctl enable kube-scheduler.service

kubectl get componentstatus

Install the node components

Install the node components on all 3 servers

yum install -y kubernetes-node.x86_64

# Edit the configuration

vi /etc/kubernetes/config

KUBE_MASTER="--master=http://192.168.56.101:8080"

# kubelet configuration

vi /etc/kubernetes/kubelet

# IP of the current host; it is different on each server

KUBELET_ADDRESS="--address=192.168.56.101"

KUBELET_PORT="--port=10250"

# Hostname of the current host

KUBELET_HOSTNAME="--hostname-override=k8s-node1"

KUBELET_API_SERVER="--api-servers=http://192.168.56.101:8080"

systemctl start kubelet.service

# Starting kubelet also starts docker

systemctl status docker

systemctl enable kubelet.service

systemctl start kube-proxy.service

systemctl enable kube-proxy.service

kubectl version

kubectl get nodes

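Install the flannel network plugin

Containers need to communicate with each other, including containers on different hosts

Install on all nodes

yum install -y flannel

# Edit the configuration

vi /etc/sysconfig/flanneld

# etcd endpoint

FLANNEL_ETCD_ENDPOINTS="http://192.168.56.101:2379"

# Key prefix of the flannel settings in etcd

FLANNEL_ETCD_PREFIX="/atomic.io/network"

# For virtual machines started with Vagrant, eth0 is reserved for Vagrant ssh, so flannel must be configured to use eth1

FLANNEL_OPTIONS="--iface=eth1"

On the master node, store flannel's settings in etcd to define the container IP address range for the cluster

etcdctl set /atomic.io/network/config '{ "Network":"172.16.0.0/16" }'

Start flanneld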

systemctl start flanneld.service

systemctl enable flanneld.service

systemctl restart flanneld.service

ifconfig

# flannel0 now shows an IP address; docker's IP has not changed yet, so docker must be restarted

systemctl restart docker

ifconfig

# docker's IP is now in 172.16.x.x as well
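Reading the `ifconfig` output by eye works, but the check can also be scripted. The sketch below is an illustration only: `ip_to_int` and `ip_in_cidr` are hypothetical helper names, and the code assumes plain dotted-quad IPv4 addresses without leading zeros. It tests whether an address falls inside the flannel network configured in etcd (172.16.0.0/16).

```shell
#!/bin/sh
# Convert a dotted-quad IPv4 address to a 32-bit integer.
# Callers use it via $(...), so the IFS change stays inside the subshell.
ip_to_int() {
    IFS=.
    set -- $1
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Return 0 when address $1 lies inside the CIDR block $2, e.g. 172.16.0.0/16.
ip_in_cidr() {
    addr=$(ip_to_int "$1")
    net=$(ip_to_int "${2%/*}")
    bits=${2#*/}
    mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
    [ $(( addr & mask )) -eq $(( net & mask )) ]
}

# Example use on a node (commented out; it needs the docker0 interface):
# docker_ip=$(ip -4 -o addr show docker0 | awk '{print $4}' | cut -d/ -f1)
# ip_in_cidr "$docker_ip" 172.16.0.0/16 && echo "docker0 is on the flannel network"
```

If the check fails after restarting docker, flanneld has most likely not written its subnet yet, so confirm that flanneld is running before restarting docker again.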

Test connectivity between containers on different hosts

# Run on all nodes

docker pull busybox

docker run -it busybox

ifconfig

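# Pings fail at this point

ping <IP of a container on another host>

# The network is unreachable because of the docker version

docker version

# docker sets the iptables FORWARD policy to DROP by default; it must be changed to ACCEPT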

iptables -L -n

Chain FORWARD (policy DROP)

# After this change, the containers can ping each other

iptables -P FORWARD ACCEPT

# Find the path to iptables

which iptables

/usr/sbin/iptables

# Add the iptables command to docker's startup commands

systemctl status docker

vi /usr/lib/systemd/system/docker.service

ExecStartPost=/usr/sbin/iptables -P FORWARD ACCEPT

ExecStart=/usr/bin/dockerd-current \

systemctl daemon-reload

Create a Pod

vi nginx_pod.yaml

apiVersion: v1
kind: Pod
metadata:
  name: nginx
  labels:
    app: web
spec:
  containers:
    - name: nginx
      image: nginx:1.13
      ports:
        - containerPort: 80

Apply it

kubectl apply -f nginx_pod.yaml

# Problem encountered:

Error from server (ServerTimeout): error when creating "nginx_pod.yaml": No API token found for service account "default", retry after the token is automatically created and added to the service account

The "default" service account has no API token, so the request is rejected

# Edit the apiserver configuration

vi /etc/kubernetes/apiserver

KUBE_ADMISSION_CONTROL="--admission-control=NamespaceLifecycle,NamespaceExists,LimitRanger,SecurityContextDeny,ServiceAccount,ResourceQuota"

# Remove ServiceAccount from the admission control list, changing it to

KUBE_ADMISSION_CONTROL="--admission-control=NamespaceLifecycle,NamespaceExists,LimitRanger,SecurityContextDeny,ResourceQuota"

systemctl restart kube-apiserver.service

kubectl apply -f nginx_pod.yaml

kubectl get pods -a

kubectl describe pod nginx

# Another error appears

failed to "StartContainer" for "POD" with ErrImagePull: "image pull failed for registry.access.redhat.com/rhel7/pod-infrastructure:latest

docker pull registry.access.redhat.com/rhel7/pod-infrastructure:latest

# The certificate file is a symlink that cannot be opened: open /etc/docker/certs.d/registry.access.redhat.com/redhat-ca.crt: no such file or directory

ll /etc/docker/certs.d/registry.access.redhat.com/redhat-ca.crt

cat /etc/kubernetes/kubelet

# The configured image cannot be downloaded, so switch to a different one

KUBELET_POD_INFRA_CONTAINER="--pod-infra-container-image=registry.access.redhat.com/rhel7/pod-infrastructure:latest"

docker search pod-infrastructure

vi /etc/kubernetes/kubelet

KUBELET_POD_INFRA_CONTAINER="--pod-infra-container-image=docker.io/tianyebj/pod-infrastructure:latest"

systemctl restart kubelet.service

Configure Docker registry mirrors (Aliyun acceleration)

sudo mkdir -p /etc/docker

sudo tee /etc/docker/daemon.json <<-'EOF'

{

"registry-mirrors": ["https://registry.docker-cn.com", "https://82m9ar63.mirror.aliyuncs.com", "http://hub-mirror.c.163.com", "https://rn2snvg1.mirror.aliyuncs.com", "https://docker.mirrors.ustc.edu.cn"]

}

EOF

systemctl daemon-reload

systemctl restart docker

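View the Pod

kubectl get pods -o wide

curl 172.16.86.2

docker ps

# Show the IP address of the docker.io/tianyebj/pod-infrastructure:latest container

docker inspect 363968f850d2 | grep -i ipaddress

# The nginx container has no IP address of its own

docker inspect 707344d5fcc2 | grep -i ipaddress

# Check the nginx container's network mode: it is the "container" type and shares the IP address of the pod-infrastructure container

docker inspect 707344d5fcc2 | grep -i network

# --force deletes the pod immediately; --grace-period=0 skips the wait for graceful termination

kubectl delete pod test --force --grace-period=0

View the built-in command help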

kubectl explain pod

kubectl explain pod.spec.containers

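Replication Controller

Rolling upgrades, rollbacks, and so on

An rc keeps the specified number of pods alive at all times, and it is associated with pods through a label selector

When it removes surplus pods, it deletes the one that has been alive for the shortest time

The rc manages the number of pods through the labels defined in its selector: if a pod's labels match the selector, the rc treats the pod as its own and manages it, adding pods when there are too few and deleting pods when there are too many

Create an rc file: vim nginx-rc.yaml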

apiVersion: v1
kind: ReplicationController
metadata:
  name: myweb
spec:
  replicas: 2
  selector:
    app: myweb
  template:
    metadata:
      labels:
        app: myweb
    spec:
      containers:
        - name: myweb
          image: nginx:1.13
          ports:
            - containerPort: 80

Create the rc resource

kubectl create -f nginx-rc.yaml

kubectl get rc

kubectl get pods

kubectl get rc -o wide

# Change the label

kubectl edit pod nginx

# Rolling upgrade of the rc; --update-period sets the interval between pod updates

kubectl rolling-update myweb -f nginx-rc2.yaml --update-period=30s

# Roll back

kubectl rolling-update myweb2 -f nginx-rc.yaml --update-period=1s

# Interrupt with Ctrl + C halfway through the upgrade

kubectl rolling-update myweb -f nginx-rc2.yaml --update-period=50s

# Roll back to myweb; if no interval is given, one pod is rolled back per minute

kubectl rolling-update myweb myweb2 --rollback

Create a Service

vim nginx-svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: myweb
spec:
  type: NodePort
  ports:
    - port: 80
      nodePort: 30000
      targetPort: 80
  selector:
    app: myweb

Create the resource

kubectl apply -f nginx-svc.yaml

kubectl describe service myweb

kubectl get svc myweb

kubectl get pod -o wide

kubectl scale rc myweb --replicas=3

# Copy a file into a pod

kubectl cp index.html myweb-xxx:/usr/share/nginx/html/index.html

# Set the external (NodePort) port range for Services

vi /etc/kubernetes/apiserver

KUBE_API_ARGS="--service-node-port-range=30000-50000"

systemctl restart kube-apiserver.service

Create a Deployment

vi nginx-deploy.yaml

# Older API version
apiVersion: extensions/v1beta1
# Newer clusters use apps/v1, which also requires spec.selector
#apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  replicas: 3
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: nginx:1.13
          ports:
            - containerPort: 80

Create the resource

kubectl apply -f nginx-deploy.yaml

# Create a Service; --port sets the port used to access the Service

kubectl expose deployment nginx-deployment --port=8000 --target-port=80 --type=NodePort

# Change the nginx version

kubectl edit deployment nginx-deployment

# Roll back to the previous version

kubectl rollout undo deployment nginx-deployment

# View the revision history (no change cause is recorded)

kubectl rollout history deployment nginx-deployment

kubectl delete -f nginx-deploy.yaml

kubectl apply -f nginx-deploy.yaml

# Rolling update; --record records the cause of the change

kubectl set image deployment/nginx-deployment nginx=nginx:1.16.1 --record

kubectl get pods -owide

# Watch the status of a rolling upgrade in progress

kubectl rollout status deployment/nginx-deployment

# View the revision history

kubectl rollout history deployment/nginx-deployment

# View the details of one revision

kubectl rollout history deployment/nginx-deployment --revision=2

# Roll back to the previous version

kubectl rollout undo deployment/nginx-deployment

# Roll back to a specific revision

kubectl rollout undo deployment/nginx-deployment --to-revision=2

# Show the image version in use

kubectl get deploy/nginx-deployment -oyaml|grep image

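Pod health checks

livenessProbe: liveness check. It periodically checks whether the service is alive; if the check fails, the container is restarted

readinessProbe: readiness check. It periodically checks whether the service is available; if not, the pod is removed from the Service's Endpoints

Probe methods:

exec: run a command

httpGet: check the status code returned by an HTTP request

tcpSocket: test whether a port accepts connections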
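To make the exec method concrete: the probe passes when the command exits with status 0 and fails otherwise. The sketch below imitates that by hand. It is not how kubelet is implemented; `exec_probe` is a hypothetical helper, and it simplifies the behaviour to "succeed as soon as one probe passes, give up after a fixed number of consecutive failures" (Kubernetes uses a failure threshold of 3 by default).

```shell
#!/bin/sh
# Run a command the way an exec probe does: exit status 0 means healthy.
#   $1 = maximum number of attempts
#   $2 = seconds to wait between attempts
#   remaining arguments = the probe command
# Returns 0 as soon as one attempt succeeds, 1 after $1 consecutive failures.
exec_probe() {
    attempts="$1"
    period="$2"
    shift 2
    tries=0
    while [ "$tries" -lt "$attempts" ]; do
        if "$@" >/dev/null 2>&1; then
            return 0
        fi
        tries=$((tries + 1))
        sleep "$period"
    done
    return 1
}

# Example use (commented out): the same check as the exec liveness probe below.
# exec_probe 3 5 cat /tmp/healthy || echo "kubelet would restart the container now"
```

The httpGet and tcpSocket methods follow the same pass/fail pattern, with an HTTP status code or a successful TCP connection taking the place of the exit status.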

Using exec with a liveness probe: vi nginx_pod_exec.yaml

apiVersion: v1
kind: Pod
metadata:
  name: pod-exec
spec:
  containers:
    - name: nginx
      image: nginx:1.13
      ports:
        - containerPort: 80
      args:
        - /bin/sh
        - -c
        - touch /tmp/healthy; sleep 30; rm -rf /tmp/healthy; sleep 600
      livenessProbe:
        exec:
          command:
            - cat
            - /tmp/healthy
        initialDelaySeconds: 5
        periodSeconds: 5

Apply it

kubectl apply -f nginx_pod_exec.yaml

kubectl describe pod pod-exec

Using httpGet with a liveness probe: vi nginx_pod_httpGet.yaml

apiVersion: v1
kind: Pod
metadata:
  name: pod-httpget
spec:
  containers:
    - name: nginx
      image: nginx:1.13
      ports:
        - containerPort: 80
      livenessProbe:
        httpGet:
          path: /index.html
          port: 80
        initialDelaySeconds: 3
        periodSeconds: 3

Apply it

kubectl apply -f nginx_pod_httpGet.yaml

kubectl describe pod pod-httpget

# Test by moving index.html away

kubectl exec -it pod-httpget /bin/bash

cd /usr/share/nginx/html

mv index.html /tmp/

kubectl describe pod pod-httpget

Using httpGet with a readiness probe: vi nginx-rc-httpGet.yaml

apiVersion: v1
kind: ReplicationController
metadata:
  name: readiness-test
spec:
  replicas: 2
  selector:
    app: readiness
  template:
    metadata:
      labels:
        app: readiness
    spec:
      containers:
        - name: readiness
          image: nginx:1.13
          ports:
            - containerPort: 80
          readinessProbe:
            httpGet:
              path: /intmall.html
              port: 80
            initialDelaySeconds: 3
            periodSeconds: 3

Apply it

kubectl apply -f nginx-rc-httpGet.yaml

# If intmall.html does not exist, the pod is not added to the svc

kubectl expose rc readiness-test --name=readiness-svc --port=80

kubectl describe svc readiness-svc

kubectl exec -it readiness-test-xxx /bin/bash

cd /usr/share/nginx/html

echo 'hello' > intmall.html

exit

kubectl describe svc readiness-svc

HTTP load-testing tool: ab

yum install -y httpd-tools

ab -n 500000 -c 10 http://127.0.0.1/index.html

Distributed file system: GlusterFS

Install on all 3 servers

yum install -y centos-release-gluster

yum install -y glusterfs-server

systemctl start glusterd.service

systemctl enable glusterd.service

mkdir -p /gfs/test1

mkdir -p /gfs/test2

Add nodes to the storage pool

# On the master node

gluster pool list

gluster peer probe k8s-node2

gluster peer probe k8s-node3

gluster pool list

GlusterFS volume management

Create a distributed replicated volume

gluster volume create intmall replica 2 k8s-node1:/gfs/test1 k8s-node1:/gfs/test2 k8s-node2:/gfs/test1 k8s-node2:/gfs/test2 force
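In a distributed replicated volume, Gluster groups the bricks into replica sets in the order they appear on the command line, `replica` bricks at a time. The sketch below only prints that grouping so the layout can be inspected before the volume is created; `replica_sets` is a hypothetical helper and does not call gluster.

```shell
#!/bin/sh
# Print the replica sets formed from a brick list: consecutive bricks are
# grouped $1 at a time, in command-line order.
replica_sets() {
    replica="$1"
    shift
    [ "$replica" -ge 1 ] || return 1
    set_no=1
    while [ "$#" -ge "$replica" ]; do
        group=""
        n=0
        while [ "$n" -lt "$replica" ]; do
            group="${group:+$group }$1"
            shift
            n=$((n + 1))
        done
        echo "set ${set_no}: ${group}"
        set_no=$((set_no + 1))
    done
}

# Example with the brick order used above (commented out):
# replica_sets 2 k8s-node1:/gfs/test1 k8s-node1:/gfs/test2 k8s-node2:/gfs/test1 k8s-node2:/gfs/test2
```

With the brick order used in the create command, both copies in each set sit on the same server, which is likely why gluster asks for `force`. Interleaving the hosts (node1, node2, node1, node2) would place each copy on a different machine.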

Start the volume

gluster volume start intmall

View the volume

gluster volume info intmall

Mount the volume

mount -t glusterfs 192.168.56.101:/intmall /mnt

df -h

Expand the volume

gluster volume add-brick intmall replica 2 k8s-node3:/gfs/test1 k8s-node3:/gfs/test2 force

df -h

cd /mnt

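rz # upload an archive, then extract it

# Check the files on all 3 nodes: they are spread evenly, with 2 copies of each

tree /gfs

Using GlusterFS as backend storage for k8s

View the help

kubectl explain pv.spec

# endpoints points to the Endpoints object used by the Service

kubectl explain pv.spec.glusterfs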

Create the Endpoints: vi glusterfs-ep.yaml

apiVersion: v1
kind: Endpoints
metadata:
  name: glusterfs
subsets:
  - addresses:
      - ip: 192.168.56.101
      - ip: 192.168.56.102
      - ip: 192.168.56.103
    ports:
      - port: 49152
        protocol: TCP

Apply it

# glusterfs uses port 49152 by default

netstat -lntup

kubectl apply -f glusterfs-ep.yaml

kubectl get ep

Create the Service: vi glusterfs-svc.yaml

# The name must be the same as the Endpoints name

apiVersion: v1
kind: Service
metadata:
  name: glusterfs
spec:
  ports:
    - port: 49152
      protocol: TCP
      targetPort: 49152
  sessionAffinity: None
  type: ClusterIP

Apply it

kubectl apply -f glusterfs-svc.yaml

kubectl get svc

kubectl describe svc glusterfs

Create the pv: vi glusterfs-pv.yaml

apiVersion: v1
kind: PersistentVolume
metadata:
  name: glusterfs
  labels:
    type: glusterfs
spec:
  capacity:
    storage: 100M
  accessModes:
    - ReadWriteMany
  glusterfs:
    endpoints: "glusterfs"
    path: "intmall"
    readOnly: false

Apply it

kubectl apply -f glusterfs-pv.yaml

kubectl get pv

Create the pvc: vi glusterfs-pvc.yaml

kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: gluster
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 90Mi

Apply it

kubectl apply -f glusterfs-pvc.yaml

kubectl get pvc

kubectl get pv
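A claim can bind to a volume only if the volume's capacity is at least the requested size (and the access modes match). The pv uses the decimal suffix `M` while the pvc uses the binary suffix `Mi`, so the comparison is easier to follow in bytes. The helpers below are a hypothetical sketch that handles only the suffixes listed; they are not part of kubectl.

```shell
#!/bin/sh
# Convert a Kubernetes-style quantity to bytes (only these suffixes are handled).
to_bytes() {
    case "$1" in
        *Ki) echo $(( ${1%Ki} * 1024 )) ;;
        *Mi) echo $(( ${1%Mi} * 1024 * 1024 )) ;;
        *Gi) echo $(( ${1%Gi} * 1024 * 1024 * 1024 )) ;;
        *k)  echo $(( ${1%k} * 1000 )) ;;
        *M)  echo $(( ${1%M} * 1000 * 1000 )) ;;
        *G)  echo $(( ${1%G} * 1000 * 1000 * 1000 )) ;;
        *)   echo "$1" ;;
    esac
}

# Return 0 when a request of size $1 fits into a volume of capacity $2.
claim_fits() {
    [ "$(to_bytes "$1")" -le "$(to_bytes "$2")" ]
}

# Example (commented out): the 90Mi claim against the 100M volume.
# claim_fits 90Mi 100M && echo "the claim can bind"
```

Here 90Mi is 94371840 bytes and 100M is 100000000 bytes, so the claim fits; a request of 100Mi (104857600 bytes) would not.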

Create a Pod that uses the pvc: vi glusterfs-pod.yaml

apiVersion: v1
kind: Pod
metadata:
  name: nginx-pvc
spec:
  containers:
    - name: nginx
      image: nginx:1.13
      ports:
        - containerPort: 80
      volumeMounts:
        - name: html
          mountPath: /usr/share/nginx/html
  volumes:
    - name: html
      persistentVolumeClaim:
        claimName: gluster

Apply it

kubectl apply -f glusterfs-pod.yaml

kubectl get pod

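Deploying services with Jenkins

References:

http://events.jianshu.io/p/d28bd468d76d

Jenkins: pushing images to the Aliyun registry

https://blog.csdn.net/ichen820/article/details/103461148

CentOS7 Jenkins installation tutorial

https://blog.csdn.net/weixin_44896406/article/details/127032933

Installing jenkins on Linux (rpm package)

https://blog.csdn.net/C343500263/article/details/123400385

Pitfall log: jenkins configuration file not taking effect, and other issues

https://www.cnblogs.com/creamk87/p/14504323.html

Deploying Jenkins on Centos7, configured to automatically pull automated-test code from gitlab, then build and run it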

Install Jenkins

Search for jenkins at https://mirrors.tuna.tsinghua.edu.cn/ and download jenkins-2.346.3-1.1.noarch.rpm

Install

yum -y install jenkins-2.346.3-1.1.noarch.rpm

Edit the configuration file: vim /etc/sysconfig/jenkins

JENKINS_PORT="8084"

JENKINS_USER="root"

JENKINS_JAVA_OPTIONS="-Djava.awt.headless=true -Dorg.apache.commons.jelly.tags.fmt.timeZone=Asia/Shanghai"

Start Jenkins

systemctl enable --now jenkins

If this error appears:

systemctl status jenkins.service

● jenkins.service - Jenkins Continuous Integration Server

Loaded: loaded (/usr/lib/systemd/system/jenkins.service; enabled; vendor preset: disabled)

Active: failed (Result: start-limit) since Thu 2022-11-17 14:08:38 UTC; 8s ago

Process: 24235 ExecStart=/usr/bin/jenkins (code=exited, status=1/FAILURE)

Main PID: 24235 (code=exited, status=1/FAILURE)

# Create a symlink for the JDK's java binary

ln -s /usr/local/jdk1.8.0_202/bin/java /usr/bin/java

systemctl daemon-reload

systemctl enable --now jenkins

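If a port-in-use error still occurs, Jenkins is running with a different configuration file than the one edited above

# Find the configuration file in use (the Loaded: line shows the unit file)

systemctl status jenkins

vi /usr/lib/systemd/system/jenkins.service

# In the unit file the same settings are written as systemd directives

Environment="JENKINS_PORT=8084"

User=root

Environment="JAVA_OPTS=-Djava.awt.headless=true -Dorg.apache.commons.jelly.tags.fmt.timeZone=Asia/Shanghai"

systemctl daemon-reload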

systemctl enable --now jenkins

Configure Jenkins

http://192.168.56.101:8084

Switch the plugin update source to a mirror in China

cd /var/lib/jenkins/updates

cp default.json default.json.bakup

sed -i 's#updates.jenkins.io/download/plugins#mirrors.tuna.tsinghua.edu.cn/jenkins/plugins#g' default.json

sed -i 's#www.google.com#www.baidu.com#g' default.json

systemctl restart jenkins

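Install the gitee plugin

Configure credentials for gitee

Manage Credentials -> Global: add a Gitee API token

Generate a personal access token at https://gitee.com/profile/personal_access_tokens

Add the Gitee connection settings

Jenkins -> Manage Jenkins -> Configure System -> Gitee configuration -> Gitee connection

https://gitee.com

The credential for the git repository can only be an SSH key or a username/password credential. The Gitee API token credential is used only by the trigger plugin; these are currently two separate sets of credentials.

So an SSH key or username/password credential must be configured separately for the git source-code-management plugin.

Add a username credential for the Aliyun image registry; its ID can be ALIYUN_ACCOUNT

Prepare a gitee test project and modify its configuration

Search gitee for h5_demo and fork it to your own account

Create a Dockerfile in the h5_demo root directory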

FROM nginx:1.21.6

ADD . /usr/share/nginx/html/

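Exclude the Dockerfile itself when files are copied into the image

vi .dockerignore

Dockerfile

On Windows, run this in cmd: echo Dockerfile > .dockerignore

Commit and push the code to gitee

Test the image

# Note: this is a private project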

git clone https://gitee.com/galen.zhang/h5_demo

cd h5_demo

docker build -t h5_demo:v1 .

docker run -d -p 88:80 h5_demo:v1

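curl http://127.0.0.1:88

Create the Jenkins project

Create a project in Jenkins with the git repository https://gitee.com/galen.zhang/h5_demo

Select the global credential

Run one build first to check that the project code can be pulled

Build the image in the shell step and push it to the Aliyun image registry

#ALIYUN_REGISTRY = credentials('ALIYUN_ACCOUNT')

#docker login --username=${ALIYUN_REGISTRY_USR} --password=${ALIYUN_REGISTRY_PSW} registry.cn-hangzhou.aliyuncs.com

#docker build -t registry.cn-hangzhou.aliyuncs.com/intmall/h5_demo:v1 .

#docker push registry.cn-hangzhou.aliyuncs.com/intmall/h5_demo:v1

# The lines above fail with the error below and this is unresolved for now; credentials() is Jenkins Pipeline syntax, not shell

#syntax error near unexpected token `('

# For now, put the username and password in directly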

docker login --username=xxxx --password=xxxx registry.cn-hangzhou.aliyuncs.com

docker build -t registry.cn-hangzhou.aliyuncs.com/intmall/h5_demo:v1 .

docker push registry.cn-hangzhou.aliyuncs.com/intmall/h5_demo:v1
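One possible way to avoid hard-coding the password is sketched below. It rests on assumptions not covered in the original: the Credentials Binding plugin is installed, and the job's "Use secret text(s) or file(s)" option binds the ALIYUN_ACCOUNT credential to two environment variables, here given the hypothetical names `ALIYUN_USR` and `ALIYUN_PSW`. The shell step then reads plain environment variables, and the `credentials()` call that caused the syntax error is no longer needed.

```shell
#!/bin/sh
# Log in to the registry with credentials taken from the environment.
# ALIYUN_USR and ALIYUN_PSW are hypothetical variable names chosen in the
# job's credentials binding; Jenkins does not define them by itself.
registry_login() {
    registry="registry.cn-hangzhou.aliyuncs.com"
    if [ -z "${ALIYUN_USR:-}" ] || [ -z "${ALIYUN_PSW:-}" ]; then
        echo "ALIYUN_USR and ALIYUN_PSW must be set" >&2
        return 1
    fi
    docker login --username="$ALIYUN_USR" --password="$ALIYUN_PSW" "$registry"
}

# Example use in the build step (commented out):
# registry_login || exit 1
```

The function refuses to run when either variable is missing, so a misconfigured binding fails early instead of sending an empty password to the registry.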

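Build the project, then check in the Aliyun image registry that the image was pushed

Use a variable so that every build produces an image with a different version number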

docker build -t registry.cn-hangzhou.aliyuncs.com/intmall/h5_demo:v$BUILD_ID .

docker push registry.cn-hangzhou.aliyuncs.com/intmall/h5_demo:v$BUILD_ID

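If the code has not changed, skip the rebuild

#!/bin/bash

# Quote both variables: GIT_PREVIOUS_SUCCESSFUL_COMMIT is empty on the first build
if [ "$GIT_PREVIOUS_SUCCESSFUL_COMMIT" = "$GIT_COMMIT" ]; then

echo "no change, skip build"

exit 0

else

echo "git pull"

fi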
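The change check and the versioned tag can be combined into two small helpers. This is only a sketch: `should_build` and `image_ref` are hypothetical names, while `GIT_PREVIOUS_SUCCESSFUL_COMMIT`, `GIT_COMMIT` and `BUILD_ID` are the Jenkins variables already used above.

```shell
#!/bin/sh
# Return 0 (build) when there is no previous successful commit, or when it
# differs from the current commit; return 1 (skip) when they are identical.
should_build() {
    prev="$1"
    cur="$2"
    [ -z "$prev" ] || [ "$prev" != "$cur" ]
}

# Compose the image reference for a given Jenkins build number.
image_ref() {
    echo "registry.cn-hangzhou.aliyuncs.com/intmall/h5_demo:v$1"
}

# Example use in the build step (commented out):
# if should_build "$GIT_PREVIOUS_SUCCESSFUL_COMMIT" "$GIT_COMMIT"; then
#     docker build -t "$(image_ref "$BUILD_ID")" .
#     docker push "$(image_ref "$BUILD_ID")"
# else
#     echo "no change, skip build"
# fi
```

Treating an empty previous commit as "build" means the first run of a new job always produces an image.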

Deploy the project in k8s

kubectl run h5-demo --image=registry.cn-hangzhou.aliyuncs.com/intmall/h5_demo:v1 --replicas=2 --record

kubectl rollout history deployment h5-demo

kubectl get all

kubectl expose deployment h5-demo --port=80 --type=NodePort

Upgrade and rollback

kubectl set image deploy h5-demo h5-demo=registry.cn-hangzhou.aliyuncs.com/intmall/h5_demo:v12

kubectl rollout history deployment h5-demo

kubectl rollout undo deployment h5-demo --to-revision=1

# -s specifies the address of the k8s apiserver

kubectl -s 192.168.56.101:8080 get nodes

To build and deploy to k8s from Jenkins, add the following to the shell step

kubectl -s 192.168.56.101:8080 set image deploy h5-demo h5-demo=registry.cn-hangzhou.aliyuncs.com/intmall/h5_demo:v$BUILD_ID
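As a possible refinement, the build step can wait for the rollout to finish so that a failed deployment also fails the Jenkins build. The sketch below wraps the command above in a hypothetical function `deploy_build` and reuses `kubectl rollout status`, which appears earlier in this article; the apiserver address and image name are the ones used throughout.

```shell
#!/bin/sh
# Point the h5-demo deployment at the image for build $1, then wait for the
# rollout. Returns non-zero if either kubectl call fails.
deploy_build() {
    build_id="$1"
    api_server="192.168.56.101:8080"
    image="registry.cn-hangzhou.aliyuncs.com/intmall/h5_demo:v${build_id}"
    kubectl -s "$api_server" set image deploy h5-demo "h5-demo=${image}" &&
        kubectl -s "$api_server" rollout status deployment/h5-demo
}

# Example use in the build step (commented out):
# deploy_build "$BUILD_ID" || exit 1
```

Because the two calls are chained with `&&`, the rollout status is only checked when the image update itself succeeded.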

Modify the h5_demo code, push it, and rebuild in Jenkins
