Competition Description

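User loan default prediction is a binary classification task in which `label` is the response variable, and submissions are evaluated by AUC. The fields and their meanings are listed below. The dataset is of high quality and has no missing values. Because all fields have been standardized and anonymized, outliers are hard to analyze.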

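| Field | Description | Type / processing |
|---|---|---|
| id | Unique sample identifier | Anonymized |
| income | User income | Standardized |
| age | User age | Standardized |
| experience_years | Years of work experience | Standardized |
| is_married | Whether the user is married | Anonymized |
| city | City of residence (anonymized) | Standardized |
| region | Region of residence (anonymized) | Standardized |
| current_job_years | Years in the current job | Anonymized |
| current_house_years | Years living in the current house | Standardized |
| house_ownership | House type: rented; owned; none | Standardized |
| car_ownership | Whether the user owns a car | Anonymized |
| profession | Profession (anonymized) | Standardized |
| label | Whether the user has defaulted in the past | float |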
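AUC (area under the ROC curve) is the probability that a randomly chosen positive sample gets a higher score than a randomly chosen negative one, with ties counting one half. The NumPy sketch below implements that rank-based definition. The helper name `auc_from_scores` is hypothetical and does not come from the original solution.

```python
import numpy as np


def auc_from_scores(y_true, y_score):
    """AUC as P(score of a positive > score of a negative); ties count 0.5."""
    y_true = np.asarray(y_true)
    y_score = np.asarray(y_score, dtype=float)
    pos = y_score[y_true == 1]
    neg = y_score[y_true == 0]
    # Compare every positive with every negative (O(n_pos * n_neg), fine for a demo)
    greater = (pos[:, None] > neg[None, :]).sum()
    ties = (pos[:, None] == neg[None, :]).sum()
    return (greater + 0.5 * ties) / (len(pos) * len(neg))


# 3 of the 4 positive/negative pairs are ranked correctly
print(auc_from_scores([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8]))  # 0.75
```

AUC depends only on how the scores are ordered. Any monotone transform of the predicted probabilities therefore leaves it unchanged.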

Feature Engineering

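In data science competitions, feature engineering is extremely important. It matters far more than the choice of model and hyperparameters.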

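The feature engineering covered the following:

- Group-by statistics: for each categorical feature, aggregate the continuous features within each category to derive new features.
- Binning of income, age, and years of experience.
- Target encoding of the categorical features.
- Class-imbalance handling with SMOTE+ENN resampling. The score dropped instead of improving, presumably because resampling and cleaning introduced noise and discarded information, so this step was not used in the end.
- Standardization and normalization of the continuous features.
- Second-order combinations (pairwise crosses) of the categorical features.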
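The post does not show dedicated Target Encoding code. A common leakage-safe variant is out-of-fold encoding. Each training row is encoded with category means computed on the other folds, and each test row is encoded with means from the full training set. The sketch below assumes a pandas DataFrame with a 0/1 `label` column. The function name `oof_target_encode`, the random fold assignment and the smoothing weight `alpha` are all illustrative assumptions, not the author's settings.

```python
import numpy as np
import pandas as pd


def oof_target_encode(train, test, col, target="label", n_folds=5, alpha=10.0, seed=0):
    """Out-of-fold, smoothed mean-target encoding of one categorical column."""
    prior = train[target].mean()

    def smoothed_means(df):
        # Shrink each category's label mean toward the global prior;
        # rare categories (small count) stay close to the prior
        stats = df.groupby(col)[target].agg(["sum", "count"])
        return (stats["sum"] + alpha * prior) / (stats["count"] + alpha)

    rng = np.random.default_rng(seed)
    fold_id = rng.integers(0, n_folds, size=len(train))  # random fold per row
    train_enc = pd.Series(prior, index=train.index, dtype=float)
    for k in range(n_folds):
        in_fold = fold_id == k
        means = smoothed_means(train[~in_fold])
        # Rows in fold k are encoded with statistics from the other folds only
        train_enc[in_fold] = train.loc[in_fold, col].map(means).fillna(prior).to_numpy()
    # Test rows use statistics from the full training set; unseen categories get the prior
    test_enc = test[col].map(smoothed_means(train)).fillna(prior)
    return train_enc, test_enc


# Usage on hypothetical toy data
train = pd.DataFrame({"city": ["a", "a", "b", "b", "c"] * 4,
                      "label": [1, 0, 0, 0, 1] * 4})
test = pd.DataFrame({"city": ["a", "b", "z"]})
tr, te = oof_target_encode(train, test, "city")
print(te.round(4).tolist())  # [0.4444, 0.2222, 0.4]
```

Smoothing keeps rare categories from getting extreme encodings. Encoding out of fold keeps a row's own label out of its feature value.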

Group-by statistics

```python
import numpy as np
import pandas as pd
from tqdm import tqdm

# `data` is the DataFrame holding the samples (with 'id' and 'label' columns)


def myEntro(x):
    """Shannon entropy (base 2) of the values in x."""
    x = np.asarray(x)
    _, counts = np.unique(x, return_counts=True)
    p = counts / x.shape[0]
    return float(np.sum(p * np.log2(1.0 / p)))


def myRange(x):
    """Range of the values in x (max - min)."""
    return x.max() - x.min()


feature = ['income', 'age', 'experience_years', 'is_married', 'city',
           'region', 'current_job_years', 'current_house_years', 'house_ownership',
           'car_ownership', 'profession']
# Ratio features that were tried and later commented out:
# 'current_job_years_precentage', 'income_pre_age',
# 'income_pre_experience_years', 'experience_years_pre_age'

# Categorical features
cat_feature = ['is_married', 'city', 'region', 'house_ownership', 'car_ownership', 'profession']
# Continuous features
num_feature = ['income', 'age', 'experience_years', 'current_job_years', 'current_house_years']
# Aggregations computed within each category
# (myRms, myMode, myQ25, myQ75, myQ10, myQ90 were also tried)
ways = ['mean', 'max', 'min', 'std', 'sum', 'median', myEntro, myRange]

for cat in tqdm(cat_feature):
    # Category frequency
    data[cat + "_count"] = data.groupby(cat)['id'].transform('count')
    feature.append(cat + "_count")
    # Mean label per category (a simple target encoding; it includes each
    # row's own label, which can leak the target into the feature)
    data[cat + "_label_mean"] = data.groupby(cat)['label'].transform('mean')
    feature.append(cat + "_label_mean")
    for num in num_feature:
        for way in ways:
            # Use the function name for custom aggregations; str(way) on a function
            # would produce "<function myEntro at 0x...>" as the column name
            way_name = way if isinstance(way, str) else way.__name__
            col = num + '_' + cat + "_" + way_name
            data[col] = data.groupby(cat)[num].transform(way)
            feature.append(col)
```
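The next example shows what these features look like. It applies the same `groupby(...).transform(...)` pattern to a hypothetical toy frame with invented values. `entropy` and `value_range` are illustrative stand-ins for `myEntro` and `myRange`.

```python
import numpy as np
import pandas as pd


def entropy(x):
    """Shannon entropy (bits) of the value distribution in x."""
    _, counts = np.unique(np.asarray(x), return_counts=True)
    p = counts / counts.sum()
    return float(np.sum(p * np.log2(1.0 / p)))


def value_range(x):
    return x.max() - x.min()


toy = pd.DataFrame({
    "city": ["a", "a", "a", "a", "b", "b"],
    "income": [1.0, 1.0, 2.0, 2.0, 5.0, 5.0],
})
for way in ["mean", entropy, value_range]:
    name = way if isinstance(way, str) else way.__name__
    # transform broadcasts each group's statistic back to every row of that group
    toy[f"income_city_{name}"] = toy.groupby("city")["income"].transform(way)
print(toy)
```

City `a` has two equally frequent income values, so its entropy is 1 bit. City `b` has a single value, so its entropy and range are both 0.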

Binning

```python
# Continuous features = everything that is not categorical
num_feature = list(set(feature) - set(cat_feature))

# Equal-width binning; the bin indices become new categorical features
for col, n_bins in [('income', 100), ('age', 10), ('experience_years', 5)]:
    data[col + '_cut'] = pd.cut(data[col], bins=n_bins, labels=range(n_bins)).astype('int')
    feature.append(col + '_cut')
    cat_feature.append(col + '_cut')
```
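`pd.cut(x, bins=k)` splits the range of `x` into k equal-width intervals. On a skewed feature, most rows therefore fall into a few bins. The sketch below runs on hypothetical log-normal data. `pd.qcut` (equal-frequency bins) appears only for comparison; the solution itself uses `pd.cut`.

```python
import numpy as np
import pandas as pd

rng = np.random.default_rng(42)
income = pd.Series(rng.lognormal(mean=0.0, sigma=1.0, size=10_000))  # skewed toy data

# Equal-width bins, as in the solution above
cut_bins = pd.cut(income, bins=10, labels=range(10)).astype(int)
# Equal-frequency bins, shown only for comparison
qcut_bins = pd.qcut(income, q=10, labels=range(10)).astype(int)

print(cut_bins.value_counts().sort_index())   # most rows land in the lowest bins
print(qcut_bins.value_counts().sort_index())  # about 1,000 rows per bin
```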

Standardization and normalization

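```python
from sklearn.preprocessing import MinMaxScaler, StandardScaler

# Scale only the continuous features
num_feature = list(set(feature) - set(cat_feature))

transfer = StandardScaler()
data[num_feature] = transfer.fit_transform(data[num_feature])

# Note: min-max scaling after standardization gives the same values as
# min-max scaling alone, because it is invariant to positive affine transforms
transfer = MinMaxScaler()
data[num_feature] = transfer.fit_transform(data[num_feature])
```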
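Min-max scaling cancels any prior affine transform with a positive scale factor. Applying it after standardization therefore yields the same values as applying it directly. The check below uses plain NumPy on hypothetical data, with `standardize` and `min_max` mirroring what `StandardScaler` and `MinMaxScaler` compute for a single column.

```python
import numpy as np


def standardize(x):
    return (x - x.mean()) / x.std()


def min_max(x):
    return (x - x.min()) / (x.max() - x.min())


rng = np.random.default_rng(0)
x = rng.normal(loc=50.0, scale=12.0, size=1_000)

both = min_max(standardize(x))  # StandardScaler, then MinMaxScaler
only = min_max(x)               # MinMaxScaler alone
print(np.allclose(both, only))  # True
```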

Second-order combinations of categorical features

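```python
from sklearn.preprocessing import LabelEncoder

enc = LabelEncoder()
num = len(cat_feature)  # fixed before the loop, so new crosses are not crossed again
for i in tqdm(range(num)):
    # Start at i + 1: the original range(i, num) also "crossed" each feature
    # with itself, which only duplicates that feature
    for j in range(i + 1, num):
        name = str(cat_feature[i]) + "_" + str(cat_feature[j])
        # A separator keeps pairs such as (1, 23) and (12, 3) from colliding
        data[name] = data[cat_feature[i]].astype(str) + "_" + data[cat_feature[j]].astype(str)
        data[name] = enc.fit_transform(data[name])
        feature.append(name)
        cat_feature.append(name)
```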
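If two codes are concatenated without a separator, different pairs can map to the same string: (1, 23) and (12, 3) both become "123". The sketch below shows the collision and a pairwise cross with an explicit separator. For brevity it uses `pd.factorize` instead of `LabelEncoder`. Both assign integer codes, but `pd.factorize` numbers values in order of appearance, whereas `LabelEncoder` numbers them in sorted order.

```python
import itertools

import pandas as pd

df = pd.DataFrame({"a": [1, 12, 2], "b": [23, 3, 5], "c": [0, 0, 1]})

# Without a separator, rows 0 and 1 collide
naive = df["a"].astype(str) + df["b"].astype(str)
print(naive.tolist())  # ['123', '123', '25']

cross_cols = []
for f1, f2 in itertools.combinations(["a", "b", "c"], 2):  # each unordered pair once, no self-pairs
    name = f"{f1}_{f2}"
    codes, _ = pd.factorize(df[f1].astype(str) + "_" + df[f2].astype(str))
    df[name] = codes
    cross_cols.append(name)
print(cross_cols)  # ['a_b', 'a_c', 'b_c']
```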

Modeling

CatBoost, XGBoost, and LightGBM were all tried. CatBoost performed best. Because of time constraints, the models were not ensembled, and only CatBoost was used.

Building the model

```python
import time

import lightgbm as lgb
import matplotlib.pyplot as plt
import numpy as np
import pandas as pd
import seaborn as sns
import xgboost as xgb
from catboost import CatBoostClassifier
from lightgbm import LGBMClassifier
from sklearn import metrics


def train_model_classification(X, X_test, y, params, num_classes=2,
                               folds=None, model_type='lgb',
                               eval_metric='logloss', columns=None,
                               plot_feature_importance=False,
                               model=None, verbose=10000,
                               early_stopping_rounds=200,
                               splits=None, n_folds=3):
    """
    Classification training with K-fold cross-validation.

    Returns a dict with out-of-fold (oof) predictions, test predictions,
    per-fold scores and, if requested, feature importances.

    :param X: training data, pd.DataFrame
    :param X_test: test data, pd.DataFrame
    :param y: target
    :param folds: CV splitter used to split the data (e.g. StratifiedKFold)
    :param model_type: 'lgb', 'xgb', 'cat' or 'sklearn'
    :param eval_metric: evaluation metric key, e.g. 'logloss'
    :param columns: feature columns (default: all columns of X)
    :param plot_feature_importance: whether to plot feature importance (lgb/xgb only)
    :param model: sklearn model, used only when model_type == 'sklearn'
    """
    start_time = time.time()
    columns = X.columns if columns is None else columns
    X_test = X_test[columns]
    # Determine the fold count before `splits` is reassigned; the original checked
    # `splits is None` after the assignment, so it always fell back to n_folds
    n_splits = folds.n_splits if splits is None else n_folds
    splits = folds.split(X, y) if splits is None else splits

    # Metric names used by each library
    metrics_dict = {
        'logloss': {
            'lgb_metric_name': 'logloss',
            'xgb_metric_name': 'logloss',
            'catboost_metric_name': 'Logloss',
            'sklearn_scoring_function': metrics.log_loss,
        },
        'lb_score_method': {  # leaderboard-style evaluation metrics
            'sklearn_scoring_f1': metrics.f1_score,
            'sklearn_scoring_accuracy': metrics.accuracy_score,
            'sklearn_scoring_auc': metrics.roc_auc_score,
        },
    }

    result_dict = {}
    oof = np.zeros(shape=(len(X), num_classes))              # out-of-fold predictions on train data
    prediction = np.zeros(shape=(len(X_test), num_classes))  # test predictions, averaged over folds
    acc_scores = []  # per-fold accuracy
    scores = []      # per-fold AUC
    feature_importance = pd.DataFrame()

    for fold_n, (train_index, valid_index) in enumerate(splits):
        if verbose:
            print(f'Fold {fold_n + 1} started at {time.ctime()}')
        if isinstance(X, np.ndarray):
            X_train, X_valid = X[train_index], X[valid_index]
            y_train, y_valid = y[train_index], y[valid_index]
        else:
            X_train, X_valid = X[columns].iloc[train_index], X[columns].iloc[valid_index]
            y_train, y_valid = y.iloc[train_index], y.iloc[valid_index]

        # Resampling was tried here but lowered the score, so it is disabled:
        # smo_enn = SMOTENC(categorical_features=[i for i in range(len(feature)) if feature[i] in cat_feature],
        #                   random_state=2023, n_jobs=-1)
        # X_train, y_train = smo_enn.fit_resample(X_train, y_train)

        if model_type == 'lgb':
            model = LGBMClassifier(**params)
            # LightGBM >= 4.0 takes early stopping and logging as callbacks
            model.fit(X_train, y_train,
                      eval_set=[(X_train, y_train), (X_valid, y_valid)],
                      eval_metric=metrics_dict[eval_metric]['lgb_metric_name'],
                      callbacks=[lgb.early_stopping(early_stopping_rounds),
                                 lgb.log_evaluation(verbose)])
            y_pred_valid = model.predict_proba(X_valid)
            y_pred = model.predict_proba(X_test, num_iteration=model.best_iteration_)

        if model_type == 'xgb':
            # XGBoost >= 1.6 takes eval_metric / early_stopping_rounds in the constructor;
            # predict_proba then uses the best iteration automatically
            model = xgb.XGBClassifier(**params,
                                      eval_metric=metrics_dict[eval_metric]['xgb_metric_name'],
                                      early_stopping_rounds=early_stopping_rounds)
            model.fit(X_train, y_train,
                      eval_set=[(X_train, y_train), (X_valid, y_valid)],
                      verbose=bool(verbose))
            y_pred_valid = model.predict_proba(X_valid)
            y_pred = model.predict_proba(X_test)

        if model_type == 'sklearn':
            model.fit(X_train, y_train)
            y_pred_valid = model.predict_proba(X_valid)
            score = metrics_dict[eval_metric]['sklearn_scoring_function'](y_valid, y_pred_valid)
            print(f'Fold {fold_n}. {eval_metric}: {score:.4f}.')
            y_pred = model.predict_proba(X_test)

        if model_type == 'cat':
            # Early stopping comes from od_type / od_wait in params;
            # cat_features uses the global cat_feature list built above
            model = CatBoostClassifier(iterations=20000,
                                       eval_metric=metrics_dict[eval_metric]['catboost_metric_name'],
                                       loss_function=metrics_dict[eval_metric]['catboost_metric_name'],
                                       **params)
            model.fit(X_train, y_train, eval_set=(X_valid, y_valid),
                      cat_features=cat_feature,
                      use_best_model=True,
                      verbose=False)
            # Also tried: BalancedBaggingClassifier(base_estimator=model, sampling_strategy='auto',
            #                                       replacement=False, random_state=0)
            y_pred_valid = model.predict_proba(X_valid)
            y_pred = model.predict_proba(X_test)

        oof[valid_index] = y_pred_valid
        # Per-fold evaluation
        acc_scores.append(metrics_dict['lb_score_method']['sklearn_scoring_accuracy'](
            y_valid, np.argmax(y_pred_valid, axis=1)))
        scores.append(metrics_dict['lb_score_method']['sklearn_scoring_auc'](
            y_valid, y_pred_valid[:, 1]))
        print(acc_scores)
        print(scores)
        prediction += y_pred

        if model_type in ('lgb', 'xgb') and plot_feature_importance:
            fold_importance = pd.DataFrame({
                'feature': columns,
                'importance': model.feature_importances_,
                'fold': fold_n + 1,
            })
            feature_importance = pd.concat([feature_importance, fold_importance], axis=0)

    prediction /= n_splits
    print('CV mean score: {0:.4f}, std: {1:.4f}.'.format(np.mean(scores), np.std(scores)))
    result_dict['oof'] = oof
    result_dict['prediction'] = prediction
    result_dict['acc_scores'] = acc_scores
    result_dict['scores'] = scores

    if model_type in ('lgb', 'xgb') and plot_feature_importance:
        feature_importance['importance'] /= n_splits
        cols = (feature_importance[['feature', 'importance']]
                .groupby('feature').mean()
                .sort_values(by='importance', ascending=False)[:50].index)
        best_features = feature_importance.loc[feature_importance.feature.isin(cols)]
        plt.figure(figsize=(16, 12))
        sns.barplot(x='importance', y='feature',
                    data=best_features.sort_values(by='importance', ascending=False))
        plt.title(f'{model_type.upper()} Features (avg over folds)')
        plt.show()
        result_dict['feature_importance'] = feature_importance

    end_time = time.time()
    print("train_model_classification cost time:{}".format(end_time - start_time))
    return result_dict
```
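Without the per-library branches, the function follows a standard K-fold scheme. Each fold's model predicts its held-out rows, which fills the out-of-fold array, and it also predicts the whole test set. The test predictions are then averaged over the folds. The minimal scikit-learn sketch below runs on synthetic data. `LogisticRegression` and `make_classification` are stand-ins for the competition model and data, not what the author used.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import roc_auc_score
from sklearn.model_selection import StratifiedKFold

X, y = make_classification(n_samples=2_000, n_features=10, random_state=0)
X_train, y_train, X_test = X[:1_500], y[:1_500], X[1_500:]

n_splits = 5
folds = StratifiedKFold(n_splits=n_splits, shuffle=True, random_state=1314)
oof = np.zeros(len(X_train))        # out-of-fold probabilities for the training rows
prediction = np.zeros(len(X_test))  # test probabilities, averaged over folds
scores = []

for train_idx, valid_idx in folds.split(X_train, y_train):
    model = LogisticRegression(max_iter=1_000)
    model.fit(X_train[train_idx], y_train[train_idx])
    oof[valid_idx] = model.predict_proba(X_train[valid_idx])[:, 1]
    scores.append(roc_auc_score(y_train[valid_idx], oof[valid_idx]))
    prediction += model.predict_proba(X_test)[:, 1] / n_splits

print(f"CV mean AUC: {np.mean(scores):.4f}, std: {np.std(scores):.4f}")
print(f"Overall OOF AUC: {roc_auc_score(y_train, oof):.4f}")
```

Every training row is predicted exactly once, by a model that never saw it. The OOF array therefore gives an honest validation signal, and it can also serve as input to a later blending step.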

Training and prediction

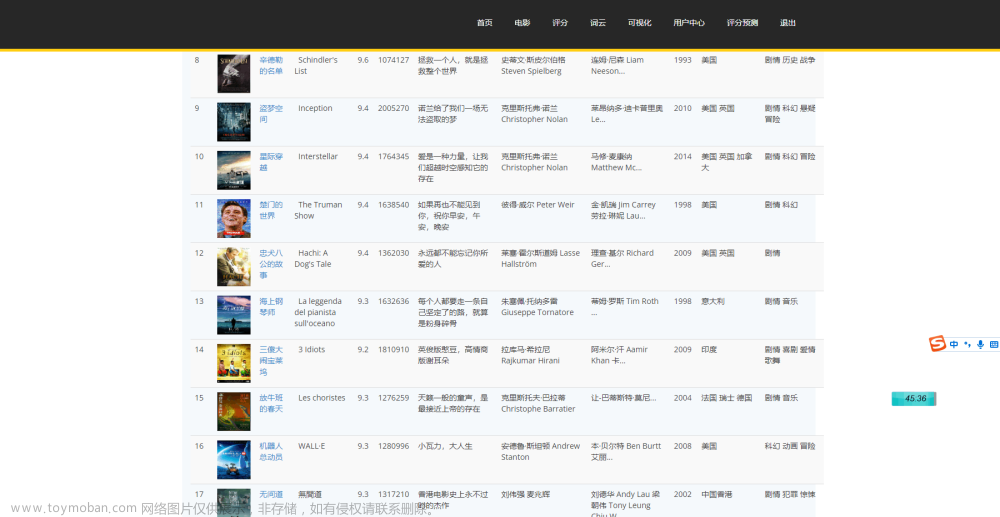

10-fold cross-validation was used, since it performed better than 5-fold and 20-fold.

```python
from sklearn.model_selection import StratifiedKFold

cat_params = {'learning_rate': 0.1, 'depth': 9, 'l2_leaf_reg': 10,
              'bootstrap_type': 'Bernoulli',  # 'task_type': 'GPU',
              'od_type': 'Iter', 'od_wait': 50,  # CatBoost's own early stopping
              'random_seed': 11, 'allow_writing_files': False}
n_fold = 10
num_classes = 2
print("num_classes: {}".format(num_classes))
folds = StratifiedKFold(n_splits=n_fold, random_state=1314, shuffle=True)

# X (training features), Y (labels) and test are prepared outside this snippet;
# X is assumed to hold the `feature` columns of the training rows
result_dict_cat = train_model_classification(X=X,
                                             X_test=test[feature],
                                             y=Y,
                                             params=cat_params,
                                             num_classes=num_classes,
                                             folds=folds,
                                             model_type='cat',
                                             eval_metric='logloss',
                                             plot_feature_importance=True,
                                             verbose=1,
                                             early_stopping_rounds=800,  # originally 400; only used by lgb/xgb
                                             n_folds=n_fold)
```

Final results

The two lists are the per-fold validation accuracy and the per-fold AUC, respectively:

```
[0.9032051282051282, 0.9058333333333334, 0.9024358974358975, 0.9044871794871795, 0.9055769230769231, 0.9019230769230769, 0.9071153846153847, 0.9091025641025641, 0.9046153846153846, 0.9057051282051282]
[0.940374324805705, 0.9433745066510744, 0.9367520694627971, 0.9416194244693141, 0.9405878127004588, 0.9378354914113705, 0.9418489996834368, 0.9442885857569249, 0.9411564723924757, 0.9421991199077591]
CV mean score: 0.9410, std: 0.0022.
train_model_classification cost time:1650.3819825649261
```
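The `CV mean score` line reports the mean and the population standard deviation of the ten per-fold AUCs, as computed by `np.mean(scores)` and `np.std(scores)`. The snippet below recomputes both from the printed list.

```python
import numpy as np

fold_auc = [0.940374324805705, 0.9433745066510744, 0.9367520694627971,
            0.9416194244693141, 0.9405878127004588, 0.9378354914113705,
            0.9418489996834368, 0.9442885857569249, 0.9411564723924757,
            0.9421991199077591]
print(f"CV mean score: {np.mean(fold_auc):.4f}, std: {np.std(fold_auc):.4f}.")
# CV mean score: 0.9410, std: 0.0022.
```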

Competition link

Code link: Heywhale (和鲸社区)

Code link: AIStudio

Code link: GitHub

This concludes the write-up of the User Loan Default Prediction Top-1 solution (single model, 0.9414).