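Contents

Preface

I. Using the YUYV camera with the original example directly

II. Modifying the Qt example

1. Add a YUYV-to-RGB function to capture_thread.cpp

2. Declare the frame buffers

3. The actual YUYV-to-RGB conversion

III. Testing

1. Capturing camera data on the board

2. Client/server communication test

IV. Modified video_server code of the ALIENTEK project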

Preface

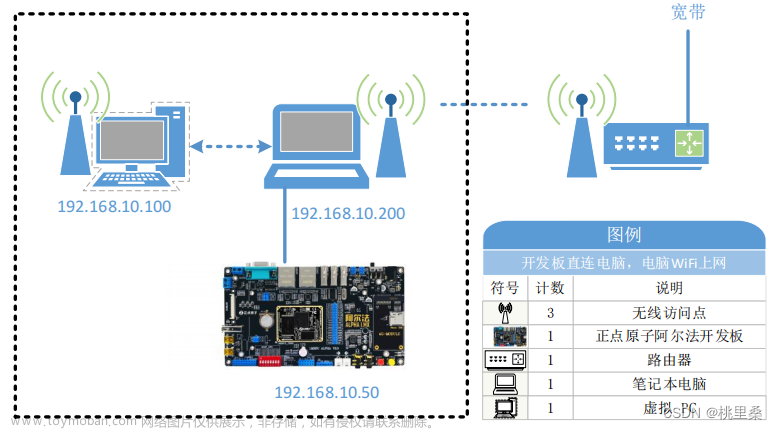

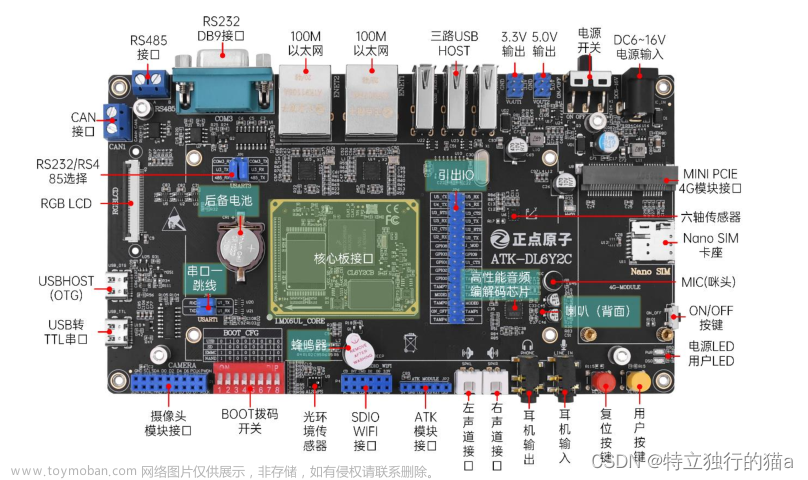

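The ALIENTEK (正点原子) "I.MX6U Embedded Qt Development Guide" uses an OV-series camera that outputs RGB data, which can be shown on the screen directly without any format conversion. My camera is a USB camera that outputs YUYV, so the example has to be adapted as described below.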

Reference: ALIENTEK, "I.MX6U Embedded Qt Development Guide", Chapter 28: Video Surveillance Project

LCD: 4.3-inch, 480×272

Qt projects: server: video_server, client: video_client

一、YUYV摄像头直接使用原始例程

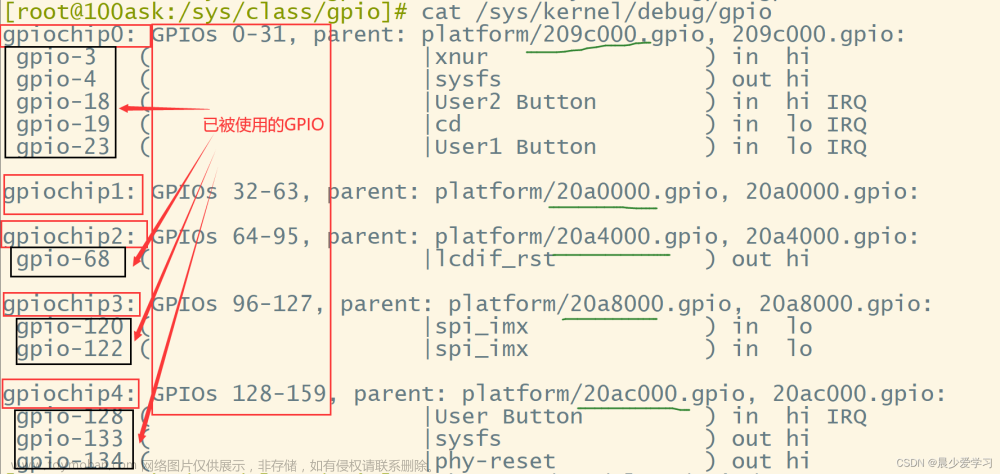

先查看usb摄像头的输出像素格式:v4l2-ctl -d /dev/video1 --all

Then modify ALIENTEK's example directly, changing the requested pixel format to `V4L2_PIX_FMT_YUYV`.
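V4L2 identifies pixel formats by FourCC codes: four ASCII characters packed into a 32-bit value, first character in the lowest byte. That is why `v4l2-ctl` reports the format as `'YUYV'`, and why the listing in section IV prints `pix.pixelformat` one byte at a time. The sketch below mirrors the packing of the `v4l2_fourcc()` macro from `<linux/videodev2.h>` without depending on that header, so it also builds on a PC; `make_fourcc`, `fourcc_from_string` and `fourcc_to_string` are illustrative names, not part of the V4L2 API.

```cpp
#include <cassert>
#include <cstdint>
#include <string>

// Same packing as v4l2_fourcc(a, b, c, d) in <linux/videodev2.h>:
// the first character ends up in the least significant byte.
constexpr std::uint32_t make_fourcc(char a, char b, char c, char d) {
    return static_cast<std::uint32_t>(static_cast<unsigned char>(a)) |
           (static_cast<std::uint32_t>(static_cast<unsigned char>(b)) << 8) |
           (static_cast<std::uint32_t>(static_cast<unsigned char>(c)) << 16) |
           (static_cast<std::uint32_t>(static_cast<unsigned char>(d)) << 24);
}

// "YUYV" -> 0x56595559, the value of V4L2_PIX_FMT_YUYV
std::uint32_t fourcc_from_string(const std::string &name) {
    assert(name.size() == 4 && "a FourCC has exactly four characters");
    return make_fourcc(name[0], name[1], name[2], name[3]);
}

// 0x56595559 -> "YUYV", e.g. for printing fmt.fmt.pix.pixelformat after VIDIOC_G_FMT
std::string fourcc_to_string(std::uint32_t code) {
    std::string name(4, ' ');
    for (int i = 0; i < 4; ++i)
        name[i] = static_cast<char>((code >> (8 * i)) & 0xFF);
    return name;
}
```

On the board, passing `fmt.fmt.pix.pixelformat` (read back with `VIDIOC_G_FMT`) to `fourcc_to_string` gives the same text as the `printf` in the listing.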

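Running the example in this state produces a corrupted picture. The reason is clear: the camera delivers YUYV data, but the example wraps it in a QImage as `Format_RGB16`, so the bytes are interpreted as RGB565 pixels. Both formats use 2 bytes per pixel, so the frame size still matches and a picture appears, but its colors are wrong.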
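To see why the colors go wrong, note that `QImage::Format_RGB16` reads each pair of bytes as one native-endian 16-bit RGB565 pixel, while in a YUYV stream the two byte pairs of a group are (Y0, U) and (Y1, V). Luma and chroma bytes therefore land in the red, green and blue bit fields. The sketch below assumes a little-endian CPU (as on the i.MX6ULL's Cortex-A7) and decodes one such pair; the names are illustrative only.

```cpp
#include <cstdint>

struct Rgb565 {
    unsigned r5;  // red,   0..31
    unsigned g6;  // green, 0..63
    unsigned b5;  // blue,  0..31
};

// Two consecutive bytes combined the way a little-endian CPU loads a 16-bit word
constexpr unsigned load_le16(std::uint8_t first, std::uint8_t second) {
    return static_cast<unsigned>(first) | (static_cast<unsigned>(second) << 8);
}

// Split a 16-bit word into its RGB565 bit fields, as Format_RGB16 does
constexpr Rgb565 split_rgb565(unsigned word) {
    return Rgb565{(word >> 11) & 0x1Fu, (word >> 5) & 0x3Fu, word & 0x1Fu};
}
```

For a mid-gray YUYV pair (Y0 = U = Y1 = V = 128) the first word is 0x8080, which decodes to red 16/31, green 4/63 and blue 0: a dark red instead of gray, so even a flat gray scene would come out with a strong color cast.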

II. Modifying the Qt example

1. Add a YUYV-to-RGB function to capture_thread.cpp
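YUYV (also known as YUY2) stores two horizontally adjacent pixels in 4 bytes, Y0 U Y1 V, and both pixels share the same U and V. For each pixel, the function below applies the ITU-R BT.601 limited-range ("studio swing") equations, where Y is that pixel's own luma (Y0 or Y1) and U, V are the pair's shared chroma, and then clamps each result to 0..255:

$$
\begin{aligned}
R &= 1.164\,(Y-16) + 1.596\,(V-128)\\
G &= 1.164\,(Y-16) - 0.813\,(V-128) - 0.392\,(U-128)\\
B &= 1.164\,(Y-16) + 2.018\,(U-128)
\end{aligned}
$$

The (U−128) coefficient in G follows from the BT.601 luma weights and comes to about 0.392 (some references round it to 0.391).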

```cpp
/* Clamp a converted value to the 0..255 range of one color channel */
static inline unsigned char clamp_to_byte(float v)
{
    if (v > 255.0f) return 255;
    if (v < 0.0f)   return 0;
    return (unsigned char)v;
}

/* Convert one WIDTH x HEIGHT YUYV frame (WIDTH/HEIGHT are defined in step 2)
 * to packed RGB888. Every 4 input bytes Y0 U Y1 V hold 2 pixels that share
 * U and V, and produce 6 output bytes R G B R G B, so rgb must hold
 * WIDTH*HEIGHT*3 bytes. */
void yuyv_to_rgb(const unsigned char *yuv, unsigned char *rgb)
{
    /* '<' rather than '<=': exactly WIDTH*HEIGHT/2 pixel pairs, no overrun */
    for (unsigned int i = 0; i < (WIDTH * HEIGHT) / 2; i++) {
        int y0 = yuv[0] - 16;
        int u  = yuv[1] - 128;
        int y1 = yuv[2] - 16;
        int v  = yuv[3] - 128;

        /* ITU-R BT.601, limited range */
        rgb[0] = clamp_to_byte(1.164f * y0 + 1.596f * v);
        rgb[1] = clamp_to_byte(1.164f * y0 - 0.813f * v - 0.392f * u);
        rgb[2] = clamp_to_byte(1.164f * y0 + 2.018f * u);
        rgb[3] = clamp_to_byte(1.164f * y1 + 1.596f * v);
        rgb[4] = clamp_to_byte(1.164f * y1 - 0.813f * v - 0.392f * u);
        rgb[5] = clamp_to_byte(1.164f * y1 + 2.018f * u);

        yuv += 4;
        rgb += 6;
    }
}
```

2. Declare the frame buffers

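Set WIDTH and HEIGHT according to the actual display. My LCD is 480×272, so I capture the video at 320×240, which fits on the screen; the camera must also support this resolution.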

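```cpp
#define WIDTH  320
#define HEIGHT 240

unsigned char src_buffer[WIDTH * HEIGHT * 2];   // holds the YUYV frame dequeued from V4L2 (2 bytes per pixel)
unsigned char rgb_buffer[WIDTH * HEIGHT * 3];   // holds the RGB888 result of the conversion (3 bytes per pixel)
```

3. The actual YUYV-to-RGB conversion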

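In the capture loop, each frame dequeued with `VIDIOC_DQBUF` is first copied into `src_buffer` with `memcpy`; `yuyv_to_rgb` then converts it and stores the RGB data in `rgb_buffer`, which is what gets displayed on the screen. Because the result is packed 24-bit RGB (R, G, B bytes), the QImage has to be created with `QImage::Format_RGB888` rather than `Format_RGB16`.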
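The per-frame step can be sketched in plain C++ so it can be checked on a PC. This assumes a hypothetical helper `process_frame` whose `mapped` and `bytesused` arguments stand for `bufs_info[buf.index].start` and `buf.bytesused` after `VIDIOC_DQBUF`; `yuyv_to_rgb888` is a local copy of the same BT.601 math with an explicit pixel count. It also spells out the buffer arithmetic: 320×240 YUYV is 153,600 bytes (2 bytes per pixel) and the RGB888 result is 230,400 bytes (3 bytes per pixel). In the Qt code the result would then be wrapped as `QImage(rgb_buffer, WIDTH, HEIGHT, WIDTH * 3, QImage::Format_RGB888)`.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>

constexpr int kWidth = 320;    // assumed to match WIDTH
constexpr int kHeight = 240;   // assumed to match HEIGHT
constexpr std::size_t kYuyvBytes = std::size_t(kWidth) * kHeight * 2;  // 153600
constexpr std::size_t kRgbBytes = std::size_t(kWidth) * kHeight * 3;   // 230400

static std::uint8_t clamp_u8(float v) {
    return v < 0.0f ? 0 : (v > 255.0f ? 255 : static_cast<std::uint8_t>(v));
}

// Same BT.601 math as yuyv_to_rgb(): 4 YUYV bytes -> 2 RGB888 pixels
static void yuyv_to_rgb888(const std::uint8_t *yuv, std::uint8_t *rgb, std::size_t pixels) {
    for (std::size_t i = 0; i < pixels / 2; ++i, yuv += 4, rgb += 6) {
        const float u = yuv[1] - 128.0f, v = yuv[3] - 128.0f;
        for (int k = 0; k < 2; ++k) {
            const float y = 1.164f * (yuv[2 * k] - 16.0f);  // Y0 at yuv[0], Y1 at yuv[2]
            rgb[3 * k + 0] = clamp_u8(y + 1.596f * v);
            rgb[3 * k + 1] = clamp_u8(y - 0.813f * v - 0.392f * u);
            rgb[3 * k + 2] = clamp_u8(y + 2.018f * u);
        }
    }
}

// Hypothetical per-frame step: copy the dequeued frame, then convert it.
// Returns false (frame skipped) if the driver delivered less than a full frame.
bool process_frame(const void *mapped, std::size_t bytesused,
                   std::uint8_t *src, std::uint8_t *rgb) {
    assert(mapped != nullptr && src != nullptr && rgb != nullptr);
    if (bytesused < kYuyvBytes)
        return false;
    std::memcpy(src, mapped, kYuyvBytes);   // exactly one frame, never more than src holds
    yuyv_to_rgb888(src, rgb, std::size_t(kWidth) * kHeight);
    return true;
}
```

Copying exactly one frame's worth, rather than the size of the whole mmap'ed buffer (which may be larger than one frame), keeps the `memcpy` inside `src_buffer`.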

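III. Testing

1. Capturing camera data on the board

Cross-compile the Qt project video_server, copy it to the board and run it, then check that the picture on the local display looks right. Compared with the first attempt, the colors are now displayed correctly.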
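The float conversion in section II performs several floating-point multiplications per pixel on every frame, and the comments in the section IV listing already note that local display and broadcasting make each other stutter. If profiling on the board points at the conversion, a common option is an integer (fixed-point) version of the same BT.601 equations. This is a sketch, not the author's code: `yuyv_to_rgb_fixed` is a hypothetical name and takes an explicit pixel count instead of using WIDTH/HEIGHT. Because it rounds rather than truncates, its output can differ from a truncating float conversion by about one level.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Drop the 8 fraction bits and clamp to 0..255. Negative sums are handled
// before the shift, so no negative value is ever right-shifted.
static inline std::uint8_t clamp_shift8(int v) {
    if (v < 0) return 0;
    v >>= 8;
    return v > 255 ? 255 : static_cast<std::uint8_t>(v);
}

// Hypothetical fixed-point variant of yuyv_to_rgb(), BT.601 limited range.
// Widely used integer constants, scaled by 256: 298 ~ 1.164*256, 409 ~ 1.596*256,
// 100 ~ 0.392*256, 208 ~ 0.813*256, 516 ~ 2.017*256; +128 rounds to nearest.
void yuyv_to_rgb_fixed(const std::uint8_t *yuv, std::uint8_t *rgb, std::size_t pixels) {
    assert(pixels % 2 == 0 && "YUYV stores pixels in pairs");
    for (std::size_t i = 0; i < pixels / 2; ++i, yuv += 4, rgb += 6) {
        const int d = yuv[1] - 128;   // U, shared by both pixels
        const int e = yuv[3] - 128;   // V, shared by both pixels
        for (int k = 0; k < 2; ++k) {
            const int c = 298 * (yuv[2 * k] - 16);   // Y0 at yuv[0], Y1 at yuv[2]
            rgb[3 * k + 0] = clamp_shift8(c + 409 * e + 128);
            rgb[3 * k + 1] = clamp_shift8(c - 100 * d - 208 * e + 128);
            rgb[3 * k + 2] = clamp_shift8(c + 516 * d + 128);
        }
    }
}
```

Whether this is actually faster on the i.MX6ULL depends on the compiler flags and should be measured before switching.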

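2. Client/server communication test

Build the Qt project video_client and run it on the PC.

Run video_server on the board, start capturing and tick 开启广播 (Enable broadcast); the client then shows the video it receives.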
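In the broadcast branch of the section IV listing, each frame is JPEG-encoded, Base64-encoded and sent as a single UDP datagram to port 8888. One IPv4 UDP datagram carries at most 65,507 bytes of payload, and Base64 turns every 3 input bytes into 4, so a JPEG frame larger than about 48 KB cannot be sent this way and the send fails; keeping the frame at 320×240 helps stay under that limit. The arithmetic, as a small sketch with illustrative names:

```cpp
#include <cstddef>

// Maximum UDP payload over IPv4: 65535 - 20 (IPv4 header) - 8 (UDP header)
constexpr std::size_t kMaxUdpPayloadIPv4 = 65535 - 20 - 8;   // 65507

// Base64 output length for n input bytes, with '=' padding
// (QByteArray::toBase64() pads by default)
constexpr std::size_t base64_length(std::size_t n) { return 4 * ((n + 2) / 3); }

// Largest JPEG whose Base64 text still fits in one datagram
constexpr std::size_t kMaxJpegBytes = (kMaxUdpPayloadIPv4 / 4) * 3;   // 49128

constexpr bool fits_one_datagram(std::size_t jpeg_bytes) {
    return base64_length(jpeg_bytes) <= kMaxUdpPayloadIPv4;
}
```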

Article source: https://www.toymoban.com/news/detail-420256.html


IV. Modified video_server code of the ALIENTEK project

```cpp
/******************************************************************
Copyright © Deng Zhimao Co., Ltd. 2021-2030. All rights reserved.
* @projectName   video_server
* @brief         mainwindow.cpp
* @author        Deng Zhimao
* @email         dengzhimao@alientek.com
* @link          www.openedv.com
* @date          2021-11-19
*******************************************************************/
#include "mainwindow.h"

MainWindow::MainWindow(QWidget *parent)
    : QMainWindow(parent)
{
    this->setGeometry(0, 0, 480, 272);   // changed for the 480x272 screen

    videoLabel = new QLabel(this);
    videoLabel->setText("未获取到图像数据或未开启本地显示");   // "No image data, or local display is off"
    videoLabel->setStyleSheet("QWidget {color: white;}");
    videoLabel->setAlignment(Qt::AlignCenter);
    videoLabel->resize(480, 272);   // changed for the 480x272 screen

    checkBox1 = new QCheckBox(this);
    checkBox2 = new QCheckBox(this);
    checkBox1->resize(120, 50);
    checkBox2->resize(120, 50);
    checkBox1->setText("本地显示");   // "Local display"
    checkBox2->setText("开启广播");   // "Enable broadcast"

    /* The indicator is a sub-control, so it needs "::" (a single ":" means a pseudo-state) */
    checkBox1->setStyleSheet("QCheckBox {color: yellow;}"
                             "QCheckBox::indicator {width: 40px; height: 40px;}");
    checkBox2->setStyleSheet("QCheckBox {color: yellow;}"
                             "QCheckBox::indicator {width: 40px; height: 40px;}");

    /* Button */
    startCaptureButton = new QPushButton(this);
    startCaptureButton->setCheckable(true);
    startCaptureButton->setText("开始采集摄像头数据");   // "Start capturing camera data"

    /* Set the background color to black */
    QColor color = QColor(Qt::black);
    QPalette p;
    p.setColor(QPalette::Window, color);
    this->setPalette(p);

    /* Style sheet */
    startCaptureButton->setStyleSheet("QPushButton {background-color: white; border-radius: 30px;}"
                                      "QPushButton:pressed {background-color: red;}");

    captureThread = new CaptureThread(this);

    connect(startCaptureButton, SIGNAL(clicked(bool)), captureThread, SLOT(setThreadStart(bool)));
    connect(startCaptureButton, SIGNAL(clicked(bool)), this, SLOT(startCaptureButtonClicked(bool)));
    connect(captureThread, SIGNAL(imageReady(QImage)), this, SLOT(showImage(QImage)));
    connect(checkBox1, SIGNAL(clicked(bool)), captureThread, SLOT(setLocalDisplay(bool)));
    connect(checkBox2, SIGNAL(clicked(bool)), captureThread, SLOT(setBroadcast(bool)));
}

MainWindow::~MainWindow()
{
}

void MainWindow::showImage(QImage image)
{
    videoLabel->setPixmap(QPixmap::fromImage(image));
}

void MainWindow::resizeEvent(QResizeEvent *event)
{
    Q_UNUSED(event)
    startCaptureButton->move((this->width() - 200) / 2, this->height() - 80);
    startCaptureButton->resize(200, 60);
    videoLabel->move((this->width() - 480) / 2, (this->height() - 272) / 2);   // changed for the 480x272 screen
    checkBox1->move(this->width() - 120, this->height() / 2 - 50);
    checkBox2->move(this->width() - 120, this->height() / 2 + 25);
}

void MainWindow::startCaptureButtonClicked(bool start)
{
    if (start)
        startCaptureButton->setText("停止采集摄像头数据");   // "Stop capturing camera data"
    else
        startCaptureButton->setText("开始采集摄像头数据");   // "Start capturing camera data"
}
```


```cpp
/******************************************************************
Copyright © Deng Zhimao Co., Ltd. 2021-2030. All rights reserved.
* @projectName   video_server
* @brief         capture_thread.cpp
* @author        Deng Zhimao
* @email         dengzhimao@alientek.com
* @link          www.openedv.com
* @date          2021-11-19
*******************************************************************/
#include "capture_thread.h"
#include <string.h>

#define WIDTH  320   // capture and display size
#define HEIGHT 240

/* Clamp a converted value to the 0..255 range of one color channel */
static inline unsigned char clamp_to_byte(float v)
{
    if (v > 255.0f) return 255;
    if (v < 0.0f)   return 0;
    return (unsigned char)v;
}

/* Convert one WIDTH x HEIGHT YUYV frame to packed RGB888.
 * Every 4 input bytes Y0 U Y1 V hold 2 pixels that share U and V,
 * and produce 6 output bytes R G B R G B, so rgb must hold
 * WIDTH*HEIGHT*3 bytes. */
void yuyv_to_rgb(const unsigned char *yuv, unsigned char *rgb)
{
    /* '<' rather than '<=': exactly WIDTH*HEIGHT/2 pixel pairs, no overrun */
    for (unsigned int i = 0; i < (WIDTH * HEIGHT) / 2; i++) {
        int y0 = yuv[0] - 16;
        int u  = yuv[1] - 128;
        int y1 = yuv[2] - 16;
        int v  = yuv[3] - 128;

        /* ITU-R BT.601, limited range */
        rgb[0] = clamp_to_byte(1.164f * y0 + 1.596f * v);
        rgb[1] = clamp_to_byte(1.164f * y0 - 0.813f * v - 0.392f * u);
        rgb[2] = clamp_to_byte(1.164f * y0 + 2.018f * u);
        rgb[3] = clamp_to_byte(1.164f * y1 + 1.596f * v);
        rgb[4] = clamp_to_byte(1.164f * y1 - 0.813f * v - 0.392f * u);
        rgb[5] = clamp_to_byte(1.164f * y1 + 2.018f * u);

        yuv += 4;
        rgb += 6;
    }
}

void CaptureThread::run()
{
    /* For the code below, see the V4L2 camera chapter of ALIENTEK's
       C application programming guide; it is not explained here */
#ifdef linux
#ifndef __arm__
    return;
#endif
    int video_fd = -1;
    struct v4l2_format fmt;
    struct v4l2_requestbuffers req_bufs;
    static struct v4l2_buffer buf;
    int n_buf;
    struct buffer_info bufs_info[VIDEO_BUFFER_COUNT];
    enum v4l2_buf_type type;
    /* static: together about 375 KB, which is better kept off the thread stack */
    static unsigned char src_buffer[WIDTH * HEIGHT * 2];   // YUYV frame, 2 bytes per pixel
    static unsigned char rgb_buffer[WIDTH * HEIGHT * 3];   // RGB888 frame, 3 bytes per pixel

    /* V4L2 expects unused and reserved fields to be zero */
    memset(&fmt, 0, sizeof(fmt));
    memset(&req_bufs, 0, sizeof(req_bufs));
    memset(&buf, 0, sizeof(buf));

    video_fd = open(VIDEO_DEV, O_RDWR);
    if (0 > video_fd) {
        printf("ERROR: failed to open video device %s\n", VIDEO_DEV);
        return;
    }

    fmt.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;
    fmt.fmt.pix.width = WIDTH;
    fmt.fmt.pix.height = HEIGHT;
    fmt.fmt.pix.pixelformat = V4L2_PIX_FMT_YUYV;
    fmt.fmt.pix.field = V4L2_FIELD_INTERLACED;
    if (0 > ioctl(video_fd, VIDIOC_S_FMT, &fmt)) {
        printf("ERROR: failed to VIDIOC_S_FMT\n");
        close(video_fd);
        return;
    }
    if (0 > ioctl(video_fd, VIDIOC_G_FMT, &fmt)) {
        printf("ERROR: failed to VIDIOC_G_FMT\n");
        close(video_fd);
        return;
    }

    printf("fmt.type:\t\t%d\n", fmt.type);
    printf("pix.pixelformat:\t%c%c%c%c\n",
           fmt.fmt.pix.pixelformat & 0xFF,
           (fmt.fmt.pix.pixelformat >> 8) & 0xFF,
           (fmt.fmt.pix.pixelformat >> 16) & 0xFF,
           (fmt.fmt.pix.pixelformat >> 24) & 0xFF);
    printf("pix.width:\t\t%d\n", fmt.fmt.pix.width);
    printf("pix.height:\t\t%d\n", fmt.fmt.pix.height);
    printf("pix.field:\t\t%d\n", fmt.fmt.pix.field);

    /* The driver may adjust the request; yuyv_to_rgb() only handles WIDTH x HEIGHT YUYV */
    if (fmt.fmt.pix.width != WIDTH || fmt.fmt.pix.height != HEIGHT
            || fmt.fmt.pix.pixelformat != V4L2_PIX_FMT_YUYV) {
        printf("ERROR: camera did not accept %dx%d YUYV\n", WIDTH, HEIGHT);
        close(video_fd);
        return;
    }

    req_bufs.count = VIDEO_BUFFER_COUNT;
    req_bufs.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;
    req_bufs.memory = V4L2_MEMORY_MMAP;
    if (0 > ioctl(video_fd, VIDIOC_REQBUFS, &req_bufs)) {
        printf("ERROR: failed to VIDIOC_REQBUFS\n");
        return;
    }

    buf.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;
    buf.memory = V4L2_MEMORY_MMAP;
    for (n_buf = 0; n_buf < VIDEO_BUFFER_COUNT; n_buf++) {
        buf.index = n_buf;
        if (0 > ioctl(video_fd, VIDIOC_QUERYBUF, &buf)) {
            printf("ERROR: failed to VIDIOC_QUERYBUF\n");
            return;
        }
        bufs_info[n_buf].length = buf.length;
        bufs_info[n_buf].start = mmap(NULL, buf.length,
                                      PROT_READ | PROT_WRITE, MAP_SHARED,
                                      video_fd, buf.m.offset);
        if (MAP_FAILED == bufs_info[n_buf].start) {
            printf("ERROR: failed to mmap video buffer, size 0x%x\n", buf.length);
            return;
        }
    }

    for (n_buf = 0; n_buf < VIDEO_BUFFER_COUNT; n_buf++) {
        buf.index = n_buf;
        if (0 > ioctl(video_fd, VIDIOC_QBUF, &buf)) {
            printf("ERROR: failed to VIDIOC_QBUF\n");
            return;
        }
    }

    type = V4L2_BUF_TYPE_VIDEO_CAPTURE;
    if (0 > ioctl(video_fd, VIDIOC_STREAMON, &type)) {
        printf("ERROR: failed to VIDIOC_STREAMON\n");
        return;
    }

    while (startFlag) {
        for (n_buf = 0; n_buf < VIDEO_BUFFER_COUNT; n_buf++) {
            /* Dequeue a filled buffer; the driver reports which one in buf.index */
            if (0 > ioctl(video_fd, VIDIOC_DQBUF, &buf)) {
                printf("ERROR: failed to VIDIOC_DQBUF\n");
                return;
            }

            /* Copy the frame into src_buffer (never more than it holds), then YUYV -> RGB888 */
            size_t len = buf.bytesused < sizeof(src_buffer) ? buf.bytesused : sizeof(src_buffer);
            memcpy(src_buffer, bufs_info[buf.index].start, len);
            yuyv_to_rgb(src_buffer, rgb_buffer);

            /* Packed 24-bit RGB, WIDTH * 3 bytes per line */
            QImage qImage(rgb_buffer, WIDTH, HEIGHT, WIDTH * 3, QImage::Format_RGB888);

            /* Local display and broadcast compete for the CPU: enabling one can make the other stutter */
            if (startLocalDisplay)
                emit imageReady(qImage.copy());   // deep copy: rgb_buffer is overwritten by the next frame

            if (startBroadcast) {
                QUdpSocket udpSocket;              // UDP socket
                QByteArray byte;
                QBuffer buff(&byte);               // in-memory I/O device backed by byte
                qImage.save(&buff, "JPEG", -1);    // encode the frame as JPEG into buff
                QByteArray base64Byte = byte.toBase64();
                /* Send as a UDP broadcast to port 8888 */
                udpSocket.writeDatagram(base64Byte.data(), base64Byte.size(),
                                        QHostAddress::Broadcast, 8888);
            }

            /* Queue the buffer back to the driver */
            if (0 > ioctl(video_fd, VIDIOC_QBUF, &buf)) {
                printf("ERROR: failed to VIDIOC_QBUF\n");
                return;
            }
        }
    }

    msleep(800);   // at least 650

    type = V4L2_BUF_TYPE_VIDEO_CAPTURE;
    ioctl(video_fd, VIDIOC_STREAMOFF, &type);
    for (int i = 0; i < VIDEO_BUFFER_COUNT; i++) {
        munmap(bufs_info[i].start, bufs_info[i].length);
    }
    close(video_fd);
#endif
}
```
