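This project uses Apple's RealityKit together with ARKit to place virtual objects in the real world, and the built-in Speech framework to recognize voice commands. The recognized speech is converted to text, and that text drives the robot's movement.

General approach:

1. Configure and start the ARKit session.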

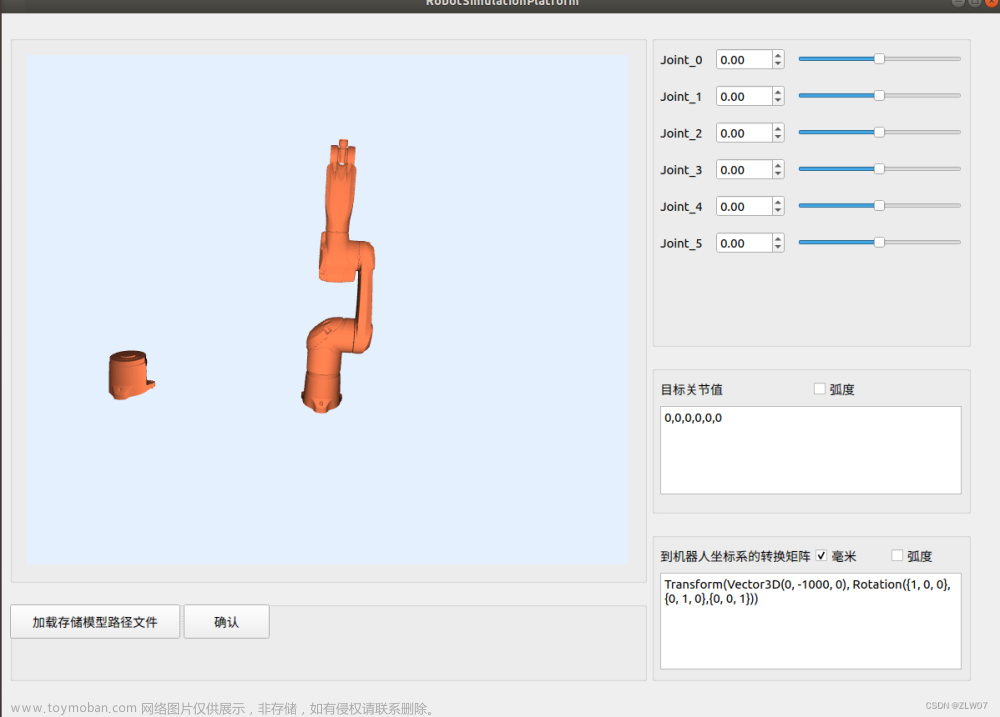

2. Build the Entity. Apple's official CreatingAPhotogrammetryCommandLineApp sample code can generate a .usdz file, so you can build whatever entity you want.

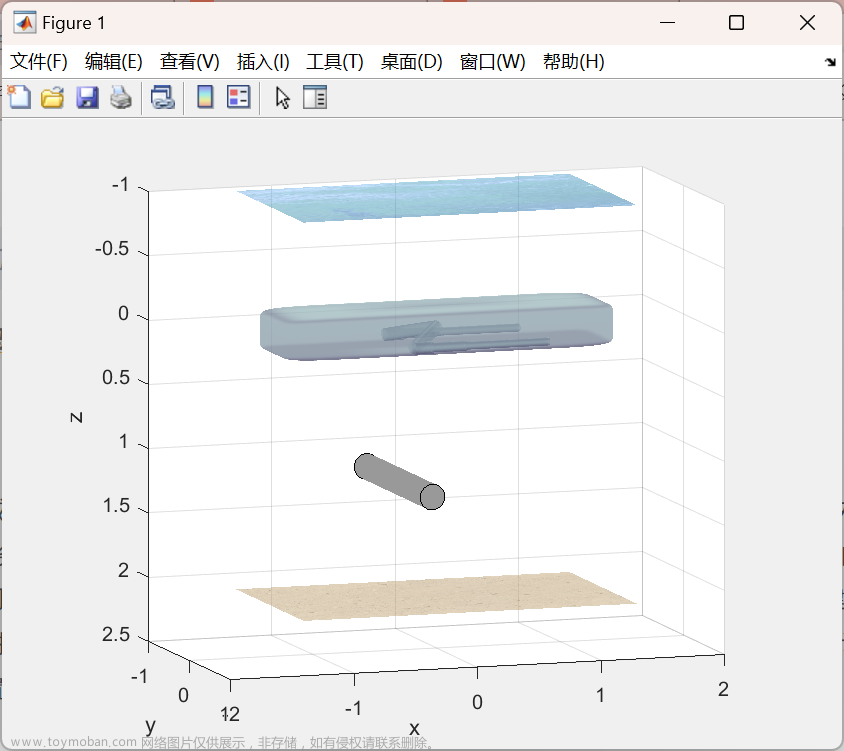

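3. Place the entity in the real environment. Cast a ray with raycast, convert the hit result into x, y, z world coordinates, and anchor the entity at that position.

4. Make the robot move. Pass in the command text and use the entity's transform (its translation and orientation) to move or turn it.

5. Request speech-recognition authorization through SFSpeechRecognizer.

6. Create the audio input node, use the microphone as the input device, and append the captured audio buffers to an SFSpeechAudioBufferRecognitionRequest.

7. Process the recognition result, convert it to text, and pass that text to the robot-movement function.

8. The robot carries out the movement.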
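
Steps 4 and 7 hinge on turning recognized text into a movement command. The sketch below is one way to make that mapping explicit; it is not part of the article's code. RobotCommand and parseCommand(from:) are hypothetical names, and the lowercasing and punctuation handling are assumptions added for illustration. The four command words are the ones the listing's rebotMove(direction:) switches on.

```swift
import Foundation

/// Hypothetical enum (not in the article's code) naming the four commands
/// that rebotMove(direction:) switches on.
enum RobotCommand: String, CaseIterable {
    case forward, back, left, right
}

/// Returns the command matching the last word of a transcript, or nil.
/// Lowercasing and splitting on non-alphanumeric characters are assumptions
/// made here so that "Forward." and "forward" are treated the same.
func parseCommand(from transcript: String) -> RobotCommand? {
    let words = transcript
        .lowercased()
        .components(separatedBy: CharacterSet.alphanumerics.inverted)
        .filter { !$0.isEmpty }
    guard let last = words.last else { return nil }
    return RobotCommand(rawValue: last)
}
```

With this in place, the rawValue of the returned command could be passed to rebotMove(direction:), and anything that is not a known command is dropped before it reaches the movement code.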

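The rest of the code, not covered above, adds properties to the entity so that the robot can be moved, scaled, and rotated.

A few gestures are also recognized: a tap places the robot, a long press adds a new entity, and a swipe removes an entity.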

import UIKit
import RealityKit
import ARKit
import Speech

class ViewController: UIViewController {

    @IBOutlet var arView: ARView!

    var entity: Entity?
    var moveToLocation: Transform = Transform()
    let moveTime: Double = 5

    // Speech recognition. SFSpeechRecognizer() uses the device's current locale
    // and returns nil if that locale is not supported.
    let speechRecognizer: SFSpeechRecognizer? = SFSpeechRecognizer()
    let speechRequest = SFSpeechAudioBufferRecognitionRequest()
    var speechTask: SFSpeechRecognitionTask?

    // Audio
    let audioEngine = AVAudioEngine() // audio node graph that captures the microphone input
    let audioSession = AVAudioSession.sharedInstance() // shared audio session used for recording

    override func viewDidLoad() {
        super.viewDidLoad()

        // Load the robot model. try! crashes if the .usdz file is missing from the bundle.
        entity = try! Entity.loadModel(named: "toy_robot_vintage.usdz")
        // Generate collision shapes so the entity can be hit-tested and respond to gestures.
        entity?.generateCollisionShapes(recursive: true)
        if let robot = entity as? HasCollision {
            arView.installGestures([.rotation, .scale, .translation], for: robot)
        }

        // Start the AR session.
        startARSession()

        // Register gestures. Each handler reads the 2D touch location in the view.
        arView.addGestureRecognizer(UITapGestureRecognizer(target: self, action: #selector(handleTapLocation)))
        arView.addGestureRecognizer(UISwipeGestureRecognizer(target: self, action: #selector(handleSwipeLocation)))
        arView.addGestureRecognizer(UILongPressGestureRecognizer(target: self, action: #selector(handleLongPressLocation)))

        startSpeechRecognition()
    }

    @objc func handleTapLocation(_ recognizer: UITapGestureRecognizer) {
        let tapLocation = recognizer.location(in: arView)
        // Cast a ray from the 2D touch point onto an estimated horizontal plane.
        let result = arView.raycast(from: tapLocation, allowing: .estimatedPlane, alignment: .horizontal)
        // The fourth column of the world transform holds the x, y, z position.
        if let firstResult = result.first, let entity = entity {
            let worldPosition = simd_make_float3(firstResult.worldTransform.columns.3)
            placeModelInWorld(object: entity, position: worldPosition)
        }
    }

    @objc func handleSwipeLocation(_ recognizer: UISwipeGestureRecognizer) {
        let swipeLocation = recognizer.location(in: arView)
        // Remove the swiped entity by detaching its anchor from the scene.
        if let entity = arView.entity(at: swipeLocation) {
            entity.anchor?.removeFromParent()
        }
    }

    @objc func handleLongPressLocation(_ recognizer: UILongPressGestureRecognizer) {
        // A long press reports several states; act only once, when it begins.
        guard recognizer.state == .began else { return }
        let pressLocation = recognizer.location(in: arView)
        let result = arView.raycast(from: pressLocation, allowing: .estimatedPlane, alignment: .horizontal)
        // Get the x, y, z world coordinates of the hit.
        if let firstResult = result.first {
            let worldPosition = simd_make_float3(firstResult.worldTransform.columns.3)
            // Add a new robot only if no entity is already under the finger.
            if arView.entity(at: pressLocation) == nil {
                let objectAnchor = AnchorEntity(world: worldPosition)
                let entity1 = try! Entity.loadModel(named: "toy_robot_vintageOne.usdz")
                entity1.generateCollisionShapes(recursive: true)
                arView.installGestures([.translation, .rotation, .scale], for: entity1)
                objectAnchor.addChild(entity1)
                arView.scene.addAnchor(objectAnchor)
            }
        }
    }

    func startARSession() {
        arView.automaticallyConfigureSession = true
        let configuration = ARWorldTrackingConfiguration()
        configuration.planeDetection = [.horizontal]
        configuration.environmentTexturing = .automatic
        // arView.debugOptions = .showAnchorGeometry
        arView.session.run(configuration)
    }

    func placeModelInWorld(object: Entity, position: SIMD3<Float>) {
        let objectAnchor = AnchorEntity(world: position)
        objectAnchor.addChild(object)
        arView.scene.addAnchor(objectAnchor)
    }

    func rebotMove(direction: String) {
        guard let entity = entity else { return }
        switch direction {
        case "forward":
            // The target is expressed in the robot's own space (relativeTo: entity),
            // so it is a plain local offset; adding the current parent-space
            // translation on top of it would count the position twice.
            moveToLocation.translation = SIMD3<Float>(x: 0, y: 0, z: 20)
            entity.move(to: moveToLocation, relativeTo: entity, duration: moveTime)
            walkAnimation(movementDuration: moveTime)
            print("moveForward")
        case "back":
            moveToLocation.translation = SIMD3<Float>(x: 0, y: 0, z: -20)
            entity.move(to: moveToLocation, relativeTo: entity, duration: moveTime)
            walkAnimation(movementDuration: moveTime)
        case "left":
            // Turn 90 degrees about the robot's local y axis.
            let rotateToAngle = simd_quatf(angle: Float.pi / 2, axis: SIMD3<Float>(x: 0, y: 1, z: 0))
            entity.setOrientation(rotateToAngle, relativeTo: entity)
        case "right":
            // Turn -90 degrees about the robot's local y axis.
            let rotateToAngle = simd_quatf(angle: -Float.pi / 2, axis: SIMD3<Float>(x: 0, y: 1, z: 0))
            entity.setOrientation(rotateToAngle, relativeTo: entity)
        default:
            print("No movement command")
        }
    }

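    func walkAnimation(movementDuration: Double) {
        guard let entity = entity else { return }
        // Loop the model's first built-in animation for the duration of the move.
        if let robotAnimation = entity.availableAnimations.first {
            entity.playAnimation(robotAnimation.repeat(duration: movementDuration), transitionDuration: 0.5, startsPaused: false)
            print("Yes")
        } else {
            print("No animation available")
        }
    }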

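    func startSpeechRecognition() {
        // Ask for permission. The callback is asynchronous, so the calls below
        // run without waiting for the user's answer.
        requestPermission()
        // Record audio from the microphone.
        startAudioRecoding()
        // Recognize speech from the recorded audio.
        speechRecognize()
    }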

    func requestPermission() {
        SFSpeechRecognizer.requestAuthorization { authorizationStatus in
            switch authorizationStatus {
            case .authorized:
                print("Authorized")
            case .denied:
                print("Denied")
            case .notDetermined:
                print("Waiting for your decision")
            case .restricted:
                print("Speech recognition is restricted on this device")
            @unknown default:
                print("Unknown authorization status")
            }
        }
    }

    func startAudioRecoding() {
        do {
            // Configure the audio session for microphone recording before reading the input format.
            try audioSession.setCategory(.record, mode: .measurement, options: .duckOthers)
            try audioSession.setActive(true, options: .notifyOthersOnDeactivation)

            // Tap the input node (the microphone) and forward its buffers to the request.
            let node = audioEngine.inputNode
            let recordingFormat = node.outputFormat(forBus: 0)
            node.installTap(onBus: 0, bufferSize: 1024, format: recordingFormat) { (buffer, _) in
                self.speechRequest.append(buffer)
            }

            // Start the engine.
            audioEngine.prepare()
            try audioEngine.start()
        } catch {
            print("Failed to start audio recording: \(error)")
        }
    }

    func speechRecognize() {
        guard let speechRecognizer = speechRecognizer else {
            print("Speech recognition is not available")
            return
        }
        if !speechRecognizer.isAvailable {
            print("Speech recognizer is currently unavailable")
        }
        // The handler fires repeatedly with partial results. This counter acts on
        // one callback and skips the next two as a crude throttle.
        var count = 0
        speechTask = speechRecognizer.recognitionTask(with: speechRequest, resultHandler: { (result, error) in
            count += 1
            if count == 1 {
                guard let segment = result?.bestTranscription.segments.last else {
                    return
                }
                // Lowercase so that "Forward" matches the "forward" case in rebotMove.
                let command = segment.substring.lowercased()
                self.rebotMove(direction: command)
                print(command)
            } else if count >= 3 {
                count = 0
            }
        })
    }

}
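
The recognition handler is called repeatedly with partial results for the same utterance, which is why speechRecognize() throttles callbacks with a counter. A possible alternative, sketched here and not taken from the article, is to remember how many transcript segments have already been handled and act only on new ones. This assumes each callback delivers the full list of segments recognized so far. SegmentTracker is a hypothetical helper, and plain strings stand in for the substring of each SFTranscriptionSegment.

```swift
/// Hypothetical helper (not in the article's code): remembers how many
/// transcript segments were already handled.
struct SegmentTracker {
    var handledCount = 0

    /// Takes all segments recognized so far and returns only the unseen ones.
    mutating func newSegments(in segments: [String]) -> [String] {
        // A shorter list means the transcript started over, so start over too.
        if segments.count < handledCount {
            handledCount = 0
        }
        let fresh = Array(segments[handledCount...])
        handledCount = segments.count
        return fresh
    }
}
```

Inside the result handler, the substrings of result.bestTranscription.segments could be passed to newSegments(in:), and each returned word forwarded to rebotMove(direction:). Partial results can also revise earlier segments, which this sketch does not handle.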

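The comments are in the code itself. Import your own .usdz files, named to match the loadModel(named:) calls, and the project should run. The app's Info.plist also needs usage descriptions for the camera, microphone, and speech recognition (NSCameraUsageDescription, NSMicrophoneUsageDescription, NSSpeechRecognitionUsageDescription); without them iOS terminates the app when it requests access.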
