First, prepare a k8s cluster of your own. If you are not sure how to build one, see my earlier blog post:

https://blog.csdn.net/qq_46902467/article/details/126660847

My k8s cluster nodes are:

k8s-master1 10.0.0.10

k8s-node1 10.0.0.11

k8s-node2 10.0.0.12

I. Install the NFS server

# 10.0.0.11 acts as the NFS server; 10.0.0.10 and 10.0.0.12 act as NFS clients

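1. Create the shared directory, plus the per-component subdirectories that the PVs below point at (an NFS PV fails to mount if its path does not exist)

mkdir -p /data/nfs/{prometheus_data,grafana_data/grafana,alertmanager_data}

# Grafana runs as UID 472 and fsGroup is not applied to NFS volumes, so give it ownership of its directory
chown -R 472:472 /data/nfs/grafana_data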

2. Install the required package

yum install -y nfs-utils

3. Edit /etc/exports to add the shared directory and the clients allowed to mount it (* means any IP address may access it)

cat >> /etc/exports << EOF
/data/nfs/ *(rw,sync,no_subtree_check,no_root_squash)
EOF
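`cat >> /etc/exports` appends unconditionally, so running step 3 a second time leaves a duplicate entry. Below is a minimal idempotent alternative. It assumes bash and grep, and `add_export` is a hypothetical helper name. The demo writes to a temporary file rather than the real /etc/exports.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append an export line only if the identical line is absent.
# Usage: add_export LINE [FILE]   (FILE defaults to /etc/exports)
add_export() {
  local line="$1"
  local file="${2:-/etc/exports}"
  # -q quiet, -x whole-line match, -F fixed string (so "*" and "(" are not regex syntax)
  if ! grep -qxF -- "$line" "$file" 2>/dev/null; then
    printf '%s\n' "$line" >> "$file"
  fi
}

# Demo on a scratch file instead of the real /etc/exports
tmp=$(mktemp)
entry='/data/nfs/ *(rw,sync,no_subtree_check,no_root_squash)'
add_export "$entry" "$tmp"
add_export "$entry" "$tmp"   # second call is a no-op
count=$(grep -c '' "$tmp")   # number of lines in the file
echo "lines in $tmp: $count"
```

To use it on the NFS server, call `add_export '/data/nfs/ *(rw,sync,no_subtree_check,no_root_squash)'` and then `exportfs -r`.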

4. Start the NFS services (rpcbind first, then nfs) and enable both at boot

systemctl start rpcbind
systemctl start nfs

# Check the status
systemctl status nfs

# Enable at boot
systemctl enable rpcbind
systemctl enable nfs

5. Once the services are running, apply the export configuration

exportfs -r

# Verify

exportfs

II. Install the NFS client

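1. Install the NFS utilities

yum install -y nfs-utils

2. Start rpcbind and enable it at boot (clients do not need to run the nfs service)

systemctl start rpcbind
systemctl enable rpcbind

3. Check that the NFS server is exporting the shared directory

showmount -e 10.0.0.11

4. Test mounting from the client

mount -t nfs 10.0.0.11:/data/nfs /mnt/

# Check the mount
df -Th

5. Unmount

umount /mnt/

III. Deploy Prometheus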

1. Create the working directories

mkdir /usr/local/src/monitoring/{prometheus,grafana,alertmanager,node-exporter} -p

2. Create the namespace

kubectl create ns monitoring

3. Create the persistent volume (PV) and claim (PVC). To keep things simple, I use a statically provisioned PV.

cat > /usr/local/src/monitoring/prometheus/prometheus-pv.yaml << EOF
apiVersion: v1
kind: PersistentVolume
metadata:
  name: prometheus-data-pv
spec:
  capacity:
    storage: 5Gi
  accessModes:
    - ReadWriteOnce
  nfs:
    path: /data/nfs/prometheus_data
    server: 10.0.0.11
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: prometheus-data-pvc
  namespace: monitoring
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 5Gi
EOF

4. Create the RBAC objects. These use rbac.authorization.k8s.io/v1, because the v1beta1 RBAC API was removed in Kubernetes 1.22.

cat > /usr/local/src/monitoring/prometheus/prometheus-rbac.yaml << EOF
apiVersion: v1
kind: ServiceAccount
metadata:
  name: prometheus
  namespace: monitoring
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: prometheus
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
rules:
  - apiGroups:
      - ""
    resources:
      - nodes
      - nodes/metrics
      - services
      - endpoints
      - pods
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - ""
    resources:
      - configmaps
    verbs:
      - get
  - nonResourceURLs:
      - "/metrics"
    verbs:
      - get
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: prometheus
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: prometheus
subjects:
  - kind: ServiceAccount
    name: prometheus
    namespace: monitoring
EOF
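The Prometheus configuration and alert rules that follow contain `${1}`, `$labels` and `$value`. With an unquoted heredoc delimiter (`<< EOF`), the shell expands these before the file is written. As a result, `replacement: ${1}` silently becomes `replacement: `. The short bash demo below shows the difference and can be run anywhere. Quoting the delimiter (`<< 'EOF'`) keeps the text literal. The demo assumes no shell variable named `labels` is set.

```shell
#!/usr/bin/env bash
set --   # clear positional parameters so ${1} expands to an empty string

# Unquoted delimiter: ${1} and $labels are expanded (to empty strings) before cat sees them
unquoted=$(cat << EOF
replacement: ${1}
summary: "{{ $labels.instance }}"
EOF
)

# Quoted delimiter: the body is passed through unchanged
quoted=$(cat << 'EOF'
replacement: ${1}
summary: "{{ $labels.instance }}"
EOF
)

printf '%s\n' '--- << EOF ---' "$unquoted" "--- << 'EOF' ---" "$quoted"
```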

5. Create the Prometheus scrape configuration. The heredoc delimiter is quoted ('EOF') so that the shell writes the ${1} replacements literally instead of expanding them to empty strings.

cat > /usr/local/src/monitoring/prometheus/prometheusconfig.yaml << 'EOF'
apiVersion: v1
kind: ConfigMap
metadata:
  name: prometheus-config
  namespace: monitoring
data:
  prometheus.yml: |
    global:
      scrape_interval: 15s
      evaluation_interval: 15s
    alerting:
      alertmanagers:
        - static_configs:
            - targets: ["alertmanager.monitoring.svc:9093"]
    rule_files:
      - /etc/prometheus/rules/*.yml
    scrape_configs:
      - job_name: 'prometheus'
        static_configs:
          - targets: ['localhost:9090']
      - job_name: kubernetes-coredns
        honor_labels: false
        kubernetes_sd_configs:
          - role: endpoints
        scrape_interval: 15s
        bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
        relabel_configs:
          - action: keep
            source_labels:
              - __meta_kubernetes_service_label_k8s_app
            regex: kube-dns
          - action: keep
            source_labels:
              - __meta_kubernetes_endpoint_port_name
            regex: metrics
          - source_labels:
              - __meta_kubernetes_endpoint_address_target_kind
              - __meta_kubernetes_endpoint_address_target_name
            separator: ;
            regex: Node;(.*)
            replacement: ${1}
            target_label: node
          - source_labels:
              - __meta_kubernetes_endpoint_address_target_kind
              - __meta_kubernetes_endpoint_address_target_name
            separator: ;
            regex: Pod;(.*)
            replacement: ${1}
            target_label: pod
          - source_labels:
              - __meta_kubernetes_namespace
            target_label: namespace
          - source_labels:
              - __meta_kubernetes_service_name
            target_label: service
          - source_labels:
              - __meta_kubernetes_pod_name
            target_label: pod
          - source_labels:
              - __meta_kubernetes_service_name
            target_label: job
            replacement: ${1}
          - source_labels:
              - __meta_kubernetes_service_label_k8s_app
            target_label: job
            regex: (.+)
            replacement: ${1}
          - target_label: endpoint
            replacement: metrics
      - job_name: kubernetes-apiserver
        honor_labels: false
        kubernetes_sd_configs:
          - role: endpoints
        scrape_interval: 30s
        scheme: https
        tls_config:
          insecure_skip_verify: false
          ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
          server_name: kubernetes
        bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
        relabel_configs:
          - action: keep
            source_labels:
              - __meta_kubernetes_service_label_component
            regex: apiserver
          - action: keep
            source_labels:
              - __meta_kubernetes_service_label_provider
            regex: kubernetes
          - action: keep
            source_labels:
              - __meta_kubernetes_endpoint_port_name
            regex: https
          - source_labels:
              - __meta_kubernetes_endpoint_address_target_kind
              - __meta_kubernetes_endpoint_address_target_name
            separator: ;
            regex: Node;(.*)
            replacement: ${1}
            target_label: node
          - source_labels:
              - __meta_kubernetes_endpoint_address_target_kind
              - __meta_kubernetes_endpoint_address_target_name
            separator: ;
            regex: Pod;(.*)
            replacement: ${1}
            target_label: pod
          - source_labels:
              - __meta_kubernetes_namespace
            target_label: namespace
          - source_labels:
              - __meta_kubernetes_service_name
            target_label: service
          - source_labels:
              - __meta_kubernetes_pod_name
            target_label: pod
          - source_labels:
              - __meta_kubernetes_service_name
            target_label: job
            replacement: ${1}
          - source_labels:
              - __meta_kubernetes_service_label_component
            target_label: job
            regex: (.+)
            replacement: ${1}
          - target_label: endpoint
            replacement: https
        metric_relabel_configs:
          - source_labels:
              - __name__
            regex: etcd_(debugging|disk|request|server).*
            action: drop
          - source_labels:
              - __name__
            regex: apiserver_admission_controller_admission_latencies_seconds_.*
            action: drop
          - source_labels:
              - __name__
            regex: apiserver_admission_step_admission_latencies_seconds_.*
            action: drop
      - job_name: kubernetes-controller-manager
        honor_labels: false
        kubernetes_sd_configs:
          - role: endpoints
        scrape_interval: 30s
        relabel_configs:
          - action: keep
            source_labels:
              - __meta_kubernetes_service_label_k8s_app
            regex: kube-controller-manager
          - action: keep
            source_labels:
              - __meta_kubernetes_endpoint_port_name
            regex: http-metrics
          - source_labels:
              - __meta_kubernetes_endpoint_address_target_kind
              - __meta_kubernetes_endpoint_address_target_name
            separator: ;
            regex: Node;(.*)
            replacement: ${1}
            target_label: node
          - source_labels:
              - __meta_kubernetes_endpoint_address_target_kind
              - __meta_kubernetes_endpoint_address_target_name
            separator: ;
            regex: Pod;(.*)
            replacement: ${1}
            target_label: pod
          - source_labels:
              - __meta_kubernetes_namespace
            target_label: namespace
          - source_labels:
              - __meta_kubernetes_service_name
            target_label: service
          - source_labels:
              - __meta_kubernetes_pod_name
            target_label: pod
          - source_labels:
              - __meta_kubernetes_service_name
            target_label: job
            replacement: ${1}
          - source_labels:
              - __meta_kubernetes_service_label_k8s_app
            target_label: job
            regex: (.+)
            replacement: ${1}
          - target_label: endpoint
            replacement: http-metrics
        metric_relabel_configs:
          - source_labels:
              - __name__
            regex: etcd_(debugging|disk|request|server).*
            action: drop
      - job_name: kubernetes-scheduler
        honor_labels: false
        kubernetes_sd_configs:
          - role: endpoints
        scrape_interval: 30s
        relabel_configs:
          - action: keep
            source_labels:
              - __meta_kubernetes_service_label_k8s_app
            regex: kube-scheduler
          - action: keep
            source_labels:
              - __meta_kubernetes_endpoint_port_name
            regex: http-metrics
          - source_labels:
              - __meta_kubernetes_endpoint_address_target_kind
              - __meta_kubernetes_endpoint_address_target_name
            separator: ;
            regex: Node;(.*)
            replacement: ${1}
            target_label: node
          - source_labels:
              - __meta_kubernetes_endpoint_address_target_kind
              - __meta_kubernetes_endpoint_address_target_name
            separator: ;
            regex: Pod;(.*)
            replacement: ${1}
            target_label: pod
          - source_labels:
              - __meta_kubernetes_namespace
            target_label: namespace
          - source_labels:
              - __meta_kubernetes_service_name
            target_label: service
          - source_labels:
              - __meta_kubernetes_pod_name
            target_label: pod
          - source_labels:
              - __meta_kubernetes_service_name
            target_label: job
            replacement: ${1}
          - source_labels:
              - __meta_kubernetes_service_label_k8s_app
            target_label: job
            regex: (.+)
            replacement: ${1}
          - target_label: endpoint
            replacement: http-metrics
      - job_name: m-alarm/kube-state-metrics/0
        honor_labels: true
        kubernetes_sd_configs:
          - role: endpoints
        scrape_interval: 30s
        scrape_timeout: 30s
        scheme: https
        tls_config:
          insecure_skip_verify: true
        bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
        relabel_configs:
          - action: keep
            source_labels:
              - __meta_kubernetes_service_label_k8s_app
            regex: kube-state-metrics
          - action: keep
            source_labels:
              - __meta_kubernetes_endpoint_port_name
            regex: https-main
          - source_labels:
              - __meta_kubernetes_endpoint_address_target_kind
              - __meta_kubernetes_endpoint_address_target_name
            separator: ;
            regex: Node;(.*)
            replacement: ${1}
            target_label: node
          - source_labels:
              - __meta_kubernetes_endpoint_address_target_kind
              - __meta_kubernetes_endpoint_address_target_name
            separator: ;
            regex: Pod;(.*)
            replacement: ${1}
            target_label: pod
          - source_labels:
              - __meta_kubernetes_namespace
            target_label: namespace
          - source_labels:
              - __meta_kubernetes_service_name
            target_label: service
          - source_labels:
              - __meta_kubernetes_pod_name
            target_label: pod
          - source_labels:
              - __meta_kubernetes_service_name
            target_label: job
            replacement: ${1}
          - source_labels:
              - __meta_kubernetes_service_label_k8s_app
            target_label: job
            regex: (.+)
            replacement: ${1}
          - target_label: endpoint
            replacement: https-main
          - regex: (pod|service|endpoint|namespace)
            action: labeldrop
      - job_name: m-alarm/kube-state-metrics/1
        honor_labels: false
        kubernetes_sd_configs:
          - role: endpoints
        scrape_interval: 30s
        scheme: https
        tls_config:
          insecure_skip_verify: true
        bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
        relabel_configs:
          - action: keep
            source_labels:
              - __meta_kubernetes_service_label_k8s_app
            regex: kube-state-metrics
          - action: keep
            source_labels:
              - __meta_kubernetes_endpoint_port_name
            regex: https-self
          - source_labels:
              - __meta_kubernetes_endpoint_address_target_kind
              - __meta_kubernetes_endpoint_address_target_name
            separator: ;
            regex: Node;(.*)
            replacement: ${1}
            target_label: node
          - source_labels:
              - __meta_kubernetes_endpoint_address_target_kind
              - __meta_kubernetes_endpoint_address_target_name
            separator: ;
            regex: Pod;(.*)
            replacement: ${1}
            target_label: pod
          - source_labels:
              - __meta_kubernetes_namespace
            target_label: namespace
          - source_labels:
              - __meta_kubernetes_service_name
            target_label: service
          - source_labels:
              - __meta_kubernetes_pod_name
            target_label: pod
          - source_labels:
              - __meta_kubernetes_service_name
            target_label: job
            replacement: ${1}
          - source_labels:
              - __meta_kubernetes_service_label_k8s_app
            target_label: job
            regex: (.+)
            replacement: ${1}
          - target_label: endpoint
            replacement: https-self
      - job_name: kubernetes-kubelet
        honor_labels: true
        kubernetes_sd_configs:
          - role: endpoints
        scrape_interval: 30s
        scheme: https
        tls_config:
          insecure_skip_verify: true
        bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
        relabel_configs:
          - action: keep
            source_labels:
              - __meta_kubernetes_service_label_k8s_app
            regex: kubelet
          - action: keep
            source_labels:
              - __meta_kubernetes_endpoint_port_name
            regex: https-metrics
          - source_labels:
              - __meta_kubernetes_endpoint_address_target_kind
              - __meta_kubernetes_endpoint_address_target_name
            separator: ;
            regex: Node;(.*)
            replacement: ${1}
            target_label: node
          - source_labels:
              - __meta_kubernetes_endpoint_address_target_kind
              - __meta_kubernetes_endpoint_address_target_name
            separator: ;
            regex: Pod;(.*)
            replacement: ${1}
            target_label: pod
          - source_labels:
              - __meta_kubernetes_namespace
            target_label: namespace
          - source_labels:
              - __meta_kubernetes_service_name
            target_label: service
          - source_labels:
              - __meta_kubernetes_pod_name
            target_label: pod
          - source_labels:
              - __meta_kubernetes_service_name
            target_label: job
            replacement: ${1}
          - source_labels:
              - __meta_kubernetes_service_label_k8s_app
            target_label: job
            regex: (.+)
            replacement: ${1}
          - target_label: endpoint
            replacement: https-metrics
          - source_labels:
              - __metrics_path__
            target_label: metrics_path
      - job_name: kubernetes-cadvisor
        honor_labels: true
        kubernetes_sd_configs:
          - role: endpoints
        scrape_interval: 30s
        metrics_path: /metrics/cadvisor
        scheme: https
        tls_config:
          insecure_skip_verify: true
        bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
        relabel_configs:
          - action: keep
            source_labels:
              - __meta_kubernetes_service_label_k8s_app
            regex: kubelet
          - action: keep
            source_labels:
              - __meta_kubernetes_endpoint_port_name
            regex: https-metrics
          - source_labels:
              - __meta_kubernetes_endpoint_address_target_kind
              - __meta_kubernetes_endpoint_address_target_name
            separator: ;
            regex: Node;(.*)
            replacement: ${1}
            target_label: node
          - source_labels:
              - __meta_kubernetes_endpoint_address_target_kind
              - __meta_kubernetes_endpoint_address_target_name
            separator: ;
            regex: Pod;(.*)
            replacement: ${1}
            target_label: pod
          - source_labels:
              - __meta_kubernetes_namespace
            target_label: namespace
          - source_labels:
              - __meta_kubernetes_service_name
            target_label: service
          - source_labels:
              - __meta_kubernetes_pod_name
            target_label: pod
          - source_labels:
              - __meta_kubernetes_service_name
            target_label: job
            replacement: ${1}
          - source_labels:
              - __meta_kubernetes_service_label_k8s_app
            target_label: job
            regex: (.+)
            replacement: ${1}
          - target_label: endpoint
            replacement: https-metrics
          - source_labels:
              - __metrics_path__
            target_label: metrics_path
        metric_relabel_configs:
          - source_labels:
              - __name__
            regex: container_(network_tcp_usage_total|network_udp_usage_total|tasks_state|cpu_load_average_10s)
            action: drop
      - job_name: kubernetes-node
        honor_labels: false
        kubernetes_sd_configs:
          - role: endpoints
        scrape_interval: 30s
        scheme: https
        tls_config:
          insecure_skip_verify: true
        bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
        relabel_configs:
          - action: keep
            source_labels:
              - __meta_kubernetes_service_label_k8s_app
            regex: node-exporter
          - action: keep
            source_labels:
              - __meta_kubernetes_endpoint_port_name
            regex: https
          - source_labels:
              - __meta_kubernetes_endpoint_address_target_kind
              - __meta_kubernetes_endpoint_address_target_name
            separator: ;
            regex: Node;(.*)
            replacement: ${1}
            target_label: node
          - source_labels:
              - __meta_kubernetes_endpoint_address_target_kind
              - __meta_kubernetes_endpoint_address_target_name
            separator: ;
            regex: Pod;(.*)
            replacement: ${1}
            target_label: pod
          - source_labels:
              - __meta_kubernetes_namespace
            target_label: namespace
          - source_labels:
              - __meta_kubernetes_service_name
            target_label: service
          - source_labels:
              - __meta_kubernetes_pod_name
            target_label: pod
          - source_labels:
              - __meta_kubernetes_service_name
            target_label: job
            replacement: ${1}
          - source_labels:
              - __meta_kubernetes_service_label_k8s_app
            target_label: job
            regex: (.+)
            replacement: ${1}
          - target_label: endpoint
            replacement: https
          - source_labels:
              - __meta_kubernetes_pod_node_name
            target_label: instance
            regex: (.*)
            replacement: $1
            action: replace
EOF
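Most relabel rules above follow the same pattern:

- The values of `source_labels` are joined with `separator`.
- The joined string is matched against `regex`, which Prometheus anchors at both ends.
- `replacement` (here `${1}`, the first capture group) is written to `target_label`.

For an endpoint backed by a node, the joined string looks like `Node;k8s-node1`. The bash function below roughly emulates a single `replace` rule, for illustration only. It is not Prometheus code, and bash's POSIX ERE differs from the RE2 syntax Prometheus actually uses. The function name `relabel_replace` is hypothetical.

```shell
#!/usr/bin/env bash
# Rough emulation of one Prometheus "replace" relabel rule whose replacement is ${1}.
# Illustration only: Prometheus uses Go RE2 regexes, bash uses POSIX ERE.
# Usage: relabel_replace SEPARATOR REGEX VALUE...
relabel_replace() {
  local separator="$1" regex="$2"
  shift 2
  # 1. Join the source label values with the separator
  local joined="$1"
  shift
  local v
  for v in "$@"; do
    joined="${joined}${separator}${v}"
  done
  # 2. Anchor the regex at both ends, as Prometheus does
  local re="^(${regex})\$"
  if [[ "$joined" =~ $re ]]; then
    # 3. BASH_REMATCH[1] is our wrapping group, so the rule's ${1} is index 2
    printf '%s\n' "${BASH_REMATCH[2]}"
    return 0
  fi
  return 1   # no match: Prometheus would leave target_label untouched
}

# __meta_kubernetes_endpoint_address_target_kind=Node, ..._target_name=k8s-node1
node=$(relabel_replace ';' 'Node;(.*)' Node k8s-node1)
pod=$(relabel_replace ';' 'Pod;(.*)' Node k8s-node1)
echo "node=${node:-<unchanged>} pod=${pod:-<unchanged>}"
```

For a node-backed endpoint, only the `node` rule matches, so `pod` is not set by the `Pod;(.*)` rule.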

6. Create the Prometheus alerting rules. The ConfigMap keys end in .yml so that they match the rule_files glob (/etc/prometheus/rules/*.yml). The heredoc delimiter is quoted ('EOF') so that $labels and $value are written literally instead of being expanded by the shell.

cat > /usr/local/src/monitoring/prometheus/prometheus-rules.yaml << 'EOF'
apiVersion: v1
kind: ConfigMap
metadata:
  name: prometheus-rules
  namespace: monitoring
data:
  general.yml: |
    groups:
      - name: general.rules
        rules:
          - alert: InstanceDown
            expr: up == 0
            for: 1m
            labels:
              severity: error
            annotations:
              summary: "Instance {{ $labels.instance }} is down"
              description: "{{ $labels.instance }} (job {{ $labels.job }}) has been down for more than 1 minute."
  node.yml: |
    groups:
      - name: node.rules
        rules:
          - alert: NodeFilesystemUsage
            expr: 100 - (node_filesystem_free_bytes{fstype=~"ext4|xfs"} / node_filesystem_size_bytes{fstype=~"ext4|xfs"} * 100) > 80
            for: 1m
            labels:
              severity: warning
            annotations:
              summary: "Instance {{ $labels.instance }}: partition {{ $labels.mountpoint }} usage is high"
              description: "{{ $labels.instance }}: partition {{ $labels.mountpoint }} is more than 80% full (current value: {{ $value }})"
          - alert: NodeMemoryUsage
            expr: 100 - (node_memory_MemFree_bytes+node_memory_Cached_bytes+node_memory_Buffers_bytes) / node_memory_MemTotal_bytes * 100 > 80
            for: 1m
            labels:
              severity: warning
            annotations:
              summary: "Instance {{ $labels.instance }}: memory usage is high"
              description: "{{ $labels.instance }}: memory usage is above 80% (current value: {{ $value }})"
          - alert: NodeCPUUsage
            expr: 100 - (avg(irate(node_cpu_seconds_total{mode="idle"}[5m])) by (instance) * 100) > 60
            for: 1m
            labels:
              severity: warning
            annotations:
              summary: "Instance {{ $labels.instance }}: CPU usage is high"
              description: "{{ $labels.instance }}: CPU usage is above 60% (current value: {{ $value }})"
EOF
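To sanity-check the thresholds, you can evaluate the NodeMemoryUsage and NodeCPUUsage expressions by hand. The sketch below plugs made-up sample values into the same arithmetic using awk:

- Memory usage is 100 - (MemFree + Cached + Buffers) / MemTotal * 100.
- CPU usage is 100 minus the average idle fraction * 100.

The function names and inputs are illustrative assumptions. In Prometheus, the idle fraction comes from `avg(irate(node_cpu_seconds_total{mode="idle"}[5m])) by (instance)`.

```shell
#!/usr/bin/env bash
# Evaluate the NodeMemoryUsage / NodeCPUUsage arithmetic on made-up sample values.

# args: MemFree Cached Buffers MemTotal (any consistent unit)
mem_usage_pct() {
  awk -v free="$1" -v cached="$2" -v buffers="$3" -v total="$4" \
    'BEGIN { printf "%.1f\n", 100 - (free + cached + buffers) / total * 100 }'
}

# arg: average idle fraction across CPUs (0..1), i.e. what avg(irate(...idle...)) returns
cpu_usage_pct() {
  awk -v idle="$1" 'BEGIN { printf "%.1f\n", 100 - idle * 100 }'
}

# Succeeds if value > threshold (the rules' "> 80" / "> 60" comparison)
exceeds() {
  awk -v v="$1" -v t="$2" 'BEGIN { exit !(v > t) }'
}

mem=$(mem_usage_pct 1024 512 256 8192)   # MiB: 1 GiB free, 512 MiB cached, 256 MiB buffers, 8 GiB total
cpu=$(cpu_usage_pct 0.35)                # CPUs idle 35% of the time on average

echo "memory usage: ${mem}%  cpu usage: ${cpu}%"
if exceeds "$mem" 80; then echo "NodeMemoryUsage: would fire"; else echo "NodeMemoryUsage: quiet"; fi
if exceeds "$cpu" 60; then echo "NodeCPUUsage: would fire"; else echo "NodeCPUUsage: quiet"; fi
```

With these inputs, memory usage is 78.1% (below the 80% threshold) and CPU usage is 65.0% (above 60%). Only NodeCPUUsage would fire, and only after the condition has held for the rule's `for: 1m`.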

7. Create the Prometheus StatefulSet. Three things differ from the original manifest:

- The official prom/prometheus image ships the binary as /bin/prometheus, so the command points there.
- The console files live under /usr/share/prometheus, so the console arguments point there.
- The claim name is prometheus-data-pvc, to match the PVC created in step 3.

cat > /usr/local/src/monitoring/prometheus/prometheus.yaml << EOF
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: prometheus
  namespace: monitoring
  labels:
    app: prometheus
spec:
  serviceName: "prometheus"
  replicas: 1
  selector:
    matchLabels:
      app: prometheus
  template:
    metadata:
      labels:
        app: prometheus
    spec:
      securityContext:
        runAsUser: 0
      serviceAccountName: prometheus
      serviceAccount: prometheus
      volumes:
        - name: data
          persistentVolumeClaim:
            claimName: prometheus-data-pvc
        - name: prometheus-config
          configMap:
            name: prometheus-config
        - name: prometheus-rules
          configMap:
            name: prometheus-rules
        - name: localtime
          hostPath:
            path: /etc/localtime
      containers:
        - name: prometheus-container
          image: prom/prometheus:v2.32.1
          imagePullPolicy: Always
          command:
            - "/bin/prometheus"
          args:
            - "--config.file=/etc/prometheus/prometheus.yml"
            - "--storage.tsdb.path=/app/prometheus/data"
            - "--web.console.libraries=/usr/share/prometheus/console_libraries"
            - "--web.console.templates=/usr/share/prometheus/consoles"
            - "--log.level=info"
            - "--web.enable-admin-api"
          ports:
            - name: prometheus
              containerPort: 9090
          readinessProbe:
            httpGet:
              path: /-/ready
              port: 9090
            initialDelaySeconds: 30
            timeoutSeconds: 30
          livenessProbe:
            httpGet:
              path: /-/healthy
              port: 9090
            initialDelaySeconds: 30
            timeoutSeconds: 30
          resources:
            requests:
              cpu: 100m
              memory: 30Mi
            limits:
              cpu: 500m
              memory: 500Mi
          volumeMounts:
            - mountPath: "/app/prometheus/data"
              name: data
            - mountPath: "/etc/prometheus"
              name: prometheus-config
            - name: prometheus-rules
              mountPath: /etc/prometheus/rules
            - name: localtime
              mountPath: /etc/localtime
EOF

8. Create the Prometheus Service. It must live in the monitoring namespace, which is where the Ingress looks for it. It must also select the app: prometheus label that the pods actually carry.

cat > /usr/local/src/monitoring/prometheus/prometheus-svc.yaml << EOF
kind: Service
apiVersion: v1
metadata:
  name: prometheus
  namespace: monitoring
  labels:
    kubernetes.io/name: "Prometheus"
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
spec:
  ports:
    - name: http
      port: 9090
      protocol: TCP
      targetPort: 9090
  selector:
    app: prometheus
EOF

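9. Create the Prometheus Ingress. This uses networking.k8s.io/v1 (Kubernetes 1.19+), because the extensions/v1beta1 Ingress API was removed in Kubernetes 1.22.

cat > /usr/local/src/monitoring/prometheus/prometheus-ingress.yaml << EOF
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: prometheus
  namespace: monitoring
spec:
  rules:
    - host: prometheus.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: prometheus
                port:
                  name: http
EOF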

10. Deploy Prometheus

kubectl apply -f /usr/local/src/monitoring/prometheus/

IV. Deploy Grafana

1. Create the PV and PVC

cat > /usr/local/src/monitoring/grafana/grafana-pv.yaml << EOF
apiVersion: v1
kind: PersistentVolume
metadata:
  name: grafana-data-pv
spec:
  capacity:
    storage: 5Gi
  accessModes:
    - ReadWriteOnce
  nfs:
    path: /data/nfs/grafana_data
    server: 10.0.0.11
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: grafana-data-pvc
  namespace: monitoring
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 5Gi
EOF

2. Create the Grafana StatefulSet (the claim name must match the PVC above, grafana-data-pvc)

cat > /usr/local/src/monitoring/grafana/grafana.yaml << EOF
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: grafana
  namespace: monitoring
spec:
  serviceName: "grafana"
  replicas: 1
  selector:
    matchLabels:
      app: grafana
  template:
    metadata:
      labels:
        app: grafana
    spec:
      containers:
        - name: grafana
          image: grafana/grafana:8.3.3
          ports:
            - containerPort: 3000
              protocol: TCP
          resources:
            limits:
              cpu: 100m
              memory: 256Mi
            requests:
              cpu: 100m
              memory: 256Mi
          volumeMounts:
            - name: grafana-data
              mountPath: /var/lib/grafana
              subPath: grafana
      securityContext:
        fsGroup: 472
        runAsUser: 472
      volumes:
        - name: grafana-data
          persistentVolumeClaim:
            claimName: grafana-data-pvc
EOF

3. Create the Grafana Service

cat > /usr/local/src/monitoring/grafana/grafana-svc.yaml << EOF
apiVersion: v1
kind: Service
metadata:
  name: grafana
  namespace: monitoring
spec:
  ports:
    - name: http
      port: 3000
      targetPort: 3000
  selector:
    app: grafana
EOF

4. Create the Grafana Ingress (networking.k8s.io/v1, Kubernetes 1.19+)

cat > /usr/local/src/monitoring/grafana/grafana-ingress.yaml << EOF
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: grafana
  namespace: monitoring
spec:
  rules:
    - host: grafana.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: grafana
                port:
                  name: http
EOF

5. Deploy Grafana

kubectl apply -f /usr/local/src/monitoring/grafana/

V. Deploy Alertmanager

1. Create the PV and PVC

cat > /usr/local/src/monitoring/alertmanager/alertmanager-pv.yaml << EOF
apiVersion: v1
kind: PersistentVolume
metadata:
  name: alertmanager-data-pv
spec:
  capacity:
    storage: 5Gi
  accessModes:
    - ReadWriteOnce
  nfs:
    path: /data/nfs/alertmanager_data
    server: 10.0.0.11
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: alertmanager-data-pvc
  namespace: monitoring
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 5Gi
EOF

2. Create the Alertmanager email alerting configuration

cat > /usr/local/src/monitoring/alertmanager/alertmanager-configmap.yaml << EOF
apiVersion: v1
kind: ConfigMap
metadata:
  # Name of the ConfigMap
  name: alertmanager-config
  namespace: monitoring
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: EnsureExists
data:
  alertmanager.yml: |
    global:
      resolve_timeout: 5m
      # SMTP settings used to send alert emails
      smtp_smarthost: 'smtp.163.com:25'
      smtp_from: 'w.jjwx@163.com'
      smtp_auth_username: 'w.jjwx@163.com'
      smtp_auth_password: '<password>'
    receivers:
      - name: default-receiver
        email_configs:
          - to: "1965161128@qq.com"
    route:
      group_interval: 1m
      group_wait: 10s
      receiver: default-receiver
      repeat_interval: 1m
EOF

3. Create the Alertmanager Deployment (the claim name must match the PVC above, alertmanager-data-pvc)

cat > /usr/local/src/monitoring/alertmanager/alertmanager.yaml << EOF
apiVersion: apps/v1
kind: Deployment
metadata:
  name: alertmanager
  namespace: monitoring
  labels:
    k8s-app: alertmanager
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
    version: v0.14.0
spec:
  replicas: 1
  selector:
    matchLabels:
      k8s-app: alertmanager
      version: v0.14.0
  template:
    metadata:
      labels:
        k8s-app: alertmanager
        version: v0.14.0
    spec:
      priorityClassName: system-cluster-critical
      containers:
        - name: prometheus-alertmanager
          image: "prom/alertmanager:v0.14.0"
          imagePullPolicy: "IfNotPresent"
          args:
            - --config.file=/etc/config/alertmanager.yml
            - --storage.path=/data
            - --web.external-url=/
          ports:
            - containerPort: 9093
          readinessProbe:
            httpGet:
              path: /#/status
              port: 9093
            initialDelaySeconds: 30
            timeoutSeconds: 30
          volumeMounts:
            - name: config-volume
              mountPath: /etc/config
            - name: storage-volume
              mountPath: "/data"
              subPath: ""
          resources:
            limits:
              cpu: 10m
              memory: 50Mi
            requests:
              cpu: 10m
              memory: 50Mi
        - name: prometheus-alertmanager-configmap-reload
          image: "jimmidyson/configmap-reload:v0.1"
          imagePullPolicy: "IfNotPresent"
          args:
            - --volume-dir=/etc/config
            - --webhook-url=http://localhost:9093/-/reload
          volumeMounts:
            - name: config-volume
              mountPath: /etc/config
              readOnly: true
          resources:
            limits:
              cpu: 10m
              memory: 10Mi
            requests:
              cpu: 10m
              memory: 10Mi
      volumes:
        - name: config-volume
          configMap:
            name: alertmanager-config
        - name: storage-volume
          persistentVolumeClaim:
            claimName: alertmanager-data-pvc
EOF

4. Create the Alertmanager Service

cat > /usr/local/src/monitoring/alertmanager/alertmanager-svc.yaml << EOF
apiVersion: v1
kind: Service
metadata:
  name: alertmanager
  namespace: monitoring
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/name: "Alertmanager"
spec:
  ports:
    - name: http
      port: 9093
      protocol: TCP
      targetPort: 9093
  selector:
    k8s-app: alertmanager
EOF

5. Create the Alertmanager Ingress (networking.k8s.io/v1, Kubernetes 1.19+)

cat > /usr/local/src/monitoring/alertmanager/alertmanager-ingress.yaml << EOF
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: alertmanager
  namespace: monitoring
spec:
  rules:
    - host: alertmanager.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: alertmanager
                port:
                  name: http
EOF

6. Deploy Alertmanager

kubectl apply -f /usr/local/src/monitoring/alertmanager/

VI. Deploy node-exporter

cat > /usr/local/src/monitoring/node-exporter/node-exporter-ds.yaml << EOF
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: node-exporter
  namespace: monitoring
  labels:
    name: node-exporter
spec:
  selector:
    matchLabels:
      name: node-exporter
  template:
    metadata:
      labels:
        name: node-exporter
    spec:
      tolerations:
        - key: "node-role.kubernetes.io/master"
          operator: "Exists"
          effect: "NoSchedule"
        # Control-plane taint key used by newer kubeadm clusters (1.24+)
        - key: "node-role.kubernetes.io/control-plane"
          operator: "Exists"
          effect: "NoSchedule"
      # With hostNetwork, hostIPC and hostPID all true, every container in this Pod uses the host's
      # network, communicates with the host directly over IPC (inter-process communication), and
      # can see every process running on the host.
      # hostNetwork: true also exposes port 9100 directly on each host, so no Service is needed
      # to reach node-exporter at <node-ip>:9100.
      hostPID: true
      hostIPC: true
      hostNetwork: true
      containers:
        - name: node-exporter
          image: prom/node-exporter:v1.3.0
          ports:
            - containerPort: 9100
          resources:
            requests:
              cpu: 0.15   # this container requests at least 0.15 CPU cores
          securityContext:
            privileged: true   # run in privileged mode
          args:
            - --path.procfs=/host/proc   # where the host's (node's) /proc is mounted
            - --path.sysfs=/host/sys     # where the host's (node's) /sys is mounted
            - --path.rootfs=/host
          # Mount the host's /dev, /proc, /sys and / into the container, because node-exporter
          # reads most node metrics from these files.
          volumeMounts:
            - name: dev
              mountPath: /host/dev
              readOnly: true
            - name: proc
              mountPath: /host/proc
              readOnly: true
            - name: sys
              mountPath: /host/sys
              readOnly: true
            - name: rootfs
              mountPath: /host
              readOnly: true
      volumes:
        - name: proc
          hostPath:
            path: /proc
        - name: dev
          hostPath:
            path: /dev
        - name: sys
          hostPath:
            path: /sys
        - name: rootfs
          hostPath:
            path: /
EOF

# Deploy

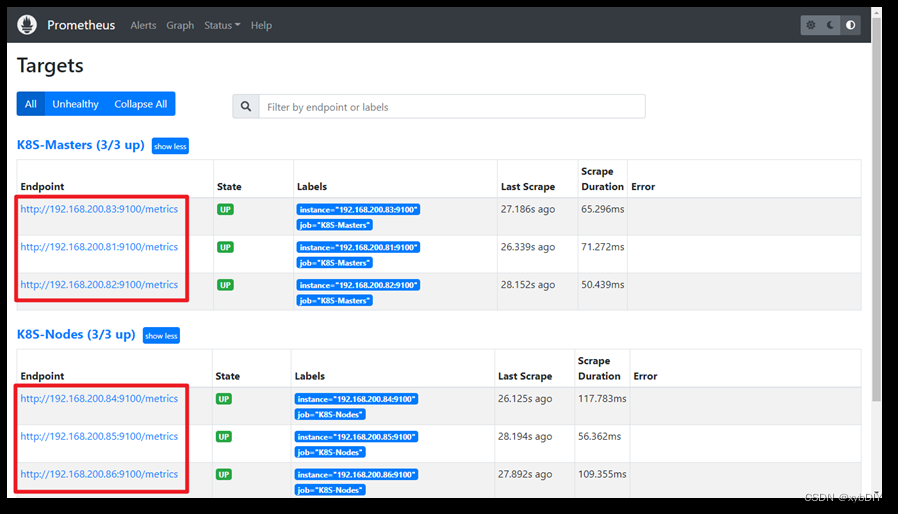

kubectl apply -f /usr/local/src/monitoring/node-exporter/node-exporter-ds.yaml

Then check that the pods are Running:

kubectl get pods -n monitoring -o wide
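The existing node job in prometheusconfig.yaml only keeps endpoints that meet two conditions:

- They belong to a Service labelled `k8s-app: node-exporter`.
- They expose a port named `https`.

This DaemonSet creates no such Service, and it serves plain HTTP on host port 9100, so node-exporter will most likely not be scraped as written. One possible fix, sketched below under that assumption, is an extra job that discovers nodes directly and rewrites the kubelet address to port 9100. The job name `node-exporter` is a hypothetical choice. Add the entry under `scrape_configs:` in prometheusconfig.yaml, indented to match the other jobs, and keep the quoted `'EOF'` so that `${1}` survives. Then re-apply the ConfigMap and restart the Prometheus pod (for example `kubectl -n monitoring delete pod prometheus-0`). This setup does not enable `--web.enable-lifecycle`, so Prometheus will not hot-reload.

```yaml
# Hypothetical extra job; goes under scrape_configs: (indent to match the other jobs)
- job_name: node-exporter
  kubernetes_sd_configs:
    - role: node
  relabel_configs:
    # Node discovery targets the kubelet port (10250) by default; point at node-exporter's 9100 instead
    - source_labels: [__address__]
      regex: '(.*):10250'
      replacement: '${1}:9100'
      target_label: __address__
      action: replace
    # Copy the Kubernetes node labels onto the scraped series
    - action: labelmap
      regex: __meta_kubernetes_node_label_(.+)
```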

On your workstation, add host entries so that the Ingress hostnames resolve (Windows hosts file):

C:\Windows\System32\drivers\etc\hosts

10.0.0.11 prometheus.com grafana.com gitlab.com alertmanager.com

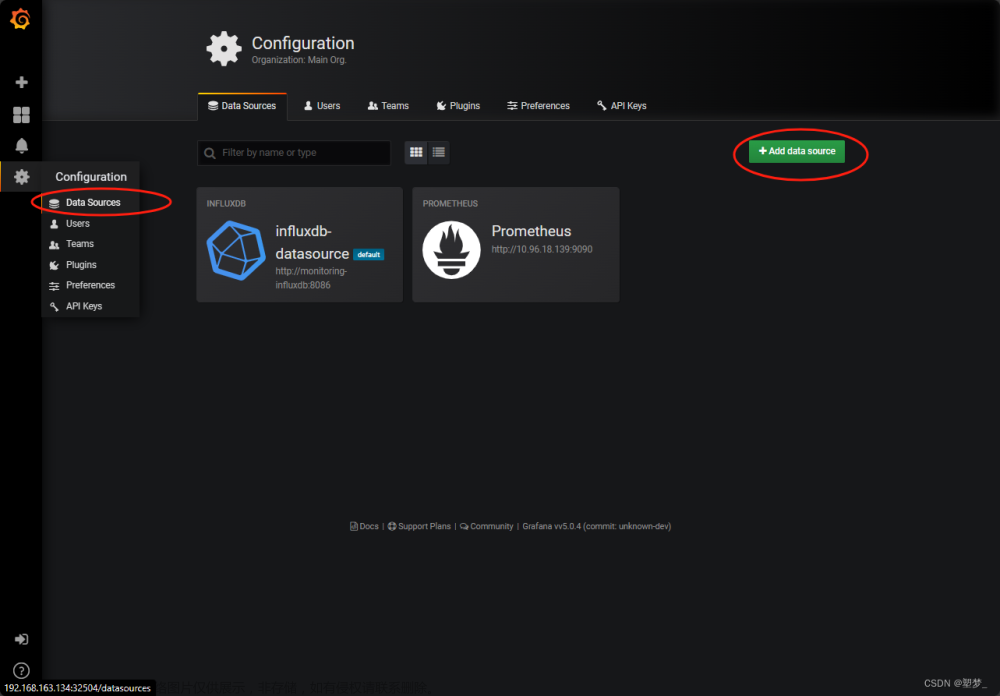

VII. Add Prometheus as a data source in Grafana

VIII. Add dashboards

k8s dashboard template

ID: 13105

k8s node-exporter template

ID: 8919
