一、vhost-user说明

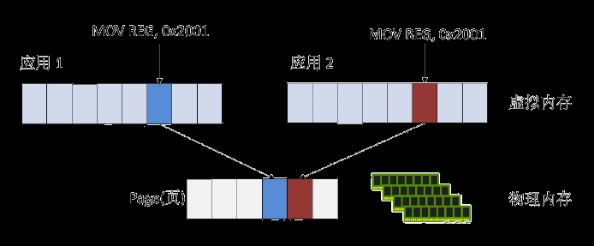

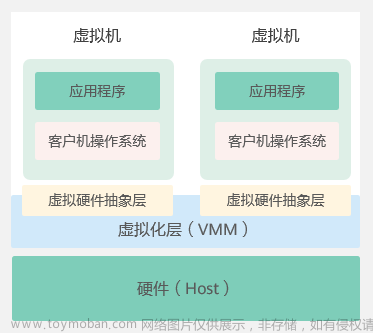

在网络IO的半虚拟中,vhost-user是目前最优秀的解决方案。在DPDK中,同样也采用了这种方式。vhost-user是为了解决内核状态数据操作复杂的情况提出的一种解决方式,通过在用户进程来替代内核进程来实现数据交互的最少化。在vhost-user中,使用Socket进行设备文件间通信(替代了Kernel模式),而数据交换则采用mmap的进程内存宰共享的模式减少数据的交互。

vhost-user在DPDK中的vhost库中实现,其包含了完整的virtio的后端逻辑功能。在软件虚拟交的机OVS中,就应用到了DPDK这个库。

一般来说,vhost-user由OVS为每个虚拟创建珍上vhost端口,来实现相关数据操作。而此时的virtio前端和会调用此相关的端口进行通信。

二、数据结构

基本的数据结构如下(lib/librte_vhost/vhost_user.h):

/* Same structure as vhost-user backend session info */

typedef struct VhostUserCryptoSessionParam {

int64_t session_id;

uint32_t op_code;

uint32_t cipher_algo;

uint32_t cipher_key_len;

uint32_t hash_algo;

uint32_t digest_len;

uint32_t auth_key_len;

uint32_t aad_len;

uint8_t op_type;

uint8_t dir;

uint8_t hash_mode;

uint8_t chaining_dir;

uint8_t * ciphe_key;

uint8_t * auth_key;

uint8_t cipher_key_buf[VHOST_USER_CRYPTO_MAX_CIPHER_KEY_LENGTH];

uint8_t auth_key_buf[VHOST_USER_CRYPTO_MAX_HMAC_KEY_LENGTH];

} VhostUserCryptoSessionParam;

typedef struct VhostUserVringArea {

uint64_t u64;

uint64_t size;

uint64_t offset;

} VhostUserVringArea;

typedef struct VhostUserInflight {

uint64_t mmap_size;

uint64_t mmap_offset;

uint16_t num_queues;

uint16_t queue_size;

} VhostUserInflight;

typedef struct VhostUserMsg {

union {

uint32_t master; /* a VhostUserRequest value */

uint32_t slave; /* a VhostUserSlaveRequest value*/

} request;

#define VHOST_USER_VERSION_MASK 0x3

#define VHOST_USER_REPLY_MASK (0x1 << 2)

#define VHOST_USER_NEED_REPLY (0x1 << 3)

uint32_t flags;

uint32_t size; /* the following payload size */

union {

#define VHOST_USER_VRING_IDX_MASK 0xff

#define VHOST_USER_VRING_NOFD_MASK (0x1<<8)

uint64_t u64;

struct vhost_vring_state state;

struct vhost_vring_addr addr;

VhostUserMemory memory;

VhostUserLog log;

struct vhost_iotlb_msg iotlb;

VhostUserCryptoSessionParam crypto_session;

VhostUserVringArea area;

VhostUserInflight inflight;

} payload;

int fds[VHOST_MEMORY_MAX_NREGIONS];

int fd_num;

} __attribute((packed)) VhostUserMsg;

其中最主要的就是最后一个数据结构VhostUserMsg,这个消息里包含着消息的种类、内容和相关内容的数据大小。而这个消息,正是通过vhost_user.c(lib/librte_vhost)中的vhost_user_msg_handler这个函数来处理的。它们之间的消息类型定义如下:

typedef enum VhostUserRequest {

VHOST_USER_NONE = 0,

VHOST_USER_GET_FEATURES = 1,

VHOST_USER_SET_FEATURES = 2,

VHOST_USER_SET_OWNER = 3,

VHOST_USER_RESET_OWNER = 4,

VHOST_USER_SET_MEM_TABLE = 5,

VHOST_USER_SET_LOG_BASE = 6,

VHOST_USER_SET_LOG_FD = 7,

VHOST_USER_SET_VRING_NUM = 8,

VHOST_USER_SET_VRING_ADDR = 9,

VHOST_USER_SET_VRING_BASE = 10,

VHOST_USER_GET_VRING_BASE = 11,

VHOST_USER_SET_VRING_KICK = 12,

VHOST_USER_SET_VRING_CALL = 13,

VHOST_USER_SET_VRING_ERR = 14,

VHOST_USER_GET_PROTOCOL_FEATURES = 15,

VHOST_USER_SET_PROTOCOL_FEATURES = 16,

VHOST_USER_GET_QUEUE_NUM = 17,

VHOST_USER_SET_VRING_ENABLE = 18,

VHOST_USER_SEND_RARP = 19,

VHOST_USER_NET_SET_MTU = 20,

VHOST_USER_SET_SLAVE_REQ_FD = 21,

VHOST_USER_IOTLB_MSG = 22,

VHOST_USER_MAX

} VhostUserRequest;

随着版本的迭代和新的设备及相关控制手段增加会引起些消息的增加。

再看一下相关的共享内存数据结构(lib/librte_vhost/vhost_user.h):

/*对应qemu端的region结构*/

typedef struct VhostUserMemoryRegion {

uint64_t guest_phys_addr;

uint64_t memory_size;

uint64_t userspace_addr;

uint64_t mmap_offset;

} VhostUserMemoryRegion;

typedef struct VhostUserMemory {

uint32_t nregions;

uint32_t padding;

VhostUserMemoryRegion regions[VHOST_MEMORY_MAX_NREGIONS];

} VhostUserMemory;

//lib/librte_vhost/rte_vhost.h

/**

* Information relating to memory regions including offsets to

* addresses in QEMUs memory file.

*/

struct rte_vhost_mem_region {

uint64_t guest_phys_addr;

uint64_t guest_user_addr;

uint64_t host_user_addr;

uint64_t size;

void *mmap_addr;

uint64_t mmap_size;

int fd;

};

/**

* Memory structure includes region and mapping information.

*/

struct rte_vhost_memory {

uint32_t nregions;

struct rte_vhost_mem_region regions[];

};

上面的两个不同文件夹的相关数据结构体互相对应。

三、基本流程

1、连接和初始化

连接的建立是使用Sokcet来进行的。在前面的消息数据结构体中,其实是定义了很多消息枚举和相关的数组的。这个上面的数据结构体中已经有所体现。

int

rte_vhost_driver_start(const char * path)

{

struct vhost_user_socket * vsocket;

static pthread_t fdset_tid;

pthread_mutex_lock(&vhost_user.mutex);

vsocket = find_vhost_user_socket(path);

pthread_mutex_unlock(&vhost_user.mutex);

if (!vsocket)

return -1;

if (fdset_tid == 0) {

/**

* create a pipe which will be waited by poll and notified to

* rebuild the wait list of poll.

*/

if (fdset_pipe_init(&vhost_user.fdset) < 0) {

RTE_LOG(ERR, VHOST_CONFIG,

"failed to create pipe for vhost fdset\n");

return -1;

}

int ret = rte_ctrl_thread_create(&fdset_tid,

"vhost-events", NULL, fdset_event_dispatch,

&vhost_user.fdset);

if (ret != 0) {

RTE_LOG(ERR, VHOST_CONFIG,

"failed to create fdset handling thread\n");

fdset_pipe_uninit(&vhost_user.fdset);

return -1;

}

}

if (vsocket->is_server)

return vhost_user_start_server(vsocket);

else

return vhost_user_start_client(vsocket);

}

通过线程来启动分发控制,最后根据是客户端或者服务端来启动相应的功能函数。

当有一个新的连接时,则处理为:

/* call back when there is new vhost-user connection from client */

static void

vhost_user_server_new_connection(int fd, void *dat, int * remove __rte_unused)

{

struct vhost_user_socket *vsocket = dat;

fd = accept(fd, NULL, NULL);

if (fd < 0)

return;

RTE_LOG(INFO, VHOST_CONFIG, "new vhost user connection is %d\n", fd);

vhost_user_add_connection(fd, vsocket);

}

其实你看lib/librte_vhost/socket.c中的代码,如果有Socket编程的经验的一眼就可以看出好多相关的处理函数和处理手段。

2、数据通信

数据通信中数据交互使用mmap,相关设置代码:

static int

vhost_user_set_mem_table(struct virtio_net **pdev, struct VhostUserMsg *msg,

int main_fd)

{

struct virtio_net *dev = *pdev;

struct VhostUserMemory *memory = &msg->payload.memory;

struct rte_vhost_mem_region *reg;

void *mmap_addr;

uint64_t mmap_size;

uint64_t mmap_offset;

uint64_t alignment;

uint32_t i;

int populate;

if (validate_msg_fds(msg, memory->nregions) != 0)

return RTE_VHOST_MSG_RESULT_ERR;

if (memory->nregions > VHOST_MEMORY_MAX_NREGIONS) {

RTE_LOG(ERR, VHOST_CONFIG,

"too many memory regions (%u)\n", memory->nregions);

goto close_msg_fds;

}

if (dev->mem && !vhost_memory_changed(memory, dev->mem)) {

RTE_LOG(INFO, VHOST_CONFIG,

"(%d) memory regions not changed\n", dev->vid);

close_msg_fds(msg);

return RTE_VHOST_MSG_RESULT_OK;

}

if (dev->mem) {

free_mem_region(dev);

rte_free(dev->mem);

dev->mem = NULL;

}

/* Flush IOTLB cache as previous HVAs are now invalid */

if (dev->features & (1ULL << VIRTIO_F_IOMMU_PLATFORM))

for (i = 0; i < dev->nr_vring; i++)

vhost_user_iotlb_flush_all(dev->virtqueue[i]);

dev->nr_guest_pages = 0;

if (dev->guest_pages == NULL) {

dev->max_guest_pages = 8;

dev->guest_pages = rte_zmalloc(NULL,

dev->max_guest_pages *

sizeof(struct guest_page),

RTE_CACHE_LINE_SIZE);

if (dev->guest_pages == NULL) {

RTE_LOG(ERR, VHOST_CONFIG,

"(%d) failed to allocate memory "

"for dev->guest_pages\n",

dev->vid);

goto close_msg_fds;

}

}

dev->mem = rte_zmalloc("vhost-mem-table", sizeof(struct rte_vhost_memory) +

sizeof(struct rte_vhost_mem_region) * memory->nregions, 0);

if (dev->mem == NULL) {

RTE_LOG(ERR, VHOST_CONFIG,

"(%d) failed to allocate memory for dev->mem\n",

dev->vid);

goto free_guest_pages;

}

dev->mem->nregions = memory->nregions;

for (i = 0; i < memory->nregions; i++) {

reg = &dev->mem->regions[i];

reg->guest_phys_addr = memory->regions[i].guest_phys_addr;

reg->guest_user_addr = memory->regions[i].userspace_addr;

reg->size = memory->regions[i].memory_size;

reg->fd = msg->fds[i];

/*

* Assign invalid file descriptor value to avoid double

* closing on error path.

*/

msg->fds[i] = -1;

mmap_offset = memory->regions[i].mmap_offset;

/* Check for memory_size + mmap_offset overflow */

if (mmap_offset >= -reg->size) {

RTE_LOG(ERR, VHOST_CONFIG,

"mmap_offset (%#"PRIx64") and memory_size "

"(%#"PRIx64") overflow\n",

mmap_offset, reg->size);

goto free_mem_table;

}

mmap_size = reg->size + mmap_offset;

/* mmap() without flag of MAP_ANONYMOUS, should be called

* with length argument aligned with hugepagesz at older

* longterm version Linux, like 2.6.32 and 3.2.72, or

* mmap() will fail with EINVAL.

*

* to avoid failure, make sure in caller to keep length

* aligned.

*/

alignment = get_blk_size(reg->fd);

if (alignment == (uint64_t)-1) {

RTE_LOG(ERR, VHOST_CONFIG,

"couldn't get hugepage size through fstat\n");

goto free_mem_table;

}

mmap_size = RTE_ALIGN_CEIL(mmap_size, alignment);

if (mmap_size == 0) {

/*

* It could happen if initial mmap_size + alignment

* overflows the sizeof uint64, which could happen if

* either mmap_size or alignment value is wrong.

*

* mmap() kernel implementation would return an error,

* but better catch it before and provide useful info

* in the logs.

*/

RTE_LOG(ERR, VHOST_CONFIG, "mmap size (0x%" PRIx64 ") "

"or alignment (0x%" PRIx64 ") is invalid\n",

reg->size + mmap_offset, alignment);

goto free_mem_table;

}

populate = (dev->dequeue_zero_copy) ? MAP_POPULATE : 0;

mmap_addr = mmap(NULL, mmap_size, PROT_READ | PROT_WRITE,

MAP_SHARED | populate, reg->fd, 0);

if (mmap_addr == MAP_FAILED) {

RTE_LOG(ERR, VHOST_CONFIG,

"mmap region %u failed.\n", i);

goto free_mem_table;

}

reg->mmap_addr = mmap_addr;

reg->mmap_size = mmap_size;

reg->host_user_addr = (uint64_t)(uintptr_t)mmap_addr +

mmap_offset;

if (dev->dequeue_zero_copy)

if (add_guest_pages(dev, reg, alignment) < 0) {

RTE_LOG(ERR, VHOST_CONFIG,

"adding guest pages to region %u failed.\n",

i);

goto free_mem_table;

}

RTE_LOG(INFO, VHOST_CONFIG,

"guest memory region %u, size: 0x%" PRIx64 "\n"

"\t guest physical addr: 0x%" PRIx64 "\n"

"\t guest virtual addr: 0x%" PRIx64 "\n"

"\t host virtual addr: 0x%" PRIx64 "\n"

"\t mmap addr : 0x%" PRIx64 "\n"

"\t mmap size : 0x%" PRIx64 "\n"

"\t mmap align: 0x%" PRIx64 "\n"

"\t mmap off : 0x%" PRIx64 "\n",

i, reg->size,

reg->guest_phys_addr,

reg->guest_user_addr,

reg->host_user_addr,

(uint64_t)(uintptr_t)mmap_addr,

mmap_size,

alignment,

mmap_offset);

if (dev->postcopy_listening) {

/*

* We haven't a better way right now than sharing

* DPDK's virtual address with Qemu, so that Qemu can

* retrieve the region offset when handling userfaults.

*/

memory->regions[i].userspace_addr =

reg->host_user_addr;

}

}

if (dev->postcopy_listening) {

/* Send the addresses back to qemu */

msg->fd_num = 0;

send_vhost_reply(main_fd, msg);

/* Wait for qemu to acknolwedge it's got the addresses

* we've got to wait before we're allowed to generate faults.

*/

VhostUserMsg ack_msg;

if (read_vhost_message(main_fd, &ack_msg) <= 0) {

RTE_LOG(ERR, VHOST_CONFIG,

"Failed to read qemu ack on postcopy set-mem-table\n");

goto free_mem_table;

}

if (validate_msg_fds(&ack_msg, 0) != 0)

goto free_mem_table;

if (ack_msg.request.master != VHOST_USER_SET_MEM_TABLE) {

RTE_LOG(ERR, VHOST_CONFIG,

"Bad qemu ack on postcopy set-mem-table (%d)\n",

ack_msg.request.master);

goto free_mem_table;

}

/* Now userfault register and we can use the memory */

for (i = 0; i < memory->nregions; i++) {

#ifdef RTE_LIBRTE_VHOST_POSTCOPY

reg = &dev->mem->regions[i];

struct uffdio_register reg_struct;

/*

* Let's register all the mmap'ed area to ensure

* alignment on page boundary.

*/

reg_struct.range.start =

(uint64_t)(uintptr_t)reg->mmap_addr;

reg_struct.range.len = reg->mmap_size;

reg_struct.mode = UFFDIO_REGISTER_MODE_MISSING;

if (ioctl(dev->postcopy_ufd, UFFDIO_REGISTER,

®_struct)) {

RTE_LOG(ERR, VHOST_CONFIG,

"Failed to register ufd for region %d: (ufd = %d) %s\n",

i, dev->postcopy_ufd,

strerror(errno));

goto free_mem_table;

}

RTE_LOG(INFO, VHOST_CONFIG,

"\t userfaultfd registered for range : "

"%" PRIx64 " - %" PRIx64 "\n",

(uint64_t)reg_struct.range.start,

(uint64_t)reg_struct.range.start +

(uint64_t)reg_struct.range.len - 1);

#else

goto free_mem_table;

#endif

}

}

for (i = 0; i < dev->nr_vring; i++) {

struct vhost_virtqueue *vq = dev->virtqueue[i];

if (vq->desc || vq->avail || vq->used) {

/*

* If the memory table got updated, the ring addresses

* need to be translated again as virtual addresses have

* changed.

*/

vring_invalidate(dev, vq);

dev = translate_ring_addresses(dev, i);

if (!dev) {

dev = *pdev;

goto free_mem_table;

}

*pdev = dev;

}

}

dump_guest_pages(dev);

return RTE_VHOST_MSG_RESULT_OK;

free_mem_table:

free_mem_region(dev);

rte_free(dev->mem);

dev->mem = NULL;

free_guest_pages:

rte_free(dev->guest_pages);

dev->guest_pages = NULL;

close_msg_fds:

close_msg_fds(msg);

return RTE_VHOST_MSG_RESULT_ERR;

}

地址的转换是在下面的函数:

/* Converts QEMU virtual address to Vhost virtual address. */

static uint64_t

qva_to_vva(struct virtio_net *dev, uint64_t qva, uint64_t *len)

{

struct rte_vhost_mem_region *r;

uint32_t i;

if (unlikely(!dev || !dev->mem))

goto out_error;

/* Find the region where the address lives. */

for (i = 0; i < dev->mem->nregions; i++) {

r = &dev->mem->regions[i];

if (qva >= r->guest_user_addr &&

qva < r->guest_user_addr + r->size) {

if (unlikely(*len > r->guest_user_addr + r->size - qva))

*len = r->guest_user_addr + r->size - qva;

return qva - r->guest_user_addr +

r->host_user_addr;

}

}

out_error:

*len = 0;

return 0;

}

数据的实际通信,在前面分析过,就是“rte_vhost_enqueue_burst”和“rte_vhost_dequeue_burst”这两个函数。

3、通知机制

基本上是采用eventfd的方式,这和网络通信保持一致:

static int

vhost_user_set_vring_kick(struct virtio_net **pdev, struct VhostUserMsg *msg,

int main_fd __rte_unused)

{

struct virtio_net *dev = *pdev;

struct vhost_vring_file file;

struct vhost_virtqueue *vq;

int expected_fds;

expected_fds = (msg->payload.u64 & VHOST_USER_VRING_NOFD_MASK) ? 0 : 1;

if (validate_msg_fds(msg, expected_fds) != 0)

return RTE_VHOST_MSG_RESULT_ERR;

file.index = msg->payload.u64 & VHOST_USER_VRING_IDX_MASK;

if (msg->payload.u64 & VHOST_USER_VRING_NOFD_MASK)

file.fd = VIRTIO_INVALID_EVENTFD;

else

file.fd = msg->fds[0];

RTE_LOG(INFO, VHOST_CONFIG,

"vring kick idx:%d file:%d\n", file.index, file.fd);

/* Interpret ring addresses only when ring is started. */

dev = translate_ring_addresses(dev, file.index);

if (!dev) {

if (file.fd != VIRTIO_INVALID_EVENTFD)

close(file.fd);

return RTE_VHOST_MSG_RESULT_ERR;

}

*pdev = dev;

vq = dev->virtqueue[file.index];

/*

* When VHOST_USER_F_PROTOCOL_FEATURES is not negotiated,

* the ring starts already enabled. Otherwise, it is enabled via

* the SET_VRING_ENABLE message.

*/

if (!(dev->features & (1ULL << VHOST_USER_F_PROTOCOL_FEATURES))) {

vq->enabled = 1;

if (dev->notify_ops->vring_state_changed)

dev->notify_ops->vring_state_changed(

dev->vid, file.index, 1);

}

if (vq->kickfd >= 0)

close(vq->kickfd);

vq->kickfd = file.fd;

if (vq_is_packed(dev)) {

if (vhost_check_queue_inflights_packed(dev, vq)) {

RTE_LOG(ERR, VHOST_CONFIG,

"failed to inflights for vq: %d\n", file.index);

return RTE_VHOST_MSG_RESULT_ERR;

}

} else {

if (vhost_check_queue_inflights_split(dev, vq)) {

RTE_LOG(ERR, VHOST_CONFIG,

"failed to inflights for vq: %d\n", file.index);

return RTE_VHOST_MSG_RESULT_ERR;

}

}

return RTE_VHOST_MSG_RESULT_OK;

}

上述交互使用Poll机制,也就是轮询,来不断驱动着数据的流动。文章来源:https://www.toymoban.com/news/detail-424171.html

四、总结

可以说从内核转到用户空间本身就是一个非常大的进步。减少甚至不和内核打交道,这实际就是设计上的解耦,同时增加了内核的安全性。反而效率成为了一种为了需要产生的有益的副作用。从这一点可以看出,软件设计思想的提高和在实际上的普及应用,是非常重要的。想到,才有可能做到。文章来源地址https://www.toymoban.com/news/detail-424171.html

到了这里,关于DPDK系列之十五虚拟化virtio源码分析之vhost-user的文章就介绍完了。如果您还想了解更多内容,请在右上角搜索TOY模板网以前的文章或继续浏览下面的相关文章,希望大家以后多多支持TOY模板网!