I. Basic Retrieval

1. _cat

# List information about all nodes

GET /_cat/nodes

Postman: http://192.168.120.120:9200/_cat/nodes

# Result

127.0.0.1 31 93 4 0.03 0.07 0.17 dilm * 0e01be6f8988

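* marks the master node

0e01be6f8988 is the node name

GET /_cat/health: check the health of the ES cluster

GET /_cat/master: show the master node

GET /_cat/indices: list all indices (comparable to show databases; in MySQL)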

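2. Indexing (saving) a document

To save a document, specify which index and which type it goes into and which unique identifier to use (comparable to saving a row into a given table of a given database with a given primary key).

Example: PUT customer/external/1 saves document 1, with the name John Doe, under the external type of the customer index.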

PUT customer/external/1

{

"name": "John Doe"

}

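Both POST and PUT can create a document.

Notes:

POST creates a document. Without an id, one is generated automatically. With an id, the first request creates the document and later requests with the same id overwrite it and increment the version number.

PUT can create or update. PUT requires an id, and omitting it returns an error; because the id is always given, PUT is typically used for updates.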

3. Retrieving a document

GET customer/external/1

Result:

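{
  "_index": "customer", // index name
  "_type": "external", // type name
  "_id": "1", // unique identifier
  "_version": 1, // version number
  "_seq_no": 0, // concurrency-control field, incremented on every write; used for optimistic locking
  "_primary_term": 1, // also used for concurrency control; changes when the primary shard is reassigned, e.g. after a restart
  "found": true,
  "_source": { // the actual content
    "name": "John Doe"
  }
}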

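Adding ?if_seq_no=0&if_primary_term=1 to an update makes it conditional (optimistic locking): the write succeeds only if the document's current _seq_no and _primary_term still match those values; otherwise ES rejects it with a version conflict (HTTP 409).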
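The compare-then-write rule can be sketched in plain Java. This is a minimal in-memory model, not the Elasticsearch client API: the class SeqNoStoreSketch and its methods are hypothetical, and the sequence number is kept in one counter for the whole store, which only approximates the per-shard counter of a real cluster.

```java
import java.util.HashMap;
import java.util.Map;

// In-memory stand-in for a single index; NOT the Elasticsearch client API.
class SeqNoStoreSketch {
    // One stored document plus the two concurrency-control fields.
    static final class Doc {
        final String source;
        final long seqNo;
        final long primaryTerm;

        Doc(String source, long seqNo, long primaryTerm) {
            this.source = source;
            this.seqNo = seqNo;
            this.primaryTerm = primaryTerm;
        }
    }

    private final Map<String, Doc> docs = new HashMap<>();
    private long nextSeqNo = 0;         // grows with every successful write
    private final long primaryTerm = 1; // fixed in this sketch

    // Unconditional write, like a plain PUT.
    Doc index(String id, String source) {
        Doc doc = new Doc(source, nextSeqNo++, primaryTerm);
        docs.put(id, doc);
        return doc;
    }

    // Conditional write, like PUT ...?if_seq_no=N&if_primary_term=T.
    // Returns false (a version conflict) when the stored values differ.
    boolean indexIf(String id, String source, long ifSeqNo, long ifPrimaryTerm) {
        Doc current = docs.get(id);
        if (current == null || current.seqNo != ifSeqNo || current.primaryTerm != ifPrimaryTerm) {
            return false;
        }
        index(id, source);
        return true;
    }

    Doc get(String id) {
        return docs.get(id);
    }

    public static void main(String[] args) {
        SeqNoStoreSketch store = new SeqNoStoreSketch();
        store.index("1", "{\"name\":\"John Doe\"}");                      // stored with _seq_no 0
        boolean first = store.indexIf("1", "{\"name\":\"A\"}", 0, 1);  // values match, accepted
        boolean second = store.indexIf("1", "{\"name\":\"B\"}", 0, 1); // stale _seq_no, rejected
        System.out.println(first + " " + second + " " + store.get("1").seqNo);
    }
}
```

Two clients that both read _seq_no 0 and then write with if_seq_no=0 behave like the two indexIf calls: the first one wins and the second has to re-read the document and retry.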

4. Updating a document

POST customer/external/1/_update

Request body:

{

"doc": {

"name": "John"

}

}

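With _update, the body must wrap the fields in doc. ES compares the new fields with the stored document; if nothing changes, the request is a no-op and the version stays the same. Without _update, every request rewrites the document and increments the version.

Alternatively, POST customer/external/1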

{

"name": "John Doe2"

}

POST can be used with or without _update, but PUT cannot be used with _update.

Or:

PUT customer/external/1

{

"name": "John Doe"

}

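POST vs. PUT:

POST can be used with or without _update; PUT cannot be used with _update.

POST with _update compares the request against the stored document content; if they are identical, nothing happens and the version does not increase.

POST without _update behaves like PUT: the document is saved again and the version increases.

Which one to use depends on the scenario:

For heavy concurrent updates, omit _update.

For heavy concurrent reads with only occasional updates, use _update, so that requests that change nothing are skipped.

Updating a document and adding a field at the same time: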

POST customer/external/1/_update

{

"doc": {

"name": "Jane Doe",

"age": 20

}

}

A PUT, or a POST without _update, can achieve the same result, provided the body contains the complete document.

5. Deleting a document or an index

Delete a document:

DELETE customer/external/1

Delete an entire index:

DELETE customer

Note: there is no operation for deleting a type.

6. The bulk API

POST customer/external/_bulk

{"index":{"_id":"1"}}

{"name": "John Doe" }

{"index":{"_id":"2"}}

{"name": "Jane Doe" }

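Every two lines form one unit: the first line gives the action and its metadata (here the document _id), and the second line is the document content.

Syntax: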

{ action: { metadata }}\n

{ request body }\n

{ action: { metadata }}\n

{ request body }\n

A more complex example: POST /_bulk

{ "delete": { "_index": "website", "_type": "blog", "_id": "123" }}

{ "create": { "_index": "website", "_type": "blog", "_id": "123" }}

{ "title": "My first blog post" }

{ "index": { "_index": "website", "_type": "blog" }}

{ "title": "My second blog post" }

{ "update": { "_index": "website", "_type": "blog", "_id": "123", "retry_on_conflict" : 3} }

{ "doc" : {"title" : "My updated blog post"} }

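The bulk API executes all actions in order. If a single action fails for any reason, processing continues with the remaining actions. When the bulk API returns, it reports the status of each action in the order in which they were sent, so you can check whether a specific action failed.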
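The two-lines-per-action layout is easy to get wrong by hand, so here is a minimal sketch of a builder that assembles the request body as a string. The class BulkBodySketch is hypothetical and is not part of any Elasticsearch client; it assumes ids that need no JSON escaping and source documents that are already serialized on a single line.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper that assembles a _bulk request body; not an Elasticsearch API.
class BulkBodySketch {
    private final List<String> lines = new ArrayList<>();

    // Adds an "index" action: one metadata line followed by one source line.
    BulkBodySketch index(String id, String sourceJson) {
        lines.add("{\"index\":{\"_id\":\"" + id + "\"}}");
        lines.add(sourceJson);
        return this;
    }

    // Adds a "delete" action, which has a metadata line but no source line.
    BulkBodySketch delete(String id) {
        lines.add("{\"delete\":{\"_id\":\"" + id + "\"}}");
        return this;
    }

    // Every line, including the last one, is terminated by a newline.
    String build() {
        StringBuilder sb = new StringBuilder();
        for (String line : lines) {
            sb.append(line).append('\n');
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        String body = new BulkBodySketch()
                .index("1", "{\"name\":\"John Doe\"}")
                .index("2", "{\"name\":\"Jane Doe\"}")
                .delete("3")
                .build();
        System.out.print(body);
    }
}
```

The resulting string is what would be sent as the body of POST customer/external/_bulk. A missing newline after the final line is a common cause of rejected bulk requests.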

7. Sample test data

A set of fictitious JSON documents with customer bank account information is used below. Each document has the following schema:

GET /bank/account/0

{

"_index" : "bank",

"_type" : "account",

"_id" : "0",

"_version" : 1,

"_seq_no" : 600,

"_primary_term" : 1,

"found" : true,

"_source" : {

"account_number" : 0,

"balance" : 16623,

"firstname" : "Bradshaw",

"lastname" : "Mckenzie",

"age" : 29,

"gender" : "F",

"address" : "244 Columbus Place",

"employer" : "Euron",

"email" : "bradshawmckenzie@euron.com",

"city" : "Hobucken",

"state" : "CO"

}

}

Test data

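II. Advanced Retrieval

1. Search API

ES supports two basic ways of searching:

- sending the search parameters in the REST request URI (URI + query parameters)

- sending them in the REST request body (URI + request body)

1) Searching

Retrieve everything under bank, including the type and the documents: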

GET bank/_search

Search using request parameters:

GET bank/_search?q=*&sort=account_number:asc

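q=* matches all documents

sort=account_number:asc sorts by account_number in ascending order

Explanation of the response:

took - time in milliseconds that Elasticsearch took to execute the search

timed_out - whether the search timed out

_shards - how many shards were searched, with counts of successful and failed shards

hits - the search results

hits.total - the total number of matching documents

hits.hits - the actual array of results (the first 10 documents by default)

sort - the sort key of each result (absent when sorting by score)

_score and max_score - the relevance score and the highest score (used for full-text search)

Search using URI + request body: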

GET bank/_search

{

"query":{

"match_all": {}

},

"sort": [{

"account_number":{

"order": "desc"

}

}]

}

1000 documents match, but only 10 are returned: size defaults to 10, so the results are paginated automatically.

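2. Query DSL

The request body of a search request is called the Query DSL.

1) Basic syntax

Elasticsearch provides a JSON-style DSL (domain-specific language) for executing queries, called the Query DSL. The language is comprehensive and can feel complicated at first; the best way to learn it is to start from a few basic examples.

- Typical structure of a query clause: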

QUERY_NAME:{

ARGUMENT:VALUE,

ARGUMENT:VALUE,

...

}

- When the query targets a specific field, the structure is:

{

QUERY_NAME:{

FIELD_NAME:{

ARGUMENT:VALUE,

ARGUMENT:VALUE,...

}

}

}

Example

GET bank/_search

{

"query": {

"match_all": {}

},

"from": 0,

"size": 5,

"_source":["balance"],

"sort": [

{

"account_number": {

"order": "desc"

}

}

]

}

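- query defines how to search

- match_all is a query type that matches all documents; many query types can be combined inside query to build complex searches

- Besides query, other parameters such as sort and size can be passed to shape the result

- from + size implement pagination

- sort orders the results; with several sort fields, a later field is used only to order documents whose earlier fields are equal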
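Since from is a zero-based offset rather than a page number, a UI page number has to be converted first. The following is a minimal sketch of that arithmetic; PagingSketch and its methods are hypothetical helper names, and the body it renders mirrors the match_all example above.

```java
// Hypothetical helper for from/size pagination; not an Elasticsearch API.
class PagingSketch {
    // Converts a 1-based page number into the zero-based "from" offset.
    static int from(int page, int size) {
        if (page < 1 || size < 1) {
            throw new IllegalArgumentException("page and size must be >= 1");
        }
        return (page - 1) * size;
    }

    // Renders a match_all search body for the given page, sorted by account_number descending.
    static String searchBody(int page, int size) {
        return "{\"query\":{\"match_all\":{}},"
                + "\"from\":" + from(page, size) + ","
                + "\"size\":" + size + ","
                + "\"sort\":[{\"account_number\":{\"order\":\"desc\"}}]}";
    }

    public static void main(String[] args) {
        // Page 3 with 5 hits per page starts at offset 10.
        System.out.println(searchBody(3, 5));
    }
}
```

By default from + size may not exceed 10000 (the index.max_result_window setting), so this style of paging suits the first pages of a result rather than deep scrolling.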

2) Returning only some fields

GET bank/_search

{

"query": {

"match_all": {}

},

"from": 0,

"size": 5,

"sort": [

{

"account_number": {

"order": "desc"

}

}

],

"_source": ["balance","firstname"]

}

Result:

{

"took" : 18,

"timed_out" : false,

"_shards" : {

"total" : 1,

"successful" : 1,

"skipped" : 0,

"failed" : 0

},

"hits" : {

"total" : {

"value" : 1000,

"relation" : "eq"

},

"max_score" : null,

"hits" : [

{

"_index" : "bank",

"_type" : "account",

"_id" : "999",

"_score" : null,

"_source" : {

"firstname" : "Dorothy",

"balance" : 6087

},

"sort" : [

999

]

},

(remaining hits omitted)

3) match [match query]

- Basic (non-string) types: exact match

GET bank/_search

{

"query": {

"match": {

"account_number": 20

}

}

}

match returns the document with account_number = 20

Result

{

"took" : 13,

"timed_out" : false,

"_shards" : {

"total" : 1,

"successful" : 1,

"skipped" : 0,

"failed" : 0

},

"hits" : {

"total" : {

"value" : 1,

"relation" : "eq"

},

"max_score" : 1.0,

"hits" : [

{

"_index" : "bank",

"_type" : "account",

"_id" : "20",

"_score" : 1.0,

"_source" : {

"account_number" : 20,

"balance" : 16418,

"firstname" : "Elinor",

"lastname" : "Ratliff",

"age" : 36,

"gender" : "M",

"address" : "282 Kings Place",

"employer" : "Scentric",

"email" : "elinorratliff@scentric.com",

"city" : "Ribera",

"state" : "WA"

}

}

]

}

}

- Strings: full-text search

GET bank/_search

{

"query": {

"match": {

"address": "kings"

}

}

}

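This returns all records whose address contains the word kings

When match is used on a string field it performs a full-text search, and each hit gets a relevance score

Result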

{

"took" : 5,

"timed_out" : false,

"_shards" : {

"total" : 1,

"successful" : 1,

"skipped" : 0,

"failed" : 0

},

"hits" : {

"total" : {

"value" : 2,

"relation" : "eq"

},

"max_score" : 5.9908285,

"hits" : [

{

"_index" : "bank",

"_type" : "account",

"_id" : "20",

"_score" : 5.9908285,

"_source" : {

"account_number" : 20,

"balance" : 16418,

"firstname" : "Elinor",

"lastname" : "Ratliff",

"age" : 36,

"gender" : "M",

"address" : "282 Kings Place",

"employer" : "Scentric",

"email" : "elinorratliff@scentric.com",

"city" : "Ribera",

"state" : "WA"

}

},

{

"_index" : "bank",

"_type" : "account",

"_id" : "722",

"_score" : 5.9908285,

"_source" : {

"account_number" : 722,

"balance" : 27256,

"firstname" : "Roberts",

"lastname" : "Beasley",

"age" : 34,

"gender" : "F",

"address" : "305 Kings Hwy",

"employer" : "Quintity",

"email" : "robertsbeasley@quintity.com",

"city" : "Hayden",

"state" : "PA"

}

}

]

}

}

- Strings with several words (analysis + full-text search)

GET bank/_search

{

"query": {

"match": {

"address": "mill road"

}

}

}

This returns all records whose address contains mill, road, or both, each with a relevance score

Result

{

"took" : 13,

"timed_out" : false,

"_shards" : {

"total" : 1,

"successful" : 1,

"skipped" : 0,

"failed" : 0

},

"hits" : {

"total" : {

"value" : 32,

"relation" : "eq"

},

"max_score" : 8.926605,

"hits" : [

{

"_index" : "bank",

"_type" : "account",

"_id" : "970",

"_score" : 8.926605,

"_source" : {

"account_number" : 970,

"balance" : 19648,

"firstname" : "Forbes",

"lastname" : "Wallace",

"age" : 28,

"gender" : "M",

"address" : "990 Mill Road",

"employer" : "Pheast",

"email" : "forbeswallace@pheast.com",

"city" : "Lopezo",

"state" : "AK"

}

},

.......

]

}

}

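4) match_phrase [phrase match]

- The value is matched as one phrase: the query is still analyzed, but all of its terms must appear in the field next to each other and in the same order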

GET bank/_search

{

"query": {

"match_phrase": {

"address": "mill road"

}

}

}

The previous query returned documents containing mill or road; now a document must contain the whole phrase mill road

Result

{

"took" : 8,

"timed_out" : false,

"_shards" : {

"total" : 1,

"successful" : 1,

"skipped" : 0,

"failed" : 0

},

"hits" : {

"total" : {

"value" : 1,

"relation" : "eq"

},

"max_score" : 8.926605,

"hits" : [

{

"_index" : "bank",

"_type" : "account",

"_id" : "970",

"_score" : 8.926605,

"_source" : {

"account_number" : 970,

"balance" : 19648,

"firstname" : "Forbes",

"lastname" : "Wallace",

"age" : 28,

"gender" : "M",

"address" : "990 Mill Road",

"employer" : "Pheast",

"email" : "forbeswallace@pheast.com",

"city" : "Lopezo",

"state" : "AK"

}

}

]

}

}

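Supplement

Matching a text field through its keyword sub-field requires the query to equal the entire field value: it is an exact match.

match_phrase is a phrase match: it matches as long as the text contains the phrase.

Using match with keyword: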

GET bank/_search

{

"query": {

"match": {

"address.keyword": "990 Mill"

}

}

}

No document matches.

Change the query value to "990 Mill Road":

GET bank/_search

{

"query": {

"match": {

"address.keyword": "990 Mill Road"

}

}

}

One document is found:

{

"took" : 0,

"timed_out" : false,

"_shards" : {

"total" : 1,

"successful" : 1,

"skipped" : 0,

"failed" : 0

},

"hits" : {

"total" : {

"value" : 1,

"relation" : "eq"

},

"max_score" : 6.5032897,

"hits" : [

{

"_index" : "bank",

"_type" : "account",

"_id" : "970",

"_score" : 6.5032897,

"_source" : {

"account_number" : 970,

"balance" : 19648,

"firstname" : "Forbes",

"lastname" : "Wallace",

"age" : 28,

"gender" : "M",

"address" : "990 Mill Road",

"employer" : "Pheast",

"email" : "forbeswallace@pheast.com",

"city" : "Lopezo",

"state" : "AK"

}

}

]

}

}
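The difference between match, match_phrase, and a match on the keyword sub-field can be imitated in a few lines of Java. This is a simplified sketch, not how Elasticsearch is implemented: analyze only lowercases and splits on whitespace (the standard analyzer does more), scoring is ignored, and MatchModesSketch with its methods is a hypothetical name.

```java
import java.util.Arrays;
import java.util.Collections;
import java.util.List;
import java.util.Locale;

// Simplified imitation of three matching modes; not an Elasticsearch API.
class MatchModesSketch {
    // Rough stand-in for an analyzer: lowercase, then split on whitespace.
    static List<String> analyze(String text) {
        return Arrays.asList(text.toLowerCase(Locale.ROOT).trim().split("\\s+"));
    }

    // match: the field matches when it contains at least one of the query terms.
    static boolean match(String field, String query) {
        List<String> fieldTerms = analyze(field);
        for (String term : analyze(query)) {
            if (fieldTerms.contains(term)) {
                return true;
            }
        }
        return false;
    }

    // match_phrase: all query terms must appear next to each other, in order.
    static boolean matchPhrase(String field, String query) {
        return Collections.indexOfSubList(analyze(field), analyze(query)) >= 0;
    }

    // keyword sub-field: the whole, unanalyzed value must be equal.
    static boolean keyword(String field, String query) {
        return field.equals(query);
    }

    public static void main(String[] args) {
        System.out.println(match("198 Mill Lane", "mill road"));       // one shared term is enough
        System.out.println(matchPhrase("198 Mill Lane", "mill road")); // the phrase is not present
        System.out.println(matchPhrase("990 Mill Road", "990 Mill"));  // the phrase is present
        System.out.println(keyword("990 Mill Road", "990 Mill"));      // not the complete value
        System.out.println(keyword("990 Mill Road", "990 Mill Road")); // the complete value
    }
}
```

The last three calls correspond to the address.keyword queries above: "990 Mill" is contained in the address as a phrase, but it is not the complete value, so only the full "990 Mill Road" matches through the keyword sub-field.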

5) multi_match [multi-field match]

- state or address contains mill

GET bank/_search

{

"query": {

"multi_match": {

"query": "mill",

"fields": ["state", "address"]

}

}

}

The query string is analyzed during the search.

Result

{

"took" : 5,

"timed_out" : false,

"_shards" : {

"total" : 1,

"successful" : 1,

"skipped" : 0,

"failed" : 0

},

"hits" : {

"total" : {

"value" : 4,

"relation" : "eq"

},

"max_score" : 5.4032025,

"hits" : [

{

"_index" : "bank",

"_type" : "account",

"_id" : "970",

"_score" : 5.4032025,

"_source" : {

"account_number" : 970,

"balance" : 19648,

"firstname" : "Forbes",

"lastname" : "Wallace",

"age" : 28,

"gender" : "M",

"address" : "990 Mill Road",

"employer" : "Pheast",

"email" : "forbeswallace@pheast.com",

"city" : "Lopezo",

"state" : "AK"

}

},

{

"_index" : "bank",

"_type" : "account",

"_id" : "136",

"_score" : 5.4032025,

"_source" : {

"account_number" : 136,

"balance" : 45801,

"firstname" : "Winnie",

"lastname" : "Holland",

"age" : 38,

"gender" : "M",

"address" : "198 Mill Lane",

"employer" : "Neteria",

"email" : "winnieholland@neteria.com",

"city" : "Urie",

"state" : "IL"

}

},

{

"_index" : "bank",

"_type" : "account",

"_id" : "345",

"_score" : 5.4032025,

"_source" : {

"account_number" : 345,

"balance" : 9812,

"firstname" : "Parker",

"lastname" : "Hines",

"age" : 38,

"gender" : "M",

"address" : "715 Mill Avenue",

"employer" : "Baluba",

"email" : "parkerhines@baluba.com",

"city" : "Blackgum",

"state" : "KY"

}

},

{

"_index" : "bank",

"_type" : "account",

"_id" : "472",

"_score" : 5.4032025,

"_source" : {

"account_number" : 472,

"balance" : 25571,

"firstname" : "Lee",

"lastname" : "Long",

"age" : 32,

"gender" : "F",

"address" : "288 Mill Street",

"employer" : "Comverges",

"email" : "leelong@comverges.com",

"city" : "Movico",

"state" : "MT"

}

}

]

}

}

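6) bool [compound query]

A compound query can combine any other queries, including other compound queries, so queries can be nested to express very complex logic.

must: every listed condition must match.

must_not: none of the listed conditions may match.

should: the listed conditions should match. Matching is preferred but not required; documents that match score higher.

- must: every listed condition must match

Example: find documents where gender is M and address contains mill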

GET bank/_search

{

"query":{

"bool":{

"must":[

{"match":{"address":"mill"}},

{"match":{"gender":"M"}}

]

}

}

}

Result

{

"took" : 33,

"timed_out" : false,

"_shards" : {

"total" : 1,

"successful" : 1,

"skipped" : 0,

"failed" : 0

},

"hits" : {

"total" : {

"value" : 3,

"relation" : "eq"

},

"max_score" : 6.0824604,

"hits" : [

{

"_index" : "bank",

"_type" : "account",

"_id" : "970",

"_score" : 6.0824604,

"_source" : {

"account_number" : 970,

"balance" : 19648,

"firstname" : "Forbes",

"lastname" : "Wallace",

"age" : 28,

"gender" : "M",

"address" : "990 Mill Road",

"employer" : "Pheast",

"email" : "forbeswallace@pheast.com",

"city" : "Lopezo",

"state" : "AK"

}

},

{

"_index" : "bank",

"_type" : "account",

"_id" : "136",

"_score" : 6.0824604,

"_source" : {

"account_number" : 136,

"balance" : 45801,

"firstname" : "Winnie",

"lastname" : "Holland",

"age" : 38,

"gender" : "M",

"address" : "198 Mill Lane",

"employer" : "Neteria",

"email" : "winnieholland@neteria.com",

"city" : "Urie",

"state" : "IL"

}

},

{

"_index" : "bank",

"_type" : "account",

"_id" : "345",

"_score" : 6.0824604,

"_source" : {

"account_number" : 345,

"balance" : 9812,

"firstname" : "Parker",

"lastname" : "Hines",

"age" : 38,

"gender" : "M",

"address" : "715 Mill Avenue",

"employer" : "Baluba",

"email" : "parkerhines@baluba.com",

"city" : "Blackgum",

"state" : "KY"

}

}

]

}

}

- must_not: the listed conditions must not match

Example: find documents where gender is M and address contains mill, but age is not 38

GET bank/_search

{

"query": {

"bool": {

"must": [

{"match": {"gender": "M"}},

{"match": {"address": "mill"}}

],

"must_not": [{

"match": {"age": "38"}

}

]

}

}

}

Result

{

"took" : 8,

"timed_out" : false,

"_shards" : {

"total" : 1,

"successful" : 1,

"skipped" : 0,

"failed" : 0

},

"hits" : {

"total" : {

"value" : 1,

"relation" : "eq"

},

"max_score" : 6.0824604,

"hits" : [

{

"_index" : "bank",

"_type" : "account",

"_id" : "970",

"_score" : 6.0824604,

"_source" : {

"account_number" : 970,

"balance" : 19648,

"firstname" : "Forbes",

"lastname" : "Wallace",

"age" : 28,

"gender" : "M",

"address" : "990 Mill Road",

"employer" : "Pheast",

"email" : "forbeswallace@pheast.com",

"city" : "Lopezo",

"state" : "AK"

}

}

]

}

}

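- should: the listed conditions should match; documents that match get a higher score,

but the set of results does not change. The exception is a bool query that contains only should clauses (no must or filter): at least one should clause then has to match, so should does change the results.

# Example: lastname should be Wallace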

GET bank/_search

{

"query": {

"bool": {

"must": [

{"match": {"gender": "M"}},

{"match": {"address": "mill"}}

],

"must_not": [

{"match": {"age": "18"}}

],

"should": [

{"match": {"lastname": "Wallace"}}

]

}

}

}

Result

{

"took" : 3,

"timed_out" : false,

"_shards" : {

"total" : 1,

"successful" : 1,

"skipped" : 0,

"failed" : 0

},

"hits" : {

"total" : {

"value" : 3,

"relation" : "eq"

},

"max_score" : 12.585751,

"hits" : [

{

"_index" : "bank",

"_type" : "account",

"_id" : "970",

"_score" : 12.585751,

"_source" : {

"account_number" : 970,

"balance" : 19648,

"firstname" : "Forbes",

"lastname" : "Wallace",

"age" : 28,

"gender" : "M",

"address" : "990 Mill Road",

"employer" : "Pheast",

"email" : "forbeswallace@pheast.com",

"city" : "Lopezo",

"state" : "AK"

}

},

{

"_index" : "bank",

"_type" : "account",

"_id" : "136",

"_score" : 6.0824604,

"_source" : {

"account_number" : 136,

"balance" : 45801,

"firstname" : "Winnie",

"lastname" : "Holland",

"age" : 38,

"gender" : "M",

"address" : "198 Mill Lane",

"employer" : "Neteria",

"email" : "winnieholland@neteria.com",

"city" : "Urie",

"state" : "IL"

}

},

{

"_index" : "bank",

"_type" : "account",

"_id" : "345",

"_score" : 6.0824604,

"_source" : {

"account_number" : 345,

"balance" : 9812,

"firstname" : "Parker",

"lastname" : "Hines",

"age" : 38,

"gender" : "M",

"address" : "715 Mill Avenue",

"employer" : "Baluba",

"email" : "parkerhines@baluba.com",

"city" : "Blackgum",

"state" : "KY"

}

}

]

}

}

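7) filter [result filtering]

must and should above contribute to the relevance score, while must_not acts purely as a filter and contributes nothing to the score.

Changing must to filter makes the clause stop contributing to the score.

If a query has only filter conditions, every score is 0.

Not every query needs to produce a score, in particular queries used only for filtering documents. Elasticsearch detects these cases and optimizes execution by skipping the score calculation.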

GET bank/_search

{

"query": {

"bool": {

"must": [

{ "match": {"address": "mill" } }

],

"filter": {

"range": {

"balance": {

"gte": "10000",

"lte": "20000"

}

}

}

}

}

}

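This returns the documents whose address matches mill and whose balance satisfies 10000 <= balance <= 20000; only the match clause contributes to the score.

Result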

{

"took" : 5,

"timed_out" : false,

"_shards" : {

"total" : 1,

"successful" : 1,

"skipped" : 0,

"failed" : 0

},

"hits" : {

"total" : {

"value" : 1,

"relation" : "eq"

},

"max_score" : 5.4032025,

"hits" : [

{

"_index" : "bank",

"_type" : "account",

"_id" : "970",

"_score" : 5.4032025,

"_source" : {

"account_number" : 970,

"balance" : 19648,

"firstname" : "Forbes",

"lastname" : "Wallace",

"age" : 28,

"gender" : "M",

"address" : "990 Mill Road",

"employer" : "Pheast",

"email" : "forbeswallace@pheast.com",

"city" : "Lopezo",

"state" : "AK"

}

}

]

}

}

With only a filter condition, every score is 0:

GET bank/_search

{

"query": {

"bool": {

"filter": {

"range": {

"balance": {

"gte": "10000",

"lte": "20000"

}

}

}

}

}

}

Result

{

"took" : 1,

"timed_out" : false,

"_shards" : {

"total" : 1,

"successful" : 1,

"skipped" : 0,

"failed" : 0

},

"hits" : {

"total" : {

"value" : 213,

"relation" : "eq"

},

"max_score" : 0.0,

"hits" : [

{

"_index" : "bank",

"_type" : "account",

"_id" : "20",

"_score" : 0.0,

"_source" : {

"account_number" : 20,

"balance" : 16418,

"firstname" : "Elinor",

"lastname" : "Ratliff",

"age" : 36,

"gender" : "M",

"address" : "282 Kings Place",

"employer" : "Scentric",

"email" : "elinorratliff@scentric.com",

"city" : "Ribera",

"state" : "WA"

}

},

......

]

}

}

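Every document has "_score" : 0.0.

8) term [term query]

Like match, term matches the value of a field, but the search value is not analyzed. Use match for full-text (text) fields and term for exact values in non-text fields; avoid term on text fields.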

GET bank/_search

{

"query": {

"term": {

"address": "mill Road"

}

}

}

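Querying a text field with term returns nothing here, because the field was split into lowercase tokens when it was indexed while the term value is looked up as-is; switching to match returns data.

9) aggregations [running aggregations]

Aggregations provide the ability to group data and extract statistics from it. The simplest aggregations are roughly equivalent to SQL GROUP BY and the SQL aggregate functions. In Elasticsearch, a search can return the hits and the aggregation results at the same time, kept separate within a single response. This is powerful and efficient: you can run a query and several aggregations and get all of their results in one round trip, through one concise API.

terms: shows the distribution of the distinct values

avg: the average of the values

- Find the age distribution and the average age of everyone whose address contains mill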

GET bank/_search

{
  "query": {
    "match": { // find documents whose address contains mill
      "address": "mill"
    }
  },
  "aggs": { // aggregate over the query result
    "ageAgg": { // name of the aggregation, chosen freely
      "terms": { // distribution of distinct values
        "field": "age",
        "size": 10
      }
    },
    "ageAvg": {
      "avg": { // average of age
        "field": "age"
      }
    },
    "balanceAvg": {
      "avg": { // average of balance
        "field": "balance"
      }
    }
  },
  "size": 0 // do not return the matching documents
}

Result

{

"took" : 13,

"timed_out" : false,

"_shards" : {

"total" : 1,

"successful" : 1,

"skipped" : 0,

"failed" : 0

},

"hits" : {

"total" : {

"value" : 4,

"relation" : "eq"

},

"max_score" : null,

"hits" : [ ]

},

"aggregations" : {

"ageAgg" : {

"doc_count_error_upper_bound" : 0,

"sum_other_doc_count" : 0,

"buckets" : [

{

"key" : 38,

"doc_count" : 2

},

{

"key" : 28,

"doc_count" : 1

},

{

"key" : 32,

"doc_count" : 1

}

]

}

}

}

"size": 0 suppresses the details of the matching people
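For readers who know Java streams better than SQL, the terms and avg aggregations correspond roughly to groupingBy with counting, and to averaging. The sketch below is only an analogy under that assumption: AggregationSketch and Account are hypothetical names, and the four accounts are the age and balance values of the four documents that matched mill earlier in this section.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;
import java.util.stream.Collectors;

// Stream-based analogy for the terms and avg aggregations; not an Elasticsearch API.
class AggregationSketch {
    static final class Account {
        final int age;
        final long balance;

        Account(int age, long balance) {
            this.age = age;
            this.balance = balance;
        }
    }

    // terms on age: one bucket per distinct value together with its document count.
    static Map<Integer, Long> termsByAge(List<Account> hits) {
        return hits.stream()
                .collect(Collectors.groupingBy(a -> a.age, TreeMap::new, Collectors.counting()));
    }

    // avg on age.
    static double avgAge(List<Account> hits) {
        return hits.stream().mapToInt(a -> a.age).average().orElse(Double.NaN);
    }

    // avg on balance.
    static double avgBalance(List<Account> hits) {
        return hits.stream().mapToLong(a -> a.balance).average().orElse(Double.NaN);
    }

    public static void main(String[] args) {
        List<Account> hits = Arrays.asList(
                new Account(28, 19648), new Account(38, 45801),
                new Account(38, 9812), new Account(32, 25571));
        System.out.println(termsByAge(hits)); // {28=1, 32=1, 38=2}
        System.out.println(avgAge(hits));     // 34.0
        System.out.println(avgBalance(hits)); // 25208.0
    }
}
```

One difference worth noting: the TreeMap orders the buckets by key, whereas Elasticsearch orders terms buckets by doc_count in descending order by default, which is why the response lists 38 first.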

- More complex: aggregate by age and compute the average balance of the people in each age bucket (sub-aggregation)

GET bank/_search

{

"query": {

"match_all": {}

},

"aggs": {

"ageAggs": {

"terms": {

"field": "age",

"size": 10

},

"aggs": { // sub-aggregation, computed within each bucket of the parent aggregation

"balanceAvg": {

"avg": { // average balance

"field": "balance"

}

}

}

}

},

"size": 0

}

Result

{

"took" : 22,

"timed_out" : false,

"_shards" : {

"total" : 1,

"successful" : 1,

"skipped" : 0,

"failed" : 0

},

"hits" : {

"total" : {

"value" : 1000,

"relation" : "eq"

},

"max_score" : null,

"hits" : [ ]

},

"aggregations" : {

"ageAggs" : {

"doc_count_error_upper_bound" : 0,

"sum_other_doc_count" : 463,

"buckets" : [

{

"key" : 31,

"doc_count" : 61,

"balanceAvg" : {

"value" : 28312.918032786885

}

},

{

"key" : 39,

"doc_count" : 60,

"balanceAvg" : {

"value" : 25269.583333333332

}

},

......

{

"key" : 34,

"doc_count" : 49,

"balanceAvg" : {

"value" : 26809.95918367347

}

}

]

}

}

}

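- Nested sub-aggregations: find the age distribution and, for each age bucket, the average balance of M, the average balance of F, and the overall average balance of the bucket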

GET bank/_search

{
  "query": {
    "match_all": {}
  },
  "aggs": {
    "ageAggs": {
      "terms": { // first aggregation: age distribution
        "field": "age",
        "size": 3
      },
      "aggs": { // sub-aggregation
        "genderAggs": {
          "terms": { // gender distribution
            "field": "gender.keyword", // note: a terms aggregation on a text field must use its .keyword sub-field
            "size": 3
          },
          "aggs": { // second-level sub-aggregation
            "balabceAvg": {
              "avg": { // average balance per gender
                "field": "balance"
              }
            }
          }
        },
        "balanceAvg": {
          "avg": { // overall average balance of the age bucket
            "field": "balance"
          }
        }
      }
    }
  },
  "size": 0
}

Result

{

"took" : 16,

"timed_out" : false,

"_shards" : {

"total" : 1,

"successful" : 1,

"skipped" : 0,

"failed" : 0

},

"hits" : {

"total" : {

"value" : 1000,

"relation" : "eq"

},

"max_score" : null,

"hits" : [ ]

},

"aggregations" : {

"ageAggs" : {

"doc_count_error_upper_bound" : 0,

"sum_other_doc_count" : 879,

"buckets" : [

{

"key" : 31,

"doc_count" : 61,

"genderAggs" : {

"doc_count_error_upper_bound" : 0,

"sum_other_doc_count" : 0,

"buckets" : [

{

"key" : "M",

"doc_count" : 35,

"balabceAvg" : {

"value" : 29565.628571428573

}

},

{

"key" : "F",

"doc_count" : 26,

"balabceAvg" : {

"value" : 26626.576923076922

}

}

]

},

"balanceAvg" : {

"value" : 28312.918032786885

}

},

{

"key" : 39,

"doc_count" : 60,

"genderAggs" : {

"doc_count_error_upper_bound" : 0,

"sum_other_doc_count" : 0,

"buckets" : [

{

"key" : "F",

"doc_count" : 38,

"balabceAvg" : {

"value" : 26348.684210526317

}

},

{

"key" : "M",

"doc_count" : 22,

"balabceAvg" : {

"value" : 23405.68181818182

}

}

]

},

"balanceAvg" : {

"value" : 25269.583333333332

}

}

]

}

}

}

3. Mapping

1) Field types

https://www.elastic.co/guide/en/elasticsearch/reference/7.x/mapping-types.html

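Core types

Complex types

Geo types

Specialized types

Core data types

(1) Strings

text: for full-text indexing; the value is split into terms by an analyzer, and searches are analyzed the same way before matching

keyword: not analyzed; a search must match the complete value

(2) Numeric

Integers: byte, short, integer, long

Floating point: float, half_float, scaled_float, double

(3) Date: date

(4) Ranges

integer_range, long_range, float_range, double_range, date_range

In range bounds, gt means greater than, lt means less than, and a trailing e (gte, lte) means "or equal to".

A document matches when the range stored in its range field (for example, a field named age_limit) contains the queried value.

(5) Boolean

boolean

(6) Binary

binary: the value is treated as a Base64-encoded string; by default it is not stored and not searchable

Complex data types

(1) Object

object: an object can contain nested objects.

(2) Array

Array (not a dedicated type: any field can hold several values of the same type)

Nested type

nested: for arrays of JSON objects

2) Mapping

A mapping defines how a document and the fields it contains are stored and indexed. For example, a mapping is used to define:

- which string fields should be treated as full-text fields;

- which fields contain numbers, dates, or geolocations;

- whether all fields of the document are indexed together (the _all setting of older versions; removed in 7.x);

- the format of dates;

- custom rules that control the mapping of dynamically added fields.

3) Creating a mapping

Create an index and specify its mapping: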

PUT /my_index

{

"mappings": {

"properties": {

"age": {

"type": "integer"

},

"email": {

"type": "keyword" # exact-value field, not analyzed

},

"name": {

"type": "text" # full-text field: analyzed when saved and when searched

}

}

}

}

View the mapping

GET /my_index

4) Adding a new field mapping

PUT /my_index/_mapping

{

"properties": {

"employee-id": {

"type": "keyword",

"index": false # the field is not indexed, so it cannot be searched

}

}

}

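Here "index": false means the new field cannot be searched; it is only kept as redundant data.

5) Updating a mapping

The mapping of an existing field cannot be changed. To change it, create a new index with the correct mapping and migrate the data.

6) Data migration

First create new_twitters with the correct mapping, then migrate the data as follows.

For 6.0 and later:

POST _reindex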

{

"source":{

"index":"twitter"

},

"dest":{

"index":"new_twitters"

}

}

For older versions:

POST _reindex

{

"source":{

"index":"twitter",

"type":"twitter" // the type; used by older versions, deprecated in 7.x

},

"dest":{

"index":"new_twitters"

}

}

Example: the documents were indexed with the type account; newer versions no longer use types, so we remove it.

GET /bank/_search

{

"took" : 0,

"timed_out" : false,

"_shards" : {

"total" : 1,

"successful" : 1,

"skipped" : 0,

"failed" : 0

},

"hits" : {

"total" : {

"value" : 1000,

"relation" : "eq"

},

"max_score" : 1.0,

"hits" : [

{

"_index" : "bank",

"_type" : "account", // the old type is account; newer versions no longer use types, so it is removed below

"_id" : "1",

"_score" : 1.0,

"_source" : {

"account_number" : 1,

"balance" : 39225,

"firstname" : "Amber",

"lastname" : "Duke",

"age" : 32,

"gender" : "M",

"address" : "880 Holmes Lane",

"employer" : "Pyrami",

"email" : "amberduke@pyrami.com",

"city" : "Brogan",

"state" : "IL"

}

},

...

GET /bank/_mapping

returns

"age":{"type":"long"}

We want to change age to integer.

First create the new index:

PUT /newbank

{

"mappings": {

"properties": {

"account_number": {

"type": "long"

},

"address": {

"type": "text"

},

"age": {

"type": "integer"

},

"balance": {

"type": "long"

},

"city": {

"type": "keyword"

},

"email": {

"type": "keyword"

},

"employer": {

"type": "keyword"

},

"firstname": {

"type": "text"

},

"gender": {

"type": "keyword"

},

"lastname": {

"type": "text",

"fields": {

"keyword": {

"type": "keyword",

"ignore_above": 256

}

}

},

"state": {

"type": "keyword"

}

}

}

}

View the mapping of newbank:

GET /newbank/_mapping

The mapping type of age is now integer.

"age":{"type":"integer"}

Migrate the data from bank to newbank:

POST _reindex

{

"source": {

"index": "bank",

"type": "account"

},

"dest": {

"index": "newbank"

}

}

Output:

#! Deprecation: [types removal] Specifying types in reindex requests is deprecated.

{

"took" : 768,

"timed_out" : false,

"total" : 1000,

"updated" : 0,

"created" : 1000,

"deleted" : 0,

"batches" : 1,

"version_conflicts" : 0,

"noops" : 0,

"retries" : {

"bulk" : 0,

"search" : 0

},

"throttled_millis" : 0,

"requests_per_second" : -1.0,

"throttled_until_millis" : 0,

"failures" : [ ]

}

View the data in newbank:

GET /newbank/_search

Output:

"hits" : {

"total" : {

"value" : 1000,

"relation" : "eq"

},

"max_score" : 1.0,

"hits" : [

{

"_index" : "newbank",

"_type" : "_doc", # the custom type is gone; _doc is the default

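4. Analysis (tokenization)

A tokenizer receives a stream of characters, splits it into individual tokens (usually individual words), and outputs a stream of tokens. For example, the whitespace tokenizer splits text whenever it sees whitespace: it turns "Quick brown fox!" into [Quick, brown, fox!]. The tokenizer also records the order or position of each term (used for phrase and word-proximity queries) and the start and end character offsets of the original word that the term represents (used for highlighting search results). Elasticsearch provides many built-in tokenizers, which can be used to build custom analyzers.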

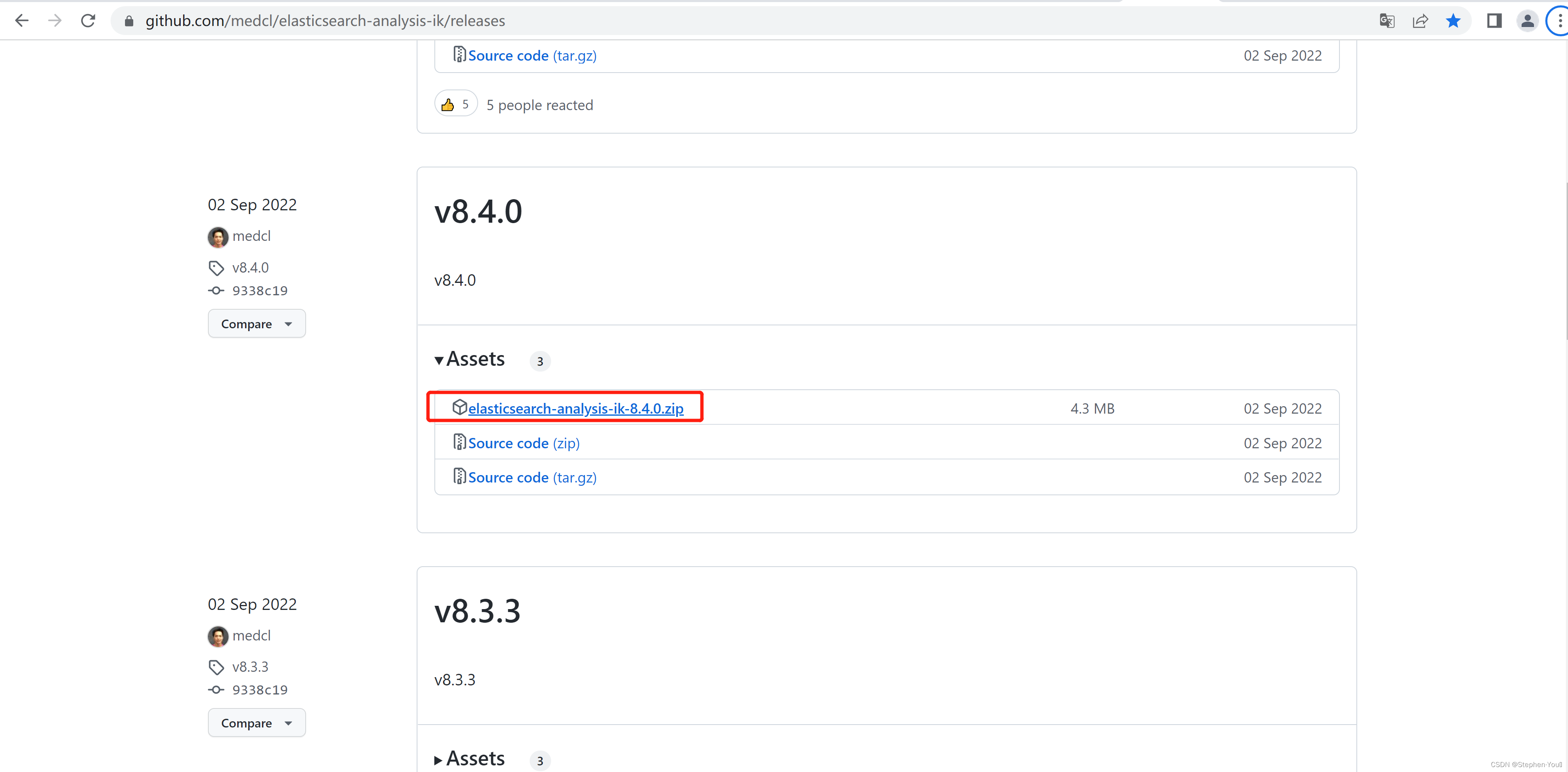

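1) Installing the ik analyzer

All languages are analyzed with the Standard Analyzer by default, which handles Chinese poorly. A Chinese analyzer therefore has to be installed.

When Elasticsearch was installed earlier, the container directory /usr/share/elasticsearch/plugins was mapped to /usr/local/elasticsearch/plugins on the host, so the easiest approach is to download elasticsearch-analysis-ik-7.4.2.zip and unzip it into a directory named ik. After installation, restart the elasticsearch container.

https://github.com/medcl/elasticsearch-analysis-ik/releases/download/v7.4.2/elasticsearch-analysis-ik-7.4.2.zip

unzip the downloaded file

rm -rf *.zip

mv elasticsearch/ ik

To confirm that the analyzer is installed:

cd ../bin

elasticsearch-plugin list: lists the installed plugins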

2) Testing the analyzer

Using the default analyzer:

GET _analyze

{

"text":"我是中国人"

}

Observe the result:

{

"tokens" : [

{

"token" : "我",

"start_offset" : 0,

"end_offset" : 1,

"type" : "<IDEOGRAPHIC>",

"position" : 0

},

{

"token" : "是",

"start_offset" : 1,

"end_offset" : 2,

"type" : "<IDEOGRAPHIC>",

"position" : 1

},

{

"token" : "中",

"start_offset" : 2,

"end_offset" : 3,

"type" : "<IDEOGRAPHIC>",

"position" : 2

},

{

"token" : "国",

"start_offset" : 3,

"end_offset" : 4,

"type" : "<IDEOGRAPHIC>",

"position" : 3

},

{

"token" : "人",

"start_offset" : 4,

"end_offset" : 5,

"type" : "<IDEOGRAPHIC>",

"position" : 4

}

]

}

GET _analyze

{

"analyzer": "ik_smart",

"text":"我是中国人"

}

Output:

{

"tokens" : [

{

"token" : "我",

"start_offset" : 0,

"end_offset" : 1,

"type" : "CN_CHAR",

"position" : 0

},

{

"token" : "是",

"start_offset" : 1,

"end_offset" : 2,

"type" : "CN_CHAR",

"position" : 1

},

{

"token" : "中国人",

"start_offset" : 2,

"end_offset" : 5,

"type" : "CN_WORD",

"position" : 2

}

]

}

GET _analyze

{

"analyzer": "ik_max_word",

"text":"我是中国人"

}

Output:

{

"tokens" : [

{

"token" : "我",

"start_offset" : 0,

"end_offset" : 1,

"type" : "CN_CHAR",

"position" : 0

},

{

"token" : "是",

"start_offset" : 1,

"end_offset" : 2,

"type" : "CN_CHAR",

"position" : 1

},

{

"token" : "中国人",

"start_offset" : 2,

"end_offset" : 5,

"type" : "CN_WORD",

"position" : 2

},

{

"token" : "中国",

"start_offset" : 2,

"end_offset" : 4,

"type" : "CN_WORD",

"position" : 3

},

{

"token" : "国人",

"start_offset" : 3,

"end_offset" : 5,

"type" : "CN_WORD",

"position" : 4

}

]

}

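Different analyzers clearly tokenize differently, so from now on an index should not rely on the default mapping; create the mapping by hand so that the analyzer can be chosen.

3) Custom dictionary

For example, we want 尚硅谷 to be treated as a single word.

Edit IKAnalyzer.cfg.xml in /usr/local/elasticsearch/plugins/ik/config: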

[root@localhost ik]# cd /usr/local/elasticsearch/plugins/ik/config/

[root@localhost config]# ls

extra_main.dic extra_single_word_full.dic extra_stopword.dic main.dic quantifier.dic suffix.dic

extra_single_word.dic extra_single_word_low_freq.dic IKAnalyzer.cfg.xml preposition.dic stopword.dic surname.dic

[root@localhost config]# vi IKAnalyzer.cfg.xml

<?xml version="1.0" encoding="UTF-8"?>

<!DOCTYPE properties SYSTEM "http://java.sun.com/dtd/properties.dtd">

<properties>

<comment>IK Analyzer 扩展配置</comment>

<!--用户可以在这里配置自己的扩展字典 -->

<entry key="ext_dict"></entry>

<!--用户可以在这里配置自己的扩展停止词字典-->

<entry key="ext_stopwords"></entry>

<!--用户可以在这里配置远程扩展字典 -->

<entry key="remote_ext_dict">http://192.168.120.21/es/fenci.txt</entry>

<!--用户可以在这里配置远程扩展停止词字典-->

<!-- <entry key="remote_ext_stopwords">words_location</entry> -->

</properties>

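Restart ES

After the change, the elasticsearch container must be restarted; otherwise the change does not take effect.

docker restart elasticsearch

After the update, ES applies the new dictionary only to newly indexed data; existing data is not re-analyzed. To re-analyze existing data, run: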

POST my_index/_update_by_query?conflicts=proceed

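III. ElasticSearch-Rest-Client

1) Port 9300: TCP

- spring-data-elasticsearch: transport-api.jar;

- transport-api.jar differs between Spring Boot versions and may not match the ES version

- no longer recommended as of 7.x, and to be removed after 8

2) Port 9200: HTTP

- JestClient: unofficial, slow to update

- RestTemplate: sends plain HTTP requests; many ES operations have to be wrapped by hand, which is tedious

- HttpClient: same as above

3) Elasticsearch-Rest-Client: the official RestClient; it wraps the ES operations, has a clearly layered API, and is easy to pick up

The final choice is Elasticsearch-Rest-Client (elasticsearch-rest-high-level-client) https://www.elastic.co/guide/en/elasticsearch/client/java-rest/current/java-rest-high.html

1. Integrating ElasticSearch with Spring Boot

Create a new Maven project

Add the dependency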

<dependency>

<groupId>org.elasticsearch.client</groupId>

<artifactId>elasticsearch-rest-high-level-client</artifactId>

<version>7.4.2</version>

</dependency>

spring-boot-dependencies pins the ES version to 6.4.3, which has to be overridden (the Spring Boot version here is 2.1.8.RELEASE):

<properties>

<java.version>1.8</java.version>

<elasticsearch.version>7.4.2</elasticsearch.version>

</properties>

Write the configuration

Add the common dependency

<dependency>

<groupId>com.clown.clownmall</groupId>

<artifactId>clownmall-common</artifactId>

<version>0.0.1-SNAPSHOT</version>

</dependency>

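Enable service registration and discovery

Add the annotation to the application class

@EnableDiscoveryClient // enable service registration and discovery

Configure the registry

spring.cloud.nacos.discovery.server-addr=127.0.0.1:8848

Application name

spring.application.name=clownmall-search

Create the configuration class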

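import org.apache.http.HttpHost;
import org.elasticsearch.client.RequestOptions;
import org.elasticsearch.client.RestClient;
import org.elasticsearch.client.RestHighLevelClient;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;

@Configuration
public class ClownmallElasticSearchConfig {

    // Shared request options, referenced by the tests below
    public static final RequestOptions COMMON_OPTIONS;

    static {
        RequestOptions.Builder builder = RequestOptions.DEFAULT.toBuilder();
        COMMON_OPTIONS = builder.build();
    }

    // Registered as a bean so that the client can be injected with @Autowired
    @Bean
    public RestHighLevelClient esRestClient() {
        return new RestHighLevelClient(
                RestClient.builder(
                        new HttpHost("192.168.120.21", 9200, "http")));
    }
}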

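API reference: https://www.elastic.co/guide/en/elasticsearch/client/java-rest/current/java-rest-high.html

Request options: for example, if ES has security rules enabled and every request needs a security header, the header can be set through RequestOptions.

The official documentation recommends creating RequestOptions as a single shared instance.

2. Usage

1) Storing data in ES

The API documentation lists several ways to supply the document; we use the first one, converting the object to a JSON string.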

/**
 * Test storing data in ES.
 * The same call also updates an existing document.
 */
@Test
public void indexData() throws IOException {
    // Set the index
    IndexRequest indexRequest = new IndexRequest("users");
    indexRequest.id("1"); // id of the document

    User user = new User();
    user.setUserName("zhangsan");
    user.setGender("男");
    user.setAge(18);

    // Convert the object to a JSON string
    String jsonString = JSON.toJSONString(user);

    // Set the content to save, specifying the data and its content type
    indexRequest.source(jsonString, XContentType.JSON);

    // Execute the operation (save)
    IndexResponse index = client.index(indexRequest, ClownmallElasticSearchConfig.COMMON_OPTIONS);

    // Extract the useful response data
    System.out.println(index);
}

@Data
class User {
    private String userName;
    private String gender;
    private Integer age;
}

Result

IndexResponse[index=users,type=_doc,id=1,version=1,result=created,seqNo=0,primaryTerm=1,shards={"total":2,"successful":1,"failed":0}]

2) A complex search

@RunWith(SpringRunner.class)
@SpringBootTest
public class ClownmallSearchApplicationTests {

    @Autowired
    private RestHighLevelClient client;

    /**
     * Test a complex query.
     * @throws IOException
     */
    @Test
    public void searchData() throws IOException {
        // 1. Create the search request
        SearchRequest searchRequest = new SearchRequest();
        // Specify the index
        searchRequest.indices("bank");
        // Specify the DSL, i.e. the search conditions;
        // SearchSourceBuilder wraps those conditions
        SearchSourceBuilder sourceBuilder = new SearchSourceBuilder();
        // 1.1) Build the query condition
        // sourceBuilder.query();
        // sourceBuilder.from();
        // sourceBuilder.size();
        sourceBuilder.query(QueryBuilders.matchQuery("address", "mill"));
        // 1.2) Aggregate by the distribution of age values
        TermsAggregationBuilder ageAgg = AggregationBuilders.terms("ageAgg").field("age").size(10);
        sourceBuilder.aggregation(ageAgg);
        // 1.3) Aggregate the average balance
        AvgAggregationBuilder balanceAvg = AggregationBuilders.avg("balanceAvg").field("balance");
        sourceBuilder.aggregation(balanceAvg);
        // Print the search conditions
        System.out.println(sourceBuilder.toString());
        searchRequest.source(sourceBuilder);
        // 2. Execute the search
        SearchResponse searchResponse = client.search(searchRequest, ClownmallElasticSearchConfig.COMMON_OPTIONS);
        // 3. Analyze the result in searchResponse
        System.out.println(searchResponse.toString());
    }
}
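The commented-out from() and size() calls in the query above are where paging would be configured: from is the zero-based offset of the first hit to return and size is the number of hits per page. A minimal sketch of converting a page number into that offset follows; PagingSketch and its method are hypothetical helpers, not part of the ES client.

```java
final class PagingSketch {

    /**
     * Converts a 1-based page number into the zero-based offset
     * that would be passed to SearchSourceBuilder.from(int).
     */
    static int fromOffset(int pageNumber, int pageSize) {
        if (pageNumber < 1 || pageSize < 1) {
            throw new IllegalArgumentException("pageNumber and pageSize must be >= 1");
        }
        return (pageNumber - 1) * pageSize;
    }

    public static void main(String[] args) {
        int pageSize = 10;
        // For page 3 the builder calls would be from(fromOffset(3, pageSize)) and size(pageSize).
        System.out.println(fromOffset(3, pageSize));
    }
}
```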

Analyzing the result

/**
 * Test a complex query.
 * @throws IOException
 */
@Test
public void searchData() throws IOException {
    // 1. Create the search request
    SearchRequest searchRequest = new SearchRequest();
    // Specify the index
    searchRequest.indices("bank");
    // Specify the DSL, i.e. the search conditions;
    // SearchSourceBuilder wraps those conditions
    SearchSourceBuilder sourceBuilder = new SearchSourceBuilder();
    // 1.1) Build the query condition
    // sourceBuilder.query();
    // sourceBuilder.from();
    // sourceBuilder.size();
    sourceBuilder.query(QueryBuilders.matchQuery("address", "mill"));
    // 1.2) Aggregate by the distribution of age values
    TermsAggregationBuilder ageAgg = AggregationBuilders.terms("ageAgg").field("age").size(10);
    sourceBuilder.aggregation(ageAgg);
    // 1.3) Aggregate the average balance
    AvgAggregationBuilder balanceAvg = AggregationBuilders.avg("balanceAvg").field("balance");
    sourceBuilder.aggregation(balanceAvg);
    // Print the search conditions
    System.out.println(sourceBuilder.toString());
    searchRequest.source(sourceBuilder);
    // 2. Execute the search
    SearchResponse searchResponse = client.search(searchRequest, ClownmallElasticSearchConfig.COMMON_OPTIONS);
    // 3. Analyze the result in searchResponse
    System.out.println(searchResponse.toString());
    // 3.1) Read the documents that were found
    SearchHits hits = searchResponse.getHits();
    for (SearchHit hit : hits) {
        // hit.getIndex();hit.getType();hit.getId();hit.getScore();
        String string = hit.getSourceAsString();
        Account account = JSON.parseObject(string, Account.class);
        System.out.println(account);
    }
    // 3.2) Read the aggregation results
    Aggregations aggregations = searchResponse.getAggregations();
    // Age distribution
    Terms ageAgg1 = aggregations.get("ageAgg");
    for (Terms.Bucket bucket : ageAgg1.getBuckets()) {
        String keyAsString = bucket.getKeyAsString();
        System.out.println("Age: " + keyAsString + "==>" + bucket.getDocCount());
    }
    // Average balance
    Avg balanceAvg1 = aggregations.get("balanceAvg");
    String valueAsString = balanceAvg1.getValueAsString();
    System.out.println("Average balance: " + valueAsString);
}

@Data
@ToString
static class Account {
    private int account_number;
    private int balance;
    private String firstname;
    private String lastname;
    private int age;
    private String gender;
    private String address;
    private String employer;
    private String email;
    private String city;
    private String state;
}
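To make clearer what the two aggregations compute, the sketch below reproduces them locally with Java streams over a small in-memory list: a count per distinct age (what the terms buckets report) and the mean of balance (what the avg aggregation reports). The class LocalAggregationSketch and the sample values are hypothetical. One difference to keep in mind is that a terms aggregation orders buckets by document count by default, whereas this sketch simply sorts by age.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;
import java.util.stream.Collectors;

final class LocalAggregationSketch {

    /** Trimmed-down stand-in for the Account class used in the test. */
    static final class Account {
        final int age;
        final int balance;

        Account(int age, int balance) {
            this.age = age;
            this.balance = balance;
        }
    }

    /** Local analogue of the "ageAgg" terms aggregation: documents per age. */
    static Map<Integer, Long> countByAge(List<Account> accounts) {
        return accounts.stream().collect(
                Collectors.groupingBy((Account a) -> a.age, TreeMap::new, Collectors.counting()));
    }

    /** Local analogue of the "balanceAvg" avg aggregation. */
    static double averageBalance(List<Account> accounts) {
        return accounts.stream().mapToInt(a -> a.balance).average().orElse(Double.NaN);
    }

    public static void main(String[] args) {
        // Made-up sample data, not taken from the bank index.
        List<Account> sample = Arrays.asList(
                new Account(38, 100), new Account(38, 300), new Account(28, 200));
        countByAge(sample).forEach((age, count) -> System.out.println("Age: " + age + "==>" + count));
        System.out.println("Average balance: " + averageBalance(sample));
    }
}
```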

Full test class code

package com.clown.clownmall.search;

import com.alibaba.fastjson.JSON;
import com.clown.clownmall.search.config.ClownmallElasticSearchConfig;
import lombok.Data;
import lombok.ToString;
import org.elasticsearch.action.index.IndexRequest;
import org.elasticsearch.action.index.IndexResponse;
import org.elasticsearch.action.search.SearchRequest;
import org.elasticsearch.action.search.SearchResponse;
import org.elasticsearch.client.RestHighLevelClient;
import org.elasticsearch.common.xcontent.XContentType;
import org.elasticsearch.index.query.QueryBuilders;
import org.elasticsearch.search.SearchHit;
import org.elasticsearch.search.SearchHits;
import org.elasticsearch.search.aggregations.AggregationBuilders;
import org.elasticsearch.search.aggregations.Aggregations;
import org.elasticsearch.search.aggregations.bucket.terms.Terms;
import org.elasticsearch.search.aggregations.bucket.terms.TermsAggregationBuilder;
import org.elasticsearch.search.aggregations.metrics.Avg;
import org.elasticsearch.search.aggregations.metrics.AvgAggregationBuilder;
import org.elasticsearch.search.builder.SearchSourceBuilder;
import org.junit.Test;
import org.junit.runner.RunWith;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.boot.test.context.SpringBootTest;
import org.springframework.test.context.junit4.SpringRunner;

import java.io.IOException;

@RunWith(SpringRunner.class)
@SpringBootTest
public class ClownmallSearchApplicationTests {

    @Autowired
    private RestHighLevelClient client;

    /**
     * Test a complex query.
     *
     * @throws IOException
     */
    @Test
    public void searchData() throws IOException {
        // 1. Create the search request
        SearchRequest searchRequest = new SearchRequest();
        // Specify the index
        searchRequest.indices("bank");
        // Specify the DSL, i.e. the search conditions;
        // SearchSourceBuilder wraps those conditions
        SearchSourceBuilder sourceBuilder = new SearchSourceBuilder();
        // 1.1) Build the query condition
        // sourceBuilder.query();
        // sourceBuilder.from();
        // sourceBuilder.size();
        sourceBuilder.query(QueryBuilders.matchQuery("address", "mill"));
        // 1.2) Aggregate by the distribution of age values
        TermsAggregationBuilder ageAgg = AggregationBuilders.terms("ageAgg").field("age").size(10);
        sourceBuilder.aggregation(ageAgg);
        // 1.3) Aggregate the average balance
        AvgAggregationBuilder balanceAvg = AggregationBuilders.avg("balanceAvg").field("balance");
        sourceBuilder.aggregation(balanceAvg);
        // Print the search conditions
        System.out.println(sourceBuilder.toString());
        searchRequest.source(sourceBuilder);
        // 2. Execute the search
        SearchResponse searchResponse = client.search(searchRequest, ClownmallElasticSearchConfig.COMMON_OPTIONS);
        // 3. Analyze the result in searchResponse
        System.out.println(searchResponse.toString());
        // 3.1) Read the documents that were found
        SearchHits hits = searchResponse.getHits();
        for (SearchHit hit : hits) {
            // hit.getIndex();hit.getType();hit.getId();hit.getScore();
            String string = hit.getSourceAsString();
            Account account = JSON.parseObject(string, Account.class);
            System.out.println(account);
        }
        // 3.2) Read the aggregation results
        Aggregations aggregations = searchResponse.getAggregations();
        // Age distribution
        Terms ageAgg1 = aggregations.get("ageAgg");
        for (Terms.Bucket bucket : ageAgg1.getBuckets()) {
            String keyAsString = bucket.getKeyAsString();
            System.out.println("Age: " + keyAsString + "==>" + bucket.getDocCount());
        }
        // Average balance
        Avg balanceAvg1 = aggregations.get("balanceAvg");
        String valueAsString = balanceAvg1.getValueAsString();
        System.out.println("Average balance: " + valueAsString);
    }

    @Data
    @ToString
    static class Account {
        private int account_number;
        private int balance;
        private String firstname;
        private String lastname;
        private int age;
        private String gender;
        private String address;
        private String employer;
        private String email;
        private String city;
        private String state;
    }

    /**
     * Test storing data in ES.
     * The same call also works as an update.
     */
    @Test
    public void indexData() throws IOException {
        // Target index
        IndexRequest indexRequest = new IndexRequest("users");
        indexRequest.id("1"); // document id
        User user = new User();
        user.setUserName("zhangsan");
        user.setGender("男"); // "male"
        user.setAge(18);
        // Convert the object to a JSON string
        String jsonString = JSON.toJSONString(user);
        // Set the content to save, passing the data and its content type
        indexRequest.source(jsonString, XContentType.JSON);
        // Execute the operation (save)
        IndexResponse index = client.index(indexRequest, ClownmallElasticSearchConfig.COMMON_OPTIONS);
        // Extract the useful response data
        System.out.println(index);
    }

    @Data
    class User {
        private String userName;
        private String gender;
        private Integer age;
    }

    @Test
    public void contextLoads() {
        System.out.println(client);
    }
}

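IV. Appendix: Installing Nginx

Start a throwaway nginx instance, only to copy its configuration out:

docker run -p 80:80 --name nginx -d nginx:1.10

Copy the configuration files from the container to the host so that they end up under /usr/local/nginx/conf/. All of the following commands are run from the /usr/local directory. One way to do the copy, assuming the container name nginx used above, is:

docker cp nginx:/etc/nginx .

Rename the copied nginx folder to conf:

mv nginx conf

Create a new nginx folder:

mkdir nginx

Move conf into nginx:

mv conf nginx

Stop the original container:

docker stop nginx

Remove the original container:

docker rm nginx

Create the new Nginx container with the following command: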

docker run -p 80:80 --name nginx \
-v /usr/local/nginx/html:/usr/share/nginx/html \
-v /usr/local/nginx/logs:/var/log/nginx \
-v /usr/local/nginx/conf:/etc/nginx \
-d nginx:1.10

Create your own ES dictionary in nginx's html folder:

[root@localhost local]# cd /usr/local/nginx/
[root@localhost nginx]# ls
conf html logs
[root@localhost nginx]# cd html
[root@localhost html]# ls
[root@localhost html]# vi index.html
[root@localhost html]# ls
index.html
[root@localhost html]# mkdir es
[root@localhost html]# ls
es index.html
[root@localhost html]# cd es
[root@localhost es]# ls
[root@localhost es]# vi fenci.txt
[root@localhost es]#
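The session above creates fenci.txt by hand in vi. As a minimal sketch of what the file might contain, the snippet below writes and reads back a dictionary with one word per line in UTF-8, which is the layout the ik analyzer is assumed to expect for custom dictionaries. DictionaryFileSketch, the temporary directory, and the sample words are all hypothetical; on the server the file would live under /usr/local/nginx/html/es/.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.Arrays;
import java.util.List;

final class DictionaryFileSketch {

    /** Writes the words to fenci.txt in the given directory, one word per line, UTF-8. */
    static Path writeDictionary(Path dir, List<String> words) throws IOException {
        Path file = dir.resolve("fenci.txt");
        return Files.write(file, words, StandardCharsets.UTF_8);
    }

    /** Reads the dictionary back as a list of words. */
    static List<String> readDictionary(Path file) throws IOException {
        return Files.readAllLines(file, StandardCharsets.UTF_8);
    }

    /** Writes the words to a temporary directory and reads them back. */
    static List<String> roundTrip(List<String> words) {
        try {
            Path dir = Files.createTempDirectory("es-dict");
            return readDictionary(writeDictionary(dir, words));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        // Sample words are placeholders for whatever terms you want ik to treat as single tokens.
        System.out.println(roundTrip(Arrays.asList("微服务", "云原生")));
    }
}
```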
