1. Basic principle

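The steepest descent method starts from an initial point and repeatedly moves, from the current point, in the direction along which the function value changes fastest, until it reaches the optimum. This raises two questions: (1) which direction to move in; (2) how far to move.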

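At any point, the directional derivative is largest along the gradient, so the function increases fastest in the gradient direction and decreases fastest in the opposite direction. We therefore update the current position along the gradient (for a max problem) or along the negative gradient (for a min problem). As for how far to move: at each iteration the step length is chosen by a one-dimensional search along that direction, picking the step that gives the best function value on that line. The iteration is repeated until the iterates converge, that is, until the gradient is close to zero. The converged point is a stationary point, and for a convex min problem it is the optimum we are looking for.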
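For a min problem, the two choices above are commonly written in the compact form below. This is the textbook form of the method with an exact line search; the symbols $x_k$ (current iterate), $t_k$ (step length) and $\varepsilon$ (tolerance) are notation introduced here for illustration and do not appear in the original text.

$$
x_{k+1} = x_k - t_k \nabla f(x_k), \qquad
t_k = \arg\min_{t \ge 0} f\bigl(x_k - t\,\nabla f(x_k)\bigr), \qquad
\text{stop when } \|\nabla f(x_k)\| < \varepsilon .
$$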

2. Python implementation

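Below we use the steepest descent method to find the minimum of the function

$f(x_1,x_2)=x_1^2+2x_2^2-2x_1x_2-2x_2$

with the initial point (0,0).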
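To make the procedure concrete, here is the first iteration worked by hand, taking the objective exactly as it is coded in the implementations, $f(x_1,x_2)=x_1^2+2x_2^2-2x_1x_2-2x_2$. The names $\varphi$ and $x^{(1)}$ are notation assumed here for the one-dimensional restriction and the first iterate.

$$
\begin{aligned}
\nabla f(x_1,x_2) &= (2x_1-2x_2,\; 4x_2-2x_1-2), & \nabla f(0,0) &= (0,\,-2),\\
\varphi(t) &= f\bigl((0,0)-t\,(0,-2)\bigr) = f(0,\,2t) = 8t^2-4t, & \varphi'(t) &= 16t-4 = 0 \;\Rightarrow\; t=\tfrac14,\\
x^{(1)} &= \bigl(0,\,\tfrac12\bigr), & f\bigl(x^{(1)}\bigr) &= -\tfrac12 .
\end{aligned}
$$

Each later iteration repeats the same three steps from the new point: evaluate the gradient, minimize along its negative, and move.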

Two implementations are given below:

from sympy import symbols, diff, solve

x1 = symbols('x1')
x2 = symbols('x2')
t = symbols('t')  # step length along the search direction

fun = x1**2 + 2*x2**2 - 2*x1*x2 - 2*x2
grad1 = diff(fun, x1)
grad2 = diff(fun, x2)

MaxIter = 100      # upper bound on the number of iterations
epsilon = 0.0001   # stop when |grad1| + |grad2| < epsilon
iter_cnt = 0
current_step_size = 100

x1_value = 0
x2_value = 0

grad1_value = float(grad1.subs({x1: x1_value, x2: x2_value}).evalf())
grad2_value = float(grad2.subs({x1: x1_value, x2: x2_value}).evalf())
current_obj = float(fun.subs({x1: x1_value, x2: x2_value}).evalf())

print('iterCnt:%2d cur_point(%3.2f, %3.2f) cur_obj:%5.4f grad_1:%5.4f grad_2:%5.4f'
      % (iter_cnt, x1_value, x2_value, current_obj, grad1_value, grad2_value))

while iter_cnt < MaxIter and abs(grad1_value) + abs(grad2_value) >= epsilon:
    iter_cnt += 1

    # Exact line search: minimize fun along the negative gradient
    x1_updated = x1_value - grad1_value * t
    x2_updated = x2_value - grad2_value * t
    Fun_updated = fun.subs({x1: x1_updated, x2: x2_updated})
    grad_t = diff(Fun_updated, t)
    t_value = solve(grad_t, t)[0]  # solve grad_t == 0

    # Move to the new point
    x1_value = float(x1_value - t_value * grad1_value)
    x2_value = float(x2_value - t_value * grad2_value)

    # Re-evaluate the gradient and the objective at the new point,
    # so the next iteration searches along the current gradient
    grad1_value = float(grad1.subs({x1: x1_value, x2: x2_value}).evalf())
    grad2_value = float(grad2.subs({x1: x1_value, x2: x2_value}).evalf())
    current_obj = float(fun.subs({x1: x1_value, x2: x2_value}).evalf())
    current_step_size = t_value

    print('iterCnt:%2d cur_point(%3.2f, %3.2f) cur_obj:%5.4f grad_1:%5.4f grad_2:%5.4f'
          % (iter_cnt, x1_value, x2_value, current_obj, grad1_value, grad2_value))
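As a cross-check on the symbolic line search, note that this objective is quadratic, so it can be written as $f(x)=\tfrac12 x^\top A x - b^\top x$ and the exact step has the closed form $t = g^\top g / (g^\top A g)$ with $g = Ax - b$. The sketch below is a minimal NumPy version under that assumption. The names `A`, `b`, `f` and `steepest_descent_quadratic`, and the tolerance values, are illustrative choices and are not part of the original code.

```python
import numpy as np

# Assumed matrix form of the objective: f(x) = 0.5 * x^T A x - b^T x,
# which expands to x1^2 + 2*x2^2 - 2*x1*x2 - 2*x2.
A = np.array([[2.0, -2.0],
              [-2.0, 4.0]])
b = np.array([0.0, 2.0])


def f(x):
    """Evaluate the quadratic objective at the point x."""
    return 0.5 * x @ A @ x - b @ x


def steepest_descent_quadratic(x0, tol=1e-8, max_iter=1000):
    """Steepest descent with the closed-form exact step for a quadratic."""
    x = np.asarray(x0, dtype=float)
    for k in range(max_iter):
        g = A @ x - b                  # gradient at the current point
        if np.linalg.norm(g) < tol:    # stop once the gradient is small
            return x, k
        step = (g @ g) / (g @ A @ g)   # exact line-search step length
        x = x - step * g               # move along the negative gradient
    return x, max_iter


x_min, n_iter = steepest_descent_quadratic([0.0, 0.0])
print(x_min, f(x_min), n_iter)
```

The closed form avoids calling a symbolic solver in every iteration, but it only applies to quadratic objectives. For a general function the step still has to come from a one-dimensional search.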

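import math

import numpy as np
import matplotlib.pyplot as plt
import mpl_toolkits.axisartist as axisartist
from sympy import symbols, diff, solve

x1, x2, t = symbols('x1, x2, t')


def func():
    # Objective function as a symbolic expression
    return pow(x1, 2) + 2 * pow(x2, 2) - 2 * x1 * x2 - 2 * x2


def grad(data):
    f = func()
    grad_vec = [diff(f, x1), diff(f, x2)]  # partial derivatives, i.e. the gradient vector
    grad_value = []
    for item in grad_vec:
        # evaluate each partial derivative at the point data
        grad_value.append(item.subs(x1, data[0]).subs(x2, data[1]))
    return grad_value


def grad_len(grad):
    # Euclidean norm of a two-component gradient
    vec_len = math.sqrt(pow(grad[0], 2) + pow(grad[1], 2))
    return vec_len


def zhudian(f):
    # "zhudian" means stationary point: solve df/dt = 0 for the step length t
    t_diff = diff(f, t)
    t_min = solve(t_diff, t)[0]
    return t_min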

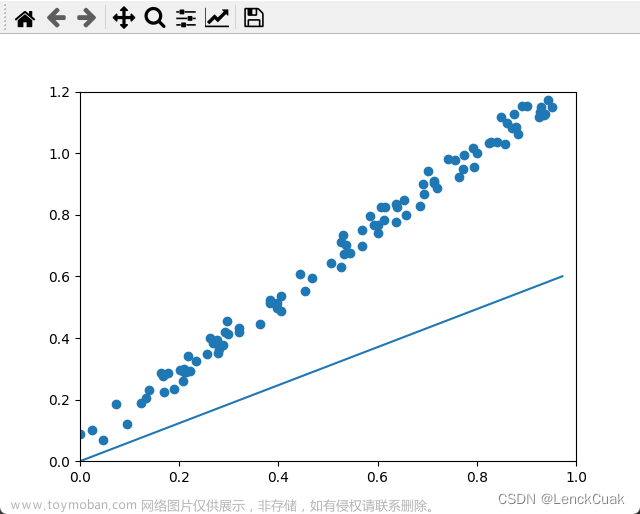

def main(X0, theta):
    f = func()
    grad_vec = grad(X0)
    grad_length = grad_len(grad_vec)  # norm of the gradient vector
    k = 0
    data_x = [float(X0[0])]
    data_y = [float(X0[1])]
    while grad_length > theta:  # termination condition
        k += 1
        p = -np.array(grad_vec)  # search direction: negative gradient
        # Line search: restrict f to the ray X0 + t*p and minimize over t
        X = np.array(X0) + p * t
        t_func = f.subs(x1, X[0]).subs(x2, X[1])
        t_min = zhudian(t_func)
        X0 = np.array(X0) + p * t_min
        grad_vec = grad(X0)
        grad_length = grad_len(grad_vec)
        print('grad_length', grad_length)
        print('coordinates', float(X0[0]), float(X0[1]))
        data_x.append(float(X0[0]))
        data_y.append(float(X0[1]))
    print(k)

    # Plot the path of the iterates
    fig = plt.figure()
    ax = axisartist.Subplot(fig, 111)
    fig.add_axes(ax)
    ax.axis["bottom"].set_axisline_style("-|>", size=1.5)
    ax.axis["left"].set_axisline_style("->", size=1.5)
    ax.axis["top"].set_visible(False)
    ax.axis["right"].set_visible(False)
    plt.title(r'$Gradient \ method - steepest \ descent \ method$')
    plt.plot(data_x, data_y, color='r', label=r'$f(x_1,x_2)=x_1^2+2 \cdot x_2^2-2 \cdot x_1 \cdot x_2-2 \cdot x_2$')
    plt.legend()
    plt.scatter(1, 1, marker=(3, 1), c=2, s=100)  # mark the minimizer (1, 1)
    plt.grid()
    plt.xlabel(r'$x_1$', fontsize=20)
    plt.ylabel(r'$x_2$', fontsize=20)
    plt.show()


if __name__ == '__main__':
    # Initial iterate and gradient-norm threshold
    main([0, 0], 0.00001)

The result is that the function attains its minimum value of -1 at the point (1,1).
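The stated result can be checked independently of the iteration by solving the stationarity conditions symbolically and testing the Hessian. The snippet below is a small verification sketch that uses SymPy, as the implementations do. The variable names `stationary`, `f_min`, `H` and `is_pd` are introduced here for illustration.

```python
from sympy import symbols, diff, solve, hessian

x1, x2 = symbols('x1 x2')
fun = x1**2 + 2*x2**2 - 2*x1*x2 - 2*x2

# Stationary point: both partial derivatives vanish
stationary = solve([diff(fun, x1), diff(fun, x2)], [x1, x2], dict=True)[0]

# Objective value at the stationary point
f_min = fun.subs(stationary)

# A positive definite Hessian means the stationary point is a strict minimum
H = hessian(fun, (x1, x2))
is_pd = H.is_positive_definite

print(stationary, f_min, is_pd)
```

Because the Hessian is constant and positive definite, the function is strictly convex, so this stationary point is the unique global minimizer that the iteration approaches.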

Reference blog posts:

"Python实现最速下降法(The steepest descent method)详细案例" (A detailed example of implementing the steepest descent method in Python)

"Python梯度法——最速下降法" (The gradient method in Python: steepest descent)
