Problem

My environment is Hadoop 3.3.4, Java 17, and Spark 3.3.2.

1. Submitting a job fails after Spark and Hadoop have started

Once everything was up, I ran the following command in the console:

spark-submit --master yarn --deploy-mode cluster --class org.apache.spark.examples.SparkPi $SPARK_HOME/examples/jars/spark-examples_2.12-3.3.2.jar 100

It failed with the following output:

2023-03-21 17:45:04,392 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

2023-03-21 17:45:04,460 INFO client.DefaultNoHARMFailoverProxyProvider: Connecting to ResourceManager at master/192.168.186.141:8032

2023-03-21 17:45:04,968 INFO conf.Configuration: resource-types.xml not found

2023-03-21 17:45:04,968 INFO resource.ResourceUtils: Unable to find 'resource-types.xml'.

2023-03-21 17:45:04,980 INFO yarn.Client: Verifying our application has not requested more than the maximum memory capability of the cluster (8192 MB per container)

2023-03-21 17:45:04,981 INFO yarn.Client: Will allocate AM container, with 1408 MB memory including 384 MB overhead

2023-03-21 17:45:04,982 INFO yarn.Client: Setting up container launch context for our AM

2023-03-21 17:45:04,982 INFO yarn.Client: Setting up the launch environment for our AM container

2023-03-21 17:45:04,994 INFO yarn.Client: Preparing resources for our AM container

2023-03-21 17:45:05,019 WARN yarn.Client: Neither spark.yarn.jars nor spark.yarn.archive is set, falling back to uploading libraries under SPARK_HOME.

2023-03-21 17:45:05,626 INFO yarn.Client: Uploading resource file:/tmp/spark-300fccc5-6f53-48f7-b289-43938b5170d1/__spark_libs__10348349781677306272.zip -> hdfs://master:9000/user/root/.sparkStaging/application_1679387136817_0009/__spark_libs__10348349781677306272.zip

2023-03-21 17:45:07,612 INFO yarn.Client: Uploading resource file:/opt/spark/examples/jars/spark-examples_2.12-3.3.2.jar -> hdfs://master:9000/user/root/.sparkStaging/application_1679387136817_0009/spark-examples_2.12-3.3.2.jar

2023-03-21 17:45:07,817 INFO yarn.Client: Uploading resource file:/tmp/spark-300fccc5-6f53-48f7-b289-43938b5170d1/__spark_conf__14133738159966771512.zip -> hdfs://master:9000/user/root/.sparkStaging/application_1679387136817_0009/__spark_conf__.zip

2023-03-21 17:45:07,887 INFO spark.SecurityManager: Changing view acls to: root

2023-03-21 17:45:07,887 INFO spark.SecurityManager: Changing modify acls to: root

2023-03-21 17:45:07,887 INFO spark.SecurityManager: Changing view acls groups to:

2023-03-21 17:45:07,888 INFO spark.SecurityManager: Changing modify acls groups to:

2023-03-21 17:45:07,888 INFO spark.SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(root); groups with view permissions: Set(); users with modify permissions: Set(root); groups with modify permissions: Set()

2023-03-21 17:45:07,934 INFO yarn.Client: Submitting application application_1679387136817_0009 to ResourceManager

2023-03-21 17:45:07,972 INFO impl.YarnClientImpl: Submitted application application_1679387136817_0009

2023-03-21 17:45:08,976 INFO yarn.Client: Application report for application_1679387136817_0009 (state: ACCEPTED)

2023-03-21 17:45:08,978 INFO yarn.Client:

client token: N/A

diagnostics: AM container is launched, waiting for AM container to Register with RM

ApplicationMaster host: N/A

ApplicationMaster RPC port: -1

queue: default

start time: 1679391907944

final status: UNDEFINED

tracking URL: http://master:8088/proxy/application_1679387136817_0009/

user: root

2023-03-21 17:45:09,981 INFO yarn.Client: Application report for application_1679387136817_0009 (state: ACCEPTED)

2023-03-21 17:45:10,983 INFO yarn.Client: Application report for application_1679387136817_0009 (state: ACCEPTED)

2023-03-21 17:45:11,987 INFO yarn.Client: Application report for application_1679387136817_0009 (state: ACCEPTED)

2023-03-21 17:45:12,989 INFO yarn.Client: Application report for application_1679387136817_0009 (state: ACCEPTED)

2023-03-21 17:45:13,991 INFO yarn.Client: Application report for application_1679387136817_0009 (state: ACCEPTED)

2023-03-21 17:45:14,993 INFO yarn.Client: Application report for application_1679387136817_0009 (state: ACCEPTED)

2023-03-21 17:45:15,995 INFO yarn.Client: Application report for application_1679387136817_0009 (state: ACCEPTED)

2023-03-21 17:45:16,997 INFO yarn.Client: Application report for application_1679387136817_0009 (state: ACCEPTED)

2023-03-21 17:45:17,999 INFO yarn.Client: Application report for application_1679387136817_0009 (state: ACCEPTED)

2023-03-21 17:45:19,001 INFO yarn.Client: Application report for application_1679387136817_0009 (state: ACCEPTED)

2023-03-21 17:45:20,004 INFO yarn.Client: Application report for application_1679387136817_0009 (state: ACCEPTED)

2023-03-21 17:45:21,007 INFO yarn.Client: Application report for application_1679387136817_0009 (state: FAILED)

2023-03-21 17:45:21,008 INFO yarn.Client:

client token: N/A

diagnostics: Application application_1679387136817_0009 failed 2 times due to AM Container for appattempt_1679387136817_0009_000002 exited with exitCode: 13

Failing this attempt.Diagnostics: [2023-03-21 17:45:20.457]Exception from container-launch.

Container id: container_1679387136817_0009_02_000001

Exit code: 13

[2023-03-21 17:45:20.459]Container exited with a non-zero exit code 13. Error file: prelaunch.err.

Last 4096 bytes of prelaunch.err :

Last 4096 bytes of stderr :

cEngine2$Invoker.invoke(ProtobufRpcEngine2.java:242)

at org.apache.hadoop.ipc.ProtobufRpcEngine2$Invoker.invoke(ProtobufRpcEngine2.java:129)

at jdk.proxy2/jdk.proxy2.$Proxy20.getFileInfo(Unknown Source)

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolTranslatorPB.getFileInfo(ClientNamenodeProtocolTranslatorPB.java:965)

at java.base/jdk.internal.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at java.base/jdk.internal.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:77)

at java.base/jdk.internal.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.base/java.lang.reflect.Method.invoke(Method.java:568)

at org.apache.hadoop.io.retry.RetryInvocationHandler.invokeMethod(RetryInvocationHandler.java:422)

at org.apache.hadoop.io.retry.RetryInvocationHandler$Call.invokeMethod(RetryInvocationHandler.java:165)

at org.apache.hadoop.io.retry.RetryInvocationHandler$Call.invoke(RetryInvocationHandler.java:157)

at org.apache.hadoop.io.retry.RetryInvocationHandler$Call.invokeOnce(RetryInvocationHandler.java:95)

at org.apache.hadoop.io.retry.RetryInvocationHandler.invoke(RetryInvocationHandler.java:359)

at jdk.proxy2/jdk.proxy2.$Proxy21.getFileInfo(Unknown Source)

at org.apache.hadoop.hdfs.DFSClient.getFileInfo(DFSClient.java:1739)

at org.apache.hadoop.hdfs.DistributedFileSystem$29.doCall(DistributedFileSystem.java:1753)

at org.apache.hadoop.hdfs.DistributedFileSystem$29.doCall(DistributedFileSystem.java:1750)

at org.apache.hadoop.fs.FileSystemLinkResolver.resolve(FileSystemLinkResolver.java:81)

at org.apache.hadoop.hdfs.DistributedFileSystem.getFileStatus(DistributedFileSystem.java:1765)

at org.apache.spark.deploy.history.EventLogFileWriter.requireLogBaseDirAsDirectory(EventLogFileWriters.scala:77)

at org.apache.spark.deploy.history.SingleEventLogFileWriter.start(EventLogFileWriters.scala:221)

at org.apache.spark.scheduler.EventLoggingListener.start(EventLoggingListener.scala:83)

at org.apache.spark.SparkContext.<init>(SparkContext.scala:622)

at org.apache.spark.SparkContext$.getOrCreate(SparkContext.scala:2714)

at org.apache.spark.sql.SparkSession$Builder.$anonfun$getOrCreate$2(SparkSession.scala:953)

at scala.Option.getOrElse(Option.scala:189)

at org.apache.spark.sql.SparkSession$Builder.getOrCreate(SparkSession.scala:947)

at org.apache.spark.examples.SparkPi$.main(SparkPi.scala:30)

at org.apache.spark.examples.SparkPi.main(SparkPi.scala)

at java.base/jdk.internal.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at java.base/jdk.internal.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:77)

at java.base/jdk.internal.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.base/java.lang.reflect.Method.invoke(Method.java:568)

at org.apache.spark.deploy.yarn.ApplicationMaster$$anon$2.run(ApplicationMaster.scala:739)

Caused by: java.net.ConnectException: Connection refused

at java.base/sun.nio.ch.Net.pollConnect(Native Method)

at java.base/sun.nio.ch.Net.pollConnectNow(Net.java:672)

at java.base/sun.nio.ch.SocketChannelImpl.finishConnect(SocketChannelImpl.java:946)

at org.apache.hadoop.net.SocketIOWithTimeout.connect(SocketIOWithTimeout.java:205)

at org.apache.hadoop.net.NetUtils.connect(NetUtils.java:586)

at org.apache.hadoop.ipc.Client$Connection.setupConnection(Client.java:711)

at org.apache.hadoop.ipc.Client$Connection.setupIOstreams(Client.java:833)

at org.apache.hadoop.ipc.Client$Connection.access$3800(Client.java:414)

at org.apache.hadoop.ipc.Client.getConnection(Client.java:1677)

at org.apache.hadoop.ipc.Client.call(Client.java:1502)

... 35 more

2023-03-21 17:45:20,318 INFO yarn.ApplicationMaster: Deleting staging directory hdfs://master:9000/user/root/.sparkStaging/application_1679387136817_0009

2023-03-21 17:45:20,405 INFO util.ShutdownHookManager: Shutdown hook called

2023-03-21 17:45:20,406 INFO util.ShutdownHookManager: Deleting directory /opt/localdir/usercache/root/appcache/application_1679387136817_0009/spark-b46475ca-fe68-435b-b528-d6a235d0f5c4

For more detailed output, check the application tracking page: http://master:8088/cluster/app/application_1679387136817_0009 Then click on links to logs of each attempt.

. Failing the application.

ApplicationMaster host: N/A

ApplicationMaster RPC port: -1

queue: default

start time: 1679391907944

final status: FAILED

tracking URL: http://master:8088/cluster/app/application_1679387136817_0009

user: root

2023-03-21 17:45:21,015 ERROR yarn.Client: Application diagnostics message: Application application_1679387136817_0009 failed 2 times due to AM Container for appattempt_1679387136817_0009_000002 exited with exitCode: 13

Exception in thread "main" org.apache.spark.SparkException: Application application_1679387136817_0009 finished with failed status

at org.apache.spark.deploy.yarn.Client.run(Client.scala:1342)

at org.apache.spark.deploy.yarn.YarnClusterApplication.start(Client.scala:1764)

at org.apache.spark.deploy.SparkSubmit.org$apache$spark$deploy$SparkSubmit$$runMain(SparkSubmit.scala:958)

at org.apache.spark.deploy.SparkSubmit.doRunMain$1(SparkSubmit.scala:180)

at org.apache.spark.deploy.SparkSubmit.submit(SparkSubmit.scala:203)

at org.apache.spark.deploy.SparkSubmit.doSubmit(SparkSubmit.scala:90)

at org.apache.spark.deploy.SparkSubmit$$anon$2.doSubmit(SparkSubmit.scala:1046)

at org.apache.spark.deploy.SparkSubmit$.main(SparkSubmit.scala:1055)

at org.apache.spark.deploy.SparkSubmit.main(SparkSubmit.scala)

2023-03-21 17:45:21,019 INFO util.ShutdownHookManager: Shutdown hook called

2023-03-21 17:45:21,020 INFO util.ShutdownHookManager: Deleting directory /tmp/spark-300fccc5-6f53-48f7-b289-43938b5170d1

2023-03-21 17:45:21,037 INFO util.ShutdownHookManager: Deleting directory /tmp/spark-0025d22f-2183-4f78-8ee4-28300ca7486f
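The output above is long, but the useful part is the `Caused by:` line and the Spark frames just before it. The following is a minimal sketch, not part of the original troubleshooting session, for pulling the distinct `Caused by:` lines out of a saved log. The function name `root_causes` and the sample file are hypothetical; the sample lines are copied from the trace above.

```shell
#!/usr/bin/env bash
set -eu

# Print each distinct "Caused by:" line found in a log file.
root_causes() {
  grep '^[[:space:]]*Caused by:' "$1" | sed 's/^[[:space:]]*//' | sort -u
}

# Sample input: a few lines taken from the stack trace shown above.
log_file="$(mktemp)"
cat > "$log_file" <<'EOF'
at org.apache.spark.deploy.history.EventLogFileWriter.requireLogBaseDirAsDirectory(EventLogFileWriters.scala:77)
at org.apache.spark.SparkContext.<init>(SparkContext.scala:622)
Caused by: java.net.ConnectException: Connection refused
at org.apache.hadoop.ipc.Client.call(Client.java:1502)
Caused by: java.net.ConnectException: Connection refused
EOF

causes="$(root_causes "$log_file")"
printf '%s\n' "$causes"
rm -f "$log_file"

# On a real cluster (assuming YARN log aggregation is enabled), the same
# function could read the aggregated container logs:
#   yarn logs -applicationId application_1679387136817_0009 > am.log
#   root_causes am.log
```

Here the only cause is `Connection refused`, and the frames above it (`EventLogFileWriter.requireLogBaseDirAsDirectory`, called from `SparkContext.<init>`) suggest that the ApplicationMaster could not reach HDFS while checking the event log directory.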

2. The hadoop-root-namenode-master.log log shows Not enough replicas was chosen. Reason: {NO_REQUIRED_STORAGE_TYPE=1}

2023-03-21 17:45:05,012 INFO org.apache.hadoop.hdfs.server.namenode.FSEditLog: Number of transactions: 53 Total time for transactions(ms): 0 Number of transactions batched in Syncs: 13 Number of syncs: 40 SyncTimes(ms): 83

2023-03-21 17:45:05,680 INFO org.apache.hadoop.hdfs.server.blockmanagement.BlockPlacementPolicy: Not enough replicas was chosen. Reason: {NO_REQUIRED_STORAGE_TYPE=1}

2023-03-21 17:45:05,680 INFO org.apache.hadoop.hdfs.server.blockmanagement.BlockPlacementPolicy: Not enough replicas was chosen. Reason: {NO_REQUIRED_STORAGE_TYPE=1}

2023-03-21 17:45:05,680 INFO org.apache.hadoop.hdfs.StateChange: BLOCK* allocate blk_1073741874_1050, replicas=192.168.186.145:9866, 192.168.186.144:9866, 192.168.186.146:9866 for /user/root/.sparkStaging/application_1679387136817_0009/__spark_libs__10348349781677306272.zip

2023-03-21 17:45:06,936 INFO org.apache.hadoop.hdfs.server.blockmanagement.BlockPlacementPolicy: Not enough replicas was chosen. Reason: {NO_REQUIRED_STORAGE_TYPE=1}

2023-03-21 17:45:06,936 INFO org.apache.hadoop.hdfs.server.blockmanagement.BlockPlacementPolicy: Not enough replicas was chosen. Reason: {NO_REQUIRED_STORAGE_TYPE=1}

2023-03-21 17:45:06,936 INFO org.apache.hadoop.hdfs.StateChange: BLOCK* allocate blk_1073741875_1051, replicas=192.168.186.145:9866, 192.168.186.147:9866, 192.168.186.146:9866 for /user/root/.sparkStaging/application_1679387136817_0009/__spark_libs__10348349781677306272.zip

2023-03-21 17:45:07,566 INFO org.apache.hadoop.hdfs.StateChange: DIR* completeFile: /user/root/.sparkStaging/application_1679387136817_0009/__spark_libs__10348349781677306272.zip is closed by DFSClient_NONMAPREDUCE_-1160862633_1

2023-03-21 17:45:07,618 INFO org.apache.hadoop.hdfs.server.blockmanagement.BlockPlacementPolicy: Not enough replicas was chosen. Reason: {NO_REQUIRED_STORAGE_TYPE=1}

2023-03-21 17:45:07,618 INFO org.apache.hadoop.hdfs.server.blockmanagement.BlockPlacementPolicy: Not enough replicas was chosen. Reason: {NO_REQUIRED_STORAGE_TYPE=1}

2023-03-21 17:45:07,618 INFO org.apache.hadoop.hdfs.StateChange: BLOCK* allocate blk_1073741876_1052, replicas=192.168.186.144:9866, 192.168.186.146:9866, 192.168.186.145:9866 for /user/root/.sparkStaging/application_1679387136817_0009/spark-examples_2.12-3.3.2.jar

2023-03-21 17:45:07,677 INFO org.apache.hadoop.hdfs.StateChange: DIR* completeFile: /user/root/.sparkStaging/application_1679387136817_0009/spark-examples_2.12-3.3.2.jar is closed by DFSClient_NONMAPREDUCE_-1160862633_1

2023-03-21 17:45:07,822 INFO org.apache.hadoop.hdfs.server.blockmanagement.BlockPlacementPolicy: Not enough replicas was chosen. Reason: {NO_REQUIRED_STORAGE_TYPE=1}

2023-03-21 17:45:07,822 INFO org.apache.hadoop.hdfs.server.blockmanagement.BlockPlacementPolicy: Not enough replicas was chosen. Reason: {NO_REQUIRED_STORAGE_TYPE=1}

2023-03-21 17:45:07,822 INFO org.apache.hadoop.hdfs.StateChange: BLOCK* allocate blk_1073741877_1053, replicas=192.168.186.146:9866, 192.168.186.145:9866, 192.168.186.144:9866 for /user/root/.sparkStaging/application_1679387136817_0009/__spark_conf__.zip

2023-03-21 17:45:07,852 INFO org.apache.hadoop.hdfs.StateChange: DIR* completeFile: /user/root/.sparkStaging/application_1679387136817_0009/__spark_conf__.zip is closed by DFSClient_NONMAPREDUCE_-1160862633_1
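Note that these NameNode messages are logged at INFO level, and every `BLOCK* allocate` line above still lists three DataNodes. As a rough sketch (the function name `replicas_per_block` and the sample file are hypothetical, and the sample lines are copied from the log above), the replica count per allocated block can be tallied like this:

```shell
#!/usr/bin/env bash
set -eu

# For every "BLOCK* allocate" line, print the block id and the number of
# DataNode addresses listed after "replicas=".
replicas_per_block() {
  sed -n 's/.*BLOCK\* allocate \(blk_[0-9_]*\), replicas=\(.*\) for .*/\1 \2/p' "$1" |
    awk '{ print $1, NF - 1 }'
}

# Sample input: three lines taken from the NameNode log shown above.
nn_log="$(mktemp)"
cat > "$nn_log" <<'EOF'
2023-03-21 17:45:05,680 INFO org.apache.hadoop.hdfs.server.blockmanagement.BlockPlacementPolicy: Not enough replicas was chosen. Reason: {NO_REQUIRED_STORAGE_TYPE=1}
2023-03-21 17:45:05,680 INFO org.apache.hadoop.hdfs.StateChange: BLOCK* allocate blk_1073741874_1050, replicas=192.168.186.145:9866, 192.168.186.144:9866, 192.168.186.146:9866 for /user/root/.sparkStaging/application_1679387136817_0009/__spark_libs__10348349781677306272.zip
2023-03-21 17:45:06,936 INFO org.apache.hadoop.hdfs.StateChange: BLOCK* allocate blk_1073741875_1051, replicas=192.168.186.145:9866, 192.168.186.147:9866, 192.168.186.146:9866 for /user/root/.sparkStaging/application_1679387136817_0009/__spark_libs__10348349781677306272.zip
EOF

summary="$(replicas_per_block "$nn_log")"
printf '%s\n' "$summary"
rm -f "$nn_log"
```

Each block in the sample gets three replicas and the uploads complete, so this message is probably not what makes the job fail. The `Connection refused` error in the container log is the more likely cause.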

Solution

1. Stop Spark

Go to the sbin directory of the Spark installation and run the following command:

./stop-all.sh

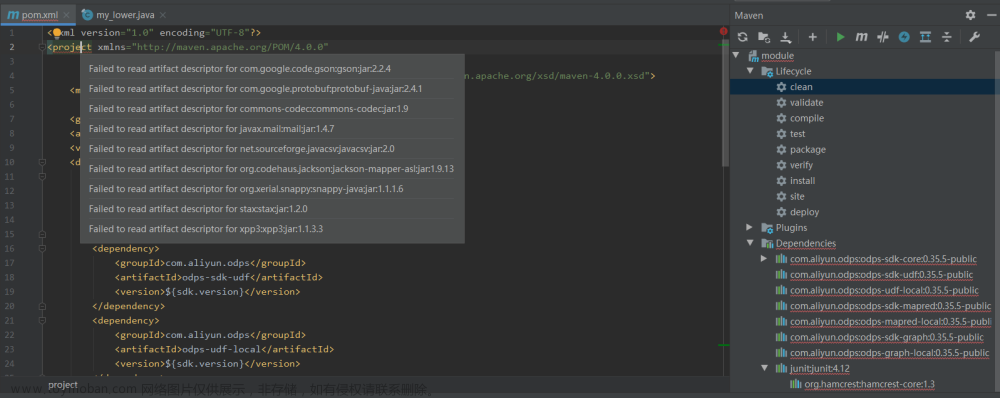

2. Edit the spark-defaults.conf file of the Spark installation on the master node

On my machine it is under /opt/spark/conf.

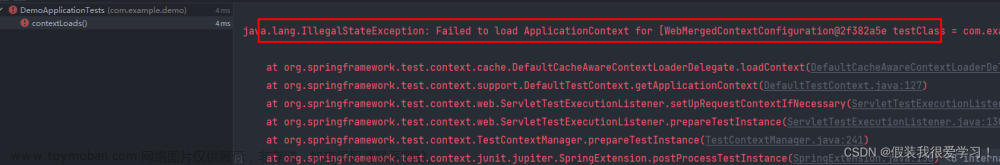

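Find the setting marked with the red box in the screenshot above and change the port number in its value so that it matches the port of fs.defaultFS in Hadoop's core-site.xml file.

After saving the change, restart Spark.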
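The screenshot is not reproduced here, so the exact setting is an assumption: the `EventLogFileWriter` frames in the stack trace suggest it is most likely `spark.eventLog.dir`. The sketch below compares the port of that setting with the port of `fs.defaultFS`. The helper names, the sample files, and the `8020` port and `/spark-logs` path are hypothetical; `hdfs://master:9000` is the address that appears in the upload lines of the job log.

```shell
#!/usr/bin/env bash
set -eu

# Port of an hdfs://host:port/path URI (prints nothing if there is no port).
uri_port() {
  printf '%s\n' "$1" | sed -n 's#^hdfs://[^:/]*:\([0-9][0-9]*\).*#\1#p'
}

# Value of fs.defaultFS in a core-site.xml (naive text match, not an XML parser).
default_fs() {
  tr -d '\n' < "$1" |
    sed -n 's#.*<name>fs\.defaultFS</name>[[:space:]]*<value>\([^<]*\)</value>.*#\1#p'
}

# Value of a whitespace-separated key in spark-defaults.conf.
spark_conf_value() {
  awk -v key="$1" '$1 == key { print $2 }' "$2"
}

# Sample files standing in for the real configuration (values are assumptions).
conf_dir="$(mktemp -d)"
cat > "$conf_dir/core-site.xml" <<'EOF'
<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://master:9000</value>
  </property>
</configuration>
EOF
cat > "$conf_dir/spark-defaults.conf" <<'EOF'
spark.eventLog.enabled  true
spark.eventLog.dir      hdfs://master:8020/spark-logs
EOF

fs_port="$(uri_port "$(default_fs "$conf_dir/core-site.xml")")"
spark_port="$(uri_port "$(spark_conf_value spark.eventLog.dir "$conf_dir/spark-defaults.conf")")"

if [ "$fs_port" = "$spark_port" ]; then
  result="OK: both use port $fs_port"
else
  result="MISMATCH: spark.eventLog.dir uses port $spark_port, fs.defaultFS uses port $fs_port"
fi
printf '%s\n' "$result"
rm -rf "$conf_dir"
```

On a real cluster, `hdfs getconf -confKey fs.defaultFS` prints the effective value directly, and the helpers can be pointed at /opt/spark/conf/spark-defaults.conf instead of the sample file.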

3. Verify

After restarting Spark, I ran the same command again:

org.apache.spark.deploy.master.Master running as process 14873. Stop it first.

localhost: org.apache.spark.deploy.worker.Worker running as process 15081. Stop it first.

vice1: org.apache.spark.deploy.worker.Worker running as process 8411. Stop it first.

vice3: org.apache.spark.deploy.worker.Worker running as process 7851. Stop it first.

vice4: org.apache.spark.deploy.worker.Worker running as process 8120. Stop it first.

vice2: org.apache.spark.deploy.worker.Worker running as process 8222. Stop it first.

[root@master sbin]# ./stop-all.sh

localhost: stopping org.apache.spark.deploy.worker.Worker

vice2: stopping org.apache.spark.deploy.worker.Worker

vice4: stopping org.apache.spark.deploy.worker.Worker

vice3: stopping org.apache.spark.deploy.worker.Worker

vice1: stopping org.apache.spark.deploy.worker.Worker

stopping org.apache.spark.deploy.master.Master

[root@master sbin]# ./start-all.sh

starting org.apache.spark.deploy.master.Master, logging to /opt/spark/logs/spark-root-org.apache.spark.deploy.master.Master-1-master.out

localhost: starting org.apache.spark.deploy.worker.Worker, logging to /opt/spark/logs/spark-root-org.apache.spark.deploy.worker.Worker-1-master.out

vice2: starting org.apache.spark.deploy.worker.Worker, logging to /opt/spark/logs/spark-root-org.apache.spark.deploy.worker.Worker-1-vice2.out

vice1: starting org.apache.spark.deploy.worker.Worker, logging to /opt/spark/logs/spark-root-org.apache.spark.deploy.worker.Worker-1-vice1.out

vice3: starting org.apache.spark.deploy.worker.Worker, logging to /opt/spark/logs/spark-root-org.apache.spark.deploy.worker.Worker-1-vice3.out

vice4: starting org.apache.spark.deploy.worker.Worker, logging to /opt/spark/logs/spark-root-org.apache.spark.deploy.worker.Worker-1-vice4.out

[root@master sbin]# spark-submit --master yarn --deploy-mode cluster --class org.apache.spark.examples.SparkPi $SPARK_HOME/examples/jars/spark-examples_2.12-3.3.2.jar 100

2023-03-21 17:56:09,538 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

2023-03-21 17:56:09,610 INFO client.DefaultNoHARMFailoverProxyProvider: Connecting to ResourceManager at master/192.168.186.141:8032

2023-03-21 17:56:10,171 INFO conf.Configuration: resource-types.xml not found

2023-03-21 17:56:10,171 INFO resource.ResourceUtils: Unable to find 'resource-types.xml'.

2023-03-21 17:56:10,189 INFO yarn.Client: Verifying our application has not requested more than the maximum memory capability of the cluster (8192 MB per container)

2023-03-21 17:56:10,190 INFO yarn.Client: Will allocate AM container, with 1408 MB memory including 384 MB overhead

2023-03-21 17:56:10,191 INFO yarn.Client: Setting up container launch context for our AM

2023-03-21 17:56:10,193 INFO yarn.Client: Setting up the launch environment for our AM container

2023-03-21 17:56:10,213 INFO yarn.Client: Preparing resources for our AM container

2023-03-21 17:56:10,259 WARN yarn.Client: Neither spark.yarn.jars nor spark.yarn.archive is set, falling back to uploading libraries under SPARK_HOME.

2023-03-21 17:56:10,962 INFO yarn.Client: Uploading resource file:/tmp/spark-f4010a3e-1084-4538-ad02-7bf1148d61e5/__spark_libs__8751511770633019725.zip -> hdfs://master:9000/user/root/.sparkStaging/application_1679387136817_0010/__spark_libs__8751511770633019725.zip

2023-03-21 17:56:13,482 INFO yarn.Client: Uploading resource file:/opt/spark/examples/jars/spark-examples_2.12-3.3.2.jar -> hdfs://master:9000/user/root/.sparkStaging/application_1679387136817_0010/spark-examples_2.12-3.3.2.jar

2023-03-21 17:56:13,811 INFO yarn.Client: Uploading resource file:/tmp/spark-f4010a3e-1084-4538-ad02-7bf1148d61e5/__spark_conf__11078522526971619709.zip -> hdfs://master:9000/user/root/.sparkStaging/application_1679387136817_0010/__spark_conf__.zip

2023-03-21 17:56:13,875 INFO spark.SecurityManager: Changing view acls to: root

2023-03-21 17:56:13,875 INFO spark.SecurityManager: Changing modify acls to: root

2023-03-21 17:56:13,876 INFO spark.SecurityManager: Changing view acls groups to:

2023-03-21 17:56:13,876 INFO spark.SecurityManager: Changing modify acls groups to:

2023-03-21 17:56:13,877 INFO spark.SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(root); groups with view permissions: Set(); users with modify permissions: Set(root); groups with modify permissions: Set()

2023-03-21 17:56:13,927 INFO yarn.Client: Submitting application application_1679387136817_0010 to ResourceManager

2023-03-21 17:56:13,960 INFO impl.YarnClientImpl: Submitted application application_1679387136817_0010

2023-03-21 17:56:14,964 INFO yarn.Client: Application report for application_1679387136817_0010 (state: ACCEPTED)

2023-03-21 17:56:14,967 INFO yarn.Client:

client token: N/A

diagnostics: AM container is launched, waiting for AM container to Register with RM

ApplicationMaster host: N/A

ApplicationMaster RPC port: -1

queue: default

start time: 1679392573939

final status: UNDEFINED

tracking URL: http://master:8088/proxy/application_1679387136817_0010/

user: root

2023-03-21 17:56:15,970 INFO yarn.Client: Application report for application_1679387136817_0010 (state: ACCEPTED)

2023-03-21 17:56:16,971 INFO yarn.Client: Application report for application_1679387136817_0010 (state: ACCEPTED)

2023-03-21 17:56:17,973 INFO yarn.Client: Application report for application_1679387136817_0010 (state: ACCEPTED)

2023-03-21 17:56:18,976 INFO yarn.Client: Application report for application_1679387136817_0010 (state: ACCEPTED)

2023-03-21 17:56:19,977 INFO yarn.Client: Application report for application_1679387136817_0010 (state: ACCEPTED)

2023-03-21 17:56:20,979 INFO yarn.Client: Application report for application_1679387136817_0010 (state: RUNNING)

2023-03-21 17:56:20,979 INFO yarn.Client:

client token: N/A

diagnostics: N/A

ApplicationMaster host: vice3

ApplicationMaster RPC port: 39297

queue: default

start time: 1679392573939

final status: UNDEFINED

tracking URL: http://master:8088/proxy/application_1679387136817_0010/

user: root

2023-03-21 17:56:21,981 INFO yarn.Client: Application report for application_1679387136817_0010 (state: RUNNING)

2023-03-21 17:56:22,983 INFO yarn.Client: Application report for application_1679387136817_0010 (state: RUNNING)

2023-03-21 17:56:23,985 INFO yarn.Client: Application report for application_1679387136817_0010 (state: RUNNING)

2023-03-21 17:56:24,992 INFO yarn.Client: Application report for application_1679387136817_0010 (state: RUNNING)

2023-03-21 17:56:25,995 INFO yarn.Client: Application report for application_1679387136817_0010 (state: RUNNING)

2023-03-21 17:56:26,997 INFO yarn.Client: Application report for application_1679387136817_0010 (state: RUNNING)

2023-03-21 17:56:27,999 INFO yarn.Client: Application report for application_1679387136817_0010 (state: RUNNING)

2023-03-21 17:56:29,002 INFO yarn.Client: Application report for application_1679387136817_0010 (state: RUNNING)

2023-03-21 17:56:30,004 INFO yarn.Client: Application report for application_1679387136817_0010 (state: RUNNING)

2023-03-21 17:56:31,006 INFO yarn.Client: Application report for application_1679387136817_0010 (state: FINISHED)

2023-03-21 17:56:31,006 INFO yarn.Client:

client token: N/A

diagnostics: N/A

ApplicationMaster host: vice3

ApplicationMaster RPC port: 39297

queue: default

start time: 1679392573939

final status: SUCCEEDED

tracking URL: http://master:8088/proxy/application_1679387136817_0010/

user: root

2023-03-21 17:56:31,015 INFO util.ShutdownHookManager: Shutdown hook called

2023-03-21 17:56:31,015 INFO util.ShutdownHookManager: Deleting directory /tmp/spark-e23b1042-b210-44ea-a8ef-e8b87ea1f560

2023-03-21 17:56:31,041 INFO util.ShutdownHookManager: Deleting directory /tmp/spark-f4010a3e-1084-4538-ad02-7bf1148d61e5

This time the job ran normally, and the problem was solved.

4. Summary

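When a problem like this comes up, first check whether the machine's hostname matches the one configured in Hadoop and Spark; don't take it for granted that they are the same. Check the most basic things first, and only if that doesn't resolve it, search online. In most cases, the root cause is a small detail that was overlooked somewhere.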
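
The hostname check described above can be partly automated. The following is a minimal sketch, not part of the original fix: it pulls hostnames out of a Hadoop `*-site.xml` file and reports any that do not appear in the hosts file. The choice of properties (`fs.defaultFS`, `yarn.resourcemanager.hostname`) and the function names are assumptions made for illustration; adjust them to whatever your own configuration actually uses.

```python
import re
import xml.etree.ElementTree as ET

# Properties whose values usually carry a hostname.
# Illustrative selection only (an assumption), not an exhaustive list.
HOST_KEYS = ("fs.defaultFS", "yarn.resourcemanager.hostname")


def hosts_in_conf(xml_text, keys=HOST_KEYS):
    """Return {property name: hostname} for the selected keys of a *-site.xml."""
    found = {}
    for prop in ET.fromstring(xml_text).iter("property"):
        name = (prop.findtext("name") or "").strip()
        value = (prop.findtext("value") or "").strip()
        if name in keys and value:
            # Drop an optional scheme such as "hdfs://", then cut off
            # anything after the host part (":9000", "/path").
            host = re.sub(r"^[a-zA-Z][a-zA-Z0-9+.-]*://", "", value)
            found[name] = re.split(r"[:/]", host, maxsplit=1)[0]
    return found


def names_in_hosts_file(hosts_text):
    """Return every hostname or alias listed in /etc/hosts-style text."""
    names = set()
    for line in hosts_text.splitlines():
        fields = line.split("#", 1)[0].split()  # strip trailing comments
        names.update(fields[1:])                # first field is the IP address
    return names


def unknown_hosts(conf_hosts, known_names):
    """Return the configured hosts that the hosts file does not know about."""
    return {key: host for key, host in conf_hosts.items() if host not in known_names}
```

To use it, pass in the contents of files such as `core-site.xml` and `yarn-site.xml` (typically under `$HADOOP_HOME/etc/hadoop`, which is an assumption about your layout) together with the contents of `/etc/hosts`. An empty result from `unknown_hosts` only means the names resolve locally; it is still worth comparing them against the output of the `hostname` command on each node.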
