I previously wrote an example of how to integrate FFmpeg into an Android project.

This article builds a simple demo that uses FFmpeg to pull an RTSP stream and print the first five bytes of each packet on screen.

If you'd rather skip the details, you can download the project here (built with Android Studio).

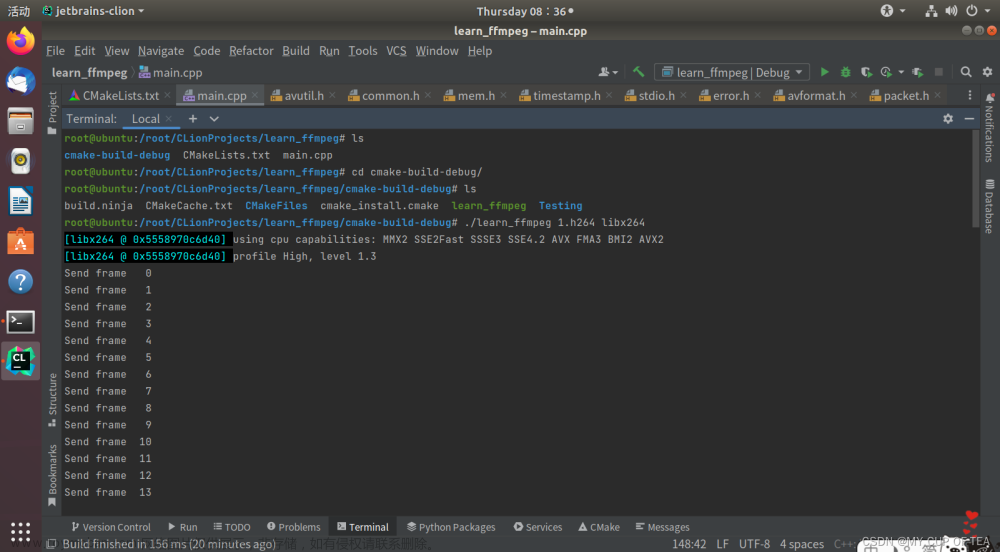

The result:

(Figure: pulling an RTSP stream with FFmpeg on Android and extracting the H.264 data)

The project structure:

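It is very simple. When the app starts, it shows the main screen, MainActivity, which has one button. Tapping the button opens a new screen. That screen shows the size of each demuxed H.264 packet and the packet's first five bytes.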
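The five bytes on that screen are easier to interpret if you know the H.264 Annex B layout. FFmpeg's RTSP/RTP depacketizer typically emits H.264 packets that begin with a start code (`00 00 00 01`, sometimes `00 00 01`). The byte after the start code is the NAL unit header, and its low 5 bits give `nal_unit_type`: 1 = non-IDR slice, 5 = IDR slice, 7 = SPS, 8 = PPS. The sketch below assumes Annex B input and only looks at the first NAL unit in a packet. The helper name `DescribePacketHead` is hypothetical and is not part of the demo project.

```cpp
#include <cstddef>
#include <cstdint>
#include <string>

// Describes the first NAL unit of an H.264 packet, assuming Annex B framing
// (a 00 00 00 01 or 00 00 01 start code). Hypothetical helper for illustration.
std::string DescribePacketHead(const std::uint8_t* data, std::size_t size) {
    std::size_t offset = 0;
    if (size >= 4 && data[0] == 0 && data[1] == 0 && data[2] == 0 && data[3] == 1) {
        offset = 4;  // 4-byte start code
    } else if (size >= 3 && data[0] == 0 && data[1] == 0 && data[2] == 1) {
        offset = 3;  // 3-byte start code
    } else {
        return "no Annex B start code";
    }
    if (offset >= size) {
        return "start code only";
    }
    // nal_unit_type is the low 5 bits of the NAL header byte.
    int nalType = data[offset] & 0x1F;
    switch (nalType) {
        case 1: return "non-IDR slice";
        case 5: return "IDR slice";
        case 6: return "SEI";
        case 7: return "SPS";
        case 8: return "PPS";
        default: return "NAL type " + std::to_string(nalType);
    }
}
```

For example, a packet starting with `0 0 0 1 103` begins with an SPS, because 103 is 0x67 and 0x67 & 0x1F = 7.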

MainActivity:


package com.qmcy.demux;

import androidx.appcompat.app.AppCompatActivity;

import android.content.DialogInterface;

import android.content.Intent;

import android.os.Bundle;

import android.view.View;

import android.widget.Button;

import android.widget.TextView;

import com.qmcy.demux.databinding.ActivityMainBinding;

public class MainActivity extends AppCompatActivity {

// Used to load the 'demux' library on application startup.

static {

System.loadLibrary("demux");

}

private ActivityMainBinding binding;

@Override

protected void onCreate(Bundle savedInstanceState) {

super.onCreate(savedInstanceState);

binding = ActivityMainBinding.inflate(getLayoutInflater());

setContentView(binding.getRoot());

// Example of a call to a native method

TextView tv = binding.sampleText;

tv.setText(GetVersion());

Button rtsp = (Button)findViewById(R.id.rtsp);

rtsp.setOnClickListener(new View.OnClickListener(){

@Override

public void onClick(View v)

{

Intent intent = new Intent(MainActivity.this,FFDemuxActivity.class);

startActivity(intent);

}

});

}

/**

* A native method that is implemented by the 'demux' native library,

* which is packaged with this application.

*/

public native String stringFromJNI();

public native String GetVersion();

}

FFDemuxActivity:

package com.qmcy.demux;

import android.Manifest;

import android.content.pm.PackageManager;

import android.os.Bundle;

import android.util.Log;

import android.view.MotionEvent;

import android.view.SurfaceHolder;

import android.view.View;

import android.widget.EditText;

import android.widget.SeekBar;

import android.widget.Toast;

import androidx.annotation.NonNull;

import androidx.appcompat.app.AppCompatActivity;

import androidx.core.app.ActivityCompat;

public class FFDemuxActivity extends AppCompatActivity implements FFDemuxJava.EventCallback{

private static final String TAG = "FFDemuxActivity";

private static final String[] REQUEST_PERMISSIONS = {

Manifest.permission.WRITE_EXTERNAL_STORAGE,

};

private static final int PERMISSION_REQUEST_CODE = 1;

private FFDemuxJava m_demuxer = null;

private EditText editText;

private boolean mIsTouch = false;

private final String mVideoPath = "rtsp://uer:gd123456@192.168.2.123:554/Streaming/Channels/101";

@Override

protected void onCreate(Bundle savedInstanceState) {

super.onCreate(savedInstanceState);

setContentView(R.layout.demux);

editText = findViewById(R.id.output);

m_demuxer = new FFDemuxJava();

m_demuxer.addEventCallback(this);

m_demuxer.init(mVideoPath);

m_demuxer.Start();

}

@Override

protected void onResume() {

super.onResume();

if (!hasPermissionsGranted(REQUEST_PERMISSIONS)) {

ActivityCompat.requestPermissions(this, REQUEST_PERMISSIONS, PERMISSION_REQUEST_CODE);

}

if(m_demuxer != null)

m_demuxer.Start();

}

@Override

public void onRequestPermissionsResult(int requestCode, @NonNull String[] permissions, @NonNull int[] grantResults) {

if (requestCode == PERMISSION_REQUEST_CODE) {

if (!hasPermissionsGranted(REQUEST_PERMISSIONS)) {

Toast.makeText(this, "We need the permission: WRITE_EXTERNAL_STORAGE", Toast.LENGTH_SHORT).show();

}

} else {

super.onRequestPermissionsResult(requestCode, permissions, grantResults);

}

}

@Override

protected void onPause() {

super.onPause();

if(m_demuxer != null)

{

}

}

@Override

protected void onDestroy() {

m_demuxer.unInit();

super.onDestroy();

}

@Override

public void onPacketEvent(byte[] data) {

// Show the packet size and at most the first five bytes (a packet may be shorter than that)

StringBuilder sb = new StringBuilder("onPacketEvent() called with: size = [" + data.length + "] [");

for (int i = 0; i < Math.min(5, data.length); i++) {

if (i > 0) sb.append(' ');

sb.append(data[i]);

}

sb.append(']');

final String str = sb.toString();

Log.d(TAG, str);

runOnUiThread(new Runnable() {

@Override

public void run() {

editText.setText(str);

}

});

}

@Override

public void onMessageEvent(final int msgType, final float msgValue) {

Log.d(TAG, "onMessageEvent() called with: msgType = [" + msgType + "], msgValue = [" + msgValue + "]");

runOnUiThread(new Runnable() {

@Override

public void run() {

switch (msgType) {

case 1:

break;

default:

break;

}

}

});

}

protected boolean hasPermissionsGranted(String[] permissions) {

for (String permission : permissions) {

if (ActivityCompat.checkSelfPermission(this, permission)

!= PackageManager.PERMISSION_GRANTED) {

return false;

}

}

return true;

}

}

FFDemuxJava:

package com.qmcy.demux;

import android.view.Surface;

public class FFDemuxJava {

static {

System.loadLibrary("demux");

}

private long m_handle = 0;

private EventCallback mEventCallback = null;

public void init(String url) {

m_handle = native_Init(url);

}

public void Start() {

native_Start(m_handle);

}

public void stop() {

native_Stop(m_handle);

}

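public void unInit() {

native_UnInit(m_handle);

// The native object has been deleted; drop the stale pointer so later calls are ignored

m_handle = 0;

}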

public void addEventCallback(EventCallback callback) {

mEventCallback = callback;

}

private void playerEventCallback(int msgType, float msgValue) {

if(mEventCallback != null)

mEventCallback.onMessageEvent(msgType, msgValue);

}

private void packetEventCallback(byte[]data) {

if(mEventCallback != null)

mEventCallback.onPacketEvent(data);

}

private native long native_Init(String url);

private native void native_Start(long playerHandle);

private native void native_Stop(long playerHandle);

private native void native_UnInit(long playerHandle);

public interface EventCallback {

void onMessageEvent(int msgType, float msgValue);

void onPacketEvent(byte []data);

}

}
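FFDemuxJava never holds the C++ object directly. `native_Init` returns the `FFBridge` pointer cast to a `jlong`, Java stores that value in `m_handle`, and every later native call casts it back. A minimal sketch of that round trip in plain C++ follows. It uses `std::int64_t` in place of `jlong` (`jlong` is a signed 64-bit integer on every JNI platform), and `Bridge` is a hypothetical stand-in for `FFBridge`.

```cpp
#include <cstdint>
#include <string>

// Hypothetical stand-in for FFBridge.
struct Bridge {
    std::string url;
    bool started = false;
};

// Like native_Init: create the object and hand its address out as a 64-bit integer.
std::int64_t CreateHandle(const std::string& url) {
    Bridge* bridge = new Bridge();
    bridge->url = url;
    return static_cast<std::int64_t>(reinterpret_cast<std::intptr_t>(bridge));
}

// Like native_Start / native_UnInit: turn the handle back into a pointer.
Bridge* FromHandle(std::int64_t handle) {
    return reinterpret_cast<Bridge*>(static_cast<std::intptr_t>(handle));
}

// Like native_UnInit: free the object; the caller must not reuse the handle.
void DestroyHandle(std::int64_t handle) {
    delete FromHandle(handle);  // deleting nullptr (handle 0) is a no-op
}
```

The Java side only sees an opaque number, so it is worth resetting the stored handle to 0 after freeing it. The native functions in native-lib.cpp already skip a 0 handle.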

Next comes the C++ side. The core logic is in FFDemux.cpp:
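FFDemux runs its read loop on a separate `std::thread`. Start creates the thread, and UnInit sets the state to stop and joins the thread. The FFmpeg-free sketch below isolates that pattern. `ReadPacket` is represented by a hypothetical callable standing in for `av_read_frame` (it returns a stream index, or a negative value at end of stream or on error), and the class name is made up. Unlike the demo, the sketch keeps the state in a `std::atomic` so the worker can poll it without a data race. It also leaves the loop when reading fails instead of calling the reader again.

```cpp
#include <atomic>
#include <functional>
#include <thread>
#include <utility>

// Hypothetical, FFmpeg-free sketch of the FFDemux threading model.
class DemuxWorker {
public:
    enum State { STATE_IDLE, STATE_DEMUXING, STATE_STOP, STATE_ERROR };

    // readPacket stands in for av_read_frame: stream index of the next packet,
    // or a negative value at end of stream / on error.
    // onPacket stands in for OnReceivePacket and runs on the worker thread.
    DemuxWorker(std::function<int()> readPacket, int videoIndex,
                std::function<void()> onPacket)
        : readPacket_(std::move(readPacket)),
          videoIndex_(videoIndex),
          onPacket_(std::move(onPacket)) {}

    ~DemuxWorker() { Stop(); }

    // Like FFDemux::Start: the thread is created only once.
    void Start() {
        if (!thread_.joinable()) {
            state_ = STATE_DEMUXING;
            thread_ = std::thread(&DemuxWorker::Loop, this);
        }
    }

    // Like FFDemux::UnInit: request a stop, then wait for the thread to exit.
    void Stop() {
        State expected = STATE_DEMUXING;
        state_.compare_exchange_strong(expected, STATE_STOP);  // keeps STATE_ERROR if set
        if (thread_.joinable()) thread_.join();
    }

    State state() const { return state_.load(); }

private:
    void Loop() {
        while (state_.load() == STATE_DEMUXING) {
            int index = readPacket_();
            if (index < 0) {
                // End of stream or read error: leave the loop instead of retrying forever.
                State expected = STATE_DEMUXING;
                state_.compare_exchange_strong(expected, STATE_ERROR);
                break;
            }
            if (index == videoIndex_) onPacket_();  // forward video packets only
        }
    }

    std::function<int()> readPacket_;
    int videoIndex_;
    std::function<void()> onPacket_;
    std::atomic<State> state_{STATE_IDLE};
    std::thread thread_;
};
```

Polling an atomic keeps the sketch short. A condition variable would only be needed if the worker had to sleep while paused, and nothing in the demo waits on `m_Cond`.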

//

// Created by Administrator on 2022/4/14/014.

//

#include "FFDemux.h"

FFDemux::FFDemux() {

}

FFDemux::~FFDemux() {

//UnInit();

}

void FFDemux::Start() {

if(m_Thread == nullptr) {

StartThread();

} else {

std::unique_lock<std::mutex> lock(m_Mutex);

m_DecoderState = STATE_DEMUXING;

m_Cond.notify_all();

}

}

void FFDemux::Stop() {

LOGCATE("FFDemux::Stop2222");

std::unique_lock<std::mutex> lock(m_Mutex);

m_DecoderState = STATE_STOP;

m_Cond.notify_all();

}

int FFDemux::Init(const char *url) {

strcpy(m_Url,url);

return 0;

}

void FFDemux::UnInit() {

if(m_Thread) {

Stop();

m_Thread->join();

delete m_Thread;

m_Thread = nullptr;

}

}

int FFDemux::InitDemux() {

int result = -1;

do {

//1. Allocate the format (container) context

m_AVFormatContext = avformat_alloc_context();

//2. Prepare the RTSP options; they only take effect when passed to avformat_open_input

AVDictionary *pAVDictionary = nullptr;

av_dict_set(&pAVDictionary, "buffer_size", "1024000", 0);

av_dict_set(&pAVDictionary, "stimeout", "20000000", 0); // TCP I/O timeout in microseconds (named "timeout" in newer FFmpeg releases)

av_dict_set(&pAVDictionary, "max_delay", "30000000", 0);

av_dict_set(&pAVDictionary, "rtsp_transport", "tcp", 0);

//3. Open the input (the RTSP URL)

int ret = avformat_open_input(&m_AVFormatContext, m_Url, NULL, &pAVDictionary);

av_dict_free(&pAVDictionary); // frees any options that were not consumed

if(ret != 0)

{

LOGCATE("FFDemux::InitDemux avformat_open_input fail.");

break;

}

//4. Read the stream information

if(avformat_find_stream_info(m_AVFormatContext, NULL) < 0) {

LOGCATE("FFDemux::InitDemux avformat_find_stream_info fail.");

break;

}

//5. Find the video stream index

for(int i=0; i < m_AVFormatContext->nb_streams; i++) {

if(m_AVFormatContext->streams[i]->codecpar->codec_type == AVMEDIA_TYPE_VIDEO) {

m_StreamIndex = i;

break;

}

}

if(m_StreamIndex == -1) {

LOGCATE("FFDemux::InitDemux Fail to find stream index.");

break;

}

m_Packet = av_packet_alloc();

if(m_Packet == nullptr) {

LOGCATE("FFDemux::InitDemux av_packet_alloc fail.");

break;

}

result = 0;

} while (false);

if(result != 0 && m_MsgContext && m_MsgCallback)

m_MsgCallback(m_MsgContext, MSG_DECODER_INIT_ERROR, 0);

return result;

}

void FFDemux::StartThread() {

// m_Thread = new thread(DoAVDecoding, this);

m_Thread = new thread(DoDemux, this);

}

void FFDemux::DemuxLoop() {

{

std::unique_lock<std::mutex> lock(m_Mutex);

m_DecoderState = STATE_DEMUXING;

lock.unlock();

}

for(;;) {

LOGCATE("FFDemux::DemuxLoop m_DecoderState = %d", m_DecoderState);

if(m_DecoderState == STATE_STOP) {

break;

}

if(DecodeOnePacket() != 0) {

//Reading ended (end of stream or read error): record the error and leave the loop instead of calling av_read_frame over and over

std::unique_lock<std::mutex> lock(m_Mutex);

m_DecoderState = STATE_ERROR;

break;

}

}

}

int FFDemux::DecodeOnePacket() {

int result = av_read_frame(m_AVFormatContext, m_Packet);

while(result == 0) {

if(m_DecoderState == STATE_STOP)

{

LOGCATE("FFDemux::DecodeOnePacket m_DecoderState is STATE_STOP");

break;

}

if(m_Packet->stream_index == m_StreamIndex) {

OnReceivePacket(m_Packet);

//LOGCATE("FFDemux::DecodeOnePacket packet size =%d", m_Packet->size);

//The packet is passed on as-is; nothing is decoded in this demo

}

av_packet_unref(m_Packet);

result = av_read_frame(m_AVFormatContext, m_Packet);

}


av_packet_unref(m_Packet);

return result;

}

void FFDemux::DoDemux(FFDemux *demux) {

LOGCATE("FFDemux::DoDemux");

do {

if(demux->InitDemux() != 0) {

break;

}

demux->DemuxLoop();

} while (false);

demux->DeInitDemux();

}

void FFDemux::DeInitDemux() {

LOGCATE("FFDemux::DeInitDemux");

if(m_Packet != nullptr) {

av_packet_free(&m_Packet);

m_Packet = nullptr;

}

if(m_AVFormatContext != nullptr) {

avformat_close_input(&m_AVFormatContext); // also frees the context and sets m_AVFormatContext to NULL

}

}

void FFDemux::OnReceivePacket(AVPacket *packet) {

if(m_MsgContext && m_PacketCallback)

m_PacketCallback(m_MsgContext, packet->data,packet->size);

}

FFBridge acts as the bridge between the C++ code and Java, adding a thin wrapper layer:

//

// Created by Administrator on 2022/4/14/014.

//

#include "FFBridge.h"

void FFBridge::Init(JNIEnv *jniEnv, jobject obj, char *url) {

jniEnv->GetJavaVM(&m_JavaVM);

m_JavaObj = jniEnv->NewGlobalRef(obj);

m_demux = new FFDemux();

m_demux->Init(url);

m_demux->SetMessageCallback(this, PostMessage);

m_demux->SetPacketCallback(this, PostPacket);

}

void FFBridge::Start() {

LOGCATE("FFBridge::Start");

if(m_demux)

m_demux->Start();

}

void FFBridge::UnInit() {

LOGCATE("FFBridge::UnInit");

if(m_demux) {

Stop();

delete m_demux;

m_demux = nullptr;

}

bool isAttach = false;

GetJNIEnv(&isAttach)->DeleteGlobalRef(m_JavaObj);

if(isAttach)

GetJavaVM()->DetachCurrentThread();

}

void FFBridge::Stop() {

LOGCATE("FFBridge::Stop");

if(m_demux)

{

m_demux->UnInit();

}

}

JNIEnv *FFBridge::GetJNIEnv(bool *isAttach) {

JNIEnv *env;

int status;

if (nullptr == m_JavaVM) {

LOGCATE("FFBridge::GetJNIEnv m_JavaVM == nullptr");

return nullptr;

}

*isAttach = false;

status = m_JavaVM->GetEnv((void **)&env, JNI_VERSION_1_4);

if (status != JNI_OK) {

status = m_JavaVM->AttachCurrentThread(&env, nullptr);

if (status != JNI_OK) {

LOGCATE("FFBridge::GetJNIEnv failed to attach current thread");

return nullptr;

}

*isAttach = true;

}

return env;

}

jobject FFBridge::GetJavaObj() {

return m_JavaObj;

}

JavaVM *FFBridge::GetJavaVM() {

return m_JavaVM;

}

void FFBridge::PostMessage(void *context, int msgType, float msgCode) {

if(context != nullptr)

{

FFBridge *player = static_cast<FFBridge *>(context);

bool isAttach = false;

JNIEnv *env = player->GetJNIEnv(&isAttach);

// LOGCATE("FFBridge::PostMessage env=%p", env);

if(env == nullptr)

return;

jobject javaObj = player->GetJavaObj();

jclass clazz = env->GetObjectClass(javaObj);

jmethodID mid = env->GetMethodID(clazz, JAVA_MESSAGE_EVENT_CALLBACK_API_NAME, "(IF)V");

env->DeleteLocalRef(clazz);

if(mid != nullptr)

env->CallVoidMethod(javaObj, mid, msgType, msgCode);

if(isAttach)

player->GetJavaVM()->DetachCurrentThread();

}

}

void FFBridge::PostPacket(void *context, uint8_t *buf,int size) {

if(context != nullptr)

{

FFBridge *player = static_cast<FFBridge *>(context);

bool isAttach = false;

JNIEnv *env = player->GetJNIEnv(&isAttach);

//LOGCATE("FFBridge::PostPacket env=%p", env);

if(env == nullptr)

return;

jobject javaObj = player->GetJavaObj();

jbyteArray array1 = env->NewByteArray(size);

env->SetByteArrayRegion(array1,0,size,(jbyte*)buf);

jclass clazz = env->GetObjectClass(javaObj);

jmethodID mid = env->GetMethodID(clazz, JAVA_PACKET_EVENT_CALLBACK_API_NAME, "([B)V");

env->DeleteLocalRef(clazz);

if(mid != nullptr)

env->CallVoidMethod(javaObj, mid, array1);

env->DeleteLocalRef(array1);

if(isAttach)

player->GetJavaVM()->DetachCurrentThread();

}

}
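FFDemux knows nothing about JNI. In `FFBridge::Init`, the bridge registers itself (`this`) as an opaque context together with the static functions `PostMessage` and `PostPacket`. FFDemux later calls those functions with that context, and they cast it back to `FFBridge*`. The minimal sketch below shows this C-style callback pattern without JNI. `Producer` and `Bridge` are hypothetical stand-ins for FFDemux and FFBridge. The static function is where FFBridge would attach the thread and call into Java.

```cpp
#include <cstdint>
#include <vector>

// Same shape as FFDemux's packet callback: opaque context plus packet data.
typedef void (*PacketCallback)(void* context, std::uint8_t* buf, int size);

// Hypothetical stand-in for FFDemux: stores the callback and fires it per packet.
class Producer {
public:
    void SetPacketCallback(void* context, PacketCallback cb) {
        context_ = context;
        callback_ = cb;
    }
    void Emit(std::uint8_t* buf, int size) {
        if (context_ && callback_)  // check the callback that is actually invoked
            callback_(context_, buf, size);
    }
private:
    void* context_ = nullptr;
    PacketCallback callback_ = nullptr;
};

// Hypothetical stand-in for FFBridge.
class Bridge {
public:
    void Attach(Producer& producer) { producer.SetPacketCallback(this, PostPacket); }
    const std::vector<int>& sizes() const { return sizes_; }
private:
    // Static "trampoline": recovers the object from the opaque context.
    static void PostPacket(void* context, std::uint8_t* buf, int size) {
        Bridge* self = static_cast<Bridge*>(context);
        (void)buf;  // FFBridge copies buf into a Java byte[] at this point
        self->sizes_.push_back(size);
    }
    std::vector<int> sizes_;
};
```

The producer stays free of JNI and FFBridge types, and swapping the Java side for something else only means registering a different context/function pair.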

All the JNI entry points are in native-lib.cpp:

#include <jni.h>

#include <string>

#include "FFBridge.h"

extern "C"

{

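#include <libavutil/time.h>

#include <libavcodec/avcodec.h>

#include <libavcodec/packet.h>

#include <libavutil/imgutils.h>

#include <libswscale/swscale.h>

#include <libavformat/avformat.h>

#include <libavfilter/avfilter.h> // defines LIBAVFILTER_VERSION used in GetVersion

#include <libswresample/swresample.h> // defines LIBSWRESAMPLE_VERSION used in GetVersion

#include <libavutil/opt.h>

}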

extern "C" JNIEXPORT jstring JNICALL

Java_com_qmcy_demux_MainActivity_stringFromJNI(

JNIEnv* env,

jobject /* this */) {

std::string hello = "Hello from C++";

return env->NewStringUTF(hello.c_str());

}

extern "C" JNIEXPORT jstring JNICALL

Java_com_qmcy_demux_MainActivity_GetVersion(

JNIEnv* env,

jobject /* this */) {

// std::string instead of a fixed 4 KB buffer: the configure string alone can be long

std::string info = "libavcodec : ";

info += AV_STRINGIFY(LIBAVCODEC_VERSION);

info += "\nlibavformat : ";

info += AV_STRINGIFY(LIBAVFORMAT_VERSION);

info += "\nlibavutil : ";

info += AV_STRINGIFY(LIBAVUTIL_VERSION);

info += "\nlibavfilter : ";

info += AV_STRINGIFY(LIBAVFILTER_VERSION);

info += "\nlibswresample : ";

info += AV_STRINGIFY(LIBSWRESAMPLE_VERSION);

info += "\nlibswscale : ";

info += AV_STRINGIFY(LIBSWSCALE_VERSION);

info += "\navcodec_configure : \n";

info += avcodec_configuration();

info += "\navcodec_license : ";

info += avcodec_license();

//LOGCATE("GetFFmpegVersion\n%s", info.c_str());

return env->NewStringUTF(info.c_str());

}

extern "C" JNIEXPORT jlong JNICALL Java_com_qmcy_demux_FFDemuxJava_native_1Init

(JNIEnv *env, jobject obj, jstring jurl)

{

const char* url = env->GetStringUTFChars(jurl, nullptr);

FFBridge *bridge = new FFBridge();

bridge->Init(env, obj, const_cast<char *>(url));

env->ReleaseStringUTFChars(jurl, url);

return reinterpret_cast<jlong>(bridge);

}

extern "C"

JNIEXPORT void JNICALL Java_com_qmcy_demux_FFDemuxJava_native_1Start

(JNIEnv *env, jobject obj, jlong handle)

{

if(handle != 0)

{

FFBridge *bridge = reinterpret_cast<FFBridge *>(handle);

bridge->Start();

}

}

extern "C"

JNIEXPORT void JNICALL Java_com_qmcy_demux_FFDemuxJava_native_1Stop

(JNIEnv *env, jobject obj, jlong handle)

{

if(handle != 0)

{

FFBridge *bridge = reinterpret_cast<FFBridge *>(handle);

bridge->Stop();

}

}

extern "C"

JNIEXPORT void JNICALL Java_com_qmcy_demux_FFDemuxJava_native_1UnInit

(JNIEnv *env, jobject obj, jlong handle)

{

if(handle != 0)

{

FFBridge *bridge = reinterpret_cast<FFBridge *>(handle);

bridge->UnInit();

delete bridge;

}

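}

I have always worked in C/C++, and my Java knowledge is still at the level of what I learned in university.

Android is even less familiar to me. Years ago, around 2015, I wrote a simple Android app for controlling Bluetooth from a phone. Back then I used Eclipse rather than Android Studio, and the two are quite different.

This entry-level demo is based on code by another CSDN blogger, 字节流动.

Some error handling is incomplete; after all, it is only a demo. The goal is to get familiar with JNI, with how Java and C++ interact, and with how callbacks are handled.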
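One JNI detail visible in native-lib.cpp is how the exported function names are formed. They follow the JNI rule `Java_` + the fully qualified class name (with `.` or `/` replaced by `_`) + `_` + the method name. An underscore inside a Java name is escaped as `_1`. That is why `native_Init` becomes `Java_com_qmcy_demux_FFDemuxJava_native_1Init`. The hypothetical helper below builds such names for ASCII identifiers only. It ignores the other escapes in the JNI spec (`_0xxxx`, `_2`, `_3`) and the long form used for overloaded methods.

```cpp
#include <string>

// Builds the short JNI symbol name for a native method (ASCII identifiers only).
// Hypothetical helper for illustration.
std::string JniSymbolName(const std::string& className, const std::string& method) {
    auto escape = [](const std::string& in) {
        std::string out;
        for (char c : in) {
            if (c == '_') out += "_1";                  // '_' inside a Java name
            else if (c == '.' || c == '/') out += '_';  // package separator
            else out += c;
        }
        return out;
    };
    return "Java_" + escape(className) + "_" + escape(method);
}
```

A mismatch between this name and the `extern "C"` function in native-lib.cpp shows up at runtime as an `UnsatisfiedLinkError`, not at build time.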

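For reference, the same camera stream can also be relayed to an RTMP server from the command line. This command uses TCP for RTSP, copies the video without re-encoding, and drops the audio:

ffmpeg -rtsp_transport tcp -i rtsp://uer:gd123456@192.168.2.124:554/Streaming/Channels/101 -vcodec copy -an -f flv rtmp://192.168.0.209:1935/live/0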
