Fault description

calico-kube-controllers is unhealthy and keeps restarting.

The logs are as follows:

2023-02-21 01:26:47.085 [INFO][1] main.go 92: Loaded configuration from environment config=&config.Config{LogLevel:"info", WorkloadEndpointWorkers:1, ProfileWorkers:1, PolicyWorkers:1, NodeWorkers:1, Kubeconfig:"", DatastoreType:"kubernetes"}

W0221 01:26:47.086980 1 client_config.go:615] Neither --kubeconfig nor --master was specified. Using the inClusterConfig. This might not work.

2023-02-21 01:26:47.087 [INFO][1] main.go 113: Ensuring Calico datastore is initialized

2023-02-21 01:26:47.106 [INFO][1] main.go 153: Getting initial config snapshot from datastore

2023-02-21 01:26:47.120 [INFO][1] main.go 156: Got initial config snapshot

2023-02-21 01:26:47.120 [INFO][1] watchersyncer.go 89: Start called

2023-02-21 01:26:47.120 [INFO][1] main.go 173: Starting status report routine

2023-02-21 01:26:47.120 [INFO][1] main.go 182: Starting Prometheus metrics server on port 9094

2023-02-21 01:26:47.120 [INFO][1] main.go 418: Starting controller ControllerType="Node"

2023-02-21 01:26:47.120 [INFO][1] watchersyncer.go 127: Sending status update Status=wait-for-ready

2023-02-21 01:26:47.120 [INFO][1] node_syncer.go 65: Node controller syncer status updated: wait-for-ready

2023-02-21 01:26:47.120 [INFO][1] watchersyncer.go 147: Starting main event processing loop

2023-02-21 01:26:47.120 [INFO][1] watchercache.go 174: Full resync is required ListRoot="/calico/ipam/v2/assignment/"

2023-02-21 01:26:47.120 [INFO][1] node_controller.go 143: Starting Node controller

2023-02-21 01:26:47.121 [INFO][1] watchercache.go 174: Full resync is required ListRoot="/calico/resources/v3/projectcalico.org/nodes"

2023-02-21 01:26:47.121 [INFO][1] resources.go 349: Main client watcher loop

2023-02-21 01:26:47.121 [ERROR][1] status.go 138: Failed to write readiness file: open /status/status.json: permission denied

2023-02-21 01:26:47.121 [WARNING][1] status.go 66: Failed to write status error=open /status/status.json: permission denied

2023-02-21 01:26:47.121 [ERROR][1] status.go 138: Failed to write readiness file: open /status/status.json: permission denied

2023-02-21 01:26:47.121 [WARNING][1] status.go 66: Failed to write status error=open /status/status.json: permission denied

2023-02-21 01:26:47.124 [INFO][1] watchercache.go 271: Sending synced update ListRoot="/calico/ipam/v2/assignment/"

2023-02-21 01:26:47.125 [INFO][1] watchersyncer.go 127: Sending status update Status=resync

2023-02-21 01:26:47.125 [INFO][1] node_syncer.go 65: Node controller syncer status updated: resync

2023-02-21 01:26:47.125 [INFO][1] watchersyncer.go 209: Received InSync event from one of the watcher caches

2023-02-21 01:26:47.125 [ERROR][1] status.go 138: Failed to write readiness file: open /status/status.json: permission denied

2023-02-21 01:26:47.125 [WARNING][1] status.go 66: Failed to write status error=open /status/status.json: permission denied

2023-02-21 01:26:47.129 [INFO][1] watchercache.go 271: Sending synced update ListRoot="/calico/resources/v3/projectcalico.org/nodes"

2023-02-21 01:26:47.129 [ERROR][1] status.go 138: Failed to write readiness file: open /status/status.json: permission denied

2023-02-21 01:26:47.129 [WARNING][1] status.go 66: Failed to write status error=open /status/status.json: permission denied

2023-02-21 01:26:47.129 [INFO][1] watchersyncer.go 209: Received InSync event from one of the watcher caches

2023-02-21 01:26:47.129 [INFO][1] watchersyncer.go 221: All watchers have sync'd data - sending data and final sync

2023-02-21 01:26:47.129 [INFO][1] watchersyncer.go 127: Sending status update Status=in-sync

2023-02-21 01:26:47.129 [INFO][1] node_syncer.go 65: Node controller syncer status updated: in-sync

2023-02-21 01:26:47.137 [INFO][1] hostendpoints.go 90: successfully synced all hostendpoints

2023-02-21 01:26:47.221 [INFO][1] node_controller.go 159: Node controller is now running

2023-02-21 01:26:47.226 [INFO][1] ipam.go 69: Synchronizing IPAM data

2023-02-21 01:26:47.236 [INFO][1] ipam.go 78: Node and IPAM data is in sync

The problem is located here:

Failed to write status error=open /status/status.json: permission denied
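With a log this long, filtering out the INFO lines makes the failure easier to spot. The following is a minimal sketch; the helper name filter_problems is hypothetical, and it assumes the log uses the [ERROR] and [WARNING] level tags seen above.

```shell
# Keep only ERROR and WARNING lines from Calico-style log text read on stdin.
# filter_problems is an illustrative helper name, not part of any tool.
filter_problems() {
  grep -E '\[(ERROR|WARNING)\]'
}

# Example using two lines copied from the log above. In practice the input
# could be piped from: kubectl logs -n kube-system <pod-name>
printf '%s\n' \
  '2023-02-21 01:26:47.121 [INFO][1] resources.go 349: Main client watcher loop' \
  '2023-02-21 01:26:47.121 [ERROR][1] status.go 138: Failed to write readiness file: open /status/status.json: permission denied' \
  | filter_problems
```

Only the permission-denied line survives the filter, which matches the error quoted above.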

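Enter the container to check the directory

I tried to exec into the container, but the image does not even include common commands such as cat and ls, so the problem could not be inspected from inside the container.

Check the configuration

I inspected the pod's configuration and compared it with other clusters. There were no differences; the configurations are identical.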

[grg@i-A8259010 ~]$ kubectl describe pod calico-kube-controllers-9f49b98f6-njs2f -n kube-system

Name: calico-kube-controllers-9f49b98f6-njs2f

Namespace: kube-system

Priority: 2000000000

Priority Class Name: system-cluster-critical

Node: 10.254.39.2/10.254.39.2

Start Time: Thu, 16 Feb 2023 11:14:35 +0800

Labels: k8s-app=calico-kube-controllers

pod-template-hash=9f49b98f6

Annotations: cni.projectcalico.org/podIP: 10.244.29.73/32

cni.projectcalico.org/podIPs: 10.244.29.73/32

Status: Running

IP: 10.244.29.73

IPs:

IP: 10.244.29.73

Controlled By: ReplicaSet/calico-kube-controllers-9f49b98f6

Containers:

calico-kube-controllers:

Container ID: docker://21594e3517a3fc8ffc5224496cec373117138acf5417d9a335a1c5e80e0c3802

Image: registry.custom.local:12480/kubeadm-ha/calico_kube-controllers:v3.19.1

Image ID: docker-pullable://registry.cn-beijing.aliyuncs.com/dotbalo/kube-controllers@sha256:2ff71ba65cd7fe10e183ad80725ad3eafb59899d6f1b2610446b90c84bf2425a

Port: <none>

Host Port: <none>

State: Waiting

Reason: CrashLoopBackOff

Last State: Terminated

Reason: Error

Exit Code: 2

Started: Tue, 21 Feb 2023 09:34:06 +0800

Finished: Tue, 21 Feb 2023 09:35:15 +0800

Ready: False

Restart Count: 1940

Liveness: exec [/usr/bin/check-status -l] delay=10s timeout=1s period=10s #success=1 #failure=6

Readiness: exec [/usr/bin/check-status -r] delay=0s timeout=1s period=10s #success=1 #failure=3

Environment:

ENABLED_CONTROLLERS: node

DATASTORE_TYPE: kubernetes

Mounts:

/var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-55jbn (ro)

Conditions:

Type Status

Initialized True

Ready False

ContainersReady False

PodScheduled True

Volumes:

kube-api-access-55jbn:

Type: Projected (a volume that contains injected data from multiple sources)

TokenExpirationSeconds: 3607

ConfigMapName: kube-root-ca.crt

ConfigMapOptional: <nil>

DownwardAPI: true

QoS Class: BestEffort

Node-Selectors: kubernetes.io/os=linux

Tolerations: CriticalAddonsOnly op=Exists

node-role.kubernetes.io/master:NoSchedule

node.kubernetes.io/not-ready:NoExecute op=Exists for 300s

node.kubernetes.io/unreachable:NoExecute op=Exists for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Warning Unhealthy 31m (x15164 over 4d22h) kubelet Readiness probe failed: Failed to read status file /status/status.json: unexpected end of JSON input

Warning BackOff 6m23s (x23547 over 4d22h) kubelet Back-off restarting failed container

Warning Unhealthy 79s (x11571 over 4d22h) kubelet Liveness probe failed: Failed to read status file /status/status.json: unexpected end of JSON input

Compare the image

I checked the image version. It matches the other clusters, so the image is not the problem.

Image: registry.custom.local:12480/kubeadm-ha/calico_kube-controllers:v3.19.1

Image ID: docker-pullable://registry.cn-beijing.aliyuncs.com/dotbalo/kube-controllers@sha256:2ff71ba65cd7fe10e183ad80725ad3eafb59899d6f1b2610446b90c84bf2425a

Check for configuration differences from the other clusters

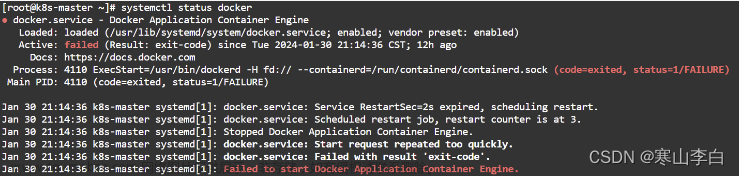

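Comparing this machine's setup with the other clusters showed one difference: Docker on this machine was already installed beforehand and is version 19, while the other machines have a freshly installed version 20.

Solution

Assuming Docker cannot be reinstalled:

Restarting the pod had no effect.

Searching Baidu turned up no relevant information.

Adjust the calico-kube-controllers configuration

The configuration file is /etc/kubernetes/plugins/network-plugin/calico-typha.yaml

Because the /status directory cannot be written to, we add a volume mapping for it.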
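The original post does not show the manifest change itself. The following is a minimal sketch of what such a volume mapping might look like in the calico-kube-controllers Deployment. It assumes a hostPath volume backed by the /var/run/calico/status directory used in the commands of this article; the volume name status is hypothetical.

```yaml
# Excerpt of the calico-kube-controllers Deployment in the kube-system namespace.
# Assumption: the volume name "status" and the use of hostPath are illustrative;
# the host path is taken from the mkdir command in this article.
spec:
  template:
    spec:
      containers:
        - name: calico-kube-controllers
          volumeMounts:
            - name: status
              mountPath: /status   # directory the controller writes status.json into
      volumes:
        - name: status
          hostPath:
            path: /var/run/calico/status
```

Since a hostPath directory lives on a single node, the directory would need to exist with writable permissions on whichever node the pod is scheduled to.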

Apply the configuration

mkdir /var/run/calico/status

chmod 777 /var/run/calico/status

kubectl apply -f /etc/kubernetes/plugins/network-plugin/calico-typha.yaml

At this point the system recovered.
