该实验使用 Azure CosmosDB,这个实验的点在于:

1:使用了 cosmicworks 生成了实验数据

2:弄清楚cosmosDB 的 accout Name 与 database id 和 container id 关系。

3:创建了 ADF 的连接和任务,让数据从 cosmicworks 数据库的 products 容器,迁移到 cosmicworks数据库的 flatproducts 容器。

实验来自于:练习:使用 Azure 数据工厂迁移现有数据 - Training | Microsoft Learn

Migrate existing data using Azure Data Factory

In Azure Data Factory, Azure Cosmos DB is supported as a source of data ingest and as a target (sink) of data output.

In this lab, we will populate Azure Cosmos DB using a helpful command-line utility and then use Azure Data Factory to move a subset of data from one container to another.

Create and seed your Azure Cosmos DB SQL API account

You will use a command-line utility that creates a cosmicworks database and a products container at 4,000 request units per second (RU/s). Once created, you will adjust the throughput down to 400 RU/s.

To accompany the products container, you will create a flatproducts container manually that will be the target of the ETL transformation and load operation at the end of this lab.

-

In a new web browser window or tab, navigate to the Azure portal (

portal.azure.com). -

Sign into the portal using the Microsoft credentials associated with your subscription.

-

Select + Create a resource, search for Cosmos DB, and then create a new Azure Cosmos DB SQL API account resource with the following settings, leaving all remaining settings to their default values:

Setting Value Subscription Your existing Azure subscription Resource group Select an existing or create a new resource group Account Name Enter a globally unique name Location Choose any available region Capacity mode Provisioned throughput Apply Free Tier Discount Do Not Apply Limit the total amount of throughput that can be provisioned on this account Unchecked 📝 Your lab environments may have restrictions preventing you from creating a new resource group. If that is the case, use the existing pre-created resource group.

-

Wait for the deployment task to complete before continuing with this task.

-

Go to the newly created Azure Cosmos DB account resource and navigate to the Keys pane.

-

This pane contains the connection details and credentials necessary to connect to the account from the SDK. Specifically:

-

Record the value of the URI field. You will use this endpoint value later in this exercise.

-

Record the value of the PRIMARY KEY field. You will use this key value later in this exercise.

-

-

Close your web browser window or tab.

-

Start Visual Studio Code.

📝 If you are not already familiar with the Visual Studio Code interface, review the Get Started guide for Visual Studio Code

-

In Visual Studio Code, open the Terminal menu and then select New Terminal to open a new terminal instance.

-

Install the cosmicworks command-line tool for global use on your machine.

dotnet tool install --global cosmicworks💡 This command may take a couple of minutes to complete. This command will output the warning message (*Tool 'cosmicworks' is already installed') if you have already installed the latest version of this tool in the past.

-

Run cosmicworks to seed your Azure Cosmos DB account with the following command-line options:

Option Value --endpoint The endpoint value you copied earlier in this lab --key The key value you coped earlier in this lab --datasets product cosmicworks --endpoint <cosmos-endpoint> --key <cosmos-key> --datasets product📝 For example, if your endpoint is: https://dp420.documents.azure.com:443/ and your key is: fDR2ci9QgkdkvERTQ==, then the command would be:

cosmicworks --endpoint https://dp420.documents.azure.com:443/ --key fDR2ci9QgkdkvERTQ== --datasets product -

Wait for the cosmicworks command to finish populating the account with a database, container, and items.

-

Close the integrated terminal.

-

Close Visual Studio Code.

-

In a new web browser window or tab, navigate to the Azure portal (

portal.azure.com). -

Sign into the portal using the Microsoft credentials associated with your subscription.

-

Select Resource groups, then select the resource group you created or viewed earlier in this lab, and then select the Azure Cosmos DB account resource you created in this lab.

-

Within the Azure Cosmos DB account resource, navigate to the Data Explorer pane.

-

In the Data Explorer, expand the cosmicworks database node, expand the products container node, and then select Items.

-

Observe and select the various JSON items in the products container. These are the items created by the command-line tool used in previous steps.

-

Select the Scale & Settings node. In the Scale & Settings tab, select Manual, update the required throughput setting from 4000 RU/s to 400 RU/s and then Save your changes**.

-

In the Data Explorer pane, select New Container.

-

In the New Container popup, enter the following values for each setting, and then select OK:

Setting Value Database id Use existing | cosmicworks Container id flatproductsPartition key /categoryContainer throughput (autoscale) Manual RU/s 400 -

Back in the Data Explorer pane, expand the cosmicworks database node and then observe the flatproducts container node within the hierarchy.

-

Return to the Home of the Azure portal.

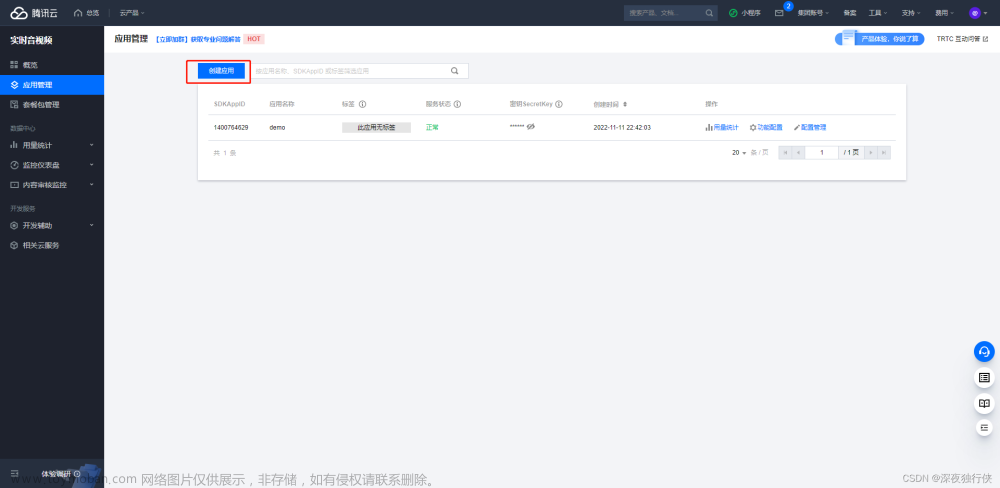

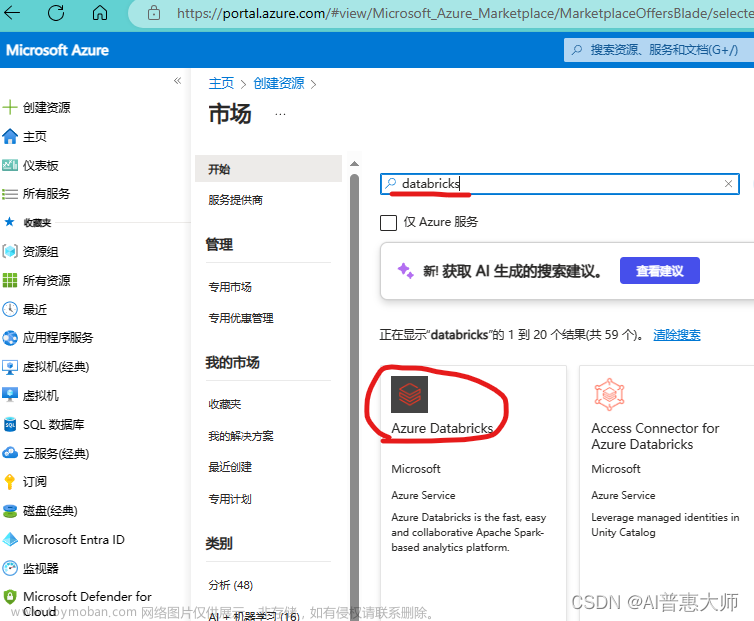

Create Azure Data Factory resource

Now that the Azure Cosmos DB SQL API resources are in place, you will create an Azure Data Factory resource and configure all of the necessary components and connections to perform a one-time data movement from one SQL API container to another to extract data, transform it, and load it to another SQL API container.

-

Select + Create a resource, search for Data Factory, and then create a new Azure Data Factory resource with the following settings, leaving all remaining settings to their default values:

Setting Value Subscription Your existing Azure subscription Resource group Select an existing or create a new resource group Name Enter a globally unique name Region Choose any available region Version V2 Git configuration Configure Git later 📝 Your lab environments may have restrictions preventing you from creating a new resource group. If that is the case, use the existing pre-created resource group.

-

Wait for the deployment task to complete before continuing with this task.

-

Go to the newly created Azure Data Factory resource and select Open Azure Data Factory Studio.

💡 Alternatively, you can navigate to (

adf.azure.com/home), select your newly created Data Factory resource, and then select the home icon. -

From the home screen. Select the Ingest option to begin the quick wizard to perform a one-time copy data at scale operation and move to the Properties step of the wizard.

-

Starting with the Properties step of the wizard, in the Task type section, select Built-in copy task.

-

In the Task cadence or task schedule section, select Run once now and then select Next to move to the Source step of the wizard.

-

In the Source step of the wizard, in the Source type list, select Azure Cosmos DB (SQL API).

-

In the Connection section, select + New connection.

-

In the New connection (Azure Cosmos DB (SQL API)) popup, configure the new connection with the following values, and then select Create:

Setting Value Name CosmosSqlConnConnect via integration runtime AutoResolveIntegrationRuntime Authentication method Account key | Connection string Account selection method From Azure subscription Azure subscription Your existing Azure subscription Azure Cosmos DB account name Your existing Azure Cosmos DB account name you chose earlier in this lab Database name cosmicworks -

Back in the Source data store section, within the Source tables section, select Use query.

-

In the Table name list, select products.

-

In the Query editor, delete the existing content and enter the following query:

SELECT p.name, p.categoryName as category, p.price FROM products p -

Select Preview data to test the query's validity. Select Next to move to the Target step of the wizard.

-

In the Target step of the wizard, in the Target type list, select Azure Cosmos DB (SQL API).

-

In the Connection list, select CosmosSqlConn.

-

In the Target list, select flatproducts and then select Next to move to the Settings step of the wizard.

-

In the Settings step of the wizard, in the Task name field, enter

FlattenAndMoveData. -

Leave all remaining fields to their default blank values and then select Next to move to the final step of the wizard.

-

Review the Summary of the steps you have selected in the wizard and then select Next.

-

Observe the various steps in the deployment. When the deployment has finished, select Finish.

-

Close your web browser window or tab.

-

In a new web browser window or tab, navigate to the Azure portal (

portal.azure.com). -

Sign into the portal using the Microsoft credentials associated with your subscription.

-

Select Resource groups, then select the resource group you created or viewed earlier in this lab, and then select the Azure Cosmos DB account resource you created in this lab.

-

Within the Azure Cosmos DB account resource, navigate to the Data Explorer pane.

-

In the Data Explorer, expand the cosmicworks database node, select the flatproducts container node, and then select New SQL Query.

-

Delete the contents of the editor area.

-

Create a new SQL query that will return all documents where the name is equivalent to HL Headset:

SELECT p.name, p.category, p.price FROM products p WHERE p.name = 'HL Headset' -

Select Execute Query.文章来源:https://www.toymoban.com/news/detail-439606.html

-

Observe the results of the query.文章来源地址https://www.toymoban.com/news/detail-439606.html

到了这里,关于Azure动手实验 - 使用Azure Data Factory 迁移数据的文章就介绍完了。如果您还想了解更多内容,请在右上角搜索TOY模板网以前的文章或继续浏览下面的相关文章,希望大家以后多多支持TOY模板网!

![[mysql]数据迁移之data目录复制方法](https://imgs.yssmx.com/Uploads/2024/02/612973-1.png)