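This article describes how to use Prometheus to monitor an Elasticsearch instance that runs outside a k8s cluster. Prometheus here is deployed inside the k8s cluster with the Prometheus Operator. Compared with running Prometheus as a plain process or in docker, this setup is more involved: you cannot add a scrape job by editing the configuration file directly, and instead have to configure it through k8s resources.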

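The configuration steps are:

1. Create an Endpoints object and a Service so that the k8s cluster can reach the service outside the cluster (here, the external Elasticsearch service).

2. Create a Deployment for elasticsearch_exporter that connects to the Service from step 1 to collect monitoring data, and set the startup arguments of the elasticsearch_exporter container.

3. Create a Service for elasticsearch_exporter so that Prometheus can discover and register it.

4. Create a ServiceMonitor to register the monitored resource as a Prometheus job.

5. Configure a PrometheusRule to add alerting rules.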

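Create the Endpoints and Service to connect to the service outside the cluster

Create the Endpoints (ep) and Service (svc) from a YAML file. Once they exist, access port 9200 on the Service's cluster IP to check whether Elasticsearch is reachable through the Service.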

[root@VM-12-8-centos elk]# cat 1-es-endpoint-svr.yml
kind: Endpoints
apiVersion: v1
metadata:
  name: external-es
  labels:
    app: external-es
subsets:
  - addresses:
      - ip: 10.0.20.10
    ports:
      - port: 9200
        name: es
        protocol: TCP
---
apiVersion: v1
kind: Service
metadata:
  name: external-es
  labels:
    app: external-es
spec:
  type: ClusterIP
  ports:
    - port: 9200
      protocol: TCP
      targetPort: 9200
      name: es

[root@VM-12-8-centos elk]# kubectl apply -f 1-es-endpoint-svr.yml
endpoints/external-es created
service/external-es created
[root@VM-12-8-centos elk]# kubectl get svc external-es
NAME          TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)    AGE
external-es   ClusterIP   10.1.250.204   <none>        9200/TCP   77s
[root@VM-12-8-centos elk]# kubectl get ep external-es
NAME          ENDPOINTS         AGE
external-es   10.0.20.10:9200   91s
[root@VM-12-8-centos elk]# curl 10.1.250.204:9200
{
  "name" : "bVkU9LX",
  "cluster_name" : "elasticsearch",
  "cluster_uuid" : "D-rkPesCRMalPQSi8nx8Hg",
  "version" : {
    "number" : "6.8.23",
    "build_flavor" : "default",
    "build_type" : "rpm",
    "build_hash" : "4f67856",
    "build_date" : "2022-01-06T21:30:50.087716Z",
    "build_snapshot" : false,
    "lucene_version" : "7.7.3",
    "minimum_wire_compatibility_version" : "5.6.0",
    "minimum_index_compatibility_version" : "5.0.0"
  },
  "tagline" : "You Know, for Search"

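}

Create the Elasticsearch exporter to collect monitoring data from the Elasticsearch cluster

Create a Deployment for the Elasticsearch Exporter. It collects monitoring data from the es cluster and exposes a metrics endpoint for Prometheus to scrape.

Once the pod is running, access port 9114 to test whether the metrics data can be fetched.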

[root@VM-12-8-centos elk]# cat 2-es-deploy-svc.yml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: elasticsearch-exporter
  name: elasticsearch-exporter
  namespace: monitoring
spec:
  replicas: 1
  selector:
    matchLabels:
      app: elasticsearch-exporter
  template:
    metadata:
      annotations:
        prometheus.io/scrape: 'true'
        prometheus.io/port: '9114'
        prometheus.io/path: 'metrics'
      labels:
        app: elasticsearch-exporter
    spec:
      containers:
        - command:
            - '/bin/elasticsearch_exporter'
            # ClusterIP of the external-es Service created in step 1
            - --es.uri=http://10.1.250.204:9200
            - --es.all
          image: prometheuscommunity/elasticsearch-exporter:v1.5.0
          imagePullPolicy: IfNotPresent
          name: elasticsearch-exporter
          ports:
            - containerPort: 9114
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: elasticsearch-exporter
  name: elasticsearch-exporter-svc
  namespace: monitoring
spec:
  ports:
    # port name "http" is what the ServiceMonitor refers to
    - name: http
      port: 9114
      protocol: TCP
      targetPort: 9114
  selector:
    app: elasticsearch-exporter
  type: ClusterIP

[root@VM-12-8-centos elk]# kubectl apply -f 2-es-deploy-svc.yml
deployment.apps/elasticsearch-exporter created
service/elasticsearch-exporter-svc created
[root@VM-12-8-centos elk]# kubectl get deploy -n monitoring elasticsearch-exporter
NAME                     READY   UP-TO-DATE   AVAILABLE   AGE
elasticsearch-exporter   1/1     1            1           7s
[root@VM-12-8-centos elk]# kubectl get po -n monitoring
NAME                                     READY   STATUS    RESTARTS   AGE
elasticsearch-exporter-db8d85955-xjv4k   1/1     Running   0          15s
[root@VM-12-8-centos elk]# kubectl get svc -n monitoring elasticsearch-exporter-svc
NAME                         TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)    AGE
elasticsearch-exporter-svc   ClusterIP   10.1.24.20   <none>        9114/TCP   25s
[root@VM-12-8-centos elk]# curl 10.1.24.20:9114
<html>
<head><title>Elasticsearch Exporter</title></head>
<body>
<h1>Elasticsearch Exporter</h1>
<p><a href="/metrics">Metrics</a></p>
</body>
</html>
[root@VM-12-8-centos elk]# curl 10.1.24.20:9114/metrics
# HELP elasticsearch_breakers_estimated_size_bytes Estimated size in bytes of breaker
# TYPE elasticsearch_breakers_estimated_size_bytes gauge
elasticsearch_breakers_estimated_size_bytes{breaker="accounting",cluster="elasticsearch",es_client_node="true",es_data_node="true",es_ingest_node="true",es_master_node="true",host="10.0.20.10",name="bVkU9LX"} 8.361645e+06
elasticsearch_breakers_estimated_size_bytes{breaker="fielddata",cluster="elasticsearch",es_client_node="true",es_data_node="true",es_ingest_node="true",es_master_node="true",host="10.0.20.10",name="bVkU9LX"} 105656
......
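The raw /metrics output is long, so it can help to summarize which metric families the exporter actually returns before wiring it into Prometheus. The following is a minimal sketch and not part of the original setup: metric_summary is a hypothetical helper name, and it assumes awk and sort are available on the host where curl runs.

```shell
#!/bin/sh
# Summarize Prometheus text-format metrics read from stdin.
# Prints "<sample count> <metric name>" for every metric whose name
# starts with the given prefix (default: elasticsearch_).
metric_summary() {
  prefix="${1:-elasticsearch_}"
  awk -v p="$prefix" '
    /^#/ || NF == 0 { next }            # skip HELP/TYPE comments and blank lines
    {
      name = $0
      sub(/[{ ].*/, "", name)           # keep only the metric name, drop labels and value
      if (index(name, p) == 1) count[name]++
    }
    END { for (n in count) print count[n], n }
  ' | sort -k2
}

# Example usage against the exporter Service shown above:
#   curl -s 10.1.24.20:9114/metrics | metric_summary elasticsearch_cluster_health
```

If the summary comes back empty for the elasticsearch_ prefix, the exporter is running but is probably not reaching Elasticsearch, which is worth checking before moving on to the ServiceMonitor.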

Create a ServiceMonitor to register the exporter with Prometheus

The corresponding YAML file is as follows:

[root@VM-12-8-centos elk]# vim 3-servicemonitor.yml
apiVersion: monitoring.coreos.com/v1
kind: ServiceMonitor
metadata:
  labels:
    app: elasticsearch-exporter
  name: elasticsearch-exporter
  namespace: monitoring
spec:
  endpoints:
    - honorLabels: true
      interval: 1m
      path: /metrics
      port: http
      scheme: http
      params:
        target:
          - '10.1.24.20:9114'
      relabelings:
        - sourceLabels: [__param_target]
          targetLabel: instance
  namespaceSelector:
    matchNames:
      - monitoring
  selector:
    matchLabels:
      app: elasticsearch-exporter

[root@VM-12-8-centos elk]# kubectl apply -f 3-servicemonitor.yml

servicemonitor.monitoring.coreos.com/elasticsearch-exporter created

[root@VM-12-8-centos elk]# kubectl get servicemonitor -n monitoring elasticsearch-exporter

NAME AGE

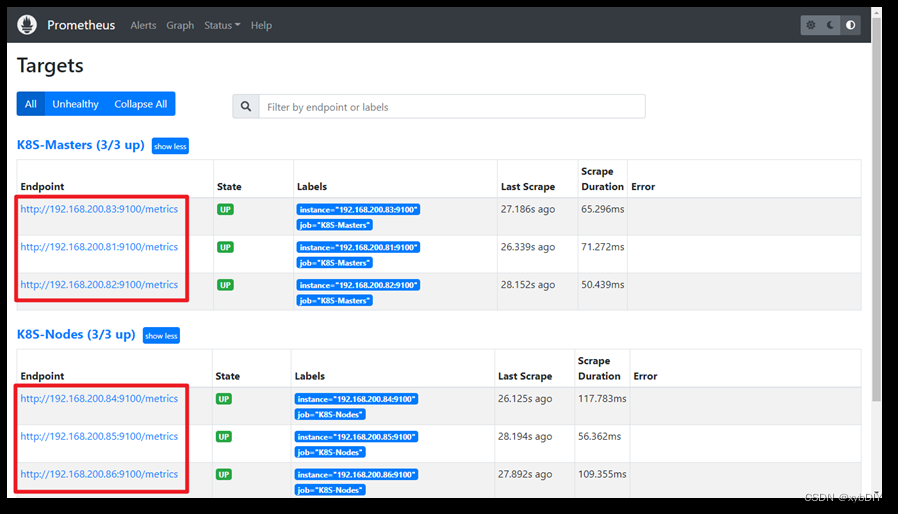

elasticsearch-exporter 13s创建好后,然后再到Prometheus界面查看是否又es的监控项生成
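The same check can be made without the UI through the Prometheus HTTP API (/api/v1/query). The sketch below rests on assumptions that are not in the original: that the Prometheus Service is prometheus-k8s on port 9090 in the monitoring namespace (the kube-prometheus default), and that the scrape job is named after the exporter Service (elasticsearch-exporter-svc), which is what the operator does when no jobLabel is set. count_up_targets is a hypothetical helper that counts how many series in an instant-query response currently have the value 1.

```shell
#!/bin/sh
# Count the series whose sample value is "1" in a Prometheus instant-query
# JSON response read from stdin (for example the result of an up{...} query).
count_up_targets() {
  { grep -o '"value":\[[0-9.e+]*,"1"]' || true; } | wc -l | tr -d ' '
}

# Example usage (Service name, port and job name are assumptions):
#   kubectl -n monitoring port-forward svc/prometheus-k8s 9090:9090 &
#   curl -sG 'http://127.0.0.1:9090/api/v1/query' \
#     --data-urlencode 'query=up{job="elasticsearch-exporter-svc"}' | count_up_targets
```

A result of 0 suggests that Prometheus has not picked up the ServiceMonitor yet, or that the job name differs in your installation; the Targets page in the UI shows the actual job label.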

Add alerting rules

Configure a PrometheusRule to add the alerting rules.
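One rule in the manifest that follows, es-ElasticsearchDiskOutOfSpace, compares the percentage of free space against 10, so it fires when disk usage is above 90%. A small worked sketch of that arithmetic, using made-up byte counts and the hypothetical helper names free_pct and disk_alert_would_fire:

```shell
#!/bin/sh
# Percentage of free disk space, mirroring the rule expression
#   available_bytes / size_bytes * 100
free_pct() {
  awk -v avail="$1" -v size="$2" 'BEGIN { printf "%.1f\n", avail / size * 100 }'
}

# Exits 0 when the free percentage is below 10, i.e. when the rule condition holds.
disk_alert_would_fire() {
  awk -v avail="$1" -v size="$2" 'BEGIN { exit !(avail / size * 100 < 10) }'
}

# Illustrative numbers only: 5 GiB free on a 100 GiB data path.
free_pct 5368709120 107374182400
if disk_alert_would_fire 5368709120 107374182400; then
  echo "free space below 10%: the rule condition holds"
fi
```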

[root@VM-12-8-centos elk]# cat 4-prometheus-rules.yml
apiVersion: monitoring.coreos.com/v1
kind: PrometheusRule
metadata:
  labels:
    prometheus: k8s
    role: alert-rules
  name: elasticsearch-exporter-rules
  namespace: monitoring
spec:
  groups:
    - name: elasticsearch-exporter
      rules:
        - alert: es-ElasticsearchHealthyNodes
          expr: elasticsearch_cluster_health_number_of_nodes < 1
          for: 0m
          labels:
            severity: critical
          annotations:
            summary: Elasticsearch Healthy Nodes (instance {{ $labels.instance }})
            description: "Missing node in Elasticsearch cluster\n VALUE = {{ $value }}\n LABELS = {{ $labels }}"
        - alert: es-ElasticsearchClusterRed
          expr: elasticsearch_cluster_health_status{color="red"} == 1
          for: 0m
          labels:
            severity: critical
          annotations:
            summary: Elasticsearch Cluster Red (instance {{ $labels.instance }})
            description: "Elastic Cluster Red status\n VALUE = {{ $value }}\n LABELS = {{ $labels }}"
        - alert: es-ElasticsearchClusterYellow
          expr: elasticsearch_cluster_health_status{color="yellow"} == 1
          for: 0m
          labels:
            severity: warning
          annotations:
            summary: Elasticsearch Cluster Yellow (instance {{ $labels.instance }})
            description: "Elastic Cluster Yellow status\n VALUE = {{ $value }}\n LABELS = {{ $labels }}"
        - alert: es-ElasticsearchDiskOutOfSpace
          expr: elasticsearch_filesystem_data_available_bytes / elasticsearch_filesystem_data_size_bytes * 100 < 10
          for: 0m
          labels:
            severity: critical
          annotations:
            summary: Elasticsearch disk out of space (instance {{ $labels.instance }})
            description: "The disk usage is over 90%\n VALUE = {{ $value }}\n LABELS = {{ $labels }}"
        - alert: es-ElasticsearchHeapUsageTooHigh
          expr: (elasticsearch_jvm_memory_used_bytes{area="heap"} / elasticsearch_jvm_memory_max_bytes{area="heap"}) * 100 > 90
          for: 2m
          labels:
            severity: critical
          annotations:
            summary: Elasticsearch Heap Usage Too High (instance {{ $labels.instance }})
            description: "The heap usage is over 90%\n VALUE = {{ $value }}\n LABELS = {{ $labels }}"

        - alert: ElasticsearchNoNewDocuments
          expr: increase(elasticsearch_indices_docs{es_data_node="true"}[200m]) < 1
          for: 0m
          labels:
            severity: warning
          annotations:
            summary: Elasticsearch no new documents (instance {{ $labels.instance }})
            description: "No new documents for 200 min!\n VALUE = {{ $value }}\n LABELS = {{ $labels }}"

Alert test

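Because my test environment has only a single Elasticsearch node, replica shards cannot be allocated, so the cluster status is yellow and the es-ElasticsearchClusterYellow rule fires.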
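The yellow state can also be confirmed directly against Elasticsearch through its cluster health API (GET /_cluster/health), independent of the exporter. A minimal sketch, where es_health_status is a hypothetical helper that pulls the status field out of the JSON with sed (a JSON-aware tool such as jq would be more robust if it is installed):

```shell
#!/bin/sh
# Print the "status" field (green, yellow or red) from a cluster health
# JSON document read from stdin.
es_health_status() {
  sed -n 's/.*"status"[[:space:]]*:[[:space:]]*"\([a-z]*\)".*/\1/p' | head -n 1
}

# Example usage against the external-es Service created earlier:
#   curl -s http://10.1.250.204:9200/_cluster/health | es_health_status
```

To see which shards are behind the yellow status, the _cat/shards API (for example curl -s '10.1.250.204:9200/_cat/shards?v') lists replica shards in the UNASSIGNED state.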

Checking the UI confirms that the alert fired.

The alert email was received as well.

Source: https://www.toymoban.com/news/detail-443378.html
