Function definition

torch.nn.functional.normalize(input, p=2.0, dim=1, eps=1e-12, out=None)

# type: (Tensor, float, int, float, Optional[Tensor]) -> Tensor

For each subtensor v taken along dimension dim, the formula is:

$$v = \frac{v}{\max(\lVert v \rVert_p, \epsilon)}$$
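The sketch below makes the formula concrete by computing it by hand and comparing the result with F.normalize. The helper name manual_normalize is hypothetical and not part of PyTorch. It assumes torch.linalg.vector_norm is available, which is the case in recent PyTorch versions.

```python
import torch
import torch.nn.functional as F

def manual_normalize(x: torch.Tensor, p: float = 2.0, dim: int = 1,
                     eps: float = 1e-12) -> torch.Tensor:
    # p-norm of every slice along `dim`; keepdim=True keeps the reduced axis
    # (as size 1) so the division below broadcasts over the original shape
    norm = torch.linalg.vector_norm(x, ord=p, dim=dim, keepdim=True)
    # max(||v||_p, eps): the clamp keeps the denominator away from zero
    return x / norm.clamp_min(eps)

x = torch.tensor([[3.0, 4.0], [0.0, 0.0]])
print(manual_normalize(x))  # rows become 0.6, 0.8 and 0.0, 0.0
print(F.normalize(x))       # same defaults: p=2.0, dim=1
```

In the second row the norm is 0, so eps takes over and the row stays 0 instead of becoming NaN.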

Parameters and behavior

F.normalize(data, p=2/1, dim=0/1/-1) divides the input, along the chosen dimension, by the norm computed over that dimension (the 2-norm by default).

input: the input data (a tensor)

p: the order of the norm, e.g. 2 for the L2 norm or 1 for the L1 norm (default 2.0)

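dim: the dimension over which the norm is computed (default 1). For a 2-D tensor, 0 means column-wise: each column is divided by the square root of the sum of squares of that column; 1 means row-wise: each row is divided by the square root of the sum of squares of its elements; -1 means the last dimension, which for a 2-D tensor is also row-wise.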

eps: a small value (default 1e-12) that keeps the denominator from being 0

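In short: the function divides the input by its norm along one dimension (the 2-norm by default). This is what is usually meant by L_p normalization.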
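The sketch below shows how p and eps change the result. The vector [3, -4] is made-up illustrative data and does not come from the original post.

```python
import torch
import torch.nn.functional as F

v = torch.tensor([[3.0, -4.0]])

l2 = F.normalize(v, p=2.0, dim=1)  # divide by sqrt(3^2 + 4^2) = 5  -> 0.6, -0.8
l1 = F.normalize(v, p=1.0, dim=1)  # divide by |3| + |-4| = 7       -> 3/7, -4/7
print(l2)
print(l1)

# eps guards an all-zero row: 0 / max(0, eps) = 0 instead of NaN
z = torch.zeros(1, 3)
print(F.normalize(z, dim=1))            # all zeros
print(z / z.norm(dim=1, keepdim=True))  # naive division: 0 / 0 gives NaN
```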

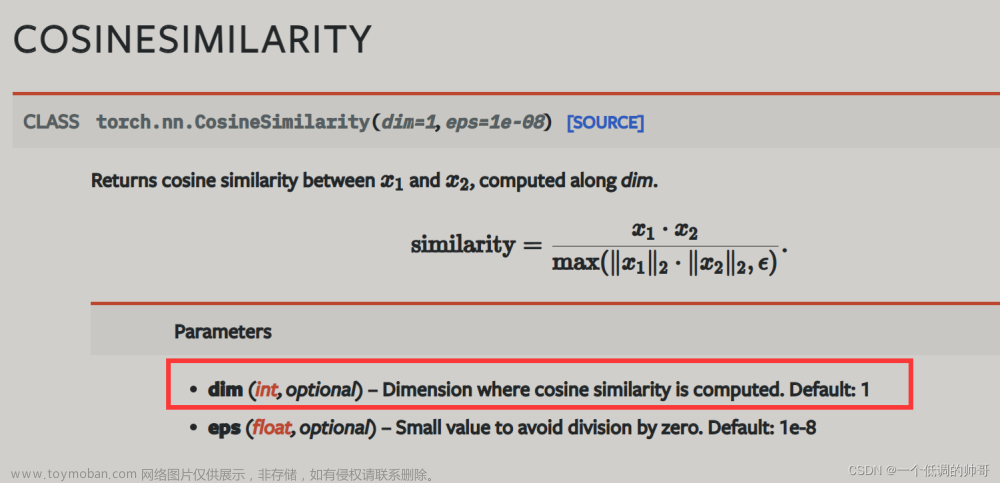

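Official documentation

The official documentation is shown first. If it is already clear, you can skip the worked examples below.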

Worked example: 2-D data

Parameter dim=0

import torch
import torch.nn.functional as F

a = torch.tensor([[0.0861, 0.1087, 0.0518, 0.3551],
                  [0.8067, 0.4128, 0.0592, 0.2884],
                  [0.1072, 0.4785, 0.8890, 0.3565]])
print(a.shape)
print("=============================================")
print(a)
print("=============================================")
c = F.normalize(a, dim=0)
print(c)

Output:

torch.Size([3, 4])
=============================================
tensor([[0.0861, 0.1087, 0.0518, 0.3551],
        [0.8067, 0.4128, 0.0592, 0.2884],
        [0.1072, 0.4785, 0.8890, 0.3565]])
=============================================
tensor([[0.1052, 0.1695, 0.0580, 0.6123],
        [0.9858, 0.6438, 0.0663, 0.4973],
        [0.1310, 0.7462, 0.9961, 0.6147]])

The code normalizes along dimension 0, that is, over each column of the 2-D tensor. The computation in detail:

$$0.1052=\frac{0.0861}{\sqrt{0.0861^2+0.8067^2+0.1072^2}}$$

$$0.1695=\frac{0.1087}{\sqrt{0.1087^2+0.4128^2+0.4785^2}}$$

$$0.0580=\frac{0.0518}{\sqrt{0.0518^2+0.0592^2+0.8890^2}}$$

$$0.6123=\frac{0.3551}{\sqrt{0.3551^2+0.2884^2+0.3565^2}}$$
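The four equations above can be checked in one go by computing the 2-norm of every column and dividing. Here is a minimal sketch that uses only standard tensor operations:

```python
import torch
import torch.nn.functional as F

a = torch.tensor([[0.0861, 0.1087, 0.0518, 0.3551],
                  [0.8067, 0.4128, 0.0592, 0.2884],
                  [0.1072, 0.4785, 0.8890, 0.3565]])

# One 2-norm per column: shape (1, 4), kept 2-D so it broadcasts over the rows
col_norms = a.pow(2).sum(dim=0, keepdim=True).sqrt()
manual = a / col_norms

print(col_norms)
print(manual)
print(torch.allclose(manual, F.normalize(a, dim=0)))  # True
```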

Parameter dim=1

import torch
import torch.nn.functional as F

a = torch.tensor([[0.0861, 0.1087, 0.0518, 0.3551],
                  [0.8067, 0.4128, 0.0592, 0.2884],
                  [0.1072, 0.4785, 0.8890, 0.3565]])
print(a.shape)
print("=============================================")
print(a)
print("=============================================")
c = F.normalize(a, dim=1)
print(c)

Output:

torch.Size([3, 4])
=============================================
tensor([[0.0861, 0.1087, 0.0518, 0.3551],
        [0.8067, 0.4128, 0.0592, 0.2884],
        [0.1072, 0.4785, 0.8890, 0.3565]])
=============================================
tensor([[0.2237, 0.2825, 0.1347, 0.9230],
        [0.8467, 0.4332, 0.0621, 0.3027],
        [0.0996, 0.4447, 0.8262, 0.3313]])

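The code normalizes along dimension 1, that is, over each row of the 2-D tensor. Every entry is divided by the square root of the sum of squares of its row.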
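This follows the same pattern as the dim=0 case, except that each entry is now divided by the 2-norm of its own row. Here is one entry from each row of a as an example:

$$0.9230=\frac{0.3551}{\sqrt{0.0861^2+0.1087^2+0.0518^2+0.3551^2}}$$

$$0.8467=\frac{0.8067}{\sqrt{0.8067^2+0.4128^2+0.0592^2+0.2884^2}}$$

$$0.0996=\frac{0.1072}{\sqrt{0.1072^2+0.4785^2+0.8890^2+0.3565^2}}$$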

Parameter dim=-1

Same result as dim=1. A negative dim counts from the end, so for a 2-D tensor dim=-1 refers to the last dimension, dimension 1.
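A quick check that, for the 2-D tensor above, dim=-1 and dim=1 produce the same result, and that every row ends up with unit 2-norm:

```python
import torch
import torch.nn.functional as F

a = torch.tensor([[0.0861, 0.1087, 0.0518, 0.3551],
                  [0.8067, 0.4128, 0.0592, 0.2884],
                  [0.1072, 0.4785, 0.8890, 0.3565]])

# For a 2-D tensor, dim=-1 resolves to dim = a.dim() - 1 = 1
by_last = F.normalize(a, dim=-1)
by_rows = F.normalize(a, dim=1)
print(torch.allclose(by_last, by_rows))  # True

# Every row now has 2-norm 1 (up to float rounding)
print(by_last.norm(dim=1))
```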

Worked example: 3-D data

Parameter dim=0

import torch
import torch.nn.functional as F

a = torch.tensor([[[0.0861, 0.1087, 0.0518, 0.3551],
                   [0.8067, 0.4128, 0.0592, 0.2884],
                   [0.1072, 0.4785, 0.8890, 0.3565]]])
print(a.shape)
print("=============================================")
print(a)
print("=============================================")
c = F.normalize(a, dim=0)
print(c)

Output:

torch.Size([1, 3, 4])
=============================================
tensor([[[0.0861, 0.1087, 0.0518, 0.3551],
         [0.8067, 0.4128, 0.0592, 0.2884],
         [0.1072, 0.4785, 0.8890, 0.3565]]])
=============================================
tensor([[[1., 1., 1., 1.],
         [1., 1., 1., 1.],
         [1., 1., 1., 1.]]])

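Here the operation acts on dimension 0. Dimension 0 has size 1, so each vector being normalized contains a single element x, and its 2-norm is simply |x|. Every entry therefore becomes x/|x|, which is 1 here because all entries are positive.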
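The sketch below illustrates this size-1 case. The second tensor b, which contains a negative entry, is an illustrative extra case and is not part of the original post. Because x/|x| is the sign of x (for |x| >= eps), a negative entry becomes -1 rather than 1.

```python
import torch
import torch.nn.functional as F

a = torch.tensor([[[0.0861, 0.1087, 0.0518, 0.3551],
                   [0.8067, 0.4128, 0.0592, 0.2884],
                   [0.1072, 0.4785, 0.8890, 0.3565]]])  # shape (1, 3, 4)

# Along a size-1 dim each "vector" is one number x, and ||x||_2 = |x|
out = F.normalize(a, dim=0)
print(torch.allclose(out, torch.ones_like(a)))  # True: every entry is positive

# Illustrative extra case: shape (1, 2), one positive and one negative entry
b = torch.tensor([[2.5, -0.3]])
print(F.normalize(b, dim=0))  # signs of the entries: +1 and -1
```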

Parameter dim=1

import torch
import torch.nn.functional as F

a = torch.tensor([[[0.0861, 0.1087, 0.0518, 0.3551],
                   [0.8067, 0.4128, 0.0592, 0.2884],
                   [0.1072, 0.4785, 0.8890, 0.3565]]])
print(a.shape)
print("=============================================")
print(a)
print("=============================================")
# Normalize over dimension 1 (size 3)
c = F.normalize(a, dim=1)
print(c)

Output:

torch.Size([1, 3, 4])
=============================================
tensor([[[0.0861, 0.1087, 0.0518, 0.3551],
         [0.8067, 0.4128, 0.0592, 0.2884],
         [0.1072, 0.4785, 0.8890, 0.3565]]])
=============================================
tensor([[[0.1052, 0.1695, 0.0580, 0.6123],
         [0.9858, 0.6438, 0.0663, 0.4973],
         [0.1310, 0.7462, 0.9961, 0.6147]]])

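The code normalizes along dimension 1. Dimension 1 has size 3, so each column of the 3×4 slice is divided by its 2-norm, which gives the same numbers as the 2-D dim=0 case. The computation in detail: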

$$0.1052=\frac{0.0861}{\sqrt{0.0861^2+0.8067^2+0.1072^2}}$$

$$0.1695=\frac{0.1087}{\sqrt{0.1087^2+0.4128^2+0.4785^2}}$$

$$0.0580=\frac{0.0518}{\sqrt{0.0518^2+0.0592^2+0.8890^2}}$$

$$0.6123=\frac{0.3551}{\sqrt{0.3551^2+0.2884^2+0.3565^2}}$$
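The 3-D dim=1 result matches the 2-D dim=0 result. One way to confirm this is to add a leading size-1 axis to the 2-D tensor with unsqueeze and compare:

```python
import torch
import torch.nn.functional as F

a2 = torch.tensor([[0.0861, 0.1087, 0.0518, 0.3551],
                   [0.8067, 0.4128, 0.0592, 0.2884],
                   [0.1072, 0.4785, 0.8890, 0.3565]])  # shape (3, 4)
a3 = a2.unsqueeze(0)                                 # shape (1, 3, 4)

# dim=1 of the 3-D tensor is the size-3 axis, i.e. dim=0 of the 2-D tensor
out3 = F.normalize(a3, dim=1)
out2 = F.normalize(a2, dim=0)
print(torch.allclose(out3.squeeze(0), out2))  # True
```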

Parameter dim=2

import torch
import torch.nn.functional as F

a = torch.tensor([[[0.0861, 0.1087, 0.0518, 0.3551],
                   [0.8067, 0.4128, 0.0592, 0.2884],
                   [0.1072, 0.4785, 0.8890, 0.3565]]])
print(a.shape)
print("=============================================")
print(a)
print("=============================================")
# Normalize over dimension 2 (size 4)
c = F.normalize(a, dim=2)
print(c)

Output:

torch.Size([1, 3, 4])
=============================================
tensor([[[0.0861, 0.1087, 0.0518, 0.3551],
         [0.8067, 0.4128, 0.0592, 0.2884],
         [0.1072, 0.4785, 0.8890, 0.3565]]])
=============================================
tensor([[[0.2237, 0.2825, 0.1347, 0.9230],
         [0.8467, 0.4332, 0.0621, 0.3027],
         [0.0996, 0.4447, 0.8262, 0.3313]]])

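Here the operation acts on dimension 2, which has size 4. Each row of 4 elements is divided by its 2-norm, which gives the same numbers as the 2-D dim=1 case.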
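The sketch below computes the same result by hand. Taking the norm over dimension 2 with keepdim=True gives one norm per row, shaped (1, 3, 1), which broadcasts back over the 4 columns.

```python
import torch
import torch.nn.functional as F

a3 = torch.tensor([[[0.0861, 0.1087, 0.0518, 0.3551],
                    [0.8067, 0.4128, 0.0592, 0.2884],
                    [0.1072, 0.4785, 0.8890, 0.3565]]])  # shape (1, 3, 4)

# 2-norm of each length-4 row; keepdim=True gives shape (1, 3, 1)
row_norms = a3.norm(dim=2, keepdim=True)
manual = a3 / row_norms

out = F.normalize(a3, dim=2)
print(row_norms.shape)              # torch.Size([1, 3, 1])
print(torch.allclose(manual, out))  # True
# Dropping the leading axis gives the 2-D dim=1 case
print(torch.allclose(out[0], F.normalize(a3[0], dim=1)))  # True
```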

Parameter dim=-1

Same result as dim=2. A negative dim counts from the end, so for a 3-D tensor dim=-1 refers to dimension 2.
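The same counting-from-the-end rule applies to every negative index, not only -1. The sketch below checks every negative dim of a random 3-D tensor (illustrative data, not from the post) against its positive counterpart d + x.dim():

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)
x = torch.rand(2, 3, 4)  # random illustrative 3-D tensor

# A negative dim d refers to dimension d + x.dim(); compare each pair
for d in range(-x.dim(), 0):
    same = torch.allclose(F.normalize(x, dim=d), F.normalize(x, dim=d + x.dim()))
    print(d, d + x.dim(), same)
```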

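References and acknowledgements

Parts of this post draw on the links below; many thanks to their authors. Thanks♪(・ω・)ノ

Reference 1: Official documentation

https://pytorch.org/docs/stable/generated/torch.nn.functional.normalize.html

Reference 2: Understanding the F.normalize computation in PyTorch

https://www.jb51.net/article/274086.htm

Reference 3: 【Pytorch】Understanding the F.normalize computation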

https://blog.csdn.net/lj2048/article/details/118115681
