Contents

I. Environment

1.1 getExecutionEnvironment

1.2 createLocalEnvironment

1.3 createRemoteEnvironment

II. Reading data from a collection

III. Reading data from a file

IV. Reading data from Kafka

1. Add the dependencies

2. Start Kafka

3. Java code

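I. Environment

1.1 getExecutionEnvironment

Creates an execution environment that represents the context in which the current program runs. If the program is invoked standalone, this method returns a local execution environment; if the program is submitted to a cluster through the command-line client, it returns that cluster's execution environment. In other words, getExecutionEnvironment decides which environment to return based on how the program is run, which makes it the most commonly used way to create an execution environment.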

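// Batch processing environment

ExecutionEnvironment env = ExecutionEnvironment.getExecutionEnvironment();

// Stream processing environment

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

Setting the parallelism: if no parallelism is set, the parallelism.default value in flink-conf.yaml applies, which defaults to 1.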

// Set the parallelism to 8

env.setParallelism(8);

1.2 createLocalEnvironment

Returns a local execution environment; the default parallelism is passed as an argument to the call.

LocalStreamEnvironment env = StreamExecutionEnvironment.createLocalEnvironment(1);

1.3 createRemoteEnvironment

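Returns a cluster execution environment and submits the Jar to the remote server. The call must specify the JobManager's IP address and port, as well as the Jar file to run on the cluster.

// "IP", port, and jarPath are placeholders: the JobManager host (String), its port (int), and the path to the job Jar (String)

StreamExecutionEnvironment env = StreamExecutionEnvironment.createRemoteEnvironment("IP", port, jarPath);

II. Reading data from a collection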

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import java.util.Arrays;

/**

* @author : Ashiamd email: ashiamd@foxmail.com

* @date : 2021/1/31 5:13 PM

* Tests reading data from a collection in Flink

*/

public class SourceTest1_Collection {

public static void main(String[] args) throws Exception {

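// Create the execution environment

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

// Set the parallelism to 1 so that the whole job runs in a single thread

env.setParallelism(1);

// Source: read data from a Collection (SensorReading is a user-defined class not shown in this article)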

DataStream<SensorReading> dataStream = env.fromCollection(

Arrays.asList(

new SensorReading("sensor_1", 1547718199L, 35.8),

new SensorReading("sensor_6", 1547718201L, 15.4),

new SensorReading("sensor_7", 1547718202L, 6.7),

new SensorReading("sensor_10", 1547718205L, 38.1)

)

);

DataStream<Integer> intStream = env.fromElements(1,2,3,4,5,6,7,8,9);

// Print the output

dataStream.print("SENSOR");

intStream.print("INT");

// Execute the job

env.execute("JobName");

}

}
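The example above relies on a SensorReading class that this article does not show. A minimal sketch of what it might look like follows, assuming three fields inferred from the constructor calls (a sensor id, a timestamp, and a temperature). The field names id, timestamp, and temperature are assumptions for illustration, not taken from the original.

```java
// Hypothetical SensorReading class; the field names are assumed from the
// constructor calls in the example above.
// In a real project, declare it `public` in its own file SensorReading.java:
// Flink's POJO rules expect a public class with a public no-argument constructor.
class SensorReading {
    private String id;          // sensor identifier, e.g. "sensor_1"
    private long timestamp;     // reading time (unit assumed to be epoch seconds)
    private double temperature; // measured temperature

    // No-argument constructor, needed for POJO-style serialization
    public SensorReading() {
    }

    public SensorReading(String id, long timestamp, double temperature) {
        this.id = id;
        this.timestamp = timestamp;
        this.temperature = temperature;
    }

    public String getId() { return id; }
    public void setId(String id) { this.id = id; }

    public long getTimestamp() { return timestamp; }
    public void setTimestamp(long timestamp) { this.timestamp = timestamp; }

    public double getTemperature() { return temperature; }
    public void setTemperature(double temperature) { this.temperature = temperature; }

    // print() on a DataStream uses toString(), so a readable form helps
    @Override
    public String toString() {
        return "SensorReading{id='" + id + "', timestamp=" + timestamp
                + ", temperature=" + temperature + "}";
    }
}
```

With a toString like this, each element printed by dataStream.print("SENSOR") would show its three fields rather than an object hash.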

三、从文件中读取数据

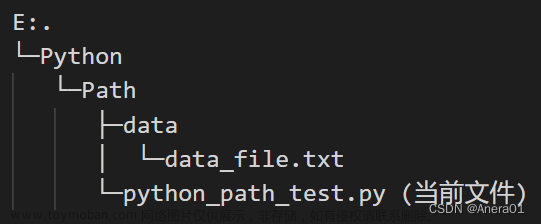

文件由自己创建一个txt文件

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

/**

* @author : Ashiamd email: ashiamd@foxmail.com

* @date : 2021/1/31 5:26 PM

* Reads data from a file in Flink

*/

public class SourceTest2_File {

public static void main(String[] args) throws Exception {

// Create the execution environment

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

// Run the whole job in a single thread

env.setParallelism(1);

// Read data from the file and print it

DataStream<String> dataStream = env.readTextFile("/tmp/Flink_Tutorial/src/main/resources/sensor.txt");

dataStream.print();

env.execute();

}

}
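readTextFile delivers each line of the file as a plain String. The content of sensor.txt is not reproduced in this article, so the sketch below assumes each line is comma-separated in the form id,timestamp,temperature, matching the SensorReading constructor used earlier. SensorLineParser, Fields, and parse are hypothetical names introduced only for illustration.

```java
// Sketch only: the line format "id,timestamp,temperature" is an assumption,
// and SensorLineParser is a hypothetical helper, not part of Flink.
final class SensorLineParser {

    /** Simple holder for the three parsed fields. */
    static final class Fields {
        final String id;
        final long timestamp;
        final double temperature;

        Fields(String id, long timestamp, double temperature) {
            this.id = id;
            this.timestamp = timestamp;
            this.temperature = temperature;
        }
    }

    private SensorLineParser() {
    }

    /** Splits one line on commas and converts each part, trimming whitespace. */
    static Fields parse(String line) {
        String[] parts = line.split(",");
        if (parts.length != 3) {
            throw new IllegalArgumentException(
                    "Expected 3 comma-separated fields but got: " + line);
        }
        return new Fields(
                parts[0].trim(),
                Long.parseLong(parts[1].trim()),
                Double.parseDouble(parts[2].trim()));
    }
}
```

Inside a Flink job, logic like this would typically sit in a map over the DataStream<String> returned by readTextFile.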

IV. Reading data from Kafka

1. Add the dependencies

<dependencies>

<dependency>

<groupId>junit</groupId>

<artifactId>junit</artifactId>

<version>4.11</version>

<scope>test</scope>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-java</artifactId>

<version>1.10.1</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-streaming-java_2.12</artifactId>

<version>1.10.1</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-clients_2.12</artifactId>

<version>1.10.1</version>

</dependency>

<!-- kafka -->

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-connector-kafka_2.12</artifactId>

<version>1.10.1</version>

</dependency>

</dependencies>

2. Start Kafka

Start ZooKeeper:

./bin/zookeeper-server-start.sh config/zookeeper.properties

Start the Kafka broker:

./bin/kafka-server-start.sh -daemon ./config/server.properties

Start a Kafka console producer:

./bin/kafka-console-producer.sh --broker-list localhost:9092 --topic sensor

3. Java code

import org.apache.flink.api.common.serialization.SimpleStringSchema;

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumer;

import java.util.Properties;

/**

* @author : Ashiamd email: ashiamd@foxmail.com

* @date : 2021/1/31 5:44 PM

*/

public class SourceTest3_Kafka {

public static void main(String[] args) throws Exception {

// Create the execution environment

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

// Set the parallelism to 1

env.setParallelism(1);

Properties properties = new Properties();

// Address of the Kafka broker to connect to

properties.setProperty("bootstrap.servers", "localhost:9092");

// The remaining parameters are secondary

properties.setProperty("group.id", "consumer-group");

properties.setProperty("key.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");

properties.setProperty("value.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");

properties.setProperty("auto.offset.reset", "latest");

// Add Kafka as an external source

DataStream<String> dataStream = env.addSource(new FlinkKafkaConsumer<String>("sensor", new SimpleStringSchema(), properties));

// Print the output

dataStream.print();

env.execute();

}

}
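The "secondary" properties above can likely be trimmed. To my understanding, FlinkKafkaConsumer deserializes records through the DeserializationSchema passed to its constructor (SimpleStringSchema here) and sets key.deserializer and value.deserializer itself, so those two entries should have no effect. A minimal sketch of a reduced configuration follows; KafkaSourceConfig and consumerProperties are hypothetical helper names, not part of Flink or Kafka.

```java
import java.util.Properties;

// Hypothetical helper (not a Flink or Kafka API) that builds the consumer
// properties used in the example above, without the deserializer entries.
final class KafkaSourceConfig {

    private KafkaSourceConfig() {
    }

    static Properties consumerProperties(String bootstrapServers, String groupId) {
        Properties properties = new Properties();
        // Broker address(es) the consumer connects to
        properties.setProperty("bootstrap.servers", bootstrapServers);
        // Consumer group used for offset tracking
        properties.setProperty("group.id", groupId);
        // Start from the newest records when no committed offset exists
        properties.setProperty("auto.offset.reset", "latest");
        return properties;
    }
}
```

The result of KafkaSourceConfig.consumerProperties("localhost:9092", "consumer-group") could then be passed as the third argument of the FlinkKafkaConsumer constructor in place of the hand-built properties object.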
