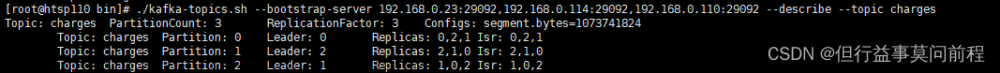

[root@centos6 bin]# ./kafka-topics.sh --create --bootstrap-server 127.0.0.1:9092 --replication-factor 1 --partitions 6 --topic test1

Created topic test1.

[root@centos6 kafka]# cat produce_kafka.py

from kafka import KafkaProducer
from kafka.errors import KafkaError
import os

producer = KafkaProducer(bootstrap_servers=['127.0.0.1:9092'])


def send_kafka(message):
    # send() is asynchronous by default and returns a future
    future = producer.send('test1', message)
    # Block on the future for a 'synchronous' send
    try:
        record_metadata = future.get(timeout=10)
    except KafkaError:
        # Decide what to do if the produce request failed...
        # log.exception()
        return
    # A successful result returns the assigned partition and offset
    print(record_metadata.topic)
    print(record_metadata.partition)
    print(record_metadata.offset)


# Read the input file and send each line as one message (no key is set)
with open("./poc.txt") as f:
    content = f.readlines()

print(type(content))
for x in content:
    print(x)
    send_kafka(x.encode())
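The producer above sends every line without a key, so the records can be spread over all six partitions of `test1`, and Kafka only guarantees ordering within a single partition. A minimal sketch of one common workaround, assuming all lines belong to one ordered stream: send them with the same key so that they land in the same partition. The helper name `send_lines_in_order` and the key `b'poc'` are hypothetical; `producer` is any object with a kafka-python-style `send(topic, value=..., key=...)` method.

```python
def send_lines_in_order(producer, topic, lines, key=b'poc', timeout=10):
    """Send lines with one shared key and return (partition, offset) pairs.

    `producer` is expected to behave like kafka.KafkaProducer: send() returns
    a future whose get() yields metadata with .partition and .offset.
    The function name and the default key are illustrative assumptions.
    """
    results = []
    for line in lines:
        # Same key -> same partition, so records are appended in send order.
        future = producer.send(topic, value=line.encode(), key=key)
        # Blocking on each send keeps only one record in flight at a time.
        metadata = future.get(timeout=timeout)
        results.append((metadata.partition, metadata.offset))
    return results
```

With a real broker this could be called as `send_lines_in_order(KafkaProducer(bootstrap_servers=['127.0.0.1:9092']), 'test1', content)`. The trade-off is that a single key uses only one of the six partitions, so ordering is bought at the cost of parallelism.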

[root@centos6 kafka]# cat consumer_kafka_2.py

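#!/usr/bin/env python
# coding=utf-8
"""
A Kafka consumer is not thread-safe, so do not share one instance across threads.
Unlike other MQs, Kafka does not discard data once it has been consumed. By default
it retains data for 7 days and then deletes it automatically, so a topic can be
consumed again from the beginning.
Consumption can also start from a specified offset of a specified partition of a topic.
"""
import json

from kafka import KafkaConsumer
from kafka.structs import TopicPartition

# auto_offset_reset='earliest' starts from the oldest retained record
# when no committed offset exists
consumer = KafkaConsumer(bootstrap_servers='localhost:9092',
                         auto_offset_reset='earliest')
consumer.subscribe(['test1'])

for message in consumer:
    print(message)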
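The consumer script subscribes to the whole topic, so it reads all partitions. For reading one partition from a chosen offset, a minimal sketch using kafka-python's `assign()` and `seek()` could look like this. The helper name `read_partition` and its parameters are hypothetical, and `consumer` is assumed to be a `KafkaConsumer` on which `subscribe()` has not been called, since manual assignment and subscription cannot be mixed.

```python
def read_partition(consumer, topic_partition, start_offset, max_records):
    """Read up to max_records from one partition, starting at start_offset.

    `consumer` is expected to behave like kafka.KafkaConsumer and
    `topic_partition` like kafka.structs.TopicPartition(topic, partition).
    The function name and parameters are illustrative assumptions.
    """
    records = []
    if max_records <= 0:
        return records
    # Manual assignment: this consumer reads only the given partition.
    consumer.assign([topic_partition])
    # Move the fetch position; the next record returned has this offset.
    consumer.seek(topic_partition, start_offset)
    for message in consumer:
        records.append(message)
        if len(records) >= max_records:
            break
    return records
```

A possible call is `read_partition(KafkaConsumer(bootstrap_servers='localhost:9092', consumer_timeout_ms=5000), TopicPartition('test1', 3), 0, 5)`. Setting `consumer_timeout_ms` makes the iteration stop when no more records arrive instead of blocking indefinitely.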

[root@centos6 kafka]# cat out.txt

ConsumerRecord(topic=u'test1', partition=3, offset=0, timestamp=1684226903982, timestamp_type=0, key=None, value="'3---33333'\n", checksum=925189724, serialized_key_size=-1, serialized_value_size=12)

ConsumerRecord(topic=u'test1', partition=3, offset=1, timestamp=1684226903992, timestamp_type=0, key=None, value="'4---44444'\n", checksum=1373485295, serialized_key_size=-1, serialized_value_size=12)

ConsumerRecord(topic=u'test1', partition=3, offset=2, timestamp=1684226904014, timestamp_type=0, key=None, value="'6---66666'\n", checksum=-564428406, serialized_key_size=-1, serialized_value_size=12)

ConsumerRecord(topic=u'test1', partition=3, offset=3, timestamp=1684226904023, timestamp_type=0, key=None, value="'7---77777'\n", checksum=120339133, serialized_key_size=-1, serialized_value_size=12)

ConsumerRecord(topic=u'test1', partition=3, offset=4, timestamp=1684226904096, timestamp_type=0, key=None, value="'16---1616161616'\n", checksum=1304489750, serialized_key_size=-1, serialized_value_size=18)
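The records shown above all come from partition 3. Their values (3, 4, 6, 7, 16) appear at offsets 0 to 4 with increasing timestamps, so send order is kept inside the partition, while the input lines missing from this sequence went to other partitions. The following is a pure-Python simulation of that behavior, not Kafka code: the function `simulate_unkeyed_sends` and its random partition choice are assumptions standing in for a producer that sends records without a key.

```python
import random


def simulate_unkeyed_sends(num_messages, num_partitions, seed=None):
    """Append messages 1..num_messages to randomly chosen partition logs."""
    rng = random.Random(seed)
    partitions = [[] for _ in range(num_partitions)]
    for n in range(1, num_messages + 1):
        # Stand-in for a partitioner choosing a partition when key is None.
        partitions[rng.randrange(num_partitions)].append(n)
    return partitions


logs = simulate_unkeyed_sends(num_messages=20, num_partitions=6, seed=1)
for index, log in enumerate(logs):
    # Each log is ascending: order holds inside a single partition.
    print(index, log)

# Reading one partition after another generally does not give back
# the original 1..20 sequence, even though no message is lost.
flattened = [n for log in logs for n in log]
print(flattened)
```

Each simulated partition log is ascending, which matches the per-partition guarantee, while the concatenated read order depends on how the messages were distributed. If a total order is required, the usual options are a single-partition topic or a shared key for related messages.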

This concludes the walkthrough of the out-of-order problem with a multi-partition Kafka topic.