The source code link is at the end of the article.

1. Project setup and parameter configuration

First, enter the project directory and set up the environment with `pip install -r requirements.txt`.

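To run the demo, you need two things: the images to be matched (the provided code puts them in the `images` folder) and the trained weights. The code comes with downloaded weights trained on indoor scenes and on outdoor scenes.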

Run arguments:

```
--weight outdoor_ds.ckpt --save_video --input ./images/ --output_dir ./output/
```

2. Backbone feature extraction

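Each input is a pair of images. A convolutional network first extracts features from both images, producing a coarse feature map at 1/8 of the input resolution and a fine feature map at 1/2. The source code uses a ResNet with an FPN for this part.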
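As a quick check on these resolutions, the sketch below computes the coarse and fine feature-map sizes for an assumed 640×480 input (the size that yields the 4800 coarse cells used in the fine-matching section). `feature_map_sizes` is a hypothetical helper, and requiring sides divisible by 8 is an assumption that keeps the 1/2 → 1/4 → 1/8 downsampling and the 2× FPN upsampling aligned.

```python
def feature_map_sizes(height, width, coarse_stride=8, fine_stride=2):
    """Return ((Hc, Wc), (Hf, Wf)) for a ResNetFPN_8_2-style backbone.

    Assumes height and width are divisible by the coarse stride.
    """
    if height % coarse_stride or width % coarse_stride:
        raise ValueError("image size should be divisible by the coarse stride")
    coarse = (height // coarse_stride, width // coarse_stride)
    fine = (height // fine_stride, width // fine_stride)
    return coarse, fine


coarse, fine = feature_map_sizes(480, 640)
num_coarse_tokens = coarse[0] * coarse[1]   # one token per 8x8 pixel cell
print(coarse, fine, num_coarse_tokens)      # (60, 80) (240, 320) 4800
```

The fine map is 4× larger than the coarse map in each direction, which is the window stride used later when the fine map is cut into windows.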

```python
import torch.nn as nn
import torch.nn.functional as F

# conv1x1, conv3x3 and BasicBlock are helpers defined in the same backbone module
# of the repository (not shown in this article).


class ResNetFPN_8_2(nn.Module):
    """
    ResNet+FPN, output resolution are 1/8 and 1/2.
    Each block has 2 layers.
    """

    def __init__(self, config):
        super().__init__()
        # Config
        block = BasicBlock
        initial_dim = config['initial_dim']
        block_dims = config['block_dims']

        # Class Variable
        self.in_planes = initial_dim

        # Networks (input is a single-channel grayscale image)
        self.conv1 = nn.Conv2d(1, initial_dim, kernel_size=7, stride=2, padding=3, bias=False)
        self.bn1 = nn.BatchNorm2d(initial_dim)
        self.relu = nn.ReLU(inplace=True)

        self.layer1 = self._make_layer(block, block_dims[0], stride=1)  # 1/2
        self.layer2 = self._make_layer(block, block_dims[1], stride=2)  # 1/4
        self.layer3 = self._make_layer(block, block_dims[2], stride=2)  # 1/8

        # 3. FPN upsample
        self.layer3_outconv = conv1x1(block_dims[2], block_dims[2])
        self.layer2_outconv = conv1x1(block_dims[1], block_dims[2])
        self.layer2_outconv2 = nn.Sequential(
            conv3x3(block_dims[2], block_dims[2]),
            nn.BatchNorm2d(block_dims[2]),
            nn.LeakyReLU(),
            conv3x3(block_dims[2], block_dims[1]),
        )
        self.layer1_outconv = conv1x1(block_dims[0], block_dims[1])
        self.layer1_outconv2 = nn.Sequential(
            conv3x3(block_dims[1], block_dims[1]),
            nn.BatchNorm2d(block_dims[1]),
            nn.LeakyReLU(),
            conv3x3(block_dims[1], block_dims[0]),
        )

        for m in self.modules():
            if isinstance(m, nn.Conv2d):
                nn.init.kaiming_normal_(m.weight, mode='fan_out', nonlinearity='relu')
            elif isinstance(m, (nn.BatchNorm2d, nn.GroupNorm)):
                nn.init.constant_(m.weight, 1)
                nn.init.constant_(m.bias, 0)

    def _make_layer(self, block, dim, stride=1):
        layer1 = block(self.in_planes, dim, stride=stride)
        layer2 = block(dim, dim, stride=1)
        layers = (layer1, layer2)

        self.in_planes = dim
        return nn.Sequential(*layers)

    def forward(self, x):
        # ResNet Backbone
        x0 = self.relu(self.bn1(self.conv1(x)))
        x1 = self.layer1(x0)  # 1/2
        x2 = self.layer2(x1)  # 1/4
        x3 = self.layer3(x2)  # 1/8

        # FPN: upsample the coarser output 2x and fuse it with the next finer level
        x3_out = self.layer3_outconv(x3)

        x3_out_2x = F.interpolate(x3_out, scale_factor=2., mode='bilinear', align_corners=True)
        x2_out = self.layer2_outconv(x2)
        x2_out = self.layer2_outconv2(x2_out+x3_out_2x)

        x2_out_2x = F.interpolate(x2_out, scale_factor=2., mode='bilinear', align_corners=True)
        x1_out = self.layer1_outconv(x1)
        x1_out = self.layer1_outconv2(x1_out+x2_out_2x)

        return [x3_out, x1_out]  # [coarse 1/8 features, fine 1/2 features]
```

3. Attention mechanism

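After local feature extraction, sine/cosine positional encodings are added to the 1/8-resolution feature maps, which are then passed through the LoFTR module to produce local features that depend on both position and context. Intuitively, the LoFTR module transforms the features into representations that are easy to match.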
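The positional encoding step can be sketched in NumPy as follows. This is a minimal sketch in the spirit of a 2-D sine encoding: channels are interleaved in groups of four (sin x, cos x, sin y, cos y), each group with its own frequency. The frequency schedule, the 1-based positions and the function name are assumptions for illustration, not a copy of the repository's module.

```python
import numpy as np


def sine_position_encoding_2d(d_model, height, width, temperature=10000.0):
    """Return a [d_model, height, width] 2-D sine/cosine positional encoding."""
    assert d_model % 4 == 0, "d_model must be divisible by 4"
    y_pos = np.arange(1, height + 1, dtype=np.float64)[:, None].repeat(width, axis=1)  # row index
    x_pos = np.arange(1, width + 1, dtype=np.float64)[None, :].repeat(height, axis=0)  # column index
    # one frequency per group of four channels, decaying geometrically
    div_term = np.exp(np.arange(0, d_model // 2, 2) * (-np.log(temperature) / (d_model // 2)))
    freqs = div_term[:, None, None]
    pe = np.zeros((d_model, height, width))
    pe[0::4] = np.sin(x_pos[None] * freqs)
    pe[1::4] = np.cos(x_pos[None] * freqs)
    pe[2::4] = np.sin(y_pos[None] * freqs)
    pe[3::4] = np.cos(y_pos[None] * freqs)
    return pe


# usage: add the encoding to a coarse feature map of shape [C, H/8, W/8]
coarse_feat = np.zeros((256, 60, 80))
coarse_feat_with_pos = coarse_feat + sine_position_encoding_2d(256, 60, 80)
```

Because the encoding is simply added, every coarse token carries its (x, y) location into the attention layers, which is what makes the transformed features position-dependent.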

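The LoFTR module consists of self-attention and cross-attention layers: self-attention operates within each image, while cross-attention operates between the two images.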

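The attention used here is linear attention, whose cost grows linearly with the sequence length $N$ (the number of feature-map tokens). The authors replace the original $\mathrm{softmax}(QK^T)$ with a kernel function $\mathrm{sim}(Q, K) = \phi(Q)\,\phi(K)^T$, where $\phi(\cdot) = \mathrm{elu}(\cdot) + 1$. The product $\phi(K)^T V$ is computed first, giving a $D \times D$ matrix, and only then multiplied with $\phi(Q)$. This costs $O(D \cdot N \cdot D) = O(N D^2)$ instead of the $O(N^2 D)$ of standard attention; since $D \ll N$, the paper describes the complexity as reduced to $O(N)$.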
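The reordering can be checked numerically. The NumPy sketch below is single-head and unbatched (a simplification; the repository works on batched multi-head tensors), uses $\phi(x) = \mathrm{elu}(x) + 1$ as described above, and compares the linear form with an explicit $N \times S$ computation of the same kernelized attention. The function names are illustrative.

```python
import numpy as np


def elu_plus_one(x):
    """phi(x) = elu(x) + 1: x + 1 for x > 0, exp(x) otherwise, so always positive."""
    return np.where(x > 0, x + 1.0, np.exp(x))


def linear_attention(q, k, v, eps=1e-6):
    """q: [N, D], k: [S, D], v: [S, Dv] -> [N, Dv] without forming an N x S matrix."""
    phi_q, phi_k = elu_plus_one(q), elu_plus_one(k)
    kv = phi_k.T @ v                   # [D, Dv]: computed once, independent of N
    z = phi_q @ phi_k.sum(axis=0)      # [N]: per-query normalizer
    return (phi_q @ kv) / (z[:, None] + eps)


def quadratic_attention(q, k, v, eps=1e-6):
    """Same kernelized attention, but building the full N x S similarity matrix."""
    sim = elu_plus_one(q) @ elu_plus_one(k).T   # [N, S]
    return (sim @ v) / (sim.sum(axis=1, keepdims=True) + eps)


rng = np.random.default_rng(0)
q, k, v = rng.standard_normal((6, 4)), rng.standard_normal((9, 4)), rng.standard_normal((9, 4))
out = linear_attention(q, k, v)
print(out.shape, np.allclose(out, quadratic_attention(q, k, v)))  # (6, 4) True
```

Both functions return the same values because $\sum_s \phi(q)\cdot\phi(k_s) = \phi(q)\cdot\sum_s \phi(k_s)$; only the order of multiplication, and therefore the cost, differs.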

The code is as follows:

```python
import copy

import torch.nn as nn

# LoFTREncoderLayer is defined in the same module of the repository (not shown here).


class LocalFeatureTransformer(nn.Module):
    """A Local Feature Transformer (LoFTR) module."""

    def __init__(self, config):
        super(LocalFeatureTransformer, self).__init__()

        self.config = config
        self.d_model = config['d_model']
        self.nhead = config['nhead']
        self.layer_names = config['layer_names']
        encoder_layer = LoFTREncoderLayer(config['d_model'], config['nhead'], config['attention'])
        self.layers = nn.ModuleList([copy.deepcopy(encoder_layer) for _ in range(len(self.layer_names))])
        self._reset_parameters()

    def _reset_parameters(self):
        for p in self.parameters():
            if p.dim() > 1:
                nn.init.xavier_uniform_(p)

    def forward(self, feat0, feat1, mask0=None, mask1=None):
        """
        Args:
            feat0 (torch.Tensor): [N, L, C]
            feat1 (torch.Tensor): [N, S, C]
            mask0 (torch.Tensor): [N, L] (optional)
            mask1 (torch.Tensor): [N, S] (optional)
        """
        assert self.d_model == feat0.size(2), "the feature number of src and transformer must be equal"

        for layer, name in zip(self.layers, self.layer_names):
            if name == 'self':
                # self-attention: queries and keys/values come from the same image
                feat0 = layer(feat0, feat0, mask0, mask0)
                feat1 = layer(feat1, feat1, mask1, mask1)
            elif name == 'cross':
                # cross-attention: image 0 attends to image 1, then image 1 attends to the updated image 0
                feat0 = layer(feat0, feat1, mask0, mask1)
                feat1 = layer(feat1, feat0, mask1, mask0)
            else:
                raise KeyError(name)

        return feat0, feat1
```

4. Coarse matching

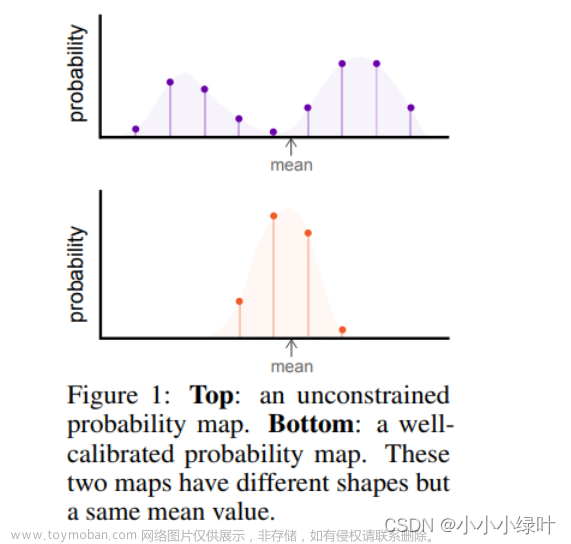

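Dual softmax turns the score matrix into matching probabilities between keypoints: softmax is applied along both dimensions of the score matrix and the two results are multiplied element-wise, which normalizes the scores into probabilities.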
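A minimal NumPy version of the dual-softmax step, mirroring `conf_matrix = F.softmax(sim_matrix, 1) * F.softmax(sim_matrix, 2)` in the source for a single image pair without masks. The temperature of 0.1 is an assumed value; in the source it comes from `config['dsmax_temperature']`.

```python
import numpy as np


def softmax(x, axis):
    x = x - x.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def dual_softmax(feat0, feat1, temperature=0.1):
    """feat0: [L, C], feat1: [S, C] -> confidence matrix [L, S]."""
    c = feat0.shape[1]
    f0, f1 = feat0 / np.sqrt(c), feat1 / np.sqrt(c)  # same sqrt(C) scaling as the source
    sim = f0 @ f1.T / temperature
    # column-wise softmax (over image-0 points) times row-wise softmax (over image-1 points)
    return softmax(sim, axis=0) * softmax(sim, axis=1)


rng = np.random.default_rng(1)
conf = dual_softmax(rng.standard_normal((5, 8)), rng.standard_normal((7, 8)))
print(conf.shape)  # (5, 7)
```

Since each factor is at most 1, an entry is large only when the pair scores highly in both directions.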

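During matching, the results are first filtered with a confidence threshold. Then the mutual-nearest-neighbour criterion is applied: if point a in image A has the highest weight with point b in image B, the pair is accepted only if point b in image B also has the highest weight with point a in image A. This yields the indices of the coarse matches between the two images.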
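The threshold and mutual-nearest-neighbour filters, plus the conversion of a flattened coarse index into pixel coordinates (as done for `mkpts0_c`/`mkpts1_c` in the source), can be sketched for one image pair as follows. Border removal and training-time sampling are omitted; the default `thr=0.2` and the helper names are assumptions.

```python
import numpy as np


def mutual_nearest_matches(conf, thr=0.2):
    """conf: [L, S] -> (i_ids, j_ids, mconf) of the coarse matches that survive both filters."""
    mask = conf > thr                                  # 1. confidence threshold
    mask &= conf == conf.max(axis=1, keepdims=True)    # 2a. j is i's best match (row max)
    mask &= conf == conf.max(axis=0, keepdims=True)    # 2b. i is j's best match (column max)
    i_ids, j_ids = np.nonzero(mask)
    return i_ids, j_ids, conf[i_ids, j_ids]


def index_to_xy(ids, coarse_width, scale=8):
    """Flattened coarse index -> (x, y) pixels: (id % width, id // width) * scale."""
    return np.stack([ids % coarse_width, ids // coarse_width], axis=1) * scale


conf = np.array([[0.9, 0.05, 0.0],
                 [0.3, 0.1, 0.0],   # row 1's best is column 0, but column 0 prefers row 0
                 [0.0, 0.0, 0.5]])
i_ids, j_ids, mconf = mutual_nearest_matches(conf)
print(i_ids, j_ids, mconf)                    # [0 2] [0 2] [0.9 0.5]
print(index_to_xy(np.array([165]), 80))       # [[40 16]]
```

With an 80-cell-wide coarse map, index 165 is cell (5, 2), i.e. pixel (40, 16) in the original image.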

The code is as follows:

```python
import torch
import torch.nn as nn
import torch.nn.functional as F
from einops.einops import rearrange

INF = 1e9  # large constant used to mask out invalid positions

# mask_border, mask_border_with_padding and compute_max_candidates are helpers
# defined in the same module of the repository (not shown here).


class CoarseMatching(nn.Module):
    def __init__(self, config):
        super().__init__()
        self.config = config
        # general config
        self.thr = config['thr']
        self.border_rm = config['border_rm']
        # -- # for training fine-level LoFTR
        self.train_coarse_percent = config['train_coarse_percent']
        self.train_pad_num_gt_min = config['train_pad_num_gt_min']

        # we provide 2 options for differentiable matching
        self.match_type = config['match_type']
        if self.match_type == 'dual_softmax':
            self.temperature = config['dsmax_temperature']
        elif self.match_type == 'sinkhorn':
            try:
                from .superglue import log_optimal_transport
            except ImportError:
                raise ImportError("download superglue.py first!")
            self.log_optimal_transport = log_optimal_transport
            self.bin_score = nn.Parameter(
                torch.tensor(config['skh_init_bin_score'], requires_grad=True))
            self.skh_iters = config['skh_iters']
            self.skh_prefilter = config['skh_prefilter']
        else:
            raise NotImplementedError()

    def forward(self, feat_c0, feat_c1, data, mask_c0=None, mask_c1=None):
        """
        Args:
            feat0 (torch.Tensor): [N, L, C]
            feat1 (torch.Tensor): [N, S, C]
            data (dict)
            mask_c0 (torch.Tensor): [N, L] (optional)
            mask_c1 (torch.Tensor): [N, S] (optional)
        Update:
            data (dict): {
                'b_ids' (torch.Tensor): [M'],
                'i_ids' (torch.Tensor): [M'],
                'j_ids' (torch.Tensor): [M'],
                'gt_mask' (torch.Tensor): [M'],
                'mkpts0_c' (torch.Tensor): [M, 2],
                'mkpts1_c' (torch.Tensor): [M, 2],
                'mconf' (torch.Tensor): [M]}
            NOTE: M' != M during training.
        """
        N, L, S, C = feat_c0.size(0), feat_c0.size(1), feat_c1.size(1), feat_c0.size(2)

        # normalize
        feat_c0, feat_c1 = map(lambda feat: feat / feat.shape[-1]**.5,
                               [feat_c0, feat_c1])

        if self.match_type == 'dual_softmax':
            sim_matrix = torch.einsum("nlc,nsc->nls", feat_c0,
                                      feat_c1) / self.temperature
            if mask_c0 is not None:
                sim_matrix.masked_fill_(
                    ~(mask_c0[..., None] * mask_c1[:, None]).bool(),
                    -INF)
            # dual softmax: softmax over image-0 points times softmax over image-1 points
            conf_matrix = F.softmax(sim_matrix, 1) * F.softmax(sim_matrix, 2)

        elif self.match_type == 'sinkhorn':
            # sinkhorn, dustbin included
            sim_matrix = torch.einsum("nlc,nsc->nls", feat_c0, feat_c1)
            if mask_c0 is not None:
                sim_matrix[:, :L, :S].masked_fill_(
                    ~(mask_c0[..., None] * mask_c1[:, None]).bool(),
                    -INF)

            # build uniform prior & use sinkhorn
            log_assign_matrix = self.log_optimal_transport(
                sim_matrix, self.bin_score, self.skh_iters)
            assign_matrix = log_assign_matrix.exp()
            conf_matrix = assign_matrix[:, :-1, :-1]

            # filter prediction with dustbin score (only in evaluation mode)
            if not self.training and self.skh_prefilter:
                filter0 = (assign_matrix.max(dim=2)[1] == S)[:, :-1]  # [N, L]
                filter1 = (assign_matrix.max(dim=1)[1] == L)[:, :-1]  # [N, S]
                conf_matrix[filter0[..., None].repeat(1, 1, S)] = 0
                conf_matrix[filter1[:, None].repeat(1, L, 1)] = 0

            if self.config['sparse_spvs']:
                data.update({'conf_matrix_with_bin': assign_matrix.clone()})

        data.update({'conf_matrix': conf_matrix})

        # predict coarse matches from conf_matrix
        data.update(**self.get_coarse_match(conf_matrix, data))

    @torch.no_grad()
    def get_coarse_match(self, conf_matrix, data):
        """
        Args:
            conf_matrix (torch.Tensor): [N, L, S]
            data (dict): with keys ['hw0_i', 'hw1_i', 'hw0_c', 'hw1_c']
        Returns:
            coarse_matches (dict): {
                'b_ids' (torch.Tensor): [M'],
                'i_ids' (torch.Tensor): [M'],
                'j_ids' (torch.Tensor): [M'],
                'gt_mask' (torch.Tensor): [M'],
                'm_bids' (torch.Tensor): [M],
                'mkpts0_c' (torch.Tensor): [M, 2],
                'mkpts1_c' (torch.Tensor): [M, 2],
                'mconf' (torch.Tensor): [M]}
        """
        axes_lengths = {
            'h0c': data['hw0_c'][0],
            'w0c': data['hw0_c'][1],
            'h1c': data['hw1_c'][0],
            'w1c': data['hw1_c'][1]
        }
        _device = conf_matrix.device

        # 1. confidence thresholding: keep only entries above the threshold
        mask = conf_matrix > self.thr
        mask = rearrange(mask, 'b (h0c w0c) (h1c w1c) -> b h0c w0c h1c w1c',
                         **axes_lengths)
        if 'mask0' not in data:
            mask_border(mask, self.border_rm, False)
        else:
            mask_border_with_padding(mask, self.border_rm, False,
                                     data['mask0'], data['mask1'])
        mask = rearrange(mask, 'b h0c w0c h1c w1c -> b (h0c w0c) (h1c w1c)',
                         **axes_lengths)

        # 2. mutual nearest: keep (i, j) only if each is the other's best match
        mask = mask \
            * (conf_matrix == conf_matrix.max(dim=2, keepdim=True)[0]) \
            * (conf_matrix == conf_matrix.max(dim=1, keepdim=True)[0])

        # 3. find all valid coarse matches
        # this only works when at most one `True` in each row
        mask_v, all_j_ids = mask.max(dim=2)
        b_ids, i_ids = torch.where(mask_v)
        j_ids = all_j_ids[b_ids, i_ids]
        mconf = conf_matrix[b_ids, i_ids, j_ids]

        # 4. Random sampling of training samples for fine-level LoFTR
        # (optional) pad samples with gt coarse-level matches
        if self.training:
            # NOTE:
            # The sampling is performed across all pairs in a batch without manually balancing
            # #samples for fine-level increases w.r.t. batch_size
            if 'mask0' not in data:
                num_candidates_max = mask.size(0) * max(
                    mask.size(1), mask.size(2))
            else:
                num_candidates_max = compute_max_candidates(
                    data['mask0'], data['mask1'])
            num_matches_train = int(num_candidates_max *
                                    self.train_coarse_percent)
            num_matches_pred = len(b_ids)
            assert self.train_pad_num_gt_min < num_matches_train, "min-num-gt-pad should be less than num-train-matches"

            # pred_indices is to select from prediction
            if num_matches_pred <= num_matches_train - self.train_pad_num_gt_min:
                pred_indices = torch.arange(num_matches_pred, device=_device)
            else:
                pred_indices = torch.randint(
                    num_matches_pred,
                    (num_matches_train - self.train_pad_num_gt_min, ),
                    device=_device)

            # gt_pad_indices is to select from gt padding. e.g. max(3787-4800, 200)
            gt_pad_indices = torch.randint(
                len(data['spv_b_ids']),
                (max(num_matches_train - num_matches_pred,
                     self.train_pad_num_gt_min), ),
                device=_device)
            mconf_gt = torch.zeros(len(data['spv_b_ids']), device=_device)  # set conf of gt paddings to all zero

            b_ids, i_ids, j_ids, mconf = map(
                lambda x, y: torch.cat([x[pred_indices], y[gt_pad_indices]],
                                       dim=0),
                *zip([b_ids, data['spv_b_ids']], [i_ids, data['spv_i_ids']],
                     [j_ids, data['spv_j_ids']], [mconf, mconf_gt]))

        # These matches select patches that feed into fine-level network
        coarse_matches = {'b_ids': b_ids, 'i_ids': i_ids, 'j_ids': j_ids}

        # 5. Update with matches in original image resolution
        scale = data['hw0_i'][0] / data['hw0_c'][0]
        scale0 = scale * data['scale0'][b_ids] if 'scale0' in data else scale
        scale1 = scale * data['scale1'][b_ids] if 'scale1' in data else scale
        # flattened index -> (x, y) = (index % width, index // width), then scale to pixels
        mkpts0_c = torch.stack(
            [i_ids % data['hw0_c'][1], i_ids // data['hw0_c'][1]],
            dim=1) * scale0
        mkpts1_c = torch.stack(
            [j_ids % data['hw1_c'][1], j_ids // data['hw1_c'][1]],
            dim=1) * scale1

        # These matches are the current prediction (for visualization)
        coarse_matches.update({
            'gt_mask': mconf == 0,
            'm_bids': b_ids[mconf != 0],  # mconf == 0 => gt matches
            'mkpts0_c': mkpts0_c[mconf != 0],
            'mkpts1_c': mkpts1_c[mconf != 0],
            'mconf': mconf[mconf != 0]
        })

        return coarse_matches
```

5. Fine matching

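The fine-level feature map is first split into windows: a 5×5 window is cropped around each position that corresponds to a coarse cell, giving 4800 regions so that every region lines up with one cell of the coarse matching result. The result is a 4800×3200 tensor, where 4800 is the number of regions and 3200 is the number of features per region: each region holds 5×5 pixels with 128 channels, and 5×5×128 = 3200.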
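To make these numbers concrete: assuming a 640×480 input, the fine map is 240×320 at 1/2 resolution and the window stride is 4 (the fine/coarse resolution ratio, computed in the source as `stride = data['hw0_f'][0] // data['hw0_c'][0]`). The NumPy sketch below reproduces `F.unfold` followed by `rearrange(..., 'n (c ww) l -> n l ww c')` for a single image with a plain loop, for illustration only; `window_grid` and `unfold_windows` are hypothetical helper names.

```python
import numpy as np


def window_grid(height, width, win, stride):
    """(rows, cols) of the windows that unfold produces with padding = win // 2."""
    pad = win // 2
    return (height + 2 * pad - win) // stride + 1, (width + 2 * pad - win) // stride + 1


def unfold_windows(feat, win, stride):
    """feat: [C, H, W] -> [rows * cols, win * win, C] for a single image."""
    c, h, w = feat.shape
    pad = win // 2
    padded = np.pad(feat, ((0, 0), (pad, pad), (pad, pad)))  # zero padding, like F.unfold
    rows, cols = window_grid(h, w, win, stride)
    out = np.empty((rows * cols, win * win, c), dtype=feat.dtype)
    for r in range(rows):
        for s in range(cols):
            patch = padded[:, r * stride:r * stride + win, s * stride:s * stride + win]
            out[r * cols + s] = patch.reshape(c, win * win).T  # pixels in row-major order
    return out


rows, cols = window_grid(240, 320, win=5, stride=4)
print(rows * cols, 5 * 5 * 128)  # 4800 3200

feat = np.arange(2 * 24 * 32, dtype=np.float64).reshape(2, 24, 32)
windows = unfold_windows(feat, win=5, stride=4)
# the center pixel of window (r, s) sits exactly on fine-map pixel (4r, 4s)
print(windows.shape, np.array_equal(windows[1 * 8 + 2, 12], feat[:, 4, 8]))  # (48, 25, 2) True
```

Because the padding is `win // 2`, window number `i` is centered on the fine pixel under coarse cell `i`, which is why the coarse index `i_ids` can select the window directly.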

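Coarse matching has already selected the matched regions and produced their indices. Using these indices to pick from the unfolded windows gives the coarse-matched regions. Each matched region contains 25 points, and the features of these points are refined with self-attention and cross-attention.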

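Finally, take the feature at the center of the region in image 0 and compute its dot product with every point of the corresponding region in image 1, giving a similarity vector. Softmax normalizes this vector into a heatmap, the expectation over the heatmap of the whole region is taken as the matched point, and that point is mapped back to the original image.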
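The refinement step can be sketched for a single match in NumPy: the center feature of the image-0 window is compared with all W×W features of the image-1 window, a softmax with temperature 1/√C gives the heatmap, and its expectation over a [-1, 1] grid (x along columns, y along rows, the (x, y) order kornia's `create_meshgrid` uses) gives the sub-pixel position. The function names and the default `scale=2.0` (original image vs. 1/2-resolution fine map) are assumptions for illustration.

```python
import numpy as np


def soft_argmax_refine(f0_center, f1_window, win):
    """f0_center: [C], f1_window: [win*win, C] -> (expected (x, y) in [-1, 1], summed std)."""
    c = f0_center.shape[0]
    logits = (f1_window @ f0_center) / np.sqrt(c)   # dot product with every window point, temp 1/sqrt(C)
    logits -= logits.max()                          # numerical stability
    heat = np.exp(logits)
    heat /= heat.sum()                              # softmax heatmap over the window
    lin = np.linspace(-1.0, 1.0, win)
    gx, gy = np.meshgrid(lin, lin)                  # gx varies along columns (x), gy along rows (y)
    grid = np.stack([gx.ravel(), gy.ravel()], axis=1)  # [win*win, 2] in (x, y) order
    coords = heat @ grid                            # expectation of the heatmap
    var = heat @ grid**2 - coords**2
    std = np.sqrt(np.clip(var, 1e-10, None)).sum()
    return coords, std


def to_pixel_offset(coords, win=5, scale=2.0):
    """[-1, 1] window coordinates -> pixel offset in the original image."""
    return coords * (win // 2) * scale


win = 5
f0_center = np.array([10.0, 0.0, 0.0, 0.0])
f1_window = np.zeros((win * win, 4))
f1_window[1 * win + 3] = f0_center                  # strongest response at row 1, column 3
coords, std = soft_argmax_refine(f0_center, f1_window, win)
offset = to_pixel_offset(coords)                    # approx. [0.5, -0.5] -> approx. [2.0, -2.0]
```

A sharp heatmap gives a small `std`, while a flat one (no distinctive match) spreads the expectation toward the window center and yields a large `std`, which is why the source keeps the standard deviation alongside the coordinates in `expec_f`.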

Code that splits the fine-level feature map:

```python
import torch
import torch.nn as nn
import torch.nn.functional as F
from einops.einops import rearrange, repeat


class FinePreprocess(nn.Module):
    def __init__(self, config):
        super().__init__()

        self.config = config
        self.cat_c_feat = config['fine_concat_coarse_feat']
        self.W = self.config['fine_window_size']

        d_model_c = self.config['coarse']['d_model']
        d_model_f = self.config['fine']['d_model']
        self.d_model_f = d_model_f
        if self.cat_c_feat:
            self.down_proj = nn.Linear(d_model_c, d_model_f, bias=True)
            self.merge_feat = nn.Linear(2*d_model_f, d_model_f, bias=True)

        self._reset_parameters()

    def _reset_parameters(self):
        for p in self.parameters():
            if p.dim() > 1:
                nn.init.kaiming_normal_(p, mode="fan_out", nonlinearity="relu")

    def forward(self, feat_f0, feat_f1, feat_c0, feat_c1, data):
        W = self.W
        stride = data['hw0_f'][0] // data['hw0_c'][0]  # fine/coarse resolution ratio

        data.update({'W': W})
        if data['b_ids'].shape[0] == 0:
            feat0 = torch.empty(0, self.W**2, self.d_model_f, device=feat_f0.device)
            feat1 = torch.empty(0, self.W**2, self.d_model_f, device=feat_f0.device)
            return feat0, feat1

        # 1. unfold (crop) all local windows
        # Split the feature map into 4800 windows of 5x5 each, one per coarse cell,
        # so that each window corresponds to a coarse-level match.
        feat_f0_unfold = F.unfold(feat_f0, kernel_size=(W, W), stride=stride, padding=W//2)
        feat_f0_unfold = rearrange(feat_f0_unfold, 'n (c ww) l -> n l ww c', ww=W**2)
        feat_f1_unfold = F.unfold(feat_f1, kernel_size=(W, W), stride=stride, padding=W//2)
        feat_f1_unfold = rearrange(feat_f1_unfold, 'n (c ww) l -> n l ww c', ww=W**2)

        # 2. select only the predicted matches
        # Use the indices from coarse matching to pick out the corresponding windows
        feat_f0_unfold = feat_f0_unfold[data['b_ids'], data['i_ids']]  # [n, ww, cf]
        feat_f1_unfold = feat_f1_unfold[data['b_ids'], data['j_ids']]

        # option: use coarse-level loftr feature as context: concat and linear
        if self.cat_c_feat:
            feat_c_win = self.down_proj(torch.cat([feat_c0[data['b_ids'], data['i_ids']],
                                                   feat_c1[data['b_ids'], data['j_ids']]], 0))  # [2n, c]
            feat_cf_win = self.merge_feat(torch.cat([
                torch.cat([feat_f0_unfold, feat_f1_unfold], 0),  # [2n, ww, cf]
                repeat(feat_c_win, 'n c -> n ww c', ww=W**2),  # [2n, ww, cf]
            ], -1))
            feat_f0_unfold, feat_f1_unfold = torch.chunk(feat_cf_win, 2, dim=0)

        return feat_f0_unfold, feat_f1_unfold
```

Fine matching code:

```python
import math

import torch
import torch.nn as nn
from kornia.geometry.subpix import dsnt
from kornia.utils.grid import create_meshgrid


class FineMatching(nn.Module):
    """FineMatching with s2d paradigm"""

    def __init__(self):
        super().__init__()

    def forward(self, feat_f0, feat_f1, data):
        """
        Args:
            feat0 (torch.Tensor): [M, WW, C]
            feat1 (torch.Tensor): [M, WW, C]
            data (dict)
        Update:
            data (dict):{
                'expec_f' (torch.Tensor): [M, 3],
                'mkpts0_f' (torch.Tensor): [M, 2],
                'mkpts1_f' (torch.Tensor): [M, 2]}
        """
        M, WW, C = feat_f0.shape
        W = int(math.sqrt(WW))
        scale = data['hw0_i'][0] / data['hw0_f'][0]
        self.M, self.W, self.WW, self.C, self.scale = M, W, WW, C, scale

        # corner case: if no coarse matches found
        if M == 0:
            assert not self.training, "M is always >0, when training, see coarse_matching.py"
            # logger.warning('No matches found in coarse-level.')
            data.update({
                'expec_f': torch.empty(0, 3, device=feat_f0.device),
                'mkpts0_f': data['mkpts0_c'],
                'mkpts1_f': data['mkpts1_c'],
            })
            return

        # take the feature at the center of each image-0 window
        feat_f0_picked = feat_f0[:, WW//2, :]
        # dot product between the center feature and every point of the image-1 window
        sim_matrix = torch.einsum('mc,mrc->mr', feat_f0_picked, feat_f1)
        softmax_temp = 1. / C**.5
        # softmax-normalize into a W x W heatmap
        heatmap = torch.softmax(softmax_temp * sim_matrix, dim=1).view(-1, W, W)

        # compute coordinates from heatmap: expectation in normalized window coordinates [-1, 1]
        coords_normalized = dsnt.spatial_expectation2d(heatmap[None], True)[0]  # [M, 2]
        grid_normalized = create_meshgrid(W, W, True, heatmap.device).reshape(1, -1, 2)  # [1, WW, 2]

        # compute std over <x, y>
        var = torch.sum(grid_normalized**2 * heatmap.view(-1, WW, 1), dim=1) - coords_normalized**2  # [M, 2]
        std = torch.sum(torch.sqrt(torch.clamp(var, min=1e-10)), -1)  # [M] clamp needed for numerical stability

        # for fine-level supervision
        data.update({'expec_f': torch.cat([coords_normalized, std.unsqueeze(1)], -1)})

        # compute absolute kpt coords (mapped back to the original image)
        self.get_fine_match(coords_normalized, data)

    @torch.no_grad()
    def get_fine_match(self, coords_normed, data):
        W, WW, C, scale = self.W, self.WW, self.C, self.scale

        # mkpts0_f and mkpts1_f
        mkpts0_f = data['mkpts0_c']
        scale1 = scale * data['scale1'][data['b_ids']] if 'scale1' in data else scale
        # offset = normalized coords * half window size * fine-to-image scale
        mkpts1_f = data['mkpts1_c'] + (coords_normed * (W // 2) * scale1)[:len(data['mconf'])]

        data.update({
            "mkpts0_f": mkpts0_f,
            "mkpts1_f": mkpts1_f
        })
```

Source code link (Baidu Netdisk): https://pan.baidu.com/s/1nWbz-dXyzO7dN8lrospMVw?pwd=8rgv

Extraction code: 8rgv

Article source: https://www.toymoban.com/news/detail-467806.html

This concludes the article "Keypoint matching: a detailed walkthrough of SenseTime's LoFTR source code".