开源框架springboot框架中集成es。使用

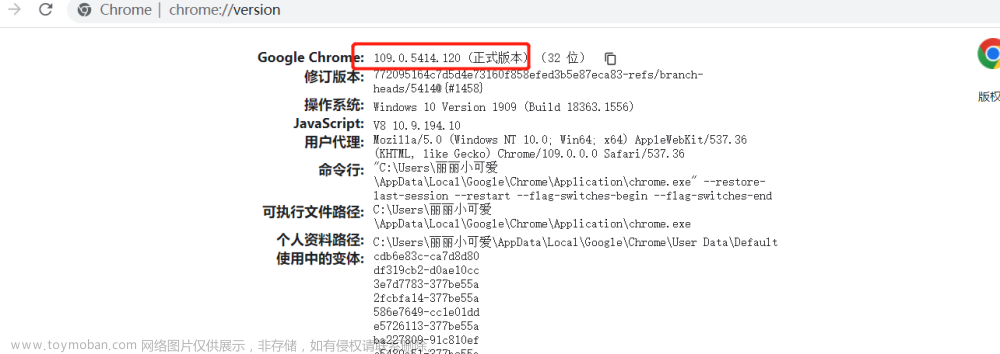

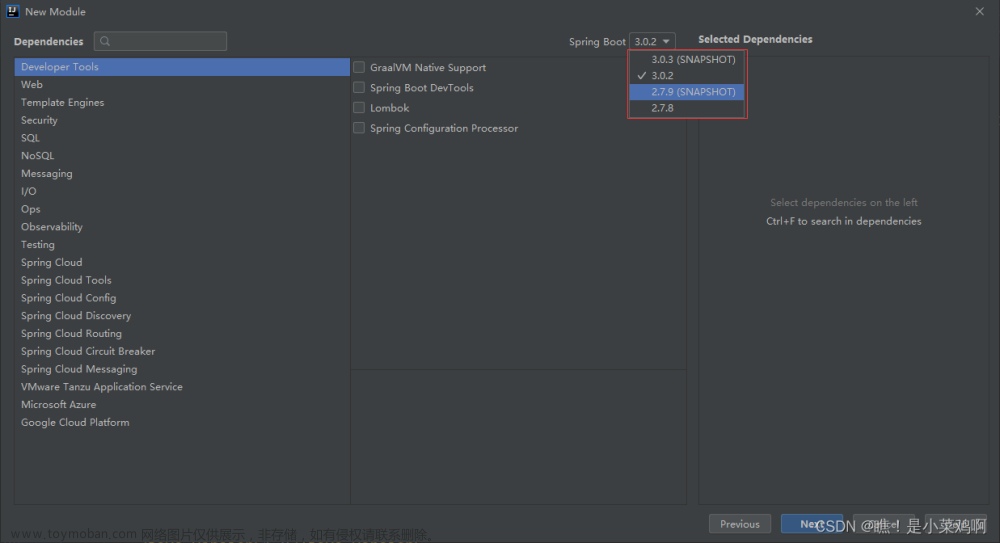

org.springframework.data.elasticsearch下的依赖,实现对elasticsearch的CURD,非常方便,但是springboot和elasticsearch版本对应很严格,对应版本不统一启动会报错。

Elasticsearch 7.x installation steps

<dependency>
    <groupId>org.springframework.boot</groupId>
    <artifactId>spring-boot-starter-data-elasticsearch</artifactId>
</dependency>

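Note:

The Elasticsearch version managed by Spring Boot may differ from the version you actually run, which can cause the project to fail. In that case, manually change the version so that it matches the one you use.

Exception on version mismatch:

Elasticsearch and Spring Boot version mapping

Spring Boot 2.1.6 corresponds to Elasticsearch 6.3.2

Spring Boot 2.2.5 corresponds to Elasticsearch 7.6.0

Spring Boot 2.2.6 corresponds to Elasticsearch 7.7.0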
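A mismatch like the one described above is easier to spot if the two version strings are compared before the application relies on the client. The following is a minimal sketch in plain Java; the class `EsVersionCheck` and the rule "same major version" are assumptions made for illustration, not a rule taken from Spring Boot or Elasticsearch.

```java
import java.util.Objects;

/** Hypothetical helper: compares the major version of the client library with the server's. */
final class EsVersionCheck {

    /** Returns the leading numeric component of a version string such as "7.6.0". */
    static int major(String version) {
        Objects.requireNonNull(version, "version");
        String head = version.trim().split("\\.")[0];
        return Integer.parseInt(head);
    }

    /** True when both versions share the same major number (an assumed compatibility rule). */
    static boolean sameMajor(String clientVersion, String serverVersion) {
        return major(clientVersion) == major(serverVersion);
    }

    public static void main(String[] args) {
        System.out.println(sameMajor("6.3.2", "7.6.0")); // false
        System.out.println(sameMajor("7.6.0", "7.7.0")); // true
    }
}
```

In a real project the two strings would come from the client library on the classpath and from the running cluster; how they are obtained is left out here.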

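Configuring Elasticsearch

import org.apache.http.HttpHost;
import org.elasticsearch.client.RestClient;
import org.elasticsearch.client.RestHighLevelClient;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;

@Configuration
public class ESClient {

    // Exposes a RestHighLevelClient bean connected to a local node over HTTP
    @Bean
    public RestHighLevelClient restHighLevelClient() {
        RestHighLevelClient client = new RestHighLevelClient(
                RestClient.builder(
                        new HttpHost("127.0.0.1", 9200, "http")
                )
        );
        return client;
    }
}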

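Test class

// JSON is assumed to be fastjson's com.alibaba.fastjson.JSON; User is the project's own POJO
import com.alibaba.fastjson.JSON;
import org.elasticsearch.action.admin.indices.delete.DeleteIndexRequest;
import org.elasticsearch.action.bulk.BulkRequest;
import org.elasticsearch.action.bulk.BulkResponse;
import org.elasticsearch.action.delete.DeleteRequest;
import org.elasticsearch.action.delete.DeleteResponse;
import org.elasticsearch.action.get.GetRequest;
import org.elasticsearch.action.get.GetResponse;
import org.elasticsearch.action.index.IndexRequest;
import org.elasticsearch.action.index.IndexResponse;
import org.elasticsearch.action.search.SearchRequest;
import org.elasticsearch.action.search.SearchResponse;
import org.elasticsearch.action.support.master.AcknowledgedResponse;
import org.elasticsearch.action.update.UpdateRequest;
import org.elasticsearch.action.update.UpdateResponse;
import org.elasticsearch.client.RequestOptions;
import org.elasticsearch.client.RestHighLevelClient;
import org.elasticsearch.client.indices.CreateIndexRequest;
import org.elasticsearch.client.indices.CreateIndexResponse;
import org.elasticsearch.client.indices.GetIndexRequest;
import org.elasticsearch.common.xcontent.XContentType;
import org.elasticsearch.index.query.QueryBuilders;
import org.elasticsearch.index.query.TermQueryBuilder;
import org.elasticsearch.search.SearchHit;
import org.elasticsearch.search.builder.SearchSourceBuilder;
import org.junit.jupiter.api.Test;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.beans.factory.annotation.Qualifier;
import org.springframework.boot.test.context.SpringBootTest;

import java.io.IOException;
import java.util.ArrayList;
import java.util.List;

@SpringBootTest
class EstestApplicationTests {

    @Autowired
    @Qualifier("restHighLevelClient")
    private RestHighLevelClient client;

    // Create an index
    @Test
    void createIndex() throws IOException {
        // Build the request
        CreateIndexRequest request = new CreateIndexRequest("test1");
        // Execute the request
        CreateIndexResponse response = client.indices().create(request, RequestOptions.DEFAULT);
        System.out.println(response.toString()); // org.elasticsearch.client.indices.CreateIndexResponse@6a5dc7e
    }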

    // Check whether the index exists: true if it does, false otherwise
    @Test
    void existsIndex() throws IOException {
        // Build the request
        GetIndexRequest request = new GetIndexRequest("test1");
        boolean exists = client.indices().exists(request, RequestOptions.DEFAULT);
        System.out.println(exists);
    }

    // Delete the index
    @Test
    void deleteIndex() throws IOException {
        DeleteIndexRequest request = new DeleteIndexRequest("test1");
        AcknowledgedResponse delete = client.indices().delete(request, RequestOptions.DEFAULT);
        // true if the deletion was acknowledged, false otherwise
        System.out.println(delete.isAcknowledged());
    }

    // Document operations ================================================================

    // Add a document
    @Test
    void createDocument() throws IOException {
        // Data to store
        User user = new User("张三", 23);
        // Target index
        IndexRequest request = new IndexRequest("test1");
        request.id("1").timeout("1s");
        // Attach the data to the request
        request.source(JSON.toJSONString(user), XContentType.JSON);
        // Execute the request
        IndexResponse resp = client.index(request, RequestOptions.DEFAULT);
        System.out.println(resp);
        System.out.println(resp.status()); // CREATED
    }

    // Update a document
    @Test
    void updateDocument() throws IOException {
        // Data to update
        // User user = new User("李四", 20);
        User user = new User();
        user.setName("王五");
        // Target index and document id
        UpdateRequest request = new UpdateRequest("test1", "1");
        // Set the document id to update and the request timeout
        request.id("1").timeout("1s");
        request.doc(JSON.toJSONString(user), XContentType.JSON);
        // Execute the update. Note: a field left unset on the object is still written
        // with its default value (for example, a primitive int would be sent as 0)
        UpdateResponse update = client.update(request, RequestOptions.DEFAULT);
        System.out.println(update);
        System.out.println(update.status()); // OK
    }

    // Check whether a document exists
    @Test
    void existsDocument() throws IOException {
        GetRequest request = new GetRequest("test1", "1");
        boolean exists = client.exists(request, RequestOptions.DEFAULT);
        // true if the document exists, false otherwise
        System.out.println(exists);
    }

    // Delete a document
    @Test
    void deleteDocument() throws IOException {
        DeleteRequest request = new DeleteRequest("test1", "1");
        DeleteResponse delete = client.delete(request, RequestOptions.DEFAULT);
        System.out.println(delete);
        System.out.println(delete.status()); // OK
    }

    // Get a document by id
    @Test
    void getDocument() throws IOException {
        GetRequest request = new GetRequest("test1", "1");
        GetResponse resp = client.get(request, RequestOptions.DEFAULT);
        System.out.println(resp);
        // The document source as a string; null when there is no data
        System.out.println(resp.getSourceAsString());
    }

    // Bulk operation; for bulk update or delete, only the request type added to the bulk changes
    @Test
    void bulkadd() throws IOException {
        BulkRequest bulkRequest = new BulkRequest();
        bulkRequest.timeout("10s");
        List<User> list = new ArrayList<>();
        list.add(new User("chen1", 20));
        list.add(new User("chen2", 20));
        list.add(new User("chen3", 20));
        list.add(new User("chen4", 20));
        list.add(new User("chen5", 20));
        list.add(new User("chen6", 20));
        list.add(new User("chen7", 20));
        list.add(new User("chen8", 20));
        // Note: a repeated id overwrites the existing document
        for (int i = 0; i < list.size(); i++) {
            bulkRequest.add(new IndexRequest("test1")
                    .id("" + (i + 1))
                    .source(JSON.toJSONString(list.get(i)), XContentType.JSON));
        }
        // Execute
        BulkResponse bulk = client.bulk(bulkRequest, RequestOptions.DEFAULT);
        System.out.println(bulk);
        System.out.println(bulk.status());
    }

    // Search documents by condition
    @Test
    void searchDocument() throws IOException {
        // Build the request
        SearchRequest request = new SearchRequest("test1");
        // Create the search source builder
        SearchSourceBuilder builder = new SearchSourceBuilder();
        // Exact-match (term) query builder; many other builders are available through QueryBuilders,
        // which wraps the query and filter commands used here
        TermQueryBuilder termQueryBuilder = QueryBuilders.termQuery("name", "chen1");
        // Put the query condition into the search source builder
        builder.query(termQueryBuilder);
        // Put the search source into the request
        request.source(builder);
        // Execute the search
        SearchResponse search = client.search(request, RequestOptions.DEFAULT);
        // The result matches what the equivalent REST command returns
        System.out.println(JSON.toJSONString(search.getHits().getHits()));
        for (SearchHit hit : search.getHits().getHits()) {
            // Iterate over the hits and print each one as a map
            System.out.println(hit.getSourceAsMap());
        }
    }
}
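The update test notes that unset fields can end up overwriting stored values. The reason is that a partial update merges whatever keys the submitted document contains into the stored one. Below is a minimal in-memory sketch of that merge; `PartialUpdateSketch` and `applyPartial` are hypothetical names and plain maps stand in for documents, so nothing here calls Elasticsearch.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Hypothetical in-memory model of a partial update; not part of any Elasticsearch API. */
final class PartialUpdateSketch {

    /** Merges the partial document into the stored one; every key present in the partial wins. */
    static Map<String, Object> applyPartial(Map<String, Object> stored, Map<String, Object> partial) {
        Map<String, Object> merged = new LinkedHashMap<>(stored);
        merged.putAll(partial);
        return merged;
    }

    public static void main(String[] args) {
        Map<String, Object> stored = new LinkedHashMap<>();
        stored.put("name", "Zhang San");
        stored.put("age", 23);

        // A whole object serialized with an unset primitive field still carries age = 0
        Map<String, Object> wholeObject = new LinkedHashMap<>();
        wholeObject.put("name", "Wang Wu");
        wholeObject.put("age", 0);

        // A partial document that lists only the field to change
        Map<String, Object> onlyName = new LinkedHashMap<>();
        onlyName.put("name", "Wang Wu");

        System.out.println(applyPartial(stored, wholeObject).get("age")); // 0
        System.out.println(applyPartial(stored, onlyName).get("age"));    // 23
    }
}
```

One way to avoid the unintended overwrite is therefore to send only the fields that should change, rather than a serialized copy of the whole object.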

Object-based ES operations with Spring Data

ES entity object

import org.springframework.data.annotation.Id;
import org.springframework.data.elasticsearch.annotations.Document;
import org.springframework.data.elasticsearch.annotations.Field;
import org.springframework.data.elasticsearch.annotations.FieldType;

import java.io.Serializable;
import java.math.BigDecimal;
import java.util.Date;
import java.util.List;

@Document(indexName = "bookinfosearch", type = "bookinfo")
public class BookInfoSearch implements Serializable {

    private static final long serialVersionUID = 1L;

    // An id must be specified
    @Id
    private String id;

    // The analyzer and field type are configured here; both are optional and the defaults also work
    // Name
    @Field(type = FieldType.Text, analyzer = "ik_max_word")
    private String name;

    // Short name
    @Field(type = FieldType.Text, analyzer = "ik_max_word")
    private String bookName;

    // Book version number
    private BigDecimal currentVersion;

    // First letter of the book title
    @Field(type = FieldType.Keyword)
    private String initials;

    // Operation type: add, update, delete
    @Field(type = FieldType.Keyword)
    private String type;

    // Aggregated category information of the book
    private List<String> tagJson;

    // Category ids
    @Field(type = FieldType.Keyword)
    private List<String> tagIds; // collection, e.g. 1,2,3

    // Category names
    @Field(type = FieldType.Keyword)
    private List<String> tagNames;

    // Attribute collection
    @Field(type = FieldType.Text, analyzer = "ik_smart")
    private List<String> attributes;

    // Creation time
    private Date createTime;

    // Update time
    private Date updateTime;

    public String getId() {
        return id;
    }

    public void setId(String id) {
        this.id = id;
    }

    public String getName() {
        return name;
    }

    public void setName(String name) {
        this.name = name;
    }

    public BigDecimal getCurrentVersion() {
        return currentVersion;
    }

    public void setCurrentVersion(BigDecimal currentVersion) {
        this.currentVersion = currentVersion;
    }

    public String getInitials() {
        return initials;
    }

    public void setInitials(String initials) {
        this.initials = initials;
    }

    public String getType() {
        return type;
    }

    public void setType(String type) {
        this.type = type;
    }

    public List<String> getTagIds() {
        return tagIds;
    }

    public void setTagIds(List<String> tagIds) {
        this.tagIds = tagIds;
    }

    public List<String> getTagNames() {
        return tagNames;
    }

    public void setTagNames(List<String> tagNames) {
        this.tagNames = tagNames;
    }

    public List<String> getAttributes() {
        return attributes;
    }

    public void setAttributes(List<String> attributes) {
        this.attributes = attributes;
    }

    public Date getCreateTime() {
        return createTime;
    }

    public void setCreateTime(Date createTime) {
        this.createTime = createTime;
    }

    public Date getUpdateTime() {
        return updateTime;
    }

    public void setUpdateTime(Date updateTime) {
        this.updateTime = updateTime;
    }
}

ES repository interface

import org.springframework.data.repository.CrudRepository;

// The entity type is the BookInfoSearch class defined above
public interface KgInfoSearchMapper extends CrudRepository<BookInfoSearch, String> {
}

AbstractResultMapper

Class AbstractResultMapper

org.springframework.data.elasticsearch.core

java.lang.Object

org.springframework.data.elasticsearch.core.AbstractResultMapper

All implemented interfaces:

GetResultMapper, MultiGetResultMapper, ResultsMapper, SearchResultMapper

Constructor and description

AbstractResultMapper(EntityMapper entityMapper)

All methods

EntityMapper getEntityMapper()

<T> T mapEntity(String source, Class<T> clazz)

public abstract class AbstractResultMapper implements ResultsMapper {

    private EntityMapper entityMapper;

    public AbstractResultMapper(EntityMapper entityMapper) {
        Assert.notNull(entityMapper, "EntityMapper must not be null!");
        this.entityMapper = entityMapper;
    }

    public <T> T mapEntity(String source, Class<T> clazz) {
        if (StringUtils.isEmpty(source)) {
            return null;
        } else {
            try {
                return this.entityMapper.mapToObject(source, clazz);
            } catch (IOException var4) {
                throw new ElasticsearchException("failed to map source [ " + source + "] to class " + clazz.getSimpleName(), var4);
            }
        }
    }

    public EntityMapper getEntityMapper() {
        return this.entityMapper;
    }
}

Mapper

org.springframework.data.elasticsearch

@Component
public class MyResultMapper extends AbstractResultMapper {

    private final MappingContext<? extends ElasticsearchPersistentEntity<?>, ElasticsearchPersistentProperty> mappingContext;

    public MyResultMapper() {
        this(new SimpleElasticsearchMappingContext());
    }

    public MyResultMapper(MappingContext<? extends ElasticsearchPersistentEntity<?>, ElasticsearchPersistentProperty> mappingContext) {
        super(new DefaultEntityMapper(mappingContext));
        Assert.notNull(mappingContext, "MappingContext must not be null!");
        this.mappingContext = mappingContext;
    }

    public MyResultMapper(EntityMapper entityMapper) {
        this(new SimpleElasticsearchMappingContext(), entityMapper);
    }

    public MyResultMapper(
            MappingContext<? extends ElasticsearchPersistentEntity<?>, ElasticsearchPersistentProperty> mappingContext,
            EntityMapper entityMapper) {
        super(entityMapper);
        Assert.notNull(mappingContext, "MappingContext must not be null!");
        this.mappingContext = mappingContext;
    }

    @Override
    public <T> AggregatedPage<T> mapResults(SearchResponse response, Class<T> clazz, Pageable pageable) {
        long totalHits = response.getHits().getTotalHits();
        float maxScore = response.getHits().getMaxScore();

        List<T> results = new ArrayList<>();
        for (SearchHit hit : response.getHits()) {
            if (hit != null) {
                T result = null;
                if (!StringUtils.isEmpty(hit.getSourceAsString())) {
                    result = mapEntity(hit.getSourceAsString(), clazz);
                } else {
                    result = mapEntity(hit.getFields().values(), clazz);
                }
                setPersistentEntityId(result, hit.getId(), clazz);
                setPersistentEntityVersion(result, hit.getVersion(), clazz);
                setPersistentEntityScore(result, hit.getScore(), clazz);
                populateScriptFields(result, hit);
                results.add(result);
            }
        }

        return new AggregatedPageImpl<T>(results, pageable, totalHits, response.getAggregations(), response.getScrollId(),
                maxScore);
    }

    private String concat(Text[] texts) {
        StringBuilder sb = new StringBuilder();
        for (Text text : texts) {
            sb.append(text.toString());
        }
        return sb.toString();
    }

    private <T> void populateScriptFields(T result, SearchHit hit) {
        if (hit.getFields() != null && !hit.getFields().isEmpty() && result != null) {
            for (java.lang.reflect.Field field : result.getClass().getDeclaredFields()) {
                ScriptedField scriptedField = field.getAnnotation(ScriptedField.class);
                if (scriptedField != null) {
                    String name = scriptedField.name().isEmpty() ? field.getName() : scriptedField.name();
                    DocumentField searchHitField = hit.getFields().get(name);
                    if (searchHitField != null) {
                        field.setAccessible(true);
                        try {
                            field.set(result, searchHitField.getValue());
                        } catch (IllegalArgumentException e) {
                            throw new ElasticsearchException(
                                    "failed to set scripted field: " + name + " with value: " + searchHitField.getValue(), e);
                        } catch (IllegalAccessException e) {
                            throw new ElasticsearchException("failed to access scripted field: " + name, e);
                        }
                    }
                }
            }
        }

        // Copy the concatenated highlight fragments over the matching bean properties
        for (HighlightField field : hit.getHighlightFields().values()) {
            try {
                PropertyUtils.setProperty(result, field.getName(), concat(field.fragments()));
            } catch (InvocationTargetException | IllegalAccessException | NoSuchMethodException e) {
                throw new ElasticsearchException("failed to set highlighted value for field: " + field.getName()
                        + " with value: " + Arrays.toString(field.getFragments()), e);
            }
        }
    }

    private <T> T mapEntity(Collection<DocumentField> values, Class<T> clazz) {
        return mapEntity(buildJSONFromFields(values), clazz);
    }

    private String buildJSONFromFields(Collection<DocumentField> values) {
        JsonFactory nodeFactory = new JsonFactory();
        try {
            ByteArrayOutputStream stream = new ByteArrayOutputStream();
            JsonGenerator generator = nodeFactory.createGenerator(stream, JsonEncoding.UTF8);
            generator.writeStartObject();
            for (DocumentField value : values) {
                if (value.getValues().size() > 1) {
                    generator.writeArrayFieldStart(value.getName());
                    for (Object val : value.getValues()) {
                        generator.writeObject(val);
                    }
                    generator.writeEndArray();
                } else {
                    generator.writeObjectField(value.getName(), value.getValue());
                }
            }
            generator.writeEndObject();
            generator.flush();
            return new String(stream.toByteArray(), Charset.forName("UTF-8"));
        } catch (IOException e) {
            return null;
        }
    }

    @Override
    public <T> T mapResult(GetResponse response, Class<T> clazz) {
        T result = mapEntity(response.getSourceAsString(), clazz);
        if (result != null) {
            setPersistentEntityId(result, response.getId(), clazz);
            setPersistentEntityVersion(result, response.getVersion(), clazz);
        }
        return result;
    }

    @Override
    public <T> LinkedList<T> mapResults(MultiGetResponse responses, Class<T> clazz) {
        LinkedList<T> list = new LinkedList<>();
        for (MultiGetItemResponse response : responses.getResponses()) {
            if (!response.isFailed() && response.getResponse().isExists()) {
                T result = mapEntity(response.getResponse().getSourceAsString(), clazz);
                setPersistentEntityId(result, response.getResponse().getId(), clazz);
                setPersistentEntityVersion(result, response.getResponse().getVersion(), clazz);
                list.add(result);
            }
        }
        return list;
    }

    private <T> void setPersistentEntityId(T result, String id, Class<T> clazz) {
        if (clazz.isAnnotationPresent(Document.class)) {
            ElasticsearchPersistentEntity<?> persistentEntity = mappingContext.getRequiredPersistentEntity(clazz);
            ElasticsearchPersistentProperty idProperty = persistentEntity.getIdProperty();

            // Only deal with String because ES generated Ids are strings !
            if (idProperty != null && idProperty.getType().isAssignableFrom(String.class)) {
                persistentEntity.getPropertyAccessor(result).setProperty(idProperty, id);
            }
        }
    }

    private <T> void setPersistentEntityVersion(T result, long version, Class<T> clazz) {
        if (clazz.isAnnotationPresent(Document.class)) {
            ElasticsearchPersistentEntity<?> persistentEntity = mappingContext.getPersistentEntity(clazz);
            ElasticsearchPersistentProperty versionProperty = persistentEntity.getVersionProperty();

            // Only deal with Long because ES versions are longs !
            if (versionProperty != null && versionProperty.getType().isAssignableFrom(Long.class)) {
                // check that a version was actually returned in the response, -1 would indicate that
                // a search didn't request the version ids in the response, which would be an issue
                Assert.isTrue(version != -1, "Version in response is -1");
                persistentEntity.getPropertyAccessor(result).setProperty(versionProperty, version);
            }
        }
    }

    private <T> void setPersistentEntityScore(T result, float score, Class<T> clazz) {
        if (clazz.isAnnotationPresent(Document.class)) {
            ElasticsearchPersistentEntity<?> entity = mappingContext.getRequiredPersistentEntity(clazz);
            if (!entity.hasScoreProperty()) {
                return;
            }
            entity.getPropertyAccessor(result) //
                    .setProperty(entity.getScoreProperty(), score);
        }
    }
}
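The part of this mapper that differs from a plain result mapping is the highlight handling: the fragments returned for a field are joined and written over that field's plain value. The following is a minimal sketch of just that step, using plain maps and string lists instead of `SearchHit` and `HighlightField`; the class `HighlightMergeSketch` and its method names are hypothetical.

```java
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical stand-in for the highlight handling; uses plain maps instead of SearchHit. */
final class HighlightMergeSketch {

    /** Joins the fragments of one highlighted field into a single string. */
    static String concat(List<String> fragments) {
        StringBuilder sb = new StringBuilder();
        for (String fragment : fragments) {
            sb.append(fragment);
        }
        return sb.toString();
    }

    /** Returns a copy of the source in which every highlighted field replaces the plain value. */
    static Map<String, Object> applyHighlights(Map<String, Object> source,
                                               Map<String, List<String>> highlights) {
        Map<String, Object> result = new LinkedHashMap<>(source);
        for (Map.Entry<String, List<String>> entry : highlights.entrySet()) {
            result.put(entry.getKey(), concat(entry.getValue()));
        }
        return result;
    }

    public static void main(String[] args) {
        Map<String, Object> source = new LinkedHashMap<>();
        source.put("name", "Java in Action");
        source.put("initials", "J");

        Map<String, List<String>> highlights = new LinkedHashMap<>();
        highlights.put("name", Arrays.asList("<font style='color:red'>Java</font> in Action"));

        // Only "name" is replaced; "initials" keeps its plain value
        System.out.println(applyHighlights(source, highlights));
    }
}
```

Because the highlighted text replaces the stored value in the returned object, callers that need the plain value as well would have to keep a separate copy.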

Service

/**
 * Search.
 *
 * @param bookInfoSearchQueryParam query parameters; same fields as the entity object
 * @return the paged search result
 */
PageEsResult searchInfoByBookName(BookInfoSearchQueryParam bookInfoSearchQueryParam);

ServiceImpl

@Override
public PageEsResult searchInfoByBookName(BookInfoSearchQueryParam bookInfoSearchQueryParam) {
    // 1. Build the query conditions
    BoolQueryBuilder queryBuilder = QueryBuilders.boolQuery();
    if (bookInfoSearchQueryParam != null && ObjectUtil.isNotEmpty(bookInfoSearchQueryParam.getKey())) {
        String key = bookInfoSearchQueryParam.getKey();
        // Escape query-syntax characters in the user-supplied keyword
        key = QueryParser.escape(key);
        MultiMatchQueryBuilder matchQueryBuilder = QueryBuilders
                // Multi-field match against the keyword (here only the name field)
                .multiMatchQuery(key, "name").analyzer("ik_max_word")
                .field("name", 0.1f);
        queryBuilder.must(matchQueryBuilder);
    }
    if (bookInfoSearchQueryParam != null && ObjectUtil.isNotEmpty(bookInfoSearchQueryParam.getTagId())) {
        queryBuilder.must(QueryBuilders.termQuery("tagIds", bookInfoSearchQueryParam.getTagId()));
    }

    // 2. Build the aggregation
    TermsAggregationBuilder agg = null;
    if (bookInfoSearchQueryParam != null && ObjectUtil.isNotEmpty(bookInfoSearchQueryParam.getAgg())) {
        // The ".keyword" sub-field aggregates on the raw value instead of the analyzed tokens
        agg = AggregationBuilders.terms(bookInfoSearchQueryParam.getAgg())
                .field(bookInfoSearchQueryParam.getAgg() + ".keyword")
                .size(Integer.MAX_VALUE);
    }

    String sortField = "";
    if (bookInfoSearchQueryParam != null && ObjectUtil.isNotEmpty(bookInfoSearchQueryParam.getSortField())) {
        sortField = bookInfoSearchQueryParam.getSortField();
    }
    String sortRule = "";
    if (bookInfoSearchQueryParam != null && ObjectUtil.isNotEmpty(bookInfoSearchQueryParam.getSortRule())) {
        sortRule = bookInfoSearchQueryParam.getSortRule();
    }

    NativeSearchQueryBuilder nativeSearchQueryBuilder = new NativeSearchQueryBuilder();
    nativeSearchQueryBuilder.withTypes("bookinfo"); // type
    nativeSearchQueryBuilder.withIndices("bookinfosearch"); // index
    nativeSearchQueryBuilder.withQuery(queryBuilder); // add the query conditions
    if (agg != null) {
        nativeSearchQueryBuilder.addAggregation(agg);
    }
    if (bookInfoSearchQueryParam != null && ObjectUtil.isNotEmpty(bookInfoSearchQueryParam.getKey())) {
        HighlightBuilder.Field field = new HighlightBuilder.Field("name").preTags("<font style='color:red'>").postTags("</font>");
        HighlightBuilder.Field fieldAttributes = new HighlightBuilder.Field("attributes").preTags("<font style='color:red'>").postTags("</font>");
        field.fragmentSize(500);
        nativeSearchQueryBuilder.withHighlightFields(field, fieldAttributes);
    }
    // Pages the documents that match the query (not the aggregation buckets)
    nativeSearchQueryBuilder.withPageable(PageRequest.of(bookInfoSearchQueryParam.getPage(), bookInfoSearchQueryParam.getPageSize()));
    if (ObjectUtil.isNotEmpty(sortField) && ObjectUtil.isNotEmpty(sortRule)) {
        nativeSearchQueryBuilder.withSort(SortBuilders.fieldSort(sortField).order(SortOrder.valueOf(sortRule)));
    }
    NativeSearchQuery build = nativeSearchQueryBuilder.build();

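    // Execute the query
    AggregatedPage<BookInfoSearch> testEntities = elasticsearchTemplate.queryForPage(build, BookInfoSearch.class, myResultMapper);

    // Extract the aggregation result; guard against the case where no aggregation was requested
    Aggregations entitiesAggregations = testEntities.getAggregations();
    Terms terms = entitiesAggregations == null ? null
            : (Terms) entitiesAggregations.asMap().get(bookInfoSearchQueryParam.getAgg());

    // Collect each value of the aggregated field together with its document count
    List<String> aggKeys = new ArrayList<>(); // variable name chosen here; the original only says "list collection"
    if (terms != null && terms.getBuckets() != null && terms.getBuckets().size() > 0) {
        for (Terms.Bucket bucket : terms.getBuckets()) {
            String keyAsString = bucket.getKeyAsString(); // value of the aggregated field
            long docCount = bucket.getDocCount(); // number of documents with that value
            log.info("keyAsString={},value={}", keyAsString, docCount);
            aggKeys.add(keyAsString);
        }
    }

    // Record the search keyword as search history
    JSONObject jsonObject = new JSONObject();
    jsonObject.put("key", bookInfoSearchQueryParam.getKey());

    // The original does not show how the PageEsResult is assembled from the page and the bucket keys
    return result;
}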
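The bucket loop at the end of the method reads one key and one document count per distinct value of the aggregated field. To make concrete what those buckets contain, here is a minimal in-memory sketch that counts documents per tag value. `TermsBucketSketch` and `countByTerm` are hypothetical names, and unlike a real terms aggregation (which by default orders buckets by document count) this sketch keeps first-seen order.

```java
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Map;

/** Hypothetical in-memory illustration of what terms buckets contain. */
final class TermsBucketSketch {

    /** Counts how many documents carry each value; a document counts once per distinct value. */
    static Map<String, Long> countByTerm(List<List<String>> tagNamesPerDocument) {
        Map<String, Long> buckets = new LinkedHashMap<>();
        for (List<String> tags : tagNamesPerDocument) {
            for (String tag : new LinkedHashSet<>(tags)) {
                buckets.merge(tag, 1L, Long::sum);
            }
        }
        return buckets;
    }

    public static void main(String[] args) {
        List<List<String>> docs = Arrays.asList(
                Arrays.asList("novel", "classic"),
                Arrays.asList("novel"),
                Arrays.asList("history"));

        // Each entry corresponds to one bucket: key plus document count
        for (Map.Entry<String, Long> bucket : countByTerm(docs).entrySet()) {
            System.out.println("keyAsString=" + bucket.getKey() + ",value=" + bucket.getValue());
        }
    }
}
```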

Request parameter (Param) object

Built on the ES entity object, with additional search-condition fields:

private String sortField; // sort field: updateTime for time, initials for first letter
private String sortRule; // sort rule: ascending (ASC) or descending (DESC)
private boolean allQuery = false; // whether to query everything
private String agg; // name of the field to aggregate on
private String sessionId;
private int pageSize = 10;
private int page = 0;
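Two of these fields are easy to get wrong on the caller's side: `sortRule` is passed to an enum `valueOf`, which throws for anything other than the exact constant name, and `page` is zero-based. The following is a minimal sketch of defensive handling in plain Java; `SearchParamSketch`, its local `Direction` enum, and both method names are hypothetical and only stand in for the real sort-order and paging types.

```java
import java.util.Locale;

/** Hypothetical helpers for validating the sortRule, page, and pageSize parameters. */
final class SearchParamSketch {

    /** Local stand-in for a sort-order enum with the two constants named in the Param object. */
    enum Direction { ASC, DESC }

    /** Parses values such as "asc" or " DESC "; returns the fallback for null, blank, or unknown input. */
    static Direction parseDirection(String sortRule, Direction fallback) {
        if (sortRule == null || sortRule.trim().isEmpty()) {
            return fallback;
        }
        try {
            return Direction.valueOf(sortRule.trim().toUpperCase(Locale.ROOT));
        } catch (IllegalArgumentException e) {
            return fallback;
        }
    }

    /** With a zero-based page index, page * pageSize is the offset of the first returned hit. */
    static long offset(int page, int pageSize) {
        if (page < 0 || pageSize < 1) {
            throw new IllegalArgumentException("page must be >= 0 and pageSize >= 1");
        }
        return (long) page * pageSize;
    }

    public static void main(String[] args) {
        System.out.println(parseDirection("desc", Direction.ASC));     // DESC
        System.out.println(parseDirection("sideways", Direction.ASC)); // ASC
        System.out.println(offset(0, 10));                             // 0
        System.out.println(offset(2, 10));                             // 20
    }
}
```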
