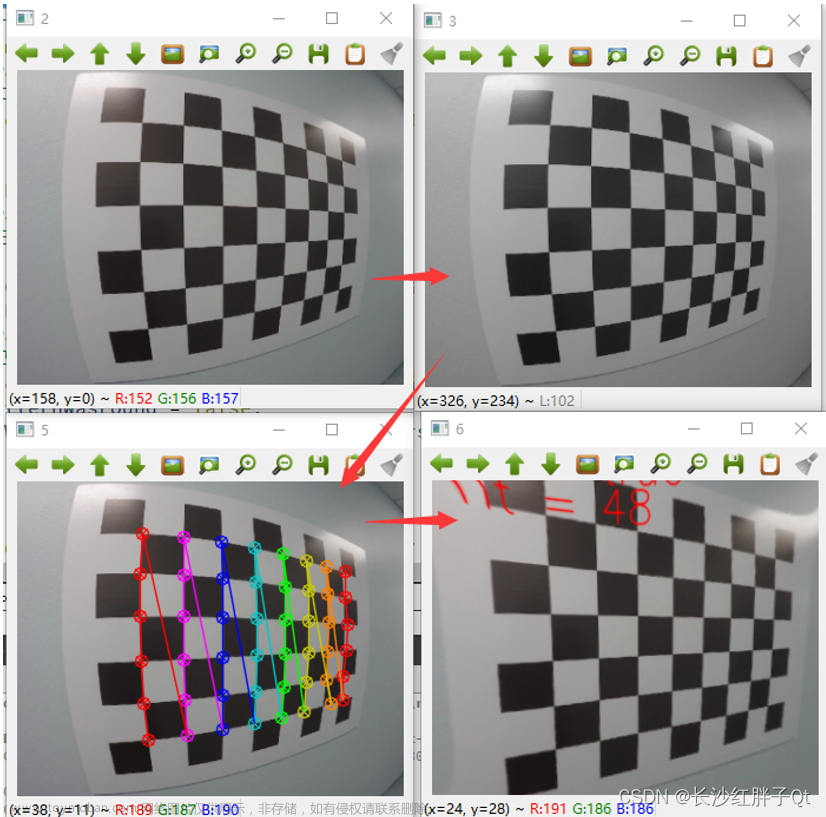

Approach to image undistortion

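For each pixel of the target (undistorted) image, convert it to the normalized plane, apply the distortion model, and project it back to pixel coordinates. This gives the position the pixel ends up at after distortion. The value of the source (distorted) image at that position is then used as the target pixel's value.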

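The image we usually have is the distorted one, (x_distort, y_distort). To recover the undistorted image (x, y), we do not invert the distortion model, which has no closed-form inverse. Instead, we compute where each undistorted pixel lands after distortion and look up the value there. In practice, start with an empty target image and push each of its pixels through the distortion model and the projection. This establishes the correspondence with the pixels of the distorted image.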

Camera model (pinhole with radial and tangential distortion)

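Transform a point from the world coordinate system into the camera coordinate system, where R and t are the rotation and translation from the world frame to the camera frame:

X_c = R X_w + t

Project onto the normalized plane (z = 1) by dividing by the depth: with X_c = (X, Y, Z), the normalized point is (x, y) = (X / Z, Y / Z).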
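The rigid transform and the division by depth can be sketched in plain C++ without OpenCV. The names `Vec3`, `Mat3` and `worldToNormalized` are hypothetical and chosen for this illustration only. `R` is assumed to be a row-major 3x3 rotation matrix.

```cpp
#include <array>
#include <cassert>

using Vec3 = std::array<double, 3>;
using Mat3 = std::array<std::array<double, 3>, 3>;  // row-major 3x3 matrix

// Hypothetical helper: rigid transform X_c = R * X_w + t, followed by the
// projection onto the normalized plane z = 1: (x, y) = (X / Z, Y / Z).
std::array<double, 2> worldToNormalized(const Mat3& R, const Vec3& t, const Vec3& Xw) {
    Vec3 Xc{};
    for (int i = 0; i < 3; ++i) {
        Xc[i] = R[i][0] * Xw[0] + R[i][1] * Xw[1] + R[i][2] * Xw[2] + t[i];
    }
    assert(Xc[2] > 0.0 && "point must lie in front of the camera");
    return {Xc[0] / Xc[2], Xc[1] / Xc[2]};
}
```

For example, with R = I and t = (0, 0, 1), the world point (2, 4, 1) becomes X_c = (2, 4, 2), which lands at (1, 2) on the normalized plane.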

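Apply the distortion model to the point on the normalized plane.

Distortion model

Radial distortion: barrel-shaped, as in fisheye lenses. It depends only on the distance r from the image center and is described by k1, k2 (and k3).

Tangential distortion: caused by the lens not being mounted exactly parallel to the image plane. It is described by p1 and p2.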
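Written out, the radial-tangential model that the code in this article uses (the same structure OpenCV uses for `[k1, k2, p1, p2, k3]`) maps an ideal normalized point (x, y), with r² = x² + y², to:

x_d = x(1 + k1 r² + k2 r⁴ + k3 r⁶) + 2 p1 x y + p2 (r² + 2x²)

y_d = y(1 + k1 r² + k2 r⁴ + k3 r⁶) + p1 (r² + 2y²) + 2 p2 x y

The pixel position is then u = fx x_d + cx, v = fy y_d + cy. Below is a minimal C++ sketch of the formula. The struct `Distortion` and the functions `distortNormalized` and `normalizedToPixel` are hypothetical names.

```cpp
#include <array>
#include <cassert>

// Coefficients in OpenCV's order [k1, k2, p1, p2, k3]; all default to 0.
struct Distortion {
    double k1 = 0, k2 = 0, p1 = 0, p2 = 0, k3 = 0;
};

// Hypothetical helper: apply radial + tangential distortion to a point on the
// normalized plane and return the distorted normalized point (x_d, y_d).
std::array<double, 2> distortNormalized(const Distortion& d, double x, double y) {
    const double r2 = x * x + y * y;
    const double radial = 1 + d.k1 * r2 + d.k2 * r2 * r2 + d.k3 * r2 * r2 * r2;
    const double xd = x * radial + 2 * d.p1 * x * y + d.p2 * (r2 + 2 * x * x);
    const double yd = y * radial + d.p1 * (r2 + 2 * y * y) + 2 * d.p2 * x * y;
    return {xd, yd};
}

// Hypothetical helper: pixel coordinates u = fx * x + cx, v = fy * y + cy.
std::array<double, 2> normalizedToPixel(double fx, double fy, double cx, double cy,
                                        double x, double y) {
    assert(fx > 0 && fy > 0);
    return {fx * x + cx, fy * y + cy};
}
```

With all coefficients at zero the model is the identity. With k1 < 0, points are pulled toward the center, which is barrel distortion.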

undistort

Note: OpenCV orders the distortion coefficients as [k1, k2, p1, p2, k3] by default.

```cpp
#include <opencv2/opencv.hpp>
#include <chrono>
#include <cmath>
#include <iostream>

using namespace std;

int main(int argc, char **argv)
{
    if (argc < 2)
    {
        cerr << "usage: " << argv[0] << " <distorted_image>" << endl;
        return 1;
    }

    // Intrinsics
    double fx = 458.654, fy = 457.296, cx = 367.215, cy = 248.375;
    /** Intrinsic matrix K
     * fx  0 cx
     *  0 fy cy
     *  0  0  1
     */

    // Distortion coefficients
    double k1 = -0.28340811, k2 = 0.07395907, p1 = 0.00019359, p2 = 1.76187114e-05;

    cv::Mat image = cv::imread(argv[1], cv::IMREAD_GRAYSCALE); // grayscale image, CV_8UC1
    if (image.empty())
    {
        cerr << "cannot read " << argv[1] << endl;
        return 1;
    }
    int rows = image.rows, cols = image.cols;
    cv::Mat image_undistort = cv::Mat(rows, cols, CV_8UC1);  // result of method 1
    cv::Mat image_undistort2 = cv::Mat(rows, cols, CV_8UC1); // result of method 2 (OpenCV)

    chrono::steady_clock::time_point t1 = chrono::steady_clock::now();

    //! Method 1: compute the undistorted image by hand
    for (int v = 0; v < rows; v++)
    {
        for (int u = 0; u < cols; u++)
        {
            // normalized-plane coordinates of this pixel in the undistorted (target) image
            double x = (u - cx) / fx, y = (v - cy) / fy;
            double r = sqrt(x * x + y * y);
            // distorted normalized coordinates
            double x_distorted = x * (1 + k1 * r * r + k2 * r * r * r * r) + 2 * p1 * x * y + p2 * (r * r + 2 * x * x);
            double y_distorted = y * (1 + k1 * r * r + k2 * r * r * r * r) + p1 * (r * r + 2 * y * y) + 2 * p2 * x * y;
            // distorted pixel coordinates, i.e. the position in the source image
            double u_distorted = fx * x_distorted + cx;
            double v_distorted = fy * y_distorted + cy;

            // copy the pixel value
            if (u_distorted >= 0 && v_distorted >= 0 && u_distorted < cols && v_distorted < rows) // lands inside the source image
            {
                // truncating (nearest-pixel) lookup; interpolation would give better quality
                image_undistort.at<uchar>(v, u) = image.at<uchar>((int)v_distorted, (int)u_distorted);
            }
            else
            {
                image_undistort.at<uchar>(v, u) = 0; // outside the source image: fill with black
            }
        }
    }

    chrono::steady_clock::time_point t2 = chrono::steady_clock::now();
    chrono::duration<double> time_used = chrono::duration_cast<chrono::duration<double>>(t2 - t1);
    cout << "time = " << time_used.count() << endl;

    //! Method 2: OpenCV's built-in undistort(), which is faster
    cv::Mat K = cv::Mat::eye(3, 3, CV_32FC1); // intrinsic matrix
    K.at<float>(0, 0) = fx;
    K.at<float>(1, 1) = fy;
    K.at<float>(0, 2) = cx;
    K.at<float>(1, 2) = cy;

    cv::Mat distort_coeffs = cv::Mat::zeros(1, 5, CV_32FC1); // distortion coefficients, order [k1, k2, p1, p2, k3]
    distort_coeffs.at<float>(0, 0) = k1;
    distort_coeffs.at<float>(0, 1) = k2;
    distort_coeffs.at<float>(0, 2) = p1;
    distort_coeffs.at<float>(0, 3) = p2; // k3 stays 0
    cout << "K = " << endl << K << endl;
    cout << "distort_coeffs = " << endl << distort_coeffs << endl;

    t1 = chrono::steady_clock::now();
    cv::undistort(image, image_undistort2, K, distort_coeffs); // undistort
    t2 = chrono::steady_clock::now();
    time_used = chrono::duration_cast<chrono::duration<double>>(t2 - t1);
    cout << "time = " << time_used.count() << endl;

    // show the results
    cv::imshow("distorted", image);
    cv::imshow("undistorted", image_undistort);
    cv::imshow("image_undistort2", image_undistort2);
    cv::waitKey(0);
    return 0;
}
```
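Method 1 truncates (u_distorted, v_distorted) to integers, and its own comment notes that interpolation would be better. Below is a minimal bilinear sampler, written against a plain row-major 8-bit buffer so that it does not depend on OpenCV. The name `sampleBilinear` is hypothetical. Its border convention (return 0, matching the black fill in method 1, and treating the last row and column as border for simplicity) is an assumption of this sketch.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>

// Hypothetical helper: bilinear interpolation of a row-major 8-bit grayscale
// buffer at the sub-pixel position (x, y). Positions whose 2x2 neighbourhood
// leaves the image return 0 (black border).
std::uint8_t sampleBilinear(const std::uint8_t* img, int width, int height,
                            double x, double y) {
    assert(img != nullptr && width > 0 && height > 0);
    const int x0 = static_cast<int>(std::floor(x));
    const int y0 = static_cast<int>(std::floor(y));
    if (x0 < 0 || y0 < 0 || x0 + 1 >= width || y0 + 1 >= height) return 0;
    const double ax = x - x0, ay = y - y0;  // fractional offsets in [0, 1)
    auto at = [&](int xx, int yy) { return static_cast<double>(img[yy * width + xx]); };
    // Weighted average of the four neighbouring pixels.
    const double value = (1 - ax) * (1 - ay) * at(x0, y0) + ax * (1 - ay) * at(x0 + 1, y0) +
                         (1 - ax) * ay * at(x0, y0 + 1) + ax * ay * at(x0 + 1, y0 + 1);
    return static_cast<std::uint8_t>(std::lround(value));
}
```

Inside the method-1 loop it could replace the `(int)` lookup, for example `image_undistort.at<uchar>(v, u) = sampleBilinear(image.data, cols, rows, u_distorted, v_distorted);`. This assumes `image` is continuous, which holds for an image freshly loaded by `cv::imread`.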

undistortPoints

Its core is the iterative loop below, excerpted from OpenCV's cvUndistortPointsInternal (the full function follows). The observed pixel is first converted to normalized coordinates. The loop then refines an estimate of the undistorted point until the iteration limit or the error threshold is reached.

```cpp
if (_distCoeffs)
{
    // compensate tilt distortion
    cv::Vec3d vecUntilt = invMatTilt * cv::Vec3d(x, y, 1);
    double invProj = vecUntilt(2) ? 1. / vecUntilt(2) : 1;
    x0 = x = invProj * vecUntilt(0);
    y0 = y = invProj * vecUntilt(1);

    double error = 1.7976931348623158e+308; // DBL_MAX
    // compensate distortion iteratively
    // Note 1: start the iterative removal of distortion
    for (int j = 0;; j++)
    {
        // Note 2: stop condition 1, maximum number of iterations
        if ((criteria.type & cv::TermCriteria::COUNT) && j >= criteria.maxCount)
            break;
        // Note 3: stop condition 2, reprojection error below the threshold
        if ((criteria.type & cv::TermCriteria::EPS) && error < criteria.epsilon)
            break;
        // Note 4: with only the 5 coefficients k1, k2, p1, p2, k3, only k[0]..k[4] are non-zero
        // (k[0] = k1, k[1] = k2, k[2] = p1, k[3] = p2, k[4] = k3); all other entries are 0
        double r2 = x*x + y*y;
        double icdist = (1 + ((k[7] * r2 + k[6])*r2 + k[5])*r2) / (1 + ((k[4] * r2 + k[1])*r2 + k[0])*r2);
        double deltaX = 2 * k[2] * x*y + k[3] * (r2 + 2 * x*x) + k[8] * r2 + k[9] * r2*r2;
        double deltaY = k[2] * (r2 + 2 * y*y) + 2 * k[3] * x*y + k[10] * r2 + k[11] * r2*r2;
        // Note 5: fixed-point update x = (x0 - deltaX) / radial, y = (y0 - deltaY) / radial,
        // where (x0, y0) is the observed distorted point and icdist = 1 / radial
        x = (x0 - deltaX)*icdist;
        y = (y0 - deltaY)*icdist;

        if (criteria.type & cv::TermCriteria::EPS)
        {
            double r4, r6, a1, a2, a3, cdist, icdist2;
            double xd, yd, xd0, yd0;
            cv::Vec3d vecTilt;

            // Note 6: re-apply the distortion model to the current undistorted estimate and
            // measure how far (in pixels) it lands from the observed distorted point (u, v)
            r2 = x*x + y*y;
            r4 = r2*r2;
            r6 = r4*r2;
            a1 = 2 * x*y;
            a2 = r2 + 2 * x*x;
            a3 = r2 + 2 * y*y;
            cdist = 1 + k[0] * r2 + k[1] * r4 + k[4] * r6;
            icdist2 = 1. / (1 + k[5] * r2 + k[6] * r4 + k[7] * r6);
            xd0 = x*cdist*icdist2 + k[2] * a1 + k[3] * a2 + k[8] * r2 + k[9] * r4;
            yd0 = y*cdist*icdist2 + k[2] * a3 + k[3] * a1 + k[10] * r2 + k[11] * r4;

            vecTilt = matTilt*cv::Vec3d(xd0, yd0, 1);
            invProj = vecTilt(2) ? 1. / vecTilt(2) : 1;
            xd = invProj * vecTilt(0);
            yd = invProj * vecTilt(1);

            double x_proj = xd*fx + cx;
            double y_proj = yd*fy + cy;

            error = sqrt(pow(x_proj - u, 2) + pow(y_proj - v, 2));
        }
    }
}
```
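Stripped of the tilt model and the extra 8/12/14-coefficient terms, the loop above is a fixed-point iteration. It starts from the distorted point (x0, y0) and repeatedly sets x = (x0 − Δx(x, y)) / radial(x, y), and likewise for y. The self-contained sketch below does this for the five coefficients [k1, k2, p1, p2, k3]. The function name `undistortNormalized` is hypothetical. The fixed iteration count is a parameter of this sketch rather than a TermCriteria.

```cpp
#include <array>
#include <cassert>

// Hypothetical helper: recover the ideal normalized point (x, y) from a
// distorted normalized point (xd, yd) by fixed-point iteration, using the
// 5-coefficient model [k1, k2, p1, p2, k3] and the same update as the loop above.
std::array<double, 2> undistortNormalized(double xd, double yd,
                                          double k1, double k2, double p1, double p2,
                                          double k3, int iterations) {
    assert(iterations >= 0);
    double x = xd, y = yd;  // initial guess: the distorted point itself
    for (int j = 0; j < iterations; ++j) {
        const double r2 = x * x + y * y;
        const double icdist = 1.0 / (1 + ((k3 * r2 + k2) * r2 + k1) * r2);  // 1 / radial
        const double deltaX = 2 * p1 * x * y + p2 * (r2 + 2 * x * x);
        const double deltaY = p1 * (r2 + 2 * y * y) + 2 * p2 * x * y;
        x = (xd - deltaX) * icdist;
        y = (yd - deltaY) * icdist;
    }
    return {x, y};
}
```

The iteration converges quickly when the distortion is moderate, because each step only corrects a small residual. Under strong distortion near the image corners, a few iterations may not be enough, which is why OpenCV also offers the EPS criterion.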

```cpp
void cvUndistortPointsInternal( const CvMat* _src, CvMat* _dst, const CvMat* _cameraMatrix,
                                const CvMat* _distCoeffs,
                                const CvMat* matR, const CvMat* matP, cv::TermCriteria criteria)
{
    // check that the termination criteria are valid
    CV_Assert(criteria.isValid());
    // intermediate variables: A holds the camera matrix (shares memory with matA),
    // RR holds the rectification transform (shares memory with _RR),
    // k holds up to 14 distortion coefficients
    double A[3][3], RR[3][3], k[14]={0,0,0,0,0,0,0,0,0,0,0,0,0,0};
    CvMat matA=cvMat(3, 3, CV_64F, A), _Dk;
    CvMat _RR=cvMat(3, 3, CV_64F, RR);
    cv::Matx33d invMatTilt = cv::Matx33d::eye();
    cv::Matx33d matTilt = cv::Matx33d::eye();

    // validate the input and output point arrays
    CV_Assert( CV_IS_MAT(_src) && CV_IS_MAT(_dst) &&
        (_src->rows == 1 || _src->cols == 1) &&
        (_dst->rows == 1 || _dst->cols == 1) &&
        _src->cols + _src->rows - 1 == _dst->rows + _dst->cols - 1 &&
        (CV_MAT_TYPE(_src->type) == CV_32FC2 || CV_MAT_TYPE(_src->type) == CV_64FC2) &&
        (CV_MAT_TYPE(_dst->type) == CV_32FC2 || CV_MAT_TYPE(_dst->type) == CV_64FC2));

    CV_Assert( CV_IS_MAT(_cameraMatrix) &&
        _cameraMatrix->rows == 3 && _cameraMatrix->cols == 3 );

    cvConvert( _cameraMatrix, &matA ); // copy the camera matrix into matA, i.e. into A

    // validate the distortion coefficients (4, 5, 8, 12 or 14 values)
    if( _distCoeffs )
    {
        CV_Assert( CV_IS_MAT(_distCoeffs) &&
            (_distCoeffs->rows == 1 || _distCoeffs->cols == 1) &&
            (_distCoeffs->rows*_distCoeffs->cols == 4 ||
             _distCoeffs->rows*_distCoeffs->cols == 5 ||
             _distCoeffs->rows*_distCoeffs->cols == 8 ||
             _distCoeffs->rows*_distCoeffs->cols == 12 ||
             _distCoeffs->rows*_distCoeffs->cols == 14));

        _Dk = cvMat( _distCoeffs->rows, _distCoeffs->cols,
            CV_MAKETYPE(CV_64F,CV_MAT_CN(_distCoeffs->type)), k); // _Dk wraps the array k
        cvConvert( _distCoeffs, &_Dk ); // so this fills k

        if (k[12] != 0 || k[13] != 0)
        {
            cv::detail::computeTiltProjectionMatrix<double>(k[12], k[13], NULL, NULL, NULL, &invMatTilt);
            cv::detail::computeTiltProjectionMatrix<double>(k[12], k[13], &matTilt, NULL, NULL);
        }
    }

    if( matR )
    {
        CV_Assert( CV_IS_MAT(matR) && matR->rows == 3 && matR->cols == 3 );
        cvConvert( matR, &_RR ); // copy matR into _RR, which is backed by RR
    }
    else
        cvSetIdentity(&_RR);

    if( matP )
    {
        double PP[3][3];
        CvMat _P3x3, _PP=cvMat(3, 3, CV_64F, PP);
        CV_Assert( CV_IS_MAT(matP) && matP->rows == 3 && (matP->cols == 3 || matP->cols == 4));
        cvConvert( cvGetCols(matP, &_P3x3, 0, 3), &_PP ); // copy the left 3x3 block of P into _PP (backed by PP)
        cvMatMul( &_PP, &_RR, &_RR ); // _RR = _PP * _RR: fold P and R into one matrix applied once per point
    }

    const CvPoint2D32f* srcf = (const CvPoint2D32f*)_src->data.ptr;
    const CvPoint2D64f* srcd = (const CvPoint2D64f*)_src->data.ptr;
    CvPoint2D32f* dstf = (CvPoint2D32f*)_dst->data.ptr;
    CvPoint2D64f* dstd = (CvPoint2D64f*)_dst->data.ptr;
    int stype = CV_MAT_TYPE(_src->type);
    int dtype = CV_MAT_TYPE(_dst->type);
    int sstep = _src->rows == 1 ? 1 : _src->step/CV_ELEM_SIZE(stype);
    int dstep = _dst->rows == 1 ? 1 : _dst->step/CV_ELEM_SIZE(dtype);

    double fx = A[0][0];
    double fy = A[1][1];
    double ifx = 1./fx;
    double ify = 1./fy;
    double cx = A[0][2];
    double cy = A[1][2];

    int n = _src->rows + _src->cols - 1;
    // loop over all points
    for( int i = 0; i < n; i++ )
    {
        double x, y, x0 = 0, y0 = 0, u, v;
        if( stype == CV_32FC2 )
        {
            x = srcf[i*sstep].x;
            y = srcf[i*sstep].y;
        }
        else
        {
            x = srcd[i*sstep].x;
            y = srcd[i*sstep].y;
        }
        u = x; v = y;
        x = (x - cx)*ifx; // to normalized image coordinates (still distorted)
        y = (y - cy)*ify;

        // remove the distortion
        if( _distCoeffs ) {
            // compensate tilt distortion: k[12], k[13] (tau_x, tau_y) model a tilted sensor,
            // as in Scheimpflug cameras; ordinary lenses do not use them, and then
            // invMatTilt is the identity
            cv::Vec3d vecUntilt = invMatTilt * cv::Vec3d(x, y, 1);
            double invProj = vecUntilt(2) ? 1./vecUntilt(2) : 1;
            x0 = x = invProj * vecUntilt(0);
            y0 = y = invProj * vecUntilt(1);

            double error = std::numeric_limits<double>::max(); // start from the largest double

            // compensate distortion iteratively
            // update rule (5-coefficient case; (x0, y0) is the distorted point):
            // x' = (x0 - 2*p1*x*y - p2*(r^2 + 2*x^2)) / (1 + k1*r^2 + k2*r^4 + k3*r^6)
            // y' = (y0 - 2*p2*x*y - p1*(r^2 + 2*y^2)) / (1 + k1*r^2 + k2*r^4 + k3*r^6)
            for( int j = 0; ; j++ )
            {
                // the default criteria (5 iterations, COUNT only) stop here after 5 passes
                if ((criteria.type & cv::TermCriteria::COUNT) && j >= criteria.maxCount)
                    break;
                // the default epsilon is 0.01, but the default criteria do not set EPS,
                // so this check only matters with user-supplied criteria
                if ((criteria.type & cv::TermCriteria::EPS) && error < criteria.epsilon)
                    break;
                double r2 = x*x + y*y;
                double icdist = (1 + ((k[7]*r2 + k[6])*r2 + k[5])*r2)/(1 + ((k[4]*r2 + k[1])*r2 + k[0])*r2);
                double deltaX = 2*k[2]*x*y + k[3]*(r2 + 2*x*x)+ k[8]*r2+k[9]*r2*r2;
                double deltaY = k[2]*(r2 + 2*y*y) + 2*k[3]*x*y+ k[10]*r2+k[11]*r2*r2;
                x = (x0 - deltaX)*icdist;
                y = (y0 - deltaY)*icdist;

                // re-distort the current estimate and compute the pixel error for the EPS check
                if(criteria.type & cv::TermCriteria::EPS)
                {
                    double r4, r6, a1, a2, a3, cdist, icdist2;
                    double xd, yd, xd0, yd0;
                    cv::Vec3d vecTilt;

                    r2 = x*x + y*y;
                    r4 = r2*r2;
                    r6 = r4*r2;
                    a1 = 2*x*y;
                    a2 = r2 + 2*x*x;
                    a3 = r2 + 2*y*y;
                    cdist = 1 + k[0]*r2 + k[1]*r4 + k[4]*r6;
                    icdist2 = 1./(1 + k[5]*r2 + k[6]*r4 + k[7]*r6);
                    xd0 = x*cdist*icdist2 + k[2]*a1 + k[3]*a2 + k[8]*r2+k[9]*r4;
                    yd0 = y*cdist*icdist2 + k[2]*a3 + k[3]*a1 + k[10]*r2+k[11]*r4;

                    vecTilt = matTilt*cv::Vec3d(xd0, yd0, 1);
                    invProj = vecTilt(2) ? 1./vecTilt(2) : 1;
                    xd = invProj * vecTilt(0);
                    yd = invProj * vecTilt(1);

                    double x_proj = xd*fx + cx;
                    double y_proj = yd*fy + cy;

                    error = sqrt( pow(x_proj - u, 2) + pow(y_proj - v, 2) );
                }
            }
        }

        // apply RR (= P*R, or just R if P is not given) with a homogeneous divide;
        // the result is in P's pixel coordinates if P was supplied, otherwise normalized
        double xx = RR[0][0]*x + RR[0][1]*y + RR[0][2];
        double yy = RR[1][0]*x + RR[1][1]*y + RR[1][2];
        double ww = 1./(RR[2][0]*x + RR[2][1]*y + RR[2][2]);
        x = xx*ww;
        y = yy*ww;

        if( dtype == CV_32FC2 )
        {
            dstf[i*dstep].x = (float)x;
            dstf[i*dstep].y = (float)y;
        }
        else
        {
            dstd[i*dstep].x = x;
            dstd[i*dstep].y = y;
        }
    }
}
```
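The last step of the function multiplies by RR (P·R, or just R when P is absent) and divides by the third homogeneous coordinate. The sketch below shows why the output is in pixels only when a projection matrix is supplied. With P = K, a normalized point goes back to pixel coordinates. With P omitted and R = I, the point stays on the normalized plane. `applyHomography` and `matMul` are hypothetical names, and the sketch divides by w directly instead of multiplying by 1/w.

```cpp
#include <array>
#include <cassert>

using Mat3 = std::array<std::array<double, 3>, 3>;  // row-major 3x3

// Hypothetical helper: same computation as the xx / yy / ww lines above,
// i.e. H * [x, y, 1]^T followed by division by the third component.
std::array<double, 2> applyHomography(const Mat3& H, double x, double y) {
    const double w = H[2][0] * x + H[2][1] * y + H[2][2];
    assert(w != 0.0);
    const double xx = H[0][0] * x + H[0][1] * y + H[0][2];
    const double yy = H[1][0] * x + H[1][1] * y + H[1][2];
    return {xx / w, yy / w};
}

// Hypothetical helper: 3x3 product A * B, the role of cvMatMul(&_PP, &_RR, &_RR).
Mat3 matMul(const Mat3& A, const Mat3& B) {
    Mat3 C{};  // zero-initialized
    for (int i = 0; i < 3; ++i)
        for (int j = 0; j < 3; ++j)
            for (int k = 0; k < 3; ++k) C[i][j] += A[i][k] * B[k][j];
    return C;
}
```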

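Distortion correction

Using the intrinsics and distortion coefficients obtained from calibrateCamera()

Correcting image distortion requires the camera intrinsics and the distortion coefficients. OpenCV offers two ways to do it:

- initUndistortRectifyMap() + remap()

- undistort()

The first approach is recommended. initUndistortRectifyMap() needs to run only once, and remap() then runs once per image. undistort() recomputes the mapping on every call, which is wasteful when many frames share the same intrinsics.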
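Here is a dependency-free sketch of what precomputing the maps amounts to, which shows why the map-based route is cheaper for video. It assumes R = I and the 5-coefficient model. For each pixel of the output image, the sketch goes back to the normalized plane through the new camera matrix, applies the distortion, and projects with the original K. The result is one float x map and one float y map, similar in spirit to `m1type = CV_32FC1`. It is computed once and reused for every frame. `Intrinsics`, `UndistortMaps` and `buildUndistortMaps` are hypothetical names. This is not OpenCV's implementation, which also handles R, the tilt model and fixed-point CV_16SC2 maps.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

struct Intrinsics { double fx, fy, cx, cy; };

struct UndistortMaps {
    int width = 0, height = 0;
    std::vector<float> mapX, mapY;  // source (distorted) pixel position for each output pixel
};

// Hypothetical helper: per-pixel lookup tables for undistortion with R = I.
// dist holds [k1, k2, p1, p2, k3] in OpenCV order.
UndistortMaps buildUndistortMaps(const Intrinsics& K, const double dist[5],
                                 const Intrinsics& newK, int width, int height) {
    assert(width > 0 && height > 0);
    const double k1 = dist[0], k2 = dist[1], p1 = dist[2], p2 = dist[3], k3 = dist[4];
    UndistortMaps m;
    m.width = width;
    m.height = height;
    m.mapX.resize(static_cast<std::size_t>(width) * height);
    m.mapY.resize(m.mapX.size());
    for (int v = 0; v < height; ++v) {
        for (int u = 0; u < width; ++u) {
            // output pixel -> ideal normalized point via the new camera matrix
            const double x = (u - newK.cx) / newK.fx, y = (v - newK.cy) / newK.fy;
            // apply the distortion model
            const double r2 = x * x + y * y;
            const double radial = 1 + k1 * r2 + k2 * r2 * r2 + k3 * r2 * r2 * r2;
            const double xd = x * radial + 2 * p1 * x * y + p2 * (r2 + 2 * x * x);
            const double yd = y * radial + p1 * (r2 + 2 * y * y) + 2 * p2 * x * y;
            // distorted normalized point -> pixel in the original (distorted) image
            const std::size_t i = static_cast<std::size_t>(v) * width + u;
            m.mapX[i] = static_cast<float>(K.fx * xd + K.cx);
            m.mapY[i] = static_cast<float>(K.fy * yd + K.cy);
        }
    }
    return m;
}
```

With zero distortion and newK = K, the maps are the identity. With k1 < 0, the corners of the output image sample points closer to the center of the distorted image.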

```cpp
void initUndistortRectifyMap(InputArray cameraMatrix, InputArray distCoeffs,
                             InputArray R, InputArray newCameraMatrix,
                             Size size, int m1type, OutputArray map1, OutputArray map2);
```

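Parameters:

cameraMatrix: input camera intrinsic matrix (3x3).

distCoeffs: input distortion coefficients with 4, 5, 8, 12 or 14 elements (typically the 5 values [k1, k2, p1, p2, k3]). An empty input means no distortion.

R: optional rectification transform (3x3) in object space, e.g. R1 or R2 computed by stereoRectify() for a stereo pair. If it is empty, the identity is assumed, which is the usual choice for a single camera.

newCameraMatrix: the new 3x3 camera matrix for the corrected image, e.g. from getOptimalNewCameraMatrix(), or simply cameraMatrix.

size: size of the undistorted output image.

m1type: type of the first output map: CV_32FC1, CV_32FC2 or CV_16SC2.

map1: first output map (x coordinates, or packed (x, y) pairs for CV_32FC2 / CV_16SC2).

map2: second output map (y coordinates when m1type is CV_32FC1; with CV_16SC2 it holds interpolation-table indices).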

```cpp
void remap(InputArray src, OutputArray dst, InputArray map1, InputArray map2,
           int interpolation, int borderMode = BORDER_CONSTANT,
           const Scalar& borderValue = Scalar());
```

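Parameters:

src: input image, i.e. the source (distorted) image.

dst: output image, i.e. the target image. It has the same size as map1 and the same type as src.

map1: either the first map of (x, y) points, of type CV_16SC2 or CV_32FC2, or the map of x values, of type CV_32FC1.

map2: the second map, holding y values of type CV_32FC1 or CV_16UC1 (paired with a CV_16SC2 map1). It is empty if map1 already contains (x, y) points.

interpolation: interpolation method (INTER_AREA is not supported). The four options are:

(1) INTER_NEAREST: nearest-neighbour interpolation

(2) INTER_LINEAR: bilinear interpolation

(3) INTER_CUBIC: bicubic interpolation

(4) INTER_LANCZOS4: Lanczos interpolation over an 8x8 neighbourhood

borderMode: border mode, default BORDER_CONSTANT.

borderValue: value used for outside pixels with BORDER_CONSTANT; the default Scalar() is 0 (black).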
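For float maps, the contract between the maps and remap is dst(x, y) = src(map_x(x, y), map_y(x, y)), with x the column. borderValue is used whenever the sampled position falls outside src under BORDER_CONSTANT. Below is a nearest-neighbour sketch of that contract on plain buffers. `remapNearest` is a hypothetical name. It rounds to the nearest pixel, and OpenCV's exact INTER_NEAREST rounding and tie-breaking may differ.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical helper: nearest-neighbour remap of a row-major 8-bit image.
// dst(x, y) = src(mapX(x, y), mapY(x, y)); samples outside src get borderValue,
// which mimics BORDER_CONSTANT. dst has the size of the maps, not of src.
std::vector<std::uint8_t> remapNearest(const std::vector<std::uint8_t>& src, int srcW, int srcH,
                                       const std::vector<float>& mapX,
                                       const std::vector<float>& mapY,
                                       int dstW, int dstH, std::uint8_t borderValue = 0) {
    assert(src.size() == static_cast<std::size_t>(srcW) * srcH);
    assert(mapX.size() == static_cast<std::size_t>(dstW) * dstH && mapY.size() == mapX.size());
    std::vector<std::uint8_t> dst(mapX.size(), borderValue);
    for (std::size_t i = 0; i < dst.size(); ++i) {
        const long sx = std::lround(mapX[i]);  // nearest source column
        const long sy = std::lround(mapY[i]);  // nearest source row
        if (sx >= 0 && sy >= 0 && sx < srcW && sy < srcH) {
            dst[i] = src[static_cast<std::size_t>(sy * srcW + sx)];
        }
    }
    return dst;
}
```

Paired with maps computed once for a fixed camera, only this per-pixel lookup has to run for each new frame.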

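projectPoints

projectPoints transforms points from the world coordinate system into the camera coordinate system, projects them onto the normalized image plane, applies the distortion model, and maps them to pixel coordinates.

undistortPoints performs the inverse of the last two steps, so it can be used to remove the distortion from such a projected point.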
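The forward chain that projectPoints evaluates for each point can be put together in one compact, dependency-free sketch: world → camera (R, t) → normalized plane → distortion → pixels (K). The sketch takes a rotation matrix for simplicity, whereas cv::projectPoints takes a Rodrigues rotation vector rvec. `projectPoint` and its parameter layout are hypothetical.

```cpp
#include <array>
#include <cassert>

using Vec3 = std::array<double, 3>;
using Mat3 = std::array<std::array<double, 3>, 3>;  // row-major rotation matrix

// Hypothetical helper: world -> camera -> normalized plane ->
// radial/tangential distortion [k1, k2, p1, p2, k3] -> pixel.
std::array<double, 2> projectPoint(const Vec3& Xw, const Mat3& R, const Vec3& t,
                                   double fx, double fy, double cx, double cy,
                                   const std::array<double, 5>& dist) {
    Vec3 Xc{};
    for (int i = 0; i < 3; ++i)
        Xc[i] = R[i][0] * Xw[0] + R[i][1] * Xw[1] + R[i][2] * Xw[2] + t[i];
    assert(Xc[2] > 0.0 && "point must be in front of the camera");
    const double x = Xc[0] / Xc[2], y = Xc[1] / Xc[2];
    const double k1 = dist[0], k2 = dist[1], p1 = dist[2], p2 = dist[3], k3 = dist[4];
    const double r2 = x * x + y * y;
    const double radial = 1 + k1 * r2 + k2 * r2 * r2 + k3 * r2 * r2 * r2;
    const double xd = x * radial + 2 * p1 * x * y + p2 * (r2 + 2 * x * x);
    const double yd = y * radial + p1 * (r2 + 2 * y * y) + 2 * p2 * x * y;
    return {fx * xd + cx, fy * yd + cy};
}
```

Running undistortPoints on the returned pixel with the same K and coefficients should recover approximately fx·x + cx, fy·y + cy when P = K is passed. Without P, it recovers the normalized point (x, y).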

Source: https://www.toymoban.com/news/detail-469507.html
