Installing and configuring Masakari (Stein release) on CentOS

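1. Basic environment

- OS: CentOS 7.6

- openstack-masakari release: Stein

- Python 2.7.5 / Python 3.6, both shipped with the system

- Offline deployment: the source was downloaded from the official OpenStack site, but this document still gives the git clone URLs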

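2. Installing Masakari on the controller node

References

# Official Masakari documentation

https://docs.openstack.org/masakari/latest/

# Official Masakari documentation (PDF)

https://docs.openstack.org//masakari/latest/doc-masakari.pdf

# Official release pages for each version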

https://releases.openstack.org/teams/masakari.html

https://www.modb.pro/db/124466

https://docs.openstack.org//ha-guide/

https://docs.huihoo.com/openstack/docs.openstack.org/ha-guide/HAGuide.pdf

2.1 Create the database

Log in to the database: mysql -uroot -ptest123

# Create the masakari database

MariaDB [(none)]> CREATE DATABASE masakari CHARACTER SET utf8;

# Grant the masakari user access to the masakari database

MariaDB [(none)]> GRANT ALL PRIVILEGES ON masakari.* TO 'masakari'@'localhost' IDENTIFIED BY 'test123';

MariaDB [(none)]> GRANT ALL PRIVILEGES ON masakari.* TO 'masakari'@'%' IDENTIFIED BY 'test123';

# Reload the privilege tables

MariaDB [(none)]> flush privileges;

# Configuration done; exit the database client

MariaDB [(none)]> exit
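The statements above can also be generated from one place so that the password is typed only once. This is a minimal sketch for a POSIX shell; the helper name masakari_db_sql is hypothetical and is not part of MariaDB or Masakari.

```shell
# Print the SQL from section 2.1 for a given password (hypothetical helper).
# The password is embedded literally, so it must not contain a single quote.
masakari_db_sql() {
    pw="$1"
    printf '%s\n' \
        "CREATE DATABASE masakari CHARACTER SET utf8;" \
        "GRANT ALL PRIVILEGES ON masakari.* TO 'masakari'@'localhost' IDENTIFIED BY '$pw';" \
        "GRANT ALL PRIVILEGES ON masakari.* TO 'masakari'@'%' IDENTIFIED BY '$pw';" \
        "FLUSH PRIVILEGES;"
}

# Print the statements for review.
masakari_db_sql test123

# After reviewing them, they could be piped to the client, for example:
#   masakari_db_sql test123 | mysql -uroot -p
```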

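2.2 Create the OpenStack user, service, and endpoints

Load the admin credentials so the openstack shell commands run with administrator rights: . admin-openrc or source /root/admin-openrc

# The masakari database and masakari service both use the default password test123 here; change it as needed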

[root@controller ~]# openstack user create --domain default --password test123 masakari

+---------------------+----------------------------------+

| Field | Value |

+---------------------+----------------------------------+

| domain_id | default |

| enabled | True |

| id | 0f010f3765dd4c3cb8565088523cb278 |

| name | masakari |

| options | {} |

| password_expires_at | None |

+---------------------+----------------------------------+

# Add the admin role to the masakari user

[root@controller ~]# openstack role add --project service --user masakari admin

# Create the masakari service entity

[root@controller ~]# openstack service create --name masakari --description "masakari high availability" instance-ha

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | masakari high availability |

| enabled | True |

| id | 5ee4f753c1114952b72b37b32c36e6b4 |

| name | masakari |

| type | instance-ha |

+-------------+----------------------------------+

# Create the endpoints

[root@controller ~]# openstack endpoint create --region RegionOne masakari public http://controller:15868/v1/%\(tenant_id\)s

+--------------+------------------------------------------+

| Field | Value |

+--------------+------------------------------------------+

| enabled | True |

| id | de47b437eee746598717b12b6f811b7c |

| interface | public |

| region | RegionOne |

| region_id | RegionOne |

| service_id | 5ee4f753c1114952b72b37b32c36e6b4 |

| service_name | masakari |

| service_type | instance-ha |

| url | http://controller:15868/v1/%(tenant_id)s |

+--------------+------------------------------------------+

[root@controller ~]# openstack endpoint create --region RegionOne masakari internal http://controller:15868/v1/%\(tenant_id\)s

+--------------+------------------------------------------+

| Field | Value |

+--------------+------------------------------------------+

| enabled | True |

| id | 3057e9d7a519456fa7624558e3339121 |

| interface | internal |

| region | RegionOne |

| region_id | RegionOne |

| service_id | 5ee4f753c1114952b72b37b32c36e6b4 |

| service_name | masakari |

| service_type | instance-ha |

| url | http://controller:15868/v1/%(tenant_id)s |

+--------------+------------------------------------------+

[root@controller ~]# openstack endpoint create --region RegionOne masakari admin http://controller:15868/v1/%\(tenant_id\)s

+--------------+------------------------------------------+

| Field | Value |

+--------------+------------------------------------------+

| enabled | True |

| id | b45cc49917e04040b9d09fd0ade5a90a |

| interface | admin |

| region | RegionOne |

| region_id | RegionOne |

| service_id | 5ee4f753c1114952b72b37b32c36e6b4 |

| service_name | masakari |

| service_type | instance-ha |

| url | http://controller:15868/v1/%(tenant_id)s |

+--------------+------------------------------------------+
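The three endpoint commands differ only in the interface name, so they can be produced by a loop. The sketch below only prints the commands (a dry run); the helper name masakari_endpoint_cmds is hypothetical, and the defaults are the region and URL used in this guide.

```shell
# Print one "openstack endpoint create" command per interface (dry run).
# $1 = region, $2 = endpoint URL; both default to the values used above.
masakari_endpoint_cmds() {
    region="${1:-RegionOne}"
    url="${2:-http://controller:15868/v1/%(tenant_id)s}"
    for iface in public internal admin; do
        # The URL is single-quoted so the shell leaves the parentheses alone.
        printf "openstack endpoint create --region %s masakari %s '%s'\n" \
            "$region" "$iface" "$url"
    done
}

masakari_endpoint_cmds

# To execute after reviewing the output:
#   masakari_endpoint_cmds | sh
```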

2.3 Install and start the Masakari services

2.3.1 Create the user and group

[root@controller ~]# groupadd --system masakari

[root@controller ~]# useradd --home-dir "/var/lib/masakari" --create-home --system --shell /bin/false -g masakari masakari

2.3.2 Create the directories

[root@controller ~]# mkdir -p /etc/masakari

[root@controller ~]# chown masakari:masakari /etc/masakari

[root@controller ~]# mkdir -p /var/log/masakari/

[root@controller ~]# chown masakari:masakari /var/log/masakari/

2.3.3 Download the Masakari source

# Clone Masakari with git; create /var/lib/masakari by hand if it does not exist

[root@controller ~]# cd /var/lib/masakari

[root@controller masakari]# git clone -b stable/stein https://opendev.org/openstack/masakari.git

# I downloaded the release tarball directly from the official OpenStack site instead (https://releases.openstack.org/teams/masakari.html), so the source directory below is masakari-7.1.0

[root@controller masakari]# chown -R masakari:masakari masakari-7.1.0

2.3.4 Download and edit the configuration file

[root@controller ~]# wget -O masakari.conf https://docs.openstack.org/masakari/latest/_downloads/a0fad818054d3abe3fd8a70e90d08166/masakari.conf.sample

[root@controller ~]# cp /root/masakari.conf /etc/masakari/masakari.conf

[root@controller ~]# cd /etc/masakari/

[root@controller masakari]# sed -i.default -e "/^#/d" -e "/^$/d" /etc/masakari/masakari.conf

Copy the api-paste.ini configuration file

[root@controller masakari]# cp /var/lib/masakari/masakari-7.1.0/etc/masakari/api-paste.ini /etc/masakari/

Edit the configuration file (vim /etc/masakari/masakari.conf) and add the following settings in the matching sections

[DEFAULT]

transport_url = rabbit://openstack:test@123@controller

graceful_shutdown_timeout = 5

os_privileged_user_tenant = service

os_privileged_user_password = test123

os_privileged_user_auth_url = http://controller:5000

os_privileged_user_name = masakari

#logging_exception_prefix = %(color)s%(asctime)s.%(msecs)03d TRACE %(name)s [01;35m%(instance)s[00m

#logging_debug_format_suffix = [00;33mfrom (pid=%(process)d) %(funcName)s %(pathname)s:%(lineno)d[00m

#logging_default_format_string = %(asctime)s.%(msecs)03d %(color)s%(levelname)s %(name)s [[00;36m-%(color)s] [01;35m%(instance)s%(color)s%(message)s[00m

#logging_context_format_string = %(asctime)s.%(msecs)03d %(color)s%(levelname)s %(name)s [[01;36m%(request_id)s [00;36m%(project_name)s %(user_name)s%(color)s] [01;35m%(instance)s%(color)s%(message)s[00m

use_syslog = false

#debug = True

masakari_api_workers = 2

enabled_apis = masakari_api

masakari_api_listen = 192.168.230.107

debug = false

auth_strategy=keystone

use_ssl = false

nova_catalog_admin_info = compute:nova:adminURL

[cors]

[database]

connection = mysql+pymysql://masakari:test123@controller/masakari?charset=utf8

[healthcheck]

[host_failure]

[instance_failure]

process_all_instances = true

[keystone_authtoken]

www_authenticate_uri = http://controller:5000

auth_url = http://controller:5000

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = masakari

password = test123

auth_version = 3

service_token_roles = service

service_token_roles_required = True

[osapi_v1]

[oslo_messaging_amqp]

ssl = false

[oslo_messaging_kafka]

[oslo_messaging_notifications]

[oslo_messaging_rabbit]

ssl = false

[oslo_middleware]

[oslo_policy]

[oslo_versionedobjects]

[process_failure]

[ssl]

[taskflow]

connection = mysql+pymysql://masakari:test123@controller/masakari?charset=utf8

[wsgi]

api_paste_config = /etc/masakari/api-paste.ini

To make sure the file ownership is correct, set the owner and group of /etc/masakari again: chown -R masakari:masakari /etc/masakari
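The transport_url and connection values in the configuration above are URLs, and the RabbitMQ password shown (test@123) contains an @, which is also the separator between credentials and host. Percent-encoding the password is a defensive option. The sketch below assumes ASCII input and assumes that the URL parsers in oslo.messaging and SQLAlchemy decode percent-escapes in the password part; check this against the versions you have installed. The helper name urlencode is hypothetical.

```shell
# Percent-encode a string for use in the user or password part of a URL.
# RFC 3986 unreserved characters pass through unchanged; ASCII input is assumed.
# The body runs in a subshell so its variables do not leak.
urlencode() (
    s="$1"
    out=""
    while [ -n "$s" ]; do
        rest="${s#?}"        # everything after the first character
        c="${s%"$rest"}"     # the first character
        s="$rest"
        case "$c" in
            [a-zA-Z0-9.~_-]) out="$out$c" ;;
            *) out="$out$(printf '%%%02X' "'$c")" ;;
        esac
    done
    printf '%s\n' "$out"
)

urlencode 'test@123'    # prints test%40123
```

With that result, the setting would read transport_url = rabbit://openstack:test%40123@controller.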

2.3.5 Install Masakari

[root@controller ~]# cd /var/lib/masakari/masakari-7.1.0/

# Install the Python dependencies

[root@controller masakari-7.1.0]# pip install -r requirements.txt -i https://pypi.tuna.tsinghua.edu.cn/simple

# pip cannot install directly in an offline environment; download and install any missing packages yourself

[root@controller masakari-7.1.0]# python setup.py install

2.3.6 Populate the database

[root@controller masakari-7.1.0]# masakari-manage db sync

2.3.7 Create the systemd unit files

vim /usr/lib/systemd/system/openstack-masakari-api.service

[Unit]

Description=Masakari Api

[Service]

Type=simple

User=masakari

Group=masakari

ExecStart=/usr/bin/masakari-api --config-file=/etc/masakari/masakari.conf --log-file=/var/log/masakari/masakari-api.log

[Install]

WantedBy=multi-user.target

vim /usr/lib/systemd/system/openstack-masakari-engine.service

[Unit]

Description=Masakari Engine

[Service]

Type=simple

User=masakari

Group=masakari

ExecStart=/usr/bin/masakari-engine --config-file=/etc/masakari/masakari.conf --log-file=/var/log/masakari/masakari-engine.log

[Install]

WantedBy=multi-user.target

2.3.8 Start the services

[root@controller ~]# systemctl daemon-reload

[root@controller ~]# systemctl enable openstack-masakari-api openstack-masakari-engine

[root@controller ~]# systemctl start openstack-masakari-api openstack-masakari-engine

[root@controller ~]# systemctl status openstack-masakari-api openstack-masakari-engine

2.3.9 Verify the services

After the Masakari services have started, check the upgrade status with the following command

[root@controller ~]# masakari-status upgrade check

+------------------------+

| Upgrade Check Results |

+------------------------+

| Check: Sample Check |

| Result: Success |

| Details: Sample detail |

+------------------------+
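masakari-status only reports upgrade readiness. To confirm that the API is answering right after systemctl start, a small retry loop is useful because the service may need a few seconds before it accepts connections. The retry helper below is a hypothetical sketch; the curl example assumes the API is reachable on port 15868, as in the endpoints created in section 2.2.

```shell
# retry <attempts> <delay-seconds> <command...>
# Runs the command until it succeeds or the attempts are used up.
# Returns 0 on the first success, 1 if every attempt failed.
retry() {
    attempts="$1"
    delay="$2"
    shift 2
    n=1
    while ! "$@"; do
        [ "$n" -ge "$attempts" ] && return 1
        n=$((n + 1))
        sleep "$delay"
    done
    return 0
}

# Example (not run here): poll the API root up to 10 times, 3 seconds apart.
#   retry 10 3 curl -sf -o /dev/null http://controller:15868/
```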

2.4 Install the Masakari command-line client, python-masakariclient

2.4.1 Download the source and install it

[root@controller ~]# mkdir -p /var/lib/python-masakariclient

[root@controller ~]# cd /var/lib/python-masakariclient

[root@controller python-masakariclient]# git clone https://github.com/openstack/python-masakariclient -b stable/stein

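# As with Masakari itself, the directory name python-masakariclient-5.4.0 comes from the downloaded release tarball

[root@controller python-masakariclient]# chown -R masakari:masakari python-masakariclient-5.4.0

[root@controller python-masakariclient]# cd python-masakariclient-5.4.0

[root@controller python-masakariclient-5.4.0]# python setup.py install

2.4.2 Verify

# After a successful install, openstack segment list shows the segment list; an empty result is normal because no segment has been created yet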

[root@controller python-masakariclient-5.4.0]# openstack segment list

2.5 Install the UI module, Masakari-dashboard

Official documentation: https://github.com/openstack/masakari-dashboard

2.5.1 Clone the code and install Masakari-dashboard

[root@controller ~]# mkdir -p /var/lib/masakari-dashboard

[root@controller ~]# cd /var/lib/masakari-dashboard

[root@controller masakari-dashboard]# git clone https://github.com/openstack/masakari-dashboard -b stable/stein

[root@controller masakari-dashboard]# cd masakari-dashboard-0.3.1/

[root@controller masakari-dashboard-0.3.1]# python setup.py install

2.5.2 Enable Masakari-dashboard in Horizon

[root@controller masakari-dashboard-0.3.1]# cp masakaridashboard/local/enabled/_50_masakaridashboard.py /usr/share/openstack-dashboard/openstack_dashboard/local/enabled/

[root@controller masakari-dashboard-0.3.1]# cp masakaridashboard/local/local_settings.d/_50_masakari.py /usr/share/openstack-dashboard/openstack_dashboard/local/local_settings.d/

[root@controller masakari-dashboard-0.3.1]# mkdir -p /usr/share/openstack-dashboard/openstack_dashboard/conf/

[root@controller masakari-dashboard-0.3.1]# cp masakaridashboard/conf/masakari_policy.json /usr/share/openstack-dashboard/openstack_dashboard/conf/

[root@controller masakari-dashboard-0.3.1]# cp masakaridashboard/conf/masakari_policy.json /etc/openstack-dashboard

[root@controller masakari-dashboard-0.3.1]# python /usr/share/openstack-dashboard/manage.py collectstatic

[root@controller masakari-dashboard-0.3.1]# python /usr/share/openstack-dashboard/manage.py compress

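2.5.3 Restart Horizon

[root@controller masakari-dashboard]# systemctl restart uwsgi nginx

2.5.4 Verify the UI configuration

The Instance-ha pages now appear in the dashboard, but an error is reported in the top-right corner. As a temporary workaround, patch the source so that masakari-dashboard does not check OPENSTACK_SSL_CACERT: edit the file with vim /usr/lib/python2.7/site-packages/masakaridashboard/api/api.py and, on line 44, set cacert = True directly

After the change, restart the dashboard (systemctl restart uwsgi nginx); the page no longer reports the error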

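3. Building the Pacemaker cluster

The cluster is built with Pacemaker and Corosync on three machines: 192.168.230.107 (controller), 192.168.230.106 (compute-intel), and 192.168.230.109 (compute-hygon)

References

# Aliyun mirror site, used to download the required rpm packages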

https://developer.aliyun.com/packageSearch

https://clusterlabs.org/pacemaker/

https://access.redhat.com/documentation/en-us/red_hat_enterprise_linux/7/html/high_availability_add-on_administration/ch-startup-haaa

http://corosync.github.io/corosync/

https://www.cnblogs.com/monkey6/p/14890292.html

https://www.devstack.co.kr/openstack-vmha-utilizing-masakari-project/

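3.1 Install the software

Controller node

[root@controller ~]# yum install -y pcs pacemaker corosync fence-agents resource-agents

# Installation is done offline here. Taking pacemaker as an example: run yum install -y pacemaker; because the server has no internet access, the command ends by printing the dependency list. Download the listed rpm packages from the Aliyun mirror site, then install them with

[root@controller pacemaker]# rpm -ivh *.rpm

Compute nodes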

[root@compute-hygon ~]# yum install -y pacemaker pacemaker-remote pcs fence-agents resource-agents

[root@compute-hygon pacemaker]# rpm -ivh *.rpm

[root@compute-hygon pacemaker-remote]# rpm -ivh *.rpm

[root@compute-hygon pcs]# rpm -ivh *.rpm

[root@compute-hygon fence-agents]# rpm -ivh *.rpm

[root@compute-intel ~]# yum install -y pacemaker pacemaker-remote pcs fence-agents resource-agents

[root@compute-intel pacemaker]# rpm -ivh *.rpm

[root@compute-intel pacemaker-remote]# rpm -ivh *.rpm

[root@compute-intel pcs]# rpm -ivh *.rpm

[root@compute-intel fence-agents]# rpm -ivh *.rpm
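With offline installs it is easy to miss a package on one node. The sketch below lists the packages that rpm does not report as installed; the helper name missing_pkgs is hypothetical, and the package names in the example are the ones passed to yum above.

```shell
# Print each named package that "rpm -q" does not report as installed.
# If rpm itself is unavailable, every package is reported as missing.
missing_pkgs() {
    for pkg in "$@"; do
        rpm -q "$pkg" >/dev/null 2>&1 || printf '%s\n' "$pkg"
    done
}

# Example for a compute node (not run here):
#   missing_pkgs pacemaker pacemaker-remote pcs fence-agents resource-agents
```

An empty result means every listed package is installed.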

3.2 Start the pcsd service

All nodes

[root@controller ~]# systemctl enable pcsd.service

[root@controller ~]# systemctl start pcsd.service

[root@controller ~]# systemctl status pcsd.service

[root@compute-hygon ~]# systemctl enable pcsd.service

[root@compute-hygon ~]# systemctl start pcsd.service

[root@compute-hygon ~]# systemctl status pcsd.service

[root@compute-intel ~]# systemctl enable pcsd.service

[root@compute-intel ~]# systemctl start pcsd.service

[root@compute-intel ~]# systemctl status pcsd.service
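The same commands are repeated on every node, so they can be generated per host. The sketch below only prints one ssh command per node (a dry run). It assumes passwordless root SSH from the controller to each node, which this guide does not set up; the helper name pcsd_cmds is hypothetical.

```shell
# Print an ssh command per node that enables and starts pcsd (dry run).
# Assumption: root can ssh to every node without a password prompt.
pcsd_cmds() {
    for node in "$@"; do
        printf "ssh root@%s 'systemctl enable pcsd.service && systemctl start pcsd.service'\n" "$node"
    done
}

pcsd_cmds controller compute-hygon compute-intel

# To execute after reviewing the output:
#   pcsd_cmds controller compute-hygon compute-intel | sh
```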

3.3 Set the cluster user password and authenticate

All nodes

# Use the same password, test123, on every node

[root@controller ~]# echo test123 | passwd --stdin hacluster

[root@compute-hygon ~]# echo test123 | passwd --stdin hacluster

[root@compute-intel ~]# echo test123 | passwd --stdin hacluster

Run the cluster authentication command on the controller node

# At the Password prompt, enter test123 as set above

[root@controller ~]# pcs cluster auth controller compute-hygon compute-intel

Username: hacluster

Password:

compute-intel: Authorized

controller: Authorized

compute-hygon: Authorized

# Or run it non-interactively

[root@controller ~]# pcs cluster auth controller compute-hygon compute-intel -u hacluster -p test123 --force

compute-intel: Authorized

controller: Authorized

compute-hygon: Authorized

3.4 Create the cluster and add nodes

Run on the controller node

[root@controller ~]# pcs cluster setup --start --name my-openstack-hacluster controller --force

Destroying cluster on nodes: controller...

controller: Stopping Cluster (pacemaker)...

controller: Successfully destroyed cluster

Sending 'pacemaker_remote authkey' to 'controller'

controller: successful distribution of the file 'pacemaker_remote authkey'

Sending cluster config files to the nodes...

controller: Succeeded

Starting cluster on nodes: controller...

controller: Starting Cluster (corosync)...

controller: Starting Cluster (pacemaker)...

Synchronizing pcsd certificates on nodes controller...

controller: Success

Restarting pcsd on the nodes in order to reload the certificates...

controller: Success

# Enable the cluster to start at boot

[root@controller ~]# pcs cluster enable --all

controller: Cluster Enabled

3.5 Check the cluster status

Controller node

[root@controller ~]# pcs cluster status

Cluster Status:

Stack: corosync

Current DC: controller (version 1.1.23-1.el7_9.1-9acf116022) - partition with quorum

Last updated: Wed Jan 26 16:06:48 2022

Last change: Wed Jan 26 15:53:45 2022 by hacluster via crmd on controller

1 node configured

0 resource instances configured

PCSD Status:

controller: Online

3.6 Check the Corosync status

Controller node

[root@controller ~]# corosync-cfgtool -s

Printing ring status.

Local node ID 1

RING ID 0

id = 192.168.230.107

status = ring 0 active with no faults


3.7 Verify the cluster

[root@controller ~]# crm_verify -L -V

error: unpack_resources: Resource start-up disabled since no STONITH resources have been defined

error: unpack_resources: Either configure some or disable STONITH with the stonith-enabled option

error: unpack_resources: NOTE: Clusters with shared data need STONITH to ensure data integrity

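# STONITH is not configured yet, so suppress this error for now

[root@controller ~]# pcs property set stonith-enabled=false

# Ignore loss of quorum: too few nodes are in use at the moment, and quorum needs at least three nodes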

[root@controller ~]# pcs property set no-quorum-policy=ignore

3.8 Add the pacemaker-remote nodes

[root@controller ~]# pcs cluster node add-remote compute-hygon

Sending remote node configuration files to 'compute-hygon'

compute-hygon: successful distribution of the file 'pacemaker_remote authkey'

Requesting start of service pacemaker_remote on 'compute-hygon'

compute-hygon: successful run of 'pacemaker_remote enable'

compute-hygon: successful run of 'pacemaker_remote start'

[root@controller ~]# pcs cluster node add-remote compute-intel

Sending remote node configuration files to 'compute-intel'

compute-intel: successful distribution of the file 'pacemaker_remote authkey'

Requesting start of service pacemaker_remote on 'compute-intel'

compute-intel: successful run of 'pacemaker_remote enable'

compute-intel: successful run of 'pacemaker_remote start'

Run pcs status again; the newly added nodes now appear in the cluster information

[root@controller ~]# pcs status

Cluster name: my-openstack-hacluster

Stack: corosync

Current DC: controller (version 1.1.23-1.el7_9.1-9acf116022) - partition with quorum

Last updated: Wed Jan 26 16:21:01 2022

Last change: Wed Jan 26 16:17:16 2022 by root via cibadmin on controller

3 nodes configured

2 resource instances configured

Online: [ controller ]

RemoteOnline: [ compute-hygon compute-intel ]

Full list of resources:

compute-hygon (ocf::pacemaker:remote): Started controller

compute-intel (ocf::pacemaker:remote): Started controller

Daemon Status:

corosync: active/enabled

pacemaker: active/enabled

pcsd: active/enabled

3.9 Set node attributes

# Set an attribute on each node so nodes can be told apart and handled in groups

[root@controller ~]# pcs property set --node controller node_role=controller

[root@controller ~]# pcs property set --node compute-hygon node_role=compute

[root@controller ~]# pcs property set --node compute-intel node_role=compute

3.10 Configure the cluster VIP (this step can be skipped when only testing instance HA)

# Create the VIP

[root@controller ~]# pcs resource create HA_VIP ocf:heartbeat:IPaddr2 ip=192.168.230.111 cidr_netmask=24 op monitor interval=30s

# Bind the VIP to nodes whose attribute is node_role=controller

[root@controller ~]# pcs constraint location HA_VIP rule resource-discovery=exclusive score=0 node_role eq controller --force

# Check the resource status

[root@controller ~]# pcs resource

compute-hygon (ocf::pacemaker:remote): Started controller

compute-intel (ocf::pacemaker:remote): Started controller

HA_VIP (ocf::heartbeat:IPaddr2): Started controller

HA_VIP is tied to the haproxy service next, so make sure haproxy is running on the controller node (systemctl status haproxy)

# Create the haproxy resource

[root@controller ~]# pcs resource create lb-haproxy systemd:haproxy --clone

[root@controller ~]# pcs resource

compute-hygon (ocf::pacemaker:remote): Started controller

compute-intel (ocf::pacemaker:remote): Started controller

HA_VIP (ocf::heartbeat:IPaddr2): Started controller

Clone Set: lb-haproxy-clone [lb-haproxy]

Started: [ compute-hygon compute-intel controller ]

# Bind haproxy to nodes whose attribute is node_role=controller

[root@controller ~]# pcs constraint location lb-haproxy-clone rule resource-discovery=exclusive score=0 node_role eq controller --force

# Colocate the two resources on the same node

[root@controller ~]# pcs constraint colocation add lb-haproxy-clone with HA_VIP

# Set the start order

[root@controller ~]# pcs constraint order start HA_VIP then lb-haproxy-clone kind=Optional

Adding HA_VIP lb-haproxy-clone (kind: Optional) (Options: first-action=start then-action=start)

Check the cluster status again with pcs status

[root@controller ~]# pcs status

Cluster name: my-openstack-hacluster

Stack: corosync

Current DC: controller (version 1.1.23-1.el7_9.1-9acf116022) - partition with quorum

Last updated: Wed Jan 26 17:44:52 2022

Last change: Wed Jan 26 17:44:42 2022 by root via cibadmin on controller

3 nodes configured

6 resource instances configured

Online: [ controller ]

RemoteOnline: [ compute-hygon compute-intel ]

Full list of resources:

compute-hygon (ocf::pacemaker:remote): Started controller

compute-intel (ocf::pacemaker:remote): Started controller

HA_VIP (ocf::heartbeat:IPaddr2): Started controller

Clone Set: lb-haproxy-clone [lb-haproxy]

Started: [ controller ]

Stopped: [ compute-hygon compute-intel ]

Daemon Status:

corosync: active/enabled

pacemaker: active/enabled

pcsd: active/enabled

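3.11 Configure IPMI-based stonith

All three servers are separate physical hosts, so IPMI can be used directly for remote management

3.11.1 View the IPMI LAN information with ipmitool lan print

Run ipmitool lan print to view the IPMI LAN settings. The key item is IP Address, the address of the fencing device, which is needed to configure pcs stonith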

[root@compute-hygon ~]# ipmitool lan print

Set in Progress : Set Complete

Auth Type Support : MD5

Auth Type Enable : Callback : MD5

: User : MD5

: Operator : MD5

: Admin : MD5

: OEM : MD5

IP Address Source : Static Address

IP Address : 192.168.230.209

Subnet Mask : 255.255.255.0

MAC Address : d4:5d:64:be:e5:66

SNMP Community String : BMC

IP Header : TTL=0x40 Flags=0x40 Precedence=0x00 TOS=0x10

BMC ARP Control : ARP Responses Enabled, Gratuitous ARP Disabled

Gratituous ARP Intrvl : 0.0 seconds

Default Gateway IP : 192.168.230.1

Default Gateway MAC : f2:52:30:d3:7e:aa

Backup Gateway IP : 0.0.0.0

Backup Gateway MAC : 00:00:00:00:00:00

802.1q VLAN ID : Disabled

802.1q VLAN Priority : 0

RMCP+ Cipher Suites : 0,1,2,3,6,7,8,11,12,15,16,17

Cipher Suite Priv Max : caaaaaaaaaaaXXX

: X=Cipher Suite Unused

: c=CALLBACK

: u=USER

: o=OPERATOR

: a=ADMIN

: O=OEM

Bad Password Threshold : 0

Invalid password disable: no

Attempt Count Reset Int.: 0

User Lockout Interval : 0

[root@compute-intel ~]# ipmitool lan print |grep 'IP Address'

IP Address Source : Static Address

IP Address : 192.168.230.206

3.11.2 Query the IPMI users

The user with ID=2 is the one used to configure pcs stonith

[root@compute-hygon ~]# ipmitool user list 1

ID Name Callin Link Auth IPMI Msg Channel Priv Limit

1 false false true ADMINISTRATOR

2 admin false false true ADMINISTRATOR

3 true false false NO ACCESS

[root@compute-intel ~]# ipmitool user list 1

ID Name Callin Link Auth IPMI Msg Channel Priv Limit

1 true false false Unknown (0x00)

2 ADMIN false false true ADMINISTRATOR

3 true false false Unknown (0x00)

# Users can also be managed with the following commands; the number 2 is the user ID

ipmitool user set name 2 vmhacluster

ipmitool user set password 2 test123

ipmitool user enable 2

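3.11.3 Test the remote IPMI connection to the compute nodes from the controller node

Always test the connection first; otherwise the stonith check will report a failure

# compute-hygon compute node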

[root@controller ~]# ipmitool -I lanplus -H 192.168.230.209 -U admin -P xxxxxxxx power status

Chassis Power is on

# compute-intel compute node

[root@controller ~]# ipmitool -I lanplus -H 192.168.230.206 -U ADMIN -P xxxxxxxx power status

Chassis Power is on

3.11.4 Configure stonith

# stonith was disabled earlier, so enable it first

[root@controller ~]# pcs property set stonith-enabled=true

# compute-hygon compute node

[root@controller ~]# pcs stonith create ipmi-fence-compute-hygon fence_ipmilan lanplus=1 pcmk_host_list='compute-hygon' delay=5 pcmk_host_check='static-list' pcmk_off_action=off pcmk_reboot_action=off ipaddr='192.168.230.209' login='admin' passwd='admin' power_wait=4 op monitor interval=60s

# compute-intel compute node

[root@controller ~]# pcs stonith create ipmi-fence-compute-intel fence_ipmilan lanplus=1 pcmk_host_list='compute-intel' delay=5 pcmk_host_check='static-list' pcmk_off_action=off pcmk_reboot_action=off ipaddr='192.168.230.206' login='ADMIN' passwd='xxxxxxxx' power_wait=4 op monitor interval=60s
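The two pcs stonith create commands differ only in node name, BMC address, and credentials, so a small generator keeps the shared options in one place. The sketch below only prints the command (a dry run); the helper name stonith_cmd is hypothetical, and the options are copied from the commands above rather than taken from any recommended profile.

```shell
# stonith_cmd <node> <bmc-ip> <login> <password>
# Prints the "pcs stonith create" command used above for one compute node.
stonith_cmd() {
    printf "pcs stonith create ipmi-fence-%s fence_ipmilan lanplus=1 pcmk_host_list='%s' delay=5 pcmk_host_check='static-list' pcmk_off_action=off pcmk_reboot_action=off ipaddr='%s' login='%s' passwd='%s' power_wait=4 op monitor interval=60s\n" \
        "$1" "$1" "$2" "$3" "$4"
}

stonith_cmd compute-intel 192.168.230.206 ADMIN xxxxxxxx
```

The printed line can be compared with the command above before it is run.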

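After stonith is configured, the cluster checks the configuration automatically. Use pcs status to see the result (the check is delayed, so look at the status again after about one minute to make sure nothing is wrong)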

[root@controller ~]# pcs status

Cluster name: my-openstack-hacluster

Stack: corosync

Current DC: controller (version 1.1.23-1.el7_9.1-9acf116022) - partition with quorum

Last updated: Thu Jan 27 11:43:30 2022

Last change: Thu Jan 27 11:41:22 2022 by root via cibadmin on controller

3 nodes configured

8 resource instances configured

Online: [ controller ]

RemoteOnline: [ compute-hygon compute-intel ]

Full list of resources:

compute-hygon (ocf::pacemaker:remote): Started controller

compute-intel (ocf::pacemaker:remote): Started controller

HA_VIP (ocf::heartbeat:IPaddr2): Started controller

Clone Set: lb-haproxy-clone [lb-haproxy]

Started: [ controller ]

Stopped: [ compute-hygon compute-intel ]

ipmi-fence-compute-hygon (stonith:fence_ipmilan): Started controller

ipmi-fence-compute-intel (stonith:fence_ipmilan): Started controller

Daemon Status:

corosync: active/enabled

pacemaker: active/enabled

pcsd: active/enabled

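The configuration succeeded: there are no error messages, and both fence resources are in the Started state

The Pacemaker cluster also provides a web management page, served over HTTPS on port 2224 by default; for this cluster it is https://192.168.230.107:2224/

4. Installing masakari-monitors on the compute nodes

References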

https://docs.openstack.org/masakari-monitors/latest/

https://docs.openstack.org//masakari-monitors/latest/doc-masakari-monitors.pdf

# Download masakarimonitors.conf.sample

https://docs.openstack.org/masakari-monitors/latest/reference/conf-file.html

4.1 Create the user and group

[root@compute-hygon ~]# groupadd --system masakarimonitors

[root@compute-hygon ~]# useradd --home-dir "/var/lib/masakarimonitors" --create-home --system --shell /bin/false -g masakarimonitors masakarimonitors

4.2 Create the directories

[root@compute-hygon ~]# mkdir -p /etc/masakarimonitors

[root@compute-hygon ~]# chown masakarimonitors:masakarimonitors /etc/masakarimonitors

[root@compute-hygon ~]# mkdir -p /var/log/masakarimonitors/

[root@compute-hygon ~]# chown masakarimonitors:masakarimonitors /var/log/masakarimonitors/

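4.3 Download the masakari-monitors source and install it

Testing showed that the masakari-monitors version matching Stein is 7.0.x, and that version has a bug: it cannot read the online status of pacemaker-remote nodes, which causes errors later on. The Ussuri version of masakari-monitors (9.0.x; 9.0.3 is used here) was tested instead. It does detect the online status of pacemaker-remote nodes, but it raises an error when a node has been powered off, so the source needs a small change in /var/lib/masakarimonitors/masakari-monitors-9.0.3/masakarimonitors/hostmonitor/host_handler/parse_cib_xml.py

# Clone masakari-monitors with git; create /var/lib/masakarimonitors by hand if it does not exist

[root@compute-hygon ~]# cd /var/lib/masakarimonitors

[root@compute-hygon masakarimonitors]# git clone -b stable/ussuri https://github.com/openstack/masakari-monitors.git

# I downloaded the release tarball directly from the official OpenStack site instead: https://releases.openstack.org/teams/masakari.html

[root@compute-hygon masakarimonitors]# chown -R masakarimonitors:masakarimonitors masakari-monitors-9.0.3

# Install

[root@compute-hygon masakarimonitors]# cd masakari-monitors-9.0.3

[root@compute-hygon masakari-monitors-9.0.3]# python setup.py install

# Copy the configuration files to /etc/masakarimonitors/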

[root@compute-hygon masakari-monitors-9.0.3]# cp /root/masakarimonitors.conf.sample /etc/masakarimonitors/masakarimonitors.conf

[root@compute-hygon masakari-monitors-9.0.3]# cp etc/masakarimonitors/process_list.yaml.sample /etc/masakarimonitors/process_list.yaml

[root@compute-hygon masakari-monitors-9.0.3]# cp etc/masakarimonitors/hostmonitor.conf.sample /etc/masakarimonitors/hostmonitor.conf

[root@compute-hygon masakari-monitors-9.0.3]# cp etc/masakarimonitors/processmonitor.conf.sample /etc/masakarimonitors/processmonitor.conf

[root@compute-hygon masakari-monitors-9.0.3]# cp etc/masakarimonitors/proc.list.sample /etc/masakarimonitor

4.4 Edit the configuration files

4.4.1 Edit masakarimonitors.conf

sed -i.default -e "/^#/d" -e "/^$/d" /etc/masakarimonitors/masakarimonitors.conf

[DEFAULT]

hostname = compute-hygon

log_dir = /var/log/masakarimonitors

[api]

www_authenticate_uri = http://controller:5000

auth_url = http://controller:5000

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = masakari

password = test123

[callback]

[cors]

[healthcheck]

[host]

monitoring_driver = default

monitoring_interval = 120

disable_ipmi_check = false

restrict_to_remotes = True

corosync_multicast_interfaces = ens4

corosync_multicast_ports = 5405

[introspectiveinstancemonitor]

[libvirt]

[oslo_middleware]

[process]

process_list_path = /etc/masakarimonitors/process_list.yaml
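The hostname value in [DEFAULT] is the only setting above that must differ between compute nodes, so when the file is copied from one node to another it needs to be rewritten. A minimal sketch, assuming the file already contains a hostname = line; the helper name set_monitor_hostname is hypothetical.

```shell
# set_monitor_hostname <conf-file> <hostname>
# Rewrites the "hostname = ..." line through a temporary file, then copies
# the result back so the original file keeps its owner and permissions.
set_monitor_hostname() {
    conf="$1"
    host="$2"
    tmp="$(mktemp)" || return 1
    sed "s/^hostname *=.*/hostname = $host/" "$conf" > "$tmp" && cat "$tmp" > "$conf"
    rc=$?
    rm -f "$tmp"
    return "$rc"
}

# Example (not run here), using the short host name of the current node:
#   set_monitor_hostname /etc/masakarimonitors/masakarimonitors.conf "$(hostname -s)"
```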

4.4.2 Edit hostmonitor.conf

sed -i.default -e "/^#/d" -e "/^$/d" /etc/masakarimonitors/hostmonitor.conf

MONITOR_INTERVAL=120

NOTICE_TIMEOUT=30

NOTICE_RETRY_COUNT=3

NOTICE_RETRY_INTERVAL=3

STONITH_WAIT=30

STONITH_TYPE=ipmi

MAX_CHILD_PROCESS=3

TCPDUMP_TIMEOUT=10

IPMI_TIMEOUT=5

IPMI_RETRY_MAX=3

IPMI_RETRY_INTERVAL=30

HA_CONF="/etc/corosync/corosync.conf"

LOG_LEVEL="debug"

DOMAIN="Default"

ADMIN_USER="masakari"

ADMIN_PASS="test123"

PROJECT="service"

REGION="RegionOne"

AUTH_URL="http://controller:5000/"

IGNORE_RESOURCE_GROUP_NAME_PATTERN="stonith"

4.4.3 Edit process_list.yaml

vim process_list.yaml

# Define the monitoring processes as follows:

# process_name: [Name of the process as it in 'ps -ef'.]

# start_command: [Start command of the process.]

# pre_start_command: [Command which is executed before start_command.]

# post_start_command: [Command which is executed after start_command.]

# restart_command: [Restart command of the process.]

# pre_restart_command: [Command which is executed before restart_command.]

# post_restart_command: [Command which is executed after restart_command.]

# run_as_root: [Bool value whether to execute commands as root authority.]

#

# These definitions need to be set according to the environment to use.

# Sample of definitions is shown below.

-

    # libvirt-bin

    process_name: /usr/sbin/libvirtd

    start_command: systemctl start libvirtd

    pre_start_command:

    post_start_command:

    restart_command: systemctl restart libvirtd

    pre_restart_command:

    post_restart_command:

    run_as_root: True

-

    # nova-compute

    process_name: /usr/bin/nova-compute

    start_command: systemctl start openstack-nova-compute

    pre_start_command:

    post_start_command:

    restart_command: systemctl restart openstack-nova-compute

    pre_restart_command:

    post_restart_command:

    run_as_root: True

-

    # instancemonitor

    process_name: /usr/bin/masakari-instancemonitor

    start_command: systemctl start openstack-masakari-instancemonitor

    pre_start_command:

    post_start_command:

    restart_command: systemctl restart openstack-masakari-instancemonitor

    pre_restart_command:

    post_restart_command:

    run_as_root: True

-

    # hostmonitor

    process_name: /usr/bin/masakari-hostmonitor

    start_command: systemctl start openstack-masakari-hostmonitor

    pre_start_command:

    post_start_command:

    restart_command: systemctl restart openstack-masakari-hostmonitor

    pre_restart_command:

    post_restart_command:

    run_as_root: True

-

    # sshd

    process_name: /usr/sbin/sshd

    start_command: systemctl start sshd

    pre_start_command:

    post_start_command:

    restart_command: systemctl restart sshd

    pre_restart_command:

    post_restart_command:

    run_as_root: True

4.4.4 Edit processmonitor.conf

PROCESS_CHECK_INTERVAL=5

PROCESS_REBOOT_RETRY=3

REBOOT_INTERVAL=5

MASAKARI_API_SEND_TIMEOUT=10

MASAKARI_API_SEND_RETRY=12

MASAKARI_API_SEND_DELAY=10

LOG_LEVEL="debug"

DOMAIN="Default"

PROJECT="service"

ADMIN_USER="masakari"

ADMIN_PASS="test123"

AUTH_URL="http://controller:5000/"

REGION="RegionOne"

4.4.5 Edit proc.list

vim proc.list

01,/usr/sbin/libvirtd,sudo service libvirtd start,sudo service libvirtd start,,,,

02,/usr/bin/python /usr/bin/masakari-instancemonitor,sudo service masakari-instancemonitor start,sudo service masakari-instancemonitor start,,,,

4.5 Create the systemd unit files

vim /usr/lib/systemd/system/openstack-masakari-hostmonitor.service

[Unit]

Description=Masakari Hostmonitor

[Service]

Type=simple

User=masakarimonitors

Group=masakarimonitors

ExecStart=/usr/bin/masakari-hostmonitor --log-file=/var/log/masakarimonitors/masakari-hostmonitor.log

[Install]

WantedBy=multi-user.target

vim /usr/lib/systemd/system/openstack-masakari-instancemonitor.service

[Unit]

Description=Masakari Instancemonitor

[Service]

Type=simple

User=masakarimonitors

Group=masakarimonitors

ExecStart=/usr/bin/masakari-instancemonitor --log-file=/var/log/masakarimonitors/masakari-instancemonitor.log

[Install]

WantedBy=multi-user.target

vim /usr/lib/systemd/system/openstack-masakari-processmonitor.service

[Unit]

Description=Masakari Processmonitor

[Service]

Type=simple

User=masakarimonitors

Group=masakarimonitors

ExecStart=/usr/bin/masakari-processmonitor --log-file=/var/log/masakarimonitors/masakari-processmonitor.log

[Install]

WantedBy=multi-user.target
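The three unit files above are identical apart from the monitor name, so they can be generated from one template. This is a sketch with a hypothetical helper, monitor_unit; the only difference from the hand-written files is that the Description uses the lowercase monitor name.

```shell
# monitor_unit <hostmonitor|instancemonitor|processmonitor>
# Prints a unit file with the same settings as the three shown above.
monitor_unit() {
    name="$1"
    cat <<EOF
[Unit]
Description=Masakari $name

[Service]
Type=simple
User=masakarimonitors
Group=masakarimonitors
ExecStart=/usr/bin/masakari-$name --log-file=/var/log/masakarimonitors/masakari-$name.log

[Install]
WantedBy=multi-user.target
EOF
}

monitor_unit hostmonitor

# Example (not run here): write all three unit files.
#   for m in hostmonitor instancemonitor processmonitor; do
#       monitor_unit "$m" > /usr/lib/systemd/system/openstack-masakari-$m.service
#   done
```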

4.6 Grant sudo privileges to the masakarimonitors user

[root@compute-hygon ~]# echo "masakarimonitors ALL=(ALL) NOPASSWD: ALL" >> /etc/sudoers

[root@compute-hygon ~]# chown -R masakarimonitors:masakarimonitors /etc/masakarimonitors/

[root@compute-hygon ~]# chown -R masakarimonitors:masakarimonitors /var/log/masakarimonitors/

4.7 Start the services

[root@compute-hygon masakari-monitors-7.0.1]# systemctl daemon-reload

[root@compute-hygon masakari-monitors-7.0.1]# systemctl enable openstack-masakari-hostmonitor openstack-masakari-instancemonitor openstack-masakari-processmonitor

[root@compute-hygon masakari-monitors-7.0.1]# systemctl start openstack-masakari-hostmonitor openstack-masakari-instancemonitor openstack-masakari-processmonitor

[root@compute-hygon masakari-monitors-7.0.1]# systemctl status openstack-masakari-hostmonitor openstack-masakari-instancemonitor openstack-masakari-processmonitor
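The systemctl status output is long when three units are queried at once. A minimal sketch of a more compact check follows; check_monitors is an illustrative name, and the unit names are the ones created in section 4.5.

```shell
# Sketch: report whether each Masakari monitor unit is active.
# check_monitors is an illustrative name; the unit names are the ones
# created in section 4.5.
check_monitors() (
    rc=0
    for svc in openstack-masakari-hostmonitor \
               openstack-masakari-instancemonitor \
               openstack-masakari-processmonitor; do
        if systemctl is-active --quiet "$svc"; then
            echo "$svc: active"
        else
            echo "$svc: NOT active"
            rc=1
        fi
    done
    exit "$rc"
)

# Usage: check_monitors || journalctl -u openstack-masakari-hostmonitor -n 50
```

The function prints one line per unit and returns non-zero if any unit is not active.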

5. Instance high-availability tests

5.1 Create a segment

[root@controller ~]# openstack segment create --description "segment for vm ha test" segment_for_vmha_test auto compute

+-----------------+--------------------------------------+

| Field | Value |

+-----------------+--------------------------------------+

| created_at | 2022-01-27T12:56:45.000000 |

| updated_at | None |

| uuid | 86c055bd-2701-4221-9dea-24b1d38454fd |

| name | segment_for_vmha_test |

| description | segment for vm ha test |

| id | 2 |

| service_type | compute |

| recovery_method | auto |

+-----------------+--------------------------------------+

5.2 Add the compute nodes to the segment

# The segment UUID is 86c055bd-2701-4221-9dea-24b1d38454fd

[root@controller ~]# openstack segment host create compute-hygon COMPUTE SSH 86c055bd-2701-4221-9dea-24b1d38454fd

+---------------------+--------------------------------------+

| Field | Value |

+---------------------+--------------------------------------+

| created_at | 2022-01-28T03:25:06.000000 |

| updated_at | None |

| uuid | 00bd3ca3-ec8d-4dd4-ad01-4aa9e543bde1 |

| name | compute-hygon |

| type | COMPUTE |

| control_attributes | SSH |

| reserved | False |

| on_maintenance | False |

| failover_segment_id | 86c055bd-2701-4221-9dea-24b1d38454fd |

+---------------------+--------------------------------------+

[root@controller ha]# openstack segment host create compute-intel COMPUTE SSH 86c055bd-2701-4221-9dea-24b1d38454fd

+---------------------+--------------------------------------+

| Field | Value |

+---------------------+--------------------------------------+

| created_at | 2022-01-28T03:30:12.000000 |

| updated_at | None |

| uuid | fb1fbd8e-cf91-44c5-a03c-144bf02b4fde |

| name | compute-intel |

| type | COMPUTE |

| control_attributes | SSH |

| reserved | False |

| on_maintenance | False |

| failover_segment_id | 86c055bd-2701-4221-9dea-24b1d38454fd |

+---------------------+--------------------------------------+

5.3 Test instance recovery

List the virtual machines on the compute node:

[root@compute-intel ~]# virsh list

Id Name State

-----------------------------------

1 instance-0000005a running

3 instance-0000005b running

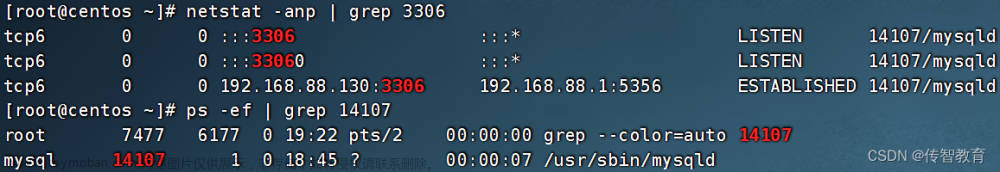

Check the qemu processes:

[Figure: qemu process list (image unavailable)]
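The PID of the qemu process behind an instance can also be looked up from the command line. The sketch below assumes the libvirt domain name appears on the qemu command line, which libvirt normally arranges through the -name option; qemu_pids_for is an illustrative name.

```shell
# Sketch: print the PID(s) of the qemu process backing a libvirt domain.
# qemu_pids_for is an illustrative name. It assumes the domain name appears
# on the qemu command line, which libvirt normally arranges via -name.
qemu_pids_for() {
    pgrep -f "qemu.*$1"
}

# Usage: qemu_pids_for instance-0000005a
```

If more than one PID is printed, inspect each with ps -fp before killing anything.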

Kill the process that backs instance-0000005a:

[root@compute-intel ~]# kill -9 225967

List the virtual machines again. The instance whose process was killed is no longer listed as running:

[root@compute-intel ~]# virsh list

Id Name State

-----------------------------------

3 instance-0000005b running

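Check /var/log/masakarimonitors/masakari-instancemonitor.log on the compute node. It shows that masakari-instancemonitor detected the abnormal instance process and sent a notification to masakari-api.

The masakari-api service on the controller node receives and parses the notification (log: /var/log/masakari/masakari-api.log).

The masakari-engine service on the controller node then carries out the instance HA recovery (log: /var/log/masakari/masakari-engine.log).

Once recovery completes, list the virtual machines again. The killed instance, instance-0000005a, has been started again: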

[root@compute-intel ~]# virsh list

Id Name State

-----------------------------------

3 instance-0000005b running

4 instance-0000005a running

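The recovery record can also be viewed in the dashboard.

Click the UUID to see the detailed recovery workflow. As the dashboard view shows, when an instance exits abnormally, Masakari recovers it in three steps:

- Stop the instance

- Start the instance

- Confirm that the instance is active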

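5.4 Test nova-compute service recovery

Run systemctl status openstack-nova-compute to check the current state of the nova-compute service.

Run systemctl stop openstack-nova-compute to stop the nova-compute service.

Check /var/log/masakarimonitors/masakari-processmonitor.log. It shows that masakari-processmonitor restarted the nova-compute service automatically.

Check the nova-compute service again; its state is active.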

5.5 Test host failure and power-off

On the controller node, list the instances, pick one, and check which compute node it runs on:

[root@controller masakari]# openstack server list

+--------------------------------------+------------+-------------+------------------------------+--------------+-------------+

| ID | Name | Status | Networks | Image | Flavor |

+--------------------------------------+------------+-------------+------------------------------+--------------+-------------+

| 2c59ffb1-89f4-44a0-8632-3e436202b34c | hyj_0209_5 | ACTIVE | inn=2.3.4.167 | cirros | zwy_ciross |

| 02e5cd92-8e0f-4800-84f2-33a741592448 | earrr | ACTIVE | inn=2.3.4.86 | cirros | zwy_ciross |

| f48e4e82-2b85-4908-bf41-beb01e02073a | hyj_phy_01 | HARD_REBOOT | ironic-deploy=192.168.230.12 | Bare@centos7 | Bare@node_1 |

| 6881f768-125d-4a18-92fa-97137577fdcd | zwy_111w_3 | SHUTOFF | inn=2.3.4.27 | cirros | zwy_ciross |

| 052333b7-56fc-467b-ad11-a6b22304430c | ewewew | SHUTOFF | lb-mgmt-net=19.178.0.150 | cirros | zwy_ciross |

+--------------------------------------+------------+-------------+------------------------------+--------------+-------------+

[root@controller masakari]# openstack server show 02e5cd92-8e0f-4800-84f2-33a741592448 -c OS-EXT-SRV-ATTR:host -f value

compute-intel

The following steps simulate a failure of the compute node compute-intel.

From the controller node, check the power state of compute-intel:

[root@controller masakari]# fence_ipmilan -P -A password -a 192.168.230.206 -p xxxxxxxx -l ADMIN -o status

Status: ON

On compute-intel, list the virtual machines that are currently running:

[root@compute-intel ~]# virsh list

Id Name State

-----------------------------------

3 instance-0000005b running

5 instance-0000005a running

Bring down the network interface on compute-intel with ifconfig enp59s0f1 down. The cluster detects that compute-intel is unhealthy and uses the fence device to power the node off:

[root@controller ~]# fence_ipmilan -P -A password -a 192.168.230.206 -p xxxxxxxx -l ADMIN -o status

Status: OFF

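After the node is powered off, the other compute node, compute-hygon, detects that it is offline and sends a host-failure notification.

The masakari-api service on the controller node parses the notification and hands it to masakari-engine for processing. The engine evacuates all instances on compute-intel to compute-hygon through nova evacuate. When evacuation completes, the instance's host has changed: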

[root@controller ~]# openstack server show 02e5cd92-8e0f-4800-84f2-33a741592448 -c OS-EXT-SRV-ATTR:host -f value

compute-hygon
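Evacuation takes some time, so the host may not change immediately. The following sketch polls the same openstack server show command until the reported host differs from the failed node. The function name wait_for_evacuation and the default timeout and interval values are illustrative assumptions.

```shell
# Sketch: poll until an instance reports a host other than the failed one.
# wait_for_evacuation and its default timeout/interval (in seconds) are
# illustrative assumptions, not part of Masakari.
wait_for_evacuation() (
    server_id="$1"
    old_host="$2"
    timeout="${3:-300}"
    interval="${4:-10}"
    elapsed=0
    while :; do
        host="$(openstack server show "$server_id" \
                -c OS-EXT-SRV-ATTR:host -f value 2>/dev/null)"
        # Succeed as soon as a non-empty host other than the old one is seen.
        if [ -n "$host" ] && [ "$host" != "$old_host" ]; then
            echo "$host"
            exit 0
        fi
        # Give up once the timeout has been reached.
        [ "$elapsed" -ge "$timeout" ] && exit 1
        sleep "$interval"
        elapsed=$((elapsed + interval))
    done
)

# Usage:
# wait_for_evacuation 02e5cd92-8e0f-4800-84f2-33a741592448 compute-intel
```

On success the function prints the new host name; on timeout it returns a non-zero status without output.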

The cloud management console also shows that all instances have been evacuated to compute-hygon.

The dashboard also lists this HA recovery record.

View the recovery details.
