1. What Is Flink

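Apache Flink is a framework and distributed processing engine for stateful computations over unbounded and bounded data streams. Flink runs in all common cluster environments and performs computations at in-memory speed and at any scale.

Next, we look at the important aspects of Flink's architecture.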

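Processing unbounded and bounded data

Any kind of data can form a stream of events. Credit card transactions, sensor measurements, machine logs, and user interactions on a website or mobile application all form streams.

Data can be processed as an unbounded or a bounded stream.

- Unbounded streams have a defined start but no defined end. They produce data endlessly. Unbounded streams must be processed continuously, that is, events must be handled promptly after they are ingested. It is not possible to wait for all input to arrive, because the input is unbounded and will never be complete at any point in time. Processing unbounded data often requires that events be ingested in a specific order, such as the order in which they occurred, so that the completeness of results can be reasoned about.

- Bounded streams have a defined start and a defined end. They can be processed by ingesting all data before performing any computation. Ordered ingestion is not required, because a bounded data set can always be sorted. Processing of bounded streams is also known as batch processing.

Apache Flink excels at processing both unbounded and bounded data sets. Precise control of time and state enables Flink's runtime to run any kind of application on unbounded streams. Bounded streams are processed internally by algorithms and data structures designed specifically for fixed-size data sets, which yields excellent performance.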
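The difference between the two processing styles can be illustrated without Flink at all. The following is a minimal plain-Python sketch, not Flink code: the `(timestamp, value)` record shape and both function names are assumptions made for illustration. The bounded version ingests everything and sorts before computing, while the unbounded version keeps running state and emits a result per event.

```python
from typing import Iterable, Iterator, List, Tuple

# Hypothetical record shape: (event timestamp, value).
Event = Tuple[int, float]


def process_bounded(events: List[Event]) -> List[float]:
    """Batch style: all data is available, so it can be sorted after ingestion."""
    ordered = sorted(events, key=lambda event: event[0])
    return [value for _, value in ordered]


def process_unbounded(events: Iterable[Event]) -> Iterator[float]:
    """Streaming style: keep running state and emit a result for every event."""
    running_total = 0.0  # state kept between events
    for _, value in events:
        running_total += value
        yield running_total  # available immediately, without waiting for an end


if __name__ == "__main__":
    sample = [(3, 1.0), (1, 2.0), (2, 4.0)]
    print(process_bounded(sample))
    print(list(process_unbounded(iter(sample))))
```

Because the generator never needs the full input, it works just as well on an iterator that never terminates, which is the situation an unbounded stream presents.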

Appendix: official documentation:

Apache Flink: What is Apache Flink?

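2. Basic Concepts

- Checkpoint mechanism

Checkpointing is the core of Flink's fault tolerance. Based on its configuration, Flink periodically takes a snapshot of the state of every operator/task in the stream and persists that state. If the Flink program crashes unexpectedly, it can be restarted from one of these snapshots, which corrects the data anomalies caused by the failure. To guarantee exactly-once or at-least-once behavior, the data source must also support replaying data. Flink offers different fault-tolerance semantics for different fault-tolerance and message-processing requirements.

exactly-once: Flink provides a fault-tolerance mechanism that restores a streaming application to a consistent state, ensuring that when a failure occurs each record affects the state exactly once.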
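The interplay of snapshots and source replay can be sketched in a few lines of plain Python. This is a toy model under stated assumptions, not Flink's implementation: the `Snapshot` class, the `run` function, and the `fail_at` parameter are hypothetical names, the "source" is a list that can be re-read from any offset, and the operator state is a single running sum.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Snapshot:
    offset: int  # source position to resume reading from
    total: int   # operator state as of that position


def run(records: List[int], checkpoint_every: int, fail_at: Optional[int] = None) -> int:
    """Sum the records, snapshotting periodically and recovering from one simulated crash."""
    snapshot = Snapshot(offset=0, total=0)
    offset, total = 0, 0
    failed = False
    while offset < len(records):
        if fail_at is not None and offset == fail_at and not failed:
            failed = True
            # Simulated crash: in-memory state is discarded, the last snapshot
            # is restored, and the replayable source is rewound to its offset.
            offset, total = snapshot.offset, snapshot.total
            continue
        total += records[offset]
        offset += 1
        if offset % checkpoint_every == 0:
            # State and source position are captured together.
            snapshot = Snapshot(offset=offset, total=total)
    return total


if __name__ == "__main__":
    data = [1, 2, 3, 4, 5, 6, 7]
    print(run(data, checkpoint_every=3))
    print(run(data, checkpoint_every=3, fail_at=5))
```

Both calls produce the same sum because the state and the read position are restored together. If only the source were rewound while the in-memory total was kept, the replayed records would be counted twice, which corresponds to at-least-once behavior.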

-

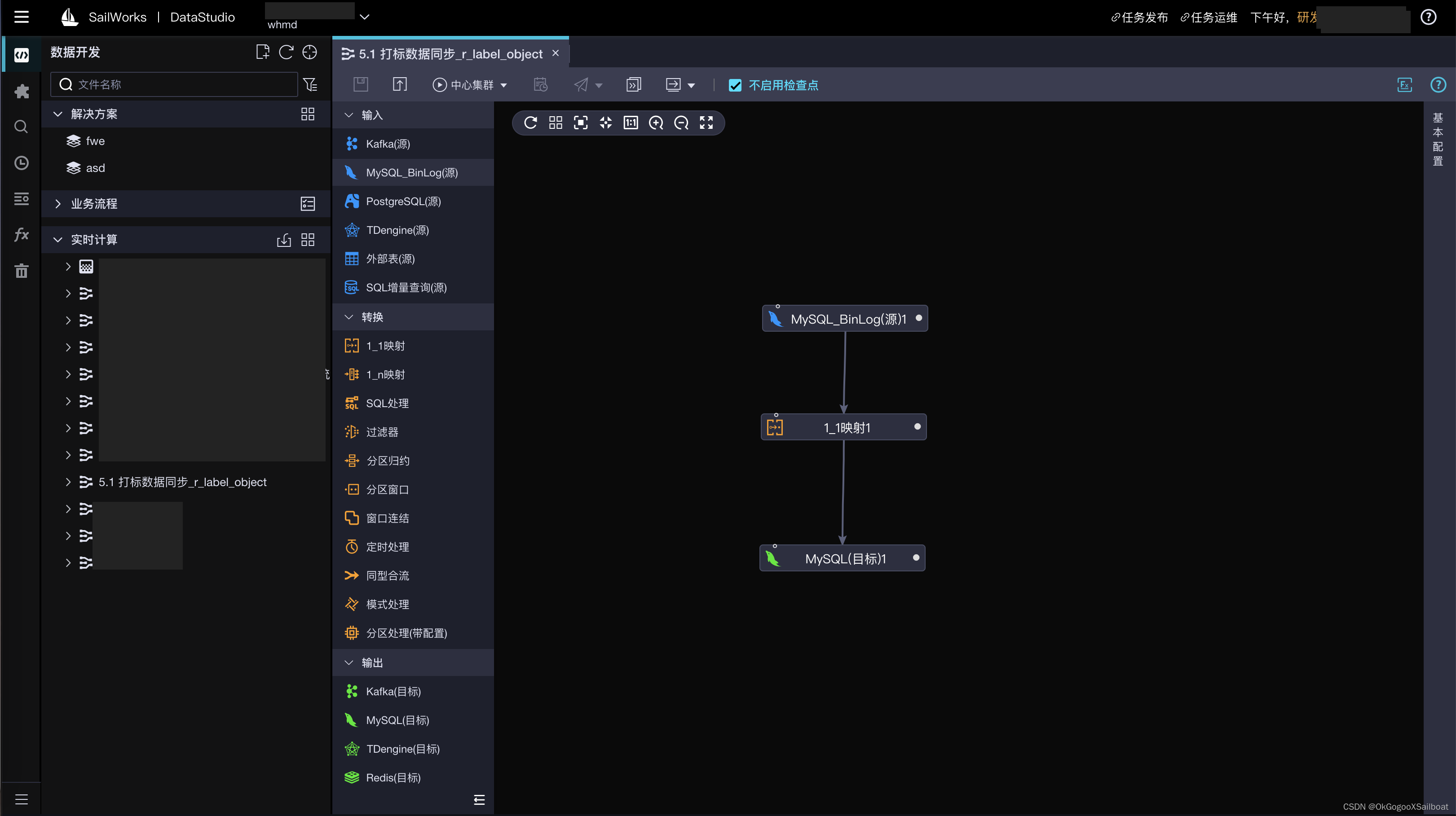

flink cdc

Flink CDC connector 可以捕获在一个或多个表中发生的所有变更。该模式通常有一个前记录和一个后记录。Flink CDC connector 可以直接在Flink中以非约束模式(流)使用,而不需要使用类似 kafka 之类的中间件中转数据

-

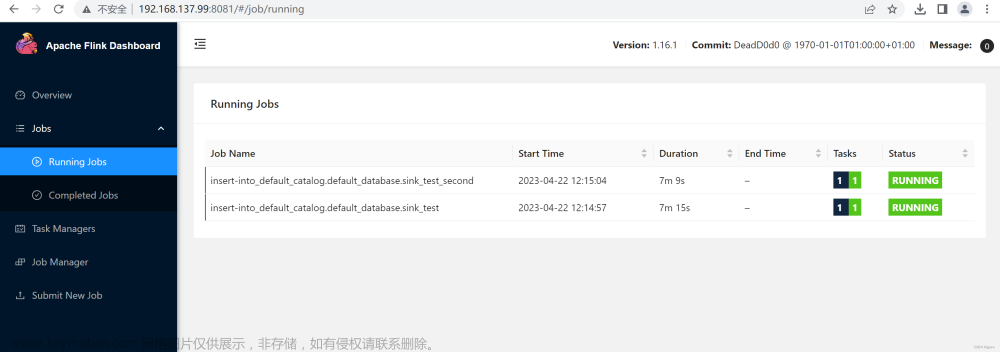

Flink提交流程

Reference:

[Flink] Introduction to Flink CDC and an Overview of Its Principles (CSDN blog)

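A brief description of how Flink CDC works:

According to a recent CDC survey, Debezium and Canal are currently the most popular CDC tools. Their core principle is to extract database logs in order to capture changes.

After a series of evaluations, Debezium is currently the more widely used option: it supports both full and incremental synchronization and works with MySQL, PostgreSQL, Oracle, and other databases.

Flink SQL CDC embeds the Debezium engine and uses its log-extraction capability to capture changes, converting the changelog into the RowData records that Flink SQL understands. (In the original figure, the right side shows the Debezium data format and the left side shows Flink's RowData format.)

RowData represents one row of data. Each RowData carries a piece of metadata, RowKind, which is one of insert (+I), update-before (-U), update-after (+U), or delete (-D). This is very similar to the binlog concept in databases.

Data captured by Debezium contains the old row (before), the new row (after), and metadata (source). The op field identifies the operation: the values c, u, d, and r stand for create, update, delete, and read, so an op of u marks an update. ts_ms is the synchronization timestamp.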
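The correspondence between Debezium's op codes and RowKind can be written down as a small function. The sketch below is plain Python, not the Flink or Debezium API; `to_changelog` is a hypothetical helper, and `SAMPLE` is abridged to three fields from the SQL Server sample shown in section 3. It assumes the conventional mapping in which create and read events become inserts, an update becomes an update-before/update-after pair, and a delete becomes a delete of the before image.

```python
import json
from typing import Any, Dict, List, Optional, Tuple

Row = Optional[Dict[str, Any]]

# Abridged from the SQL Server sample in section 3; only three fields are kept.
SAMPLE = (
    '{"op":"u",'
    '"before":{"id":"1zVqkZ9AidRUsrikNu6868","crt_time":1634923665000,"status":1},'
    '"after":{"id":"1zVqkZ9AidRUsrikNu6868","crt_time":1634923666000,"status":1},'
    '"ts_ms":1656569542562}'
)


def to_changelog(envelope: Dict[str, Any]) -> List[Tuple[str, Row]]:
    """Translate one Debezium-style envelope into (RowKind, row) pairs."""
    op = envelope["op"]
    before, after = envelope.get("before"), envelope.get("after")
    if op in ("c", "r"):  # create, or read during the initial snapshot
        return [("+I", after)]
    if op == "u":  # an update yields the old and the new image
        return [("-U", before), ("+U", after)]
    if op == "d":  # a delete only has a before image
        return [("-D", before)]
    raise ValueError(f"unknown op: {op!r}")


if __name__ == "__main__":
    for kind, row in to_changelog(json.loads(SAMPLE)):
        print(kind, row)
```

Emitting the before image as a separate -U row is what lets a downstream consumer retract the old value before applying the new one.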

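3. Real-Time Sync Code Walkthrough

Source code:

Taking the myrs_fc_data_sync branch as an example:

- Configure the basic Flink CDC properties

- Configure the data sources to monitor

- Configure the Flink CDC environment

- Configure the data output

DBConf.java configures the SQL Server source tables to monitor.

The JSON for each data format is shown below.

SQL Server CDC format: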

{"op":"u","before":{"area_partition_code":"1zVqkZ9AidRUsrikNu6868","latitude":"33.580258","upd_host":"172.20.15.192:8080","upd_name":"周波","upd_user":"3lw3kDQFGUXQoxwvkKT4NB","crt_job_no":"200786171","crt_time":1634923665000,"upd_time":1634923669187,"id":"1zVqkZ9AidRUsrikNu6868","crt_user":"3lw3kDQFGUXQoxwvkKT4NB","crt_name":"周波","longitude":"114.016521","area_partition_name":"漯河服务站","data_type":"my_base_sale_area_partition","crt_host":"172.20.15.192:8080","status":1,"exchange_status":1},"after":{"area_partition_code":"1zVqkZ9AidRUsrikNu6868","latitude":"33.580258","upd_host":"172.20.15.192:8080","upd_name":"周波","upd_user":"3lw3kDQFGUXQoxwvkKT4NB","crt_job_no":"200786171","crt_time":1634923666000,"upd_time":1634923669187,"id":"1zVqkZ9AidRUsrikNu6868","crt_user":"3lw3kDQFGUXQoxwvkKT4NB","crt_name":"周波","longitude":"114.016521","area_partition_name":"漯河服务站","data_type":"my_base_sale_area_partition","crt_host":"172.20.15.192:8080","status":1,"exchange_status":1},"source":{"schema":"dbo","event_serial_no":2,"connector":"sqlserver","name":"order_center_10.106.215.90","commit_lsn":"00027124:011d4308:0006","change_lsn":"00027124:011d4308:0005","version":"1.7.0.Final","ts_ms":1656569539697,"snapshot":"false","db":"MY_SlaughterProduct","table":"my_base_sale_area_partition"},"ts_ms":1656569542562}

MySQL binlog format:

{"data":[{"id":"5VVel5vwVcuJGb40BwBTZX","order_no":"XS-202206210916005325095828","cust_code":"MYRS00604735","cust_name":"浙江杭泰食品股份有限公司","company_code":"103","company_name":"内乡屠宰厂","cust_area_code":"2FqYTboHpr4xCvNut5XiBh","cust_area":"杭州服务站","arrival_addr":"内乡屠宰厂","arrival_date":null,"delivery_mode":"客户自提","delivery_mode_code":"1105001","order_weight":null,"order_amount":null,"service_manager":"余志军","service_manager_job_no":"210124517","note":null,"status":"8","crt_time":"2022-06-20 09:16:05.000","crt_user":"my-job-executor","crt_name":"余志军","crt_job_no":null,"upd_time":"2022-06-20 11:13:01.958","upd_name":"","upd_job_no":null,"delivery_addr":null,"service_tel":null,"generate":null,"generate_people":null,"generate_date":null,"father_order_no":null,"split_order_status":null,"prepare_status":null,"prepare_date":"2022-06-20 10:49:01.000","distribution_status":null,"distribution_people":null,"distribution_date":null,"data_type":null,"exchange_status":null,"pig_form_id":"103","financial_status":null,"financial_job_no":null,"financial_name":null,"financial_time":null,"order_type":"0","freight_deduction_amount":null,"arrival_time":null,"order_change_type":null,"order_date":"2022-06-21","province":"河南省","city":"南阳市","county":null,"two_category":"农贸","two_category_code":"1107002","delivery_branch":null,"branch_code":null,"branch_name":null,"area_status":null,"crt_dunning":null,"approval_status":null,"latest_delivery_date":null,"order_attributes":"1","retail_status":null,"retail_flag":"0"}],"database":"my_slaughter_finance","es":1655979696000,"id":87051,"isDdl":false,"mysqlType":{"id":"varchar(32)","order_no":"varchar(64)","cust_code":"varchar(64)","cust_name":"varchar(255)","company_code":"varchar(64)","company_name":"varchar(255)","cust_area_code":"varchar(64)","cust_area":"varchar(128)","arrival_addr":"varchar(1024)","arrival_date":"datetime(3)","delivery_mode":"varchar(64)","delivery_mode_code":"varchar(64)","order_weight":"decimal(18,2)","order_amount":"decimal(18,2)","service_manager":"varchar(64)","service_manager_job_no":"varchar(64)","note":"varchar(1024)","status":"int(11)","crt_time":"datetime(3)","crt_user":"varchar(64)","crt_name":"varchar(64)","crt_job_no":"varchar(64)","upd_time":"datetime(3)","upd_name":"varchar(255)","upd_job_no":"varchar(255)","delivery_addr":"varchar(255)","service_tel":"varchar(255)","generate":"int(11)","generate_people":"varchar(255)","generate_date":"datetime(3)","father_order_no":"varchar(2":null,"delivery_mode":null,"delivery_mode_code":"1105001","order_weight":"310.0","order_amount":"6416.0","service_manager":"熊巍东","service_manager_job_no":"200706045","note":null,"status":"7","crt_time":"2020-11-03 00:00:02.000","crt_user":null,"crt_name":"付宇","crt_job_no":"170704993","upd_time":null,"upd_name":null,"upd_job_no":null,"delivery_addr":null,"service_tel":null,"generate":null,"generate_people":null,"generate_date":null,"father_order_no":null,"split_order_status":null,"prepare_status":"0","prepare_date":"2020-11-04 00:00:00.000","distribution_status":null,"distribution_people":null,"distribution_date":null,"data_type":"my_sale_order","exchange_status":"1","pig_form_id":"103","financial_status":null,"financial_job_no":"200713613","financial_name":"王欢","financial_time":null,"order_type":"3","freight_deduction_amount":null,"arrival_time":null,"order_change_type":null,"order_date":"2020-11-03","province":null,"city":null,"county":null,"two_category":null,"two_category_code":null,"delivery_branch":null,"branch_code":null,"branch_name":null,"area_status":"1","crt_dunning":"0","approval_status":null,"latest_delivery_date":null,"order_attributes":null,"retail_status":null,"retail_flag":"0"}],"database":"my_slaughter_finance","es":1656043006000,"id":18387,"isDdl":false,"mysqlType":{"id":"varchar(32)","order_no":"varchar(64)","cust_code":"varchar(64)","cust_name":"varchar(255)","company_code":"varchar(64)","company_name":"varchar(255)","cust_area_code":"varchar(64)","cust_area":"varchar(128)","arrival_addr":"varchar(1024)","arrival_date":"datetime(3)","delivery_mode":"varchar(64)","delivery_mode_code":"varchar(64)","order_weight":"decimal(18,2)","order_amount":"decimal(18,2)","service_manager":"varchar(64)","service_manager_job_no":"varchar(64)","note":"varchar(1024)","status":"int(11)","crt_time":"datetime(3)","crt_user":"varchar(64)","crt_name":"varchar(64)","crt_job_no":"varchar(64)","upd_time":"datetime(3)","upd_name":"varchar(255)","upd_job_no":"varchar(255)","delivery_addr":"varchar(255)","service_tel":"varchar(255)","generate":"int(11)","generate_people":"varchar(255)","generate_date":"datetime(3)","father_order_no":"varchar(255)","split_order_status":"int(11)","prepare_status":"int(11)","prepare_date":"datetime(3)","distribution_status":"int(11)","distribution_people":"varchar(255)","distribution_date":"datetime(3)","data_type":"varchar(64)","exchange_status":"int(11)","pig_form_id":"varchar(64)","financial_status":"int(11)","financial_job_no":"varchar(255)","financial_name":"varchar(255)","financial_time":"datetime(3)","order_type":"int(11)","freight_deduction_amount":"decimal(18,2)","arrival_time":"datetime(3)","order_change_type":"int(11)","order_date":"varchar(32)","province":"varchar(64)","city":"varchar(64)","county":"varchar(64)","two_category":"varchar(32)","two_category_code":"varchar(64)","delivery_branch":"int(11)","branch_code":"varchar(255)","branch_name":"varchar(255)","area_status":"int(11)","crt_dunning":"int(11)","approval_status":"int(11)","latest_delivery_date":"varchar(64)","order_attributes":"int(11)","retail_status":"int(11)","retail_flag":"int(11)"},"old":[{"crt_time":"2020-11-03 00:00:00.000"}],"pkNames":["id"],"sql":"","sqlType":{"id":12,"order_no":12,"cust_code":12,"cust_name":12,"company_code":12,"company_name":12,"cust_area_code":12,"cust_area":12,"arrival_addr":12,"arrival_date":93,"delivery_mode":12,"delivery_mode_code":12,"order_weight":3,"order_amount":3,"service_manager":12,"service_manager_job_no":12,"note":12,"status":4,"crt_time":93,"crt_user":12,"crt_name":12,"crt_job_no":12,"upd_time":93,"upd_name":12,"upd_job_no":12,"delivery_addr":12,"service_tel":12,"generate":4,"generate_people":12,"generate_date":93,"father_order_no":12,"split_order_status":4,"prepare_status":4,"prepare_date":93,"distribution_status":4,"distribution_people":12,"distribution_date":93,"data_type":12,"exchange_status":4,"pig_form_id":12,"financial_status":4,"financial_job_no":12,"financial_name":12,"financial_time":93,"order_type":4,"freight_deduction_amount":3,"arrival_time":93,"order_change_type":4,"order_date":12,"province":12,"city":12,"county":12,"two_category":12,"two_category_code":12,"delivery_branch":4,"branch_code":12,"branch_name":12,"area_status":4,"crt_dunning":4,"approval_status":4,"latest_delivery_date":12,"order_attributes":4,"retail_status":4,"retail_flag":4},"table":"my_finance_sale_order","ts":1656043006603,"type":"UPDATE"}
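The field layout of the MySQL sample (data, old, mysqlType, sqlType, pkNames, type) matches Canal's flat JSON message, in which `data` holds the full new rows and `old` holds only the previous values of the columns that changed. Under that assumption, the before image of an update can be rebuilt by overlaying `old` on `data`. The sketch below is plain Python, not a Canal or Flink API; `canal_to_changelog` is a hypothetical helper. Because the sample above is cut off mid-record, `SAMPLE` is an abridged message assembled from fields that survive in it.

```python
import json
from typing import Any, Dict, List, Tuple

Row = Dict[str, Any]

# Abridged message built from fields visible in the sample above.
SAMPLE = (
    '{"data":[{"status":"7","crt_time":"2020-11-03 00:00:02.000","crt_name":"付宇"}],'
    '"old":[{"crt_time":"2020-11-03 00:00:00.000"}],'
    '"database":"my_slaughter_finance","table":"my_finance_sale_order","type":"UPDATE"}'
)


def canal_to_changelog(message: Dict[str, Any]) -> List[Tuple[str, Row]]:
    """Translate one Canal-style message into (RowKind, row) pairs."""
    kind = message["type"]
    rows = message.get("data") or []
    olds = message.get("old") or [{} for _ in rows]
    changelog: List[Tuple[str, Row]] = []
    for row, old in zip(rows, olds):
        if kind == "INSERT":
            changelog.append(("+I", row))
        elif kind == "UPDATE":
            before = {**row, **old}  # old values override the changed columns
            changelog.append(("-U", before))
            changelog.append(("+U", row))
        elif kind == "DELETE":
            changelog.append(("-D", row))  # data carries the deleted row
        else:
            raise ValueError(f"unsupported type: {kind!r}")
    return changelog


if __name__ == "__main__":
    for row_kind, row in canal_to_changelog(json.loads(SAMPLE)):
        print(row_kind, row)
```

For this message the only column that differs between the two images is crt_time, which is exactly the column listed under `old`.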
