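I. Preparation

1.1 Foreword

This is a record of the problems I ran into while upgrading Hive on CDH. You may not hit them yourself: outside a CDH platform, upgrading the hive service on its own should not cause these problems. Before upgrading I read several blog posts about upgrading the hive service on CDH, and the early steps are essentially the same everywhere. Am I the only one who hit these problems during the upgrade? Clearly not, yet none of those posts mention the problems or how to investigate and fix them, in particular the hive metastore failing to start after the upgrade. I expect many people will run into the following errors:

# Typical error 1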

Class "org.apache.hadoop.hive.metastore.model.MDatabase" field "org.apache.hadoop.hive.metastore.model.MDatabase.createTime" : declared in MetaData, but this field doesnt exist in the class!

org.datanucleus.metadata.InvalidClassMetaDataException: Class "org.apache.hadoop.hive.metastore.model.MDatabase" field "org.apache.hadoop.hive.metastore.model.MDatabase.createTime" : declared in MetaData, but this field doesnt exist in the class!

# Typical error 2

Class "org.apache.hadoop.hive.metastore.model.MVersionTable" field "org.apache.hadoop.hive.metastore.model.MVersionTable.schemaVersionV2" : declared in MetaData, but this field doesnt exist in the class!

org.datanucleus.metadata.InvalidClassMetaDataException: Class "org.apache.hadoop.hive.metastore.model.MVersionTable" field "org.apache.hadoop.hive.metastore.model.MVersionTable.schemaVersionV2" : declared in MetaData, but this field doesnt exist in the class!

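# For the other errors, see my notes below

Searching Baidu for these errors one by one does turn up some similar solutions, but in my tests none of them fixed the problem, which is why I wrote this post. In the end every problem was resolved. I cannot guarantee that nothing will go wrong in later use, because I have not tested further, but the upgrade itself and basic use work without problems.

Please read the whole post before doing anything for real: some of the steps recorded here are dead ends that you do not need to repeat!

1.2 Back up the metadata

[root@hadoop106 ~]# mysqldump -uroot -p****** hive > ./hive.sql

# Check the original version; the bundled version is Hive 2.1.1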

[root@hadoop105 ~]# hive --version

WARNING: Use "yarn jar" to launch YARN applications.

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p0.1605554/jars/log4j-slf4j-impl-2.8.2.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p0.1605554/jars/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Hive 2.1.1-cdh6.3.2

Subversion file:///container.redhat7/build/cdh/hive/2.1.1-cdh6.3.2/rpm/BUILD/hive-2.1.1-cdh6.3.2 -r b3393cf499504df1d2a12d34b4285e5d0c02be11

Compiled by jenkins on Fri Nov 8 06:02:22 PST 2019

From source with checksum 75df142c785df1d0b37a9d40fe16169f

1.3 Stop the hive service

1.4 Get the resources

Download the Hive package from the official Apache Hive website (Downloads page).

# Extract

[root@hadoop105 soft]# tar -zxvf apache-hive-3.1.3-bin.tar.gz

[root@hadoop105 soft]# mv apache-hive-3.1.3-bin /opt/cloudera/hive

II. Upgrade steps

2.1 Modify the related configuration

# Replace the jars

# Copy the jar directory

[root@hadoop105 soft]# cp -r /opt/cloudera/parcels/CDH/lib/hive/lib /opt/cloudera/parcels/CDH/lib/hive/lib313

# Delete the jars whose names start with hive

[root@hadoop105 soft]# cd /opt/cloudera/parcels/CDH/lib/hive/lib313/

[root@hadoop105 lib313]# rm -rf ./hive-*.jar

# Copy the jars whose names start with hive from the extracted Hive package into this directory

[root@hadoop105 lib313]# cp /opt/cloudera/hive/lib/hive-*.jar ./

# Modify the launcher script

[root@hadoop105 lib313]# vi /opt/cloudera/parcels/CDH/lib/hive/bin/hive

#HIVE_LIB=${HIVE_HOME}/lib # commented out

HIVE_LIB=${HIVE_HOME}/lib313 # added

2.2 Upgrade the metastore database

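Note: upgrade one version at a time wherever possible. Skipping versions may cause the upgrade to fail.

The upgrade here consists of four scripts, applied in this order: upgrade-2.1.0-to-2.2.0.mysql.sql, upgrade-2.2.0-to-2.3.0.mysql.sql, upgrade-2.3.0-to-3.0.0.mysql.sql, upgrade-3.0.0-to-3.1.0.mysql.sql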
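The order matters because each script assumes the schema left behind by the previous one. As an alternative to typing the interactive `source` commands used below, here is a minimal sketch that applies the four scripts in order from the shell. It assumes the scripts are in the current directory (they may pull in helper files through relative `SOURCE` paths) and that the `mysql` client can read its credentials from an option file such as `~/.my.cnf`. The function names are hypothetical. In batch mode the `mysql` client stops at the first SQL error unless `--force` is given, so a failing step is not buried in the output.

```shell
# Print the upgrade scripts in the order they must be applied (2.1.0 -> 3.1.0).
upgrade_scripts() {
  prev=""
  for v in 2.1.0 2.2.0 2.3.0 3.0.0 3.1.0; do
    if [ -n "$prev" ]; then
      printf 'upgrade-%s-to-%s.mysql.sql\n' "$prev" "$v"
    fi
    prev=$v
  done
}

# Apply each script to the given database, stopping at the first failure.
# Assumption: run from the directory that holds the scripts.
run_upgrades() {
  db=$1
  for script in $(upgrade_scripts); do
    echo "Applying $script"
    mysql "$db" < "$script" || { echo "Failed at $script" >&2; return 1; }
  done
}

# Example (not executed here): cd /opt/hive_sql && run_upgrades hive
```

Stopping at the first failure leaves the schema at a known version, which makes it easier to decide whether to fix the script or restore the backup.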

[root@hadoop105 lib313]# cd /opt/cloudera/hive/scripts/metastore/upgrade/mysql/

# My hive and mysql are not on the same node, so the upgrade files have to be copied to the mysql node

[root@hadoop105 mysql]# scp ./* root@192.168.0.106:/opt/hive_sql

[root@hadoop106 hive_sql]# chown mysql /opt/hive_sql/*

# Upgrade

[root@hadoop106 ~]# cd /opt/hive_sql

[root@hadoop106 ~]# mysql -uroot -p

mysql> use hive;

# Upgrade to 2.2.0

mysql> source upgrade-2.1.0-to-2.2.0.mysql.sql;

+------------------------------------------------+

| |

+------------------------------------------------+

| Upgrading MetaStore schema from 2.1.0 to 2.2.0 |

+------------------------------------------------+

1 row in set, 1 warning (0.00 sec)

Query OK, 0 rows affected (0.04 sec)

Records: 0 Duplicates: 0 Warnings: 0

Query OK, 5 rows affected (0.00 sec)

Rows matched: 5 Changed: 5 Warnings: 0

Query OK, 0 rows affected (0.04 sec)

Records: 0 Duplicates: 0 Warnings: 0

Query OK, 0 rows affected (0.01 sec)

Records: 0 Duplicates: 0 Warnings: 0

Query OK, 0 rows affected (0.02 sec)

Records: 0 Duplicates: 0 Warnings: 0

Query OK, 0 rows affected (0.00 sec)

Records: 0 Duplicates: 0 Warnings: 0

Query OK, 11 rows affected (0.02 sec)

Records: 11 Duplicates: 0 Warnings: 0

Query OK, 10 rows affected (0.02 sec)

Records: 10 Duplicates: 0 Warnings: 0

Query OK, 0 rows affected (0.02 sec)

Records: 0 Duplicates: 0 Warnings: 0

Query OK, 0 rows affected (0.00 sec)

Records: 0 Duplicates: 0 Warnings: 0

Query OK, 0 rows affected (0.01 sec)

Records: 0 Duplicates: 0 Warnings: 0

Query OK, 0 rows affected (0.00 sec)

Records: 0 Duplicates: 0 Warnings: 0

Query OK, 0 rows affected (0.00 sec)

Records: 0 Duplicates: 0 Warnings: 0

Query OK, 0 rows affected (0.00 sec)

Records: 0 Duplicates: 0 Warnings: 0

Query OK, 0 rows affected (0.02 sec)

Records: 0 Duplicates: 0 Warnings: 0

Query OK, 0 rows affected (0.00 sec)

Records: 0 Duplicates: 0 Warnings: 0

Query OK, 0 rows affected (0.00 sec)

Records: 0 Duplicates: 0 Warnings: 0

Query OK, 0 rows affected (0.00 sec)

Records: 0 Duplicates: 0 Warnings: 0

Query OK, 0 rows affected (0.00 sec)

Records: 0 Duplicates: 0 Warnings: 0

Query OK, 0 rows affected (0.00 sec)

Records: 0 Duplicates: 0 Warnings: 0

Query OK, 0 rows affected (0.01 sec)

Records: 0 Duplicates: 0 Warnings: 0

Query OK, 0 rows affected (0.00 sec)

Rows matched: 1 Changed: 0 Warnings: 0

+---------------------------------------------------------+

| |

+---------------------------------------------------------+

| Finished upgrading MetaStore schema from 2.1.0 to 2.2.0 |

+---------------------------------------------------------+

1 row in set, 1 warning (0.00 sec)

# Upgrade to 2.3.0

mysql> source upgrade-2.2.0-to-2.3.0.mysql.sql;

+------------------------------------------------+

| |

+------------------------------------------------+

| Upgrading MetaStore schema from 2.2.0 to 2.3.0 |

+------------------------------------------------+

1 row in set, 1 warning (0.00 sec)

Query OK, 0 rows affected (0.01 sec)

Records: 0 Duplicates: 0 Warnings: 0

Query OK, 1 row affected (0.00 sec)

Rows matched: 1 Changed: 1 Warnings: 0

+---------------------------------------------------------+

| |

+---------------------------------------------------------+

| Finished upgrading MetaStore schema from 2.2.0 to 2.3.0 |

+---------------------------------------------------------+

1 row in set, 1 warning (0.00 sec)

# Upgrade to 3.0.0

mysql> source upgrade-2.3.0-to-3.0.0.mysql.sql;

+------------------------------------------------+

| |

+------------------------------------------------+

| Upgrading MetaStore schema from 2.3.0 to 3.0.0 |

+------------------------------------------------+

1 row in set, 1 warning (0.00 sec)

Query OK, 0 rows affected, 1 warning (0.00 sec)

Query OK, 0 rows affected (0.01 sec)

Records: 0 Duplicates: 0 Warnings: 0

Query OK, 0 rows affected (0.00 sec)

Rows matched: 0 Changed: 0 Warnings: 0

Query OK, 0 rows affected (0.02 sec)

Records: 0 Duplicates: 0 Warnings: 0

Query OK, 0 rows affected (0.01 sec)

Records: 0 Duplicates: 0 Warnings: 0

Query OK, 1 row affected (0.00 sec)

Records: 1 Duplicates: 0 Warnings: 0

Query OK, 0 rows affected (0.01 sec)

Query OK, 0 rows affected (0.01 sec)

Query OK, 0 rows affected (0.02 sec)

Records: 0 Duplicates: 0 Warnings: 0

Query OK, 0 rows affected (0.00 sec)

Query OK, 0 rows affected (0.01 sec)

Query OK, 0 rows affected (0.01 sec)

Query OK, 0 rows affected (0.04 sec)

Records: 0 Duplicates: 0 Warnings: 0

Query OK, 0 rows affected (0.03 sec)

Records: 0 Duplicates: 0 Warnings: 0

Query OK, 0 rows affected (0.04 sec)

Records: 0 Duplicates: 0 Warnings: 0

Query OK, 0 rows affected (0.03 sec)

Records: 0 Duplicates: 0 Warnings: 0

Query OK, 0 rows affected (0.01 sec)

Query OK, 0 rows affected (0.01 sec)

Query OK, 0 rows affected (0.01 sec)

Query OK, 0 rows affected (0.00 sec)

Records: 0 Duplicates: 0 Warnings: 0

Query OK, 0 rows affected (0.01 sec)

Query OK, 0 rows affected (0.01 sec)

Records: 0 Duplicates: 0 Warnings: 0

Query OK, 0 rows affected (0.00 sec)

Rows matched: 0 Changed: 0 Warnings: 0

Query OK, 0 rows affected (0.00 sec)

Records: 0 Duplicates: 0 Warnings: 0

Query OK, 0 rows affected (0.01 sec)

Records: 0 Duplicates: 0 Warnings: 0

Query OK, 0 rows affected (0.00 sec)

Rows matched: 0 Changed: 0 Warnings: 0

Query OK, 0 rows affected (0.00 sec)

Rows matched: 0 Changed: 0 Warnings: 0

Query OK, 0 rows affected (0.00 sec)

Rows matched: 0 Changed: 0 Warnings: 0

Query OK, 0 rows affected (0.00 sec)

Rows matched: 0 Changed: 0 Warnings: 0

Query OK, 0 rows affected (0.00 sec)

Query OK, 0 rows affected (0.01 sec)

Records: 0 Duplicates: 0 Warnings: 0

Query OK, 0 rows affected (0.01 sec)

Records: 0 Duplicates: 0 Warnings: 0

Query OK, 0 rows affected (0.01 sec)

Query OK, 0 rows affected (0.01 sec)

Records: 0 Duplicates: 0 Warnings: 0

Query OK, 0 rows affected (0.00 sec)

Records: 0 Duplicates: 0 Warnings: 0

Query OK, 0 rows affected (0.00 sec)

Records: 0 Duplicates: 0 Warnings: 0

Query OK, 0 rows affected (0.01 sec)

Records: 0 Duplicates: 0 Warnings: 0

Query OK, 0 rows affected (0.01 sec)

Records: 0 Duplicates: 0 Warnings: 0

Query OK, 0 rows affected (0.02 sec)

Records: 0 Duplicates: 0 Warnings: 0

Query OK, 0 rows affected (0.01 sec)

Records: 0 Duplicates: 0 Warnings: 0

Query OK, 0 rows affected (0.01 sec)

Records: 0 Duplicates: 0 Warnings: 0

Query OK, 0 rows affected (0.01 sec)

Query OK, 0 rows affected (0.00 sec)

Query OK, 1 row affected (0.00 sec)

Query OK, 0 rows affected (0.01 sec)

Records: 0 Duplicates: 0 Warnings: 0

Query OK, 0 rows affected (0.03 sec)

Records: 0 Duplicates: 0 Warnings: 0

Query OK, 2 rows affected (0.00 sec)

Rows matched: 2 Changed: 2 Warnings: 0

Query OK, 0 rows affected (0.04 sec)

Records: 0 Duplicates: 0 Warnings: 0

Query OK, 0 rows affected (0.01 sec)

Records: 0 Duplicates: 0 Warnings: 0

Query OK, 2 rows affected (0.04 sec)

Records: 2 Duplicates: 0 Warnings: 0

Query OK, 0 rows affected (0.01 sec)

Records: 0 Duplicates: 0 Warnings: 0

Query OK, 0 rows affected (0.02 sec)

Records: 0 Duplicates: 0 Warnings: 0

Query OK, 0 rows affected (0.00 sec)

Rows matched: 0 Changed: 0 Warnings: 0

Query OK, 0 rows affected (0.00 sec)

Rows matched: 0 Changed: 0 Warnings: 0

Query OK, 0 rows affected (0.01 sec)

Records: 0 Duplicates: 0 Warnings: 0

Query OK, 0 rows affected (0.01 sec)

Records: 0 Duplicates: 0 Warnings: 0

Query OK, 0 rows affected (0.01 sec)

Records: 0 Duplicates: 0 Warnings: 0

Query OK, 0 rows affected (0.00 sec)

Records: 0 Duplicates: 0 Warnings: 0

Query OK, 0 rows affected (0.01 sec)

Records: 0 Duplicates: 0 Warnings: 0

Query OK, 0 rows affected (0.00 sec)

Rows matched: 0 Changed: 0 Warnings: 0

Query OK, 0 rows affected (0.01 sec)

Records: 0 Duplicates: 0 Warnings: 0

Query OK, 0 rows affected (0.00 sec)

Rows matched: 0 Changed: 0 Warnings: 0

Query OK, 1 row affected (0.01 sec)

Records: 1 Duplicates: 0 Warnings: 0

Query OK, 0 rows affected (0.00 sec)

Query OK, 0 rows affected (0.01 sec)

Records: 0 Duplicates: 0 Warnings: 0

Query OK, 0 rows affected (0.00 sec)

Query OK, 0 rows affected (0.01 sec)

Records: 0 Duplicates: 0 Warnings: 0

Query OK, 0 rows affected (0.00 sec)

Records: 0 Duplicates: 0 Warnings: 0

Query OK, 0 rows affected (0.00 sec)

Records: 0 Duplicates: 0 Warnings: 0

Query OK, 0 rows affected (0.00 sec)

Rows matched: 0 Changed: 0 Warnings: 0

Query OK, 0 rows affected (0.00 sec)

Rows matched: 0 Changed: 0 Warnings: 0

Query OK, 0 rows affected (0.01 sec)

Records: 0 Duplicates: 0 Warnings: 0

Query OK, 1 row affected (0.00 sec)

Rows matched: 1 Changed: 1 Warnings: 0

+---------------------------------------------------------+

| |

+---------------------------------------------------------+

| Finished upgrading MetaStore schema from 2.3.0 to 3.0.0 |

+---------------------------------------------------------+

1 row in set, 1 warning (0.00 sec)

ERROR 1060 (42S21): Duplicate column name 'OWNER_TYPE'
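An `ERROR` line like the one above is easy to miss among hundreds of `Query OK` lines, and as the transcripts in this section show, the interactive `source` command keeps going after an error. One way to make errors stand out is to save the client output to a file and list only the error lines. The sketch below assumes the output was saved to a log file, for example with the mysql client's `tee` command. The function name `list_mysql_errors` and the log path are hypothetical.

```shell
# Print every "ERROR <code> (<sqlstate>): ..." line from a saved mysql log,
# prefixed with its line number. Returns non-zero when no error line is found.
list_mysql_errors() {
  grep -n -E '^ERROR [0-9]+ \([0-9A-Z]+\):' "$1"
}

# Example (not executed here): list_mysql_errors /opt/hive_sql/upgrade.log
```

Each reported error can then be checked against the statement that produced it before moving on to the next upgrade script.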

# Upgrade to 3.1.0

mysql> source upgrade-3.0.0-to-3.1.0.mysql.sql;

+------------------------------------------------+

| |

+------------------------------------------------+

| Upgrading MetaStore schema from 3.0.0 to 3.1.0 |

+------------------------------------------------+

1 row in set, 1 warning (0.00 sec)

Query OK, 0 rows affected (0.04 sec)

Records: 0 Duplicates: 0 Warnings: 0

Query OK, 0 rows affected (0.00 sec)

Records: 0 Duplicates: 0 Warnings: 0

Query OK, 0 rows affected (0.01 sec)

Records: 0 Duplicates: 0 Warnings: 0

Query OK, 0 rows affected (0.02 sec)

Records: 0 Duplicates: 0 Warnings: 0

Query OK, 0 rows affected (0.00 sec)

Records: 0 Duplicates: 0 Warnings: 0

Query OK, 0 rows affected (0.00 sec)

Records: 0 Duplicates: 0 Warnings: 0

Query OK, 0 rows affected (0.04 sec)

Records: 0 Duplicates: 0 Warnings: 0

Query OK, 0 rows affected (0.01 sec)

Records: 0 Duplicates: 0 Warnings: 0

Query OK, 0 rows affected (0.00 sec)

Records: 0 Duplicates: 0 Warnings: 0

Query OK, 0 rows affected (0.02 sec)

Records: 0 Duplicates: 0 Warnings: 0

Query OK, 0 rows affected (0.00 sec)

Records: 0 Duplicates: 0 Warnings: 0

Query OK, 0 rows affected (0.00 sec)

Records: 0 Duplicates: 0 Warnings: 0

Query OK, 0 rows affected (0.02 sec)

Records: 0 Duplicates: 0 Warnings: 0

Query OK, 0 rows affected (0.00 sec)

Records: 0 Duplicates: 0 Warnings: 0

Query OK, 0 rows affected (0.00 sec)

Records: 0 Duplicates: 0 Warnings: 0

Query OK, 0 rows affected (0.01 sec)

Records: 0 Duplicates: 0 Warnings: 0

Query OK, 0 rows affected (0.01 sec)

Records: 0 Duplicates: 0 Warnings: 0

Query OK, 0 rows affected (0.00 sec)

Records: 0 Duplicates: 0 Warnings: 0

ERROR 1061 (42000): Duplicate key name 'TAB_COL_STATS_IDX'

Query OK, 0 rows affected (0.01 sec)

Records: 0 Duplicates: 0 Warnings: 0

Query OK, 0 rows affected (0.01 sec)

Records: 0 Duplicates: 0 Warnings: 0

Query OK, 0 rows affected (0.00 sec)

Rows matched: 0 Changed: 0 Warnings: 0

Query OK, 0 rows affected (0.01 sec)

Records: 0 Duplicates: 0 Warnings: 0

Query OK, 0 rows affected (0.01 sec)

Records: 0 Duplicates: 0 Warnings: 0

Query OK, 0 rows affected (0.00 sec)

Rows matched: 0 Changed: 0 Warnings: 0

Query OK, 0 rows affected (0.02 sec)

Records: 0 Duplicates: 0 Warnings: 0

Query OK, 0 rows affected (0.00 sec)

Query OK, 1 row affected (0.00 sec)

Rows matched: 1 Changed: 1 Warnings: 0

+---------------------------------------------------------+

| |

+---------------------------------------------------------+

| Finished upgrading MetaStore schema from 3.0.0 to 3.1.0 |

+---------------------------------------------------------+

1 row in set, 1 warning (0.00 sec)

2.3 Pitfalls encountered

Below are all the errors I actually ran into

(1) Start hive

# The metastore fails to start after the upgrade

[root@hadoop105 hive]# hive --service metastore

Starting Hive Metastore Server

WARNING: Use "yarn jar" to launch YARN applications.

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p0.1605554/jars/log4j-slf4j-impl-2.8.2.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p0.1605554/jars/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Exception in thread "org.apache.hadoop.hive.metastore.metrics.JvmPauseMonitor$Monitor@4585542d" java.lang.NoSuchMethodError: com.google.common.base.Stopwatch.createUnstarted()Lcom/google/common/base/Stopwatch;

at org.apache.hadoop.hive.metastore.metrics.JvmPauseMonitor$Monitor.run(JvmPauseMonitor.java:174)

at java.lang.Thread.run(Thread.java:748)

23/06/05 16:58:36 INFO DataNucleus.Persistence: Property datanucleus.cache.level2 unknown - will be ignored

23/06/05 16:58:37 WARN DataNucleus.MetaData: Metadata has jdbc-type of null yet this is not valid. Ignored

23/06/05 16:58:37 WARN DataNucleus.MetaData: Metadata has jdbc-type of null yet this is not valid. Ignored

23/06/05 16:58:37 WARN DataNucleus.MetaData: Metadata has jdbc-type of null yet this is not valid. Ignored

23/06/05 16:58:37 WARN DataNucleus.MetaData: Metadata has jdbc-type of null yet this is not valid. Ignored

23/06/05 16:58:37 WARN DataNucleus.MetaData: Metadata has jdbc-type of null yet this is not valid. Ignored

23/06/05 16:58:37 WARN DataNucleus.MetaData: Metadata has jdbc-type of null yet this is not valid. Ignored

23/06/05 16:58:38 WARN DataNucleus.MetaData: Metadata has jdbc-type of null yet this is not valid. Ignored

23/06/05 16:58:38 WARN DataNucleus.MetaData: Metadata has jdbc-type of null yet this is not valid. Ignored

23/06/05 16:58:38 WARN DataNucleus.MetaData: Metadata has jdbc-type of null yet this is not valid. Ignored

23/06/05 16:58:38 WARN DataNucleus.MetaData: Metadata has jdbc-type of null yet this is not valid. Ignored

23/06/05 16:58:38 WARN DataNucleus.MetaData: Metadata has jdbc-type of null yet this is not valid. Ignored

23/06/05 16:58:38 WARN DataNucleus.MetaData: Metadata has jdbc-type of null yet this is not valid. Ignored

23/06/05 16:58:39 INFO DataNucleus.JDO: Exception thrown

Class "org.apache.hadoop.hive.metastore.model.MDatabase" field "org.apache.hadoop.hive.metastore.model.MDatabase.createTime" : declared in MetaData, but this field doesnt exist in the class!

org.datanucleus.metadata.InvalidClassMetaDataException: Class "org.apache.hadoop.hive.metastore.model.MDatabase" field "org.apache.hadoop.hive.metastore.model.MDatabase.createTime" : declared in MetaData, but this field doesnt exist in the class!

at org.datanucleus.metadata.ClassMetaData.populateMemberMetaData(ClassMetaData.java:705)

at org.datanucleus.metadata.ClassMetaData.populate(ClassMetaData.java:217)

at org.datanucleus.metadata.MetaDataManagerImpl$1.run(MetaDataManagerImpl.java:2868)

at java.security.AccessController.doPrivileged(Native Method)

at org.datanucleus.metadata.MetaDataManagerImpl.populateAbstractClassMetaData(MetaDataManagerImpl.java:2862)

at org.datanucleus.metadata.MetaDataManagerImpl.populateFileMetaData(MetaDataManagerImpl.java:2685)

at org.datanucleus.api.jdo.metadata.JDOMetaDataManager.loadXMLMetaDataForClass(JDOMetaDataManager.java:806)

at org.datanucleus.api.jdo.metadata.JDOMetaDataManager.getMetaDataForClassInternal(JDOMetaDataManager.java:406)

at org.datanucleus.metadata.AbstractMemberMetaData.populate(AbstractMemberMetaData.java:943)

at org.datanucleus.metadata.ClassMetaData.populateMemberMetaData(ClassMetaData.java:698)

at org.datanucleus.metadata.ClassMetaData.populate(ClassMetaData.java:217)

at org.datanucleus.metadata.MetaDataManagerImpl$1.run(MetaDataManagerImpl.java:2868)

at java.security.AccessController.doPrivileged(Native Method)

at org.datanucleus.metadata.MetaDataManagerImpl.populateAbstractClassMetaData(MetaDataManagerImpl.java:2862)

at org.datanucleus.metadata.MetaDataManagerImpl.populateFileMetaData(MetaDataManagerImpl.java:2685)

at org.datanucleus.api.jdo.metadata.JDOMetaDataManager.loadXMLMetaDataForClass(JDOMetaDataManager.java:806)

at org.datanucleus.api.jdo.metadata.JDOMetaDataManager.getMetaDataForClassInternal(JDOMetaDataManager.java:406)

at org.datanucleus.metadata.AbstractMemberMetaData.populate(AbstractMemberMetaData.java:943)

at org.datanucleus.metadata.ClassMetaData.populateMemberMetaData(ClassMetaData.java:698)

at org.datanucleus.metadata.ClassMetaData.populate(ClassMetaData.java:217)

at org.datanucleus.metadata.MetaDataManagerImpl$1.run(MetaDataManagerImpl.java:2868)

at java.security.AccessController.doPrivileged(Native Method)

at org.datanucleus.metadata.MetaDataManagerImpl.populateAbstractClassMetaData(MetaDataManagerImpl.java:2862)

at org.datanucleus.metadata.MetaDataManagerImpl.populateFileMetaData(MetaDataManagerImpl.java:2685)

at org.datanucleus.api.jdo.metadata.JDOMetaDataManager.loadXMLMetaDataForClass(JDOMetaDataManager.java:806)

at org.datanucleus.api.jdo.metadata.JDOMetaDataManager.getMetaDataForClassInternal(JDOMetaDataManager.java:406)

at org.datanucleus.metadata.MetaDataManagerImpl.getMetaDataForClass(MetaDataManagerImpl.java:1659)

at org.datanucleus.ExecutionContextImpl.hasPersistenceInformationForClass(ExecutionContextImpl.java:5588)

at org.datanucleus.store.rdbms.query.JDOQLQuery.compileInternal(JDOQLQuery.java:256)

at org.datanucleus.store.query.Query.executeQuery(Query.java:1805)

at org.datanucleus.store.query.Query.executeWithArray(Query.java:1733)

at org.datanucleus.store.query.Query.execute(Query.java:1715)

at org.datanucleus.api.jdo.JDOQuery.executeInternal(JDOQuery.java:371)

at org.datanucleus.api.jdo.JDOQuery.execute(JDOQuery.java:213)

at org.apache.hadoop.hive.metastore.ObjectStore.getMSchemaVersion(ObjectStore.java:9137)

at org.apache.hadoop.hive.metastore.ObjectStore.getMetaStoreSchemaVersion(ObjectStore.java:9121)

at org.apache.hadoop.hive.metastore.ObjectStore.checkSchema(ObjectStore.java:9078)

at org.apache.hadoop.hive.metastore.ObjectStore.verifySchema(ObjectStore.java:9063)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.hive.metastore.RawStoreProxy.invoke(RawStoreProxy.java:97)

at com.sun.proxy.$Proxy25.verifySchema(Unknown Source)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.getMSForConf(HiveMetaStore.java:699)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.getMS(HiveMetaStore.java:692)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.createDefaultDB(HiveMetaStore.java:769)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.init(HiveMetaStore.java:540)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.invokeInternal(RetryingHMSHandler.java:147)

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.invoke(RetryingHMSHandler.java:108)

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.<init>(RetryingHMSHandler.java:80)

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.getProxy(RetryingHMSHandler.java:93)

at org.apache.hadoop.hive.metastore.HiveMetaStore.newRetryingHMSHandler(HiveMetaStore.java:8672)

at org.apache.hadoop.hive.metastore.HiveMetaStore.newRetryingHMSHandler(HiveMetaStore.java:8667)

at org.apache.hadoop.hive.metastore.HiveMetaStore.startMetaStore(HiveMetaStore.java:8937)

at org.apache.hadoop.hive.metastore.HiveMetaStore.main(HiveMetaStore.java:8854)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.util.RunJar.run(RunJar.java:313)

at org.apache.hadoop.util.RunJar.main(RunJar.java:227)

23/06/05 16:58:39 INFO DataNucleus.JDO: Exception thrown

Class "org.apache.hadoop.hive.metastore.model.MVersionTable" field "org.apache.hadoop.hive.metastore.model.MVersionTable.schemaVersionV2" : declared in MetaData, but this field doesnt exist in the class!

org.datanucleus.metadata.InvalidClassMetaDataException: Class "org.apache.hadoop.hive.metastore.model.MVersionTable" field "org.apache.hadoop.hive.metastore.model.MVersionTable.schemaVersionV2" : declared in MetaData, but this field doesnt exist in the class!

at org.datanucleus.metadata.ClassMetaData.populateMemberMetaData(ClassMetaData.java:705)

at org.datanucleus.metadata.ClassMetaData.populate(ClassMetaData.java:217)

at org.datanucleus.metadata.MetaDataManagerImpl$1.run(MetaDataManagerImpl.java:2868)

at java.security.AccessController.doPrivileged(Native Method)

at org.datanucleus.metadata.MetaDataManagerImpl.populateAbstractClassMetaData(MetaDataManagerImpl.java:2862)

at org.datanucleus.metadata.MetaDataManagerImpl.getMetaDataForClass(MetaDataManagerImpl.java:1665)

at org.datanucleus.ExecutionContextImpl.hasPersistenceInformationForClass(ExecutionContextImpl.java:5588)

at org.datanucleus.store.rdbms.query.JDOQLQuery.compileInternal(JDOQLQuery.java:256)

at org.datanucleus.store.query.Query.executeQuery(Query.java:1805)

at org.datanucleus.store.query.Query.executeWithArray(Query.java:1733)

at org.datanucleus.store.query.Query.execute(Query.java:1715)

at org.datanucleus.api.jdo.JDOQuery.executeInternal(JDOQuery.java:371)

at org.datanucleus.api.jdo.JDOQuery.execute(JDOQuery.java:213)

at org.apache.hadoop.hive.metastore.ObjectStore.getMSchemaVersion(ObjectStore.java:9137)

at org.apache.hadoop.hive.metastore.ObjectStore.getMetaStoreSchemaVersion(ObjectStore.java:9121)

at org.apache.hadoop.hive.metastore.ObjectStore.checkSchema(ObjectStore.java:9078)

at org.apache.hadoop.hive.metastore.ObjectStore.verifySchema(ObjectStore.java:9063)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.hive.metastore.RawStoreProxy.invoke(RawStoreProxy.java:97)

at com.sun.proxy.$Proxy25.verifySchema(Unknown Source)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.getMSForConf(HiveMetaStore.java:699)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.getMS(HiveMetaStore.java:692)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.createDefaultDB(HiveMetaStore.java:775)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.init(HiveMetaStore.java:540)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.invokeInternal(RetryingHMSHandler.java:147)

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.invoke(RetryingHMSHandler.java:108)

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.<init>(RetryingHMSHandler.java:80)

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.getProxy(RetryingHMSHandler.java:93)

at org.apache.hadoop.hive.metastore.HiveMetaStore.newRetryingHMSHandler(HiveMetaStore.java:8672)

at org.apache.hadoop.hive.metastore.HiveMetaStore.newRetryingHMSHandler(HiveMetaStore.java:8667)

at org.apache.hadoop.hive.metastore.HiveMetaStore.startMetaStore(HiveMetaStore.java:8937)

at org.apache.hadoop.hive.metastore.HiveMetaStore.main(HiveMetaStore.java:8854)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.util.RunJar.run(RunJar.java:313)

at org.apache.hadoop.util.RunJar.main(RunJar.java:227)

Rough cause: after the Hive Metastore service was upgraded, some fields in the metadata are incompatible with the new version of the Hive Metastore service.
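The exception text itself says that the mapping metadata declares a field (`createTime`, `schemaVersionV2`) that the loaded `MDatabase` / `MVersionTable` class does not have. One optional check, offered here only as a diagnostic aid, is to list which jars in a lib directory contain a given class, to see whether more than one Hive build supplies the metastore model classes. This is a minimal sketch that assumes `unzip` is installed; the function name `find_jars_with_entry` is hypothetical.

```shell
# List the jars in a directory whose table of contents mentions an entry.
# Usage: find_jars_with_entry <lib-dir> <entry-substring>
find_jars_with_entry() {
  for jar in "$1"/*.jar; do
    [ -e "$jar" ] || continue            # the directory contains no jars
    if unzip -l "$jar" 2>/dev/null | grep -q -F -- "$2"; then
      printf '%s\n' "$jar"
    fi
  done
}

# Example (not executed here):
#   find_jars_with_entry /opt/cloudera/parcels/CDH/lib/hive/lib313 \
#     org/apache/hadoop/hive/metastore/model/MDatabase.class
```

If the class shows up in jars from different versions, that is worth noting before going further, although it does not by itself prove which jar the metastore actually loaded.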

Fix: initialize the metastore

Prerequisite: make sure the backups are done

(2) Back up

# Back up the metadata (the same backup as above)

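# -p with no value prompts for the password; with a space after -p, the next word would be read as the database name

[root@hadoop106 ~]# mysqldump -u <username> -p <databasename> > <backupfile.sql>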
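For the metadata backup, a dated file name and a quick non-empty check make it harder to overwrite an earlier dump or to trust a broken one. This is a minimal sketch: the function names are hypothetical, the `root` user matches the command used earlier in this post, and `--single-transaction` only gives a consistent snapshot for InnoDB tables.

```shell
# Build a dated dump file name such as hive_20230605.sql.
backup_file_name() {
  printf '%s_%s.sql\n' "$1" "$2"
}

# Dump one database into the current directory and fail if the dump is empty.
# The password is prompted for interactively (-p with no value).
backup_database() {
  db=$1
  out=$(backup_file_name "$db" "$(date +%Y%m%d)")
  mysqldump -uroot -p --single-transaction "$db" > "$out" || return 1
  [ -s "$out" ] || { echo "Dump $out is empty" >&2; return 1; }
  echo "Wrote $out"
}

# Example (not executed here): backup_database hive
```

Keeping the date in the file name also makes it clear which dump belongs to the state before the schema upgrade.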

# Back up the data

[root@hadoop105 ~]# hadoop fs -copyToLocal /user/hive/warehouse /opt/hive_bak20230605

(3) Initialize the hive metastore database

[root@hadoop105 soft]# /opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p0.1605554/lib/hive/bin/schematool -dbType mysql -initSchema --verbose

WARNING: Use "yarn jar" to launch YARN applications.

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p0.1605554/jars/log4j-slf4j-impl-2.8.2.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p0.1605554/jars/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Metastore connection URL: jdbc:derby:;databaseName=metastore_db;create=true

Metastore Connection Driver : org.apache.derby.jdbc.EmbeddedDriver

Metastore connection User: APP

Starting metastore schema initialization to 3.1.0

org.apache.hadoop.hive.metastore.HiveMetaException: Unknown version specified for initialization: 3.1.0

org.apache.hadoop.hive.metastore.HiveMetaException: Unknown version specified for initialization: 3.1.0

at org.apache.hadoop.hive.metastore.MetaStoreSchemaInfo.generateInitFileName(MetaStoreSchemaInfo.java:137)

at org.apache.hive.beeline.HiveSchemaTool.doInit(HiveSchemaTool.java:585)

at org.apache.hive.beeline.HiveSchemaTool.doInit(HiveSchemaTool.java:567)

at org.apache.hive.beeline.HiveSchemaTool.main(HiveSchemaTool.java:1517)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.util.RunJar.run(RunJar.java:313)

at org.apache.hadoop.util.RunJar.main(RunJar.java:227)

*** schemaTool failed ***

Rough cause: the initialization script could not be found

Fix: copy the initialization sql file over, then run the initialization again

[root@hadoop105 soft]# cp /opt/cloudera/hive/scripts/metastore/upgrade/mysql/hive-schema-3.1.0.mysql.sql /opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p0.1605554/lib/hive/scripts/metastore/upgrade/mysql/

[root@hadoop105 cloudera]# /opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p0.1605554/lib/hive/bin/schematool -dbType mysql -initSchema

WARNING: Use "yarn jar" to launch YARN applications.

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p0.1605554/jars/log4j-slf4j-impl-2.8.2.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p0.1605554/jars/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Metastore connection URL: jdbc:derby:;databaseName=metastore_db;create=true

Metastore Connection Driver : org.apache.derby.jdbc.EmbeddedDriver

Metastore connection User: APP

Starting metastore schema initialization to 2.1.1-cdh6.3.2

Initialization script hive-schema-2.1.1.mysql.sql

Error: Syntax error: Encountered "<EOF>" at line 1, column 64. (state=42X01,code=30000)

org.apache.hadoop.hive.metastore.HiveMetaException: Schema initialization FAILED! Metastore state would be inconsistent !!

Underlying cause: java.io.IOException : Schema script failed, errorcode 2

Use --verbose for detailed stacktrace.

*** schemaTool failed ***

Fix: add --verbose to see the specific error

[root@hadoop105 hive_sql]# /opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p0.1605554/lib/hive/bin/schematool -dbType mysql -initSchema --verbose

WARNING: Use "yarn jar" to launch YARN applications.

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p0.1605554/jars/log4j-slf4j-impl-2.8.2.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p0.1605554/jars/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Metastore connection URL: jdbc:derby:;databaseName=metastore_db;create=true

Metastore Connection Driver : org.apache.derby.jdbc.EmbeddedDriver

Metastore connection User: APP

Starting metastore schema initialization to 2.1.1-cdh6.3.2

Initialization script hive-schema-2.1.1.mysql.sql

Connecting to jdbc:derby:;databaseName=metastore_db;create=true

Connected to: Apache Derby (version 10.14.1.0 - (1808820))

Driver: Apache Derby Embedded JDBC Driver (version 10.14.1.0 - (1808820))

Transaction isolation: TRANSACTION_READ_COMMITTED

0: jdbc:derby:> !autocommit on

Autocommit status: true

0: jdbc:derby:> /*!40101 SET @OLD_CHARACTER_SET_CLIENT=@@CHARACTER_SET_CLIENT */

Error: Syntax error: Encountered "<EOF>" at line 1, column 64. (state=42X01,code=30000)

Closing: 0: jdbc:derby:;databaseName=metastore_db;create=true

org.apache.hadoop.hive.metastore.HiveMetaException: Schema initialization FAILED! Metastore state would be inconsistent !!

Underlying cause: java.io.IOException : Schema script failed, errorcode 2

org.apache.hadoop.hive.metastore.HiveMetaException: Schema initialization FAILED! Metastore state would be inconsistent !!

at org.apache.hive.beeline.HiveSchemaTool.doInit(HiveSchemaTool.java:568)

at org.apache.hive.beeline.HiveSchemaTool.doInit(HiveSchemaTool.java:541)

at org.apache.hive.beeline.HiveSchemaTool.main(HiveSchemaTool.java:1137)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.util.RunJar.run(RunJar.java:313)

at org.apache.hadoop.util.RunJar.main(RunJar.java:227)

Caused by: java.io.IOException: Schema script failed, errorcode 2

at org.apache.hive.beeline.HiveSchemaTool.runBeeLine(HiveSchemaTool.java:962)

at org.apache.hive.beeline.HiveSchemaTool.runBeeLine(HiveSchemaTool.java:941)

at org.apache.hive.beeline.HiveSchemaTool.doInit(HiveSchemaTool.java:564)

... 8 more

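*** schemaTool failed ***

Likely cause: the output shows "Connecting to jdbc:derby:;databaseName=metastore_db;create=true", so schematool is connecting to an embedded Derby database rather than MySQL, even though -dbType mysql was passed at initialization. The -dbType flag only selects which schema script to run; the connection URL comes from the Hive configuration.

Fix: try the following.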

/etc/hive/conf/hive-site.xml

/etc/hive/conf/hive-default.xml

/opt/cloudera/parcels/CDH/lib/hive/conf/hive-site.xml

/opt/cloudera/parcels/CDH/lib/hive/conf/hive-default.xml

# In the files above, find the javax.jdo.option.ConnectionURL property and change it to the correct database type
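To see which of the files listed above actually defines the property, a small shell helper can do the search. This is a minimal sketch: the function name `find_hive_property` is made up for illustration, and it assumes the property name sits on one line as `<name>...</name>`, which is the usual layout of hive-site.xml.

```shell
# Hypothetical helper: print every readable file that defines the property.
# Usage: find_hive_property PROPERTY_NAME FILE...
find_hive_property() {
    prop="$1"
    shift
    for f in "$@"; do
        # Ignore candidates that are missing or unreadable.
        [ -r "$f" ] || continue
        # -F treats the pattern as a fixed string, so the dots are literal.
        if grep -qF "<name>${prop}</name>" "$f"; then
            echo "$f"
        fi
    done
    return 0
}

# Example call against two of the candidate files from this post:
# find_hive_property javax.jdo.option.ConnectionURL \
#     /etc/hive/conf/hive-site.xml \
#     /opt/cloudera/parcels/CDH/lib/hive/conf/hive-site.xml
```

An empty result means none of the files sets the property, which matches the case handled next.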

If none of them has it, edit /etc/hive/conf/hive-site.xml, add the following configuration, and save:

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:mysql://localhost:3306/hive?createDatabaseIfNotExist=true</value>

<description>JDBC connect string for a JDBC metastore</description>

</property>

# Re-run initialization

[root@hadoop105 conf]# /opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p0.1605554/lib/hive/bin/schematool -dbType mysql -initSchema --verbose

WARNING: Use "yarn jar" to launch YARN applications.

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p0.1605554/jars/log4j-slf4j-impl-2.8.2.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p0.1605554/jars/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Metastore connection URL: jdbc:mysql://localhost:3306/hive?createDatabaseIfNotExist=true

Metastore Connection Driver : org.apache.derby.jdbc.EmbeddedDriver

Metastore connection User: APP

Mon Jun 05 18:44:26 CST 2023 WARN: Establishing SSL connection without server's identity verification is not recommended. According to MySQL 5.5.45+, 5.6.26+ and 5.7.6+ requirements SSL connection must be established by default if explicit option isn't set. For compliance with existing applications not using SSL the verifyServerCertificate property is set to 'false'. You need either to explicitly disable SSL by setting useSSL=false, or set useSSL=true and provide truststore for server certificate verification.

org.apache.hadoop.hive.metastore.HiveMetaException: Failed to get schema version.

Underlying cause: java.sql.SQLException : Access denied for user 'APP'@'localhost' (using password: YES)

SQL Error code: 1045

org.apache.hadoop.hive.metastore.HiveMetaException: Failed to get schema version.

at org.apache.hadoop.hive.metastore.tools.HiveSchemaHelper.getConnectionToMetastore(HiveSchemaHelper.java:77)

at org.apache.hive.beeline.HiveSchemaTool.getConnectionToMetastore(HiveSchemaTool.java:142)

at org.apache.hive.beeline.HiveSchemaTool.testConnectionToMetastore(HiveSchemaTool.java:449)

at org.apache.hive.beeline.HiveSchemaTool.doInit(HiveSchemaTool.java:555)

at org.apache.hive.beeline.HiveSchemaTool.doInit(HiveSchemaTool.java:541)

at org.apache.hive.beeline.HiveSchemaTool.main(HiveSchemaTool.java:1137)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.util.RunJar.run(RunJar.java:313)

at org.apache.hadoop.util.RunJar.main(RunJar.java:227)

Caused by: java.sql.SQLException: Access denied for user 'APP'@'localhost' (using password: YES)

at com.mysql.jdbc.SQLError.createSQLException(SQLError.java:965)

at com.mysql.jdbc.MysqlIO.checkErrorPacket(MysqlIO.java:3978)

at com.mysql.jdbc.MysqlIO.checkErrorPacket(MysqlIO.java:3914)

at com.mysql.jdbc.MysqlIO.checkErrorPacket(MysqlIO.java:871)

at com.mysql.jdbc.MysqlIO.proceedHandshakeWithPluggableAuthentication(MysqlIO.java:1714)

at com.mysql.jdbc.MysqlIO.doHandshake(MysqlIO.java:1224)

at com.mysql.jdbc.ConnectionImpl.coreConnect(ConnectionImpl.java:2199)

at com.mysql.jdbc.ConnectionImpl.connectOneTryOnly(ConnectionImpl.java:2230)

at com.mysql.jdbc.ConnectionImpl.createNewIO(ConnectionImpl.java:2025)

at com.mysql.jdbc.ConnectionImpl.<init>(ConnectionImpl.java:778)

at com.mysql.jdbc.JDBC4Connection.<init>(JDBC4Connection.java:47)

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at com.mysql.jdbc.Util.handleNewInstance(Util.java:425)

at com.mysql.jdbc.ConnectionImpl.getInstance(ConnectionImpl.java:386)

at com.mysql.jdbc.NonRegisteringDriver.connect(NonRegisteringDriver.java:330)

at java.sql.DriverManager.getConnection(DriverManager.java:664)

at java.sql.DriverManager.getConnection(DriverManager.java:247)

at org.apache.hadoop.hive.metastore.tools.HiveSchemaHelper.getConnectionToMetastore(HiveSchemaHelper.java:73)

... 11 more

*** schemaTool failed ***

Likely cause: APP is the default user of the Hive metastore (it goes with the embedded Derby defaults). If no other user is configured for the metastore, it connects as APP, and the MySQL instance behind CDH's hive has no APP user.
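The three "Metastore connection" header lines in the output above already show whether the settings in hive-site.xml took effect: `org.apache.derby.jdbc.EmbeddedDriver` and `APP` are the built-in defaults for the driver and the user name. The sketch below reads saved schematool output from stdin and flags those defaults. The function name `check_metastore_conn` and the capture file name are hypothetical; the header labels are copied from the output in this post.

```shell
# Hypothetical helper: summarize the connection header printed by schematool
# and warn when the driver or user is still the Derby default.
check_metastore_conn() {
    awk '
        /^Metastore connection URL:/ {
            sub(/^Metastore connection URL:[ \t]*/, ""); url = $0
        }
        /^Metastore Connection Driver/ {
            sub(/^Metastore Connection Driver[ \t]*:[ \t]*/, ""); driver = $0
        }
        /^Metastore connection User:/ {
            sub(/^Metastore connection User:[ \t]*/, ""); user = $0
        }
        END {
            print "url=" url
            print "driver=" driver
            print "user=" user
            if (driver == "org.apache.derby.jdbc.EmbeddedDriver")
                print "WARN: driver is still the Derby default"
            if (user == "APP")
                print "WARN: user is still the Derby default (APP)"
        }
    '
}

# Example, assuming the schematool output was saved to schematool.out:
# check_metastore_conn < schematool.out
```

In the run above the URL already points at MySQL while the driver and user are still defaults, which suggests that only the URL property was picked up from the configuration.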

Fix: try running the initialization as the hive user.

sudo -u hive /opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p0.1605554/lib/hive/bin/schematool -dbType mysql -initSchema

[root@hadoop105 ~]# su - hive

[hive@hadoop105 ~]$ /opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p0.1605554/lib/hive/bin/schematool -dbType mysql -initSchema --verbose

WARNING: Use "yarn jar" to launch YARN applications.

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p0.1605554/jars/log4j-slf4j-impl-2.8.2.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p0.1605554/jars/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

ERROR StatusLogger File not found in file system or classpath: hive-log4j2.properties

ERROR StatusLogger Reconfiguration failed: No configuration found for '3abfe836' at 'null' in 'null'

Exception in thread "main" java.io.IOException: Error opening job jar: /opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p0.1605554/lib/hive/lib313/hive-cli-3.1.3.jar

at org.apache.hadoop.util.RunJar.run(RunJar.java:252)

at org.apache.hadoop.util.RunJar.main(RunJar.java:227)

Caused by: java.io.FileNotFoundException: /opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p0.1605554/lib/hive/lib313/hive-cli-3.1.3.jar (Permission denied)

at java.util.zip.ZipFile.open(Native Method)

at java.util.zip.ZipFile.<init>(ZipFile.java:225)

at java.util.zip.ZipFile.<init>(ZipFile.java:155)

at java.util.jar.JarFile.<init>(JarFile.java:166)

at java.util.jar.JarFile.<init>(JarFile.java:103)

at org.apache.hadoop.util.RunJar.run(RunJar.java:250)

... 1 more

Likely cause: the files copied earlier were never given the necessary permissions.
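Before changing any modes, it can help to see which jars are actually affected. The sketch below lists jars in a directory that lack the "others: read" bit. The function name `list_unreadable_jars` is hypothetical, and the check is only a proxy: it assumes the hive user is neither the owner nor in the group of the copied files.

```shell
# Hypothetical helper: list jars under DIR that are not world-readable.
# Usage: list_unreadable_jars DIR
list_unreadable_jars() {
    dir="$1"
    # -perm -004 is true when the "others: read" bit is set; "!" negates it.
    find "$dir" -type f -name '*.jar' ! -perm -004 | sort
}

# Example with the directory from the error above:
# list_unreadable_jars /opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p0.1605554/lib/hive/lib313
```

If only the read bit is missing, `chmod a+r` on the listed files may be enough; the post itself uses 777.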

Fix: grant the permissions.

[root@hadoop105 ~]# chmod 777 /opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p0.1605554/lib/hive/lib313/hive-*.jar

# Re-run initialization

[hive@hadoop105 ~]$ /opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p0.1605554/lib/hive/bin/schematool -dbType mysql -initSchema --verbose

WARNING: Use "yarn jar" to launch YARN applications.

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p0.1605554/jars/log4j-slf4j-impl-2.8.2.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p0.1605554/jars/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Metastore connection URL: jdbc:mysql://hadoop106:3306/hive?createDatabaseIfNotExist=true

Metastore Connection Driver : org.apache.derby.jdbc.EmbeddedDriver

Metastore connection User: APP

Tue Jun 06 10:24:31 CST 2023 WARN: Establishing SSL connection without server's identity verification is not recommended. According to MySQL 5.5.45+, 5.6.26+ and 5.7.6+ requirements SSL connection must be established by default if explicit option isn't set. For compliance with existing applications not using SSL the verifyServerCertificate property is set to 'false'. You need either to explicitly disable SSL by setting useSSL=false, or set useSSL=true and provide truststore for server certificate verification.

org.apache.hadoop.hive.metastore.HiveMetaException: Failed to get schema version.

Underlying cause: java.sql.SQLException : Access denied for user 'APP'@'localhost' (using password: YES)

SQL Error code: 1045

org.apache.hadoop.hive.metastore.HiveMetaException: Failed to get schema version.

at org.apache.hadoop.hive.metastore.tools.HiveSchemaHelper.getConnectionToMetastore(HiveSchemaHelper.java:94)

at org.apache.hive.beeline.HiveSchemaTool.getConnectionToMetastore(HiveSchemaTool.java:169)

at org.apache.hive.beeline.HiveSchemaTool.testConnectionToMetastore(HiveSchemaTool.java:475)

at org.apache.hive.beeline.HiveSchemaTool.doInit(HiveSchemaTool.java:581)

at org.apache.hive.beeline.HiveSchemaTool.doInit(HiveSchemaTool.java:567)

at org.apache.hive.beeline.HiveSchemaTool.main(HiveSchemaTool.java:1517)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.util.RunJar.run(RunJar.java:313)

at org.apache.hadoop.util.RunJar.main(RunJar.java:227)

Caused by: java.sql.SQLException: Access denied for user 'APP'@'localhost' (using password: YES)

at com.mysql.jdbc.SQLError.createSQLException(SQLError.java:965)

at com.mysql.jdbc.MysqlIO.checkErrorPacket(MysqlIO.java:3978)

at com.mysql.jdbc.MysqlIO.checkErrorPacket(MysqlIO.java:3914)

at com.mysql.jdbc.MysqlIO.checkErrorPacket(MysqlIO.java:871)

at com.mysql.jdbc.MysqlIO.proceedHandshakeWithPluggableAuthentication(MysqlIO.java:1714)

at com.mysql.jdbc.MysqlIO.doHandshake(MysqlIO.java:1224)

at com.mysql.jdbc.ConnectionImpl.coreConnect(ConnectionImpl.java:2199)

at com.mysql.jdbc.ConnectionImpl.connectOneTryOnly(ConnectionImpl.java:2230)

at com.mysql.jdbc.ConnectionImpl.createNewIO(ConnectionImpl.java:2025)

at com.mysql.jdbc.ConnectionImpl.<init>(ConnectionImpl.java:778)

at com.mysql.jdbc.JDBC4Connection.<init>(JDBC4Connection.java:47)

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at com.mysql.jdbc.Util.handleNewInstance(Util.java:425)

at com.mysql.jdbc.ConnectionImpl.getInstance(ConnectionImpl.java:386)

at com.mysql.jdbc.NonRegisteringDriver.connect(NonRegisteringDriver.java:330)

at java.sql.DriverManager.getConnection(DriverManager.java:664)

at java.sql.DriverManager.getConnection(DriverManager.java:247)

at org.apache.hadoop.hive.metastore.tools.HiveSchemaHelper.getConnectionToMetastore(HiveSchemaHelper.java:88)

... 11 more

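*** schemaTool failed ***

Problem: the APP user error is still there. After going around in circles, I remembered that my configuration file does not specify a user at all, which is why the default APP user was used.

Fix: add the following configuration to hive-site.xml to specify the root user.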

<property>

<name>javax.jdo.option.ConnectionUserName</name>

<value>root</value>

<description>Username to use against metastore database</description>

</property>

# Re-run initialization

[root@hadoop105 ~]# /opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p0.1605554/lib/hive/bin/schematool -dbType mysql -initSchema --verbose

Error: Table 'CTLGS' already exists (state=42S01,code=1050)

Closing: 0: jdbc:mysql://hadoop106:3306/hive?createDatabaseIfNotExist=true

org.apache.hadoop.hive.metastore.HiveMetaException: Schema initialization FAILED! Metastore state would be inconsistent !!

Underlying cause: java.io.IOException : Schema script failed, errorcode 2

org.apache.hadoop.hive.metastore.HiveMetaException: Schema initialization FAILED! Metastore state would be inconsistent !!

at org.apache.hive.beeline.HiveSchemaTool.doInit(HiveSchemaTool.java:594)

at org.apache.hive.beeline.HiveSchemaTool.doInit(HiveSchemaTool.java:567)

at org.apache.hive.beeline.HiveSchemaTool.main(HiveSchemaTool.java:1517)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.util.RunJar.run(RunJar.java:313)

at org.apache.hadoop.util.RunJar.main(RunJar.java:227)

Caused by: java.io.IOException: Schema script failed, errorcode 2

at org.apache.hive.beeline.HiveSchemaTool.runBeeLine(HiveSchemaTool.java:1226)

at org.apache.hive.beeline.HiveSchemaTool.runBeeLine(HiveSchemaTool.java:1204)

at org.apache.hive.beeline.HiveSchemaTool.doInit(HiveSchemaTool.java:590)

... 8 more

*** schemaTool failed ***

Problem: the error says the table already exists.

Fix: rename it.

mysql> use hive;

Reading table information for completion of table and column names

You can turn off this feature to get a quicker startup with -A

Database changed

mysql> ALTER TABLE CTLGS RENAME TO CTLGS_bak20230606;

Query OK, 0 rows affected (0.01 sec)

# Re-run initialization

[root@hadoop105 ~]# /opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p0.1605554/lib/hive/bin/schematool -dbType mysql -initSchema --verbose

Error: Duplicate entry 'org.apache.hadoop.hive.metastore.model.MNotificationLog' for key 'PRIMARY' (state=23000,code=1062)

Closing: 0: jdbc:mysql://hadoop106:3306/hive?createDatabaseIfNotExist=true

org.apache.hadoop.hive.metastore.HiveMetaException: Schema initialization FAILED! Metastore state would be inconsistent !!

Underlying cause: java.io.IOException : Schema script failed, errorcode 2

org.apache.hadoop.hive.metastore.HiveMetaException: Schema initialization FAILED! Metastore state would be inconsistent !!

at org.apache.hive.beeline.HiveSchemaTool.doInit(HiveSchemaTool.java:594)

at org.apache.hive.beeline.HiveSchemaTool.doInit(HiveSchemaTool.java:567)

at org.apache.hive.beeline.HiveSchemaTool.main(HiveSchemaTool.java:1517)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.util.RunJar.run(RunJar.java:313)

at org.apache.hadoop.util.RunJar.main(RunJar.java:227)

Caused by: java.io.IOException: Schema script failed, errorcode 2

at org.apache.hive.beeline.HiveSchemaTool.runBeeLine(HiveSchemaTool.java:1226)

at org.apache.hive.beeline.HiveSchemaTool.runBeeLine(HiveSchemaTool.java:1204)

at org.apache.hive.beeline.HiveSchemaTool.doInit(HiveSchemaTool.java:590)

... 8 more

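*** schemaTool failed ***

Problem: the initialization tried to insert a row whose primary key already exists. Here the key is 'org.apache.hadoop.hive.metastore.model.MNotificationLog'; it is already in the table, so it cannot be inserted again.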
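The duplicated value is probably a row that the init script inserts itself, so searching the script shows which table the row belongs to. A minimal sketch, with a hypothetical function name `find_in_schema_script`; the script path in the example is the one copied at the start of this step, and the script's contents are not reproduced here.

```shell
# Hypothetical helper: show where a key appears in a schema init script.
# Usage: find_in_schema_script KEY SCRIPT
find_in_schema_script() {
    key="$1"
    script="$2"
    # -n prefixes each match with its line number; -F matches KEY literally.
    grep -nF "$key" "$script"
}

# Example:
# find_in_schema_script 'org.apache.hadoop.hive.metastore.model.MNotificationLog' \
#     /opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p0.1605554/lib/hive/scripts/metastore/upgrade/mysql/hive-schema-3.1.0.mysql.sql
```

The matching INSERT statement names the table in the hive database that already holds the row.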

Fix: delete the duplicate primary key.

# Delete the duplicate primary key

mysql> DELETE FROM mysql.metastore WHERE UUID = 'org.apache.hadoop.hive.metastore.model.MNotificationLog';

ERROR 1146 (42S02): Table 'mysql.metastore' doesn't exist

# The table does not exist

mysql> SHOW TABLES FROM mysql;

# Confirmed that the mysql database really has no metastore table

# Create the metastore table

mysql> CREATE TABLE mysql.metastore (

-> UUID VARCHAR(128) NOT NULL,

-> -- add other columns here

-> PRIMARY KEY (UUID)

-> );

Query OK, 0 rows affected (0.01 sec)

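# Re-run initialization

==== omitted ====

Problem: Error: Table 'CTLGS' already exists shows up again.

Fix: drop that table, or drop the hive database and create it again (only after making a backup), then run the initialization.

# Better to run both statements together, otherwise it never ends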

mysql> DROP TABLE CTLGS;

Query OK, 0 rows affected (0.00 sec)

mysql> DELETE FROM mysql.metastore WHERE UUID = 'org.apache.hadoop.hive.metastore.model.MNotificationLog';

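Query OK, 0 rows affected (0.00 sec)

Finally, run the initialization once more (this time it completes without errors) and restart the CDH hive service.

This resolves the metadata initialization problem.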

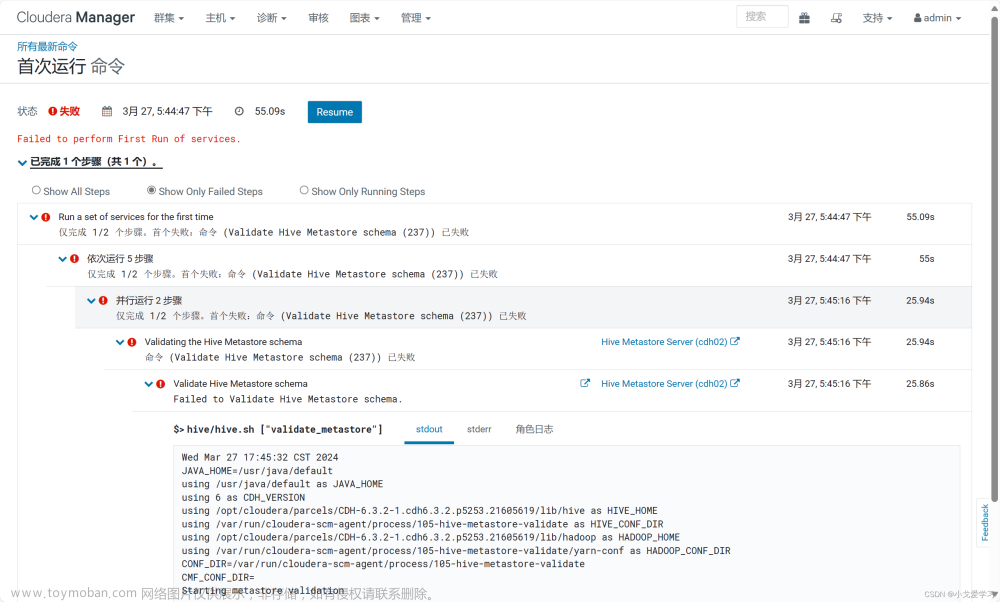

(4) Restart the CDH hive service

The restart itself showed no problem, but the Hive Metastore Server exits on its own after starting. Check the hive log at /var/log/hive/hadoop-cmf-hive-HIVEMETASTORE-hadoop105.log.out
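The metastore log is long and repeats the same stack trace on every retry. A minimal sketch for condensing it counts the distinct ERROR and "Caused by:" headlines. The function name `summarize_log_errors` is hypothetical, and the timestamp pattern assumes the `yyyy-MM-dd HH:mm:ss,SSS` prefix seen in this log.

```shell
# Hypothetical helper: count distinct ERROR and "Caused by:" lines in a log.
# Usage: summarize_log_errors LOGFILE
summarize_log_errors() {
    log="$1"
    # Keep only error headlines, strip the leading timestamp so repeated
    # retries collapse into one entry, then count and sort by frequency.
    grep -E ' ERROR |^Caused by: ' "$log" \
        | sed -E 's/^[0-9]{4}-[0-9]{2}-[0-9]{2} [0-9:,]+ //' \
        | sort | uniq -c | sort -rn
}

# Example:
# summarize_log_errors /var/log/hive/hadoop-cmf-hive-HIVEMETASTORE-hadoop105.log.out
```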

2023-06-06 13:46:22,525 ERROR org.apache.hadoop.hive.metastore.RetryingHMSHandler: [main]: Retrying HMSHandler after 2000 ms (attempt 9 of 10) with error: javax.jdo.JDOFatalUserException: Class "org.apache.hadoop.hive.metastore.model.MVersionTable" field "org.apache.hadoop.hive.metastore.model.MVersionTable.schemaVersionV2" : declared in MetaData, but this field doesnt exist in the class!

at org.datanucleus.api.jdo.NucleusJDOHelper.getJDOExceptionForNucleusException(NucleusJDOHelper.java:616)

at org.datanucleus.api.jdo.JDOQuery.executeInternal(JDOQuery.java:388)

at org.datanucleus.api.jdo.JDOQuery.execute(JDOQuery.java:213)

at org.apache.hadoop.hive.metastore.ObjectStore.getMSchemaVersion(ObjectStore.java:9137)

at org.apache.hadoop.hive.metastore.ObjectStore.getMetaStoreSchemaVersion(ObjectStore.java:9121)

at org.apache.hadoop.hive.metastore.ObjectStore.checkSchema(ObjectStore.java:9078)

at org.apache.hadoop.hive.metastore.ObjectStore.verifySchema(ObjectStore.java:9063)

at sun.reflect.GeneratedMethodAccessor7.invoke(Unknown Source)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.hive.metastore.RawStoreProxy.invoke(RawStoreProxy.java:97)

at com.sun.proxy.$Proxy24.verifySchema(Unknown Source)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.getMSForConf(HiveMetaStore.java:699)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.getMS(HiveMetaStore.java:692)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.createDefaultDB(HiveMetaStore.java:775)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.init(HiveMetaStore.java:540)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.invokeInternal(RetryingHMSHandler.java:147)

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.invoke(RetryingHMSHandler.java:108)

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.<init>(RetryingHMSHandler.java:80)

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.getProxy(RetryingHMSHandler.java:93)

at org.apache.hadoop.hive.metastore.HiveMetaStore.newRetryingHMSHandler(HiveMetaStore.java:8672)

at org.apache.hadoop.hive.metastore.HiveMetaStore.newRetryingHMSHandler(HiveMetaStore.java:8667)

at org.apache.hadoop.hive.metastore.HiveMetaStore.startMetaStore(HiveMetaStore.java:8937)

at org.apache.hadoop.hive.metastore.HiveMetaStore.main(HiveMetaStore.java:8854)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.util.RunJar.run(RunJar.java:313)

at org.apache.hadoop.util.RunJar.main(RunJar.java:227)

NestedThrowablesStackTrace:

Class "org.apache.hadoop.hive.metastore.model.MVersionTable" field "org.apache.hadoop.hive.metastore.model.MVersionTable.schemaVersionV2" : declared in MetaData, but this field doesnt exist in the class!

org.datanucleus.metadata.InvalidClassMetaDataException: Class "org.apache.hadoop.hive.metastore.model.MVersionTable" field "org.apache.hadoop.hive.metastore.model.MVersionTable.schemaVersionV2" : declared in MetaData, but this field doesnt exist in the class!

at org.datanucleus.metadata.ClassMetaData.populateMemberMetaData(ClassMetaData.java:705)

at org.datanucleus.metadata.ClassMetaData.populate(ClassMetaData.java:217)

at org.datanucleus.metadata.MetaDataManagerImpl$1.run(MetaDataManagerImpl.java:2868)

at java.security.AccessController.doPrivileged(Native Method)

at org.datanucleus.metadata.MetaDataManagerImpl.populateAbstractClassMetaData(MetaDataManagerImpl.java:2862)

at org.datanucleus.metadata.MetaDataManagerImpl.getMetaDataForClass(MetaDataManagerImpl.java:1665)

at org.datanucleus.ExecutionContextImpl.hasPersistenceInformationForClass(ExecutionContextImpl.java:5588)

at org.datanucleus.store.rdbms.query.JDOQLQuery.compileInternal(JDOQLQuery.java:256)

at org.datanucleus.store.query.Query.executeQuery(Query.java:1805)

at org.datanucleus.store.query.Query.executeWithArray(Query.java:1733)

at org.datanucleus.store.query.Query.execute(Query.java:1715)

at org.datanucleus.api.jdo.JDOQuery.executeInternal(JDOQuery.java:371)

at org.datanucleus.api.jdo.JDOQuery.execute(JDOQuery.java:213)

at org.apache.hadoop.hive.metastore.ObjectStore.getMSchemaVersion(ObjectStore.java:9137)

at org.apache.hadoop.hive.metastore.ObjectStore.getMetaStoreSchemaVersion(ObjectStore.java:9121)

at org.apache.hadoop.hive.metastore.ObjectStore.checkSchema(ObjectStore.java:9078)

at org.apache.hadoop.hive.metastore.ObjectStore.verifySchema(ObjectStore.java:9063)

at sun.reflect.GeneratedMethodAccessor7.invoke(Unknown Source)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.hive.metastore.RawStoreProxy.invoke(RawStoreProxy.java:97)

at com.sun.proxy.$Proxy24.verifySchema(Unknown Source)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.getMSForConf(HiveMetaStore.java:699)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.getMS(HiveMetaStore.java:692)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.createDefaultDB(HiveMetaStore.java:775)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.init(HiveMetaStore.java:540)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.invokeInternal(RetryingHMSHandler.java:147)

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.invoke(RetryingHMSHandler.java:108)

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.<init>(RetryingHMSHandler.java:80)

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.getProxy(RetryingHMSHandler.java:93)

at org.apache.hadoop.hive.metastore.HiveMetaStore.newRetryingHMSHandler(HiveMetaStore.java:8672)

at org.apache.hadoop.hive.metastore.HiveMetaStore.newRetryingHMSHandler(HiveMetaStore.java:8667)

at org.apache.hadoop.hive.metastore.HiveMetaStore.startMetaStore(HiveMetaStore.java:8937)

at org.apache.hadoop.hive.metastore.HiveMetaStore.main(HiveMetaStore.java:8854)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.util.RunJar.run(RunJar.java:313)

at org.apache.hadoop.util.RunJar.main(RunJar.java:227)

2023-06-06 13:52:26,156 INFO org.apache.hadoop.hive.metastore.HiveMetaStore: [shutdown-hook-0]: Shutting down hive metastore.

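2023-06-06 13:52:26,158 INFO org.apache.hadoop.hive.metastore.HiveMetaStore: [shutdown-hook-0]: SHUTDOWN_MSG:

Likely cause: the Hive Metastore database schema is incompatible with the running Hive version. The version-table definition in the Hive metadata does not match the actual code, so the Hive service cannot start. Deleting the metadata and restarting the service gave the same error.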
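The message says the JDO metadata names a field that the loaded MVersionTable class does not have. One possible explanation, which is an assumption and not something established at this point in the post, is that the metadata file (package.jdo) and the class come from different Hive builds on the same classpath. The sketch below lists which jars in a directory contain a given archive entry, so the two can be compared. The function name `jars_containing` is hypothetical, it needs the `unzip` command, and the `lib` directory in the example is assumed to exist next to `lib313`.

```shell
# Hypothetical helper: list jars in DIR that contain the given archive entry.
# Usage: jars_containing ENTRY DIR
jars_containing() {
    entry="$1"
    dir="$2"
    for jar in "$dir"/*.jar; do
        # An unmatched glob stays literal, so skip anything that is not a file.
        [ -f "$jar" ] || continue
        # unzip -l prints the archive listing; -F matches the entry literally.
        if unzip -l "$jar" 2>/dev/null | grep -qF "$entry"; then
            echo "$jar"
        fi
    done
    return 0
}

# Examples (the lib directory is an assumption, lib313 is from this post):
# jars_containing org/apache/hadoop/hive/metastore/model/MVersionTable.class /opt/cloudera/parcels/CDH/lib/hive/lib
# jars_containing package.jdo /opt/cloudera/parcels/CDH/lib/hive/lib313
```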

Fix: try replacing the MySQL jar.

(5) Test with a different jar

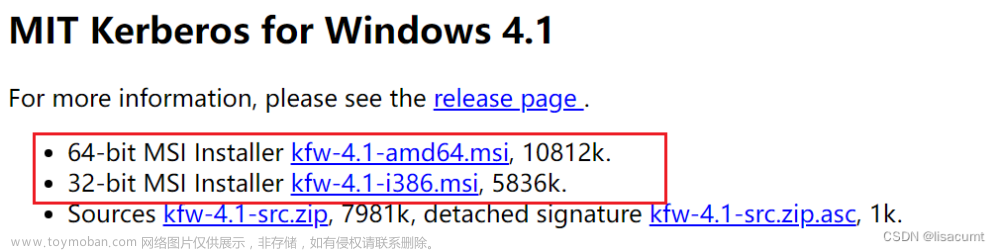

Download: https://mvnrepository.com/artifact/mysql/mysql-connector-java/5.1.49

# The original version is 5.1.47; replace it with 5.1.49

[root@hadoop105 ~]# mv /usr/share/java/mysql-connector-java.jar /usr/share/java/mysql-connector-java.jar_bak

[root@hadoop105 ~]# cp /home/rsjcs/mysql-connector-java-5.1.49.jar /usr/share/java/mysql-connector-java.jar

# Restarting the hive Metastore service gives the following error:

Caused by: org.datanucleus.exceptions.NucleusException: Attempt to invoke the "HikariCP" plugin to create a ConnectionPool gave an error : The specified datastore driver ("com.mysql.jdbc.Driver") was not found in the CLASSPATH. Please check your CLASSPATH specification, and the name of the driver

ERROR org.apache.hadoop.hive.metastore.RetryingHMSHandler: [main]: HMSHandler Fatal error: MetaException(message:Error creating transactional connection factory)

ERROR org.apache.hadoop.hive.metastore.HiveMetaStore: [main]: MetaException(message:Error creating transactional connection factory)

Caused by: org.datanucleus.store.rdbms.connectionpool.DatastoreDriverNotFoundException: The specified datastore driver ("com.mysql.jdbc.Driver") was not found in the CLASSPATH. Please check your CLASSPATH specification, and the name of the driver

Likely cause: the MySQL JDBC driver was not found.
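A quick check for this kind of error is to look for the connector jar in the directories the service may load from. The sketch below reports every `mysql-connector-java*.jar` it finds and whether the current user can read it. The function name `find_mysql_connector` is hypothetical; run it as the user the metastore runs as, since readability is tested for the calling user.

```shell
# Hypothetical helper: report where a MySQL connector jar can be found.
# Usage: find_mysql_connector DIR...
find_mysql_connector() {
    for d in "$@"; do
        for jar in "$d"/mysql-connector-java*.jar; do
            # An unmatched glob stays literal, so skip non-files.
            [ -f "$jar" ] || continue
            if [ -r "$jar" ]; then
                echo "readable: $jar"
            else
                echo "not readable: $jar"
            fi
        done
    done
    return 0
}

# Example with the two locations that appear in this post:
# find_mysql_connector /usr/share/java /opt/cloudera/parcels/CDH/lib/hive/lib313
```

No output for a directory means no connector jar is there at all.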

Fix: re-initialize the metadata.

[root@hadoop105 ~]# /opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p0.1605554/lib/hive/bin/schematool -dbType mysql -initSchema --verbose

Error: Table 'CTLGS' already exists (state=42S01,code=1050)

Closing: 0: jdbc:mysql://hadoop106:3306/hive?createDatabaseIfNotExist=true

org.apache.hadoop.hive.metastore.HiveMetaException: Schema initialization FAILED! Metastore state would be inconsistent !!

Underlying cause: java.io.IOException : Schema script failed, errorcode 2

org.apache.hadoop.hive.metastore.HiveMetaException: Schema initialization FAILED! Metastore state would be inconsistent !!

at org.apache.hive.beeline.HiveSchemaTool.doInit(HiveSchemaTool.java:594)

at org.apache.hive.beeline.HiveSchemaTool.doInit(HiveSchemaTool.java:567)

at org.apache.hive.beeline.HiveSchemaTool.main(HiveSchemaTool.java:1517)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.util.RunJar.run(RunJar.java:313)

at org.apache.hadoop.util.RunJar.main(RunJar.java:227)

Caused by: java.io.IOException: Schema script failed, errorcode 2

at org.apache.hive.beeline.HiveSchemaTool.runBeeLine(HiveSchemaTool.java:1226)

at org.apache.hive.beeline.HiveSchemaTool.runBeeLine(HiveSchemaTool.java:1204)

at org.apache.hive.beeline.HiveSchemaTool.doInit(HiveSchemaTool.java:590)

Problem: the CTLGS table already exists.

Fix: drop the table.

# Drop the CTLGS table

mysql> use hive;

Database changed

mysql> DROP TABLE CTLGS;

ERROR 1217 (23000): Cannot delete or update a parent row: a foreign key constraint fails

# This happens when dropping a table that is referenced by a foreign key constraint

# Look up the foreign keys that reference it

mysql> SELECT TABLE_NAME, CONSTRAINT_NAME

-> FROM INFORMATION_SCHEMA.KEY_COLUMN_USAGE

-> WHERE REFERENCED_TABLE_NAME = 'CTLGS';

+------------+-----------------+

| TABLE_NAME | CONSTRAINT_NAME |

+------------+-----------------+

| DBS | CTLG_FK1 |

+------------+-----------------+

1 row in set (0.03 sec)

# Drop the foreign key constraint on the referencing table (back up first if the data matters)

mysql> alter table DBS drop foreign key CTLG_FK1;

Query OK, 0 rows affected (0.00 sec)

Records: 0 Duplicates: 0 Warnings: 0

# Drop the table again

mysql> DROP TABLE CTLGS;

Query OK, 0 rows affected (0.01 sec)

mysql> DELETE FROM mysql.metastore WHERE UUID = 'org.apache.hadoop.hive.metastore.model.MNotificationLog';

Query OK, 0 rows affected (0.00 sec)

# Initialize again

[root@hadoop105 ~]# /opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p0.1605554/lib/hive/bin/schematool -dbType mysql -initSchema --verbose

Error: Duplicate entry 'org.apache.hadoop.hive.metastore.model.MNotificationLog' for key 'PRIMARY' (state=23000,code=1062)

Closing: 0: jdbc:mysql://hadoop106:3306/hive?createDatabaseIfNotExist=true

org.apache.hadoop.hive.metastore.HiveMetaException: Schema initialization FAILED! Metastore state would be inconsistent !!

Underlying cause: java.io.IOException : Schema script failed, errorcode 2

org.apache.hadoop.hive.metastore.HiveMetaException: Schema initialization FAILED! Metastore state would be inconsistent !!

at org.apache.hive.beeline.HiveSchemaTool.doInit(HiveSchemaTool.java:594)

at org.apache.hive.beeline.HiveSchemaTool.doInit(HiveSchemaTool.java:567)

at org.apache.hive.beeline.HiveSchemaTool.main(HiveSchemaTool.java:1517)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.util.RunJar.run(RunJar.java:313)

at org.apache.hadoop.util.RunJar.main(RunJar.java:227)

Caused by: java.io.IOException: Schema script failed, errorcode 2

at org.apache.hive.beeline.HiveSchemaTool.runBeeLine(HiveSchemaTool.java:1226)

at org.apache.hive.beeline.HiveSchemaTool.runBeeLine(HiveSchemaTool.java:1204)

at org.apache.hive.beeline.HiveSchemaTool.doInit(HiveSchemaTool.java:590)

... 8 more

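*** schemaTool failed ***

Problem: this is turning into an endless loop as well; the error now points at data that already exists in SEQUENCE_TABLE.

Fix: to avoid running into one error after another, I dropped the hive database and created a new, empty hive database for the initialization (make sure you have a backup; skip that if you already made one earlier).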
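The reset described here (back up, drop, re-create, initialize) can be sketched as a dry-run script. It prints the commands by default and only executes them when `DRY_RUN=0` is set. The function names `run` and `reset_metastore_db` and the environment variable names are hypothetical; the host, user, database, backup file, and schematool path are the values used in this post.

```shell
# Hypothetical dry-run wrapper: print the command unless DRY_RUN=0 is set.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        printf '+ %s\n' "$*"
    else
        "$@"
    fi
}

# Back up, drop and re-create the metastore database, then re-initialize it.
# Defaults come from this post; override them through the environment.
reset_metastore_db() {
    db_host="${DB_HOST:-hadoop106}"
    db_user="${DB_USER:-root}"
    db_name="${DB_NAME:-hive}"
    backup="${BACKUP:-./hive.sql}"
    schematool="${SCHEMATOOL:-/opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p0.1605554/lib/hive/bin/schematool}"

    # -p with no value makes the MySQL clients prompt for the password.
    run mysqldump -h "$db_host" -u "$db_user" -p --result-file="$backup" "$db_name" || return 1
    run mysql -h "$db_host" -u "$db_user" -p -e "DROP DATABASE $db_name" || return 1
    run mysql -h "$db_host" -u "$db_user" -p \
        -e "CREATE DATABASE $db_name DEFAULT CHARSET utf8 COLLATE utf8_general_ci" || return 1
    run "$schematool" -dbType mysql -initSchema --verbose
}

# Print the four commands without executing anything:
# reset_metastore_db
# Execute them for real:
# DRY_RUN=0 reset_metastore_db
```

Stopping at the first failed step keeps the database from being dropped when the backup did not succeed.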

Database changed

mysql> drop database hive;

Query OK, 74 rows affected (0.25 sec)

mysql> CREATE DATABASE hive DEFAULT CHARSET utf8 COLLATE utf8_general_ci;

Query OK, 1 row affected (0.00 sec)

Problem: initialization now completes normally, but starting the service still reports:

Caused by: org.datanucleus.exceptions.NucleusException: Attempt to invoke the "HikariCP" plugin to create a ConnectionPool gave an error : The specified datastore driver ("com.mysql.jdbc.Driver") was not found in the CLASSPATH. Please check your CLASSPATH specification, and the name of the driver

ERROR org.apache.hadoop.hive.metastore.RetryingHMSHandler: [main]: HMSHandler Fatal error: MetaException(message:Error creating transactional connection factory)

ERROR org.apache.hadoop.hive.metastore.HiveMetaStore: [main]: MetaException(message:Error creating transactional connection factory)

Caused by: org.datanucleus.store.rdbms.connectionpool.DatastoreDriverNotFoundException: The specified datastore driver ("com.mysql.jdbc.Driver") was not found in the CLASSPATH. Please check your CLASSPATH specification, and the name of the driver

Remedy: put mysql-connector-java.jar into the hive/lib313 directory and grant permissions on it.

chmod 777 /opt/cloudera/parcels/CDH/lib/hive/lib313/mysql-connector-java.jar

Problem: after restarting, the original error is back...

ERROR org.apache.hadoop.hive.metastore.HiveMetaStore: [main]: Metastore Thrift Server threw an exception...

org.apache.hadoop.hive.metastore.api.MetaException: Class "org.apache.hadoop.hive.metastore.model.MVersionTable" field "org.apache.hadoop.hive.metastore.model.MVersionTable.schemaVersionV2" : declared in MetaData, but this field doesnt exist in the class!

Caused by: javax.jdo.JDOFatalUserException: Class "org.apache.hadoop.hive.metastore.model.MVersionTable" field "org.apache.hadoop.hive.metastore.model.MVersionTable.schemaVersionV2" : declared in MetaData, but this field doesnt exist in the class!

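At this point it is clear that the error has nothing to do with the MySQL jar. Everything above was pure experimentation; in an actual upgrade you can skip the step of replacing the MySQL jar.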
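For the DatastoreDriverNotFoundException seen above, a quick way to tell whether a given lib directory holds a MySQL driver jar at all can be sketched as follows. The function name has_mysql_driver_jar is hypothetical, and matching on the file name mysql-connector*.jar is an assumption; it does not prove that the jar is on the classpath the service really uses.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeeds if the directory contains at least one
# file whose name matches mysql-connector*.jar (symlinks are followed).
has_mysql_driver_jar() {
    local lib_dir="$1" jar
    for jar in "$lib_dir"/mysql-connector*.jar; do
        # If nothing matches, the pattern stays literal and -f fails.
        [ -f "$jar" ] && return 0
    done
    return 1
}

# Example call (commented out so the block has no side effects):
# has_mysql_driver_jar /opt/cloudera/parcels/CDH/lib/hive/lib313 || echo "driver jar missing"
```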

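So could it be that the hive metastore version and the hive schema in MySQL do not match? The following command shows that the version is already 3.1.3, and the hive metadata has been re-initialized several times by now as well.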

[root@hadoop105 ~]# hive --service metastore --version

WARNING: Use "yarn jar" to launch YARN applications.

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p0.1605554/jars/log4j-slf4j-impl-2.8.2.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p0.1605554/jars/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Hive 3.1.3

Git git://MacBook-Pro.fios-router.home/Users/ngangam/commit/hive -r 4df4d75bf1e16fe0af75aad0b4179c34c07fc975

Compiled by ngangam on Sun Apr 3 16:58:16 EDT 2022

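From source with checksum 5da234766db5dfbe3e92926c9bbab2af

I then wondered whether the old hive-site.xml configuration was the cause, so I replaced the old hive-site.xml with the template configuration file from the upgraded hive-3.1.3. The error stayed exactly the same. By this point essentially everything that could be tested had been tested, and there seemed to be nothing left to try...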
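The version output above is buried under the yarn and SLF4J warnings, which makes it awkward to compare across hosts or in scripts. A small sketch for pulling out just the version number follows; the function name extract_hive_version is hypothetical, and it assumes the version line has the form "Hive X.Y.Z" as in the output shown above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: reads command output on stdin and prints the
# numeric version from the first line that starts with "Hive <digits>".
extract_hive_version() {
    sed -n 's/^Hive \([0-9][0-9.]*\).*$/\1/p' | head -n 1
}

# Example call (commented out so the block has no side effects):
# hive --service metastore --version 2>&1 | extract_hive_version
```

The printed value can then be compared by eye with the SCHEMA_VERSION value stored in the VERSION table of the hive database in MySQL. The two need not be identical strings, so treat a difference as a hint to investigate rather than as proof of a mismatch.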

------------------------------------------------------This is the turning point---------------------------------------------------------

(6) Replacing the executable files

What about starting hive metastore and hiveserver2 on their own from the shell command line?

/opt/cloudera/parcels/CDH/lib/hive/bin/hive --service metastore

# The error turns out to be the same:

Class "org.apache.hadoop.hive.metastore.model.MVersionTable" field "org.apache.hadoop.hive.metastore.model.MVersionTable.schemaVersionV2" : declared in MetaData, but this field doesnt exist in the class!

org.datanucleus.metadata.InvalidClassMetaDataException: Class "org.apache.hadoop.hive.metastore.model.MVersionTable" field "org.apache.hadoop.hive.metastore.model.MVersionTable.schemaVersionV2" : declared in MetaData, but this field doesnt exist in the class

Could it be that some of the files under bin also need to change? So:

# Back up the original bin directory

[root@hadoop105 hive]# mv bin bin_bak

# Bring over the bin directory from the previously extracted hive-3.1.3 (note that mv moves it rather than copying it)

[root@hadoop105 hive]# mv /opt/cloudera/hive/bin ./

# Then try starting the hive metastore

[root@hadoop33 bin]# ./hive --service metastore

2023-06-08 09:24:45: Starting Hive Metastore Server

WARNING: Use "yarn jar" to launch YARN applications.

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p0.1605554/jars/log4j-slf4j-impl-2.8.2.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p0.1605554/jars/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Exception in thread "org.apache.hadoop.hive.metastore.metrics.JvmPauseMonitor$Monitor@2f89bf28" java.lang.NoSuchMethodError: com.google.common.base.Stopwatch.createUnstarted()Lcom/google/common/base/Stopwatch;

at org.apache.hadoop.hive.metastore.metrics.JvmPauseMonitor$Monitor.run(JvmPauseMonitor.java:174)

at java.lang.Thread.run(Thread.java:748)

Thu Jun 08 09:24:49 CST 2023 WARN: Establishing SSL connection without server's identity verification is not recommended. According to MySQL 5.5.45+, 5.6.26+ and 5.7.6+ requirements SSL connection must be established by default if explicit option isn't set. For compliance with existing applications not using SSL the verifyServerCertificate property is set to 'false'. You need either to explicitly disable SSL by setting useSSL=false, or set useSSL=true and provide truststore for server certificate verification.

Thu Jun 08 09:24:50 CST 2023 WARN: Establishing SSL connection without server's identity verification is not recommended. According to MySQL 5.5.45+, 5.6.26+ and 5.7.6+ requirements SSL connection must be established by default if explicit option isn't set. For compliance with existing applications not using SSL the verifyServerCertificate property is set to 'false'. You need either to explicitly disable SSL by setting useSSL=false, or set useSSL=true and provide truststore for server certificate verification.

Thu Jun 08 09:24:50 CST 2023 WARN: Establishing SSL connection without server's identity verification is not recommended. According to MySQL 5.5.45+, 5.6.26+ and 5.7.6+ requirements SSL connection must be established by default if explicit option isn't set. For compliance with existing applications not using SSL the verifyServerCertificate property is set to 'false'. You need either to explicitly disable SSL by setting useSSL=false, or set useSSL=true and provide truststore for server certificate verification.

Thu Jun 08 09:24:50 CST 2023 WARN: Establishing SSL connection without server's identity verification is not recommended. According to MySQL 5.5.45+, 5.6.26+ and 5.7.6+ requirements SSL connection must be established by default if explicit option isn't set. For compliance with existing applications not using SSL the verifyServerCertificate property is set to 'false'. You need either to explicitly disable SSL by setting useSSL=false, or set useSSL=true and provide truststore for server certificate verification.

Thu Jun 08 09:24:50 CST 2023 WARN: Establishing SSL connection without server's identity verification is not recommended. According to MySQL 5.5.45+, 5.6.26+ and 5.7.6+ requirements SSL connection must be established by default if explicit option isn't set. For compliance with existing applications not using SSL the verifyServerCertificate property is set to 'false'. You need either to explicitly disable SSL by setting useSSL=false, or set useSSL=true and provide truststore for server certificate verification.

Thu Jun 08 09:24:50 CST 2023 WARN: Establishing SSL connection without server's identity verification is not recommended. According to MySQL 5.5.45+, 5.6.26+ and 5.7.6+ requirements SSL connection must be established by default if explicit option isn't set. For compliance with existing applications not using SSL the verifyServerCertificate property is set to 'false'. You need either to explicitly disable SSL by setting useSSL=false, or set useSSL=true and provide truststore for server certificate verification.

Thu Jun 08 09:24:50 CST 2023 WARN: Establishing SSL connection without server's identity verification is not recommended. According to MySQL 5.5.45+, 5.6.26+ and 5.7.6+ requirements SSL connection must be established by default if explicit option isn't set. For compliance with existing applications not using SSL the verifyServerCertificate property is set to 'false'. You need either to explicitly disable SSL by setting useSSL=false, or set useSSL=true and provide truststore for server certificate verification.

Thu Jun 08 09:24:50 CST 2023 WARN: Establishing SSL connection without server's identity verification is not recommended. According to MySQL 5.5.45+, 5.6.26+ and 5.7.6+ requirements SSL connection must be established by default if explicit option isn't set. For compliance with existing applications not using SSL the verifyServerCertificate property is set to 'false'. You need either to explicitly disable SSL by setting useSSL=false, or set useSSL=true and provide truststore for server certificate verification.

Thu Jun 08 09:24:50 CST 2023 WARN: Establishing SSL connection without server's identity verification is not recommended. According to MySQL 5.5.45+, 5.6.26+ and 5.7.6+ requirements SSL connection must be established by default if explicit option isn't set. For compliance with existing applications not using SSL the verifyServerCertificate property is set to 'false'. You need either to explicitly disable SSL by setting useSSL=false, or set useSSL=true and provide truststore for server certificate verification.

Thu Jun 08 09:24:50 CST 2023 WARN: Establishing SSL connection without server's identity verification is not recommended. According to MySQL 5.5.45+, 5.6.26+ and 5.7.6+ requirements SSL connection must be established by default if explicit option isn't set. For compliance with existing applications not using SSL the verifyServerCertificate property is set to 'false'. You need either to explicitly disable SSL by setting useSSL=false, or set useSSL=true and provide truststore for server certificate verification.

Thu Jun 08 09:24:50 CST 2023 WARN: Establishing SSL connection without server's identity verification is not recommended. According to MySQL 5.5.45+, 5.6.26+ and 5.7.6+ requirements SSL connection must be established by default if explicit option isn't set. For compliance with existing applications not using SSL the verifyServerCertificate property is set to 'false'. You need either to explicitly disable SSL by setting useSSL=false, or set useSSL=true and provide truststore for server certificate verification.

As expected, startup works this way. I then quit and went to the CDH platform to start the hive metastore service, but this time the start failed immediately without producing any log output, and the log file /var/log/hive/hadoop-cmf-hive-HIVEMETASTORE-hadoop105.log.out contained nothing either. So it is certain that some configuration needs to be changed. When I replaced only the hive executable under hive/bin/, the error above still occurred, while replacing the whole directory did not produce it. Yet starting the metastore should only involve the hive executable and the hive-site.xml configuration file.
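Since step (6) swaps a whole directory inside the parcel, it helps to keep the operation reversible. The sketch below is one way to do that under a few assumptions: the function names swap_bin_dir and restore_bin_dir are hypothetical, the backup is kept as bin_bak next to bin (as in the commands above), and the new directory is copied with cp -a instead of moved so that the extracted hive-3.1.3 tree stays intact.

```shell
#!/usr/bin/env bash
# Hypothetical helpers, not part of Hive or CDH.

# swap_bin_dir HIVE_HOME NEW_BIN
# Moves HIVE_HOME/bin to HIVE_HOME/bin_bak, then copies NEW_BIN to HIVE_HOME/bin.
swap_bin_dir() {
    local hive_home="$1" new_bin="$2"
    local backup="$hive_home/bin_bak"
    if [ ! -d "$hive_home/bin" ] || [ ! -d "$new_bin" ]; then
        echo "missing directory: $hive_home/bin or $new_bin" >&2
        return 1
    fi
    if [ -e "$backup" ]; then
        echo "refusing to overwrite existing backup: $backup" >&2
        return 1
    fi
    mv "$hive_home/bin" "$backup" || return 1
    # Copy (not move) so the source tree can be reused later.
    if ! cp -a "$new_bin" "$hive_home/bin"; then
        rm -rf "$hive_home/bin"
        mv "$backup" "$hive_home/bin"
        return 1
    fi
}

# restore_bin_dir HIVE_HOME
# Puts the backed-up bin directory back in place.
restore_bin_dir() {
    local hive_home="$1"
    [ -d "$hive_home/bin_bak" ] || return 1
    rm -rf "$hive_home/bin"
    mv "$hive_home/bin_bak" "$hive_home/bin"
}

# Example calls (commented out so the block has no side effects):
# swap_bin_dir /opt/cloudera/parcels/CDH/lib/hive /opt/cloudera/hive/bin
# restore_bin_dir /opt/cloudera/parcels/CDH/lib/hive
```

Refusing to overwrite an existing bin_bak is deliberate: running the swap twice would otherwise replace the only copy of the original CDH scripts.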

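(7) Restoring log output

Looking closely at the contents of the hive executable, I saw that it sources an environment file, hive-env.sh. Searching for it shows that the file is /etc/hive/conf.cloudera.hive/hive-env.sh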

[root@hadoop105 bin]# find / -name hive-env.sh

/run/cloudera-scm-agent/process/ccdeploy_hive-conf_etchiveconf.cloudera.hive_-3511402930136781029/hive-conf/hive-env.sh