DataX3.0系列文章

1、datax3.0部署与验证

2、mysql相关同步-mysql同步到mysql、mysql和hdfs相互同步

3、oracle相关同步-oracle到hdfs

4、sybase相关同步-sybase到hdfs

5、ETL工具的比较(DataPipeline,Kettle,Talend,Informatica,Datax ,Oracle Goldeng

本文介绍sybase的相关同步,sybase到hdfs同步。

本文分为三部分,。

本文的前提:数据库对应的表已经建好。

一、Sybase同步介绍

同步sybase数据库的数据,需要使用通用数据库同步的reader和writer,即RDBMSReader和RDBMWriter。

RDBMSReader插件实现了从RDBMS读取数据。在底层实现上,RDBMSReader通过JDBC连接远程RDBMS数据库,并执行相应的sql语句将数据从RDBMS库中SELECT出来。目前支持达梦、db2、PPAS、Sybase数据库的读取。RDBMSReader是一个通用的关系数据库读插件,您可以通过注册数据库驱动等方式增加任意多样的关系数据库读支持。

RDBMSReader通过JDBC连接器连接到远程的RDBMS数据库,并根据用户配置的信息生成查询SELECT SQL语句并发送到远程RDBMS数据库,并将该SQL执行返回结果使用DataX自定义的数据类型拼装为抽象的数据集,并传递给下游Writer处理。

对于用户配置Table、Column、Where的信息,RDBMSReader将其拼接为SQL语句发送到RDBMS数据库;对于用户配置querySql信息,RDBMS直接将其发送到RDBMS数据库。

配置一个从RDBMS数据库同步抽取数据作业文章来源:https://www.toymoban.com/news/detail-478856.html

{

"job": {

"setting": {

"speed": {

"byte": 1048576

},

"errorLimit": {

"record": 0,

"percentage": 0.02

}

},

"content": [

{

"reader": {

"name": "rdbmsreader",

"parameter": {

"username": "xxx",

"password": "xxx",

"column": [

"id",

"name"

],

"splitPk": "pk",

"connection": [

{

"table": [

"table"

],

"jdbcUrl": [

"jdbc:dm://ip:port/database"

]

}

],

"fetchSize": 1024,

"where": "1 = 1"

}

},

"writer": {

"name": "streamwriter",

"parameter": {

"print": true

}

}

}

]

}

}

1、参数说明

1)、jdbcUrl

- 描述:描述的是到对端数据库的JDBC连接信息,jdbcUrl按照RDBMS官方规范,并可以填写连接附件控制信息。请注意不同的数据库jdbc的格式是不同的,DataX会根据具体jdbc的格式选择合适的数据库驱动完成数据读取。

达梦 jdbc:dm://ip:port/database

db2格式 jdbc:db2://ip:port/database

PPAS格式 jdbc:edb://ip:port/database - rdbmswriter如何增加新的数据库支持

进入rdbmsreader对应目录,这里${DATAX_HOME}为DataX主目录,即: ${DATAX_HOME}/plugin/reader/rdbmsreader

在rdbmsreader插件目录下有plugin.json配置文件,在此文件中注册您具体的数据库驱动,具体放在drivers数组中。rdbmsreader插件在任务执行时会动态选择合适的数据库驱动连接数据库。

{

"name": "rdbmsreader",

"class": "com.alibaba.datax.plugin.reader.rdbmsreader.RdbmsReader",

"description": "useScene: prod. mechanism: Jdbc connection using the database, execute select sql, retrieve data from the ResultSet. warn: The more you know about the database, the less problems you encounter.",

"developer": "alibaba",

"drivers": [

"dm.jdbc.driver.DmDriver",

"com.ibm.db2.jcc.DB2Driver",

"com.sybase.jdbc3.jdbc.SybDriver",

"com.edb.Driver",

"com.sybase.jdbc4.jdbc.SybDriver"

]

}

- 在rdbmsreader插件目录下有libs子目录,您需要将您具体的数据库驱动放到libs目录下。系统部署好了后的sybase驱动可能不一定适用所有的sybase数据库,本示例是使用新增的sybase驱动

$tree

.

|-- libs

| |-- Dm7JdbcDriver16.jar

| |-- commons-collections-3.0.jar

| |-- commons-io-2.4.jar

| |-- commons-lang3-3.3.2.jar

| |-- commons-math3-3.1.1.jar

| |-- datax-common-0.0.1-SNAPSHOT.jar

| |-- datax-service-face-1.0.23-20160120.024328-1.jar

| |-- db2jcc4.jar

| |-- druid-1.0.15.jar

| |-- edb-jdbc16.jar

| |-- fastjson-1.1.46.sec01.jar

| |-- guava-r05.jar

| |-- hamcrest-core-1.3.jar

| |-- jconn3-1.0.0-SNAPSHOT.jar

| |-- logback-classic-1.0.13.jar

| |-- logback-core-1.0.13.jar

| |-- plugin-rdbms-util-0.0.1-SNAPSHOT.jar

| |-- jconn4.jar

| `-- slf4j-api-1.7.10.jar

|-- plugin.json

|-- plugin_job_template.json

`-- rdbmsreader-0.0.1-SNAPSHOT.jar

必选:是

默认值:无

二、sybase到hdfs

1、配置文件模板

python ../bin/datax.py -r rdbmsreader -w hdfswriter

[root@bd-node-05 job]# python ../bin/datax.py -r rdbmsreader -w hdfswriter

DataX (DATAX-OPENSOURCE-3.0), From Alibaba !

Copyright (C) 2010-2017, Alibaba Group. All Rights Reserved.

Please refer to the rdbmsreader document:

https://github.com/alibaba/DataX/blob/master/rdbmsreader/doc/rdbmsreader.md

Please refer to the hdfswriter document:

https://github.com/alibaba/DataX/blob/master/hdfswriter/doc/hdfswriter.md

Please save the following configuration as a json file and use

python {DATAX_HOME}/bin/datax.py {JSON_FILE_NAME}.json

to run the job.

{

"job": {

"content": [

{

"reader": {

"name": "rdbmsreader",

"parameter": {

"column": [],

"connection": [

{

"jdbcUrl": [],

"table": []

}

],

"password": "",

"username": "",

"where": ""

}

},

"writer": {

"name": "hdfswriter",

"parameter": {

"column": [],

"compress": "",

"defaultFS": "",

"fieldDelimiter": "",

"fileName": "",

"fileType": "",

"path": "",

"writeMode": ""

}

}

}

],

"setting": {

"speed": {

"channel": ""

}

}

}

2、编写配置文件

{

"job": {

"content": [

{

"reader": {

"name": "rdbmsreader",

"parameter": {

"connection": [

{

"jdbcUrl": ["jdbc:sybase:Tds:192.168.*.*:4100/DATABASE=test" ],

"querySql": [ "select * from dbo.t_k"]

}

],

"password": "×××××",

"username": "etl_Bigdatatest"

}

},

"writer": {

"name": "hdfswriter",

"parameter": {

"defaultFS": "hdfs://hdfsHA",

"hadoopConfig":{

"dfs.nameservices": "hdfsHA",

"dfs.ha.namenodes.hdfsHA": "namenode36,namenode38",

"dfs.namenode.rpc-address.hdfsHA.namenode36": "bd-node-02:8020",

"dfs.namenode.rpc-address.hdfsHA.namenode38": "bd-node-03:8020",

"dfs.client.failover.proxy.provider.hdfsHA": "org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider"

},

"column": [

{ "name": "lsh","type": "STRING"},

{ "name": "djy_lsh","type": "string"},

{ "name": "dshh","type": "string"},

{ "name": "dzd_num","type": "string"},

{ "name": "force","type": "STRING"},

{ "name": "dddbh","type": "string"},

{ "name": "dkhzh","type": "string"},

{ "name": "ddgje","type": "double"},

{ "name": "dtkje","type": "double"},

{ "name": "dj_dshh","type": "string"},

{ "name": "dj_dzd_num","type": "string"},

{ "name": "ddjry","type": "STRING"},

{ "name": "ddjrq","type": "date"},

{ "name": "hd_dshh","type": "string"},

{ "name": "dhdry","type": "string"},

{ "name": "dhdrq","type": "date"},

{ "name": "hd_dzd_num","type": "string"},

{ "name": "flag","type": "string"},

{ "name": "dqr","type": "STRING"},

{ "name": "Sret","type": "string"},

{ "name": "dbz","type": "string"},

{ "name": "dmsg","type": "string"},

{ "name": "dhdrq","type": "string"},

{ "name": "dxgdddbh","type": "string"},

{ "name": "tklx","type": "string"},

{ "name": "dxgkhzh","type": "string"}

],

"compress": "",

"fieldDelimiter": ",",

"fileName": "t_k",

"fileType": "text",

"path": "/user/hive/warehouse/test.db/t_k",

"writeMode": "append"

}

}

}

],

"setting": {

"speed": {

"channel": 6

}

}

}

}

3、提交任务

python ../bin/datax.py --jvm="-Xms32G -Xmx32G" t_k.json

4、验证结果

[root@bd-node-05 job]# python ../bin/datax.py --jvm="-Xms32G -Xmx32G" t_k.json

DataX (DATAX-OPENSOURCE-3.0), From Alibaba !

Copyright (C) 2010-2017, Alibaba Group. All Rights Reserved.

2023-04-06 10:01:09.372 [main] INFO VMInfo - VMInfo# operatingSystem class => sun.management.OperatingSystemImpl

2023-04-06 10:01:09.381 [main] INFO Engine - the machine info =>

osInfo: Oracle Corporation 1.8 25.181-b13

jvmInfo: Linux amd64 3.10.0-1160.88.1.el7.x86_64

cpu num: 32

totalPhysicalMemory: -0.00G

freePhysicalMemory: -0.00G

maxFileDescriptorCount: -1

currentOpenFileDescriptorCount: -1

GC Names [PS MarkSweep, PS Scavenge]

MEMORY_NAME | allocation_size | init_size

PS Eden Space | 8,192.50MB | 8,192.50MB

Code Cache | 240.00MB | 2.44MB

PS Survivor Space | 1,365.00MB | 1,365.00MB

PS Old Gen | 21,845.50MB | 21,845.50MB

Metaspace | -0.00MB | 0.00MB

......

2023-04-06 10:01:41.520 [job-0] INFO JobContainer - DataX Reader.Job [rdbmsreader] do post work.

2023-04-06 10:01:41.520 [job-0] INFO JobContainer - DataX jobId [0] completed successfully.

2023-04-06 10:01:41.522 [job-0] INFO HookInvoker - No hook invoked, because base dir not exists or is a file: /usr/local/datax/hook

2023-04-06 10:01:41.626 [job-0] INFO JobContainer -

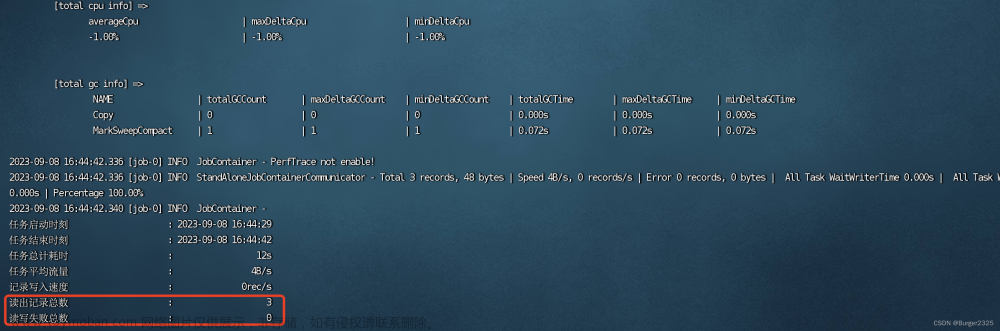

[total cpu info] =>

averageCpu | maxDeltaCpu | minDeltaCpu

-1.00% | -1.00% | -1.00%

[total gc info] =>

NAME | totalGCCount | maxDeltaGCCount | minDeltaGCCount | totalGCTime | maxDeltaGCTime | minDeltaGCTime

PS MarkSweep | 1 | 1 | 1 | 0.067s | 0.067s | 0.067s

PS Scavenge | 3 | 3 | 3 | 0.045s | 0.045s | 0.045s

2023-04-06 10:01:41.626 [job-0] INFO JobContainer - PerfTrace not enable!

2023-04-06 10:01:41.627 [job-0] INFO StandAloneJobContainerCommunicator - Total 1033495 records, 191629349 bytes | Speed 6.09MB/s, 34449 records/s | Error 0 records, 0 bytes | All Task WaitWriterTime 4.336s | All Task WaitReaderTime 0.441s | Percentage 100.00%

2023-04-06 10:01:41.632 [job-0] INFO JobContainer -

任务启动时刻 : 2023-04-06 10:01:09

任务结束时刻 : 2023-04-06 10:01:41

任务总计耗时 : 32s

任务平均流量 : 6.09MB/s

记录写入速度 : 34449rec/s

读出记录总数 : 1033495

读写失败总数 : 0

至此,完成了sybase到hdfs的同步。文章来源地址https://www.toymoban.com/news/detail-478856.html

到了这里,关于4、sybase相关同步-sybase通过datax同步到hdfs的文章就介绍完了。如果您还想了解更多内容,请在右上角搜索TOY模板网以前的文章或继续浏览下面的相关文章,希望大家以后多多支持TOY模板网!