1 Deploy Elasticsearch

1.1 Deploy ECK

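Elastic Cloud on Kubernetes (ECK) is an orchestration product built on the Kubernetes Operator pattern. It lets users provision, manage, and run Elasticsearch clusters on Kubernetes. ECK's goal is to provide a SaaS-like experience for Elastic products and solutions on Kubernetes.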

# Official documentation

https://www.elastic.co/guide/en/cloud-on-k8s/1.9/k8s-deploy-eck.html

Kubernetes version used in this article:

root@sz-k8s-master-01:~# kubectl version
Client Version: version.Info{Major:"1", Minor:"14", GitVersion:"v1.14.1", GitCommit:"b7394102d6ef778017f2ca4046abbaa23b88c290", GitTreeState:"clean", BuildDate:"2019-04-08T17:11:31Z", GoVersion:"go1.12.1", Compiler:"gc", Platform:"linux/amd64"}
Server Version: version.Info{Major:"1", Minor:"14+", GitVersion:"v1.14.1-jobgc-dirty", GitCommit:"4c19bc5525dc468017cc2cf14585537ed24e7d4c", GitTreeState:"dirty", BuildDate:"2020-02-21T04:47:43Z", GoVersion:"go1.12.1", Compiler:"gc", Platform:"linux/amd64"}
root@sz-k8s-master-01:~#

Deploy ECK

1) Kubernetes versions earlier than 1.16 must use the legacy version of the manifests. Install the legacy CRDs first (CRDs are cluster-scoped, so the -n logs flag has no effect on them):

kubectl create -f https://download.elastic.co/downloads/eck/1.9.1/crds-legacy.yaml -n logs

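2) Download the YAML file and change its namespace to logs (this article assumes the logs namespace already exists). If you do not need a custom namespace, skip the edit and apply the file as-is; the operator then runs in elastic-system, so use -n elastic-system instead of -n logs in the operator commands that follow.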

wget https://download.elastic.co/downloads/eck/1.9.1/operator-legacy.yaml

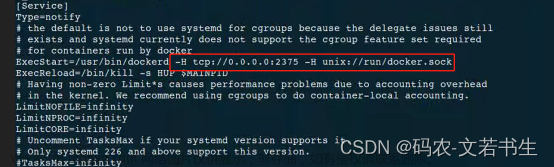

3) Change the operator image to the Docker Hub copy, elastic/eck-operator:1.9.1

# https://hub.docker.com/r/elastic/eck-operator/tags
docker pull elastic/eck-operator:1.9.1

This is needed because the manifest's default image (docker.elastic.co/eck/eck-operator:1.9.1) could not be pulled from docker.elastic.co in this environment:

root@sz-k8s-master-01:~# docker pull docker.elastic.co/eck-operator:1.9.1
Error response from daemon: Get https://docker.elastic.co/v2/: x509: certificate signed by unknown authority
root@sz-k8s-master-01:~#

The modified file looks like this. Note that the Namespace object at the top still creates elastic-system; it stays unused because every other resource now lives in logs.

root@sz-k8s-master-01:~# cat operator-legacy.yaml
# Source: eck-operator/templates/operator-namespace.yaml
apiVersion: v1
kind: Namespace
metadata:
  name: elastic-system
  labels:
    name: elastic-system
---
# Source: eck-operator/templates/service-account.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: elastic-operator
  namespace: logs
  labels:
    control-plane: elastic-operator
    app.kubernetes.io/version: "1.9.1"
---
# Source: eck-operator/templates/webhook.yaml
apiVersion: v1
kind: Secret
metadata:
  name: elastic-webhook-server-cert
  namespace: logs
  labels:
    control-plane: elastic-operator
    app.kubernetes.io/version: "1.9.1"
---
# Source: eck-operator/templates/configmap.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: elastic-operator
  namespace: logs
  labels:
    control-plane: elastic-operator
    app.kubernetes.io/version: "1.9.1"
data:
  eck.yaml: |-
    log-verbosity: 0
    metrics-port: 0
    container-registry: docker.elastic.co
    max-concurrent-reconciles: 3
    ca-cert-validity: 8760h
    ca-cert-rotate-before: 24h
    cert-validity: 8760h
    cert-rotate-before: 24h
    set-default-security-context: true
    kube-client-timeout: 60s
    elasticsearch-client-timeout: 180s
    disable-telemetry: false
    distribution-channel: all-in-one
    validate-storage-class: true
    enable-webhook: true
    webhook-name: elastic-webhook.k8s.elastic.co
---
# Source: eck-operator/templates/cluster-roles.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: elastic-operator
  labels:
    control-plane: elastic-operator
    app.kubernetes.io/version: "1.9.1"
rules:
- apiGroups:
  - "authorization.k8s.io"
  resources:
  - subjectaccessreviews
  verbs:
  - create
- apiGroups:
  - ""
  resources:
  - endpoints
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - pods
  - events
  - persistentvolumeclaims
  - secrets
  - services
  - configmaps
  verbs:
  - get
  - list
  - watch
  - create
  - update
  - patch
  - delete
- apiGroups:
  - apps
  resources:
  - deployments
  - statefulsets
  - daemonsets
  verbs:
  - get
  - list
  - watch
  - create
  - update
  - patch
  - delete
- apiGroups:
  - policy
  resources:
  - poddisruptionbudgets
  verbs:
  - get
  - list
  - watch
  - create
  - update
  - patch
  - delete
- apiGroups:
  - elasticsearch.k8s.elastic.co
  resources:
  - elasticsearches
  - elasticsearches/status
  - elasticsearches/finalizers # needed for ownerReferences with blockOwnerDeletion on OCP
  verbs:
  - get
  - list
  - watch
  - create
  - update
  - patch
- apiGroups:
  - kibana.k8s.elastic.co
  resources:
  - kibanas
  - kibanas/status
  - kibanas/finalizers # needed for ownerReferences with blockOwnerDeletion on OCP
  verbs:
  - get
  - list
  - watch
  - create
  - update
  - patch
- apiGroups:
  - apm.k8s.elastic.co
  resources:
  - apmservers
  - apmservers/status
  - apmservers/finalizers # needed for ownerReferences with blockOwnerDeletion on OCP
  verbs:
  - get
  - list
  - watch
  - create
  - update
  - patch
- apiGroups:
  - enterprisesearch.k8s.elastic.co
  resources:
  - enterprisesearches
  - enterprisesearches/status
  - enterprisesearches/finalizers # needed for ownerReferences with blockOwnerDeletion on OCP
  verbs:
  - get
  - list
  - watch
  - create
  - update
  - patch
- apiGroups:
  - beat.k8s.elastic.co
  resources:
  - beats
  - beats/status
  - beats/finalizers # needed for ownerReferences with blockOwnerDeletion on OCP
  verbs:
  - get
  - list
  - watch
  - create
  - update
  - patch
- apiGroups:
  - agent.k8s.elastic.co
  resources:
  - agents
  - agents/status
  - agents/finalizers # needed for ownerReferences with blockOwnerDeletion on OCP
  verbs:
  - get
  - list
  - watch
  - create
  - update
  - patch
- apiGroups:
  - maps.k8s.elastic.co
  resources:
  - elasticmapsservers
  - elasticmapsservers/status
  - elasticmapsservers/finalizers # needed for ownerReferences with blockOwnerDeletion on OCP
  verbs:
  - get
  - list
  - watch
  - create
  - update
  - patch
- apiGroups:
  - storage.k8s.io
  resources:
  - storageclasses
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - admissionregistration.k8s.io
  resources:
  - validatingwebhookconfigurations
  verbs:
  - get
  - list
  - watch
  - create
  - update
  - patch
  - delete
---
# Source: eck-operator/templates/cluster-roles.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: "elastic-operator-view"
  labels:
    rbac.authorization.k8s.io/aggregate-to-view: "true"
    rbac.authorization.k8s.io/aggregate-to-edit: "true"
    rbac.authorization.k8s.io/aggregate-to-admin: "true"
    control-plane: elastic-operator
    app.kubernetes.io/version: "1.9.1"
rules:
- apiGroups: ["elasticsearch.k8s.elastic.co"]
  resources: ["elasticsearches"]
  verbs: ["get", "list", "watch"]
- apiGroups: ["apm.k8s.elastic.co"]
  resources: ["apmservers"]
  verbs: ["get", "list", "watch"]
- apiGroups: ["kibana.k8s.elastic.co"]
  resources: ["kibanas"]
  verbs: ["get", "list", "watch"]
- apiGroups: ["enterprisesearch.k8s.elastic.co"]
  resources: ["enterprisesearches"]
  verbs: ["get", "list", "watch"]
- apiGroups: ["beat.k8s.elastic.co"]
  resources: ["beats"]
  verbs: ["get", "list", "watch"]
- apiGroups: ["agent.k8s.elastic.co"]
  resources: ["agents"]
  verbs: ["get", "list", "watch"]
- apiGroups: ["maps.k8s.elastic.co"]
  resources: ["elasticmapsservers"]
  verbs: ["get", "list", "watch"]
---
# Source: eck-operator/templates/cluster-roles.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: "elastic-operator-edit"
  labels:
    rbac.authorization.k8s.io/aggregate-to-edit: "true"
    rbac.authorization.k8s.io/aggregate-to-admin: "true"
    control-plane: elastic-operator
    app.kubernetes.io/version: "1.9.1"
rules:
- apiGroups: ["elasticsearch.k8s.elastic.co"]
  resources: ["elasticsearches"]
  verbs: ["create", "delete", "deletecollection", "patch", "update"]
- apiGroups: ["apm.k8s.elastic.co"]
  resources: ["apmservers"]
  verbs: ["create", "delete", "deletecollection", "patch", "update"]
- apiGroups: ["kibana.k8s.elastic.co"]
  resources: ["kibanas"]
  verbs: ["create", "delete", "deletecollection", "patch", "update"]
- apiGroups: ["enterprisesearch.k8s.elastic.co"]
  resources: ["enterprisesearches"]
  verbs: ["create", "delete", "deletecollection", "patch", "update"]
- apiGroups: ["beat.k8s.elastic.co"]
  resources: ["beats"]
  verbs: ["create", "delete", "deletecollection", "patch", "update"]
- apiGroups: ["agent.k8s.elastic.co"]
  resources: ["agents"]
  verbs: ["create", "delete", "deletecollection", "patch", "update"]
- apiGroups: ["maps.k8s.elastic.co"]
  resources: ["elasticmapsservers"]
  verbs: ["create", "delete", "deletecollection", "patch", "update"]
---
# Source: eck-operator/templates/role-bindings.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: elastic-operator
  labels:
    control-plane: elastic-operator
    app.kubernetes.io/version: "1.9.1"
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: elastic-operator
subjects:
- kind: ServiceAccount
  name: elastic-operator
  namespace: logs
---
# Source: eck-operator/templates/webhook.yaml
apiVersion: v1
kind: Service
metadata:
  name: elastic-webhook-server
  namespace: logs
  labels:
    control-plane: elastic-operator
    app.kubernetes.io/version: "1.9.1"
spec:
  ports:
  - name: https
    port: 443
    targetPort: 9443
  selector:
    control-plane: elastic-operator
---
# Source: eck-operator/templates/statefulset.yaml
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: elastic-operator
  namespace: logs
  labels:
    control-plane: elastic-operator
    app.kubernetes.io/version: "1.9.1"
spec:
  selector:
    matchLabels:
      control-plane: elastic-operator
  serviceName: elastic-operator
  replicas: 1
  template:
    metadata:
      annotations:
        # Rename the fields "error" to "error.message" and "source" to "event.source"
        # This is to avoid a conflict with the ECS "error" and "source" documents.
        "co.elastic.logs/raw": "[{\"type\":\"container\",\"json.keys_under_root\":true,\"paths\":[\"/var/log/containers/*${data.kubernetes.container.id}.log\"],\"processors\":[{\"convert\":{\"mode\":\"rename\",\"ignore_missing\":true,\"fields\":[{\"from\":\"error\",\"to\":\"_error\"}]}},{\"convert\":{\"mode\":\"rename\",\"ignore_missing\":true,\"fields\":[{\"from\":\"_error\",\"to\":\"error.message\"}]}},{\"convert\":{\"mode\":\"rename\",\"ignore_missing\":true,\"fields\":[{\"from\":\"source\",\"to\":\"_source\"}]}},{\"convert\":{\"mode\":\"rename\",\"ignore_missing\":true,\"fields\":[{\"from\":\"_source\",\"to\":\"event.source\"}]}}]}]"
        "checksum/config": 239de074c87fe1f7254f5c93ff9f4a0949c8f111ba15696c460d786d6279e4d6
      labels:
        control-plane: elastic-operator
    spec:
      terminationGracePeriodSeconds: 10
      serviceAccountName: elastic-operator
      securityContext:
        runAsNonRoot: true
      containers:
      - image: "elastic/eck-operator:1.9.1"
        imagePullPolicy: IfNotPresent
        name: manager
        args:
        - "manager"
        - "--config=/conf/eck.yaml"
        env:
        - name: OPERATOR_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        - name: POD_IP
          valueFrom:
            fieldRef:
              fieldPath: status.podIP
        - name: WEBHOOK_SECRET
          value: elastic-webhook-server-cert
        resources:
          limits:
            cpu: 1
            memory: 512Mi
          requests:
            cpu: 100m
            memory: 150Mi
        ports:
        - containerPort: 9443
          name: https-webhook
          protocol: TCP
        volumeMounts:
        - mountPath: "/conf"
          name: conf
          readOnly: true
        - mountPath: /tmp/k8s-webhook-server/serving-certs
          name: cert
          readOnly: true
      volumes:
      - name: conf
        configMap:
          name: elastic-operator
      - name: cert
        secret:
          defaultMode: 420
          secretName: elastic-webhook-server-cert
---
# Source: eck-operator/templates/webhook.yaml
apiVersion: admissionregistration.k8s.io/v1beta1
kind: ValidatingWebhookConfiguration
metadata:
  name: elastic-webhook.k8s.elastic.co
  labels:
    control-plane: elastic-operator
    app.kubernetes.io/version: "1.9.1"
webhooks:
- clientConfig:
    caBundle: Cg==
    service:
      name: elastic-webhook-server
      namespace: logs
      path: /validate-agent-k8s-elastic-co-v1alpha1-agent
  failurePolicy: Ignore
  name: elastic-agent-validation-v1alpha1.k8s.elastic.co
  rules:
  - apiGroups:
    - agent.k8s.elastic.co
    apiVersions:
    - v1alpha1
    operations:
    - CREATE
    - UPDATE
    resources:
    - agents
- clientConfig:
    caBundle: Cg==
    service:
      name: elastic-webhook-server
      namespace: logs
      path: /validate-apm-k8s-elastic-co-v1-apmserver
  failurePolicy: Ignore
  name: elastic-apm-validation-v1.k8s.elastic.co
  rules:
  - apiGroups:
    - apm.k8s.elastic.co
    apiVersions:
    - v1
    operations:
    - CREATE
    - UPDATE
    resources:
    - apmservers
- clientConfig:
    caBundle: Cg==
    service:
      name: elastic-webhook-server
      namespace: logs
      path: /validate-apm-k8s-elastic-co-v1beta1-apmserver
  failurePolicy: Ignore
  name: elastic-apm-validation-v1beta1.k8s.elastic.co
  rules:
  - apiGroups:
    - apm.k8s.elastic.co
    apiVersions:
    - v1beta1
    operations:
    - CREATE
    - UPDATE
    resources:
    - apmservers
- clientConfig:
    caBundle: Cg==
    service:
      name: elastic-webhook-server
      namespace: logs
      path: /validate-beat-k8s-elastic-co-v1beta1-beat
  failurePolicy: Ignore
  name: elastic-beat-validation-v1beta1.k8s.elastic.co
  rules:
  - apiGroups:
    - beat.k8s.elastic.co
    apiVersions:
    - v1beta1
    operations:
    - CREATE
    - UPDATE
    resources:
    - beats
- clientConfig:
    caBundle: Cg==
    service:
      name: elastic-webhook-server
      namespace: logs
      path: /validate-enterprisesearch-k8s-elastic-co-v1-enterprisesearch
  failurePolicy: Ignore
  name: elastic-ent-validation-v1.k8s.elastic.co
  rules:
  - apiGroups:
    - enterprisesearch.k8s.elastic.co
    apiVersions:
    - v1
    operations:
    - CREATE
    - UPDATE
    resources:
    - enterprisesearches
- clientConfig:
    caBundle: Cg==
    service:
      name: elastic-webhook-server
      namespace: logs
      path: /validate-enterprisesearch-k8s-elastic-co-v1beta1-enterprisesearch
  failurePolicy: Ignore
  name: elastic-ent-validation-v1beta1.k8s.elastic.co
  rules:
  - apiGroups:
    - enterprisesearch.k8s.elastic.co
    apiVersions:
    - v1beta1
    operations:
    - CREATE
    - UPDATE
    resources:
    - enterprisesearches
- clientConfig:
    caBundle: Cg==
    service:
      name: elastic-webhook-server
      namespace: logs
      path: /validate-elasticsearch-k8s-elastic-co-v1-elasticsearch
  failurePolicy: Ignore
  name: elastic-es-validation-v1.k8s.elastic.co
  rules:
  - apiGroups:
    - elasticsearch.k8s.elastic.co
    apiVersions:
    - v1
    operations:
    - CREATE
    - UPDATE
    resources:
    - elasticsearches
- clientConfig:
    caBundle: Cg==
    service:
      name: elastic-webhook-server
      namespace: logs
      path: /validate-elasticsearch-k8s-elastic-co-v1beta1-elasticsearch
  failurePolicy: Ignore
  name: elastic-es-validation-v1beta1.k8s.elastic.co
  rules:
  - apiGroups:
    - elasticsearch.k8s.elastic.co
    apiVersions:
    - v1beta1
    operations:
    - CREATE
    - UPDATE
    resources:
    - elasticsearches
- clientConfig:
    caBundle: Cg==
    service:
      name: elastic-webhook-server
      namespace: logs
      path: /validate-kibana-k8s-elastic-co-v1-kibana
  failurePolicy: Ignore
  name: elastic-kb-validation-v1.k8s.elastic.co
  rules:
  - apiGroups:
    - kibana.k8s.elastic.co
    apiVersions:
    - v1
    operations:
    - CREATE
    - UPDATE
    resources:
    - kibanas
- clientConfig:
    caBundle: Cg==
    service:
      name: elastic-webhook-server
      namespace: logs
      path: /validate-kibana-k8s-elastic-co-v1beta1-kibana
  failurePolicy: Ignore
  name: elastic-kb-validation-v1beta1.k8s.elastic.co
  rules:
  - apiGroups:
    - kibana.k8s.elastic.co
    apiVersions:
    - v1beta1
    operations:
    - CREATE
    - UPDATE
    resources:
    - kibanas

root@sz-k8s-master-01:~#
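Steps 2 and 3 can also be scripted instead of edited by hand. The function below is a minimal sketch: patch_eck_manifest is a hypothetical helper, and it assumes the downloaded file uses "namespace: elastic-system" on every namespaced object and docker.elastic.co/eck/eck-operator:<version> as the operator image. As in the listing above, the Namespace object itself (name: elastic-system) is left unchanged.

```shell
# patch_eck_manifest FILE NAMESPACE IMAGE  (hypothetical helper)
# Rewrites every "namespace: elastic-system" field to NAMESPACE and replaces the
# operator image reference with IMAGE. Assumes the upstream operator-legacy.yaml layout.
patch_eck_manifest() {
  local file="$1" ns="$2" image="$3"
  # Write to a temp file and move it back; this avoids GNU/BSD "sed -i" differences.
  sed \
    -e "s#namespace: elastic-system#namespace: ${ns}#g" \
    -e "s#docker\.elastic\.co/eck/eck-operator:[0-9.]*#${image}#g" \
    "$file" > "${file}.tmp" && mv "${file}.tmp" "$file"
}
```

Usage, before the apply step: patch_eck_manifest operator-legacy.yaml logs elastic/eck-operator:1.9.1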

Apply operator-legacy.yaml:

kubectl apply -f operator-legacy.yaml

After a while, check the operator logs:

kubectl -n logs logs -f statefulset.apps/elastic-operator

After a while, check that elastic-operator is running. ECK runs only a single elastic-operator pod:

root@sz-k8s-master-01:~# kubectl -n logs get pod|grep elastic
elastic-operator-0   1/1   Running   1     5d1h
root@sz-k8s-master-01:~#
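Instead of re-running get pod until the operator shows up, the wait can be scripted. This sketch assumes the kubectl client supports kubectl wait and that the operator pod is named elastic-operator-0, as in the output above; wait_eck_operator is a hypothetical helper.

```shell
# wait_eck_operator NAMESPACE [TIMEOUT]  (hypothetical helper)
# Blocks until the single ECK operator pod reports the Ready condition,
# and returns non-zero if that does not happen within TIMEOUT (default 300s).
wait_eck_operator() {
  local ns="$1" timeout="${2:-300s}"
  kubectl -n "$ns" wait --for=condition=Ready pod/elastic-operator-0 --timeout="$timeout"
}
```

For this article: wait_eck_operator logs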

1.2 Deploy an Elasticsearch cluster with ECK (storage on Baidu Cloud CFS)

cat <<EOF | kubectl apply -f - -n logs
apiVersion: elasticsearch.k8s.elastic.co/v1
kind: Elasticsearch
metadata:
  name: cluster01
  namespace: logs
spec:
  version: 7.10.1
  nodeSets:
  - name: master-nodes
    count: 3
    config:
      node.master: true
      node.data: false
    podTemplate:
      spec:
        initContainers:
        - name: sysctl
          securityContext:
            privileged: true
            runAsUser: 0
          command: ['sh', '-c', 'sysctl -w vm.max_map_count=262144']
        containers:
        - name: elasticsearch
          image: elasticsearch:7.10.1
          env:
          - name: ES_JAVA_OPTS
            value: "-Xms6g -Xmx6g"
          resources:
            limits:
              memory: 8Gi
              cpu: 2
            requests:
              memory: 8Gi
              cpu: 2
        imagePullSecrets:
        - name: mlpull
    volumeClaimTemplates:
    - metadata:
        name: elasticsearch-data
      spec:
        accessModes:
        - ReadWriteOnce
        resources:
          requests:
            storage: 50Gi
        storageClassName: capacity-bdycloud-cfs
  - name: data-nodes
    count: 3
    config:
      node.master: false
      node.data: true
    podTemplate:
      spec:
        initContainers:
        - name: sysctl
          securityContext:
            privileged: true
            runAsUser: 0
          command: ['sh', '-c', 'sysctl -w vm.max_map_count=262144']
        containers:
        - name: elasticsearch
          image: elasticsearch:7.10.1
          env:
          - name: ES_JAVA_OPTS
            value: "-Xms8g -Xmx8g"
          resources:
            limits:
              memory: 10Gi
              cpu: 4
            requests:
              memory: 10Gi
              cpu: 4
        imagePullSecrets:
        - name: mlpull
    volumeClaimTemplates:
    - metadata:
        name: elasticsearch-data
      spec:
        accessModes:
        - ReadWriteOnce
        resources:
          requests:
            storage: 1000Gi
        storageClassName: capacity-bdycloud-cfs
  http:
    tls:
      selfSignedCertificate:
        disabled: true
EOF

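After a while, check the status of the Elasticsearch cluster; HEALTH green means the deployment succeeded. (On sizing: Elastic recommends a JVM heap of no more than 50% of the available memory. The heaps above, 6g of 8Gi and 8g of 10Gi, exceed that, so consider lowering ES_JAVA_OPTS or raising the memory limits.)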

root@sz-k8s-master-01:~# kubectl -n logs get elasticsearch
NAME        HEALTH   NODES   VERSION   PHASE   AGE
cluster01   green    6       7.10.1    Ready   4m26s
root@sz-k8s-master-01:~#
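Waiting for HEALTH green can be done from a script. The HEALTH column comes from the .status.health field of the Elasticsearch resource; the helper wait_es_green and its POLL_INTERVAL variable are hypothetical names used for this sketch.

```shell
# wait_es_green NAMESPACE NAME [TRIES]  (hypothetical helper)
# Polls .status.health of the Elasticsearch resource until it is "green",
# sleeping POLL_INTERVAL seconds (default 10) between TRIES attempts (default 60).
wait_es_green() {
  local ns="$1" name="$2" tries="${3:-60}" i=0 health=""
  while [ "$i" -lt "$tries" ]; do
    health=$(kubectl -n "$ns" get elasticsearch "$name" -o jsonpath='{.status.health}')
    if [ "$health" = "green" ]; then
      echo "green"
      return 0
    fi
    i=$((i + 1))
    sleep "${POLL_INTERVAL:-10}"
  done
  echo "timed out, last health: ${health:-unknown}" >&2
  return 1
}
```

For this cluster: wait_es_green logs cluster01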

# Check the pods

root@sz-k8s-master-01:~# kubectl -n logs get pod|grep cluster01
cluster01-es-data-nodes-0     1/1   Running   0     5m8s
cluster01-es-data-nodes-1     1/1   Running   0     5m8s
cluster01-es-data-nodes-2     1/1   Running   0     5m8s
cluster01-es-master-nodes-0   1/1   Running   0     5m8s
cluster01-es-master-nodes-1   1/1   Running   0     5m8s
cluster01-es-master-nodes-2   1/1   Running   0     5m8s
root@sz-k8s-master-01:~#

Check the requested PVCs; all of them were created and are Bound:

root@sz-k8s-master-01:~# kubectl -n logs get pvc
NAME                                             STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS            AGE
elasticsearch-data-cluster01-es-data-nodes-0     Bound    pvc-b253e810-e62c-11ec-bc77-fa163efe36ed   1000Gi     RWO            capacity-bdycloud-cfs   6m18s
elasticsearch-data-cluster01-es-data-nodes-1     Bound    pvc-b2561699-e62c-11ec-bc77-fa163efe36ed   1000Gi     RWO            capacity-bdycloud-cfs   6m18s
elasticsearch-data-cluster01-es-data-nodes-2     Bound    pvc-b259b21a-e62c-11ec-bc77-fa163efe36ed   1000Gi     RWO            capacity-bdycloud-cfs   6m18s
elasticsearch-data-cluster01-es-master-nodes-0   Bound    pvc-b2482de7-e62c-11ec-bc77-fa163efe36ed   50Gi       RWO            capacity-bdycloud-cfs   6m18s
elasticsearch-data-cluster01-es-master-nodes-1   Bound    pvc-b24a8bf4-e62c-11ec-bc77-fa163efe36ed   50Gi       RWO            capacity-bdycloud-cfs   6m18s
elasticsearch-data-cluster01-es-master-nodes-2   Bound    pvc-b24d8c2a-e62c-11ec-bc77-fa163efe36ed   50Gi       RWO            capacity-bdycloud-cfs   6m18s
logstash                                         Bound    pvc-7bb27b91-8ed0-11ec-8e66-fa163efe36ed   2Gi        RWX            bdycloud-cfs            111d
root@sz-k8s-master-01:~#
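To confirm the volumes without reading the whole table, the cluster's PVCs can be filtered by name. This sketch relies on the naming visible above (elasticsearch-data-<cluster>-es-<nodeSet>-<n>, derived from the volumeClaimTemplates name elasticsearch-data); unbound_es_pvcs is a hypothetical helper, and empty output means every PVC of the cluster is Bound.

```shell
# unbound_es_pvcs NAMESPACE CLUSTER  (hypothetical helper)
# Prints the names of the cluster's data PVCs whose STATUS column is not "Bound".
# Other PVCs in the namespace (e.g. logstash) are ignored by the name-prefix match.
unbound_es_pvcs() {
  local ns="$1" cluster="$2"
  kubectl -n "$ns" get pvc --no-headers \
    | awk -v prefix="elasticsearch-data-${cluster}-es-" \
        'index($1, prefix) == 1 && $2 != "Bound" { print $1 }'
}
```

For this cluster: unbound_es_pvcs logs cluster01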

1.3 Create an Ingress for access

cat <<EOF | kubectl apply -f - -n logs
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: bdy-sz-es
  namespace: logs
spec:
  rules:
  - host: sz-es.test.cn
    http:
      paths:
      - backend:
          serviceName: cluster01-es-http
          servicePort: 9200
        path: /
EOF
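The extensions/v1beta1 Ingress above works on this 1.14 cluster but was removed in Kubernetes 1.22. On clusters running 1.19 or later, the same rule can be written against networking.k8s.io/v1, where pathType is required and the backend moves under service. A sketch that prints such a manifest; es_ingress_v1 is a hypothetical helper, and no ingress class is set, so the controller's default is assumed.

```shell
# es_ingress_v1 NAME HOST SERVICE PORT  (hypothetical helper)
# Prints a networking.k8s.io/v1 Ingress routing HOST/ to SERVICE:PORT.
es_ingress_v1() {
  cat <<EOF
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: $1
spec:
  rules:
  - host: $2
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: $3
            port:
              number: $4
EOF
}
```

Usage: es_ingress_v1 bdy-sz-es sz-es.test.cn cluster01-es-http 9200 | kubectl apply -f - -n logs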

1.4 Elasticsearch username and password

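By default the cluster has basic authentication enabled: the username is elastic and the password is stored in a Secret. ECK also enables HTTPS with a self-signed certificate by default, but this cluster turned it off with spec.http.tls.selfSignedCertificate.disabled: true, so plain HTTP is used below. Elasticsearch is reachable through its Service resource. Retrieve the password: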

root@sz-k8s-master-01:~# kubectl get secret -n logs cluster01-es-elastic-user -o=jsonpath='{.data.elastic}' | base64 --decode; echo
uOGv138dX123434342t130
root@sz-k8s-master-01:~#
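To avoid pasting the password into later commands, the same Secret lookup can be wrapped in a function. es_password is a hypothetical helper; it reads the elastic key of the <cluster>-es-elastic-user Secret exactly like the command above.

```shell
# es_password NAMESPACE CLUSTER  (hypothetical helper)
# Prints the decoded password of the built-in "elastic" user that ECK stores
# in the Secret <CLUSTER>-es-elastic-user under the key "elastic".
es_password() {
  kubectl -n "$1" get secret "$2-es-elastic-user" -o=jsonpath='{.data.elastic}' | base64 --decode
}
```

For example: PASSWORD=$(es_password logs cluster01), then curl -u "elastic:$PASSWORD" http://10.2.178.25:9200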

Test access from inside the cluster through the cluster01-es-http Service:

root@sz-k8s-master-01:~# kubectl -n logs get svc
NAME                        TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)    AGE
cerebro                     ClusterIP   10.2.214.84    <none>        9000/TCP   4h21m
cluster01-es-data-nodes     ClusterIP   None           <none>        9200/TCP   7m10s
cluster01-es-http           ClusterIP   10.2.178.25    <none>        9200/TCP   7m12s
cluster01-es-master-nodes   ClusterIP   None           <none>        9200/TCP   7m10s
cluster01-es-transport      ClusterIP   None           <none>        9300/TCP   7m12s
elastic-webhook-server      ClusterIP   10.2.46.89     <none>        443/TCP    5d5h
kibana-kb-http              ClusterIP   10.2.247.192   <none>        5601/TCP   23h
logstash                    ClusterIP   10.2.97.210    <none>        5044/TCP   2y88d
root@sz-k8s-master-01:~# curl http://10.2.178.25:9200 -u 'elastic:uOGv138dX123434342t130' -k
{
  "name" : "cluster01-es-data-nodes-2",
  "cluster_name" : "cluster01",
  "cluster_uuid" : "pKUYTOzuS_i3yT2UCiQw3w",
  "version" : {
    "number" : "7.10.1",
    "build_flavor" : "default",
    "build_type" : "docker",
    "build_hash" : "1c34507e66d7db1211f66f3513706fdf548736aa",
    "build_date" : "2020-12-05T01:00:33.671820Z",
    "build_snapshot" : false,
    "lucene_version" : "8.7.0",
    "minimum_wire_compatibility_version" : "6.8.0",
    "minimum_index_compatibility_version" : "6.0.0-beta1"
  },
  "tagline" : "You Know, for Search"
}
root@sz-k8s-master-01:~#
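The root endpoint only shows that a node answers; GET /_cluster/health reports the overall status (green, yellow, or red). A sketch that prints just the status field without needing jq, assuming plain HTTP on port 9200 as configured here; es_health_status is a hypothetical helper.

```shell
# es_health_status HOST PASSWORD  (hypothetical helper)
# Calls GET /_cluster/health as the "elastic" user over plain HTTP and prints
# only the value of the "status" field from the compact JSON response.
es_health_status() {
  curl -s -u "elastic:$2" "http://$1:9200/_cluster/health" \
    | sed -n 's/.*"status":"\([a-z]*\)".*/\1/p'
}
```

For the Service above: es_health_status 10.2.178.25 "$PASSWORD"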

To uninstall Elasticsearch, run:

root@sz-k8s-master-01:~# kubectl -n logs delete elasticsearch cluster01
elasticsearch.elasticsearch.k8s.elastic.co "cluster01" deleted
root@sz-k8s-master-01:~#

To change the Elasticsearch configuration, edit the resource; the operator reconciles the cluster to match:

kubectl -n logs edit elasticsearch cluster01
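kubectl edit is interactive. For scripted changes, a JSON patch against the Elasticsearch resource works as well. The sketch below (scale_nodeset is a hypothetical helper) changes the count of one nodeSet by its index in the list, e.g. index 1 is data-nodes in the manifest above. A JSON patch is used rather than a merge patch because a merge patch on spec.nodeSets would replace the whole list.

```shell
# scale_nodeset NAMESPACE CLUSTER INDEX COUNT  (hypothetical helper)
# Replaces spec.nodeSets[INDEX].count with COUNT using a JSON patch.
scale_nodeset() {
  kubectl -n "$1" patch elasticsearch "$2" --type json \
    -p "[{\"op\":\"replace\",\"path\":\"/spec/nodeSets/$3/count\",\"value\":$4}]"
}
```

For example, scale_nodeset logs cluster01 1 4 would ask ECK for four data nodes.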

2 Kibana

# Official documentation

https://www.elastic.co/guide/en/cloud-on-k8s/1.9/k8s-deploy-kibana.html

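Like Elasticsearch, Kibana enables HTTPS with a self-signed certificate by default, and this can be turned off. The manifest in 2.1 keeps the default; to serve plain HTTP, which a plain Ingress backend such as the one in 2.4 typically expects, add the same http.tls.selfSignedCertificate.disabled: true block used for Elasticsearch. Deploy Kibana with ECK: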

2.1 Deploy Kibana and associate it with the Elasticsearch cluster

cat <<EOF | kubectl apply -f - -n logs
apiVersion: kibana.k8s.elastic.co/v1
kind: Kibana
metadata:
  name: kibana
spec:
  version: 7.10.1
  count: 1
  elasticsearchRef:
    name: cluster01 # name from: kubectl -n logs get elasticsearch
EOF
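A sketch of the same Kibana resource with HTTPS turned off, adding the http.tls block that the Elasticsearch manifest uses so that Kibana serves plain HTTP on 5601. kibana_manifest is a hypothetical helper; the field is the one documented by ECK for disabling the self-signed certificate.

```shell
# kibana_manifest NAME VERSION ES_REF  (hypothetical helper)
# Prints a Kibana resource linked to the Elasticsearch cluster ES_REF,
# with the self-signed HTTP certificate disabled (plain HTTP on port 5601).
kibana_manifest() {
  cat <<EOF
apiVersion: kibana.k8s.elastic.co/v1
kind: Kibana
metadata:
  name: $1
spec:
  version: $2
  count: 1
  elasticsearchRef:
    name: $3
  http:
    tls:
      selfSignedCertificate:
        disabled: true
EOF
}
```

Usage: kibana_manifest kibana 7.10.1 cluster01 | kubectl apply -f - -n logs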

2.2 Check the status

root@sz-k8s-master-01:~# kubectl -n logs get kibana
NAME     HEALTH   NODES   VERSION   AGE
kibana   green    1       7.10.1    18m
root@sz-k8s-master-01:~#

2.3 Access Kibana from inside the cluster

Look up the Service address:

root@sz-k8s-master-01:~# kubectl -n logs get service kibana-kb-http
NAME             TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)    AGE
kibana-kb-http   ClusterIP   10.2.195.7   <none>        5601/TCP   17m
root@sz-k8s-master-01:~#

Use kubectl port-forward to reach Kibana; it listens on 127.0.0.1:5601 of the machine running kubectl:

root@sz-k8s-master-01:~# kubectl -n logs port-forward service/kibana-kb-http 5601
Forwarding from 127.0.0.1:5601 -> 5601
Forwarding from [::1]:5601 -> 5601
^Croot@sz-k8s-master-01:~#

2.4 Create an Ingress for access

cat <<EOF | kubectl apply -f - -n logs
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: bdy-sz-kibana
  namespace: logs
spec:
  rules:
  - host: sz-kibana.test.cn
    http:
      paths:
      - backend:
          serviceName: kibana-kb-http
          servicePort: 5601
        path: /
EOF

3 Deploy Cerebro and connect it to the Elasticsearch cluster

3.1 Publish the configuration

Define the configuration file:

cat application.conf
es = {
  gzip = true
}
auth = {
  type: basic
  settings {
    username = "admin"
    password = "uOGv138dX123434342t130"
  }
}
hosts = [
  {
    host = "http://cluster01-es-http:9200"
    name = "sz-cerebro"
    auth = {
      username = "elastic"
      password = "uOGv138dX123434342t130"
    }
  }
]
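The file above hard-codes both passwords. A sketch that renders the same structure from arguments, so the Elasticsearch password can be injected from the ECK Secret instead; render_cerebro_conf is a hypothetical helper, and the ES URL defaults to the in-cluster Service used above.

```shell
# render_cerebro_conf UI_USER UI_PASSWORD ES_PASSWORD [ES_URL]  (hypothetical helper)
# Prints a Cerebro application.conf with basic auth for the UI and the
# "elastic" user for the connection to Elasticsearch.
render_cerebro_conf() {
  cat <<EOF
es = {
  gzip = true
}
auth = {
  type: basic
  settings {
    username = "$1"
    password = "$2"
  }
}
hosts = [
  {
    host = "${4:-http://cluster01-es-http:9200}"
    name = "sz-cerebro"
    auth = {
      username = "elastic"
      password = "$3"
    }
  }
]
EOF
}
```

Usage: set ES_PW=$(kubectl get secret -n logs cluster01-es-elastic-user -o=jsonpath='{.data.elastic}' | base64 --decode), then run render_cerebro_conf admin "$ES_PW" "$ES_PW" > application.conf. One caveat, not verified here: the lmenezes/cerebro image reads /opt/cerebro/conf/application.conf by default, so a file mounted at /etc/cerebro may only take effect if the container is started with -Dconfig.file=/etc/cerebro/application.conf.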

Create the ConfigMap from the file:

root@sz-k8s-master-01:/opt/cerebro# kubectl create configmap cerebro-application --from-file=application.conf -n logs
configmap/cerebro-application created
root@sz-k8s-master-01:/opt/cerebro#

3.2 Publish the Deployment, Service, and Ingress

cat <<EOF | kubectl apply -f - -n logs
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: cerebro
  name: cerebro
  namespace: logs
spec:
  replicas: 1
  selector:
    matchLabels:
      app: cerebro
  template:
    metadata:
      labels:
        app: cerebro
      name: cerebro
    spec:
      containers:
      - image: lmenezes/cerebro:0.8.3
        imagePullPolicy: IfNotPresent
        name: cerebro
        resources:
          limits:
            cpu: 1
            memory: 1Gi
          requests:
            cpu: 1
            memory: 1Gi
        volumeMounts:
        - name: cerebro-conf
          mountPath: /etc/cerebro
      volumes:
      - name: cerebro-conf
        configMap:
          name: cerebro-application
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: cerebro
  name: cerebro
  namespace: logs
spec:
  ports:
  - port: 9000
    protocol: TCP
    targetPort: 9000
  selector:
    app: cerebro
  type: ClusterIP
---
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: cerebro
  namespace: logs
spec:
  rules:
  - host: sz-cerebro.test.cn
    http:
      paths:
      - backend:
          serviceName: cerebro
          servicePort: 9000
        path: /
EOF

This concludes deploying Elasticsearch 7.10, Kibana, and Cerebro on Kubernetes with the ECK operator.