paddle nlp作为自然语言处理领域的全家桶,具有很多的不错的开箱即用的nlp能力。今天我们来一起看看基于paddle nlp中taskflow开箱即用的能力有哪些。

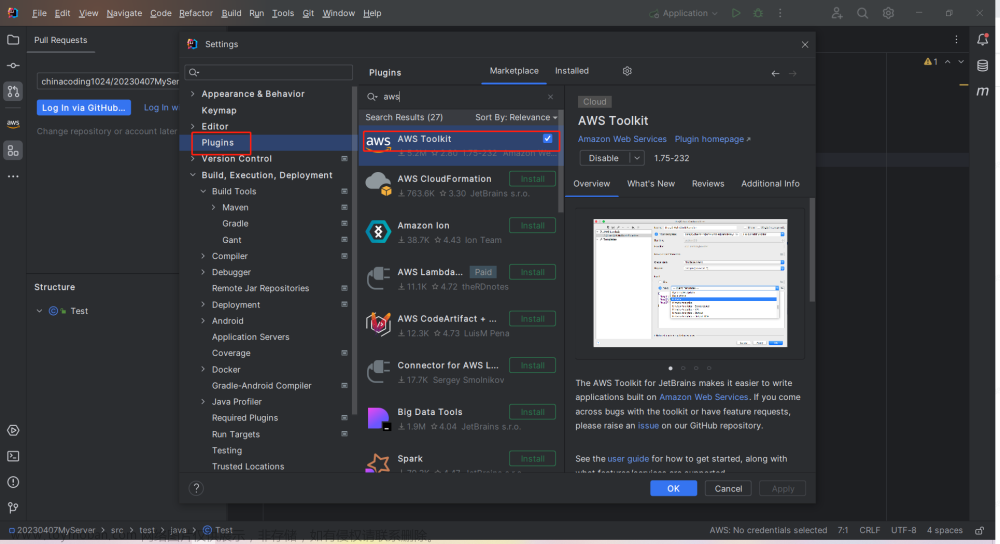

第一步先升级aistudio中的paddlenlp 保持最新版本。

pip install -U paddlenlp

Looking in indexes: https://pypi.tuna.tsinghua.edu.cn/simple

Requirement already satisfied: paddlenlp in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (2.4.2)

Collecting paddlenlp

Downloading https://pypi.tuna.tsinghua.edu.cn/packages/ff/93/f383925fdd45e9ea1c3dbb2ea7b6a7521c6a0169dd2f797252eb81712700/paddlenlp-2.4.5-py3-none-any.whl (1.9 MB)

l ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 0.0/1.9 MB ? eta -:--:--━━╺━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 0.1/1.9 MB 4.1 MB/s eta 0:00:01━━━━━━━━╸━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 0.4/1.9 MB 6.3 MB/s eta 0:00:01━━━━━━━━━━━━━━━━━╺━━━━━━━━━━━━━━━━━━━━━━ 0.8/1.9 MB 8.2 MB/s eta 0:00:01━━━━━━━━━━━━━━━━━━━━━━━━━━━╸━━━━━━━━━━━━ 1.3/1.9 MB 9.8 MB/s eta 0:00:01━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━╸ 1.9/1.9 MB 11.4 MB/s eta 0:00:01━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━╸ 1.9/1.9 MB 10.1 MB/s eta 0:00:01━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 1.9/1.9 MB 9.1 MB/s eta 0:00:00

[?25hRequirement already satisfied: tqdm in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from paddlenlp) (4.64.1)

Requirement already satisfied: multiprocess<=0.70.12.2 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from paddlenlp) (0.70.11.1)

Requirement already satisfied: paddle2onnx in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from paddlenlp) (1.0.0)

Requirement already satisfied: jieba in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from paddlenlp) (0.42.1)

Requirement already satisfied: huggingface-hub>=0.10.1 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from paddlenlp) (0.11.0)

Collecting fastapi

Downloading https://pypi.tuna.tsinghua.edu.cn/packages/d8/09/ce090f6d53ce8b6335954488087210fa1e054c4a65f74d5f76aed254c159/fastapi-0.88.0-py3-none-any.whl (55 kB)

l ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 0.0/55.5 kB ? eta -:--:--━━━━━━━╺━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 10.2/55.5 kB ? eta -:--:--━━━━━━━╺━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 10.2/55.5 kB ? eta -:--:--━━━━━━━╺━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 10.2/55.5 kB ? eta -:--:--━━━━━━━╺━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 10.2/55.5 kB ? eta -:--:--━━━━━━━╺━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 10.2/55.5 kB ? eta -:--:--━━━━━━━╺━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 10.2/55.5 kB ? eta -:--:--━━━━━━━╺━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 10.2/55.5 kB ? eta -:--:--━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 55.5/55.5 kB 176.9 kB/s eta 0:00:00

[?25hRequirement already satisfied: dill<0.3.5 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from paddlenlp) (0.3.3)

Requirement already satisfied: seqeval in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from paddlenlp) (1.2.2)

Requirement already satisfied: rich in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from paddlenlp) (12.6.0)

Requirement already satisfied: datasets>=2.0.0 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from paddlenlp) (2.7.0)

Requirement already satisfied: protobuf<=3.20.0,>=3.1.0 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from paddlenlp) (3.20.0)

Requirement already satisfied: colorama in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from paddlenlp) (0.4.4)

Requirement already satisfied: colorlog in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from paddlenlp) (4.1.0)

Requirement already satisfied: paddlefsl in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from paddlenlp) (1.1.0)

Requirement already satisfied: sentencepiece in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from paddlenlp) (0.1.96)

Collecting typer

Downloading https://pypi.tuna.tsinghua.edu.cn/packages/0d/44/56c3f48d2bb83d76f5c970aef8e2c3ebd6a832f09e3621c5395371fe6999/typer-0.7.0-py3-none-any.whl (38 kB)

Collecting uvicorn

Downloading https://pypi.tuna.tsinghua.edu.cn/packages/96/f3/f39ac8ac3bdf356b4934b8f7e56173e96681f67ef0cd92bd33a5059fae9e/uvicorn-0.20.0-py3-none-any.whl (56 kB)

l ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 0.0/56.9 kB ? eta -:--:--━━━━━━━━━━━━━━━━━━━━━╸━━━━━━━━━━━━━━━━━━ 30.7/56.9 kB 1.5 MB/s eta 0:00:01━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 56.9/56.9 kB 875.6 kB/s eta 0:00:00

[?25hRequirement already satisfied: visualdl in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from paddlenlp) (2.4.0)

Requirement already satisfied: pyyaml>=5.1 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from datasets>=2.0.0->paddlenlp) (5.1.2)

Requirement already satisfied: pyarrow>=6.0.0 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from datasets>=2.0.0->paddlenlp) (10.0.0)

Requirement already satisfied: xxhash in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from datasets>=2.0.0->paddlenlp) (3.1.0)

Requirement already satisfied: importlib-metadata in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from datasets>=2.0.0->paddlenlp) (4.2.0)

Requirement already satisfied: responses<0.19 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from datasets>=2.0.0->paddlenlp) (0.18.0)

Requirement already satisfied: packaging in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from datasets>=2.0.0->paddlenlp) (21.3)

Requirement already satisfied: aiohttp in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from datasets>=2.0.0->paddlenlp) (3.8.3)

Requirement already satisfied: pandas in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from datasets>=2.0.0->paddlenlp) (1.1.5)

Requirement already satisfied: fsspec[http]>=2021.11.1 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from datasets>=2.0.0->paddlenlp) (2022.11.0)

Requirement already satisfied: requests>=2.19.0 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from datasets>=2.0.0->paddlenlp) (2.24.0)

Requirement already satisfied: numpy>=1.17 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from datasets>=2.0.0->paddlenlp) (1.19.5)

Requirement already satisfied: filelock in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from huggingface-hub>=0.10.1->paddlenlp) (3.0.12)

Requirement already satisfied: typing-extensions>=3.7.4.3 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from huggingface-hub>=0.10.1->paddlenlp) (4.3.0)

Collecting starlette==0.22.0

Downloading https://pypi.tuna.tsinghua.edu.cn/packages/1d/4e/30eda84159d5b3ad7fe663c40c49b16dd17436abe838f10a56c34bee44e8/starlette-0.22.0-py3-none-any.whl (64 kB)

l ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 0.0/64.3 kB ? eta -:--:--━━━━━━╺━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 10.2/64.3 kB ? eta -:--:--━━━━━━━━━━━━━━━━━━━━━━━━╸━━━━━━━━━━━━━━ 41.0/64.3 kB 835.3 kB/s eta 0:00:01━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━╺━ 61.4/64.3 kB 497.5 kB/s eta 0:00:01━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 64.3/64.3 kB 488.3 kB/s eta 0:00:00

[?25hCollecting pydantic!=1.7,!=1.7.1,!=1.7.2,!=1.7.3,!=1.8,!=1.8.1,<2.0.0,>=1.6.2

Downloading https://pypi.tuna.tsinghua.edu.cn/packages/22/53/196c9a5752e30d682e493d7c00ea0a02377446578e577ae5e085010dc0bd/pydantic-1.10.2-cp37-cp37m-manylinux_2_17_x86_64.manylinux2014_x86_64.whl (11.8 MB)

l ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 0.0/11.8 MB ? eta -:--:--━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 0.0/11.8 MB ? eta -:--:--━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 0.0/11.8 MB 490.3 kB/s eta 0:00:25━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 0.1/11.8 MB 897.5 kB/s eta 0:00:14━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 0.1/11.8 MB 881.4 kB/s eta 0:00:14╸━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 0.2/11.8 MB 1.1 MB/s eta 0:00:11╸━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 0.3/11.8 MB 1.2 MB/s eta 0:00:10╸━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 0.3/11.8 MB 1.2 MB/s eta 0:00:10━╺━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 0.4/11.8 MB 1.3 MB/s eta 0:00:09━╺━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 0.4/11.8 MB 1.4 MB/s eta 0:00:09━╸━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 0.5/11.8 MB 1.5 MB/s eta 0:00:08━━╺━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 0.6/11.8 MB 1.6 MB/s eta 0:00:08━━╺━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 0.7/11.8 MB 1.7 MB/s eta 0:00:07━━╸━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 0.8/11.

[?25hRequirement already satisfied: anyio<5,>=3.4.0 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from starlette==0.22.0->fastapi->paddlenlp) (3.6.1)

Requirement already satisfied: commonmark<0.10.0,>=0.9.0 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from rich->paddlenlp) (0.9.1)

Requirement already satisfied: pygments<3.0.0,>=2.6.0 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from rich->paddlenlp) (2.13.0)

Requirement already satisfied: scikit-learn>=0.21.3 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from seqeval->paddlenlp) (0.24.2)

Requirement already satisfied: click<9.0.0,>=7.1.1 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from typer->paddlenlp) (8.0.4)

Collecting h11>=0.8

Downloading https://pypi.tuna.tsinghua.edu.cn/packages/95/04/ff642e65ad6b90db43e668d70ffb6736436c7ce41fcc549f4e9472234127/h11-0.14.0-py3-none-any.whl (58 kB)

l ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 0.0/58.3 kB ? eta -:--:--━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 58.3/58.3 kB 2.2 MB/s eta 0:00:00

[?25hRequirement already satisfied: six>=1.14.0 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from visualdl->paddlenlp) (1.16.0)

Requirement already satisfied: Pillow>=7.0.0 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from visualdl->paddlenlp) (8.2.0)

Requirement already satisfied: matplotlib in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from visualdl->paddlenlp) (2.2.3)

Requirement already satisfied: Flask-Babel>=1.0.0 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from visualdl->paddlenlp) (1.0.0)

Requirement already satisfied: bce-python-sdk in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from visualdl->paddlenlp) (0.8.53)

Requirement already satisfied: flask>=1.1.1 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from visualdl->paddlenlp) (1.1.1)

Requirement already satisfied: itsdangerous>=0.24 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from flask>=1.1.1->visualdl->paddlenlp) (1.1.0)

Requirement already satisfied: Werkzeug>=0.15 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from flask>=1.1.1->visualdl->paddlenlp) (0.16.0)

Requirement already satisfied: Jinja2>=2.10.1 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from flask>=1.1.1->visualdl->paddlenlp) (3.0.0)

Requirement already satisfied: Babel>=2.3 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from Flask-Babel>=1.0.0->visualdl->paddlenlp) (2.8.0)

Requirement already satisfied: pytz in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from Flask-Babel>=1.0.0->visualdl->paddlenlp) (2019.3)

Requirement already satisfied: multidict<7.0,>=4.5 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from aiohttp->datasets>=2.0.0->paddlenlp) (6.0.2)

Requirement already satisfied: yarl<2.0,>=1.0 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from aiohttp->datasets>=2.0.0->paddlenlp) (1.7.2)

Requirement already satisfied: asynctest==0.13.0 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from aiohttp->datasets>=2.0.0->paddlenlp) (0.13.0)

Requirement already satisfied: charset-normalizer<3.0,>=2.0 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from aiohttp->datasets>=2.0.0->paddlenlp) (2.1.1)

Requirement already satisfied: frozenlist>=1.1.1 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from aiohttp->datasets>=2.0.0->paddlenlp) (1.3.0)

Requirement already satisfied: aiosignal>=1.1.2 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from aiohttp->datasets>=2.0.0->paddlenlp) (1.2.0)

Requirement already satisfied: attrs>=17.3.0 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from aiohttp->datasets>=2.0.0->paddlenlp) (22.1.0)

Requirement already satisfied: async-timeout<5.0,>=4.0.0a3 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from aiohttp->datasets>=2.0.0->paddlenlp) (4.0.2)

Requirement already satisfied: pyparsing!=3.0.5,>=2.0.2 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from packaging->datasets>=2.0.0->paddlenlp) (3.0.9)

Requirement already satisfied: idna<3,>=2.5 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from requests>=2.19.0->datasets>=2.0.0->paddlenlp) (2.8)

Requirement already satisfied: urllib3!=1.25.0,!=1.25.1,<1.26,>=1.21.1 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from requests>=2.19.0->datasets>=2.0.0->paddlenlp) (1.25.11)

Requirement already satisfied: chardet<4,>=3.0.2 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from requests>=2.19.0->datasets>=2.0.0->paddlenlp) (3.0.4)

Requirement already satisfied: certifi>=2017.4.17 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from requests>=2.19.0->datasets>=2.0.0->paddlenlp) (2019.9.11)

Requirement already satisfied: scipy>=0.19.1 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from scikit-learn>=0.21.3->seqeval->paddlenlp) (1.6.3)

Requirement already satisfied: threadpoolctl>=2.0.0 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from scikit-learn>=0.21.3->seqeval->paddlenlp) (2.1.0)

Requirement already satisfied: joblib>=0.11 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from scikit-learn>=0.21.3->seqeval->paddlenlp) (0.14.1)

Requirement already satisfied: future>=0.6.0 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from bce-python-sdk->visualdl->paddlenlp) (0.18.0)

Requirement already satisfied: pycryptodome>=3.8.0 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from bce-python-sdk->visualdl->paddlenlp) (3.9.9)

Requirement already satisfied: zipp>=0.5 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from importlib-metadata->datasets>=2.0.0->paddlenlp) (3.8.1)

Requirement already satisfied: python-dateutil>=2.1 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from matplotlib->visualdl->paddlenlp) (2.8.2)

Requirement already satisfied: cycler>=0.10 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from matplotlib->visualdl->paddlenlp) (0.10.0)

Requirement already satisfied: kiwisolver>=1.0.1 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from matplotlib->visualdl->paddlenlp) (1.1.0)

Requirement already satisfied: sniffio>=1.1 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from anyio<5,>=3.4.0->starlette==0.22.0->fastapi->paddlenlp) (1.3.0)

Requirement already satisfied: MarkupSafe>=2.0.0rc2 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from Jinja2>=2.10.1->flask>=1.1.1->visualdl->paddlenlp) (2.0.1)

Requirement already satisfied: setuptools in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (from kiwisolver>=1.0.1->matplotlib->visualdl->paddlenlp) (56.2.0)

Installing collected packages: pydantic, h11, starlette, uvicorn, typer, fastapi, paddlenlp

Attempting uninstall: paddlenlp

Found existing installation: paddlenlp 2.4.2

Uninstalling paddlenlp-2.4.2:

Successfully uninstalled paddlenlp-2.4.2

Successfully installed fastapi-0.88.0 h11-0.14.0 paddlenlp-2.4.5 pydantic-1.10.2 starlette-0.22.0 typer-0.7.0 uvicorn-0.20.0

[notice] A new release of pip available: 22.1.2 -> 22.3.1

[notice] To update, run: pip install --upgrade pip

Note: you may need to restart the kernel to use updated packages.

第一个能力非常的惊艳,是一个代码生成模型。上来就来了一个超必杀。

pip install regex

Looking in indexes: https://pypi.tuna.tsinghua.edu.cn/simple

Collecting regex

Downloading https://pypi.tuna.tsinghua.edu.cn/packages/42/d8/8a7131e7d0bf237f7bcd3191541a4bf21863c253fe6bee0796900a1a9a29/regex-2022.10.31-cp37-cp37m-manylinux_2_17_x86_64.manylinux2014_x86_64.whl (757 kB)

l ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 0.0/757.1 kB ? eta -:--:--━━━━━━╺━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 122.9/757.1 kB 3.5 MB/s eta 0:00:01━━━━━━━━╸━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 174.1/757.1 kB 2.6 MB/s eta 0:00:01━━━━━━━━━━━━━━━╸━━━━━━━━━━━━━━━━━━━━━━━ 307.2/757.1 kB 2.9 MB/s eta 0:00:01━━━━━━━━━━━━━━━━━━━━╸━━━━━━━━━━━━━━━━━━ 399.4/757.1 kB 2.9 MB/s eta 0:00:01━━━━━━━━━━━━━━━━━━━━━━━━━━╸━━━━━━━━━━━━ 522.2/757.1 kB 3.0 MB/s eta 0:00:01━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━╸━━━━━━ 634.9/757.1 kB 3.0 MB/s eta 0:00:01━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━╸━━━ 696.3/757.1 kB 2.9 MB/s eta 0:00:01━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━╸ 747.5/757.1 kB 2.9 MB/s eta 0:00:01━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━╸ 747.5/757.1 kB 2.9 MB/s eta 0:00:01━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━╸ 747.5/757.1 kB 2.9 MB/s eta 0:00:01━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━╸ 747.5/757.1 kB 2.9 MB/s eta 0:00:01━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━╸ 747.5/757.1 kB 2.9 MB/s eta 0:00:01━━━━━━━━━━━━━━━━━━━━━━

[?25hInstalling collected packages: regex

Successfully installed regex-2022.10.31

[notice] A new release of pip available: 22.1.2 -> 22.3.1

[notice] To update, run: pip install --upgrade pip

Note: you may need to restart the kernel to use updated packages.

from paddlenlp import Taskflow

codegen = Taskflow("code_generation")

codegen("def hello_world():")

# '''

# ['\n print("Hello world")']

# '''

[2022-12-23 11:07:33,283] [ INFO] - Already cached /home/aistudio/.paddlenlp/models/Salesforce/codegen-350M-mono/vocab.json

[2022-12-23 11:07:33,286] [ INFO] - Already cached /home/aistudio/.paddlenlp/models/Salesforce/codegen-350M-mono/merges.txt

[2022-12-23 11:07:33,288] [ INFO] - Already cached /home/aistudio/.paddlenlp/models/Salesforce/codegen-350M-mono/added_tokens.json

[2022-12-23 11:07:33,291] [ INFO] - Already cached /home/aistudio/.paddlenlp/models/Salesforce/codegen-350M-mono/special_tokens_map.json

[2022-12-23 11:07:33,293] [ INFO] - Already cached /home/aistudio/.paddlenlp/models/Salesforce/codegen-350M-mono/tokenizer_config.json

[2022-12-23 11:07:33,373] [ INFO] - Adding to the vocabulary

[2022-12-23 11:07:33,375] [ INFO] - Adding to the vocabulary

[2022-12-23 11:07:33,377] [ INFO] - Adding to the vocabulary

[2022-12-23 11:07:33,379] [ INFO] - Adding to the vocabulary

[2022-12-23 11:07:33,381] [ INFO] - Adding to the vocabulary

[2022-12-23 11:07:33,383] [ INFO] - Adding to the vocabulary

[2022-12-23 11:07:33,385] [ INFO] - Adding to the vocabulary

[2022-12-23 11:07:33,387] [ INFO] - Adding to the vocabulary

[2022-12-23 11:07:33,389] [ INFO] - Adding to the vocabulary

[2022-12-23 11:07:33,391] [ INFO] - Adding to the vocabulary

[2022-12-23 11:07:33,393] [ INFO] - Adding to the vocabulary

[2022-12-23 11:07:33,394] [ INFO] - Adding to the vocabulary

[2022-12-23 11:07:33,398] [ INFO] - Adding to the vocabulary

[2022-12-23 11:07:33,400] [ INFO] - Adding to the vocabulary

[2022-12-23 11:07:33,402] [ INFO] - Adding to the vocabulary

[2022-12-23 11:07:33,404] [ INFO] - Adding to the vocabulary

[2022-12-23 11:07:33,406] [ INFO] - Adding to the vocabulary

[2022-12-23 11:07:33,408] [ INFO] - Adding to the vocabulary

[2022-12-23 11:07:33,410] [ INFO] - Adding to the vocabulary

[2022-12-23 11:07:33,412] [ INFO] - Adding to the vocabulary

[2022-12-23 11:07:33,414] [ INFO] - Adding to the vocabulary

[2022-12-23 11:07:33,416] [ INFO] - Adding to the vocabulary

[2022-12-23 11:07:33,418] [ INFO] - Adding to the vocabulary

[2022-12-23 11:07:33,419] [ INFO] - Adding to the vocabulary

[2022-12-23 11:07:33,421] [ INFO] - Adding to the vocabulary

[2022-12-23 11:07:33,423] [ INFO] - Adding to the vocabulary

[2022-12-23 11:07:33,425] [ INFO] - Adding to the vocabulary

[2022-12-23 11:07:33,427] [ INFO] - Adding to the vocabulary

[2022-12-23 11:07:33,430] [ INFO] - Adding to the vocabulary

[2022-12-23 11:07:33,432] [ INFO] - Adding to the vocabulary

[2022-12-23 11:07:33,434] [ INFO] - Adding to the vocabulary

[2022-12-23 11:07:33,436] [ INFO] - Adding to the vocabulary

[2022-12-23 11:07:33,439] [ INFO] - Adding to the vocabulary

[2022-12-23 11:07:33,441] [ INFO] - Adding to the vocabulary

[2022-12-23 11:07:33,443] [ INFO] - Adding to the vocabulary

[2022-12-23 11:07:33,445] [ INFO] - Adding to the vocabulary

[2022-12-23 11:07:33,447] [ INFO] - Adding to the vocabulary

[2022-12-23 11:07:33,450] [ INFO] - Adding to the vocabulary

[2022-12-23 11:07:33,452] [ INFO] - Already cached /home/aistudio/.paddlenlp/models/Salesforce/codegen-350M-mono/model_state.pdparams

[2022-12-23 11:07:33,454] [ INFO] - Already cached /home/aistudio/.paddlenlp/models/Salesforce/codegen-350M-mono/model_config.json

['\n """Prints out a greeting."""\n print("Hello World!")']

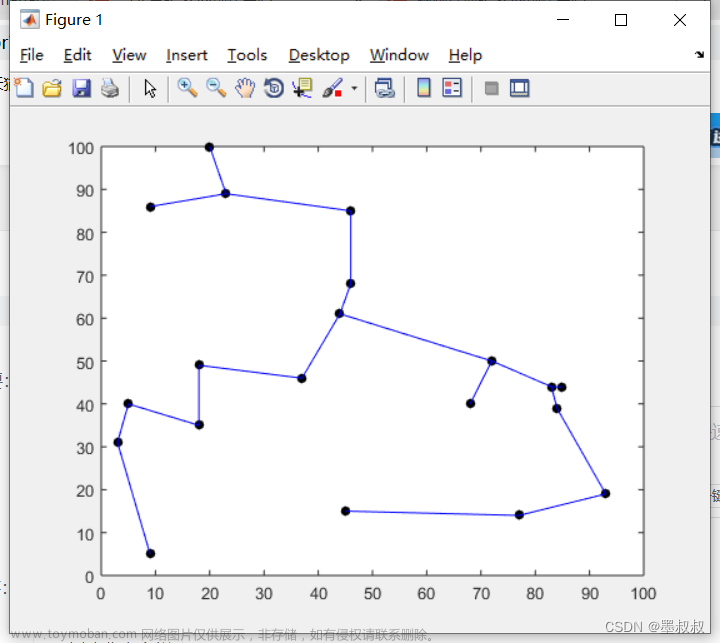

句法依存分析

需要安装LAC

pip install LAC --upgrade

Looking in indexes: https://pypi.tuna.tsinghua.edu.cn/simple

Collecting LAC

Downloading https://pypi.tuna.tsinghua.edu.cn/packages/c3/88/966e99c95cac93730a7f3cdf92a17e2a0e924bea61b9a86ae7995feaa4fe/LAC-2.1.2.tar.gz (64.8 MB)

l ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 0.0/64.8 MB ? eta -:--:--━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 0.2/64.8 MB 5.6 MB/s eta 0:00:12━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 0.3/64.8 MB 4.5 MB/s eta 0:00:15━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 0.5/64.8 MB 4.4 MB/s eta 0:00:15━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 0.6/64.8 MB 4.3 MB/s eta 0:00:15━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 0.7/64.8 MB 4.2 MB/s eta 0:00:16╸━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 0.9/64.8 MB 4.2 MB/s eta 0:00:16╸━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 1.0/64.8 MB 4.2 MB/s eta 0:00:16╸━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 1.1/64.8 MB 4.2 MB/s eta 0:00:16╸━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 1.3/64.8 MB 4.1 MB/s eta 0:00:16╸━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 1.4/64.8 MB 4.1 MB/s eta 0:00:16╸━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 1.5/64.8 MB 4.0 MB/s eta 0:00:16╸━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 1.6/64.8 MB 3.9 MB/s eta 0:00:17━╺━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 1.8/64

[?25h Preparing metadata (setup.py) ... [?25ldone

[?25hBuilding wheels for collected packages: LAC

Building wheel for LAC (setup.py) ... [?25ldone

[?25h Created wheel for LAC: filename=LAC-2.1.2-py2.py3-none-any.whl size=64814683 sha256=651514f67476935e9c56b3793243ab9d4549695b1941ecc3f5c9edb4aff5d1f0

Stored in directory: /home/aistudio/.cache/pip/wheels/7b/db/c0/9ca0a499e40c78935cd8afd72372cc4a85d986ba2265285d20

Successfully built LAC

Installing collected packages: LAC

Successfully installed LAC-2.1.2

[notice] A new release of pip available: 22.1.2 -> 22.3.1

[notice] To update, run: pip install --upgrade pip

Note: you may need to restart the kernel to use updated packages.

from paddlenlp import Taskflow

ddp = Taskflow("dependency_parsing")

ddp("三亚是一座美丽的城市")

'''

[{'word': ['三亚', '是', '一座', '美丽', '的', '城市'], 'head': [2, 0, 6, 6, 4, 2], 'deprel': ['SBV', 'HED', 'ATT', 'ATT', 'MT', 'VOB']}]

'''

ddp(["三亚是一座美丽的城市", "他送了一本书"])

'''

[{'word': ['三亚', '是', '一座', '美丽', '的', '城市'], 'head': [2, 0, 6, 6, 4, 2], 'deprel': ['SBV', 'HED', 'ATT', 'ATT', 'MT', 'VOB']}, {'word': ['他', '送', '了', '一本', '书'], 'head': [2, 0, 2, 5, 2], 'deprel': ['SBV', 'HED', 'MT', 'ATT', 'VOB']}]

'''

ddp = Taskflow("dependency_parsing", prob=True, use_pos=True)

ddp("三亚是一座美丽的城市")

'''

[{'word': ['三亚', '是', '一座', '美丽的城市'], 'head': [2, 0, 4, 2], 'deprel': ['SBV', 'HED', 'ATT', 'VOB'], 'postag': ['LOC', 'v', 'm', 'n'], 'prob': [1.0, 1.0, 1.0, 1.0]}]

'''

ddp = Taskflow("dependency_parsing", model="ddparser-ernie-1.0")

ddp("三亚是一座美丽的城市")

'''

[{'word': ['三亚', '是', '一座', '美丽', '的', '城市'], 'head': [2, 0, 6, 6, 4, 2], 'deprel': ['SBV', 'HED', 'ATT', 'ATT', 'MT', 'VOB']}]

'''

ddp = Taskflow("dependency_parsing", model="ddparser-ernie-gram-zh")

ddp("三亚是一座美丽的城市")

'''

[{'word': ['三亚', '是', '一座', '美丽', '的', '城市'], 'head': [2, 0, 6, 6, 4, 2], 'deprel': ['SBV', 'HED', 'ATT', 'ATT', 'MT', 'VOB']}]

'''

# 已分词输入

ddp = Taskflow("dependency_parsing", segmented=True)

ddp.from_segments([["三亚", "是", "一座", "美丽", "的", "城市"]])

'''

[{'word': ['三亚', '是', '一座', '美丽', '的', '城市'], 'head': [2, 0, 6, 6, 4, 2], 'deprel': ['SBV', 'HED', 'ATT', 'ATT', 'MT', 'VOB']}]

'''

ddp.from_segments([['三亚', '是', '一座', '美丽', '的', '城市'], ['他', '送', '了', '一本', '书']])

'''

[{'word': ['三亚', '是', '一座', '美丽', '的', '城市'], 'head': [2, 0, 6, 6, 4, 2], 'deprel': ['SBV', 'HED', 'ATT', 'ATT', 'MT', 'VOB']}, {'word': ['他', '送', '了', '一本', '书'], 'head': [2, 0, 2, 5, 2], 'deprel': ['SBV', 'HED', 'MT', 'ATT', 'VOB']}]

'''

W1223 11:15:20.603538 215 analysis_predictor.cc:2160] Deprecated. Please use CreatePredictor instead.

[2022-12-23 11:15:20,775] [ INFO] - Converting to the inference model cost a little time.

[2022-12-23 11:15:22,356] [ INFO] - The inference model save in the path:/home/aistudio/.paddlenlp/taskflow/dependency_parsing/ddparser/static/inference

[2022-12-23 11:15:23,832] [ INFO] - Downloading model_state.pdparams from https://bj.bcebos.com/paddlenlp/taskflow/dependency_parsing/ddparser-ernie-1.0/model_state.pdparams

100%|██████████| 386M/386M [00:15<00:00, 26.5MB/s]

[2022-12-23 11:15:40,157] [ INFO] - Downloading word_vocab.json from https://bj.bcebos.com/paddlenlp/taskflow/dependency_parsing/ddparser-ernie-1.0/word_vocab.json

100%|██████████| 612k/612k [00:00<00:00, 2.14MB/s]

[2022-12-23 11:15:40,588] [ INFO] - Downloading rel_vocab.json from https://bj.bcebos.com/paddlenlp/taskflow/dependency_parsing/ddparser-ernie-1.0/rel_vocab.json

100%|██████████| 526/526 [00:00<00:00, 374kB/s]

[2022-12-23 11:15:40,708] [ INFO] - Downloading SourceHanSansCN-Regular.ttf from https://bj.bcebos.com/paddlenlp/taskflow/dependency_parsing/SourceHanSansCN-Regular.ttf

100%|██████████| 8.28M/8.28M [00:00<00:00, 15.2MB/s]

[2022-12-23 11:15:41,430] [ INFO] - We are using <class 'paddlenlp.transformers.ernie.modeling.ErnieModel'> to load 'ernie-1.0'.

[2022-12-23 11:15:41,433] [ INFO] - Downloading https://bj.bcebos.com/paddlenlp/models/transformers/ernie/ernie_v1_chn_base.pdparams and saved to /home/aistudio/.paddlenlp/models/ernie-1.0

[2022-12-23 11:15:41,435] [ INFO] - Downloading ernie_v1_chn_base.pdparams from https://bj.bcebos.com/paddlenlp/models/transformers/ernie/ernie_v1_chn_base.pdparams

100%|██████████| 383M/383M [00:18<00:00, 21.2MB/s]

[2022-12-23 11:16:01,458] [ INFO] - Weights from pretrained model not used in ErnieModel: ['cls.predictions.layer_norm.weight', 'cls.predictions.decoder_bias', 'cls.predictions.transform.bias', 'cls.predictions.transform.weight', 'cls.predictions.layer_norm.bias']

[2022-12-23 11:16:02,480] [ INFO] - Converting to the inference model cost a little time.

[2022-12-23 11:16:16,645] [ INFO] - The inference model save in the path:/home/aistudio/.paddlenlp/taskflow/dependency_parsing/ddparser-ernie-1.0/static/inference

[2022-12-23 11:16:19,060] [ INFO] - Downloading model_state.pdparams from https://bj.bcebos.com/paddlenlp/taskflow/dependency_parsing/ddparser-ernie-gram-zh/model_state.pdparams

100%|██████████| 386M/386M [00:14<00:00, 27.1MB/s]

[2022-12-23 11:16:35,036] [ INFO] - Downloading word_vocab.json from https://bj.bcebos.com/paddlenlp/taskflow/dependency_parsing/ddparser-ernie-gram-zh/word_vocab.json

100%|██████████| 590k/590k [00:00<00:00, 5.34MB/s]

[2022-12-23 11:16:35,329] [ INFO] - Downloading rel_vocab.json from https://bj.bcebos.com/paddlenlp/taskflow/dependency_parsing/ddparser-ernie-gram-zh/rel_vocab.json

100%|██████████| 526/526 [00:00<00:00, 522kB/s]

[2022-12-23 11:16:35,439] [ INFO] - Downloading SourceHanSansCN-Regular.ttf from https://bj.bcebos.com/paddlenlp/taskflow/dependency_parsing/SourceHanSansCN-Regular.ttf

100%|██████████| 8.28M/8.28M [00:00<00:00, 20.0MB/s]

[2022-12-23 11:16:36,016] [ INFO] - We are using <class 'paddlenlp.transformers.ernie_gram.modeling.ErnieGramModel'> to load 'ernie-gram-zh'.

[2022-12-23 11:16:36,019] [ INFO] - Downloading https://bj.bcebos.com/paddlenlp/models/transformers/ernie_gram_zh/ernie_gram_zh.pdparams and saved to /home/aistudio/.paddlenlp/models/ernie-gram-zh

[2022-12-23 11:16:36,021] [ INFO] - Downloading ernie_gram_zh.pdparams from https://bj.bcebos.com/paddlenlp/models/transformers/ernie_gram_zh/ernie_gram_zh.pdparams

100%|██████████| 570M/570M [00:17<00:00, 34.8MB/s]

[2022-12-23 11:16:57,735] [ INFO] - Converting to the inference model cost a little time.

[2022-12-23 11:17:09,064] [ INFO] - The inference model save in the path:/home/aistudio/.paddlenlp/taskflow/dependency_parsing/ddparser-ernie-gram-zh/static/inference

"\n[{'word': ['三亚', '是', '一座', '美丽', '的', '城市'], 'head': [2, 0, 6, 6, 4, 2], 'deprel': ['SBV', 'HED', 'ATT', 'ATT', 'MT', 'VOB']}, {'word': ['他', '送', '了', '一本', '书'], 'head': [2, 0, 2, 5, 2], 'deprel': ['SBV', 'HED', 'MT', 'ATT', 'VOB']}]\n"

taskflow中基于plato-mini的

from paddlenlp import Taskflow

# 非交互模式

dialogue = Taskflow("dialogue")

dialogue(["吃饭了吗"])

'''

['刚吃完饭,你在干什么呢?']

'''

dialogue(["你好", "吃饭了吗"], ["你是谁?"])

'''

['吃过了,你呢', '我是李明啊']

'''

dialogue = Taskflow("dialogue")

# 进入交互模式 (输入exit退出)

dialogue.interactive_mode(max_turn=3)

[2022-12-23 11:36:39,441] [ INFO] - Downloading model_state.pdparams from https://bj.bcebos.com/paddlenlp/taskflow/dialogue/plato-mini/model_state.pdparams

100%|██████████| 518M/518M [00:12<00:00, 42.3MB/s]

[2022-12-23 11:36:53,635] [ INFO] - Downloading model_config.json from https://bj.bcebos.com/paddlenlp/taskflow/dialogue/plato-mini/model_config.json

100%|██████████| 518/518 [00:00<00:00, 296kB/s]

[2022-12-23 11:36:53,726] [ INFO] - Downloading https://bj.bcebos.com/paddlenlp/models/transformers/unified_transformer/plato-mini-vocab.txt and saved to /home/aistudio/.paddlenlp/models/plato-mini

[2022-12-23 11:36:53,729] [ INFO] - Downloading plato-mini-vocab.txt from https://bj.bcebos.com/paddlenlp/models/transformers/unified_transformer/plato-mini-vocab.txt

100%|██████████| 410k/410k [00:00<00:00, 3.24MB/s]

[2022-12-23 11:36:53,952] [ INFO] - Downloading https://bj.bcebos.com/paddlenlp/models/transformers/unified_transformer/plato-mini-spm.model and saved to /home/aistudio/.paddlenlp/models/plato-mini

[2022-12-23 11:36:53,955] [ INFO] - Downloading plato-mini-spm.model from https://bj.bcebos.com/paddlenlp/models/transformers/unified_transformer/plato-mini-spm.model

100%|██████████| 711k/711k [00:00<00:00, 6.92MB/s]

[2022-12-23 11:36:54,234] [ INFO] - tokenizer config file saved in /home/aistudio/.paddlenlp/models/plato-mini/tokenizer_config.json

[2022-12-23 11:36:54,237] [ INFO] - Special tokens file saved in /home/aistudio/.paddlenlp/models/plato-mini/special_tokens_map.json

Building prefix dict from the default dictionary ...

Dumping model to file cache /tmp/jieba.cache

Loading model cost 0.791 seconds.

Prefix dict has been built successfully.

[2022-12-23 11:37:03,058] [ INFO] - Already cached /home/aistudio/.paddlenlp/models/plato-mini/plato-mini-vocab.txt

[2022-12-23 11:37:03,060] [ INFO] - Already cached /home/aistudio/.paddlenlp/models/plato-mini/plato-mini-spm.model

[2022-12-23 11:37:03,119] [ INFO] - tokenizer config file saved in /home/aistudio/.paddlenlp/models/plato-mini/tokenizer_config.json

[2022-12-23 11:37:03,122] [ INFO] - Special tokens file saved in /home/aistudio/.paddlenlp/models/plato-mini/special_tokens_map.json

[Human]: 对话实现

[Bot]:你是个很有爱心的人,我很高兴认识你

[Human]: 你是一个什么样的人

[Bot]:我是一个很有爱心的人,你有什么事情吗?

[Human]: 你哪里有爱心了

[Bot]:这不,我说的是你的事情,我只是一个很有爱心的人

[Human]: exit

---------------------------------------------------------------------------

NameError Traceback (most recent call last)

/tmp/ipykernel_215/731744024.py in <module>

14 dialogue = Taskflow("dialogue")

15 # 进入交互模式 (输入exit退出)

---> 16 dialogue.interactive_mode(max_turn=3)

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddlenlp/taskflow/taskflow.py in interactive_mode(self, max_turn)

610 human = input("[Human]:").strip()

611 if human.lower() == "exit":

--> 612 exit()

613 robot = self.task_instance(human)[0]

614 print("[Bot]:%s" % robot)

NameError: name 'exit' is not defined

taskflow实现的基于uie模型的信息抽取

from paddlenlp import Taskflow

# 实体抽取 Entity Extraction

schema = ['时间', '选手', '赛事名称'] # Define the schema for entity extraction

ie = Taskflow('information_extraction', schema=schema)

ie("2月8日上午北京冬奥会自由式滑雪女子大跳台决赛中中国选手谷爱凌以188.25分获得金牌!")

'''

[{'时间': [{'text': '2月8日上午', 'start': 0, 'end': 6, 'probability': 0.9857378532924486}], '选手': [{'text': '谷爱凌', 'start': 28, 'end': 31, 'probability': 0.8981548639781138}], '赛事名称': [{'text': '北京冬奥会自由式滑雪女子大跳台决赛', 'start': 6, 'end': 23, 'probability': 0.8503089953268272}]}]

'''

# 关系抽取 Relation Extraction

schema = [{"歌曲名称":["歌手", "所属专辑"]}] # Define the schema for relation extraction

ie.set_schema(schema) # Reset schema

ie("《告别了》是孙耀威在专辑爱的故事里面的歌曲")

'''

[{'歌曲名称': [{'text': '告别了', 'start': 1, 'end': 4, 'probability': 0.6296155977145546, 'relations': {'歌手': [{'text': '孙耀威', 'start': 6, 'end': 9, 'probability': 0.9988381005599081}], '所属专辑': [{'text': '爱的故事', 'start': 12, 'end': 16, 'probability': 0.9968462078543183}]}}, {'text': '爱的故事', 'start': 12, 'end': 16, 'probability': 0.2816869478191606, 'relations': {'歌手': [{'text': '孙耀威', 'start': 6, 'end': 9, 'probability': 0.9951415104192272}]}}]}]

'''

# 事件抽取 Event Extraction

schema = [{'地震触发词': ['地震强度', '时间', '震中位置', '震源深度']}] # Define the schema for event extraction

ie.set_schema(schema) # Reset schema

ie('中国地震台网正式测定:5月16日06时08分在云南临沧市凤庆县(北纬24.34度,东经99.98度)发生3.5级地震,震源深度10千米。')

'''

[{'地震触发词': [{'text': '地震', 'start': 56, 'end': 58, 'probability': 0.9977425555988333, 'relations': {'地震强度': [{'text': '3.5级', 'start': 52, 'end': 56, 'probability': 0.998080217831891}], '时间': [{'text': '5月16日06时08分', 'start': 11, 'end': 22, 'probability': 0.9853299772936026}], '震中位置': [{'text': '云南临沧市凤庆县(北纬24.34度,东经99.98度)', 'start': 23, 'end': 50, 'probability': 0.7874012889740385}], '震源深度': [{'text': '10千米', 'start': 63, 'end': 67, 'probability': 0.9937974422968665}]}}]}]

'''

# 观点提取Opinion Extraction

schema = [{'评价维度': ['观点词', '情感倾向[正向,负向]']}] # Define the schema for opinion extraction

ie.set_schema(schema) # Reset schema

ie("地址不错,服务一般,设施陈旧")

'''

[{'评价维度': [{'text': '地址', 'start': 0, 'end': 2, 'probability': 0.9888139270606509, 'relations': {'观点词': [{'text': '不错', 'start': 2, 'end': 4, 'probability': 0.9927847072459528}], '情感倾向[正向,负向]': [{'text': '正向', 'probability': 0.998228967796706}]}}, {'text': '设施', 'start': 10, 'end': 12, 'probability': 0.9588297379365116, 'relations': {'观点词': [{'text': '陈旧', 'start': 12, 'end': 14, 'probability': 0.9286753967902683}], '情感倾向[正向,负向]': [{'text': '负向', 'probability': 0.9949389795770394}]}}, {'text': '服务', 'start': 5, 'end': 7, 'probability': 0.9592857070501211, 'relations': {'观点词': [{'text': '一般', 'start': 7, 'end': 9, 'probability': 0.9949359182521675}], '情感倾向[正向,负向]': [{'text': '负向', 'probability': 0.9952498258302498}]}}]}]

'''

#句子级别文本分类 Sentence-level Sentiment Classification

schema = ['情感倾向[正向,负向]'] # Define the schema for sentence-level sentiment classification

ie.set_schema(schema) # Reset schema

ie('这个产品用起来真的很流畅,我非常喜欢')

'''

[{'情感倾向[正向,负向]': [{'text': '正向', 'probability': 0.9990024058203417}]}]

'''

# 英文模型 English Model

schema = [{'Person': ['Company', 'Position']}]

ie_en = Taskflow('information_extraction', schema=schema, model='uie-base-en')

ie_en('In 1997, Steve was excited to become the CEO of Apple.')

'''

[{'Person': [{'text': 'Steve', 'start': 9, 'end': 14, 'probability': 0.999631971804547, 'relations': {'Company': [{'text': 'Apple', 'start': 48, 'end': 53, 'probability': 0.9960158209451642}], 'Position': [{'text': 'CEO', 'start': 41, 'end': 44, 'probability': 0.8871063806420736}]}}]}]

'''

schema = ['Sentiment classification [negative, positive]']

ie_en.set_schema(schema)

ie_en('I am sorry but this is the worst film I have ever seen in my life.')

'''

[{'Sentiment classification [negative, positive]': [{'text': 'negative', 'probability': 0.9998415771287057}]}]

'''

schema = [{'Comment object': ['Opinion', 'Sentiment classification [negative, positive]']}]

ie_en.set_schema(schema)

ie_en("overall i 'm happy with my toy.")

[2022-12-23 11:46:16,047] [ INFO] - Downloading model_state.pdparams from https://bj.bcebos.com/paddlenlp/taskflow/information_extraction/uie_base_v1.0/model_state.pdparams

100%|██████████| 450M/450M [00:08<00:00, 54.8MB/s]

[2022-12-23 11:46:25,850] [ INFO] - Downloading model_config.json from https://bj.bcebos.com/paddlenlp/taskflow/information_extraction/uie_base/model_config.json

100%|██████████| 377/377 [00:00<00:00, 238kB/s]

[2022-12-23 11:46:25,917] [ INFO] - Downloading vocab.txt from https://bj.bcebos.com/paddlenlp/taskflow/information_extraction/uie_base/vocab.txt

100%|██████████| 182k/182k [00:00<00:00, 2.11MB/s]

[2022-12-23 11:46:26,088] [ INFO] - Downloading special_tokens_map.json from https://bj.bcebos.com/paddlenlp/taskflow/information_extraction/uie_base/special_tokens_map.json

100%|██████████| 112/112 [00:00<00:00, 93.7kB/s]

[2022-12-23 11:46:26,162] [ INFO] - Downloading tokenizer_config.json from https://bj.bcebos.com/paddlenlp/taskflow/information_extraction/uie_base/tokenizer_config.json

100%|██████████| 172/172 [00:00<00:00, 115kB/s]

[2022-12-23 11:46:27,262] [ INFO] - Converting to the inference model cost a little time.

[2022-12-23 11:46:38,138] [ INFO] - The inference model save in the path:/home/aistudio/.paddlenlp/taskflow/information_extraction/uie-base/static/inference

[2022-12-23 11:46:40,277] [ INFO] - We are using <class 'paddlenlp.transformers.ernie.tokenizer.ErnieTokenizer'> to load '/home/aistudio/.paddlenlp/taskflow/information_extraction/uie-base'.

[2022-12-23 11:46:40,749] [ INFO] - Downloading model_state.pdparams from https://bj.bcebos.com/paddlenlp/taskflow/information_extraction/uie_base_en_v1.1/model_state.pdparams

100%|██████████| 418M/418M [00:11<00:00, 37.7MB/s]

[2022-12-23 11:46:53,499] [ INFO] - Downloading model_config.json from https://bj.bcebos.com/paddlenlp/taskflow/information_extraction/uie_base_en/model_config.json

100%|██████████| 347/347 [00:00<00:00, 256kB/s]

[2022-12-23 11:46:53,599] [ INFO] - Downloading vocab.txt from https://bj.bcebos.com/paddlenlp/taskflow/information_extraction/uie_base_en/vocab.txt

100%|██████████| 226k/226k [00:00<00:00, 2.52MB/s]

[2022-12-23 11:46:53,890] [ INFO] - Downloading special_tokens_map.json from https://bj.bcebos.com/paddlenlp/taskflow/information_extraction/uie_base_en/special_tokens_map.json

100%|██████████| 112/112 [00:00<00:00, 96.5kB/s]

[2022-12-23 11:46:53,972] [ INFO] - Downloading tokenizer_config.json from https://bj.bcebos.com/paddlenlp/taskflow/information_extraction/uie_base_en/tokenizer_config.json

100%|██████████| 172/172 [00:00<00:00, 151kB/s]

[2022-12-23 11:46:54,977] [ INFO] - Converting to the inference model cost a little time.

[2022-12-23 11:47:05,378] [ INFO] - The inference model save in the path:/home/aistudio/.paddlenlp/taskflow/information_extraction/uie-base-en/static/inference

[2022-12-23 11:47:07,383] [ INFO] - We are using <class 'paddlenlp.transformers.ernie.tokenizer.ErnieTokenizer'> to load '/home/aistudio/.paddlenlp/taskflow/information_extraction/uie-base-en'.

[{}]

基于WordTag的知识标注

from paddlenlp import Taskflow

# 默认使用WordTag词类知识标注工具

wordtag = Taskflow("knowledge_mining", model="wordtag")

wordtag("《孤女》是2010年九州出版社出版的小说,作者是余兼羽")

'''

[{'text': '《孤女》是2010年九州出版社出版的小说,作者是余兼羽', 'items': [{'item': '《', 'offset': 0, 'wordtag_label': 'w', 'length': 1}, {'item': '孤女', 'offset': 1, 'wordtag_label': '作品类_实体', 'length': 2}, {'item': '》', 'offset': 3, 'wordtag_label': 'w', 'length': 1}, {'item': '是', 'offset': 4, 'wordtag_label': '肯定词', 'length': 1, 'termid': '肯定否定词_cb_是'}, {'item': '2010年', 'offset': 5, 'wordtag_label': '时间类', 'length': 5, 'termid': '时间阶段_cb_2010年'}, {'item': '九州出版社', 'offset': 10, 'wordtag_label': '组织机构类', 'length': 5, 'termid': '组织机构_eb_九州出版社'}, {'item': '出版', 'offset': 15, 'wordtag_label': '场景事件', 'length': 2, 'termid': '场景事件_cb_出版'}, {'item': '的', 'offset': 17, 'wordtag_label': '助词', 'length': 1, 'termid': '助词_cb_的'}, {'item': '小说', 'offset': 18, 'wordtag_label': '作品类_概念', 'length': 2, 'termid': '小说_cb_小说'}, {'item': ',', 'offset': 20, 'wordtag_label': 'w', 'length': 1}, {'item': '作者', 'offset': 21, 'wordtag_label': '人物类_概念', 'length': 2, 'termid': '人物_cb_作者'}, {'item': '是', 'offset': 23, 'wordtag_label': '肯定词', 'length': 1, 'termid': '肯定否定词_cb_是'}, {'item': '余兼羽', 'offset': 24, 'wordtag_label': '人物类_实体', 'length': 3}]}]

'''

wordtag= Taskflow("knowledge_mining", batch_size=2)

wordtag(["热梅茶是一道以梅子为主要原料制作的茶饮",

"《孤女》是2010年九州出版社出版的小说,作者是余兼羽"])

'''

[{'text': '热梅茶是一道以梅子为主要原料制作的茶饮', 'items': [{'item': '热梅茶', 'offset': 0, 'wordtag_label': '饮食类_饮品', 'length': 3}, {'item': '是', 'offset': 3, 'wordtag_label': '肯定词', 'length': 1, 'termid': '肯定否定词_cb_是'}, {'item': '一道', 'offset': 4, 'wordtag_label': '数量词', 'length': 2}, {'item': '以', 'offset': 6, 'wordtag_label': '介词', 'length': 1, 'termid': '介词_cb_以'}, {'item': '梅子', 'offset': 7, 'wordtag_label': '饮食类', 'length': 2, 'termid': '饮食_cb_梅'}, {'item': '为', 'offset': 9, 'wordtag_label': '肯定词', 'length': 1, 'termid': '肯定否定词_cb_为'}, {'item': '主要原料', 'offset': 10, 'wordtag_label': '物体类', 'length': 4, 'termid': '物品_cb_主要原料'}, {'item': '制作', 'offset': 14, 'wordtag_label': '场景事件', 'length': 2, 'termid': '场景事件_cb_制作'}, {'item': '的', 'offset': 16, 'wordtag_label': '助词', 'length': 1, 'termid': '助词_cb_的'}, {'item': '茶饮', 'offset': 17, 'wordtag_label': '饮食类_饮品', 'length': 2, 'termid': '饮品_cb_茶饮'}]}, {'text': '《孤女》是2010年九州出版社出版的小说,作者是余兼羽', 'items': [{'item': '《', 'offset': 0, 'wordtag_label': 'w', 'length': 1}, {'item': '孤女', 'offset': 1, 'wordtag_label': '作品类_实体', 'length': 2}, {'item': '》', 'offset': 3, 'wordtag_label': 'w', 'length': 1}, {'item': '是', 'offset': 4, 'wordtag_label': '肯定词', 'length': 1, 'termid': '肯定否定词_cb_是'}, {'item': '2010年', 'offset': 5, 'wordtag_label': '时间类', 'length': 5, 'termid': '时间阶段_cb_2010年'}, {'item': '九州出版社', 'offset': 10, 'wordtag_label': '组织机构类', 'length': 5, 'termid': '组织机构_eb_九州出版社'}, {'item': '出版', 'offset': 15, 'wordtag_label': '场景事件', 'length': 2, 'termid': '场景事件_cb_出版'}, {'item': '的', 'offset': 17, 'wordtag_label': '助词', 'length': 1, 'termid': '助词_cb_的'}, {'item': '小说', 'offset': 18, 'wordtag_label': '作品类_概念', 'length': 2, 'termid': '小说_cb_小说'}, {'item': ',', 'offset': 20, 'wordtag_label': 'w', 'length': 1}, {'item': '作者', 'offset': 21, 'wordtag_label': '人物类_概念', 'length': 2, 'termid': '人物_cb_作者'}, {'item': '是', 'offset': 23, 'wordtag_label': '肯定词', 'length': 1, 'termid': '肯定否定词_cb_是'}, {'item': '余兼羽', 'offset': 24, 'wordtag_label': '人物类_实体', 'length': 3}]}]

'''

# 使用WordTag-IE进行信息抽取

wordtag = Taskflow("knowledge_mining", model="wordtag", with_ie=True)

'''

[[{'text': '《忘了所有》是一首由王杰作词、作曲并演唱的歌曲,收录在专辑同名《忘了所有》中,由波丽佳音唱片于1996年08月31日发行。', 'items': [{'item': '《', 'offset': 0, 'wordtag_label': 'w', 'length': 1}, {'item': '忘了所有', 'offset': 1, 'wordtag_label': '作品类_实体', 'length': 4}, {'item': '》', 'offset': 5, 'wordtag_label': 'w', 'length': 1}, {'item': '是', 'offset': 6, 'wordtag_label': '肯定词', 'length': 1}, {'item': '一首', 'offset': 7, 'wordtag_label': '数量词_单位数量词', 'length': 2}, {'item': '由', 'offset': 9, 'wordtag_label': '介词', 'length': 1}, {'item': '王杰', 'offset': 10, 'wordtag_label': '人物类_实体', 'length': 2}, {'item': '作词', 'offset': 12, 'wordtag_label': '场景事件', 'length': 2}, {'item': '、', 'offset': 14, 'wordtag_label': 'w', 'length': 1}, {'item': '作曲', 'offset': 15, 'wordtag_label': '场景事件', 'length': 2}, {'item': '并', 'offset': 17, 'wordtag_label': '连词', 'length': 1}, {'item': '演唱', 'offset': 18, 'wordtag_label': '场景事件', 'length': 2}, {'item': '的', 'offset': 20, 'wordtag_label': '助词', 'length': 1}, {'item': '歌曲', 'offset': 21, 'wordtag_label': '作品类_概念', 'length': 2}, {'item': ',', 'offset': 23, 'wordtag_label': 'w', 'length': 1}, {'item': '收录', 'offset': 24, 'wordtag_label': '场景事件', 'length': 2}, {'item': '在', 'offset': 26, 'wordtag_label': '介词', 'length': 1}, {'item': '专辑', 'offset': 27, 'wordtag_label': '作品类_概念', 'length': 2}, {'item': '同名', 'offset': 29, 'wordtag_label': '场景事件', 'length': 2}, {'item': '《', 'offset': 31, 'wordtag_label': 'w', 'length': 1}, {'item': '忘了所有', 'offset': 32, 'wordtag_label': '作品类_实体', 'length': 4}, {'item': '》', 'offset': 36, 'wordtag_label': 'w', 'length': 1}, {'item': '中', 'offset': 37, 'wordtag_label': '词汇用语', 'length': 1}, {'item': ',', 'offset': 38, 'wordtag_label': 'w', 'length': 1}, {'item': '由', 'offset': 39, 'wordtag_label': '介词', 'length': 1}, {'item': '波丽佳音', 'offset': 40, 'wordtag_label': '人物类_实体', 'length': 4}, {'item': '唱片', 'offset': 44, 'wordtag_label': '作品类_概念', 'length': 2}, {'item': '于', 'offset': 46, 'wordtag_label': '介词', 'length': 1}, {'item': '1996年08月31日', 'offset': 47, 'wordtag_label': '时间类_具体时间', 'length': 11}, {'item': '发行', 'offset': 58, 'wordtag_label': '场景事件', 'length': 2}, {'item': '。', 'offset': 60, 'wordtag_label': 'w', 'length': 1}]}], [[{'HEAD_ROLE': {'item': '王杰', 'offset': 10, 'type': '人物类_实体'}, 'TAIL_ROLE': [{'item': '忘了所有', 'type': '作品类_实体', 'offset': 1}], 'GROUP': '创作', 'TRIG': [{'item': '作词', 'offset': 12}, {'item': '作曲', 'offset': 15}, {'item': '演唱', 'offset': 18}], 'SRC': 'REVERSE'}, {'HEAD_ROLE': {'item': '忘了所有', 'type': '作品类_实体', 'offset': 1}, 'TAIL_ROLE': [{'item': '王杰', 'offset': 10, 'type': '人物类_实体'}], 'GROUP': '创作者', 'SRC': 'HTG', 'TRIG': [{'item': '作词', 'offset': 12}, {'item': '作曲', 'offset': 15}, {'item': '演唱', 'offset': 18}]}, {'HEAD_ROLE': {'item': '忘了所有', 'type': '作品类_实体', 'offset': 1}, 'TAIL_ROLE': [{'item': '歌曲', 'offset': 21, 'type': '作品类_概念'}], 'GROUP': '类型', 'SRC': 'TAIL'}, {'HEAD_ROLE': {'item': '忘了所有', 'offset': 32, 'type': '作品类_实体'}, 'TAIL_ROLE': [{'item': '忘了所有', 'type': '作品类_实体', 'offset': 1}], 'GROUP': '收录', 'TRIG': [{'item': '收录', 'offset': 24}], 'SRC': 'REVERSE'}, {'HEAD_ROLE': {'item': '忘了所有', 'type': '作品类_实体', 'offset': 1}, 'TAIL_ROLE': [{'item': '忘了所有', 'offset': 32, 'type': '作品类_实体'}], 'GROUP': '收录于', 'SRC': 'HGT', 'TRIG': [{'item': '收录', 'offset': 24}]}, {'HEAD_ROLE': {'item': '忘了所有', 'offset': 32, 'type': '作品类_实体'}, 'TAIL_ROLE': [{'item': '王杰', 'type': '人物类_实体', 'offset': 10}], 'GROUP': '创作者', 'TRIG': [{'item': '专辑', 'offset': 27}], 'SRC': 'REVERSE'}, {'HEAD_ROLE': {'item': '王杰', 'type': '人物类_实体', 'offset': 10}, 'TAIL_ROLE': [{'item': '忘了所有', 'offset': 32, 'type': '作品类_实体'}], 'GROUP': '创作', 'SRC': 'HGT', 'TRIG': [{'item': '专辑', 'offset': 27}]}, {'HEAD_ROLE': {'item': '忘了所有', 'type': '作品类_实体', 'offset': 32}, 'TAIL_ROLE': [{'item': '唱片', 'offset': 44, 'type': '作品类_概念'}], 'GROUP': '类型', 'SRC': 'TAIL'}]]]

'''

# 切换为NPTag名词短语标注工具

nptag = Taskflow("knowledge_mining", model="nptag")

nptag("糖醋排骨")

'''

[{'text': '糖醋排骨', 'label': '菜品'}]

'''

nptag(["糖醋排骨", "红曲霉菌"])

'''

[{'text': '糖醋排骨', 'label': '菜品'}, {'text': '红曲霉菌', 'label': '微生物'}]

'''

# 输出粗粒度类别标签`category`,即WordTag的词汇标签。

nptag = Taskflow("knowledge_mining", model="nptag", linking=True)

nptag(["糖醋排骨", "红曲霉菌"])

'''

[{'text': '糖醋排骨', 'label': '菜品', 'category': '饮食类_菜品'}, {'text': '红曲霉菌', 'label': '微生物', 'category': '生物类_微生物'}]

'''

[2022-12-23 11:53:56,452] [ INFO] - Downloading model_state.pdparams from https://bj.bcebos.com/paddlenlp/taskflow/knowledge_mining/wordtag_v1.3/model_state.pdparams

100%|██████████| 367M/367M [00:06<00:00, 59.1MB/s]

[2022-12-23 11:54:03,922] [ INFO] - Downloading model_config.json from https://bj.bcebos.com/paddlenlp/taskflow/knowledge_mining/wordtag_v1.1/model_config.json

100%|██████████| 482/482 [00:00<00:00, 334kB/s]

[2022-12-23 11:54:04,019] [ INFO] - Downloading termtree_type.csv from https://bj.bcebos.com/paddlenlp/taskflow/knowledge_mining/wordtag/termtree_type.csv

100%|██████████| 9.03k/9.03k [00:00<00:00, 7.59MB/s]

[2022-12-23 11:54:04,113] [ INFO] - Downloading termtree_data from https://bj.bcebos.com/paddlenlp/taskflow/knowledge_mining/wordtag/termtree_data

100%|██████████| 354M/354M [00:07<00:00, 51.9MB/s]

[2022-12-23 11:54:12,229] [ INFO] - Downloading tags.txt from https://bj.bcebos.com/paddlenlp/taskflow/knowledge_mining/wordtag_v1.1/tags.txt

100%|██████████| 7.58k/7.58k [00:00<00:00, 5.64MB/s]

[2022-12-23 11:54:12,304] [ INFO] - Downloading spo_config.pkl from https://bj.bcebos.com/paddlenlp/taskflow/knowledge_mining/wordtag_v1.1/spo_config.pkl

100%|██████████| 1.62M/1.62M [00:00<00:00, 5.83MB/s]

[2022-12-23 11:54:54,760] [ INFO] - Downloading https://bj.bcebos.com/paddlenlp/models/transformers/ernie_ctm/vocab.txt and saved to /home/aistudio/.paddlenlp/models/wordtag

[2022-12-23 11:54:54,764] [ INFO] - Downloading vocab.txt from https://bj.bcebos.com/paddlenlp/models/transformers/ernie_ctm/vocab.txt

100%|██████████| 89.6k/89.6k [00:00<00:00, 3.66MB/s]

[2022-12-23 11:54:54,889] [ INFO] - tokenizer config file saved in /home/aistudio/.paddlenlp/models/wordtag/tokenizer_config.json

[2022-12-23 11:54:54,892] [ INFO] - Special tokens file saved in /home/aistudio/.paddlenlp/models/wordtag/special_tokens_map.json

[2022-12-23 11:54:56,057] [ INFO] - Converting to the inference model cost a little time.

[2022-12-23 11:55:06,051] [ INFO] - The inference model save in the path:/home/aistudio/.paddlenlp/taskflow/wordtag/static/inference

[2022-12-23 11:55:53,046] [ INFO] - Already cached /home/aistudio/.paddlenlp/models/wordtag/vocab.txt

[2022-12-23 11:55:53,070] [ INFO] - tokenizer config file saved in /home/aistudio/.paddlenlp/models/wordtag/tokenizer_config.json

[2022-12-23 11:55:53,073] [ INFO] - Special tokens file saved in /home/aistudio/.paddlenlp/models/wordtag/special_tokens_map.json

[2022-12-23 11:56:34,541] [ INFO] - Already cached /home/aistudio/.paddlenlp/models/wordtag/vocab.txt

[2022-12-23 11:56:34,564] [ INFO] - tokenizer config file saved in /home/aistudio/.paddlenlp/models/wordtag/tokenizer_config.json

[2022-12-23 11:56:34,566] [ INFO] - Special tokens file saved in /home/aistudio/.paddlenlp/models/wordtag/special_tokens_map.json

[2022-12-23 11:56:38,055] [ INFO] - Downloading model_state.pdparams from https://bj.bcebos.com/paddlenlp/taskflow/knowledge_mining/nptag/model_state.pdparams

100%|██████████| 377M/377M [00:19<00:00, 19.9MB/s]

[2022-12-23 11:56:59,048] [ INFO] - Downloading model_config.json from https://bj.bcebos.com/paddlenlp/taskflow/knowledge_mining/nptag/model_config.json

100%|██████████| 463/463 [00:00<00:00, 92.5kB/s]

[2022-12-23 11:56:59,206] [ INFO] - Downloading name_category_map.json from https://bj.bcebos.com/paddlenlp/taskflow/knowledge_mining/nptag/name_category_map.json

100%|██████████| 84.2k/84.2k [00:00<00:00, 1.99MB/s]

[2022-12-23 11:56:59,368] [ INFO] - Downloading https://bj.bcebos.com/paddlenlp/models/transformers/ernie_ctm/vocab.txt and saved to /home/aistudio/.paddlenlp/models/nptag

[2022-12-23 11:56:59,370] [ INFO] - Downloading vocab.txt from https://bj.bcebos.com/paddlenlp/models/transformers/ernie_ctm/vocab.txt

100%|██████████| 89.6k/89.6k [00:00<00:00, 2.60MB/s]

[2022-12-23 11:56:59,497] [ INFO] - tokenizer config file saved in /home/aistudio/.paddlenlp/models/nptag/tokenizer_config.json

[2022-12-23 11:56:59,500] [ INFO] - Special tokens file saved in /home/aistudio/.paddlenlp/models/nptag/special_tokens_map.json

[2022-12-23 11:57:01,623] [ INFO] - Converting to the inference model cost a little time.

[2022-12-23 11:57:17,786] [ INFO] - The inference model save in the path:/home/aistudio/.paddlenlp/taskflow/knowledge_mining/nptag/static/inference

[2022-12-23 11:57:19,289] [ INFO] - Already cached /home/aistudio/.paddlenlp/models/nptag/vocab.txt

[2022-12-23 11:57:19,307] [ INFO] - tokenizer config file saved in /home/aistudio/.paddlenlp/models/nptag/tokenizer_config.json

[2022-12-23 11:57:19,310] [ INFO] - Special tokens file saved in /home/aistudio/.paddlenlp/models/nptag/special_tokens_map.json

"\n[{'text': '糖醋排骨', 'label': '菜品', 'category': '饮食类_菜品'}, {'text': '红曲霉菌', 'label': '微生物', 'category': '生物类_微生物'}]\n"

基于lac的文本分词taskflow

from paddlenlp import Taskflow

lac = Taskflow("lexical_analysis")

lac("LAC是个优秀的分词工具")

'''

[{'text': 'LAC是个优秀的分词工具', 'segs': ['LAC', '是', '个', '优秀', '的', '分词', '工具'], 'tags': ['nz', 'v', 'q', 'a', 'u', 'n', 'n']}]

'''

lac(["LAC是个优秀的分词工具", "三亚是一个美丽的城市"])

'''

[{'text': 'LAC是个优秀的分词工具', 'segs': ['LAC', '是', '个', '优秀', '的', '分词', '工具'], 'tags': ['nz', 'v', 'q', 'a', 'u', 'n', 'n']},

{'text': '三亚是一个美丽的城市', 'segs': ['三亚', '是', '一个', '美丽', '的', '城市'], 'tags': ['LOC', 'v', 'm', 'a', 'u', 'n']}

]

'''

[2022-12-23 11:57:21,066] [ INFO] - Downloading model_state.pdparams from https://bj.bcebos.com/paddlenlp/taskflow/lexical_analysis/lac/model_state.pdparams

100%|██████████| 32.3M/32.3M [00:01<00:00, 26.6MB/s]

[2022-12-23 11:57:22,566] [ INFO] - Downloading tag.dic from https://bj.bcebos.com/paddlenlp/taskflow/lexical_analysis/lac/tag.dic

100%|██████████| 425/425 [00:00<00:00, 207kB/s]

[2022-12-23 11:57:22,666] [ INFO] - Downloading q2b.dic from https://bj.bcebos.com/paddlenlp/taskflow/lexical_analysis/lac/q2b.dic

100%|██████████| 44.1k/44.1k [00:00<00:00, 2.24MB/s]

[2022-12-23 11:57:22,776] [ INFO] - Downloading word.dic from https://bj.bcebos.com/paddlenlp/taskflow/lexical_analysis/lac/word.dic

100%|██████████| 745k/745k [00:00<00:00, 7.45MB/s]

[2022-12-23 11:57:23,164] [ INFO] - Converting to the inference model cost a little time.

[2022-12-23 11:57:23,580] [ INFO] - The inference model save in the path:/home/aistudio/.paddlenlp/taskflow/lac/static/inference

"\n[{'text': 'LAC是个优秀的分词工具', 'segs': ['LAC', '是', '个', '优秀', '的', '分词', '工具'], 'tags': ['nz', 'v', 'q', 'a', 'u', 'n', 'n']}, \n {'text': '三亚是一个美丽的城市', 'segs': ['三亚', '是', '一个', '美丽', '的', '城市'], 'tags': ['LOC', 'v', 'm', 'a', 'u', 'n']}\n]\n"

命名实体识别

from paddlenlp import Taskflow

# WordTag精确模式

ner = Taskflow("ner")

ner("《孤女》是2010年九州出版社出版的小说,作者是余兼羽")

'''

[('《', 'w'), ('孤女', '作品类_实体'), ('》', 'w'), ('是', '肯定词'), ('2010年', '时间类'), ('九州出版社', '组织机构类'), ('出版', '场景事件'), ('的', '助词'), ('小说', '作品类_概念'), (',', 'w'), ('作者', '人物类_概念'), ('是', '肯定词'), ('余兼羽', '人物类_实体')]

'''

ner(["热梅茶是一道以梅子为主要原料制作的茶饮", "《孤女》是2010年九州出版社出版的小说,作者是余兼羽"])

'''

[[('热梅茶', '饮食类_饮品'), ('是', '肯定词'), ('一道', '数量词'), ('以', '介词'), ('梅子', '饮食类'), ('为', '肯定词'), ('主要原料', '物体类'), ('制作', '场景事件'), ('的', '助词'), ('茶饮', '饮食类_饮品')], [('《', 'w'), ('孤女', '作品类_实体'), ('》', 'w'), ('是', '肯定词'), ('2010年', '时间类'), ('九州出版社', '组织机构类'), ('出版', '场景事件'), ('的', '助词'), ('小说', '作品类_概念'), (',', 'w'), ('作者', '人物类_概念'), ('是', '肯定词'), ('余兼羽', '人物类_实体')]]

'''

# 只返回实体/概念词

ner = Taskflow("ner", entity_only=True)

ner("《孤女》是2010年九州出版社出版的小说,作者是余兼羽")

'''

[('孤女', '作品类_实体'), ('2010年', '时间类'), ('九州出版社', '组织机构类'), ('出版', '场景事件'), ('小说', '作品类_概念'), ('作者', '人物类_概念'), ('余兼羽', '人物类_实体')]

'''

# 使用快速模式,只返回实体词

ner = Taskflow("ner", mode="fast", entity_only=True)

ner("三亚是一个美丽的城市")

'''

[('三亚', 'LOC')]

'''

[2022-12-23 13:35:58,923] [ INFO] - Already cached /home/aistudio/.paddlenlp/models/wordtag/vocab.txt

[2022-12-23 13:35:58,941] [ INFO] - tokenizer config file saved in /home/aistudio/.paddlenlp/models/wordtag/tokenizer_config.json

[2022-12-23 13:35:58,943] [ INFO] - Special tokens file saved in /home/aistudio/.paddlenlp/models/wordtag/special_tokens_map.json

[2022-12-23 13:36:02,014] [ INFO] - Already cached /home/aistudio/.paddlenlp/models/wordtag/vocab.txt

[2022-12-23 13:36:02,032] [ INFO] - tokenizer config file saved in /home/aistudio/.paddlenlp/models/wordtag/tokenizer_config.json

[2022-12-23 13:36:02,034] [ INFO] - Special tokens file saved in /home/aistudio/.paddlenlp/models/wordtag/special_tokens_map.json

"\n[('三亚', 'LOC')]\n"

paddle nlp taskflow 生成古诗

采用gpt-cpm-large-cn模型进行古诗生成

文本生成比较占用显存。在推理过程中占用了11.9Gb的显存。这个使用的时候一定要注意。

from paddlenlp import Taskflow

poetry = Taskflow("poetry_generation")

poetry("林密不见人")

'''

[{'text': '林密不见人', 'answer': ',但闻人语响。'}]

'''

poetry(["林密不见人", "举头邀明月"])

'''

[{'text': '林密不见人', 'answer': ',但闻人语响。'}, {'text': '举头邀明月', 'answer': ',低头思故乡。'}]

'''

poetry(["夜岚风不止", "岂止万千关"])

[2022-12-23 14:09:18,841] [ INFO] - Already cached /home/aistudio/.paddlenlp/models/gpt-cpm-large-cn/gpt-cpm-cn-sentencepiece.model

[2022-12-23 14:09:18,884] [ INFO] - Adding <bod> to the vocabulary

[2022-12-23 14:09:18,886] [ INFO] - Special tokens have been added in the vocabulary, make sure the associated word embeddings are fine-tuned or trained.

[2022-12-23 14:09:18,890] [ INFO] - tokenizer config file saved in /home/aistudio/.paddlenlp/models/gpt-cpm-large-cn/tokenizer_config.json

[2022-12-23 14:09:18,892] [ INFO] - Special tokens file saved in /home/aistudio/.paddlenlp/models/gpt-cpm-large-cn/special_tokens_map.json

[2022-12-23 14:09:18,894] [ INFO] - added tokens file saved in /home/aistudio/.paddlenlp/models/gpt-cpm-large-cn/added_tokens.json

Building prefix dict from the default dictionary ...

Loading model from cache /tmp/jieba.cache

Loading model cost 0.755 seconds.

Prefix dict has been built successfully.

[{'text': '夜岚风不止', 'answer': ', '}, {'text': '岂止万千关', 'answer': '山,'}]

poetry(["刘郎他乡遇故知", "山外青山楼外楼"])

[{'text': '刘郎他乡遇故知', 'answer': ', '},

{'text': '山外青山楼外楼', 'answer': ',西湖歌舞几时休? '}]

taskflow 问答生成模型

这个问答实现的模型也是和诗歌基于同样的模型 gpt cpm large cn模型

from paddlenlp import Taskflow

qa = Taskflow("question_answering")

qa("中国的国土面积有多大?")

[2022-12-23 17:40:19,423] [ INFO] - Already cached /home/aistudio/.paddlenlp/models/gpt-cpm-large-cn/gpt-cpm-cn-sentencepiece.model

[2022-12-23 17:40:19,470] [ INFO] - Adding <bod> to the vocabulary

[2022-12-23 17:40:19,475] [ INFO] - Special tokens have been added in the vocabulary, make sure the associated word embeddings are fine-tuned or trained.

[2022-12-23 17:40:19,478] [ INFO] - tokenizer config file saved in /home/aistudio/.paddlenlp/models/gpt-cpm-large-cn/tokenizer_config.json

[2022-12-23 17:40:19,480] [ INFO] - Special tokens file saved in /home/aistudio/.paddlenlp/models/gpt-cpm-large-cn/special_tokens_map.json

[2022-12-23 17:40:19,482] [ INFO] - added tokens file saved in /home/aistudio/.paddlenlp/models/gpt-cpm-large-cn/added_tokens.json

Building prefix dict from the default dictionary ...

Loading model from cache /tmp/jieba.cache

Loading model cost 0.769 seconds.

Prefix dict has been built successfully.

[{'text': '中国的国土面积有多大?', 'answer': '960万平方公里。'}]

qa(["中国国土面积有多大?", "中国的首都在哪里?"])

[{'text': '中国国土面积有多大?', 'answer': '960万平方公里。'},

{'text': '中国的首都在哪里?', 'answer': '北京。'}]

文本纠错模型 基于 ernie,这点让我很惊讶,ernie 1.0版本竟然可以自带文本纠错能力。 需要安装pypinyin

pip install pypinyin

Looking in indexes: https://pypi.tuna.tsinghua.edu.cn/simple

Collecting pypinyin

Downloading https://pypi.tuna.tsinghua.edu.cn/packages/6f/b4/44e3270db224eede315b7b1edea982d4d90fbabf18c49a843f3f20d8c730/pypinyin-0.47.1-py2.py3-none-any.whl (1.4 MB)

l ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 0.0/1.4 MB ? eta -:--:--━━━━━━━━━╸━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 0.3/1.4 MB 10.3 MB/s eta 0:00:01━━━━━━━━━━━━━━━━━━━━━━━━━━━━━╺━━━━━━━━━━ 1.0/1.4 MB 15.0 MB/s eta 0:00:01━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━╸ 1.4/1.4 MB 15.1 MB/s eta 0:00:01━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 1.4/1.4 MB 12.7 MB/s eta 0:00:00

[?25hInstalling collected packages: pypinyin

Successfully installed pypinyin-0.47.1

[notice] A new release of pip available: 22.1.2 -> 22.3.1

[notice] To update, run: pip install --upgrade pip

Note: you may need to restart the kernel to use updated packages.

from paddlenlp import Taskflow

text_correction = Taskflow("text_correction")

[2022-12-23 17:50:34,183] [ INFO] - Already cached /home/aistudio/.paddlenlp/models/ernie-1.0/vocab.txt

[2022-12-23 17:50:34,198] [ INFO] - tokenizer config file saved in /home/aistudio/.paddlenlp/models/ernie-1.0/tokenizer_config.json

[2022-12-23 17:50:34,201] [ INFO] - Special tokens file saved in /home/aistudio/.paddlenlp/models/ernie-1.0/special_tokens_map.json

[{'source': '遇到逆竟时,我们必须勇于面对,而且要愈挫愈勇,这样我们才能朝著成功之路前进。',

'target': '遇到逆境时,我们必须勇于面对,而且要愈挫愈勇,这样我们才能朝著成功之路前进。',

'errors': [{'position': 3, 'correction': {'竟': '境'}}]}]

# 推理一条

text_correction('遇到逆竟时,我们必须勇于面对,而且要愈挫愈勇,这样我们才能朝著成功之路前进。')

[{'source': '遇到逆竟时,我们必须勇于面对,而且要愈挫愈勇,这样我们才能朝著成功之路前进。',

'target': '遇到逆境时,我们必须勇于面对,而且要愈挫愈勇,这样我们才能朝著成功之路前进。',

'errors': [{'position': 3, 'correction': {'竟': '境'}}]}]

# 批量推理多条数据

text_correction(['遇到逆竟时,我们必须勇于面对,而且要愈挫愈勇,这样我们才能朝著成功之路前进。',

'人生就是如此,经过磨练才能让自己更加拙壮,才能使自己更加乐观。'])

[{'source': '遇到逆竟时,我们必须勇于面对,而且要愈挫愈勇,这样我们才能朝著成功之路前进。',

'target': '遇到逆境时,我们必须勇于面对,而且要愈挫愈勇,这样我们才能朝著成功之路前进。',

'errors': [{'position': 3, 'correction': {'竟': '境'}}]},

{'source': '人生就是如此,经过磨练才能让自己更加拙壮,才能使自己更加乐观。',

'target': '人生就是如此,经过磨练才能让自己更加茁壮,才能使自己更加乐观。',

'errors': [{'position': 18, 'correction': {'拙': '茁'}}]}]

paddle nlp taskflow 文本相似度模型 基于simbert-base-chinese模型进行

from paddlenlp import Taskflow

similarity = Taskflow("text_similarity", model='simbert-base-chinese')

[2022-12-23 18:46:22,446] [ INFO] - loading configuration file /home/aistudio/.paddlenlp/taskflow/text_similarity/simbert-base-chinese/model_config.json

[2022-12-23 18:46:22,452] [ INFO] - Model config BertConfig {

"architectures": [

"BertModel"

],

"attention_probs_dropout_prob": 0.1,

"fuse": false,

"hidden_act": "gelu",

"hidden_dropout_prob": 0.1,

"hidden_size": 768,

"initializer_range": 0.02,

"intermediate_size": 3072,

"layer_norm_eps": 1e-12,

"max_position_embeddings": 512,

"model_type": "bert",

"num_attention_heads": 12,

"num_hidden_layers": 12,

"pad_token_id": 0,

"paddlenlp_version": null,

"pool_act": "linear",

"type_vocab_size": 2,

"vocab_size": 13685

}

[2022-12-23 18:46:22,455] [ INFO] - Configuration saved in /home/aistudio/.paddlenlp/taskflow/text_similarity/simbert-base-chinese/config.json

W1223 18:46:22.461328 3190 gpu_resources.cc:61] Please NOTE: device: 0, GPU Compute Capability: 7.0, Driver API Version: 11.2, Runtime API Version: 11.2

W1223 18:46:22.466092 3190 gpu_resources.cc:91] device: 0, cuDNN Version: 8.2.

[2022-12-23 18:46:28,173] [ WARNING] - Some weights of the model checkpoint at /home/aistudio/.paddlenlp/taskflow/text_similarity/simbert-base-chinese were not used when initializing BertModel: ['cls.predictions.transform.weight', 'cls.predictions.transform.LayerNorm.bias', 'bert.embeddings.position_ids', 'cls.seq_relationship.bias', 'cls.predictions.transform.LayerNorm.weight', 'cls.predictions.decoder_bias', 'cls.predictions.decoder_weight', 'cls.predictions.decoder.bias', 'cls.seq_relationship.weight', 'cls.predictions.transform.bias']

- This IS expected if you are initializing BertModel from the checkpoint of a model trained on another task or with another architecture (e.g. initializing a BertForSequenceClassification model from a BertForPreTraining model).

- This IS NOT expected if you are initializing BertModel from the checkpoint of a model that you expect to be exactly identical (initializing a BertForSequenceClassification model from a BertForSequenceClassification model).

[2022-12-23 18:46:28,176] [ INFO] - All the weights of BertModel were initialized from the model checkpoint at /home/aistudio/.paddlenlp/taskflow/text_similarity/simbert-base-chinese.

If your task is similar to the task the model of the checkpoint was trained on, you can already use BertModel for predictions without further training.

[2022-12-23 18:46:28,180] [ INFO] - Converting to the inference model cost a little time.

[2022-12-23 18:46:38,656] [ INFO] - The inference model save in the path:/home/aistudio/.paddlenlp/taskflow/text_similarity/simbert-base-chinese/static/inference

[2022-12-23 18:46:42,772] [ INFO] - Downloading https://bj.bcebos.com/paddlenlp/models/transformers/simbert/vocab.txt and saved to /home/aistudio/.paddlenlp/models/simbert-base-chinese

[2022-12-23 18:46:42,846] [ INFO] - Downloading vocab.txt from https://bj.bcebos.com/paddlenlp/models/transformers/simbert/vocab.txt

100%|██████████| 63.4k/63.4k [00:00<00:00, 5.19MB/s]

[2022-12-23 18:46:42,968] [ INFO] - tokenizer config file saved in /home/aistudio/.paddlenlp/models/simbert-base-chinese/tokenizer_config.json

[2022-12-23 18:46:42,970] [ INFO] - Special tokens file saved in /home/aistudio/.paddlenlp/models/simbert-base-chinese/special_tokens_map.json

# 推理一条数据

similarity([["世界上什么东西最小", "世界上什么东西最小?"]])

[{'text1': '世界上什么东西最小', 'text2': '世界上什么东西最小?', 'similarity': 0.992725}]

# 推理多条数据

similarity([["光眼睛大就好看吗", "眼睛好看吗?"], ["小蝌蚪找妈妈怎么样", "小蝌蚪找妈妈是谁画的"]])

[{'text1': '光眼睛大就好看吗', 'text2': '眼睛好看吗?', 'similarity': 0.7450272},

{'text1': '小蝌蚪找妈妈怎么样', 'text2': '小蝌蚪找妈妈是谁画的', 'similarity': 0.8192149}]

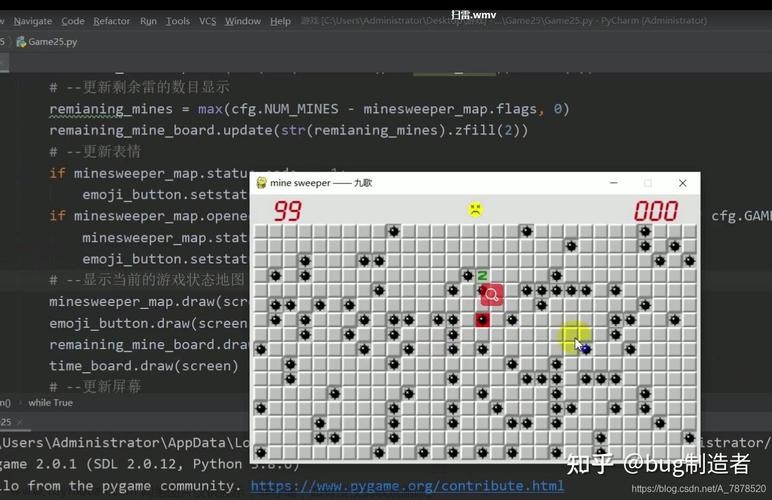

task flow 中最重磅的应用 AIGC 基于pai-painter-painting-base-zh进行文本生成图片

from paddlenlp import Taskflow

text_to_image = Taskflow("text_to_image")

images = text_to_image("风阁水帘今在眼,且来先看早梅红")

[2022-12-23 18:49:27,321] [ INFO] - We are using <class 'paddlenlp.transformers.artist.tokenizer.ArtistTokenizer'> to load 'pai-painter-painting-base-zh'.

[2022-12-23 18:49:27,324] [ INFO] - Already cached /home/aistudio/.paddlenlp/models/pai-painter-painting-base-zh/vocab.txt

[2022-12-23 18:49:27,340] [ INFO] - tokenizer config file saved in /home/aistudio/.paddlenlp/models/pai-painter-painting-base-zh/tokenizer_config.json

[2022-12-23 18:49:27,343] [ INFO] - Special tokens file saved in /home/aistudio/.paddlenlp/models/pai-painter-painting-base-zh/special_tokens_map.json

[2022-12-23 18:49:27,346] [ INFO] - We are using <class 'paddlenlp.transformers.artist.modeling.ArtistForImageGeneration'> to load 'pai-painter-painting-base-zh'.

[2022-12-23 18:49:27,348] [ INFO] - Already cached /home/aistudio/.paddlenlp/models/pai-painter-painting-base-zh/model_state.pdparams

images = text_to_image("刘孟水帘今在眼,且来先看早梅红")

# images[0].save("figure.png")

for image in images:

for image_one in image:

image_one.show()

文章来源:https://www.toymoban.com/news/detail-483633.html

文章来源:https://www.toymoban.com/news/detail-483633.html

不知道是不是我的版本问题,今天好几个应用的结果看起来并不是很对。后来在paddlenlp的工作人员的帮助下解决了很多的问题。在此感谢paddlenlp的工作人员对于问题的解答。文章来源地址https://www.toymoban.com/news/detail-483633.html

到了这里,关于paddle nlp taskflow 全家桶 包括代码生成与AIGC图片生成 一起探索paddle nlp 开包即用的能力吧的文章就介绍完了。如果您还想了解更多内容,请在右上角搜索TOY模板网以前的文章或继续浏览下面的相关文章,希望大家以后多多支持TOY模板网!