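Complete walkthrough with all the pitfalls, plus a condensed version

k8s containerd configuration

containerd installation reference

k8s installation reference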

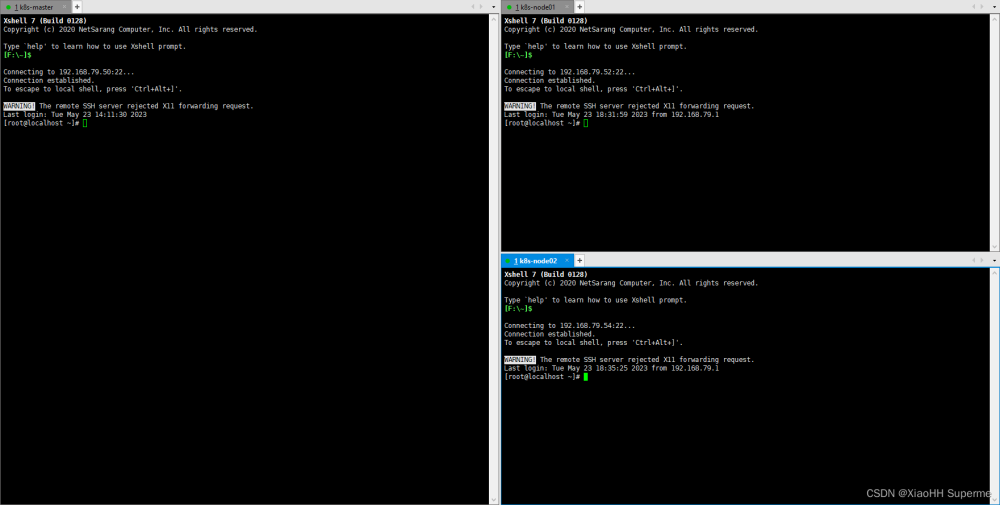

Environment

Two machines. Set each hostname on its own host, then write the same /etc/hosts on both:

hostnamectl set-hostname master   # on 10.1.1.10

hostnamectl set-hostname node1    # on 10.1.1.11

cat > /etc/hosts << EOF

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

10.1.1.10 master

10.1.1.11 node1

EOF
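The bash sketch below is a quick way to confirm the hosts file on each machine. `check_hosts` is a hypothetical helper, not a system tool. It prints every name that has no entry in a hosts(5)-format file and returns non-zero if any name is missing.

```bash
#!/usr/bin/env bash
# check_hosts FILE NAME...
# Prints "missing: NAME" for every NAME not mapped in FILE (hosts(5) format)
# and returns 1 if anything is missing. Hypothetical helper for this guide.
check_hosts() {
  local file=$1; shift
  local name rc=0
  for name in "$@"; do
    # Skip comment lines; fields 2..NF are the host names and aliases.
    if ! awk -v n="$name" '
        $1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
        END { exit !found }' "$file"; then
      echo "missing: $name"
      rc=1
    fi
  done
  return "$rc"
}

# Usage on each node: check_hosts /etc/hosts master node1
```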

OS

CentOS 7.9

Required settings (on all nodes)

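- 1. Disable swap

swapoff -a

# Comment out swap entries in /etc/fstab so swap stays off after a reboot
sed -ri 's/.*swap.*/#&/' /etc/fstab

# Append (>>) so the existing /etc/sysctl.conf is not overwritten
cat >> /etc/sysctl.conf << EOF
vm.swappiness=0
EOF

# Apply
sysctl -p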

free -m
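The helper below confirms that swap really is off. In its default configuration the kubelet will not start while swap is enabled. The check reads /proc/swaps: the first line is a header and every further line is an active swap device. `swap_active` is a hypothetical name. The file path is a parameter only so the function can be tested.

```bash
#!/usr/bin/env bash
# swap_active [SWAPS_FILE]
# Succeeds (exit 0) if any swap device is still listed in SWAPS_FILE
# (default /proc/swaps). Line 1 is the header, so only later lines count.
swap_active() {
  local file=${1:-/proc/swaps}
  awk 'NR > 1 && NF > 0 { n++ } END { exit !n }' "$file"
}

# Usage: if swap_active; then echo "swap is still on"; fi
```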

- 2. Bridge settings

cat <<EOF | tee /etc/modules-load.d/k8s.conf

overlay

br_netfilter

EOF

cat <<EOF | tee /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-iptables = 1

EOF

# Load the modules first: the net.bridge.* keys only exist once br_netfilter is loaded
modprobe br_netfilter

modprobe overlay

sysctl --system

lsmod | grep -e br_netfilter -e overlay

# overlay 91659 0

# br_netfilter 22256 0

# bridge 151336 1 br_netfilter
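The sketch below verifies the three kernel parameters after `sysctl --system`. It relies on the fact that each sysctl key maps to a file under /proc/sys, with the dots replaced by slashes. `check_sysctl` is a hypothetical helper. The optional PROC_ROOT argument exists only for testing.

```bash
#!/usr/bin/env bash
# check_sysctl KEY EXPECTED [PROC_ROOT]
# e.g. net.ipv4.ip_forward -> /proc/sys/net/ipv4/ip_forward
# Prints a message and returns 1 if the key is absent or has another value.
check_sysctl() {
  local key=$1 expected=$2 root=${3:-/proc}
  local path actual
  path="$root/sys/$(printf '%s' "$key" | tr . /)"
  if [ ! -r "$path" ]; then
    echo "$key: not found (is the kernel module loaded?)"
    return 1
  fi
  actual=$(<"$path")
  if [ "$actual" != "$expected" ]; then
    echo "$key = $actual (want $expected)"
    return 1
  fi
}

# Usage:
# for k in net.bridge.bridge-nf-call-iptables net.bridge.bridge-nf-call-ip6tables net.ipv4.ip_forward; do
#   check_sysctl "$k" 1
# done
```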

- 3. IPVS settings

yum install ipset ipvsadm -y

cat > /etc/sysconfig/modules/ipvs.modules <<EOF

#!/bin/bash

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

modprobe -- nf_conntrack_ipv4

EOF

chmod +x /etc/sysconfig/modules/ipvs.modules

/bin/bash /etc/sysconfig/modules/ipvs.modules

lsmod | grep -e ip_vs -e nf_conntrack_ipv4

# nf_conntrack_ipv4 15053 0

# nf_defrag_ipv4 12729 1 nf_conntrack_ipv4

# ip_vs_sh 12688 0

# ip_vs_wrr 12697 0

# ip_vs_rr 12600 0

# ip_vs 145458 6 ip_vs_rr,ip_vs_sh,ip_vs_wrr

# nf_conntrack 139264 2 ip_vs,nf_conntrack_ipv4

# libcrc32c 12644 3 xfs,ip_vs,nf_conntrack
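The `lsmod | grep` check above can be turned into a pass/fail test. In this sketch, `missing_modules` (hypothetical) reads `lsmod` output on stdin. It compares the first column against the module names from ipvs.modules and prints the ones that are not loaded.

```bash
#!/usr/bin/env bash
# missing_modules MODULE... < lsmod-output
# Prints each requested module that is not loaded; returns 1 if any is missing.
missing_modules() {
  local loaded m rc=0
  # Column 1 of lsmod is the module name; line 1 is the "Module Size Used by" header.
  loaded=$(awk 'NR > 1 { print $1 }')
  for m in "$@"; do
    # -x: whole-line match, so ip_vs does not match ip_vs_rr
    if ! grep -qx -- "$m" <<<"$loaded"; then
      echo "$m"
      rc=1
    fi
  done
  return "$rc"
}

# Usage: lsmod | missing_modules ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack_ipv4
```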

- 4. Disable the firewall (flush the iptables rules)

iptables -F

iptables -X

- 5. Disable SELinux (setenforce 0 only lasts until reboot; the sed makes the change permanent)

getenforce

setenforce 0

sed -i 's/^SELINUX=enforcing$/SELINUX=disabled/g' /etc/selinux/config
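The sed command above only matches SELINUX=enforcing. A host that was already set to permissive therefore keeps SELINUX=permissive in its config. The variant below handles both values. It assumes GNU sed, and `disable_selinux_config` is a hypothetical name.

```bash
#!/usr/bin/env bash
# disable_selinux_config [CONFIG]
# Rewrites SELINUX=enforcing or SELINUX=permissive to SELINUX=disabled in
# CONFIG (default /etc/selinux/config) and prints the resulting line.
disable_selinux_config() {
  local cfg=${1:-/etc/selinux/config}
  sed -ri 's/^SELINUX=(enforcing|permissive)$/SELINUX=disabled/' "$cfg"
  # SELINUXTYPE= does not match because "=" must follow "SELINUX" directly
  grep '^SELINUX=' "$cfg"
}

# Usage (as root): disable_selinux_config
```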

- 6. Add the package repositories (Aliyun mirrors)

yum install -y wget && wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo -O /etc/yum.repos.d/docker-ce.repo

cat > /etc/yum.repos.d/kubernetes.repo << EOF

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

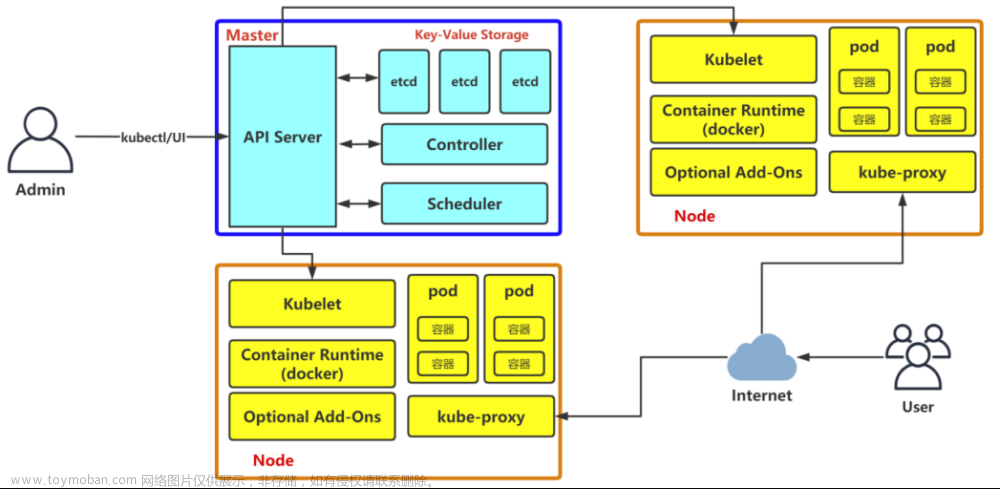

Cluster setup

Run on all nodes

- 1. Install the latest kubectl, kubelet and kubeadm

yum install kubectl kubelet kubeadm -y

systemctl start kubelet.service

systemctl enable kubelet.service

- 2. Install containerd

Install

yum install containerd -y

systemctl enable containerd.service

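Configure: generate the default config, then overwrite it with the edited version below (the changes are marked with comments)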

containerd config default | tee /etc/containerd/config.toml

cat > /etc/containerd/config.toml << EOF

disabled_plugins = []

imports = []

oom_score = 0

plugin_dir = ""

required_plugins = []

root = "/var/lib/containerd"

state = "/run/containerd"

temp = ""

version = 2

[cgroup]

path = ""

[debug]

address = ""

format = ""

gid = 0

level = ""

uid = 0

[grpc]

address = "/run/containerd/containerd.sock"

gid = 0

max_recv_message_size = 16777216

max_send_message_size = 16777216

tcp_address = ""

tcp_tls_ca = ""

tcp_tls_cert = ""

tcp_tls_key = ""

uid = 0

[metrics]

address = ""

grpc_histogram = false

[plugins]

[plugins."io.containerd.gc.v1.scheduler"]

deletion_threshold = 0

mutation_threshold = 100

pause_threshold = 0.02

schedule_delay = "0s"

startup_delay = "100ms"

[plugins."io.containerd.grpc.v1.cri"]

device_ownership_from_security_context = false

disable_apparmor = false

disable_cgroup = false

disable_hugetlb_controller = true

disable_proc_mount = false

disable_tcp_service = true

enable_selinux = false

enable_tls_streaming = false

enable_unprivileged_icmp = false

enable_unprivileged_ports = false

ignore_image_defined_volumes = false

max_concurrent_downloads = 3

max_container_log_line_size = 16384

netns_mounts_under_state_dir = false

restrict_oom_score_adj = false

# Replace the default sandbox_image with the line below; note the pause version

sandbox_image = "registry.aliyuncs.com/google_containers/pause:3.8"

selinux_category_range = 1024

stats_collect_period = 10

stream_idle_timeout = "4h0m0s"

stream_server_address = "127.0.0.1"

stream_server_port = "0"

systemd_cgroup = false

tolerate_missing_hugetlb_controller = true

unset_seccomp_profile = ""

[plugins."io.containerd.grpc.v1.cri".cni]

bin_dir = "/opt/cni/bin"

conf_dir = "/etc/cni/net.d"

conf_template = ""

ip_pref = ""

max_conf_num = 1

[plugins."io.containerd.grpc.v1.cri".containerd]

default_runtime_name = "runc"

disable_snapshot_annotations = true

discard_unpacked_layers = false

ignore_rdt_not_enabled_errors = false

no_pivot = false

snapshotter = "overlayfs"

[plugins."io.containerd.grpc.v1.cri".containerd.default_runtime]

base_runtime_spec = ""

cni_conf_dir = ""

cni_max_conf_num = 0

container_annotations = []

pod_annotations = []

privileged_without_host_devices = false

runtime_engine = ""

runtime_path = ""

runtime_root = ""

runtime_type = ""

[plugins."io.containerd.grpc.v1.cri".containerd.default_runtime.options]

[plugins."io.containerd.grpc.v1.cri".containerd.runtimes]

[plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc]

base_runtime_spec = ""

cni_conf_dir = ""

cni_max_conf_num = 0

container_annotations = []

pod_annotations = []

privileged_without_host_devices = false

runtime_engine = ""

runtime_path = ""

runtime_root = ""

runtime_type = "io.containerd.runc.v2"

[plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc.options]

BinaryName = ""

CriuImagePath = ""

CriuPath = ""

CriuWorkPath = ""

IoGid = 0

IoUid = 0

NoNewKeyring = false

NoPivotRoot = false

Root = ""

ShimCgroup = ""

# Change the line below to true

SystemdCgroup = true

[plugins."io.containerd.grpc.v1.cri".containerd.untrusted_workload_runtime]

base_runtime_spec = ""

cni_conf_dir = ""

cni_max_conf_num = 0

container_annotations = []

pod_annotations = []

privileged_without_host_devices = false

runtime_engine = ""

runtime_path = ""

runtime_root = ""

runtime_type = ""

[plugins."io.containerd.grpc.v1.cri".containerd.untrusted_workload_runtime.options]

[plugins."io.containerd.grpc.v1.cri".image_decryption]

key_model = "node"

[plugins."io.containerd.grpc.v1.cri".registry]

config_path = ""

[plugins."io.containerd.grpc.v1.cri".registry.auths]

[plugins."io.containerd.grpc.v1.cri".registry.configs]

[plugins."io.containerd.grpc.v1.cri".registry.headers]

[plugins."io.containerd.grpc.v1.cri".registry.mirrors]

[plugins."io.containerd.grpc.v1.cri".registry.mirrors."docker.io"]

#endpoint = ["https://registry-1.docker.io"]

# Comment out the line above and add the three lines below

endpoint = ["https://docker.mirrors.ustc.edu.cn"]

[plugins."io.containerd.grpc.v1.cri".registry.mirrors."k8s.gcr.io"]

endpoint = ["https://registry.aliyuncs.com/google_containers"]

[plugins."io.containerd.grpc.v1.cri".x509_key_pair_streaming]

tls_cert_file = ""

tls_key_file = ""

[plugins."io.containerd.internal.v1.opt"]

path = "/opt/containerd"

[plugins."io.containerd.internal.v1.restart"]

interval = "10s"

[plugins."io.containerd.internal.v1.tracing"]

sampling_ratio = 1.0

service_name = "containerd"

[plugins."io.containerd.metadata.v1.bolt"]

content_sharing_policy = "shared"

[plugins."io.containerd.monitor.v1.cgroups"]

no_prometheus = false

[plugins."io.containerd.runtime.v1.linux"]

no_shim = false

runtime = "runc"

runtime_root = ""

shim = "containerd-shim"

shim_debug = false

[plugins."io.containerd.runtime.v2.task"]

platforms = ["linux/amd64"]

sched_core = false

[plugins."io.containerd.service.v1.diff-service"]

default = ["walking"]

[plugins."io.containerd.service.v1.tasks-service"]

rdt_config_file = ""

[plugins."io.containerd.snapshotter.v1.aufs"]

root_path = ""

[plugins."io.containerd.snapshotter.v1.btrfs"]

root_path = ""

[plugins."io.containerd.snapshotter.v1.devmapper"]

async_remove = false

base_image_size = ""

discard_blocks = false

fs_options = ""

fs_type = ""

pool_name = ""

root_path = ""

[plugins."io.containerd.snapshotter.v1.native"]

root_path = ""

[plugins."io.containerd.snapshotter.v1.overlayfs"]

root_path = ""

upperdir_label = false

[plugins."io.containerd.snapshotter.v1.zfs"]

root_path = ""

[plugins."io.containerd.tracing.processor.v1.otlp"]

endpoint = ""

insecure = false

protocol = ""

[proxy_plugins]

[stream_processors]

[stream_processors."io.containerd.ocicrypt.decoder.v1.tar"]

accepts = ["application/vnd.oci.image.layer.v1.tar+encrypted"]

args = ["--decryption-keys-path", "/etc/containerd/ocicrypt/keys"]

env = ["OCICRYPT_KEYPROVIDER_CONFIG=/etc/containerd/ocicrypt/ocicrypt_keyprovider.conf"]

path = "ctd-decoder"

returns = "application/vnd.oci.image.layer.v1.tar"

[stream_processors."io.containerd.ocicrypt.decoder.v1.tar.gzip"]

accepts = ["application/vnd.oci.image.layer.v1.tar+gzip+encrypted"]

args = ["--decryption-keys-path", "/etc/containerd/ocicrypt/keys"]

env = ["OCICRYPT_KEYPROVIDER_CONFIG=/etc/containerd/ocicrypt/ocicrypt_keyprovider.conf"]

path = "ctd-decoder"

returns = "application/vnd.oci.image.layer.v1.tar+gzip"

[timeouts]

"io.containerd.timeout.bolt.open" = "0s"

"io.containerd.timeout.shim.cleanup" = "5s"

"io.containerd.timeout.shim.load" = "5s"

"io.containerd.timeout.shim.shutdown" = "3s"

"io.containerd.timeout.task.state" = "2s"

[ttrpc]

address = ""

gid = 0

uid = 0

EOF

Restart

systemctl daemon-reload

systemctl restart containerd
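You do not have to paste the whole file. The two runtime edits marked in it, sandbox_image and SystemdCgroup, can be applied to the output of `containerd config default` with sed. This sketch assumes GNU sed and the key names shown in the config above. The registry mirror sections still have to be added by hand. `patch_containerd_config` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# patch_containerd_config FILE [SANDBOX_IMAGE]
# Applies two of the edits marked in the config above, keeping indentation:
#   - SystemdCgroup = true for the runc runtime
#   - sandbox_image pointing at the Aliyun mirror (pause:3.8 by default)
patch_containerd_config() {
  local file=$1
  local image=${2:-registry.aliyuncs.com/google_containers/pause:3.8}
  sed -i \
    -e 's/^\([[:space:]]*\)SystemdCgroup = false/\1SystemdCgroup = true/' \
    -e "s|^\\([[:space:]]*\\)sandbox_image = .*|\\1sandbox_image = \"${image}\"|" \
    "$file"
}

# Usage (as root):
# containerd config default > /etc/containerd/config.toml
# patch_containerd_config /etc/containerd/config.toml
# systemctl restart containerd
```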

- 3. Install crictl

wget https://github.com/kubernetes-sigs/cri-tools/releases/download/v1.24.2/crictl-v1.24.2-linux-amd64.tar.gz

tar -zxf crictl-v1.24.2-linux-amd64.tar.gz

mv crictl /usr/local/bin/

Write the crictl config file

cat > /etc/crictl.yaml <<EOF

runtime-endpoint: unix:///var/run/containerd/containerd.sock

image-endpoint: unix:///var/run/containerd/containerd.sock

timeout: 10

debug: false

pull-image-on-create: false

EOF

Pull the images

# List the images kubeadm needs (reads kubeadm.yml, which is generated in step 4)
kubeadm config images list --config=kubeadm.yml

On master:

tee ./images.sh <<'EOF'

#!/bin/bash

images=(

kube-apiserver:v1.25.0

kube-controller-manager:v1.25.0

kube-scheduler:v1.25.0

kube-proxy:v1.25.0

pause:3.8

etcd:3.5.4-0

coredns:v1.9.3

)

for imageName in "${images[@]}"; do
  crictl pull "registry.aliyuncs.com/google_containers/${imageName}"
done

EOF

chmod +x ./images.sh && ./images.sh

On node1:

tee ./images.sh <<'EOF'

#!/bin/bash

images=(

kube-proxy:v1.25.0

pause:3.8

)

for imageName in "${images[@]}"; do
  crictl pull "registry.aliyuncs.com/google_containers/${imageName}"
done

EOF

chmod +x ./images.sh && ./images.sh
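The two images.sh scripts differ only in their image lists, so they can be merged into one function keyed by node role. In this sketch `pull_images` is hypothetical and the tags are the v1.25.0 ones listed above. The `--dry-run` flag prints the crictl commands instead of running them.

```bash
#!/usr/bin/env bash
# pull_images ROLE [--dry-run]
# ROLE is "master" (all control-plane images) or "node" (kube-proxy + pause).
REGISTRY=registry.aliyuncs.com/google_containers

pull_images() {
  local role=$1 dry=$2 img
  local -a images
  case "$role" in
    master) images=(kube-apiserver:v1.25.0 kube-controller-manager:v1.25.0
                    kube-scheduler:v1.25.0 kube-proxy:v1.25.0 pause:3.8
                    etcd:3.5.4-0 coredns:v1.9.3) ;;
    node)   images=(kube-proxy:v1.25.0 pause:3.8) ;;
    *)      echo "unknown role: $role" >&2; return 2 ;;
  esac
  for img in "${images[@]}"; do
    if [ "$dry" = "--dry-run" ]; then
      echo "crictl pull $REGISTRY/$img"
    else
      crictl pull "$REGISTRY/$img" || return 1
    fi
  done
}

# Usage: pull_images master   (on master)
#        pull_images node     (on node1)
```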

Run the remaining steps on master unless noted otherwise.

- 4. Generate the default config file with kubeadm

kubeadm config print init-defaults > kubeadm.yml

Edit the config file:

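1) criSocket: point it at containerd.sock so that containerd is the runtime (older kubeadm releases defaulted to Docker)

2) imageRepository: change it to the Aliyun mirror

3) podSubnet and serviceSubnet: set them to suit your environment (podSubnet is not in the default output and has to be added)

4) cgroupDriver: systemd (optional; kubeadm has defaulted the kubelet to systemd since v1.22). This key belongs in a separate KubeletConfiguration document, such as the one printed by kubeadm config print init-defaults --component-configs KubeletConfiguration. Putting it into ClusterConfiguration triggers the unknown field "cgroupdriver" warning seen in the kubeadm init output below.

5) advertiseAddress: set it to the master's IP (10.1.1.10) or 0.0.0.0, and set the nodeRegistration name to master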

The edited config file:

apiVersion: kubeadm.k8s.io/v1beta3
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 0.0.0.0
  bindPort: 6443
nodeRegistration:
  criSocket: unix:///var/run/containerd/containerd.sock
  imagePullPolicy: IfNotPresent
  name: master
  taints: null
---
apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta3
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controllerManager: {}
dns: {}
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: registry.aliyuncs.com/google_containers
kind: ClusterConfiguration
kubernetesVersion: 1.25.0
networking:
  dnsDomain: cluster.local
  podSubnet: 10.10.0.0/16
  serviceSubnet: 10.96.0.0/12

scheduler: {}
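The edits that turn the `init-defaults` output into the file above can also be scripted. This sketch assumes GNU sed. It also assumes the generated file contains `advertiseAddress:`, `name: node`, `imageRepository:` and `serviceSubnet:` lines, so check your own output first. The function also appends a separate KubeletConfiguration document, which is where `cgroupDriver` belongs. `customize_kubeadm_config` is a hypothetical name.

```bash
#!/usr/bin/env bash
# customize_kubeadm_config FILE ADVERTISE_ADDR NODE_NAME POD_SUBNET
# Edits the output of `kubeadm config print init-defaults` in place and
# appends a KubeletConfiguration document with cgroupDriver: systemd.
customize_kubeadm_config() {
  local file=$1 addr=$2 name=$3 pod_subnet=$4
  sed -i \
    -e "s|^\\([[:space:]]*\\)advertiseAddress: .*|\\1advertiseAddress: ${addr}|" \
    -e "s|^\\([[:space:]]*\\)name: node\$|\\1name: ${name}|" \
    -e "s|^imageRepository: .*|imageRepository: registry.aliyuncs.com/google_containers|" \
    -e "s|^\\([[:space:]]*\\)serviceSubnet: .*|&\\n\\1podSubnet: ${pod_subnet}|" \
    "$file"
  # Separate YAML document for kubelet settings
  printf '%s\n' '---' 'apiVersion: kubelet.config.k8s.io/v1beta1' \
    'kind: KubeletConfiguration' 'cgroupDriver: systemd' >> "$file"
}

# Usage on master:
# kubeadm config print init-defaults > kubeadm.yml
# customize_kubeadm_config kubeadm.yml 10.1.1.10 master 10.10.0.0/16
```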

- 5. Initialize the control plane on master

# Reset (cleans up any previous attempt)

kubeadm reset -f

# Initialize

# kubeadm init --config=kubeadm.yml --upload-certs --ignore-preflight-errors=ImagePull

W0823 07:37:26.188671 6862 initconfiguration.go:306] error unmarshaling configuration schema.GroupVersionKind{Group:"kubeadm.k8s.io", Version:"v1beta3", Kind:"ClusterConfiguration"}: strict decoding error: unknown field "cgroupdriver"

[init] Using Kubernetes version: v1.24.0

[preflight] Running pre-flight checks

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local master] and IPs [10.96.0.1 10.1.1.10]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [localhost master] and IPs [10.1.1.10 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [localhost master] and IPs [10.1.1.10 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Starting the kubelet

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[apiclient] All control plane components are healthy after 19.504370 seconds

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config" in namespace kube-system with the configuration for the kubelets in the cluster

[upload-certs] Storing the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace

[upload-certs] Using certificate key:

ae70a9e894995efc394ec730e11bc660f54d717c670e3cc0b5c87a6bae5705c3

[mark-control-plane] Marking the node master as control-plane by adding the labels: [node-role.kubernetes.io/control-plane node.kubernetes.io/exclude-from-external-load-balancers]

[mark-control-plane] Marking the node master as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule node-role.kubernetes.io/control-plane:NoSchedule]

[bootstrap-token] Using token: abcdef.0123456789abcdef

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to get nodes

[bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] Configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] Configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 10.1.1.10:6443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:3effc72f50d0cefff23de6b4bece8962b59bc8e57b2ab641cb0bfd36460f1c4f

- 6. Set up .kube/config (master only)

mkdir -p $HOME/.kube

cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

chown $(id -u):$(id -g) $HOME/.kube/config

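- 7. Install the Calico network plugin

kubectl apply -f https://docs.projectcalico.org/manifests/calico.yaml

Check the status on master. The Calico pods take a few minutes to start, and the nodes stay NotReady until they do: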

[root@master ~]# kubectl get pods -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system calico-kube-controllers-5b97f5d8cf-qrg87 0/1 Pending 0 2m36s

kube-system calico-node-4j8s9 0/1 Init:0/3 0 2m36s

kube-system calico-node-7vnhb 0/1 Init:0/3 0 2m36s

kube-system coredns-74586cf9b6-glsgz 0/1 Pending 0 14h

kube-system coredns-74586cf9b6-hgtf7 0/1 Pending 0 14h

kube-system etcd-master 1/1 Running 1 (54m ago) 14h

kube-system kube-apiserver-master 1/1 Running 1 (54m ago) 14h

kube-system kube-controller-manager-master 1/1 Running 1 (54m ago) 14h

kube-system kube-proxy-mq7b9 1/1 Running 1 (53m ago) 14h

kube-system kube-proxy-wwr6d 1/1 Running 1 (54m ago) 14h

kube-system kube-scheduler-master 1/1 Running 1 (54m ago) 14h

[root@master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master NotReady control-plane 14h v1.24.4

- 8. Join node1 to the cluster (run the join command printed by kubeadm init on node1)

# kubeadm join 10.1.1.10:6443 --token abcdef.0123456789abcdef \

# > --discovery-token-ca-cert-hash sha256:3effc72f50d0cefff23de6b4bece8962b59bc8e57b2ab641cb0bfd36460f1c4f

[preflight] Running pre-flight checks

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

After the join, check the status on master:

kubectl get nodes

- 9. The join token expires after 24 hours; master can generate a new join command at any time

[root@master ~]# kubeadm token create --print-join-command

kubeadm join 10.1.1.10:6443 --token 52nf1s.puunevi6fxb9xit6 --discovery-token-ca-cert-hash sha256:3effc72f50d0cefff23de6b4bece8962b59bc8e57b2ab641cb0bfd36460f1c4f
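If the hash part of the join command is lost, you can recompute it from the cluster CA on master. The openssl pipeline below is the one given in the kubeadm join documentation, and it assumes the default RSA CA key. `ca_cert_hash` is only a hypothetical wrapper around that pipeline.

```bash
#!/usr/bin/env bash
# ca_cert_hash [CA_CERT]
# Prints "sha256:<hex>" for the public key of CA_CERT
# (default /etc/kubernetes/pki/ca.crt), the format kubeadm join expects.
ca_cert_hash() {
  local crt=${1:-/etc/kubernetes/pki/ca.crt}
  local hex
  # pipefail inside the subshell so a failing openssl step is reported
  hex=$(set -o pipefail
        openssl x509 -pubkey -in "$crt" \
          | openssl rsa -pubin -outform der 2>/dev/null \
          | openssl dgst -sha256 -hex | sed 's/^.* //') || return 1
  echo "sha256:${hex}"
}

# Usage on master:
# echo "kubeadm join 10.1.1.10:6443 --token <token> --discovery-token-ca-cert-hash $(ca_cert_hash)"
```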

- 10. Allow kubectl on node1 too: copy the kubeconfig from master, then run kubectl get nodes on node1 to test

scp -r $HOME/.kube node1:$HOME

kubectl get nodes

- 11. Deploy the Dashboard from master

Check that the Dashboard release is compatible with your Kubernetes version (v2.5.1 is used here)

[root@master ~]# kubectl apply -f https://raw.githubusercontent.com/kubernetes/dashboard/v2.5.1/aio/deploy/recommended.yaml

namespace/kubernetes-dashboard created

serviceaccount/kubernetes-dashboard created

service/kubernetes-dashboard created

secret/kubernetes-dashboard-certs created

secret/kubernetes-dashboard-csrf created

secret/kubernetes-dashboard-key-holder created

configmap/kubernetes-dashboard-settings created

role.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrole.rbac.authorization.k8s.io/kubernetes-dashboard created

rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

deployment.apps/kubernetes-dashboard created

service/dashboard-metrics-scraper created

deployment.apps/dashboard-metrics-scraper created

[root@master ~]# kubectl get pods -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system calico-kube-controllers-5b97f5d8cf-qrg87 1/1 Running 0 21m

kube-system calico-node-4j8s9 1/1 Running 0 21m

kube-system calico-node-7vnhb 1/1 Running 0 21m

kube-system coredns-74586cf9b6-glsgz 1/1 Running 0 15h

kube-system coredns-74586cf9b6-hgtf7 1/1 Running 0 15h

kube-system etcd-master 1/1 Running 1 (73m ago) 15h

kube-system kube-apiserver-master 1/1 Running 1 (73m ago) 15h

kube-system kube-controller-manager-master 1/1 Running 1 (73m ago) 15h

kube-system kube-proxy-mq7b9 1/1 Running 1 (72m ago) 15h

kube-system kube-proxy-wwr6d 1/1 Running 1 (73m ago) 15h

kube-system kube-scheduler-master 1/1 Running 1 (73m ago) 15h

kubernetes-dashboard dashboard-metrics-scraper-7bfdf779ff-pbnzr 1/1 Running 0 14s

kubernetes-dashboard kubernetes-dashboard-6465b7f54c-wtmf2 0/1 ContainerCreating 0 14s

Expose the Dashboard through a NodePort

Change type: ClusterIP to type: NodePort:

[root@master ~]# kubectl edit svc kubernetes-dashboard -n kubernetes-dashboard

# Please edit the object below. Lines beginning with a '#' will be ignored,
# and an empty file will abort the edit. If an error occurs while saving this file will be
# reopened with the relevant failures.
#
apiVersion: v1
kind: Service
metadata:
  annotations:
    kubectl.kubernetes.io/last-applied-configuration: |
      {"apiVersion":"v1","kind":"Service","metadata":{"annotations":{},"labels":{"k8s-app":"kubernetes-dashboard"},"name":"kubernetes-dashboard","namespace":"kubernetes-dashboard"},"spec":{"ports":[{"port":443,"targetPort":8443}],"selector":{"k8s-app":"kubernetes-dashboard"}}}
  creationTimestamp: "2022-08-24T02:50:05Z"
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
  resourceVersion: "6865"
  uid: ecf9f542-d1b3-4aa2-93db-89068fd71814
spec:
  clusterIP: 10.100.215.190
  clusterIPs:
  - 10.100.215.190
  internalTrafficPolicy: Cluster
  ipFamilies:
  - IPv4
  ipFamilyPolicy: SingleStack
  ports:
  - port: 443
    protocol: TCP
    targetPort: 8443
  selector:
    k8s-app: kubernetes-dashboard
  sessionAffinity: None
  type: NodePort
status:
  loadBalancer: {}

Check the assigned port:

[root@master ~]# kubectl get svc -A | grep kubernetes-dashboard

kubernetes-dashboard dashboard-metrics-scraper ClusterIP 10.101.228.146 <none> 8000/TCP 4m39s

kubernetes-dashboard kubernetes-dashboard NodePort 10.100.215.190 <none> 443:31132/TCP 4m39s
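The interactive `kubectl edit` step can also be done with `kubectl patch`, reading the assigned port back through a JSONPath query. This sketch assumes kubectl is configured as in step 6. `expose_dashboard` is a hypothetical helper. The namespace and Service names are the ones created by recommended.yaml above.

```bash
#!/usr/bin/env bash
# expose_dashboard NODE_IP
# Switches the Dashboard Service to NodePort non-interactively and prints
# the URL to open in a browser.
expose_dashboard() {
  local node_ip=$1 ns=kubernetes-dashboard svc=kubernetes-dashboard port
  kubectl -n "$ns" patch svc "$svc" -p '{"spec":{"type":"NodePort"}}' >/dev/null || return 1
  port=$(kubectl -n "$ns" get svc "$svc" -o jsonpath='{.spec.ports[0].nodePort}') || return 1
  echo "https://${node_ip}:${port}"
}

# Usage on master: expose_dashboard 10.1.1.11
```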

Show all pods with details:

[root@master ~]# kubectl get pods -A -o wide

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

kube-system calico-kube-controllers-5b97f5d8cf-qrg87 1/1 Running 0 40m 10.10.219.66 master <none> <none>

kube-system calico-node-4j8s9 1/1 Running 0 40m 10.1.1.10 master <none> <none>

kube-system calico-node-7vnhb 1/1 Running 0 40m 10.1.1.11 node1 <none> <none>

kube-system coredns-74586cf9b6-glsgz 1/1 Running 0 15h 10.10.219.65 master <none> <none>

kube-system coredns-74586cf9b6-hgtf7 1/1 Running 0 15h 10.10.219.67 master <none> <none>

kube-system etcd-master 1/1 Running 1 (91m ago) 15h 10.1.1.10 master <none> <none>

kube-system kube-apiserver-master 1/1 Running 1 (91m ago) 15h 10.1.1.10 master <none> <none>

kube-system kube-controller-manager-master 1/1 Running 1 (91m ago) 15h 10.1.1.10 master <none> <none>

kube-system kube-proxy-mq7b9 1/1 Running 1 (91m ago) 15h 10.1.1.11 node1 <none> <none>

kube-system kube-proxy-wwr6d 1/1 Running 1 (91m ago) 15h 10.1.1.10 master <none> <none>

kube-system kube-scheduler-master 1/1 Running 1 (91m ago) 15h 10.1.1.10 master <none> <none>

kubernetes-dashboard dashboard-metrics-scraper-7bfdf779ff-pbnzr 1/1 Running 0 18m 10.10.166.131 node1 <none> <none>

kubernetes-dashboard kubernetes-dashboard-6465b7f54c-wtmf2 1/1 Running 0 18m 10.10.166.132 node1 <none> <none>

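Open the Dashboard at https://10.1.1.11:31132. A NodePort is reachable through any node's IP. Expect a browser warning about the certificate.

Create an access account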

tee ./dash.yaml <<'EOF'
apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: kubernetes-dashboard
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin-user
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: admin-user
  namespace: kubernetes-dashboard
EOF

kubectl apply -f dash.yaml

# serviceaccount/admin-user created

# clusterrolebinding.rbac.authorization.k8s.io/admin-user created

Get an access token

User creation reference

Reference for related errors

[root@master ~]# kubectl -n kubernetes-dashboard create token admin-user

eyJhbGciOiJSUzI1NiIsImtpZCI6Im5HTDhEcGZlcjFoR2N0eTJ6cGdVTzJ6M3pLUFZlRWR4SnluT0VEcWNLV1kifQ.eyJhdWQiOlsiaHR0cHM6Ly9rdWJlcm5ldGVzLmRlZmF1bHQuc3ZjLmNsdXN0ZXIubG9jYWwiXSwiZXhwIjoxNjYxMzI1NjA4LCJpYXQiOjE2NjEzMjIwMDgsImlzcyI6Imh0dHBzOi8va3ViZXJuZXRlcy5kZWZhdWx0LnN2Yy5jbHVzdGVyLmxvY2FsIiwia3ViZXJuZXRlcy5pbyI6eyJuYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsInNlcnZpY2VhY2NvdW50Ijp7Im5hbWUiOiJhZG1pbi11c2VyIiwidWlkIjoiOGY1ZDA4MzItZWFiYy00MjhiLWE2ZmYtOTcyMGRjN2Y3NDQ3In19LCJuYmYiOjE2NjEzMjIwMDgsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDprdWJlcm5ldGVzLWRhc2hib2FyZDphZG1pbi11c2VyIn0.S2V1A8HkWLzHVA5lKmG1Ib1R7xfzWTZj7VyHOUy_aqmDeU2089t9bmXZENje2oJSzPNasWAGt6C9pI-V4M9pElLvEYRZ6yDZIndMFu4ioGILqoKrvuhI-Kyakx6r-cijmRQy3B5Uzh9l3GtZzf9xv5DuPvjauPcOhRKW6euejMH5oJEZfX-8Ng87ZzwT__r6m-6DDzybAsBlefWVtDzSuwcC1yj3pMK54ohLC8J_qZ8BEq67atHafX2XGD3wXedwMB1W0I6Yb0Jb4O3mU6wPTKuadKI5eoxxQ-qXhpK4d13N3IHDQG69gDhkfdeaA_kpA0cvkFkkrq6AnHUGaYkVlg
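Tokens from `kubectl create token` are short-lived. In the token above, exp minus iat is 3600 seconds, i.e. one hour. You can request a longer lifetime with `--duration` (for example `--duration=24h`), though the API server may cap it.

The sketch below reads numeric claims such as exp and iat from a token. `jwt_numeric_claim` is hypothetical. It only decodes the payload and does not verify the signature.

```bash
#!/usr/bin/env bash
# jwt_numeric_claim TOKEN CLAIM
# JWT segments are base64url without padding, so the payload is mapped back
# to standard base64 and re-padded before decoding. No signature check.
jwt_numeric_claim() {
  local payload=${1#*.}          # drop the header segment
  payload=${payload%%.*}         # drop the signature segment
  payload=$(printf '%s' "$payload" | tr '_-' '/+')
  while [ $(( ${#payload} % 4 )) -ne 0 ]; do
    payload+="="
  done
  printf '%s' "$payload" | base64 -d | grep -o "\"$2\":[0-9]*" | cut -d: -f2
}

# Usage: seconds left on a freshly created token
# tok=$(kubectl -n kubernetes-dashboard create token admin-user)
# echo $(( $(jwt_numeric_claim "$tok" exp) - $(date +%s) ))
```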

Paste the token into the Dashboard login page to sign in.

- 12. Test with an nginx deployment

kubectl create deployment nginx --image=nginx

watch -n 3 kubectl get pods -A -o wide

Expose the port:

[root@master ~]# kubectl expose deployment nginx --port=80 --type=NodePort

service/nginx exposed

[root@master ~]# kubectl get pods,svc

NAME READY STATUS RESTARTS AGE

pod/nginx-76d6c9b8c-5xwm8 1/1 Running 0 2m

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 56m

service/nginx NodePort 10.97.190.10 <none> 80:30856/TCP 7s

Access nginx through either node:

http://10.1.1.10:30856/

http://10.1.1.11:30856/

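Other topics

metrics-server

Enabling IPVS

Installing ingress-nginx

Setting up NFS

Watch out for Kubernetes certificate expiry: the certificates kubeadm issues (other than the CA) are valid for one year by default, as the apiserver certificate below shows

Certificate renewal reference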

[root@master ~]# openssl x509 -in /etc/kubernetes/pki/apiserver.crt -noout -text |grep ' Not '

Not Before: Aug 23 11:37:27 2022 GMT

Not After : Aug 23 11:37:27 2023 GMT
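The same check can be wrapped to print the remaining days directly. This sketch assumes GNU date, which parses openssl's notAfter timestamp. `cert_days_left` is hypothetical. To check all kubeadm-managed certificates at once, run `kubeadm certs check-expiration`.

```bash
#!/usr/bin/env bash
# cert_days_left [CERT]
# Prints the number of whole days until CERT expires
# (default: the apiserver certificate).
cert_days_left() {
  local crt=${1:-/etc/kubernetes/pki/apiserver.crt}
  local end end_s now_s
  end=$(openssl x509 -in "$crt" -noout -enddate) || return 1
  end=${end#notAfter=}                 # e.g. "Aug 23 11:37:27 2023 GMT"
  end_s=$(date -d "$end" +%s) || return 1
  now_s=$(date +%s)
  echo $(( (end_s - now_s) / 86400 ))
}

# Usage on master: cert_days_left
```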

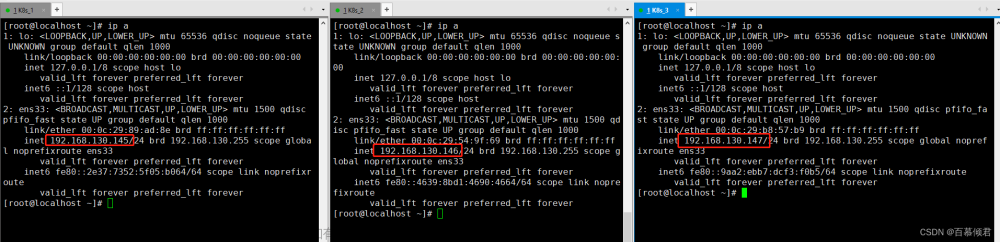

Inspect the finished cluster's network interfaces, iptables rules, routes and kernel settings (on node1):

[root@node1 ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:6d:1b:ff brd ff:ff:ff:ff:ff:ff

inet 10.1.1.11/24 brd 10.1.1.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet6 fe80::8ae3:1458:f8a8:e3a3/64 scope link noprefixroute

valid_lft forever preferred_lft forever

3: tunl0@NONE: <NOARP,UP,LOWER_UP> mtu 1480 qdisc noqueue state UNKNOWN group default qlen 1000

link/ipip 0.0.0.0 brd 0.0.0.0

inet 10.10.166.128/32 scope global tunl0

valid_lft forever preferred_lft forever

[root@node1 ~]# iptables -S

-P INPUT ACCEPT

-P FORWARD ACCEPT

-P OUTPUT ACCEPT

-N KUBE-EXTERNAL-SERVICES

-N KUBE-FIREWALL

-N KUBE-FORWARD

-N KUBE-KUBELET-CANARY

-N KUBE-NODEPORTS

-N KUBE-PROXY-CANARY

-N KUBE-SERVICES

-N cali-FORWARD

-N cali-INPUT

-N cali-OUTPUT

-N cali-cidr-block

-N cali-from-hep-forward

-N cali-from-host-endpoint

-N cali-from-wl-dispatch

-N cali-to-hep-forward

-N cali-to-host-endpoint

-N cali-to-wl-dispatch

-N cali-wl-to-host

-A INPUT -m comment --comment "cali:Cz_u1IQiXIMmKD4c" -j cali-INPUT

-A INPUT -m comment --comment "kubernetes health check service ports" -j KUBE-NODEPORTS

-A INPUT -m conntrack --ctstate NEW -m comment --comment "kubernetes externally-visible service portals" -j KUBE-EXTERNAL-SERVICES

-A INPUT -j KUBE-FIREWALL

-A FORWARD -m comment --comment "cali:wUHhoiAYhphO9Mso" -j cali-FORWARD

-A FORWARD -m comment --comment "kubernetes forwarding rules" -j KUBE-FORWARD

-A FORWARD -m conntrack --ctstate NEW -m comment --comment "kubernetes service portals" -j KUBE-SERVICES

-A FORWARD -m conntrack --ctstate NEW -m comment --comment "kubernetes externally-visible service portals" -j KUBE-EXTERNAL-SERVICES

-A FORWARD -m comment --comment "cali:S93hcgKJrXEqnTfs" -m comment --comment "Policy explicitly accepted packet." -m mark --mark 0x10000/0x10000 -j ACCEPT

-A FORWARD -m comment --comment "cali:mp77cMpurHhyjLrM" -j MARK --set-xmark 0x10000/0x10000

-A OUTPUT -m comment --comment "cali:tVnHkvAo15HuiPy0" -j cali-OUTPUT

-A OUTPUT -m conntrack --ctstate NEW -m comment --comment "kubernetes service portals" -j KUBE-SERVICES

-A OUTPUT -j KUBE-FIREWALL

-A KUBE-FIREWALL -m comment --comment "kubernetes firewall for dropping marked packets" -m mark --mark 0x8000/0x8000 -j DROP

-A KUBE-FIREWALL ! -s 127.0.0.0/8 -d 127.0.0.0/8 -m comment --comment "block incoming localnet connections" -m conntrack ! --ctstate RELATED,ESTABLISHED,DNAT -j DROP

-A KUBE-FORWARD -m conntrack --ctstate INVALID -j DROP

-A KUBE-FORWARD -m comment --comment "kubernetes forwarding rules" -m mark --mark 0x4000/0x4000 -j ACCEPT

-A KUBE-FORWARD -m comment --comment "kubernetes forwarding conntrack rule" -m conntrack --ctstate RELATED,ESTABLISHED -j ACCEPT

-A cali-FORWARD -m comment --comment "cali:vjrMJCRpqwy5oRoX" -j MARK --set-xmark 0x0/0xe0000

-A cali-FORWARD -m comment --comment "cali:A_sPAO0mcxbT9mOV" -m mark --mark 0x0/0x10000 -j cali-from-hep-forward

-A cali-FORWARD -i cali+ -m comment --comment "cali:8ZoYfO5HKXWbB3pk" -j cali-from-wl-dispatch

-A cali-FORWARD -o cali+ -m comment --comment "cali:jdEuaPBe14V2hutn" -j cali-to-wl-dispatch

-A cali-FORWARD -m comment --comment "cali:12bc6HljsMKsmfr-" -j cali-to-hep-forward

-A cali-FORWARD -m comment --comment "cali:NOSxoaGx8OIstr1z" -j cali-cidr-block

-A cali-INPUT -p ipv4 -m comment --comment "cali:PajejrV4aFdkZojI" -m comment --comment "Allow IPIP packets from Calico hosts" -m set --match-set cali40all-hosts-net src -m addrtype --dst-type LOCAL -j ACCEPT

-A cali-INPUT -p ipv4 -m comment --comment "cali:_wjq-Yrma8Ly1Svo" -m comment --comment "Drop IPIP packets from non-Calico hosts" -j DROP

-A cali-INPUT -i cali+ -m comment --comment "cali:8TZGxLWh_Eiz66wc" -g cali-wl-to-host

-A cali-INPUT -m comment --comment "cali:6McIeIDvPdL6PE1T" -m mark --mark 0x10000/0x10000 -j ACCEPT

-A cali-INPUT -m comment --comment "cali:YGPbrUms7NId8xVa" -j MARK --set-xmark 0x0/0xf0000

-A cali-INPUT -m comment --comment "cali:2gmY7Bg2i0i84Wk_" -j cali-from-host-endpoint

-A cali-INPUT -m comment --comment "cali:q-Vz2ZT9iGE331LL" -m comment --comment "Host endpoint policy accepted packet." -m mark --mark 0x10000/0x10000 -j ACCEPT

-A cali-OUTPUT -m comment --comment "cali:Mq1_rAdXXH3YkrzW" -m mark --mark 0x10000/0x10000 -j ACCEPT

-A cali-OUTPUT -o cali+ -m comment --comment "cali:69FkRTJDvD5Vu6Vl" -j RETURN

-A cali-OUTPUT -p ipv4 -m comment --comment "cali:AnEsmO6bDZbQntWW" -m comment --comment "Allow IPIP packets to other Calico hosts" -m set --match-set cali40all-hosts-net dst -m addrtype --src-type LOCAL -j ACCEPT

-A cali-OUTPUT -m comment --comment "cali:9e9Uf3GU5tX--Lxy" -j MARK --set-xmark 0x0/0xf0000

-A cali-OUTPUT -m comment --comment "cali:0f3LDz_VKuHFaA2K" -m conntrack ! --ctstate DNAT -j cali-to-host-endpoint

-A cali-OUTPUT -m comment --comment "cali:OgU2f8BVEAZ_fwkq" -m comment --comment "Host endpoint policy accepted packet." -m mark --mark 0x10000/0x10000 -j ACCEPT

-A cali-from-wl-dispatch -m comment --comment "cali:zTj6P0TIgYvgz-md" -m comment --comment "Unknown interface" -j DROP

-A cali-to-wl-dispatch -m comment --comment "cali:7KNphB1nNHw80nIO" -m comment --comment "Unknown interface" -j DROP

-A cali-wl-to-host -m comment --comment "cali:Ee9Sbo10IpVujdIY" -j cali-from-wl-dispatch

-A cali-wl-to-host -m comment --comment "cali:nSZbcOoG1xPONxb8" -m comment --comment "Configured DefaultEndpointToHostAction" -j ACCEPT

[root@node1 ~]# route -n

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

0.0.0.0 10.1.1.2 0.0.0.0 UG 100 0 0 ens33

10.1.1.0 0.0.0.0 255.255.255.0 U 100 0 0 ens33

10.10.166.128 0.0.0.0 255.255.255.192 U 0 0 0 *

10.10.219.64 10.1.1.10 255.255.255.192 UG 0 0 0 tunl0

[root@node1 ~]# sysctl -a | grep net.ipv4.ip_forward

net.ipv4.ip_forward = 1

net.ipv4.ip_forward_use_pmtu = 0

sysctl: reading key "net.ipv6.conf.all.stable_secret"

sysctl: reading key "net.ipv6.conf.default.stable_secret"

sysctl: reading key "net.ipv6.conf.ens33.stable_secret"

sysctl: reading key "net.ipv6.conf.lo.stable_secret"

sysctl: reading key "net.ipv6.conf.tunl0.stable_secret"

Building on the IPVS preparation in step 3 of the required settings, switch kube-proxy to IPVS mode

Reference

On master, change the mode:

# kubectl -n kube-system edit cm kube-proxy

# Change these keys (in the config.conf section):

metricsBindAddress: "127.0.0.1:10249"

mode: 'ipvs'

On master, delete the existing kube-proxy pods so they are recreated with the new mode:

kubectl -n kube-system get pod -l k8s-app=kube-proxy | grep -v 'NAME' | awk '{print $1}' | xargs kubectl -n kube-system delete pod
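Instead of editing the ConfigMap interactively, you can rewrite the `mode` key with a filter and feed the result back with `kubectl replace`. This sketch assumes the default kube-proxy ConfigMap layout, where `mode: ""` sits on its own line inside config.conf. `set_ipvs_mode` is hypothetical and leaves metricsBindAddress untouched.

```bash
#!/usr/bin/env bash
# set_ipvs_mode < configmap.yaml > configmap-ipvs.yaml
# Rewrites the "mode:" key to "ipvs", keeping its indentation. The pattern is
# anchored to leading whitespace, so keys like detectLocalMode are not touched.
set_ipvs_mode() {
  sed -E 's/^([[:space:]]*)mode: .*/\1mode: "ipvs"/'
}

# Usage on master:
# kubectl -n kube-system get cm kube-proxy -o yaml | set_ipvs_mode | kubectl replace -f -
# kubectl -n kube-system delete pod -l k8s-app=kube-proxy
```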

On both master and node1, clean up the old iptables rules:

Note that iptables rules are still generated even in IPVS mode.

# Back up the current rules first
iptables-save > ./iptables.$(date +%F)

iptables -t filter -F

iptables -t filter -X

iptables -t nat -F

iptables -t nat -X

iptables -S

iptables -S -t nat

Test

kubectl run busybox --image=busybox:1.28 --restart=Never --rm -it -- sh

# Inside the pod: checks DNS resolution and outbound connectivity
ping www.baidu.com
