Install Docker first

Installing Docker on Huawei Cloud (ARM architecture)

Set the hostname

# Check the Linux kernel version

uname -r

4.18.0-80.7.2.el7.aarch64

# Alternatively, use uname -a

# Set the hostname to k8s-master; reconnect to the session to see the change

hostnamectl --static set-hostname k8s-master

# Check the hostname

hostname
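The kubeadm init output later in this article warns that hostname "k8s-master" could not be reached. One common way to address that kind of warning is to map the hostname to the node's IP in /etc/hosts. The following is a minimal sketch under that assumption; `add_hosts_entry` is a hypothetical helper written for illustration, and 192.168.0.5 is the master IP used elsewhere in this article.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append "IP NAME" to a hosts file unless NAME is already mapped.
# Usage: add_hosts_entry IP NAME [HOSTS_FILE]   (HOSTS_FILE defaults to /etc/hosts)
add_hosts_entry() {
  local ip="$1" name="$2" file="${3:-/etc/hosts}"
  # Skip if a non-comment line already lists NAME as a whole field after the address.
  if awk -v n="$name" '
       /^[[:space:]]*#/ { next }
       { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
       END { exit found ? 0 : 1 }' "$file" 2>/dev/null; then
    return 0
  fi
  printf '%s %s\n' "$ip" "$name" >> "$file"
}
```

As root, `add_hosts_entry 192.168.0.5 k8s-master` would add the mapping once; running it again leaves the file unchanged.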

Configure the Kubernetes yum repository (arm64)

cat <<EOF > /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-aarch64
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF

# Clear the yum cache

yum clean all

# Download package metadata from the repositories and cache it locally

yum makecache

# List the available kubectl versions

yum list kubectl --showduplicates | sort -r

# Output:

Loading mirror speeds from cached hostfile

Loaded plugins: fastestmirror

kubectl.aarch64 1.9.9-0 kubernetes

kubectl.aarch64 1.9.8-0 kubernetes

kubectl.aarch64 1.9.7-0 kubernetes

kubectl.aarch64 1.9.6-0 kubernetes

kubectl.aarch64 1.9.5-0 kubernetes

kubectl.aarch64 1.9.4-0 kubernetes

kubectl.aarch64 1.9.3-0 kubernetes

kubectl.aarch64 1.9.2-0 kubernetes

kubectl.aarch64 1.9.11-0 kubernetes

kubectl.aarch64 1.9.1-0 kubernetes

kubectl.aarch64 1.9.10-0 kubernetes

kubectl.aarch64 1.9.0-0 kubernetes

kubectl.aarch64 1.8.9-0 kubernetes

kubectl.aarch64 1.8.8-0 kubernetes

kubectl.aarch64 1.8.7-0 kubernetes

kubectl.aarch64 1.8.6-0 kubernetes

kubectl.aarch64 1.8.5-0 kubernetes

kubectl.aarch64 1.8.4-0 kubernetes

kubectl.aarch64 1.8.3-0 kubernetes

kubectl.aarch64 1.8.2-0 kubernetes

kubectl.aarch64 1.8.15-0 kubernetes

kubectl.aarch64 1.8.14-0 kubernetes

kubectl.aarch64 1.8.13-0 kubernetes

kubectl.aarch64 1.8.12-0 kubernetes

kubectl.aarch64 1.8.11-0 kubernetes

kubectl.aarch64 1.8.1-0 kubernetes

kubectl.aarch64 1.8.10-0 kubernetes

kubectl.aarch64 1.8.0-0 kubernetes

kubectl.aarch64 1.7.9-0 kubernetes

kubectl.aarch64 1.7.8-1 kubernetes

kubectl.aarch64 1.7.7-1 kubernetes

kubectl.aarch64 1.7.6-1 kubernetes

kubectl.aarch64 1.7.5-0 kubernetes

kubectl.aarch64 1.7.4-0 kubernetes

kubectl.aarch64 1.7.3-1 kubernetes

kubectl.aarch64 1.7.2-0 kubernetes
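Note that `sort -r` compares lines as plain text, which is why 1.9.11-0 appears between 1.9.2-0 and 1.9.1-0 in the listing above. A small sketch of picking the highest version with version-aware sorting follows; it assumes GNU coreutils (`sort -V`), and `latest_version` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Read package versions (one per line) on stdin and print the highest one.
# Relies on "sort -V" (version sort), a GNU coreutils extension.
latest_version() {
  sort -V | tail -n 1
}

# Example with three versions taken from the listing above:
printf '%s\n' 1.9.9-0 1.9.11-0 1.9.10-0 | latest_version   # prints 1.9.11-0
```

With real yum output, the version column would have to be extracted first (for example with awk) before piping it into the helper.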

Configure iptables

cat <<EOF > /etc/sysctl.d/k8s.conf
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
vm.swappiness=0
EOF

# Apply the settings above

sysctl --system

# Or set the values directly (this does not persist across reboots)

echo "1" >/proc/sys/net/bridge/bridge-nf-call-iptables

echo "1" >/proc/sys/net/bridge/bridge-nf-call-ip6tables

# Make sure both print 1

cat /proc/sys/net/bridge/bridge-nf-call-ip6tables

cat /proc/sys/net/bridge/bridge-nf-call-iptables
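The two checks above can be wrapped in a small script. This is a sketch, not part of the original procedure; `check_bridge_sysctl` is a hypothetical helper, and the optional directory argument exists only so the check can be tried against a scratch directory. The hint about `br_netfilter` reflects the fact that these /proc entries are only present once that kernel module is loaded.

```shell
#!/usr/bin/env bash
# Hypothetical helper: verify that both bridge-netfilter switches are set to 1.
# Usage: check_bridge_sysctl [DIR]   (DIR defaults to /proc/sys/net/bridge)
check_bridge_sysctl() {
  local dir="${1:-/proc/sys/net/bridge}" key value
  for key in bridge-nf-call-iptables bridge-nf-call-ip6tables; do
    if [ ! -r "$dir/$key" ]; then
      # These files only exist once the br_netfilter kernel module is loaded.
      echo "missing: $dir/$key (try: modprobe br_netfilter)" >&2
      return 1
    fi
    value=$(cat "$dir/$key")
    if [ "$value" != "1" ]; then
      echo "$key is $value, expected 1" >&2
      return 1
    fi
  done
  echo "bridge netfilter settings OK"
}
```

Calling `check_bridge_sysctl` with no arguments inspects the live kernel settings and returns a non-zero status if either value is not 1.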

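Install kubelet, kubeadm, and kubectl

# Install the latest version (a specific version can be installed instead)

yum install -y kubelet kubeadm kubectl

# Install a specific version of kubelet, kubeadm, and kubectl

yum install -y kubelet-1.19.3-0 kubeadm-1.19.3-0 kubectl-1.19.3-0

# Check the kubelet version

kubelet --version

# Output: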

Kubernetes v1.19.3

# Check the kubeadm version

kubeadm version

# Output:

kubeadm version: &version.Info{Major:"1", Minor:"19", GitVersion:"v1.19.3", GitCommit:"1e11e4a2108024935ecfcb2912226cedeafd99df", GitTreeState:"clean", BuildDate:"2020-10-14T12:47:53Z", GoVersion:"go1.15.2", Compiler:"gc", Platform:"linux/arm64"}
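Since kubelet and kubeadm are installed as separate packages, it can help to confirm that they report the same version. The sketch below extracts the GitVersion field from output in the format shown above; `extract_git_version` is a hypothetical helper, and the sample line is an abbreviated copy of the real output.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read "kubeadm version" output on stdin and print the GitVersion value.
extract_git_version() {
  sed -n 's/.*GitVersion:"\([^"]*\)".*/\1/p'
}

# Example using an abbreviated copy of the line shown above:
echo 'kubeadm version: &version.Info{Major:"1", Minor:"19", GitVersion:"v1.19.3", Platform:"linux/arm64"}' \
  | extract_git_version   # prints v1.19.3
```

On a real host this would be used as `kubeadm version | extract_git_version`, and the result compared with the second word of the `kubelet --version` output.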

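Start kubelet and enable it at boot

# Reload systemd unit files

systemctl daemon-reload

# Start kubelet

systemctl start kubelet

# Check kubelet status

systemctl status kubelet

# If kubelet has not started successfully, ignore the error for now; kubeadm init will bring it up later

# Enable start at boot

systemctl enable kubelet

# Check whether kubelet starts at boot (enabled: on, disabled: off)

systemctl is-enabled kubelet

# View the logs

journalctl -xefu kubelet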

journalctl -xefu kubelet

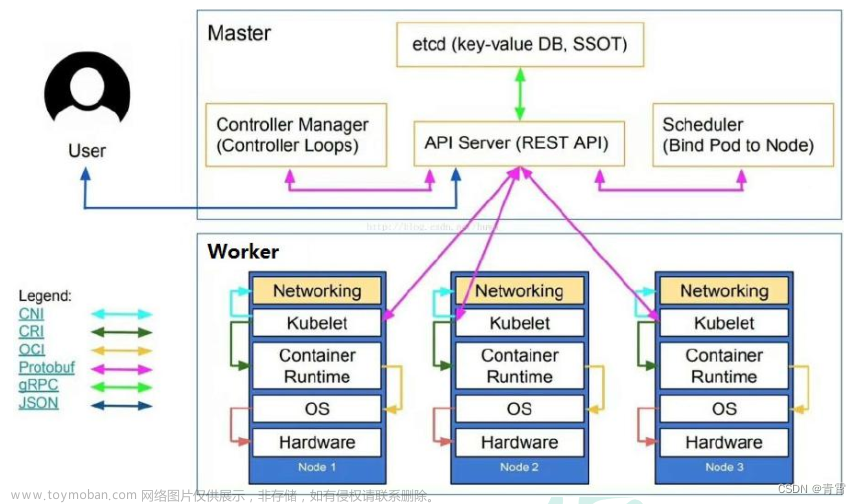

Initialize the Kubernetes cluster master

--apiserver-advertise-address=192.168.0.5 is the master's IP address

--image-repository registry.aliyuncs.com/google_containers sets the image registry. If it is omitted, the default is k8s.gcr.io, which cannot be reached from mainland China without a proxy

# Run the initialization command

kubeadm init --image-repository registry.aliyuncs.com/google_containers --apiserver-advertise-address=192.168.0.5 --kubernetes-version=v1.19.3 --pod-network-cidr=10.244.0.0/16 --service-cidr=10.1.0.0/16

# Output like the following indicates success:

W0511 11:11:24.998096 15272 configset.go:348] WARNING: kubeadm cannot validate component configs for API groups [kubelet.config.k8s.io kubeproxy.config.k8s.io]

[init] Using Kubernetes version: v1.19.3

[preflight] Running pre-flight checks

[WARNING SystemVerification]: this Docker version is not on the list of validated versions: 20.10.6. Latest validated version: 19.03

[WARNING Hostname]: hostname "k8s-master" could not be reached

[WARNING Hostname]: hostname "k8s-master": lookup k8s-master on 100.125.1.250:53: no such host

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [k8s-master kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.1.0.1 192.168.0.147]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [k8s-master localhost] and IPs [192.168.0.5 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [k8s-master localhost] and IPs [192.168.0.5 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Starting the kubelet

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[apiclient] All control plane components are healthy after 16.501683 seconds

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config-1.19" in namespace kube-system with the configuration for the kubelets in the cluster

[upload-certs] Skipping phase. Please see --upload-certs

[mark-control-plane] Marking the node k8s-master as control-plane by adding the label "node-role.kubernetes.io/master=''"

[mark-control-plane] Marking the node k8s-master as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]

[bootstrap-token] Using token: rt0fpo.4axz6cd6eqpm1ihf

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to get nodes

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.0.5:6443 --token rt0fpo.4axz6.....m1ihf \

--discovery-token-ca-cert-hash sha256:ac20e89e8bf43b56......516a41305c1c1fd5c7

Be sure to record the last command in the output: kubeadm join…

### Keep this command; it is needed later when adding nodes

###kubeadm join 192.168.0.5:6443 --token rt0fpo.4axz6....
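One way to avoid losing the join command is to save the kubeadm init output to a file and extract the command from it afterwards. The sketch below assumes the output was saved (for example with `tee`) to a log file; `extract_join_command` and the file name are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: pull the two-part "kubeadm join ..." command out of a saved
# kubeadm init log and print it as a single line.
# Usage: extract_join_command LOGFILE
extract_join_command() {
  awk '
    /^[ \t]*kubeadm join/ { found = 1 }
    found {
      gsub(/^[ \t]+|[ \t]+$/, "")   # trim surrounding whitespace
      sub(/[ \t]*\\$/, "")          # drop the trailing line-continuation backslash
      if ($0 == "") next            # skip blank lines between the two parts
      printf "%s%s", sep, $0
      sep = " "
      if (++n == 2) exit
    }
    END { if (found) print "" }
  ' "$1"
}
```

For example, after `kubeadm init ... | tee kubeadm-init.log`, running `extract_join_command kubeadm-init.log` prints the join command on one line. If the command is lost anyway, `kubeadm token create --print-join-command` on the master generates a fresh one; bootstrap tokens expire after 24 hours by default.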

# Run the following commands as instructed in the output:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

List the cluster nodes

# List the nodes

kubectl get node

# Output:

NAME STATUS ROLES AGE VERSION

k8s-master NotReady master 4m13s v1.19.3

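# The status is NotReady because no network plugin has been installed yet

# Check the kubelet logs

journalctl -xef -u kubelet -n 20

# Output: the log reports that no CNI network plugin is installed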

May 11 11:15:26 k8s-master kubelet[16678]: W0511 11:15:26.356793 16678 cni.go:239] Unable to update cni config: no networks found in /etc/cni/net.d

May 11 11:15:28 k8s-master kubelet[16678]: E0511 11:15:28.237122 16678 kubelet.go:2103] Container runtime network not ready: NetworkReady=false reason:NetworkPluginNotReady message:docker: network plugin is not ready: cni config uninitialized
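The log message points at /etc/cni/net.d being empty. A quick way to check for this condition is sketched below; `cni_configured` is a hypothetical helper, and the list of file extensions is an assumption about which config files a CNI plugin typically drops into that directory.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if a CNI config directory contains at least one config file.
# Usage: cni_configured [DIR]   (DIR defaults to /etc/cni/net.d)
cni_configured() {
  local dir="${1:-/etc/cni/net.d}" f
  for f in "$dir"/*.conf "$dir"/*.conflist "$dir"/*.json; do
    # An unmatched glob stays literal, so test that the path really exists.
    [ -e "$f" ] && return 0
  done
  return 1
}
```

`cni_configured || echo "no CNI config yet"` would report the state seen in the log above.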

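Install the flannel network plugin (CNI)

# Create a directory

mkdir flannel && cd flannel

# Download the manifest

curl -O https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

# kube-flannel.yml references an image that has to be pulled; here it is pulled in advance

docker pull quay.io/coreos/flannel:v0.14.0-rc1

# Deploy the flannel network plugin

kubectl apply -f kube-flannel.yml

# After a short while, list the nodes again; the status has changed to Ready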

kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master Ready master 9m39s v1.19.3
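When more nodes are added, scanning the table by eye gets tedious. The following sketch filters `kubectl get nodes` output for nodes that are not yet Ready; `not_ready_nodes` is a hypothetical helper that only parses text in the column layout shown above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read "kubectl get nodes" output on stdin and print the
# names of nodes whose STATUS column is not exactly "Ready".
not_ready_nodes() {
  awk 'NR > 1 && NF >= 2 && $2 != "Ready" { print $1 }'
}
```

Usage would be `kubectl get nodes | not_ready_nodes`; empty output means every listed node reports Ready.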

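Add a node to the cluster

Following the steps above, install docker, kubelet, kubectl, and kubeadm on the node

Then run the command printed at the end of the master initialization

kubeadm join 192.168.0.5:6443 --token rt0fpo.4axz6....

# After the node has joined, check from the master

kubectl get nodes

# List the images Kubernetes needs to download

kubeadm config images list

# Output: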

I0511 09:36:15.377901 9508 version.go:252] remote version is much newer: v1.21.0; falling back to: stable-1.19

W0511 09:36:17.124062 9508 configset.go:348] WARNING: kubeadm cannot validate component configs for API groups [kubelet.config.k8s.io kubeproxy.config.k8s.io]

k8s.gcr.io/kube-apiserver:v1.19.10

k8s.gcr.io/kube-controller-manager:v1.19.10

k8s.gcr.io/kube-scheduler:v1.19.10

k8s.gcr.io/kube-proxy:v1.19.10

k8s.gcr.io/pause:3.2

k8s.gcr.io/etcd:3.4.13-0

k8s.gcr.io/coredns:1.7.0

If --image-repository registry.aliyuncs.com/google_containers is not specified at initialization, these images have to be pulled through a proxy, or pulled from another mirror and then retagged with the names kubeadm expects
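The "pull from a mirror, then retag" approach can be sketched as follows. This is an illustration only: it assumes the mirror hosts the same image names and tags under the registry.aliyuncs.com/google_containers prefix, and `to_mirror` and `pull_via_mirror` are hypothetical helper names.

```shell
#!/usr/bin/env bash
# Assumption: the mirror hosts the same image names and tags under this prefix.
MIRROR="registry.aliyuncs.com/google_containers"

# Hypothetical helper: map a k8s.gcr.io image reference to the mirror.
to_mirror() {
  printf '%s\n' "$1" | sed "s#^k8s\.gcr\.io/#${MIRROR}/#"
}

# Pull each image from the mirror, then tag it with the name kubeadm expects.
# Reads image references (one per line) on stdin; requires docker.
pull_via_mirror() {
  local image
  while IFS= read -r image; do
    case "$image" in
      k8s.gcr.io/*)
        docker pull "$(to_mirror "$image")" &&
          docker tag "$(to_mirror "$image")" "$image"
        ;;
    esac
  done
}
```

A possible invocation is `kubeadm config images list --kubernetes-version v1.19.3 2>/dev/null | pull_via_mirror`. Lines that do not start with k8s.gcr.io/ are ignored.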

Note: --apiserver-advertise-address=192.168.0.5 must use the private (internal) IP. Using the public IP produces the following error:

W0511 09:58:49.950542 20273 configset.go:348] WARNING: kubeadm cannot validate component configs for API groups [kubelet.config.k8s.io kubeproxy.config.k8s.io]

[init] Using Kubernetes version: v1.19.3

[preflight] Running pre-flight checks

[WARNING SystemVerification]: this Docker version is not on the list of validated versions: 20.10.6. Latest validated version: 19.03

[WARNING Hostname]: hostname "k8s-master" could not be reached

[WARNING Hostname]: hostname "k8s-master": lookup k8s-master on 100.125.1.250:53: no such host

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [k8s-master kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.1.0.1 116.65.37.123]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [k8s-master localhost] and IPs [116.65.37.123 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [k8s-master localhost] and IPs [116.65.37.123 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Starting the kubelet

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[kubelet-check] Initial timeout of 40s passed.

Unfortunately, an error has occurred:

timed out waiting for the condition

This error is likely caused by:

- The kubelet is not running

- The kubelet is unhealthy due to a misconfiguration of the node in some way (required cgroups disabled)

If you are on a systemd-powered system, you can try to troubleshoot the error with the following commands:

- 'systemctl status kubelet'

- 'journalctl -xeu kubelet'

Additionally, a control plane component may have crashed or exited when started by the container runtime.

To troubleshoot, list all containers using your preferred container runtimes CLI.

Here is one example how you may list all Kubernetes containers running in docker:

- 'docker ps -a | grep kube | grep -v pause'

Once you have found the failing container, you can inspect its logs with:

- 'docker logs CONTAINERID'

error execution phase wait-control-plane: couldn't initialize a Kubernetes cluster

To see the stack trace of this error execute with --v=5 or higher

The message suggests adding --v=5 to print more detail

# Run it again with --v=5

kubeadm init --image-repository registry.aliyuncs.com/google_containers --apiserver-advertise-address=116.73.117.123 --kubernetes-version=v1.19.3 --pod-network-cidr=10.244.0.0/16 --service-cidr=10.1.0.0/16 --v=5

# The error output is as follows:

W0511 10:04:28.999779 24707 configset.go:348] WARNING: kubeadm cannot validate component configs for API groups [kubelet.config.k8s.io kubeproxy.config.k8s.io]

[init] Using Kubernetes version: v1.19.3

[preflight] Running pre-flight checks

[WARNING SystemVerification]: this Docker version is not on the list of validated versions: 20.10.6. Latest validated version: 19.03

[WARNING Hostname]: hostname "k8s-master" could not be reached

[WARNING Hostname]: hostname "k8s-master": lookup k8s-master on 100.125.1.250:53: no such host

error execution phase preflight: [preflight] Some fatal errors occurred:

[ERROR Port-10259]: Port 10259 is in use

[ERROR Port-10257]: Port 10257 is in use

[ERROR FileAvailable--etc-kubernetes-manifests-kube-apiserver.yaml]: /etc/kubernetes/manifests/kube-apiserver.yaml already exists

[ERROR FileAvailable--etc-kubernetes-manifests-kube-controller-manager.yaml]: /etc/kubernetes/manifests/kube-controller-manager.yaml already exists

[ERROR FileAvailable--etc-kubernetes-manifests-kube-scheduler.yaml]: /etc/kubernetes/manifests/kube-scheduler.yaml already exists

[ERROR FileAvailable--etc-kubernetes-manifests-etcd.yaml]: /etc/kubernetes/manifests/etcd.yaml already exists

[ERROR Port-10250]: Port 10250 is in use

[preflight] If you know what you are doing, you can make a check non-fatal with `--ignore-preflight-errors=...`

To see the stack trace of this error execute with --v=5 or higher

# The errors report that ports such as 10259 and 10257 are already in use, among other problems
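To pull just the port numbers out of a preflight report like the one above, a short filter is enough. This is a sketch that parses the message format shown in the log; `ports_in_use` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read kubeadm preflight output on stdin and print the
# port numbers reported as "in use", one per line, in ascending order.
ports_in_use() {
  sed -n 's/.*Port \([0-9][0-9]*\) is in use.*/\1/p' | sort -n
}
```

Each reported port can then be inspected with a tool such as `ss -ltnp`. In this case the ports are most likely still held by the kubelet and control-plane components left over from the earlier failed init, which is what the reset in the next step clears.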

# Reset Kubernetes

kubeadm reset

# Or use kubeadm reset -f to skip the confirmation prompt

# Then initialize again

kubeadm init --image-repository registry.aliyuncs.com/google_containers --apiserver-advertise-address=116.73.117.123 --kubernetes-version=v1.19.3 --pod-network-cidr=10.244.0.0/16 --service-cidr=10.1.0.0/16 --v=5

# It still fails and hangs at the line below; the cause is the public IP, a pitfall worth noting:

[kubelet-check] Initial timeout of 40s passed.
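A simple guard before running kubeadm init can catch this mistake early. The sketch below checks whether an address falls in one of the RFC 1918 private ranges; `is_private_ipv4` is a hypothetical helper, and it assumes the private address of the cloud host lies in one of those ranges, as 192.168.0.5 does here.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if the argument is an RFC 1918 private IPv4 address
# (10.0.0.0/8, 172.16.0.0/12, or 192.168.0.0/16). The format is not validated further.
is_private_ipv4() {
  case "$1" in
    10.*|192.168.*) return 0 ;;
    172.1[6-9].*|172.2[0-9].*|172.3[01].*) return 0 ;;
    *) return 1 ;;
  esac
}
```

For example, `is_private_ipv4 192.168.0.5` succeeds, while `is_private_ipv4 116.73.117.123` fails, so a wrapper script could refuse to pass the latter to --apiserver-advertise-address.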
