Apache Hive 系列文章

1、apache-hive-3.1.2简介及部署(三种部署方式-内嵌模式、本地模式和远程模式)及验证详解

2、hive相关概念详解–架构、读写文件机制、数据存储

3、hive的使用示例详解-建表、数据类型详解、内部外部表、分区表、分桶表

4、hive的使用示例详解-事务表、视图、物化视图、DDL(数据库、表以及分区)管理详细操作

5、hive的load、insert、事务表使用详解及示例

6、hive的select(GROUP BY、ORDER BY、CLUSTER BY、SORT BY、LIMIT、union、CTE)、join使用详解及示例

7、hive shell客户端与属性配置、内置运算符、函数(内置运算符与自定义UDF运算符)

8、hive的关系运算、逻辑预算、数学运算、数值运算、日期函数、条件函数和字符串函数的语法与使用示例详解

9、hive的explode、Lateral View侧视图、聚合函数、窗口函数、抽样函数使用详解

10、hive综合示例:数据多分隔符(正则RegexSerDe)、url解析、行列转换常用函数(case when、union、concat和explode)详细使用示例

11、hive综合应用示例:json解析、窗口函数应用(连续登录、级联累加、topN)、拉链表应用

12、Hive优化-文件存储格式和压缩格式优化与job执行优化(执行计划、MR属性、join、优化器、谓词下推和数据倾斜优化)详细介绍及示例

13、java api访问hive操作示例

本文介绍了hive的事务表、视图、物化视图的概念以及使用示例,同时介绍了数据库、表以及分区DDL的详细操作。

本文依赖hive环境可用。

本文分为7个部分,即事务表、视图、物化视图、数据库ddl操作、表的ddl操作、分区修复以及常见的show语法。

一、Hive Transactional Tables事务表

1、Hive事务表应用场景

Hive本身从设计之初时,是不支持事务的,从Hive0.14版本开始,具有ACID语义的事务已添加到Hive中,以解决以下场景下遇到的问题:

- 流式传输数据

使用如Apache Flume、Apache Kafka之类的工具将数据流式传输到Hadoop集群中。虽然这些工具可以每秒数百行或更多行的速度写入数据,但是Hive一般不会以每秒创建一个时间分区。因此通常使用这些工具将数据流式传输到已有分区中,但这有可能会造成脏读(数据传输一半失败,回滚了)。需要通过事务功能,允许用户获得一致的数据视图并避免过多的小文件产生。 - 尺寸变化缓慢-缓慢变化维

星型模式数据仓库中,维度表随时间缓慢变化。例如,零售商将开设新商店,需要将其添加到商店表中,或者现有商店可能会更改其平方英尺或某些其他跟踪的特征。这些更改导致需要插入单个记录或更新单条记录(取决于所选策略)。 - 数据更新或修改

有时发现收集的数据不正确,需要更正。

2、Hive事务表局限性

虽然Hive支持了具有ACID语义的事务,但需要在一定条件下使用。如下:

- 不支持BEGIN,COMMIT和ROLLBACK。所有语言操作都是自动提交的。

- 仅支持ORC文件格式(STORED AS ORC)。

- 默认情况下事务配置为关闭。需要配置参数开启使用。

- 表必须是分桶表(Bucketed)才可以使用事务功能。

- 表参数transactional必须为true;

- 外部表不能成为ACID表,不允许从非ACID会话读取/写入ACID表。

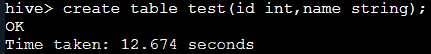

3、示例

以下以示例的形式详细介绍了事务表的使用、验证。

--Hive中事务表的创建使用

--1、开启事务配置(可以使用set设置当前session生效 也可以配置在hive-site.xml中)

set hive.support.concurrency = true; --Hive是否支持并发

set hive.enforce.bucketing = true; --从Hive2.0开始不再需要 是否开启分桶功能

set hive.exec.dynamic.partition.mode = nonstrict; --动态分区模式 非严格

set hive.txn.manager = org.apache.hadoop.hive.ql.lockmgr.DbTxnManager; --

set hive.compactor.initiator.on = true; --是否在Metastore实例上运行启动线程和清理线程

set hive.compactor.worker.threads = 1; --在此metastore实例上运行多少个压缩程序工作线程。

--2、创建Hive事务表

create table trans_student(

id int,

name String,

age int

) clustered by (id) into 2 buckets stored as orc TBLPROPERTIES('transactional'='true');

--3、针对事务表进行insert update delete操作

insert into trans_student (id, name, age) values (1,"allen",18);

update trans_student set age = 20 where id = 1;

delete from trans_student where id =1;

select * from trans_student;

0: jdbc:hive2://server4:10000> set hive.support.concurrency = true;

No rows affected (0.003 seconds)

0: jdbc:hive2://server4:10000> set hive.enforce.bucketing = true;

No rows affected (0.003 seconds)

0: jdbc:hive2://server4:10000> set hive.exec.dynamic.partition.mode = nonstrict;

No rows affected (0.003 seconds)

0: jdbc:hive2://server4:10000> set hive.txn.manager = org.apache.hadoop.hive.ql.lockmgr.DbTxnManager;

No rows affected (0.003 seconds)

0: jdbc:hive2://server4:10000> set hive.compactor.initiator.on = true;

No rows affected (0.003 seconds)

0: jdbc:hive2://server4:10000> set hive.compactor.worker.threads = 1;

No rows affected (0.003 seconds)

0: jdbc:hive2://server4:10000> create table trans_student(

. . . . . . . . . . . . . . .> id int,

. . . . . . . . . . . . . . .> name String,

. . . . . . . . . . . . . . .> age int

. . . . . . . . . . . . . . .> )clustered by (id) into 2 buckets stored as orc TBLPROPERTIES('transactional'='true');

INFO : Compiling command(queryId=alanchan_20221018180956_6db8ba96-3429-466c-af8f-b3866e38a717): create table trans_student(

id int,

name String,

age int

)clustered by (id) into 2 buckets stored as orc TBLPROPERTIES('transactional'='true')

INFO : Semantic Analysis Completed (retrial = false)

INFO : Returning Hive schema: Schema(fieldSchemas:null, properties:null)

INFO : Completed compiling command(queryId=alanchan_20221018180956_6db8ba96-3429-466c-af8f-b3866e38a717); Time taken: 0.144 seconds

INFO : Executing command(queryId=alanchan_20221018180956_6db8ba96-3429-466c-af8f-b3866e38a717): create table trans_student(

id int,

name String,

age int

)clustered by (id) into 2 buckets stored as orc TBLPROPERTIES('transactional'='true')

INFO : Starting task [Stage-0:DDL] in serial mode

INFO : Completed executing command(queryId=alanchan_20221018180956_6db8ba96-3429-466c-af8f-b3866e38a717); Time taken: 0.243 seconds

INFO : OK

No rows affected (0.481 seconds)

0: jdbc:hive2://server4:10000> insert into trans_student (id, name, age) values (1,"allen",18);

INFO : Compiling command(queryId=alanchan_20221018181004_dba275a9-2ad4-4c02-91d4-0496f4f9296a): insert into trans_student (id, name, age) values (1,"allen",18)

INFO : Semantic Analysis Completed (retrial = false)

INFO : Returning Hive schema: Schema(fieldSchemas:[FieldSchema(name:_col0, type:int, comment:null), FieldSchema(name:_col1, type:string, comment:null), FieldSchema(name:_col2, type:int, comment:null)], properties:null)

INFO : Completed compiling command(queryId=alanchan_20221018181004_dba275a9-2ad4-4c02-91d4-0496f4f9296a); Time taken: 0.397 seconds

INFO : Executing command(queryId=alanchan_20221018181004_dba275a9-2ad4-4c02-91d4-0496f4f9296a): insert into trans_student (id, name, age) values (1,"allen",18)

WARN : Hive-on-MR is deprecated in Hive 2 and may not be available in the future versions. Consider using a different execution engine (i.e. spark, tez) or using Hive 1.X releases.

INFO : Query ID = alanchan_20221018181004_dba275a9-2ad4-4c02-91d4-0496f4f9296a

INFO : Total jobs = 2

INFO : Launching Job 1 out of 2

INFO : Starting task [Stage-1:MAPRED] in serial mode

INFO : Number of reduce tasks determined at compile time: 2

INFO : In order to change the average load for a reducer (in bytes):

INFO : set hive.exec.reducers.bytes.per.reducer=<number>

INFO : In order to limit the maximum number of reducers:

INFO : set hive.exec.reducers.max=<number>

INFO : In order to set a constant number of reducers:

INFO : set mapreduce.job.reduces=<number>

INFO : number of splits:1

INFO : Submitting tokens for job: job_1666082878454_0013

INFO : Executing with tokens: []

INFO : The url to track the job: http://server1:8088/proxy/application_1666082878454_0013/

INFO : Starting Job = job_1666082878454_0013, Tracking URL = http://server1:8088/proxy/application_1666082878454_0013/

INFO : Kill Command = /usr/local/bigdata/hadoop-3.1.4/bin/mapred job -kill job_1666082878454_0013

INFO : Hadoop job information for Stage-1: number of mappers: 1; number of reducers: 2

INFO : 2022-10-18 18:10:56,845 Stage-1 map = 0%, reduce = 0%

INFO : 2022-10-18 18:11:04,981 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 3.06 sec

INFO : 2022-10-18 18:11:12,100 Stage-1 map = 100%, reduce = 50%, Cumulative CPU 6.11 sec

INFO : 2022-10-18 18:11:16,176 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 9.8 sec

INFO : MapReduce Total cumulative CPU time: 9 seconds 800 msec

INFO : Ended Job = job_1666082878454_0013

INFO : Starting task [Stage-0:MOVE] in serial mode

INFO : Loading data to table test.trans_student from hdfs://HadoopHAcluster/user/hive/warehouse/test.db/trans_student/.hive-staging_hive_2022-10-18_18-10-04_420_2262593005380306446-1/-ext-10000

INFO : Launching Job 2 out of 2

INFO : Starting task [Stage-3:MAPRED] in serial mode

INFO : Number of reduce tasks determined at compile time: 1

INFO : In order to change the average load for a reducer (in bytes):

INFO : set hive.exec.reducers.bytes.per.reducer=<number>

INFO : In order to limit the maximum number of reducers:

INFO : set hive.exec.reducers.max=<number>

INFO : In order to set a constant number of reducers:

INFO : set mapreduce.job.reduces=<number>

INFO : number of splits:1

INFO : Submitting tokens for job: job_1666082878454_0014

INFO : Executing with tokens: []

INFO : The url to track the job: http://server1:8088/proxy/application_1666082878454_0014/

INFO : Starting Job = job_1666082878454_0014, Tracking URL = http://server1:8088/proxy/application_1666082878454_0014/

INFO : Kill Command = /usr/local/bigdata/hadoop-3.1.4/bin/mapred job -kill job_1666082878454_0014

INFO : Hadoop job information for Stage-3: number of mappers: 1; number of reducers: 1

INFO : 2022-10-18 18:12:11,091 Stage-3 map = 0%, reduce = 0%

INFO : 2022-10-18 18:12:12,112 Stage-3 map = 100%, reduce = 100%, Cumulative CPU 3.15 sec

INFO : MapReduce Total cumulative CPU time: 3 seconds 150 msec

INFO : Ended Job = job_1666082878454_0014

INFO : Starting task [Stage-2:STATS] in serial mode

INFO : MapReduce Jobs Launched:

INFO : Stage-Stage-1: Map: 1 Reduce: 2 Cumulative CPU: 9.8 sec HDFS Read: 18938 HDFS Write: 1233 SUCCESS

INFO : Stage-Stage-3: Map: 1 Reduce: 1 Cumulative CPU: 3.15 sec HDFS Read: 17697 HDFS Write: 910294 SUCCESS

INFO : Total MapReduce CPU Time Spent: 12 seconds 950 msec

INFO : Completed executing command(queryId=alanchan_20221018181004_dba275a9-2ad4-4c02-91d4-0496f4f9296a); Time taken: 129.483 seconds

INFO : OK

No rows affected (129.91 seconds)

0: jdbc:hive2://server4:10000> select * from trans_student;

INFO : Compiling command(queryId=alanchan_20221018181231_c207860b-e7d8-4e2a-8ecb-e66f682b33d2): select * from trans_student

INFO : Semantic Analysis Completed (retrial = false)

INFO : Returning Hive schema: Schema(fieldSchemas:[FieldSchema(name:trans_student.id, type:int, comment:null), FieldSchema(name:trans_student.name, type:string, comment:null), FieldSchema(name:trans_student.age, type:int, comment:null)], properties:null)

INFO : Completed compiling command(queryId=alanchan_20221018181231_c207860b-e7d8-4e2a-8ecb-e66f682b33d2); Time taken: 0.133 seconds

INFO : Executing command(queryId=alanchan_20221018181231_c207860b-e7d8-4e2a-8ecb-e66f682b33d2): select * from trans_student

INFO : Completed executing command(queryId=alanchan_20221018181231_c207860b-e7d8-4e2a-8ecb-e66f682b33d2); Time taken: 0.0 seconds

INFO : OK

+-------------------+---------------------+--------------------+

| trans_student.id | trans_student.name | trans_student.age |

+-------------------+---------------------+--------------------+

| 1 | allen | 18 |

+-------------------+---------------------+--------------------+

1 row selected (0.278 seconds)

0: jdbc:hive2://server4:10000> update trans_student

. . . . . . . . . . . . . . .> set age = 20

. . . . . . . . . . . . . . .> where id = 1;

INFO : Compiling command(queryId=alanchan_20221018181259_50db9156-55fd-446c-8d70-b467665ad739): update trans_student

set age = 20

where id = 1

INFO : Semantic Analysis Completed (retrial = false)

INFO : Returning Hive schema: Schema(fieldSchemas:[FieldSchema(name:row__id, type:struct<writeid:bigint,bucketid:int,rowid:bigint>, comment:null), FieldSchema(name:id, type:int, comment:null), FieldSchema(name:name, type:string, comment:null), FieldSchema(name:_c3, type:int, comment:null)], properties:null)

INFO : Completed compiling command(queryId=alanchan_20221018181259_50db9156-55fd-446c-8d70-b467665ad739); Time taken: 0.191 seconds

INFO : Executing command(queryId=alanchan_20221018181259_50db9156-55fd-446c-8d70-b467665ad739): update trans_student

set age = 20

where id = 1

WARN : Hive-on-MR is deprecated in Hive 2 and may not be available in the future versions. Consider using a different execution engine (i.e. spark, tez) or using Hive 1.X releases.

INFO : Query ID = alanchan_20221018181259_50db9156-55fd-446c-8d70-b467665ad739

INFO : Total jobs = 1

INFO : Launching Job 1 out of 1

INFO : Starting task [Stage-1:MAPRED] in serial mode

INFO : Number of reduce tasks determined at compile time: 2

INFO : In order to change the average load for a reducer (in bytes):

INFO : set hive.exec.reducers.bytes.per.reducer=<number>

INFO : In order to limit the maximum number of reducers:

INFO : set hive.exec.reducers.max=<number>

INFO : In order to set a constant number of reducers:

INFO : set mapreduce.job.reduces=<number>

INFO : number of splits:1

INFO : Submitting tokens for job: job_1666082878454_0015

INFO : Executing with tokens: []

INFO : The url to track the job: http://server1:8088/proxy/application_1666082878454_0015/

INFO : Starting Job = job_1666082878454_0015, Tracking URL = http://server1:8088/proxy/application_1666082878454_0015/

INFO : Kill Command = /usr/local/bigdata/hadoop-3.1.4/bin/mapred job -kill job_1666082878454_0015

INFO : Hadoop job information for Stage-1: number of mappers: 1; number of reducers: 2

INFO : 2022-10-18 18:13:56,064 Stage-1 map = 0%, reduce = 0%

INFO : 2022-10-18 18:14:04,199 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 3.6 sec

INFO : 2022-10-18 18:14:13,341 Stage-1 map = 100%, reduce = 50%, Cumulative CPU 6.25 sec

INFO : 2022-10-18 18:14:17,407 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 9.45 sec

INFO : MapReduce Total cumulative CPU time: 9 seconds 450 msec

INFO : Ended Job = job_1666082878454_0015

INFO : Starting task [Stage-0:MOVE] in serial mode

INFO : Loading data to table test.trans_student from hdfs://HadoopHAcluster/user/hive/warehouse/test.db/trans_student/.hive-staging_hive_2022-10-18_18-12-59_718_5259662380576737591-1/-ext-10000

INFO : Starting task [Stage-2:STATS] in serial mode

INFO : MapReduce Jobs Launched:

INFO : Stage-Stage-1: Map: 1 Reduce: 2 Cumulative CPU: 9.45 sec HDFS Read: 20717 HDFS Write: 1630 SUCCESS

INFO : Total MapReduce CPU Time Spent: 9 seconds 450 msec

INFO : Completed executing command(queryId=alanchan_20221018181259_50db9156-55fd-446c-8d70-b467665ad739); Time taken: 78.863 seconds

INFO : OK

No rows affected (79.071 seconds)

0: jdbc:hive2://server4:10000> select * from trans_student;

INFO : Compiling command(queryId=alanchan_20221018181423_7ef19231-c9db-42fd-a407-d7de169f37f4): select * from trans_student

INFO : Semantic Analysis Completed (retrial = false)

INFO : Returning Hive schema: Schema(fieldSchemas:[FieldSchema(name:trans_student.id, type:int, comment:null), FieldSchema(name:trans_student.name, type:string, comment:null), FieldSchema(name:trans_student.age, type:int, comment:null)], properties:null)

INFO : Completed compiling command(queryId=alanchan_20221018181423_7ef19231-c9db-42fd-a407-d7de169f37f4); Time taken: 0.116 seconds

INFO : Executing command(queryId=alanchan_20221018181423_7ef19231-c9db-42fd-a407-d7de169f37f4): select * from trans_student

INFO : Completed executing command(queryId=alanchan_20221018181423_7ef19231-c9db-42fd-a407-d7de169f37f4); Time taken: 0.0 seconds

INFO : OK

+-------------------+---------------------+--------------------+

| trans_student.id | trans_student.name | trans_student.age |

+-------------------+---------------------+--------------------+

| 1 | allen | 20 |

+-------------------+---------------------+--------------------+

1 row selected (0.155 seconds)

0: jdbc:hive2://server4:10000> delete from trans_student where id =1;

INFO : Compiling command(queryId=alanchan_20221018181451_331d24a0-380d-436a-829f-3beb00ece998): delete from trans_student where id =1

INFO : Semantic Analysis Completed (retrial = false)

INFO : Returning Hive schema: Schema(fieldSchemas:[FieldSchema(name:row__id, type:struct<writeid:bigint,bucketid:int,rowid:bigint>, comment:null)], properties:null)

INFO : Completed compiling command(queryId=alanchan_20221018181451_331d24a0-380d-436a-829f-3beb00ece998); Time taken: 0.177 seconds

INFO : Executing command(queryId=alanchan_20221018181451_331d24a0-380d-436a-829f-3beb00ece998): delete from trans_student where id =1

WARN : Hive-on-MR is deprecated in Hive 2 and may not be available in the future versions. Consider using a different execution engine (i.e. spark, tez) or using Hive 1.X releases.

INFO : Query ID = alanchan_20221018181451_331d24a0-380d-436a-829f-3beb00ece998

INFO : Total jobs = 1

INFO : Launching Job 1 out of 1

INFO : Starting task [Stage-1:MAPRED] in serial mode

INFO : Number of reduce tasks determined at compile time: 2

INFO : In order to change the average load for a reducer (in bytes):

INFO : set hive.exec.reducers.bytes.per.reducer=<number>

INFO : In order to limit the maximum number of reducers:

INFO : set hive.exec.reducers.max=<number>

INFO : In order to set a constant number of reducers:

INFO : set mapreduce.job.reduces=<number>

INFO : number of splits:2

INFO : Submitting tokens for job: job_1666082878454_0016

INFO : Executing with tokens: []

INFO : The url to track the job: http://server1:8088/proxy/application_1666082878454_0016/

INFO : Starting Job = job_1666082878454_0016, Tracking URL = http://server1:8088/proxy/application_1666082878454_0016/

INFO : Kill Command = /usr/local/bigdata/hadoop-3.1.4/bin/mapred job -kill job_1666082878454_0016

INFO : Hadoop job information for Stage-1: number of mappers: 2; number of reducers: 2

INFO : 2022-10-18 18:15:40,476 Stage-1 map = 0%, reduce = 0%

INFO : 2022-10-18 18:15:47,604 Stage-1 map = 50%, reduce = 0%, Cumulative CPU 3.76 sec

INFO : 2022-10-18 18:15:52,692 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 7.37 sec

INFO : 2022-10-18 18:15:55,746 Stage-1 map = 100%, reduce = 50%, Cumulative CPU 10.03 sec

INFO : 2022-10-18 18:15:59,827 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 13.62 sec

INFO : MapReduce Total cumulative CPU time: 13 seconds 620 msec

INFO : Ended Job = job_1666082878454_0016

INFO : Starting task [Stage-0:MOVE] in serial mode

INFO : Loading data to table test.trans_student from hdfs://HadoopHAcluster/user/hive/warehouse/test.db/trans_student/.hive-staging_hive_2022-10-18_18-14-51_041_4068059741455713828-1/-ext-10000

INFO : Starting task [Stage-2:STATS] in serial mode

INFO : MapReduce Jobs Launched:

INFO : Stage-Stage-1: Map: 2 Reduce: 2 Cumulative CPU: 13.62 sec HDFS Read: 29074 HDFS Write: 848 SUCCESS

INFO : Total MapReduce CPU Time Spent: 13 seconds 620 msec

INFO : Completed executing command(queryId=alanchan_20221018181451_331d24a0-380d-436a-829f-3beb00ece998); Time taken: 71.099 seconds

INFO : OK

No rows affected (71.293 seconds)

0: jdbc:hive2://server4:10000> select * from trans_student;

INFO : Compiling command(queryId=alanchan_20221018181605_e66179c2-dcbf-45e6-9db1-4e0e148f97a6): select * from trans_student

INFO : Semantic Analysis Completed (retrial = false)

INFO : Returning Hive schema: Schema(fieldSchemas:[FieldSchema(name:trans_student.id, type:int, comment:null), FieldSchema(name:trans_student.name, type:string, comment:null), FieldSchema(name:trans_student.age, type:int, comment:null)], properties:null)

INFO : Completed compiling command(queryId=alanchan_20221018181605_e66179c2-dcbf-45e6-9db1-4e0e148f97a6); Time taken: 1.244 seconds

INFO : Executing command(queryId=alanchan_20221018181605_e66179c2-dcbf-45e6-9db1-4e0e148f97a6): select * from trans_student

INFO : Completed executing command(queryId=alanchan_20221018181605_e66179c2-dcbf-45e6-9db1-4e0e148f97a6); Time taken: 0.0 seconds

INFO : OK

+-------------------+---------------------+--------------------+

| trans_student.id | trans_student.name | trans_student.age |

+-------------------+---------------------+--------------------+

+-------------------+---------------------+--------------------+

No rows selected (1.289 seconds)

二、Hive Views 视图

1、视图介绍

Hive中的视图(view)是一种虚拟表,只保存定义,不实际存储数据。

通常从真实的物理表查询中创建生成视图,也可以从已经存在的视图上创建新视图。

创建视图时,将冻结视图的架构,如果删除或更改基础表,则视图将失败。

视图是用来简化操作的,不缓冲记录,也没有提高查询性能。

2、示例

--hive中有一张真实的基础表t_usa_covid19

select * from test.t_usa_covid19;

--1、创建视图

create view v_usa_covid19_V as select count_date, county,state,deaths from t_usa_covid19 limit 5;

--从已有的视图中创建视图

create view v_usa_covid19_from_view as select * from v_usa_covid19_V limit 2;

--2、显示当前已有的视图

show tables;

show views;--hive v2.2.0之后支持

--3、视图的查询使用

select * from v_usa_covid19_V ;

--能否插入数据到视图中呢?

--不行 报错 SemanticException:A view cannot be used as target table for LOAD or INSERT

insert into v_usa_covid19_V select count_date,county,state,deaths from t_usa_covid19;

--4、查看视图定义

show create table v_usa_covid19_V ;

--5、删除视图

drop view v_usa_covid19_from_view;

--6、更改视图属性

alter view v_usa_covid19_V set TBLPROPERTIES ('comment' = 'This is a view');

--7、更改视图定义

alter view v_usa_covid19_V as select county,deaths from t_usa_covid19 limit 2;

0: jdbc:hive2://server4:10000> create view v_usa_covid19_V as select count_date, county,state,deaths from t_usa_covid19 limit 5;

INFO : Compiling command(queryId=alanchan_20221018181947_6074d102-1d5a-47f2-b393-5b30fa8f1135): create view v_usa_covid19_V as select count_date, county,state,deaths from t_usa_covid19 limit 5

INFO : Semantic Analysis Completed (retrial = false)

INFO : Returning Hive schema: Schema(fieldSchemas:[FieldSchema(name:count_date, type:string, comment:null), FieldSchema(name:county, type:string, comment:null), FieldSchema(name:state, type:string, comment:null), FieldSchema(name:deaths, type:int, comment:null)], properties:null)

INFO : Completed compiling command(queryId=alanchan_20221018181947_6074d102-1d5a-47f2-b393-5b30fa8f1135); Time taken: 0.105 seconds

INFO : Executing command(queryId=alanchan_20221018181947_6074d102-1d5a-47f2-b393-5b30fa8f1135): create view v_usa_covid19_V as select count_date, county,state,deaths from t_usa_covid19 limit 5

INFO : Starting task [Stage-1:DDL] in serial mode

INFO : Completed executing command(queryId=alanchan_20221018181947_6074d102-1d5a-47f2-b393-5b30fa8f1135); Time taken: 0.018 seconds

INFO : OK

No rows affected (0.138 seconds)

0: jdbc:hive2://server4:10000> select * from v_usa_covid19_V;

INFO : Compiling command(queryId=alanchan_20221018182000_c2a0d786-407c-49e6-bd2f-a74f41b8a79e): select * from v_usa_covid19_V

INFO : Semantic Analysis Completed (retrial = false)

INFO : Returning Hive schema: Schema(fieldSchemas:[FieldSchema(name:v_usa_covid19_v.count_date, type:string, comment:null), FieldSchema(name:v_usa_covid19_v.county, type:string, comment:null), FieldSchema(name:v_usa_covid19_v.state, type:string, comment:null), FieldSchema(name:v_usa_covid19_v.deaths, type:int, comment:null)], properties:null)

INFO : Completed compiling command(queryId=alanchan_20221018182000_c2a0d786-407c-49e6-bd2f-a74f41b8a79e); Time taken: 0.123 seconds

INFO : Executing command(queryId=alanchan_20221018182000_c2a0d786-407c-49e6-bd2f-a74f41b8a79e): select * from v_usa_covid19_V

INFO : Completed executing command(queryId=alanchan_20221018182000_c2a0d786-407c-49e6-bd2f-a74f41b8a79e); Time taken: 0.0 seconds

INFO : OK

+-----------------------------+-------------------------+------------------------+-------------------------+

| v_usa_covid19_v.count_date | v_usa_covid19_v.county | v_usa_covid19_v.state | v_usa_covid19_v.deaths |

+-----------------------------+-------------------------+------------------------+-------------------------+

| 2021-01-28 | Autauga | Alabama | 69 |

| 2021-01-28 | Baldwin | Alabama | 225 |

| 2021-01-28 | Barbour | Alabama | 40 |

| 2021-01-28 | Bibb | Alabama | 51 |

| 2021-01-28 | Blount | Alabama | 98 |

+-----------------------------+-------------------------+------------------------+-------------------------+

5 rows selected (0.144 seconds)

0: jdbc:hive2://server4:10000> create view v_usa_covid19_from_view as select * from v_usa_covid19_V limit 2;

INFO : Compiling command(queryId=alanchan_20221018182049_8cb61a56-2523-459d-b93f-9a6d0b2104dd): create view v_usa_covid19_from_view as select * from v_usa_covid19_V limit 2

INFO : Semantic Analysis Completed (retrial = false)

INFO : Returning Hive schema: Schema(fieldSchemas:[FieldSchema(name:count_date, type:string, comment:null), FieldSchema(name:county, type:string, comment:null), FieldSchema(name:state, type:string, comment:null), FieldSchema(name:deaths, type:int, comment:null)], properties:null)

INFO : Completed compiling command(queryId=alanchan_20221018182049_8cb61a56-2523-459d-b93f-9a6d0b2104dd); Time taken: 0.105 seconds

INFO : Executing command(queryId=alanchan_20221018182049_8cb61a56-2523-459d-b93f-9a6d0b2104dd): create view v_usa_covid19_from_view as select * from v_usa_covid19_V limit 2

INFO : Starting task [Stage-1:DDL] in serial mode

INFO : Completed executing command(queryId=alanchan_20221018182049_8cb61a56-2523-459d-b93f-9a6d0b2104dd); Time taken: 0.016 seconds

INFO : OK

No rows affected (0.135 seconds)

0: jdbc:hive2://server4:10000> select * from v_usa_covid19_from_view;

INFO : Compiling command(queryId=alanchan_20221018182103_3a9abfae-23f1-4529-a4b5-415c676f9064): select * from v_usa_covid19_from_view

INFO : Semantic Analysis Completed (retrial = false)

INFO : Returning Hive schema: Schema(fieldSchemas:[FieldSchema(name:v_usa_covid19_from_view.count_date, type:string, comment:null), FieldSchema(name:v_usa_covid19_from_view.county, type:string, comment:null), FieldSchema(name:v_usa_covid19_from_view.state, type:string, comment:null), FieldSchema(name:v_usa_covid19_from_view.deaths, type:int, comment:null)], properties:null)

INFO : Completed compiling command(queryId=alanchan_20221018182103_3a9abfae-23f1-4529-a4b5-415c676f9064); Time taken: 0.12 seconds

INFO : Executing command(queryId=alanchan_20221018182103_3a9abfae-23f1-4529-a4b5-415c676f9064): select * from v_usa_covid19_from_view

INFO : Completed executing command(queryId=alanchan_20221018182103_3a9abfae-23f1-4529-a4b5-415c676f9064); Time taken: 0.0 seconds

INFO : OK

+-------------------------------------+---------------------------------+--------------------------------+---------------------------------+

| v_usa_covid19_from_view.count_date | v_usa_covid19_from_view.county | v_usa_covid19_from_view.state | v_usa_covid19_from_view.deaths |

+-------------------------------------+---------------------------------+--------------------------------+---------------------------------+

| 2021-01-28 | Autauga | Alabama | 69 |

| 2021-01-28 | Baldwin | Alabama | 225 |

+-------------------------------------+---------------------------------+--------------------------------+---------------------------------+

2 rows selected (0.141 seconds)

0: jdbc:hive2://server4:10000> alter view v_usa_covid19_V as select county,deaths from t_usa_covid19 limit 2;

INFO : Compiling command(queryId=alanchan_20221018182353_b1857b4b-2845-46dd-83f4-02183058d0e4): alter view v_usa_covid19_V as select county,deaths from t_usa_covid19 limit 2

INFO : Semantic Analysis Completed (retrial = false)

INFO : Returning Hive schema: Schema(fieldSchemas:[FieldSchema(name:county, type:string, comment:null), FieldSchema(name:deaths, type:int, comment:null)], properties:null)

INFO : Completed compiling command(queryId=alanchan_20221018182353_b1857b4b-2845-46dd-83f4-02183058d0e4); Time taken: 0.073 seconds

INFO : Executing command(queryId=alanchan_20221018182353_b1857b4b-2845-46dd-83f4-02183058d0e4): alter view v_usa_covid19_V as select county,deaths from t_usa_covid19 limit 2

INFO : Starting task [Stage-0:DDL] in serial mode

INFO : Completed executing command(queryId=alanchan_20221018182353_b1857b4b-2845-46dd-83f4-02183058d0e4); Time taken: 0.029 seconds

INFO : OK

No rows affected (0.114 seconds)

0: jdbc:hive2://server4:10000> select * from v_usa_covid19_V;

INFO : Compiling command(queryId=alanchan_20221018182405_efc9a951-3284-49c0-ba80-592204c3f0c8): select * from v_usa_covid19_V

INFO : Semantic Analysis Completed (retrial = false)

INFO : Returning Hive schema: Schema(fieldSchemas:[FieldSchema(name:v_usa_covid19_v.county, type:string, comment:null), FieldSchema(name:v_usa_covid19_v.deaths, type:int, comment:null)], properties:null)

INFO : Completed compiling command(queryId=alanchan_20221018182405_efc9a951-3284-49c0-ba80-592204c3f0c8); Time taken: 0.13 seconds

INFO : Executing command(queryId=alanchan_20221018182405_efc9a951-3284-49c0-ba80-592204c3f0c8): select * from v_usa_covid19_V

INFO : Completed executing command(queryId=alanchan_20221018182405_efc9a951-3284-49c0-ba80-592204c3f0c8); Time taken: 0.0 seconds

INFO : OK

+-------------------------+-------------------------+

| v_usa_covid19_v.county | v_usa_covid19_v.deaths |

+-------------------------+-------------------------+

| Autauga | 69 |

| Baldwin | 225 |

+-------------------------+-------------------------+

2 rows selected (0.149 seconds)

3、视图优点

将真实表中特定的列数据提供给用户,保护数据隐式

降低查询的复杂度,优化查询语句

三、Materialized Views 物化视图

1、介绍

- 物化视图(Materialized View)是一个包括查询结果的数据库对像,可以用于预先计算并保存表连接或聚集等耗时较多的操作的结果。在执行查询时,就可以避免进行这些耗时的操作,而从快速的得到结果。

- 使用物化视图的目的就是通过预计算,提高查询性能,当然需要占用一定的存储空间。

- Hive3.0开始引入物化视图,并提供对于物化视图的查询自动重写机制(基于Apache Calcite实现)。

- Hive的物化视图还提供了物化视图存储选择机制,可以本地存储在Hive,也可以通过用户自定义storage handlers存储在其他系统(如Druid)。

- Hive引入物化视图的目的就是为了优化数据查询访问的效率,相当于从数据预处理的角度优化数据访问。

- Hive从3.0丢弃了index索引的语法支持,推荐使用物化视图和列式存储文件格式来加快查询的速度。

2、物化视图、视图区别

- 视图是虚拟的,逻辑存在的,只有定义没有存储数据。

- 物化视图是真实的,物理存在的,里面存储着预计算的数据。

- 物化视图能够缓存数据,在创建物化视图的时候就把数据缓存起来了,Hive把物化视图当成一张“表”,将数据缓存。而视图只是创建一个虚表,只有表结构,没有数据,实际查询的时候再去改写SQL去访问实际的数据表。

- 视图的目的是简化降低查询的复杂度,而物化视图的目的是提高查询性能。

3、物化视图使用注意事项

源数据是指基表数据,即基于table创建的物化视图的数据。

-

源数据更新后,物化视图需要手动重建。

-

如果源数据仅仅是insert ,物化视图更新时则是增量更新;

-

如果有update和delete,物化视图更新时则是完全重建(rebuild)。

-

增量更新的条件:

1、物化视图对应的表是事务表,micromanaged or ACID表。

2、If the materialized view definition contains a Group By clause, the materialized view should be stored in an ACID table, since it needs to support MERGE operation. For materialized view definitions consisting of Scan-Project-Filter-Join, this restriction does not exist。

如果物化视图中包含Group By,则该物化视图必须存储在ACID表中,因为它需要支持MERGE操作。对于由Scan-Project-Filter-Join组成的物化视图,不存在该限制。

A rebuild operation acquires an exclusive write lock over the materialized view, i.e., for a given materialized view, only one rebuild operation can be executed at a given time. -

物化视图是特殊的表,存储实际的数据,占用物理空间。

-

删除基表之前必须先删除基于该基表所建立的物化视图。

-

物化视图创建语句是原子的,这意味着在填充所有查询结果之前,其他用户看不到物化视图。

-

不能基于物化视图的查询结果建立物化视图。

-

不能基于无表查询得到的查询结果建立物化视图。

-

不能对物化视图做增删改操作(即insert、update、delete、load、merge)。

-

能对物化视图做复杂查询操作,因其本质就是一张特殊的表。

-

当基表数据更新,需要手动对物化视图进行更新,否则物化视图将保留旧数据,即过期。

-

可通过describe语法查看基于acid表创建的物化视图是否过期。

-

基于非acid表创建的物化视图,无法通过descirbe语句查询物化视图是否过期。

-

创建物化视图只支持文件存储格式是“ORC”,并且支持事务(即“TBLPROPERTIES (‘transactional’=‘true’)”)的Hive内部表。

4、语法

- 物化视图创建后,select查询执行数据自动落地,“自动”也即在query的执行期间,任何用户对该物化视图是不可见的,执行完毕之后物化视图可用;

- 默认情况下,创建好的物化视图可被用于查询优化器optimizer查询重写,在物化视图创建期间可以通过DISABLE REWRITE参数设置禁止使用。

- 默认SerDe和storage format为hive.materializedview.serde、 hive.materializedview.fileformat;

- 物化视图支持将数据存储在外部系统(如druid)

- 目前支持物化视图的drop和show操作

- 当数据源变更(新数据插入inserted、数据修改modified),物化视图也需要更新以保持数据一致性,目前需要用户主动触发rebuild重构。

--物化视图的创建语法

CREATE MATERIALIZED VIEW [IF NOT EXISTS] [db_name.]materialized_view_name

[DISABLE REWRITE]

[COMMENT materialized_view_comment]

[PARTITIONED ON (col_name, ...)]

[CLUSTERED ON (col_name, ...) | DISTRIBUTED ON (col_name, ...) SORTED ON (col_name, ...)]

[

[ROW FORMAT row_format]

[STORED AS file_format]

| STORED BY 'storage.handler.class.name' [WITH SERDEPROPERTIES (...)]

]

[LOCATION hdfs_path]

[TBLPROPERTIES (property_name=property_value, ...)]

AS SELECT ...;

--存储在Druid中

CREATE MATERIALIZED VIEW druid_wiki_mv

STORED AS 'org.apache.hadoop.hive.druid.DruidStorageHandler'

AS

SELECT __time, page, user, c_added, c_removed

FROM src;

-- Drops a materialized view

DROP MATERIALIZED VIEW [db_name.]materialized_view_name;

-- Shows materialized views (with optional filters)

SHOW MATERIALIZED VIEWS [IN database_name];

-- Shows information about a specific materialized view

DESCRIBE [EXTENDED | FORMATTED] [db_name.]materialized_view_name;

ALTER MATERIALIZED VIEW [db_name.]materialized_view_name REBUILD;

5、物化视图的维护

When data in the source tables used by a materialized view changes, e.g., new data is inserted or existing data is modified, we will need to refresh the contents of the materialized view to keep it up-to-date with those changes. Currently, the rebuild operation for a materialized view needs to be triggered by the user. In particular, the user should execute the following statement:

ALTER MATERIALIZED VIEW [db_name.]materialized_view_name REBUILD;

Hive supports incremental view maintenance, i.e., only refresh data that was affected by the changes in the original source tables. Incremental view maintenance will decrease the rebuild step execution time. In addition, it will preserve LLAP cache for existing data in the materialized view.

By default, Hive will attempt to rebuild a materialized view incrementally, falling back to full rebuild if it is not possible. Current implementation only supports incremental rebuild when there were INSERT operations over the source tables, while UPDATE and DELETE operations will force a full rebuild of the materialized view.

To execute incremental maintenance, following conditions should be met:

The materialized view should only use transactional tables, either micromanaged or ACID.

If the materialized view definition contains a Group By clause, the materialized view should be stored in an ACID table, since it needs to support MERGE operation. For materialized view definitions consisting of Scan-Project-Filter-Join, this restriction does not exist.

A rebuild operation acquires an exclusive write lock over the materialized view, i.e., for a given materialized view, only one rebuild operation can be executed at a given time.

6、物化视图生命周期(Materialized view lifecycle)

若物化视图包含旧数据,没有更新,那么不会启动自动重写。

如果物化视图使用的是非事务表,那么无法判断数据是否过时,然而我们还希望,优化器会对查询进行自动重写。

这时可以通过SET hive.materializedview.rewriting.time.window=10min;设置定期刷新。

The parameter value can be also overridden by a concrete materialized view just by setting it as a TBLPROPERTIES when the materialization is created.

CREATE MATERIALIZED VIEW student_trans_agg

TBLPROPERTIES('hive.materializedview.rewriting.time.window'='1min')

AS SELECT dept, count(*) as dept_cnt from student_trans group by dept;

7、基于物化视图的查询重写

物化视图创建后即可用于相关查询的加速,即:用户提交查询query,若该query经过重写后可以命中已经存在的物化视图,则直接通过物化视图查询数据返回结果,以实现查询加速。

是否重写查询使用物化视图可以通过全局参数控制,默认为true: hive.materializedview.rewriting=true;

用户可选择性的控制指定的物化视图查询重写机制,语法如下:

ALTER MATERIALIZED VIEW [db_name.]materialized_view_name ENABLE|DISABLE REWRITE;

8、示例

1)、基本使用示例

物化视图好像需要一张事务表,如果是非事务表则出现如下错误

Error while compiling statement: FAILED: SemanticException org.apache.hadoop.hive.ql.parse.SemanticException: Automatic rewriting for materialized view cannot be enabled if the materialized view uses non-transactional tables (state=42000,code=40000)

--1、新建一张事务表 trans_student

set hive.support.concurrency = true; --Hive是否支持并发

set hive.enforce.bucketing = true; --从Hive2.0开始不再需要 是否开启分桶功能

set hive.exec.dynamic.partition.mode = nonstrict; --动态分区模式 非严格

set hive.txn.manager = org.apache.hadoop.hive.ql.lockmgr.DbTxnManager; --

set hive.compactor.initiator.on = true; --是否在Metastore实例上运行启动线程和清理线程

set hive.compactor.worker.threads = 1; --在此metastore实例上运行多少个压缩程序工作线程。

CREATE TABLE trans_student(

num int,

name string,

dept string

)

clustered by (num) into 2 buckets stored as orc TBLPROPERTIES('transactional'='true');

--2、导入数据到trans_student中

insert overwrite table trans_student select num,name,dept from student;

select * from trans_student ;

--3、对student_trans建立聚合物化视图

CREATE MATERIALIZED VIEW trans_student_agg

AS SELECT dept, count(*) as dept_cnt from trans_student group by dept;

--注意 这里当执行CREATE MATERIALIZED VIEW,会启动一个MR对物化视图进行构建

--可以发现当下的数据库中有了一个物化视图

show tables;

show materialized views;

--4、对原始表trans_student 查询

--由于会命中物化视图,重写query查询物化视图,查询速度会加快(没有启动MR,只是普通的table scan)

SELECT dept, count(*) as dept_cnt from trans_student group by dept;

0: jdbc:hive2://server4:10000> SELECT dept, count(*) as dept_cnt from trans_student group by dept;

INFO : Compiling command(queryId=alanchan_20221018192458_d0129519-e1e7-48df-93da-71685702a75f): SELECT dept, count(*) as dept_cnt from trans_student group by dept

INFO : Semantic Analysis Completed (retrial = false)

INFO : Returning Hive schema: Schema(fieldSchemas:[FieldSchema(name:dept, type:string, comment:null), FieldSchema(name:dept_cnt, type:bigint, comment:null)], properties:null)

INFO : Completed compiling command(queryId=alanchan_20221018192458_d0129519-e1e7-48df-93da-71685702a75f); Time taken: 0.257 seconds

INFO : Executing command(queryId=alanchan_20221018192458_d0129519-e1e7-48df-93da-71685702a75f): SELECT dept, count(*) as dept_cnt from trans_student group by dept

INFO : Completed executing command(queryId=alanchan_20221018192458_d0129519-e1e7-48df-93da-71685702a75f); Time taken: 0.0 seconds

INFO : OK

+-------+-----------+

| dept | dept_cnt |

+-------+-----------+

| CS | 7 |

| IS | 6 |

| MA | 9 |

+-------+-----------+

3 rows selected (0.286 seconds)

--5、查询执行计划可以发现 查询被自动重写为TableScan alias: test.trans_student_agg

--转换成了对物化视图的查询 提高了查询效率

explain SELECT dept, count(*) as dept_cnt from trans_student group by dept;

0: jdbc:hive2://server4:10000> explain SELECT dept, count(*) as dept_cnt from trans_student group by dept;

INFO : Compiling command(queryId=alanchan_20221018192526_3e801e68-ea6d-41c3-828b-be8321e97f91): explain SELECT dept, count(*) as dept_cnt from trans_student group by dept

INFO : Semantic Analysis Completed (retrial = false)

INFO : Returning Hive schema: Schema(fieldSchemas:[FieldSchema(name:Explain, type:string, comment:null)], properties:null)

INFO : Completed compiling command(queryId=alanchan_20221018192526_3e801e68-ea6d-41c3-828b-be8321e97f91); Time taken: 0.105 seconds

INFO : Executing command(queryId=alanchan_20221018192526_3e801e68-ea6d-41c3-828b-be8321e97f91): explain SELECT dept, count(*) as dept_cnt from trans_student group by dept

INFO : Starting task [Stage-1:EXPLAIN] in serial mode

INFO : Completed executing command(queryId=alanchan_20221018192526_3e801e68-ea6d-41c3-828b-be8321e97f91); Time taken: 0.009 seconds

INFO : OK

+----------------------------------------------------+

| Explain |

+----------------------------------------------------+

| STAGE DEPENDENCIES: |

| Stage-0 is a root stage |

| |

| STAGE PLANS: |

| Stage: Stage-0 |

| Fetch Operator |

| limit: -1 |

| Processor Tree: |

| TableScan |

| alias: test.trans_student_agg |

| Statistics: Num rows: 3 Data size: 282 Basic stats: COMPLETE Column stats: NONE |

| Select Operator |

| expressions: dept (type: string), dept_cnt (type: bigint) |

| outputColumnNames: _col0, _col1 |

| Statistics: Num rows: 3 Data size: 282 Basic stats: COMPLETE Column stats: NONE |

| ListSink |

| |

+----------------------------------------------------+

17 rows selected (0.137 seconds)

#直接查询物化视图

0: jdbc:hive2://server4:10000> SELECT * from trans_student_agg;

INFO : Compiling command(queryId=alanchan_20221019090037_168ce833-5fdd-4dae-acf1-99c260ae35b3): SELECT * from trans_student_agg

INFO : Semantic Analysis Completed (retrial = false)

INFO : Returning Hive schema: Schema(fieldSchemas:[FieldSchema(name:trans_student_agg.dept, type:string, comment:null), FieldSchema(name:trans_student_agg.dept_cnt, type:bigint, comment:null)], properties:null)

INFO : Completed compiling command(queryId=alanchan_20221019090037_168ce833-5fdd-4dae-acf1-99c260ae35b3); Time taken: 0.132 seconds

INFO : Executing command(queryId=alanchan_20221019090037_168ce833-5fdd-4dae-acf1-99c260ae35b3): SELECT * from trans_student_agg

INFO : Completed executing command(queryId=alanchan_20221019090037_168ce833-5fdd-4dae-acf1-99c260ae35b3); Time taken: 0.0 seconds

INFO : OK

+-------------------------+-----------------------------+

| trans_student_agg.dept | trans_student_agg.dept_cnt |

+-------------------------+-----------------------------+

| CS | 7 |

| IS | 6 |

| MA | 9 |

+-------------------------+-----------------------------+

3 rows selected (0.152 seconds)

2)、物化视图数据更新

# 定期更新

#1、手动重建更新

ALTER MATERIALIZED VIEW trans_student_agg REBUILD;

#2、设置参数定时更新

删除顺序

drop MATERIALIZED VIEW student_trans_agg;

drop table student_trans;

#出现过数据变化,但未发现有什么规律,没找到正确使用方式

create table student_trans(

num int,

name string,

sex string,

age int,

dept string)

stored as orc

TBLPROPERTIES('transactional'='true');

#向student_trans插入数据

insert into table student_trans(num,name,dept) select num,name,dept from student;

CREATE MATERIALIZED VIEW student_trans_agg

TBLPROPERTIES('hive.materializedview.rewriting.time.window'='1min')

AS SELECT dept, count(*) as dept_cnt from student_trans group by dept;

ALTER MATERIALIZED VIEW student_trans_agg REBUILD;

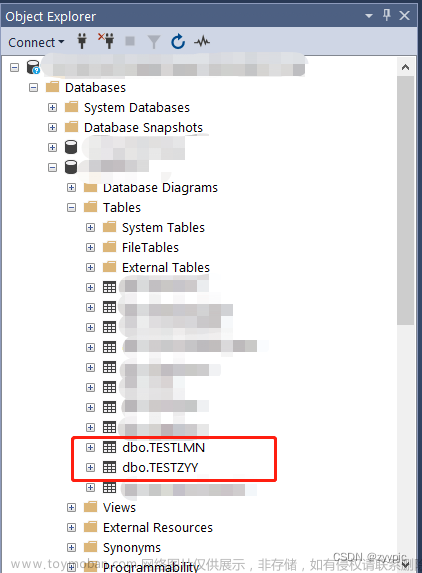

四、Hive Database|Schema(数据库)DDL操作

1、概述

在Hive中,DATABASE的概念和RDBMS中类似,我们称之为数据库,DATABASE和SCHEMA是可互换的,都可以使用。

默认的数据库叫做default,存储数据位置位于/user/hive/warehouse下。

用户自己创建的数据库存储位置是/user/hive/warehouse/database_name.db下。

--create database

--用于创建新的数据库

COMMENT:数据库的注释说明语句

LOCATION:指定数据库在HDFS存储位置,默认/user/hive/warehouse/dbname.db

WITH DBPROPERTIES:用于指定一些数据库的属性配置。

CREATE (DATABASE|SCHEMA) [IF NOT EXISTS] database_name

[COMMENT database_comment]

[LOCATION hdfs_path]

[WITH DBPROPERTIES (property_name=property_value, ...)];

2、示例

-- 创建数据库test

-- 注意:如果需要使用location指定路径的时候,最好指向的是一个新创建的空文件夹。

create database if not exists test

comment "this is my first db"

with dbproperties ('createdBy'='alan');

-- describe database

-- 显示Hive中数据库的名称,注释(如果已设置)及其在文件系统上的位置等信息。

-- EXTENDED关键字用于显示更多信息。可以将关键字describe简写成desc使用。

DESCRIBE DATABASE/SCHEMA [EXTENDED] db_name;

0: jdbc:hive2://server4:10000> DESCRIBE DATABASE EXTENDED test;

INFO : Compiling command(queryId=alanchan_20221019083119_617c71e4-5164-41c3-acad-392d08d9a71d): DESCRIBE DATABASE EXTENDED test

INFO : Semantic Analysis Completed (retrial = false)

INFO : Returning Hive schema: Schema(fieldSchemas:[FieldSchema(name:db_name, type:string, comment:from deserializer), FieldSchema(name:comment, type:string, comment:from deserializer), FieldSchema(name:location, type:string, comment:from deserializer), FieldSchema(name:owner_name, type:string, comment:from deserializer), FieldSchema(name:owner_type, type:string, comment:from deserializer), FieldSchema(name:parameters, type:string, comment:from deserializer)], properties:null)

INFO : Completed compiling command(queryId=alanchan_20221019083119_617c71e4-5164-41c3-acad-392d08d9a71d); Time taken: 0.034 seconds

INFO : Executing command(queryId=alanchan_20221019083119_617c71e4-5164-41c3-acad-392d08d9a71d): DESCRIBE DATABASE EXTENDED test

INFO : Starting task [Stage-0:DDL] in serial mode

INFO : Completed executing command(queryId=alanchan_20221019083119_617c71e4-5164-41c3-acad-392d08d9a71d); Time taken: 0.002 seconds

INFO : OK

+----------+----------+----------------------------------------------------+-------------+-------------+-------------+

| db_name | comment | location | owner_name | owner_type | parameters |

+----------+----------+----------------------------------------------------+-------------+-------------+-------------+

| test | | hdfs://HadoopHAcluster/user/hive/warehouse/test.db | alanchan | USER | |

+----------+----------+----------------------------------------------------+-------------+-------------+-------------+

1 row selected (0.057 seconds)

-- use database

--选择特定的数据库

--切换当前会话使用哪一个数据库进行操作

--drop database

--删除数据库

--默认行为是RESTRICT,这意味着仅在数据库为空时才删除它。

--要删除带有表的数据库(不为空的数据库),我们可以使用CASCADE。

DROP (DATABASE|SCHEMA) [IF EXISTS] database_name [RESTRICT|CASCADE];

drop database test CASCADE;

--alter database

--更改与Hive中的数据库关联的元数据

--更改数据库属性

ALTER (DATABASE|SCHEMA) database_name SET DBPROPERTIES (property_name=property_value, ...);

--更改数据库所有者

ALTER (DATABASE|SCHEMA) database_name SET OWNER USER user;

--更改数据库位置

ALTER (DATABASE|SCHEMA) database_name SET LOCATION hdfs_path;

五、Hive Table(表)DDL操作

1、概述

Hive中针对表的DDL操作可以说是DDL中的核心操作,包括建表、修改表、删除表、描述表元数据信息。

由于Hive建表之后加载映射数据很快,实际中如果建表有问题,可以不用修改,直接删除重建。

2、示例

--describe table

--显示Hive中表的元数据信息

--如果指定了EXTENDED关键字,则它将以Thrift序列化形式显示表的所有元数据。

--如果指定了FORMATTED关键字,则它将以表格格式显示元数据。

--drop table

--删除该表的元数据和数据

--如果已配置垃圾桶且未指定PURGE,则该表对应的数据实际上将移动到HDFS垃圾桶,而元数据完全丢失。

--删除EXTERNAL表时,该表中的数据不会从文件系统中删除,只删除元数据。

--如果指定了PURGE,则表数据跳过HDFS垃圾桶直接被删除。因此如果DROP失败,则无法挽回该表数据。

DROP TABLE [IF EXISTS] table_name [PURGE]; -- (Note: PURGE available in Hive 0.14.0 and later)

--truncate table

--从表中删除所有行。

--可以简单理解为清空表的所有数据但是保留表的元数据结构。

--如果HDFS启用了垃圾桶,数据将被丢进垃圾桶,否则将被删除。

TRUNCATE [TABLE] table_name;

truncate table t_student;

--alter table

--1、更改表名

ALTER TABLE table_name RENAME TO new_table_name;

ALTER table t_student RENAME TO students;

--2、更改表属性

ALTER TABLE table_name SET TBLPROPERTIES (property_name = property_value, ... );

--更改表注释

ALTER TABLE students SET TBLPROPERTIES ('comment' = "new comment for student table");

--3、更改SerDe属性

ALTER TABLE table_name SET SERDE serde_class_name [WITH SERDEPROPERTIES (property_name = property_value, ... )];

ALTER TABLE table_name [PARTITION partition_spec] SET SERDEPROPERTIES serde_properties;

ALTER TABLE table_name SET SERDEPROPERTIES ('field.delim' = ',');

--移除SerDe属性

ALTER TABLE table_name [PARTITION partition_spec] UNSET SERDEPROPERTIES (property_name, ... );

--4、更改表的文件存储格式 该操作仅更改表元数据。现有数据的任何转换都必须在Hive之外进行。

ALTER TABLE table_name SET FILEFORMAT file_format;

--5、更改表的存储位置路径

ALTER TABLE table_name SET LOCATION "new location";

--6、更改列名称/类型/位置/注释

CREATE TABLE test_change (a int, b int, c int);

// First change column a's name to a1.

ALTER TABLE test_change CHANGE a a1 INT;

// Next change column a1's name to a2, its data type to string, and put it after column b.

ALTER TABLE test_change CHANGE a1 a2 STRING AFTER b;

// The new table's structure is: b int, a2 string, c int.

// Then change column c's name to c1, and put it as the first column.

ALTER TABLE test_change CHANGE c c1 INT FIRST;

// The new table's structure is: c1 int, b int, a2 string.

// Add a comment to column a1

ALTER TABLE test_change CHANGE a1 a1 INT COMMENT 'this is column a1';

--7、添加/替换列

--使用ADD COLUMNS,您可以将新列添加到现有列的末尾但在分区列之前。

--REPLACE COLUMNS 将删除所有现有列,并添加新的列集。

ALTER TABLE table_name ADD|REPLACE COLUMNS (col_name data_type,...);

ALTER TABLE students ADD COLUMNS (age int);

ALTER TABLE students REPLACE COLUMNS (age int);

0: jdbc:hive2://server4:10000> select * from students;

+--------------+----------------+---------------+

| students.id | students.name | students.age |

+--------------+----------------+---------------+

+--------------+----------------+---------------+

No rows selected (0.138 seconds)

0: jdbc:hive2://server4:10000> ALTER TABLE students REPLACE COLUMNS (age int);

0: jdbc:hive2://server4:10000> select * from students;

+---------------+

| students.age |

+---------------+

六、Hive Partition(分区)DDL操作

1、概述

Hive中针对分区Partition的操作主要包括:增加分区、删除分区、重命名分区、修复分区、修改分区。

2、示例

--1、add partition

--ADD PARTITION会更改表元数据,但不会加载数据。如果分区位置中不存在数据,查询时将不会返回结果。

--因此需要保证增加的分区位置路径下,数据已经存在,或者增加完分区之后导入分区数据。

--1、增加分区

创建一个单分区表

create table user_dept (

num int,

name string,

sex string,

age int)

partitioned by (dept string)

row format delimited fields terminated by ',';

--加载数据

load data inpath '/hivetest/partition/students_MA.txt' into table user_dept partition(dept ="MA");

-- 一次添加一个分区

ALTER TABLE user_dept ADD PARTITION (dept='IS') '/user/hive/warehouse/testhive.db/user_dept/dept=IS';

--加载数据

load data inpath '/hivetest/partition/students_IS.txt' into table user_dept partition(dept ="IS");

-- 添加多级分区

ALTER TABLE table_name ADD [IF NOT EXISTS] PARTITION partition_spec [LOCATION 'location'][, PARTITION partition_spec [LOCATION 'location'], ...];

partition_spec:

: (partition_column = partition_col_value, partition_column = partition_col_value, ...)

创建一个二级分区表

create table user_dept_sex (id int, name string,age int)

partitioned by (dept string, sex string)

row format delimited fields terminated by ",";

增加多分区

ALTER TABLE user_dept_sex ADD PARTITION (dept='MA', sex='M')

PARTITION (dept='MA', sex='F')

PARTITION (dept='IS', sex='M')

PARTITION (dept='IS', sex='F') ;

加载数据

load data inpath '/hivetest/partition/user_dept/ma/m' into table user_dept_sex partition(dept='MA', sex='M');

load data inpath '/hivetest/partition/user_dept/ma/f' into table user_dept_sex partition(dept='MA', sex='F');

load data inpath '/hivetest/partition/user_dept/is/m' into table user_dept_sex partition(dept='IS', sex='M');

load data inpath '/hivetest/partition/user_dept/is/f' into table user_dept_sex partition(dept='IS', sex='F');

===============================================================================================================

下面部分是按照官方网站做的例子,执行不了

ALTER TABLE table_name ADD PARTITION (dt='2008-08-08', country='us')

location '/path/to/us/part080808'

PARTITION (dt='2008-08-09', country='us') location '/path/to/us/part080809';

ALTER TABLE user_dept_sex ADD PARTITION (dept='MA', sex='M') '/hivetest/partition/user_dept/ma/m'

PARTITION (dept='MA', sex='F') '/hivetest/partition/user_dept/ma/f'

PARTITION (dept='IS', sex='M') '/hivetest/partition/user_dept/is/m'

PARTITION (dept='IS', sex='F') '/hivetest/partition/user_dept/is/f';

===============================================================================================================

--2、rename partition

--2、重命名分区

ALTER TABLE table_name PARTITION partition_spec RENAME TO PARTITION partition_spec;

ALTER TABLE user_dept_sex PARTITION (dept='MA', sex='M') RENAME TO PARTITION (dept='MA', sex='Male');

ALTER TABLE user_dept_sex PARTITION (dept='MA', sex='F') RENAME TO PARTITION (dept='MA', sex='Female');

ALTER TABLE user_dept_sex PARTITION (dept='IS', sex='M') RENAME TO PARTITION (dept='IS', sex='Male');

ALTER TABLE user_dept_sex PARTITION (dept='IS', sex='F') RENAME TO PARTITION (dept='IS', sex='Female');

--3、删除分区

delete partition

删除表的分区。这将删除该分区的数据和元数据。

ALTER TABLE table_name DROP [IF EXISTS] PARTITION (dt='2008-08-08', country='us');

ALTER TABLE table_name DROP [IF EXISTS] PARTITION (dt='2008-08-08', country='us') PURGE; --直接删除数据 不进垃圾桶

--5、修改分区

alter partition

--更改分区文件存储格式

ALTER TABLE table_name PARTITION (dt='2008-08-09') SET FILEFORMAT file_format;

--更改分区位置

ALTER TABLE table_name PARTITION (dt='2008-08-09') SET LOCATION "new location";

3、Hive MSCK partition

1)、介绍

Hive将每个表的分区列表信息存储在其metastore中。但是,如果将新分区直接添加到HDFS(例如通过使用hadoop fs -put命令)或从HDFS中直接删除分区文件夹,则除非用户ALTER TABLE table_name ADD/DROP PARTITION在每个新添加的分区上运行命令,否则metastore(也就是Hive)将不会意识到分区信息的这些更改。

MSCK是metastore check的缩写,表示元数据检查操作,可用于元数据的修复。

--4、修复分区语法

MSCK [REPAIR] TABLE table_name [ADD/DROP/SYNC PARTITIONS];

MSCK partition

--MSCK默认行为ADD PARTITIONS,使用此选项,它将把HDFS上存在但元存储中不存在的所有分区添加到metastore。

--DROP PARTITIONS选项将从已经从HDFS中删除的metastore中删除分区信息。

--SYNC PARTITIONS选项等效于调用ADD和DROP PARTITIONS。

--如果存在大量未跟踪的分区,则可以批量运行MSCK REPAIR TABLE,以避免OOME(内存不足错误)。

2)、Hive MSCK 修复partition 示例

--4、修复分区语法

MSCK [REPAIR] TABLE table_name [ADD/DROP/SYNC PARTITIONS];

- 创建一张分区表,直接使用HDFS命令在表文件夹下创建分区文件夹并上传数据,此时在Hive中查询是无法显示表数据的,因为metastore中没有记录,使用MSCK ADD PARTITIONS进行修复。

- 针对分区表,直接使用HDFS命令删除分区文件夹,此时在Hive中查询显示分区还在,因为metastore中还没有被删除,使用MSCK DROP PARTITIONS进行修复。

----- MSCK ADD PARTITIONS

--Step1:创建分区表

create table t_all_hero_part_msck(

id int,

name string,

hp_max int,

mp_max int,

attack_max int,

defense_max int,

attack_range string,

role_main string,

role_assist string

) partitioned by (role string)

row format delimited

fields terminated by "\t";

--Step2:在linux上,使用HDFS命令创建分区文件夹

hadoop fs -mkdir -p /user/hive/warehouse/testhive.db/t_all_hero_part_msck/role=sheshou

hadoop fs -mkdir -p /user/hive/warehouse/testhive.db/t_all_hero_part_msck/role=tanke

--Step3:把数据文件上传到对应的分区文件夹下

hadoop fs -put archer.txt /user/hive/warehouse/testhive.db/t_all_hero_part_msck/role=sheshou

hadoop fs -put tank.txt /user/hive/warehouse/testhive.db/t_all_hero_part_msck/role=tanke

--Step4:查询表 可以发现没有数据

select * from t_all_hero_part_msck;

--Step5:使用MSCK命令进行修复

--add partitions可以不写 因为默认就是增加分区

MSCK repair table t_all_hero_part_msck add partitions;

--再查询t_all_hero_part_msck,发现已经有数据了

select * from t_all_hero_part_msck;

----- MSCK DROP PARTITIONS

--Step1:直接使用HDFS命令删除分区表的某一个分区文件夹

hadoop fs -rm -r /user/hive/warehouse/testhive.db/t_all_hero_part_msck/role=sheshou

--再查询t_all_hero_part_msck,发现该分区的数据已经没有了

select * from t_all_hero_part_msck;

--Step2:查询发现还有分区信息

--因为元数据信息没有删除

show partitions t_all_hero_part_msck;

--Step3:使用MSCK命令进行修复

MSCK repair table t_all_hero_part_msck drop partitions;

七、Hive Show语法

1、概述

Show相关的语句提供了一种查询Hive metastore的方法。可以帮助用户查询相关信息。比如最常使用的查询当前数据库下有哪些表 show tables.文章来源:https://www.toymoban.com/news/detail-487353.html

2、示例

--1、显示所有数据库 SCHEMAS和DATABASES的用法 功能一样

show databases;

show schemas;

--2、显示当前数据库所有表/视图/物化视图/分区/索引

show tables;

SHOW TABLES [IN database_name]; --指定某个数据库

--3、显示当前数据库下所有视图

Show Views;

SHOW VIEWS 'test_*'; -- show all views that start with "test_"

SHOW VIEWS FROM test1; -- show views from database test1

SHOW VIEWS [IN/FROM database_name];

--4、显示当前数据库下所有物化视图

SHOW MATERIALIZED VIEWS [IN/FROM database_name];

--5、显示表分区信息,分区按字母顺序列出,不是分区表执行该语句会报错

show partitions table_name;

--6、显示表/分区的扩展信息

SHOW TABLE EXTENDED [IN|FROM database_name] LIKE table_name;

show table extended like student;

--7、显示表的属性信息

SHOW TBLPROPERTIES table_name;

show tblproperties student;

--8、显示表、视图的创建语句

SHOW CREATE TABLE ([db_name.]table_name|view_name);

show create table student;

--9、显示表中的所有列,包括分区列。

SHOW COLUMNS (FROM|IN) table_name [(FROM|IN) db_name];

show columns in student;

--10、显示当前支持的所有自定义和内置的函数

show functions;

--11、Describe desc命令

--查看表信息

desc extended table_name;

--查看表信息(格式化美观)

desc formatted table_name;

--查看数据库相关信息

describe database database_name;

以上,介绍了hive的事务表、视图、物化视图的概念以及使用示例,同时介绍了数据库、表以及分区DDL的详细操作。文章来源地址https://www.toymoban.com/news/detail-487353.html

到了这里,关于4、hive的使用示例详解-事务表、视图、物化视图、DDL(数据库、表以及分区)管理详细操作的文章就介绍完了。如果您还想了解更多内容,请在右上角搜索TOY模板网以前的文章或继续浏览下面的相关文章,希望大家以后多多支持TOY模板网!