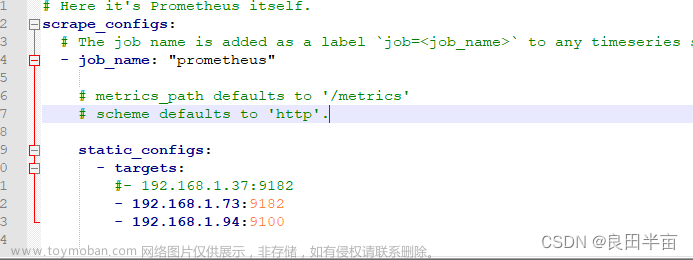

公司网络更改重启服务器后,发现Prometheus监控中node节点三个挂掉了,实际上节点服务器是正常的,但是监控的node_exporter请求http://IP:9100/metrics超过10秒没有获取返回数据则认为服务挂掉。

到各个节点服务器用curl命令检测多久返回数据

curl -o /dev/null -s -w '%{time_connect}:%{time_starttransfer}:%{time_total}\n' 'http://NodeIP:9100/metrics'time_connect :连接时间,从开始到TCP三次握手完成时间,这里面包括DNS解析的时候,如果想求连接时间,需要减去上面的解析时间;

time_starttransfer :开始传输时间,从发起请求开始,到服务器返回第一个字段的时间;

time_total :总时间;

可以看到time_connect时间是很快的,排除网络问题。除了167返回时间正常其他几个节点都是有问题的。

查看pod日志

ts=2023-01-11T06:11:08.189Z caller=stdlib.go:105 level=error caller="error encoding and sending metric family: write tcp 163:9100" msg="-> 167:40743: write: broken pipe"

ts=2023-01-11T06:11:08.189Z caller=stdlib.go:105 level=error caller="error encoding and sending metric family: write tcp 163:9100" msg="->.167:40743: write: broken pipe"可以看到有大量的这种日志

参考资料: https://asktug.com/t/topic/153284/36 和 https://blog.csdn.net/lyf0327/article/details/99971590

有些说可以调大scrape_timeout这个时间,但是这个不是解决问题的根本

也有说调大node_exporter的yaml中的limit内存和CPU,但是我这边的node_exporter是没有配置request和limit资源的,也不是这个问题,最后参考别人的配置文件

apiVersion: apps/v1

kind: DaemonSet

metadata:

annotations:

deprecated.daemonset.template.generation: "5"

prometheus.io/scrape: "true"

generation: 5

labels:

app: node-exporter

name: node-exporter

namespace: monitoring

spec:

revisionHistoryLimit: 10

selector:

matchLabels:

app: node-exporter

template:

metadata:

creationTimestamp: null

labels:

app: node-exporter

name: node-exporter

spec:

containers:

- args:

- --web.listen-address=$(HOSTIP):9100

- --path.procfs=/host/proc

- --path.sysfs=/host/sys

- --path.rootfs=/host # 修改了这个原来是/host/root

- --collector.filesystem.ignored-mount-points=^/(dev|proc|sys|var/lib/docker/.+)($|/)

- --collector.filesystem.ignored-fs-types=^(autofs|binfmt_misc|cgroup|configfs|debugfs|devpts|devtmpfs|fusectl|hugetlbfs|mqueue|overlay|proc|procfs|pstore|rpc_pipefs|securityfs|sysfs|tracefs)$

- --log.level=debug

env:

- name: HOSTIP

valueFrom:

fieldRef:

apiVersion: v1

fieldPath: status.hostIP

image: prom/node-exporter:latest

imagePullPolicy: IfNotPresent

name: node-exporter

ports:

- containerPort: 9100

hostPort: 9100

protocol: TCP

resources: {}

securityContext:

privileged: true

terminationMessagePath: /dev/termination-log

terminationMessagePolicy: File

volumeMounts:

- mountPath: /host/proc

name: proc

- mountPath: /host/sys

name: sys

- mountPath: /host # 修改了这个原来是/rootfs

name: rootfs

dnsPolicy: ClusterFirst

hostIPC: true

hostNetwork: true

hostPID: true

restartPolicy: Always

schedulerName: default-scheduler

securityContext: {}

terminationGracePeriodSeconds: 30

tolerations:

- effect: NoSchedule

key: node-role.kubernetes.io/master

operator: Exists

volumes:

- hostPath:

path: /proc

name: proc

- hostPath:

path: /sys

name: sys

- hostPath:

path: /

name: rootfs

updateStrategy:

rollingUpdate:

maxSurge: 0

maxUnavailable: 1

type: RollingUpdate观察了一天

根据上述修改后仍有问题,其中一个报权限不足

apiVersion: apps/v1

kind: DaemonSet

metadata:

annotations:

deprecated.daemonset.template.generation: "5"

prometheus.io/scrape: "true"

generation: 5

labels:

app: node-exporter

name: node-exporter

namespace: monitoring

spec:

revisionHistoryLimit: 10

selector:

matchLabels:

app: node-exporter

template:

metadata:

creationTimestamp: null

labels:

app: node-exporter

name: node-exporter

spec:

containers:

- args:

- --web.listen-address=$(HOSTIP):9100

- --path.procfs=/host/proc

- --path.sysfs=/host/sys

- --path.rootfs=/host

- --collector.filesystem.ignored-mount-points=^/(dev|proc|sys|var/lib/docker/.+)($|/)

- --collector.filesystem.ignored-fs-types=^(autofs|binfmt_misc|cgroup|configfs|debugfs|devpts|devtmpfs|fusectl|hugetlbfs|mqueue|overlay|proc|procfs|pstore|rpc_pipefs|securityfs|sysfs|tracefs)$

- --log.level=debug

env:

- name: HOSTIP

valueFrom:

fieldRef:

apiVersion: v1

fieldPath: status.hostIP

image: prom/node-exporter:latest

imagePullPolicy: IfNotPresent

name: node-exporter

ports:

- containerPort: 9100

hostPort: 9100

protocol: TCP

resources: {}

securityContext:

privileged: true

terminationMessagePath: /dev/termination-log

terminationMessagePolicy: File

volumeMounts:

- mountPath: /host/proc

name: proc

- mountPath: /host/sys

name: sys

- mountPath: /host

name: rootfs

dnsPolicy: ClusterFirst

hostIPC: true

hostNetwork: true

hostPID: true

restartPolicy: Always

schedulerName: default-scheduler

securityContext:

runAsUser: 0 # 添加该选项 默认是nobody用户 65534的uid

terminationGracePeriodSeconds: 30

tolerations:

- effect: NoSchedule

key: node-role.kubernetes.io/master

operator: Exists

volumes:

- hostPath:

path: /proc

name: proc

- hostPath:

path: /sys

name: sys

- hostPath:

path: /

name: rootfs

updateStrategy:

rollingUpdate:

maxSurge: 0

maxUnavailable: 1

type: RollingUpdate

再观察一天最新的问题有重新出现

报大量的error encoding and sending metric family: write tcp 10.37.0.163:9100" msg="->10.37.0.167:50266: write: broken pip

查了很多资料最后一个资料比较有用文章来源:https://www.toymoban.com/news/detail-498567.html

地址:https://github.com/prometheus/node_exporter/issues/2500文章来源地址https://www.toymoban.com/news/detail-498567.html

我这边的节点3个内核是5.4.0-132,三个都是有问题的,而更高版本的内核5.15.0是没问题的

最终结论是内核版本问题;

临时解决方案是在node_exporter中添加环境变量GOMAXPROCS=1

永久解决是升级内核

到了这里,关于关于k8s中的node_exporter异常write: broken pipe问题排查的文章就介绍完了。如果您还想了解更多内容,请在右上角搜索TOY模板网以前的文章或继续浏览下面的相关文章,希望大家以后多多支持TOY模板网!