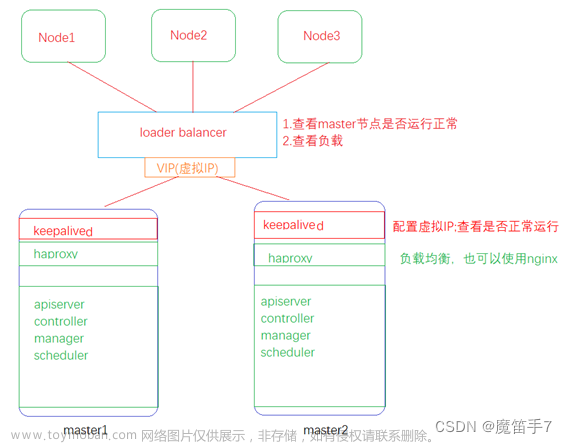

Building a Highly Available Kubernetes Cluster with Nginx

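This article builds a highly available Kubernetes cluster with nginx: two master nodes sit behind an nginx TCP load balancer, which serves as the cluster's single control-plane endpoint.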

1. Environment preparation

Server plan (this lab uses virtual machines):

| IP | Hostname | Role |
|---|---|---|
| 192.168.43.200 | master | master |
| 192.168.43.201 | slave1 | slave (worker) |
| 192.168.43.202 | slave2 | slave (worker) |
| 192.168.43.203 | master2 | master |
| 192.168.43.165 | nginx | nginx load-balancer host |

2. System initialization (masters and slaves)

2.1 Disable the firewall

# Step 1

# Stop it for the current session

systemctl stop firewalld

# Disable it permanently (across reboots)

systemctl disable firewalld

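2.2 Disable SELinux

# Step 2

# Disable it for the current session (switches to permissive mode)

setenforce 0

# Disable it permanently (takes effect after a reboot)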

sed -i '/SELINUX/s/enforcing/disabled/' /etc/selinux/config

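2.3 Disable swap

# Step 3

# Turn swap off for the current session

swapoff -a

# Disable it permanently by commenting out the swap entries in /etc/fstab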

sed -ri 's/.*swap.*/#&/' /etc/fstab
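The sed command above comments out every line that contains the string "swap", including lines that are already comments, so running it a second time produces "##". A more targeted sketch follows; it assumes a standard whitespace-separated fstab whose third field is the filesystem type, and the function name disable_swap_in_fstab is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: comment out active swap entries in an fstab-style file.
# Assumes whitespace-separated fields where field 3 is the filesystem type.
disable_swap_in_fstab() {
  local fstab="${1:-/etc/fstab}" tmp rc=0
  tmp="$(mktemp)" || return 1
  # Existing comments are printed unchanged, so running this twice is harmless.
  awk '/^[ \t]*#/ { print; next }
       $3 == "swap" { print "#" $0; next }
       { print }' "$fstab" > "$tmp" &&
    cat "$tmp" > "$fstab" || rc=1   # rewrite in place, keeping owner and mode
  rm -f "$tmp"
  return "$rc"
}
```

On a node it would be run as root right after swapoff -a, e.g. disable_swap_in_fstab /etc/fstab.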

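2.4 Set the hostnames

Set each host's name with hostnamectl set-hostname NAME. The four Kubernetes hosts are named as follows (run each command on its own host):

# Step 4

# On 192.168.43.200

hostnamectl set-hostname master

# On 192.168.43.201

hostnamectl set-hostname slave1

# On 192.168.43.202

hostnamectl set-hostname slave2

# On 192.168.43.203

hostnamectl set-hostname master2

# Check the current hostname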

hostname

2.5 Add hosts entries

On every node, add each node's IP address and hostname to /etc/hosts.

# Step 5

cat >> /etc/hosts << EOF

192.168.43.200 master

192.168.43.201 slave1

192.168.43.202 slave2

192.168.43.203 master2

EOF
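Re-running the cat >> command above appends the same lines again. A minimal sketch that only adds entries that are missing; the function name add_hosts_entries is hypothetical and the entries are the ones from the server plan.

```bash
#!/usr/bin/env bash
# Hypothetical helper: append "IP hostname" lines that are not already present.
add_hosts_entries() {
  local hosts_file="$1" entry
  shift
  for entry in "$@"; do
    # -x: match the whole line; -F: fixed string, so dots in IPs are literal.
    grep -qxF -- "$entry" "$hosts_file" 2>/dev/null ||
      echo "$entry" >> "$hosts_file"
  done
}
```

Usage as root: add_hosts_entries /etc/hosts "192.168.43.200 master" "192.168.43.201 slave1" "192.168.43.202 slave2" "192.168.43.203 master2".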

2.6 Pass bridged IPv4 traffic to the iptables chains

# Step 6

# Write the settings

cat > /etc/sysctl.d/k8s.conf << EOF

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

EOF

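# Load the br_netfilter module first (the net.bridge.* keys exist only while it is loaded), then apply the settings

modprobe br_netfilter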

sysctl --system
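After sysctl --system, the values can be read back from /proc/sys. The sketch below (the function name check_bridge_sysctls is hypothetical) reports any key that is missing or not set to 1; the optional argument replaces /proc/sys only so the function can be tested, and on a real node it would be run with no argument.

```bash
#!/usr/bin/env bash
# Hypothetical check that both bridge sysctls from step 6 are set to 1.
# The optional argument replaces /proc/sys, which makes the function testable.
check_bridge_sysctls() {
  local root="${1:-/proc/sys}" key val rc=0
  for key in net/bridge/bridge-nf-call-iptables net/bridge/bridge-nf-call-ip6tables; do
    if [ ! -r "$root/$key" ]; then
      # These entries only exist while the br_netfilter module is loaded.
      echo "missing: $key (is br_netfilter loaded?)"
      rc=1
      continue
    fi
    val="$(cat "$root/$key")"
    if [ "$val" != 1 ]; then
      echo "not enabled: $key=$val"
      rc=1
    fi
  done
  return "$rc"
}
```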

2.7 Time synchronization

Keep the clocks of all nodes (VMs) synchronized.

# Step 7

yum install ntpdate -y

ntpdate time.windows.com

Note: whenever a VM has been shut down or suspended, synchronize its clock again before continuing.

3. Install Docker (all nodes, including the nginx node)

3.1 Remove old versions

# Step 8

yum remove docker \
  docker-client \
  docker-client-latest \
  docker-common \
  docker-latest \
  docker-latest-logrotate \
  docker-logrotate \
  docker-engine

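3.2 Install the required packages

# Step 9

# yum-utils provides yum-config-manager, which the next step needs

yum install -y yum-utils \
  device-mapper-persistent-data \
  lvm2

3.3 Set up the Docker repository

# Step 10

# The default repository is hosted overseas; use the Aliyun mirror instead

yum-config-manager \
  --add-repo \
  http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo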

3.4 Update the yum package index

# Step 11

yum makecache fast

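3.5 Install the Docker engine

# Step 12

# Option 1: install a specific version

# List the available versions

yum list docker-ce --showduplicates | sort -r

# Template: replace <VERSION_STRING> with a version from the list

yum install docker-ce-<VERSION_STRING> docker-ce-cli-<VERSION_STRING> containerd.io

# For example, version 20.10.21 (the version used in this article)

yum install docker-ce-20.10.21 docker-ce-cli-20.10.21 containerd.io

# Option 2: install the latest version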

yum install docker-ce docker-ce-cli containerd.io

3.6 Start Docker

# Step 13

systemctl enable docker && systemctl start docker

3.7 Configure a Docker registry mirror and the systemd cgroup driver

# Step 14

vim /etc/docker/daemon.json

{

"registry-mirrors": ["https://b9pmyelo.mirror.aliyuncs.com"],

"exec-opts": ["native.cgroupdriver=systemd"]

}

# Restart Docker to apply the changes

systemctl restart docker
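Instead of editing daemon.json interactively in vim, the file can be written from a script. A minimal sketch that reuses the mirror URL and cgroup-driver option from step 14; the function name write_docker_daemon_json is hypothetical, and the mirror address should be replaced with your own.

```bash
#!/usr/bin/env bash
# Hypothetical helper: write Docker's daemon.json without an interactive editor.
# Note: this overwrites any existing file at the target path.
write_docker_daemon_json() {
  local path="${1:-/etc/docker/daemon.json}"
  mkdir -p "$(dirname "$path")"
  cat > "$path" <<'EOF'
{
  "registry-mirrors": ["https://b9pmyelo.mirror.aliyuncs.com"],
  "exec-opts": ["native.cgroupdriver=systemd"]
}
EOF
}
```

On a node: write_docker_daemon_json && systemctl restart docker.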

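3.8 Check that the settings took effect

# Step 15

# In the output, look for the mirror under "Registry Mirrors" and for "Cgroup Driver: systemd"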

docker info
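To check only the cgroup driver from a script, docker info accepts a Go template through --format. A minimal sketch; the function name and the DOCKER override variable are assumptions added so the function can be tested without a Docker daemon.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print Docker's cgroup driver; succeed only if it is systemd.
# DOCKER is an assumed override variable (for testing); default is the docker CLI.
check_cgroup_driver() {
  local docker="${DOCKER:-docker}" driver
  driver="$("$docker" info --format '{{.CgroupDriver}}')" || return 2
  echo "cgroup driver: $driver"
  [ "$driver" = systemd ]
}
```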

3.9 Check the Docker version

# Step 16

docker -v

3.10 Other Docker commands

# Stop Docker

systemctl stop docker

# Show Docker's status

systemctl status docker

3.11 Uninstall Docker

yum remove docker-ce-20.10.21 docker-ce-cli-20.10.21 containerd.io

# Delete all images, containers and volumes (irreversible)

rm -rf /var/lib/docker

rm -rf /var/lib/containerd

4. Add the Aliyun Kubernetes yum repository (masters and slaves)

Run this on every Kubernetes node; the nginx node does not need it.

# Step 17

cat > /etc/yum.repos.d/kubernetes.repo << EOF

[kubernetes]

name=kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

5. Install kubeadm, kubelet and kubectl (masters and slaves)

Run this on every Kubernetes node; the nginx node does not need it.

# Step 18

yum install -y kubelet-1.21.0 kubeadm-1.21.0 kubectl-1.21.0 --disableexcludes=kubernetes

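6. Start the kubelet service (masters and slaves)

Run this on every Kubernetes node; the nginx node does not need it. Until kubeadm init or kubeadm join has run, kubelet keeps restarting while it waits for its configuration; this is expected.

# Step 19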

systemctl enable kubelet && systemctl start kubelet

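7. Install nginx on the nginx node (nginx node)

nginx runs as a Docker container here. The following steps are performed only on the nginx node, which needs Docker from section 3.

# Step 20

# Set the hostname and disable the firewall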

hostnamectl set-hostname nginx

systemctl stop firewalld

systemctl disable firewalld

# Step 21

# Pull the image

[root@nginx ~]# docker pull nginx:1.17.2

1.17.2: Pulling from library/nginx

1ab2bdfe9778: Pull complete

c88f4a4e0a55: Pull complete

1a18b1b95ce1: Pull complete

Digest: sha256:5411d8897c3da841a1f45f895b43ad4526eb62d3393c3287124a56be49962d41

Status: Downloaded newer image for nginx:1.17.2

docker.io/library/nginx:1.17.2

# Step 22

# Write the configuration file

[root@nginx ~]# mkdir -p /data/nginx && cd /data/nginx

[root@nginx nginx]# vim nginx-lb.conf

user nginx;

worker_processes 2; # adjust to the server's number of CPU cores

error_log /var/log/nginx/error.log warn;

pid /var/run/nginx.pid;

events {

worker_connections 8192;

}

stream {

upstream apiserver {

server 192.168.43.200:6443 weight=5 max_fails=3 fail_timeout=30s; # apiserver IP and port on master

server 192.168.43.203:6443 weight=5 max_fails=3 fail_timeout=30s; # apiserver IP and port on master2

}

server {

listen 8443; # listening port (the port of the control-plane endpoint)

proxy_pass apiserver;

}

}
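If the set of masters changes, the stream block above can be generated instead of hand-edited. A sketch that renders the same nginx-lb.conf from a listen port and a list of apiserver addresses; render_nginx_lb_conf is a hypothetical name, and the weight, max_fails and fail_timeout values are copied from the file above.

```bash
#!/usr/bin/env bash
# Hypothetical helper: render an nginx stream-proxy config for kube-apiservers.
# Arguments: listen port, then one or more "IP:6443" backends.
render_nginx_lb_conf() {
  local listen_port="$1" backend
  shift
  cat <<EOF
user nginx;
worker_processes 2;
error_log /var/log/nginx/error.log warn;
pid /var/run/nginx.pid;
events {
    worker_connections 8192;
}
stream {
    upstream apiserver {
EOF
  for backend in "$@"; do
    echo "        server ${backend} weight=5 max_fails=3 fail_timeout=30s;"
  done
  cat <<EOF
    }
    server {
        listen ${listen_port};
        proxy_pass apiserver;
    }
}
EOF
}
```

For example: render_nginx_lb_conf 8443 192.168.43.200:6443 192.168.43.203:6443 > /data/nginx/nginx-lb.conf. Before starting the container, the file can be syntax-checked with the same image: docker run --rm -v /data/nginx/nginx-lb.conf:/etc/nginx/nginx.conf:ro nginx:1.17.2 nginx -t.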

# Step 23

# Start the container

[root@nginx nginx]# docker run -d --restart=unless-stopped -p 8443:8443 -v /data/nginx/nginx-lb.conf:/etc/nginx/nginx.conf --name nginx-lb --hostname nginx-lb nginx:1.17.2

fd9d945c1ae1c39ab6aa9da3675a523694a8ef1aaf687ad6d1509abc0b21b822

# Step 24

# Check that it is running

[root@nginx nginx]# docker ps | grep nginx-lb

fd9d945c1ae1 nginx:1.17.2 "nginx -g 'daemon of…" 22 seconds ago Up 21 seconds 80/tcp, 0.0.0.0:8443->8443/tcp nginx-lb

8. Deploy the Kubernetes masters

8.1 Initialize with kubeadm (master node)

With version 1.21.0, initialization fails because the Aliyun registry does not contain the coredns/coredns image, that is, registry.aliyuncs.com/google_containers/coredns/coredns:v1.8.0 does not exist.

Workaround:

# Step 25

# Run on master

# Do this before initialization; otherwise kubeadm init fails because it cannot find the image

[root@master ~]# docker pull coredns/coredns:1.8.0

1.8.0: Pulling from coredns/coredns

c6568d217a00: Pull complete

5984b6d55edf: Pull complete

Digest: sha256:cc8fb77bc2a0541949d1d9320a641b82fd392b0d3d8145469ca4709ae769980e

Status: Downloaded newer image for coredns/coredns:1.8.0

docker.io/coredns/coredns:1.8.0

[root@master ~]# docker tag coredns/coredns:1.8.0 registry.aliyuncs.com/google_containers/coredns/coredns:v1.8.0

[root@master ~]# docker rmi coredns/coredns:1.8.0

Untagged: coredns/coredns:1.8.0

Untagged: coredns/coredns@sha256:cc8fb77bc2a0541949d1d9320a641b82fd392b0d3d8145469ca4709ae769980e

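Run the following command on the master node. Replace the master IP address (--apiserver-advertise-address), the Kubernetes version (--kubernetes-version) and --control-plane-endpoint (the nginx host and its listening port) with the values for your own hosts.

# Step 26

# Run on master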

[root@master ~]# kubeadm init \
  --apiserver-advertise-address=192.168.43.200 \
  --image-repository registry.aliyuncs.com/google_containers \
  --control-plane-endpoint=192.168.43.165:8443 \
  --kubernetes-version v1.21.0 \
  --service-cidr=10.96.0.0/12 \
  --pod-network-cidr=10.244.0.0/16

[init] Using Kubernetes version: v1.21.0

[preflight] Running pre-flight checks

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local master] and IPs [10.96.0.1 192.168.43.200 192.168.43.165]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [localhost master] and IPs [192.168.43.200 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [localhost master] and IPs [192.168.43.200 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[endpoint] WARNING: port specified in controlPlaneEndpoint overrides bindPort in the controlplane address

[kubeconfig] Writing "admin.conf" kubeconfig file

[endpoint] WARNING: port specified in controlPlaneEndpoint overrides bindPort in the controlplane address

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[endpoint] WARNING: port specified in controlPlaneEndpoint overrides bindPort in the controlplane address

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[endpoint] WARNING: port specified in controlPlaneEndpoint overrides bindPort in the controlplane address

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Starting the kubelet

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[kubelet-check] Initial timeout of 40s passed.

[apiclient] All control plane components are healthy after 106.045002 seconds

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config-1.21" in namespace kube-system with the configuration for the kubelets in the cluster

[upload-certs] Skipping phase. Please see --upload-certs

[mark-control-plane] Marking the node master as control-plane by adding the labels: [node-role.kubernetes.io/master(deprecated) node-role.kubernetes.io/control-plane node.kubernetes.io/exclude-from-external-load-balancers]

[mark-control-plane] Marking the node master as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]

[bootstrap-token] Using token: fa1p76.qfwoidudtbxes0o5

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to get nodes

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key

[addons] Applied essential addon: CoreDNS

[endpoint] WARNING: port specified in controlPlaneEndpoint overrides bindPort in the controlplane address

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of control-plane nodes by copying certificate authorities

and service account keys on each node and then running the following as root:

kubeadm join 192.168.43.165:8443 --token fa1p76.qfwoidudtbxes0o5 \

--discovery-token-ca-cert-hash sha256:644548f3c2f5d5961bb7630bdcf4f4908c3be42185a544f3855ca7b21c98f0eb \

--control-plane

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.43.165:8443 --token fa1p76.qfwoidudtbxes0o5 \

--discovery-token-ca-cert-hash sha256:644548f3c2f5d5961bb7630bdcf4f4908c3be42185a544f3855ca7b21c98f0eb

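The line "Your Kubernetes control-plane has initialized successfully!" in the output confirms that the control plane on the master node is up. Keep the two kubeadm join commands printed at the end; they are used below to add master2 and the slave nodes.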
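The same init settings can also be kept in a kubeadm configuration file instead of command-line flags. A sketch assuming kubeadm v1.21, whose config API version is kubeadm.k8s.io/v1beta2; the file name kubeadm-config.yaml and the function name are hypothetical. kubeadm config print init-defaults shows the full set of fields for comparison.

```bash
#!/usr/bin/env bash
# Hypothetical helper: write a kubeadm config equivalent to the step 26 flags.
# Assumes kubeadm v1.21, which reads the kubeadm.k8s.io/v1beta2 config API.
write_kubeadm_config() {
  local path="${1:-kubeadm-config.yaml}"
  cat > "$path" <<'EOF'
apiVersion: kubeadm.k8s.io/v1beta2
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.43.200            # --apiserver-advertise-address
---
apiVersion: kubeadm.k8s.io/v1beta2
kind: ClusterConfiguration
kubernetesVersion: v1.21.0
imageRepository: registry.aliyuncs.com/google_containers
controlPlaneEndpoint: "192.168.43.165:8443"   # the nginx load balancer
networking:
  serviceSubnet: 10.96.0.0/12
  podSubnet: 10.244.0.0/16                    # must match flannel's "Network"
EOF
}
```

On master the flag-based command would then be replaced by write_kubeadm_config && kubeadm init --config kubeadm-config.yaml.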

8.2 Enable kubectl (master node)

# Step 27

# Run on master

[root@master ~]# mkdir -p $HOME/.kube

[root@master ~]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@master ~]# sudo chown $(id -u):$(id -g) $HOME/.kube/config

List the cluster's nodes:

# Step 28

# Run on master

[root@master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master NotReady control-plane,master 3m7s v1.21.0

8.3 Join the slave nodes to the cluster (slave nodes)

# Step 29

# Run on slave1

[root@slave1 ~]# kubeadm join 192.168.43.165:8443 --token fa1p76.qfwoidudtbxes0o5 --discovery-token-ca-cert-hash sha256:644548f3c2f5d5961bb7630bdcf4f4908c3be42185a544f3855ca7b21c98f0eb

[preflight] Running pre-flight checks

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

# Step 30

# Run on slave2

[root@slave2 ~]# kubeadm join 192.168.43.165:8443 --token fa1p76.qfwoidudtbxes0o5 --discovery-token-ca-cert-hash sha256:644548f3c2f5d5961bb7630bdcf4f4908c3be42185a544f3855ca7b21c98f0eb

[preflight] Running pre-flight checks

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

List the cluster's nodes:

# Step 31

# Run on master

[root@master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master NotReady control-plane,master 7m46s v1.21.0

slave1 NotReady <none> 89s v1.21.0

slave2 NotReady <none> 81s v1.21.0

8.4 Join master2 to the cluster (master2 node)

# Step 32

# Run on master2

# Pull the images

[root@master2 ~]# docker pull registry.aliyuncs.com/google_containers/kube-apiserver:v1.21.0

[root@master2 ~]# docker pull registry.aliyuncs.com/google_containers/kube-controller-manager:v1.21.0

[root@master2 ~]# docker pull registry.aliyuncs.com/google_containers/kube-scheduler:v1.21.0

[root@master2 ~]# docker pull registry.aliyuncs.com/google_containers/kube-proxy:v1.21.0

[root@master2 ~]# docker pull registry.aliyuncs.com/google_containers/pause:3.4.1

[root@master2 ~]# docker pull registry.aliyuncs.com/google_containers/etcd:3.4.13-0

# For Kubernetes 1.21.0 the Aliyun registry has no registry.aliyuncs.com/google_containers/coredns/coredns:v1.8.0 image, so pull it from Docker Hub and re-tag it

[root@master2 ~]# docker pull coredns/coredns:1.8.0

[root@master2 ~]# docker tag coredns/coredns:1.8.0 registry.aliyuncs.com/google_containers/coredns/coredns:v1.8.0

[root@master2 ~]# docker rmi coredns/coredns:1.8.0
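Rather than typing each image name, the list can be taken from kubeadm config images list, which prints the images kubeadm will use for a given repository and version. A sketch that pulls them all and applies the CoreDNS re-tag from step 25; the function name and the KUBEADM/DOCKER override variables are assumptions, and the Docker Hub tag is assumed to be the kubeadm tag without its leading "v" (as with 1.8.0 here).

```bash
#!/usr/bin/env bash
# Hypothetical helper: pre-pull the control-plane images kubeadm needs.
# KUBEADM and DOCKER are assumed override variables; they default to the real CLIs.
prepull_images() {
  local repo="${1:-registry.aliyuncs.com/google_containers}" version="${2:-v1.21.0}"
  local kubeadm="${KUBEADM:-kubeadm}" docker="${DOCKER:-docker}" images image tag hub
  images="$("$kubeadm" config images list \
    --image-repository "$repo" --kubernetes-version "$version")" || return 1
  for image in $images; do
    case "$image" in
      */coredns/coredns:*)
        # The mirror lacks this image: pull it from Docker Hub and re-tag it.
        tag="${image##*:}"               # e.g. v1.8.0
        hub="coredns/coredns:${tag#v}"   # assumed Docker Hub tag: same without "v"
        "$docker" pull "$hub" &&
          "$docker" tag "$hub" "$image" &&
          "$docker" rmi "$hub" || return 1
        ;;
      *)
        "$docker" pull "$image" || return 1
        ;;
    esac
  done
}
```

Running prepull_images on master2 (or on master before step 26) would cover both step 25 and step 32.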

Copy the certificates:

# Step 33

# Run on master2

# Create the target directory

[root@master2 ~]# mkdir -p /etc/kubernetes/pki/etcd

# Step 34

# Run on master

# Copy the shared certificates and keys from master to master2

[root@master ~]# scp -rp /etc/kubernetes/pki/ca.* master2:/etc/kubernetes/pki

[root@master ~]# scp -rp /etc/kubernetes/pki/sa.* master2:/etc/kubernetes/pki

[root@master ~]# scp -rp /etc/kubernetes/pki/front-proxy-ca.* master2:/etc/kubernetes/pki

[root@master ~]# scp -rp /etc/kubernetes/pki/etcd/ca.* master2:/etc/kubernetes/pki/etcd

[root@master ~]# scp -rp /etc/kubernetes/admin.conf master2:/etc/kubernetes
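Steps 33 and 34 can be combined into one function run on master. The file list below spells out what the ca.*, sa.*, front-proxy-ca.* and etcd/ca.* globs match on a kubeadm-initialized master; the function name and the SCP/SSH override variables are hypothetical, and root SSH access from master to master2 is assumed.

```bash
#!/usr/bin/env bash
# Hypothetical helper, run as root on master; SCP/SSH default to the real tools.
copy_control_plane_certs() {
  local host="$1" scp="${SCP:-scp}" ssh="${SSH:-ssh}" pki=/etc/kubernetes/pki f
  # Same as step 33: create the target directories on the new master.
  "$ssh" "$host" mkdir -p "$pki/etcd" || return 1
  # Cluster CA, service-account key pair and front-proxy CA (step 34).
  for f in ca.crt ca.key sa.key sa.pub front-proxy-ca.crt front-proxy-ca.key; do
    "$scp" -p "$pki/$f" "$host:$pki/" || return 1
  done
  # etcd CA, from which the new stacked etcd member's certificates are issued.
  for f in ca.crt ca.key; do
    "$scp" -p "$pki/etcd/$f" "$host:$pki/etcd/" || return 1
  done
  "$scp" -p /etc/kubernetes/admin.conf "$host:/etc/kubernetes/" || return 1
}
```

Usage: copy_control_plane_certs master2. kubeadm can also distribute these files itself (kubeadm init --upload-certs, then kubeadm join ... --control-plane --certificate-key KEY); this article uses the manual copy instead.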

Join the cluster:

# Step 35

# Run on master2

[root@master2 ~]# kubeadm join 192.168.43.165:8443 --token fa1p76.qfwoidudtbxes0o5 --discovery-token-ca-cert-hash sha256:644548f3c2f5d5961bb7630bdcf4f4908c3be42185a544f3855ca7b21c98f0eb --control-plane

[preflight] Running pre-flight checks

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[preflight] Running pre-flight checks before initializing the new control plane instance

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [localhost master2] and IPs [192.168.43.203 127.0.0.1 ::1]

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [localhost master2] and IPs [192.168.43.203 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local master2] and IPs [10.96.0.1 192.168.43.203 192.168.43.165]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Valid certificates and keys now exist in "/etc/kubernetes/pki"

[certs] Using the existing "sa" key

[kubeconfig] Generating kubeconfig files

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[endpoint] WARNING: port specified in controlPlaneEndpoint overrides bindPort in the controlplane address

[kubeconfig] Using existing kubeconfig file: "/etc/kubernetes/admin.conf"

[endpoint] WARNING: port specified in controlPlaneEndpoint overrides bindPort in the controlplane address

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[endpoint] WARNING: port specified in controlPlaneEndpoint overrides bindPort in the controlplane address

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[check-etcd] Checking that the etcd cluster is healthy

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

[etcd] Announced new etcd member joining to the existing etcd cluster

[etcd] Creating static Pod manifest for "etcd"

[etcd] Waiting for the new etcd member to join the cluster. This can take up to 40s

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[mark-control-plane] Marking the node master2 as control-plane by adding the labels: [node-role.kubernetes.io/master(deprecated) node-role.kubernetes.io/control-plane node.kubernetes.io/exclude-from-external-load-balancers]

[mark-control-plane] Marking the node master2 as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]

This node has joined the cluster and a new control plane instance was created:

* Certificate signing request was sent to apiserver and approval was received.

* The Kubelet was informed of the new secure connection details.

* Control plane (master) label and taint were applied to the new node.

* The Kubernetes control plane instances scaled up.

* A new etcd member was added to the local/stacked etcd cluster.

To start administering your cluster from this node, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Run 'kubectl get nodes' to see this node join the cluster.

# Step 36

# Run on master2

[root@master2 ~]# mkdir -p $HOME/.kube

[root@master2 ~]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@master2 ~]# sudo chown $(id -u):$(id -g) $HOME/.kube/config

List the nodes:

# Step 37

# Run on master

[root@master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master NotReady control-plane,master 11m v1.21.0

master2 NotReady control-plane,master 68s v1.21.0

slave1 NotReady <none> 5m1s v1.21.0

slave2 NotReady <none> 4m53s v1.21.0

# Step 38

# Run on master2

[root@master2 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master NotReady control-plane,master 11m v1.21.0

master2 NotReady control-plane,master 68s v1.21.0

slave1 NotReady <none> 5m1s v1.21.0

slave2 NotReady <none> 4m53s v1.21.0

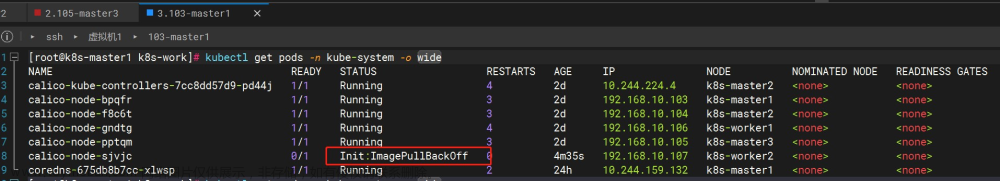

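Note: because no network plugin has been deployed yet, none of the nodes are ready and all of them show NotReady. The next section installs the network plugin.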

9. Install the flannel network plugin (master node)

Check the cluster status:

# Step 39

# Run on master

[root@master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master NotReady control-plane,master 13m v1.21.0

master2 NotReady control-plane,master 2m50s v1.21.0

slave1 NotReady <none> 6m43s v1.21.0

slave2 NotReady <none> 6m35s v1.21.0

[root@master ~]# kubectl get pods --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system coredns-545d6fc579-2cp4q 1/1 Running 0 12m

kube-system coredns-545d6fc579-nv2bx 1/1 Running 0 12m

kube-system etcd-master 1/1 Running 0 12m

kube-system etcd-master2 1/1 Running 0 2m53s

kube-system kube-apiserver-master 1/1 Running 1 13m

kube-system kube-apiserver-master2 1/1 Running 0 2m56s

kube-system kube-controller-manager-master 1/1 Running 1 12m

kube-system kube-controller-manager-master2 1/1 Running 0 2m56s

kube-system kube-proxy-6dtsk 1/1 Running 0 2m57s

kube-system kube-proxy-hc5tl 1/1 Running 0 6m50s

kube-system kube-proxy-kc824 1/1 Running 0 6m42s

kube-system kube-proxy-mltbt 1/1 Running 0 12m

kube-system kube-scheduler-master 1/1 Running 1 12m

kube-system kube-scheduler-master2 1/1 Running 0 2m57s

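# Step 40

# Run on master

# Download the flannel manifest

wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

# If raw.githubusercontent.com is unreachable, download the manifest from the release assets of the official flannel GitHub repository instead (a github.com/.../tree/... link returns an HTML page, not the YAML file)

wget https://github.com/flannel-io/flannel/releases/latest/download/kube-flannel.yml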

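# Step 41

# Run on master

# Check net-conf.json in the file: "Network" must match the --pod-network-cidr passed to kubeadm init (10.244.0.0/16, which is also flannel's default). Do not put comments inside the JSON itself, because JSON does not allow them.

net-conf.json: |

{

"Network": "10.244.0.0/16",

"Backend": {

"Type": "vxlan"

}

}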
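A quick way to confirm this before applying the manifest is to extract the Network value and compare it with the pod CIDR. A sketch with hypothetical function names; the sed pattern assumes "Network" and its value sit on one line, as in the snippet above.

```bash
#!/usr/bin/env bash
# Hypothetical helpers: compare kube-flannel.yml's "Network" with the pod CIDR.
flannel_network() {
  # Print the first "Network" value found in the manifest.
  sed -n 's/.*"Network": *"\([^"]*\)".*/\1/p' "$1" | head -n 1
}

check_flannel_cidr() {
  # Default expected value: this article's --pod-network-cidr.
  local file="$1" want="${2:-10.244.0.0/16}" got
  got="$(flannel_network "$file")"
  if [ "$got" = "$want" ]; then
    echo "ok: $got"
  else
    echo "mismatch: flannel uses '$got', kubeadm uses '$want'"
    return 1
  fi
}
```

Usage: check_flannel_cidr kube-flannel.yml.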

# Step 42

# Run on master

[root@master ~]# kubectl apply -f kube-flannel.yml

namespace/kube-flannel created

clusterrole.rbac.authorization.k8s.io/flannel created

clusterrolebinding.rbac.authorization.k8s.io/flannel created

serviceaccount/flannel created

configmap/kube-flannel-cfg created

daemonset.apps/kube-flannel-ds created

Check the nodes:

# Step 43

# Run on master

[root@master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master Ready control-plane,master 15m v1.21.0

master2 Ready control-plane,master 4m58s v1.21.0

slave1 Ready <none> 8m51s v1.21.0

slave2 Ready <none> 8m43s v1.21.0

# Step 44

# Run on master2

[root@master2 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master Ready control-plane,master 15m v1.21.0

master2 Ready control-plane,master 4m58s v1.21.0

slave1 Ready <none> 8m51s v1.21.0

slave2 Ready <none> 8m43s v1.21.0
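Nodes can take a short while to switch to Ready after flannel starts. Instead of re-running kubectl get nodes by hand, a small polling loop can wait for them; kubectl wait --for=condition=Ready node --all --timeout=300s is a built-in alternative. A sketch with hypothetical function names and an assumed KUBECTL override variable:

```bash
#!/usr/bin/env bash
# Hypothetical helpers; KUBECTL defaults to the real kubectl binary.
not_ready_nodes() {
  # Print the name of every node whose STATUS column is not exactly "Ready".
  local out
  out="$("${KUBECTL:-kubectl}" get nodes --no-headers)" || return 1
  [ -n "$out" ] || return 1
  printf '%s\n' "$out" | awk '$2 != "Ready" { print $1 }'
}

wait_all_nodes_ready() {
  # Arguments: number of attempts (default 30), delay in seconds (default 10).
  local attempts="${1:-30}" delay="${2:-10}" i=1 pending
  while [ "$i" -le "$attempts" ]; do
    if pending="$(not_ready_nodes)" && [ -z "$pending" ]; then
      echo "all nodes Ready"
      return 0
    fi
    echo "waiting for:" $pending   # unquoted on purpose: names on one line
    sleep "$delay"
    i=$((i + 1))
  done
  return 1
}
```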

Check the pods:

# Step 45

# Run on master

[root@master ~]# kubectl get pods --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-flannel kube-flannel-ds-2c8np 1/1 Running 0 53s

kube-flannel kube-flannel-ds-2zrrm 1/1 Running 0 53s

kube-flannel kube-flannel-ds-blr77 1/1 Running 0 53s

kube-flannel kube-flannel-ds-llxlh 1/1 Running 0 53s

kube-system coredns-545d6fc579-2cp4q 1/1 Running 0 15m

kube-system coredns-545d6fc579-nv2bx 1/1 Running 0 15m

kube-system etcd-master 1/1 Running 0 15m

kube-system etcd-master2 1/1 Running 0 5m20s

kube-system kube-apiserver-master 1/1 Running 1 15m

kube-system kube-apiserver-master2 1/1 Running 0 5m23s

kube-system kube-controller-manager-master 1/1 Running 1 15m

kube-system kube-controller-manager-master2 1/1 Running 0 5m23s

kube-system kube-proxy-6dtsk 1/1 Running 0 5m24s

kube-system kube-proxy-hc5tl 1/1 Running 0 9m17s

kube-system kube-proxy-kc824 1/1 Running 0 9m9s

kube-system kube-proxy-mltbt 1/1 Running 0 15m

kube-system kube-scheduler-master 1/1 Running 1 15m

kube-system kube-scheduler-master2 1/1 Running 0 5m24s

# Step 46

# Run on master2

[root@master2 ~]# kubectl get pods --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-flannel kube-flannel-ds-2c8np 1/1 Running 0 53s

kube-flannel kube-flannel-ds-2zrrm 1/1 Running 0 53s

kube-flannel kube-flannel-ds-blr77 1/1 Running 0 53s

kube-flannel kube-flannel-ds-llxlh 1/1 Running 0 53s

kube-system coredns-545d6fc579-2cp4q 1/1 Running 0 15m

kube-system coredns-545d6fc579-nv2bx 1/1 Running 0 15m

kube-system etcd-master 1/1 Running 0 15m

kube-system etcd-master2 1/1 Running 0 5m20s

kube-system kube-apiserver-master 1/1 Running 1 15m

kube-system kube-apiserver-master2 1/1 Running 0 5m23s

kube-system kube-controller-manager-master 1/1 Running 1 15m

kube-system kube-controller-manager-master2 1/1 Running 0 5m23s

kube-system kube-proxy-6dtsk 1/1 Running 0 5m24s

kube-system kube-proxy-hc5tl 1/1 Running 0 9m17s

kube-system kube-proxy-kc824 1/1 Running 0 9m9s

kube-system kube-proxy-mltbt 1/1 Running 0 15m

kube-system kube-scheduler-master 1/1 Running 1 15m

kube-system kube-scheduler-master2 1/1 Running 0 5m24s

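10. Testing

# Step 47

# From each node, query the apiserver through the nginx load balancer; every command returns the same version information, shown once below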

[root@master ~]# curl -k https://192.168.43.165:8443/version

[root@slave1 ~]# curl -k https://192.168.43.165:8443/version

[root@slave2 ~]# curl -k https://192.168.43.165:8443/version

[root@master2 ~]# curl -k https://192.168.43.165:8443/version

{

"major": "1",

"minor": "21",

"gitVersion": "v1.21.0",

"gitCommit": "cb303e613a121a29364f75cc67d3d580833a7479",

"gitTreeState": "clean",

"buildDate": "2021-04-08T16:25:06Z",

"goVersion": "go1.16.1",

"compiler": "gc",

"platform": "linux/amd64"

}
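To repeat this check, for example while one master is shut down to see whether nginx keeps routing requests to the remaining apiserver, the request can be run in a loop. A sketch with hypothetical function names and assumed LB_URL/CURL override variables; -k skips certificate verification exactly as in the commands above.

```bash
#!/usr/bin/env bash
# Hypothetical helpers; LB_URL and CURL default to this article's endpoint and curl.
git_version() {
  # Read a /version JSON document on stdin and print its gitVersion value.
  sed -n 's/.*"gitVersion": *"\([^"]*\)".*/\1/p'
}

probe_lb() {
  # Argument: number of requests (default 5).
  local url="${LB_URL:-https://192.168.43.165:8443/version}" n="${1:-5}" i=1 v
  while [ "$i" -le "$n" ]; do
    # -k: self-signed cluster CA, -s: quiet, --max-time: give up after 3 seconds
    v="$("${CURL:-curl}" -ks --max-time 3 "$url" | git_version)"
    echo "${v:-request $i failed}"
    i=$((i + 1))
  done
}
```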

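With that, kubeadm has been used to quickly build a highly available Kubernetes cluster. Keep in mind that this layout has only two stacked etcd members, so etcd loses quorum if either master fails, and the single nginx host is itself a single point of failure; production control planes typically use three (or another odd number of) masters.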
