import numpy as np

import h5py

import matplotlib.pyplot as plt

import testCases

from dnn_utils import sigmoid,sigmoid_backward,relu,relu_backward

import lr_utils

np.random.seed(1)

def initialize_parameters(n_x,n_h,n_y):

    # Small random weights break symmetry; biases start at zero.
    W1=np.random.randn(n_h,n_x)*0.01

    b1=np.zeros((n_h,1))

    # randn takes each dimension as a separate argument, not a tuple.
    W2=np.random.randn(n_y,n_h)*0.01

    b2=np.zeros((n_y,1))

    assert(W1.shape ==(n_h,n_x))

    assert(b1.shape==(n_h,1))

    assert(W2.shape==(n_y,n_h))

    assert(b2.shape==(n_y,1))

    parameters={"W1":W1,"b1":b1,"W2":W2,"b2":b2}

    return parameters
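The shape assertions in `initialize_parameters` depend on how `np.random.randn` takes its dimensions, so here is a small sketch of that calling convention. The sizes `n_h = 4` and `n_y = 1` are illustrative values, not taken from the article.

```python
import numpy as np

n_h, n_y = 4, 1  # illustrative layer sizes (assumed, not from the article)

# randn takes each dimension as a separate integer argument.
W2 = np.random.randn(n_y, n_h) * 0.01
assert W2.shape == (n_y, n_h)

# np.zeros expects a single shape tuple, but randn rejects one.
try:
    np.random.randn((n_y, n_h))
    tuple_accepted = True
except TypeError:
    tuple_accepted = False

print(W2.shape, tuple_accepted)
```

In other words, `np.zeros((n_y, 1))` and `np.random.randn(n_y, n_h)` use different conventions for the shape, which is easy to mix up when writing the two side by side.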

def initialize_parameters_deep(layers_dims):

    np.random.seed(3)

    parameters ={}

    L=len(layers_dims)

    for l in range(1,L):

        parameters["W"+str(l)] =np.random.randn(layers_dims[l],layers_dims[l-1])/np.sqrt(layers_dims[l-1])

        parameters["b"+str(l)] =np.zeros((layers_dims[l],1))

        assert(parameters["W"+str(l)].shape ==(layers_dims[l],layers_dims[l-1]))

        assert(parameters["b"+str(l)].shape==(layers_dims[l],1))

    return parameters
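For orientation, here is a sketch of what the returned dictionary looks like for a small network. The function is restated compactly so the snippet runs on its own, and `layers_dims = [5, 4, 3]` is an illustrative choice rather than a value from the article.

```python
import numpy as np

def initialize_parameters_deep(layers_dims):
    """Compact restatement of the function above, without the assertions."""
    np.random.seed(3)
    parameters = {}
    L = len(layers_dims)
    for l in range(1, L):
        # Scale by 1/sqrt(fan-in), as in the original listing.
        parameters["W" + str(l)] = (
            np.random.randn(layers_dims[l], layers_dims[l - 1])
            / np.sqrt(layers_dims[l - 1])
        )
        parameters["b" + str(l)] = np.zeros((layers_dims[l], 1))
    return parameters

# Hypothetical network: 5 inputs, one hidden layer of 4 units, 3 outputs.
params = initialize_parameters_deep([5, 4, 3])
shapes = {name: value.shape for name, value in params.items()}
print(shapes)
# → {'W1': (4, 5), 'b1': (4, 1), 'W2': (3, 4), 'b2': (3, 1)}
```

Each `W` has one row per unit in its own layer and one column per unit in the previous layer, which is what lets `np.dot(W, A)` line up in the forward pass.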

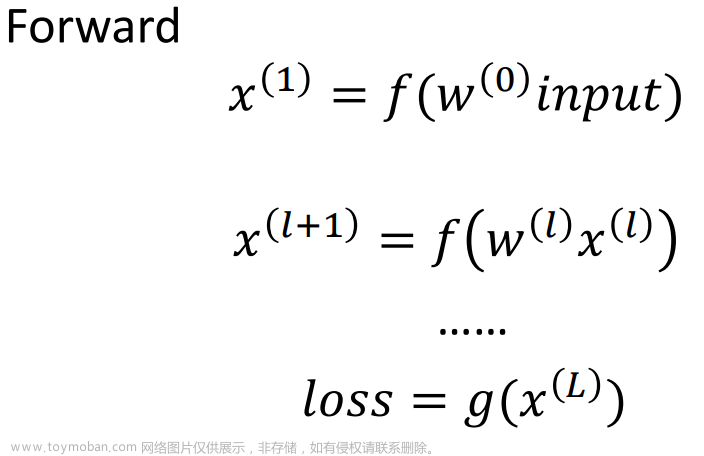

# Forward propagation functions

def linear_forward(A,W,b):

    Z=np.dot(W,A)+b

    assert(Z.shape==(W.shape[0],A.shape[1]))

    cache=(A,W,b)

    return Z,cache
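To make the shapes in `linear_forward` concrete, here is a small worked sketch. The input values are made up for illustration; the function body is restated so the snippet is self-contained.

```python
import numpy as np

def linear_forward(A, W, b):
    """Linear step Z = W·A + b; the cache keeps the inputs for backprop."""
    Z = np.dot(W, A) + b
    cache = (A, W, b)
    return Z, cache

# Illustrative data: 3 input features, 2 examples (one example per column).
A = np.array([[1.0, 2.0],
              [3.0, 4.0],
              [5.0, 6.0]])
W = np.array([[1.0, 0.0, -1.0]])  # 1 unit, 3 inputs
b = np.array([[0.5]])             # shape (1, 1), broadcast across the 2 columns

Z, cache = linear_forward(A, W, b)
print(Z.shape)  # → (1, 2)
print(Z)        # → [[-3.5 -3.5]]
```

The bias has a single column, and NumPy broadcasting adds it to every example's column of `W·A`, which is why `Z` ends up with shape `(W.shape[0], A.shape[1])`.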

def linear_activation_forward(A_prev,W,b,activation):

    if activation =="sigmoid":

        Z,linear_cache =linear_forward(A_prev,W,b)

        A,activation_cache =sigmoid(Z)

    elif activation =="relu":

        Z
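The listing breaks off inside the `relu` branch. One plausible way to finish the function, assuming the `relu` branch mirrors the `sigmoid` branch and the function returns the activation together with both caches, is sketched below. The `sigmoid` and `relu` defined here are stand-ins for the `dnn_utils` helpers, assumed to return `(A, Z)`; the real helpers may cache something different.

```python
import numpy as np

# Stand-ins for the dnn_utils helpers (assumption: each returns (A, cache=Z)).
def sigmoid(Z):
    return 1 / (1 + np.exp(-Z)), Z

def relu(Z):
    return np.maximum(0, Z), Z

def linear_forward(A, W, b):
    Z = np.dot(W, A) + b
    return Z, (A, W, b)

def linear_activation_forward(A_prev, W, b, activation):
    """LINEAR -> ACTIVATION step for one layer (hedged completion)."""
    Z, linear_cache = linear_forward(A_prev, W, b)
    if activation == "sigmoid":
        A, activation_cache = sigmoid(Z)
    elif activation == "relu":
        A, activation_cache = relu(Z)
    else:
        # Not in the original listing; added so a typo fails loudly.
        raise ValueError("unknown activation: " + str(activation))
    assert A.shape == (W.shape[0], A_prev.shape[1])
    cache = (linear_cache, activation_cache)
    return A, cache

# Illustrative input: W·A_prev = [[-1], [1]], with zero bias.
A_prev = np.array([[1.0], [-2.0]])
W = np.array([[1.0, 1.0], [-1.0, -1.0]])
b = np.zeros((2, 1))

A_relu, _ = linear_activation_forward(A_prev, W, b, "relu")
A_sig, _ = linear_activation_forward(A_prev, W, b, "sigmoid")
print(A_relu.ravel())  # → [0. 1.]
print(A_sig.ravel())
```

Computing `Z` once before the branch avoids repeating the `linear_forward` call in each case; the original calls it inside each branch, which behaves the same.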
