Add the dependency

```xml
<dependency>
    <groupId>org.apache.hive</groupId>
    <artifactId>hive-exec</artifactId>
    <version>3.1.2</version>
</dependency>
```

Understanding the source code

https://blog.csdn.net/qq_38783098/article/details/116092731

Writing the function

Note that the class extends GenericUDF and implements its three methods: initialize, evaluate and getDisplayString.

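```java
import org.apache.hadoop.hive.ql.exec.UDFArgumentException;
import org.apache.hadoop.hive.ql.metadata.HiveException;
import org.apache.hadoop.hive.ql.udf.generic.GenericUDF;
import org.apache.hadoop.hive.serde2.objectinspector.ObjectInspector;
import org.apache.hadoop.hive.serde2.objectinspector.PrimitiveObjectInspector;
import org.apache.hadoop.hive.serde2.objectinspector.primitive.PrimitiveObjectInspectorFactory;
import org.apache.hadoop.hive.serde2.objectinspector.primitive.PrimitiveObjectInspectorUtils;

public class SimilarEval extends GenericUDF {

    // Inspectors for the two arguments, saved in initialize() and used in evaluate()
    private PrimitiveObjectInspector targetOI;
    private PrimitiveObjectInspector sourceOI;

    /**
     * Initialization: validates the arguments and returns the ObjectInspector
     * that describes the result type, here a Java int (java.lang.Integer).
     *
     * @param objectInspectors inspectors for the call's arguments
     * @return inspector for the value returned by evaluate()
     * @throws UDFArgumentException if the arguments are invalid
     */
    @Override
    public ObjectInspector initialize(ObjectInspector[] objectInspectors) throws UDFArgumentException {
        // Exactly two arguments are required
        if (objectInspectors.length != 2) {
            throw new UDFArgumentException("similar_eval expects exactly 2 arguments");
        }
        for (ObjectInspector oi : objectInspectors) {
            if (!(oi instanceof PrimitiveObjectInspector)) {
                throw new UDFArgumentException("similar_eval only accepts primitive (string) arguments");
            }
        }
        targetOI = (PrimitiveObjectInspector) objectInspectors[0];
        sourceOI = (PrimitiveObjectInspector) objectInspectors[1];
        // Note: this inspector describes the value returned by evaluate(), so evaluate() must return an Integer
        return PrimitiveObjectInspectorFactory.javaIntObjectInspector;
    }

    /**
     * Business logic: the percentage (0-100, rounded) of characters in the first
     * argument that also occur somewhere in the second argument.
     *
     * @param deferredObjects lazily evaluated arguments
     * @return the similarity score as an Integer
     * @throws HiveException if an argument cannot be read
     */
    @Override
    public Object evaluate(DeferredObject[] deferredObjects) throws HiveException {
        // Read the raw values first: calling toString() before the null check throws an NPE on NULL input
        Object targetObj = deferredObjects[0].get();
        Object sourceObj = deferredObjects[1].get();
        if (targetObj == null || sourceObj == null) {
            return 0;
        }
        String targetStr = PrimitiveObjectInspectorUtils.getString(targetObj, targetOI);
        String sourceStr = PrimitiveObjectInspectorUtils.getString(sourceObj, sourceOI);
        if (targetStr == null || sourceStr == null || targetStr.isEmpty()) {
            return 0; // also avoids dividing by an empty target length
        }
        int matched = 0;
        for (int i = 0; i < targetStr.length(); i++) {
            // Count each character of the target that appears anywhere in the source
            if (sourceStr.indexOf(targetStr.charAt(i)) >= 0) {
                matched++;
            }
        }
        // Autoboxed to Integer, matching javaIntObjectInspector
        return (int) Math.round(matched * 100.0 / targetStr.length());
    }

    /**
     * Text shown for this call in EXPLAIN output and error messages.
     *
     * @param children display strings of the arguments
     * @return display string for the function call
     */
    @Override
    public String getDisplayString(String[] children) {
        return "similar_eval(" + String.join(", ", children) + ")";
    }
}
```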
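The scoring loop in evaluate() can be checked locally without a Hive cluster. The following is a minimal sketch that copies that loop into a plain static method; the class name SimilarScoreDemo and the sample strings are hypothetical and not part of the original project.

```java
// Hypothetical standalone class: mirrors the scoring loop in SimilarEval.evaluate()
// so it can be checked with plain Java, without Hive on the classpath.
public class SimilarScoreDemo {

    /**
     * Percentage (0-100, rounded) of characters in target that occur anywhere in source.
     * Null or empty input scores 0, matching the UDF above.
     */
    public static int score(String target, String source) {
        if (target == null || source == null || target.isEmpty()) {
            return 0;
        }
        int matched = 0;
        for (int i = 0; i < target.length(); i++) {
            if (source.indexOf(target.charAt(i)) >= 0) {
                matched++;
            }
        }
        return (int) Math.round(matched * 100.0 / target.length());
    }

    public static void main(String[] args) {
        System.out.println(score("abcd", "bd"));   // prints 50: 2 of 4 characters match
        System.out.println(score("abc", "xyz"));   // prints 0: no characters match
        System.out.println(score("hive", "hive")); // prints 100: every character matches
        System.out.println(score("abc", null));    // prints 0: null input
    }
}
```

Note that the score is not symmetric: score("ab", "abcd") is 100 while score("abcd", "ab") is 50, because only the first argument's length is used as the denominator.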

Project structure

Package the project as a jar, upload it to the Linux server, and put the jar at a path Hive can read (HDFS here).

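```shell
# Upload the jar to HDFS
hdfs dfs -put SimilarEval-1.0-SNAPSHOT.jar hdfs://drmcluster/stq/ltdq/jars/
```

```sql
-- Register the jar for the current session (the file name must match the jar uploaded above)
add jar hdfs://drmcluster/stq/ltdq/jars/SimilarEval-1.0-SNAPSHOT.jar;

-- Create the function; 'SimilarEval' is the fully qualified class name (the class has no package)
-- To make the jar available to other sessions as well, it can be attached directly:
-- create function similar_eval as 'SimilarEval' using jar 'hdfs://drmcluster/stq/ltdq/jars/SimilarEval-1.0-SNAPSHOT.jar';
create function similar_eval as 'SimilarEval';

-- List functions to confirm it was registered
show functions;
```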

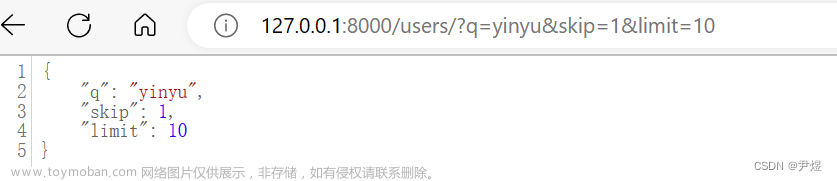

Results

Source: https://www.toymoban.com/news/detail-506757.html


Possible errors

ERROR ExecuteStatement: Error operating ExecuteStatement: org.apache.spark.sql.AnalysisException: No handler for UDF/UDAF/UDTF 'de_encry': java.lang.ClassCastException: java.lang.String cannot be cast to java.lang.Integer; line 1 pos 7

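Cause: a String is being cast to an Integer (here surfaced through Spark SQL). initialize() returns javaIntObjectInspector, which declares an int result, so evaluate() must return an Integer; returning a String triggers this ClassCastException. Either return an Integer from evaluate(), or, if the function really produces strings, return PrimitiveObjectInspectorFactory.javaStringObjectInspector from initialize().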
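The mismatch can be reproduced in plain Java. This is a hypothetical illustration, not Hive code: it assumes that an int inspector reads the evaluate() result roughly as `((Integer) o).intValue()`, which is what the error message suggests. The class and method names are made up for the demo.

```java
// Hypothetical illustration, not Hive code: the caller trusts the ObjectInspector
// returned by initialize(). Assumption: an int inspector reads the evaluate() result
// roughly as ((Integer) o).intValue(), so a String result fails at that cast.
public class ReturnTypeMismatchDemo {

    // Stand-in for an evaluate() that declares int but returns a String by mistake
    public static Object evaluateWrong() {
        return "42";
    }

    // Stand-in for an evaluate() whose result matches javaIntObjectInspector
    public static Object evaluateRight() {
        return 42; // autoboxed to java.lang.Integer
    }

    // Mimics how an int inspector reads the value
    public static int readAsInt(Object o) {
        return ((Integer) o).intValue();
    }

    public static void main(String[] args) {
        System.out.println(readAsInt(evaluateRight())); // prints 42
        try {
            readAsInt(evaluateWrong());
        } catch (ClassCastException e) {
            // The same kind of failure as in the log: String cannot be cast to Integer
            System.out.println("ClassCastException: " + e.getMessage());
        }
    }
}
```

The design point is that initialize() and evaluate() form a contract: whatever inspector initialize() returns, every value evaluate() produces must be of the Java type that inspector expects.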
