Code: https://github.com/WongKinYiu/yolov7

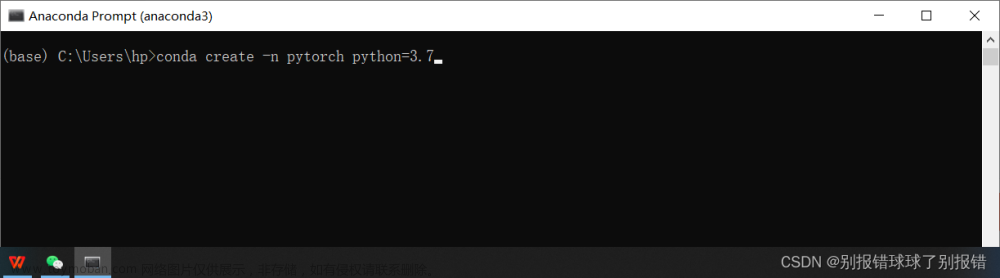

Command

```shell
python test.py --data data/coco.yaml --img 640 --batch 32 --conf 0.001 --iou 0.65 --device 0 --weights yolov7.pt --name yolov7_640_val
```

Argument parsing

```python
import argparse

if __name__ == '__main__':
    parser = argparse.ArgumentParser(prog='test.py')
    parser.add_argument('--weights', nargs='+', type=str, default='/kaxier01/projects/FAS/yolov7/weights/yolov7.pt', help='model.pt path(s)')
    parser.add_argument('--data', type=str, default='/kaxier01/projects/FAS/yolov7/data/coco.yaml', help='*.data path')
    parser.add_argument('--batch-size', type=int, default=32, help='size of each image batch')
    parser.add_argument('--img-size', type=int, default=640, help='inference size (pixels)')
    parser.add_argument('--conf-thres', type=float, default=0.001, help='object confidence threshold')
    parser.add_argument('--iou-thres', type=float, default=0.65, help='IOU threshold for NMS')
    parser.add_argument('--task', default='val', help='train, val, test, speed or study')
    parser.add_argument('--device', default='0', help='cuda device, i.e. 0 or 0,1,2,3 or cpu')
    parser.add_argument('--single-cls', action='store_true', help='treat as single-class dataset')
    parser.add_argument('--augment', action='store_true', help='augmented inference')
    parser.add_argument('--verbose', action='store_true', help='report mAP by class')
    parser.add_argument('--save-txt', action='store_true', help='save results to *.txt')
    parser.add_argument('--save-hybrid', action='store_true', help='save label+prediction hybrid results to *.txt')
    parser.add_argument('--save-conf', action='store_true', help='save confidences in --save-txt labels')
    parser.add_argument('--save-json', action='store_true', help='save a cocoapi-compatible JSON results file')
    parser.add_argument('--project', default='runs/test', help='save to project/name')
    parser.add_argument('--name', default='yolov7_640_val', help='save to project/name')
    parser.add_argument('--exist-ok', action='store_true', help='existing project/name ok, do not increment')
    parser.add_argument('--no-trace', action='store_true', help='don`t trace model')
    parser.add_argument('--v5-metric', action='store_true', help='assume maximum recall as 1.0 in AP calculation')
    opt = parser.parse_args()
    opt.save_json |= opt.data.endswith('coco.yaml')  # always save COCO JSON when evaluating on coco.yaml
```

The arguments above are essentially the same as in the original source; only a few defaults (mainly paths) were changed.
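The command above uses short flags such as `--img`, `--batch`, `--conf` and `--iou`, while the parser only defines `--img-size`, `--batch-size`, `--conf-thres` and `--iou-thres`. This works because Python's `argparse` accepts any unambiguous prefix of a long option by default (`allow_abbrev=True`). A minimal sketch with a trimmed-down parser (only a handful of the real options, so it is not the full test.py parser) shows the mapping and the automatic `save_json` switch:

```python
import argparse

# Trimmed-down parser: only a handful of the options defined in test.py
parser = argparse.ArgumentParser(prog='test.py')  # allow_abbrev=True is the default
parser.add_argument('--data', type=str, default='data/coco.yaml')
parser.add_argument('--batch-size', type=int, default=32)
parser.add_argument('--img-size', type=int, default=640)
parser.add_argument('--conf-thres', type=float, default=0.001)
parser.add_argument('--iou-thres', type=float, default=0.65)
parser.add_argument('--save-json', action='store_true')

# Same short flags as the command line; each is an unambiguous prefix of one long option
argv = ['--data', 'data/coco.yaml', '--img', '640', '--batch', '32', '--conf', '0.001', '--iou', '0.65']
opt = parser.parse_args(argv)

# Dashes become underscores in attribute names (--img-size -> opt.img_size)
print(opt.img_size, opt.batch_size, opt.conf_thres, opt.iou_thres)

# JSON output is switched on automatically whenever the data file is coco.yaml
opt.save_json |= opt.data.endswith('coco.yaml')
print(opt.save_json)
```

If a prefix is ambiguous (for example `--save` in the real parser, which matches `--save-txt`, `--save-hybrid`, `--save-conf` and `--save-json`), argparse exits with an "ambiguous option" error, so full option names are the safer choice in scripts.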

coco.yaml

```yaml
# COCO 2017 dataset http://cocodataset.org

# download command/URL (optional)
download: bash ./scripts/get_coco.sh

# train and val data as 1) directory: path/images/, 2) file: path/images.txt, or 3) list: [path1/images/, path2/images/]
train: /kaxier01/projects/FAS/yolov7/coco/train2017.txt  # 118287 images
val: /kaxier01/projects/FAS/yolov7/coco/val2017.txt  # 5000 images
test: /kaxier01/projects/FAS/yolov7/coco/test-dev2017.txt  # 20288 of 40670 images, submit to https://competitions.codalab.org/competitions/20794

# number of classes
nc: 80

# class names
names: [ 'person', 'bicycle', 'car', 'motorcycle', 'airplane', 'bus', 'train', 'truck', 'boat', 'traffic light',
         'fire hydrant', 'stop sign', 'parking meter', 'bench', 'bird', 'cat', 'dog', 'horse', 'sheep', 'cow',
         'elephant', 'bear', 'zebra', 'giraffe', 'backpack', 'umbrella', 'handbag', 'tie', 'suitcase', 'frisbee',
         'skis', 'snowboard', 'sports ball', 'kite', 'baseball bat', 'baseball glove', 'skateboard', 'surfboard',
         'tennis racket', 'bottle', 'wine glass', 'cup', 'fork', 'knife', 'spoon', 'bowl', 'banana', 'apple',
         'sandwich', 'orange', 'broccoli', 'carrot', 'hot dog', 'pizza', 'donut', 'cake', 'chair', 'couch',
         'potted plant', 'bed', 'dining table', 'toilet', 'tv', 'laptop', 'mouse', 'remote', 'keyboard', 'cell phone',
         'microwave', 'oven', 'toaster', 'sink', 'refrigerator', 'book', 'clock', 'vase', 'scissors', 'teddy bear',
         'hair drier', 'toothbrush' ]
```

If you have already downloaded the COCO 2017 dataset, you can comment out this line:

```yaml
download: bash ./scripts/get_coco.sh
```

Calling test() with the parsed arguments

```python
if opt.task in ('train', 'val', 'test'):  # run normally
    test(opt.data,
         opt.weights,
         opt.batch_size,
         opt.img_size,
         opt.conf_thres,
         opt.iou_thres,
         opt.save_json,
         opt.single_cls,
         opt.augment,
         opt.verbose,
         save_txt=opt.save_txt | opt.save_hybrid,
         save_hybrid=opt.save_hybrid,
         save_conf=opt.save_conf,
         trace=not opt.no_trace,
         v5_metric=opt.v5_metric
         )
```

Model loading

```python
# test.py
model = attempt_load(weights, map_location=device)  # load FP32 model


# models/experimental.py
def attempt_load(weights, map_location=None):
    # Loads an ensemble of models weights=[a,b,c] or a single model weights=[a] or weights=a
    model = Ensemble()
    for w in weights if isinstance(weights, list) else [weights]:
        # attempt_download(w)
        ckpt = torch.load(w, map_location=map_location)  # load
        model.append(ckpt['ema' if ckpt.get('ema') else 'model'].float().fuse().eval())  # FP32 model

    # Compatibility updates
    for m in model.modules():
        if type(m) in [nn.Hardswish, nn.LeakyReLU, nn.ReLU, nn.ReLU6, nn.SiLU]:
            m.inplace = True  # pytorch 1.7.0 compatibility
        elif type(m) is nn.Upsample:
            m.recompute_scale_factor = None  # torch 1.11.0 compatibility
        elif type(m) is Conv:
            m._non_persistent_buffers_set = set()  # pytorch 1.6.0 compatibility

    if len(model) == 1:
        return model[-1]  # return model
    else:
        print('Ensemble created with %s\n' % weights)
        for k in ['names', 'stride']:
            setattr(model, k, getattr(model[-1], k))
        return model  # return ensemble
```

When the code runs on a GPU, all floating-point parameters and buffers of the model are cast to half precision (FP16), which shortens inference time:
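To get a feel for what the FP16 cast does to individual values, here is a small sketch that uses the standard library's IEEE 754 half-precision `struct` format (`'e'`) to round a float to FP16 and back. The helper name `to_fp16` is hypothetical; the sketch only illustrates precision and says nothing about speed, which depends on the GPU.

```python
import struct


def to_fp16(x: float) -> float:
    """Round a Python float to the nearest IEEE 754 half-precision value and back."""
    return struct.unpack('<e', struct.pack('<e', x))[0]


# A normalized pixel value (what img /= 255.0 produces later) and a small weight-like value
for v in (200 / 255, 0.1):
    print(f'{v:.10f} -> {to_fp16(v):.10f}')

print(to_fp16(65504.0))  # largest finite FP16 value, still exact
```

For values in [0, 1) the rounding error stays below about 2.5e-4, which is small for normalized pixels and typical weights; values above 65504 cannot be represented in FP16 at all.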

```python
half = device.type != 'cpu' and half_precision  # half precision only supported on CUDA
if half:
    model.half()
```

IoU thresholds for mAP@0.5:0.95

```python
iouv = torch.linspace(0.5, 0.95, 10).to(device)  # iou vector for mAP@0.5:0.95
# tensor([0.5000, 0.5500, 0.6000, 0.6500, 0.7000, 0.7500, 0.8000, 0.8500, 0.9000, 0.9500])
```

Loading the dataset

```python
# test.py
task = opt.task if opt.task in ('train', 'val', 'test') else 'val'
dataloader = create_dataloader(data[task], imgsz, batch_size, gs, opt, pad=0.5, rect=True,
                               prefix=colorstr(f'{task}: '))[0]


# utils/datasets.py
def create_dataloader(path, imgsz, batch_size, stride, opt, hyp=None, augment=False, cache=False, pad=0.0, rect=False,
                      rank=-1, world_size=1, workers=8, image_weights=False, quad=False, prefix=''):
    # Make sure only the first process in DDP process the dataset first, and the following others can use the cache
    with torch_distributed_zero_first(rank):
        dataset = LoadImagesAndLabels(path, imgsz, batch_size,
                                      augment=augment,  # augment images
                                      hyp=hyp,  # augmentation hyperparameters
                                      rect=rect,  # rectangular training
                                      cache_images=cache,
                                      single_cls=opt.single_cls,
                                      stride=int(stride),
                                      pad=pad,
                                      image_weights=image_weights,
                                      prefix=prefix)

    batch_size = min(batch_size, len(dataset))
    nw = min([os.cpu_count() // world_size, batch_size if batch_size > 1 else 0, workers])  # number of workers
    sampler = torch.utils.data.distributed.DistributedSampler(dataset) if rank != -1 else None
    loader = torch.utils.data.DataLoader if image_weights else InfiniteDataLoader
    dataloader = loader(dataset,
                        batch_size=batch_size,
                        num_workers=nw,
                        sampler=sampler,
                        pin_memory=True,
                        collate_fn=LoadImagesAndLabels.collate_fn4 if quad else LoadImagesAndLabels.collate_fn)
    return dataloader, dataset
```

I ran this on a single machine (no distributed setup, so `rank` keeps its default of -1), which means `sampler` is `None`.
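The number of DataLoader worker processes is the smallest of three limits: CPU cores per process, the batch size (0 when the batch size is 1), and the `workers` argument (default 8). A pure-Python sketch of that formula; `num_workers` and its `cpu_count` override are hypothetical names added only so the result can be checked on any machine:

```python
import os


def num_workers(batch_size, dataset_len, world_size=1, workers=8, cpu_count=None):
    """Worker count as computed in create_dataloader (world_size=1 for a single process)."""
    if cpu_count is None:
        cpu_count = os.cpu_count() or 1  # os.cpu_count() may return None
    batch_size = min(batch_size, dataset_len)  # batch never larger than the dataset
    return min([cpu_count // world_size, batch_size if batch_size > 1 else 0, workers])


print(num_workers(32, 5000, cpu_count=16))  # capped by workers=8
print(num_workers(32, 5000, cpu_count=4))   # capped by the CPU count
print(num_workers(1, 5000, cpu_count=16))   # batch size 1: load in the main process
```

Zero workers means the DataLoader reads data in the main process instead of spawning workers.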

The LoadImagesAndLabels class (utils/datasets.py)

```python
class LoadImagesAndLabels(Dataset):  # for training/testing
    def __init__(self, path, img_size=640, batch_size=16, augment=False, hyp=None, rect=False, image_weights=False,
                 cache_images=False, single_cls=False, stride=32, pad=0.0, prefix=''):
        self.img_size = img_size  # 640
        self.augment = augment
        self.hyp = hyp
        self.image_weights = image_weights
        self.rect = False if image_weights else rect  # True
        self.mosaic = self.augment and not self.rect  # load 4 images at a time into a mosaic (only during training)
        self.mosaic_border = [-img_size // 2, -img_size // 2]
        self.stride = stride  # 32
        self.path = path  # /kaxier01/projects/FAS/yolov7/coco/val2017.txt

        try:
            f = []  # image files
            for p in path if isinstance(path, list) else [path]:
                p = Path(p)  # os-agnostic
                if p.is_dir():  # dir
                    f += glob.glob(str(p / '**' / '*.*'), recursive=True)
                elif p.is_file():  # file
                    with open(p, 'r') as t:
                        t = t.read().strip().splitlines()
                        parent = str(p.parent) + os.sep
                        f += [x.replace('./', parent) if x.startswith('./') else x for x in t]  # local to global path
                else:
                    raise Exception(f'{prefix}{p} does not exist')
            self.img_files = sorted([x.replace('/', os.sep) for x in f if x.split('.')[-1].lower() in img_formats])
            assert self.img_files, f'{prefix}No images found'
        except Exception as e:
            raise Exception(f'{prefix}Error loading data from {path}: {e}\nSee {help_url}')

        # Check cache
        self.label_files = img2label_paths(self.img_files)  # labels
        cache_path = (p if p.is_file() else Path(self.label_files[0]).parent).with_suffix('.cache')  # cached labels
        if cache_path.is_file():
            cache, exists = torch.load(cache_path), True  # load
        else:
            cache, exists = self.cache_labels(cache_path, prefix), False  # cache

        # Display cache
        nf, nm, ne, nc, n = cache.pop('results')  # found, missing, empty, corrupted, total
        if exists:
            d = f"Scanning '{cache_path}' images and labels... {nf} found, {nm} missing, {ne} empty, {nc} corrupted"
            tqdm(None, desc=prefix + d, total=n, initial=n)  # display cache results
        assert nf > 0 or not augment, f'{prefix}No labels in {cache_path}. Can not train without labels. See {help_url}'

        # Read cache
        cache.pop('hash')  # remove hash
        cache.pop('version')  # remove version
        labels, shapes, self.segments = zip(*cache.values())
        self.labels = list(labels)
        self.shapes = np.array(shapes, dtype=np.float64)
        self.img_files = list(cache.keys())  # update
        self.label_files = img2label_paths(cache.keys())  # update
        if single_cls:
            for x in self.labels:
                x[:, 0] = 0

        n = len(shapes)  # number of images
        bi = np.floor(np.arange(n) / batch_size).astype(int)  # batch index
        nb = bi[-1] + 1  # number of batches
        self.batch = bi  # batch index of image
        self.n = n
        self.indices = range(n)

        # Rectangular Training
        if self.rect:
            # Sort by aspect ratio
            s = self.shapes  # wh
            ar = s[:, 1] / s[:, 0]  # aspect ratio
            irect = ar.argsort()
            self.img_files = [self.img_files[i] for i in irect]
            self.label_files = [self.label_files[i] for i in irect]
            self.labels = [self.labels[i] for i in irect]
            self.shapes = s[irect]  # wh
            ar = ar[irect]

            # Set training image shapes
            shapes = [[1, 1]] * nb
            for i in range(nb):
                ari = ar[bi == i]
                mini, maxi = ari.min(), ari.max()
                if maxi < 1:
                    shapes[i] = [maxi, 1]
                elif mini > 1:
                    shapes[i] = [1, 1 / mini]

            self.batch_shapes = np.ceil(np.array(shapes) * img_size / stride + pad).astype(int) * stride

        # Cache images into memory for faster training (WARNING: large datasets may exceed system RAM)
        self.imgs = [None] * n
        if cache_images:
            if cache_images == 'disk':
                self.im_cache_dir = Path(Path(self.img_files[0]).parent.as_posix() + '_npy')
                self.img_npy = [self.im_cache_dir / Path(f).with_suffix('.npy').name for f in self.img_files]
                self.im_cache_dir.mkdir(parents=True, exist_ok=True)
            gb = 0  # Gigabytes of cached images
            self.img_hw0, self.img_hw = [None] * n, [None] * n
            results = ThreadPool(8).imap(lambda x: load_image(*x), zip(repeat(self), range(n)))
            pbar = tqdm(enumerate(results), total=n)
            for i, x in pbar:
                if cache_images == 'disk':
                    if not self.img_npy[i].exists():
                        np.save(self.img_npy[i].as_posix(), x[0])
                    gb += self.img_npy[i].stat().st_size
                else:
                    self.imgs[i], self.img_hw0[i], self.img_hw[i] = x
                    gb += self.imgs[i].nbytes
                pbar.desc = f'{prefix}Caching images ({gb / 1E9:.1f}GB)'
            pbar.close()
```
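With `rect=True` (as test.py passes), `__init__` sorts the images by aspect ratio and gives each batch its own letterbox shape, so a batch of landscape images is not padded up to a full square. The following pure-Python sketch mirrors that block, assuming each batch is a consecutive slice of `batch_size` images after sorting. It uses the stable `sorted` where the original uses NumPy's `argsort`, so ties may be ordered differently, and the function name `rect_batch_shapes` is hypothetical.

```python
import math


def rect_batch_shapes(shapes_wh, batch_size, img_size=640, stride=32, pad=0.5):
    """Sketch of the 'Rectangular Training' block of LoadImagesAndLabels.__init__.

    shapes_wh: list of (width, height) per image.
    Returns (order, batch_shapes): the image order after sorting by aspect ratio and,
    for each batch, the letterbox target as [height, width] in pixels.
    """
    ar = [h / w for w, h in shapes_wh]                      # aspect ratio h/w
    order = sorted(range(len(ar)), key=lambda k: ar[k])     # like ar.argsort()
    ar = [ar[k] for k in order]
    nb = math.ceil(len(ar) / batch_size)                    # number of batches
    batch_shapes = []
    for i in range(nb):
        ari = ar[i * batch_size:(i + 1) * batch_size]       # images with batch index i
        mini, maxi = min(ari), max(ari)
        if maxi < 1:        # whole batch is landscape: shrink the height
            s = [maxi, 1]
        elif mini > 1:      # whole batch is portrait: shrink the width
            s = [1, 1 / mini]
        else:               # mixed batch: keep a square
            s = [1, 1]
        # scale to pixels, add pad (in stride units) and round up to a multiple of stride
        batch_shapes.append([math.ceil(x * img_size / stride + pad) * stride for x in s])
    return order, batch_shapes


order, shapes = rect_batch_shapes([(480, 640), (640, 480), (640, 480), (480, 640)], batch_size=2)
print(order, shapes)
```

With `pad=0.5`, a batch of 640x480 landscape images is letterboxed to 512x672 (height x width) rather than 640x640.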

```python
    def cache_labels(self, path=Path('./labels.cache'), prefix=''):
        # Cache dataset labels, check images and read shapes
        x = {}  # dict
        nm, nf, ne, nc = 0, 0, 0, 0  # number missing, found, empty, corrupted
        pbar = tqdm(zip(self.img_files, self.label_files), desc='Scanning images', total=len(self.img_files))
        for i, (im_file, lb_file) in enumerate(pbar):
            try:
                # verify images
                im = Image.open(im_file)
                im.verify()  # PIL verify
                shape = exif_size(im)  # image size
                segments = []  # instance segments
                assert (shape[0] > 9) & (shape[1] > 9), f'image size {shape} <10 pixels'
                assert im.format.lower() in img_formats, f'invalid image format {im.format}'

                # verify labels
                if os.path.isfile(lb_file):
                    nf += 1  # label found
                    with open(lb_file, 'r') as f:
                        l = [x.split() for x in f.read().strip().splitlines()]
                        if any([len(x) > 8 for x in l]):  # is segment
                            classes = np.array([x[0] for x in l], dtype=np.float32)
                            segments = [np.array(x[1:], dtype=np.float32).reshape(-1, 2) for x in l]  # (cls, xy1...)
                            l = np.concatenate((classes.reshape(-1, 1), segments2boxes(segments)), 1)  # (cls, xywh)
                        l = np.array(l, dtype=np.float32)
                    if len(l):
                        assert l.shape[1] == 5, 'labels require 5 columns each'
                        assert (l >= 0).all(), 'negative labels'
                        assert (l[:, 1:] <= 1).all(), 'non-normalized or out of bounds coordinate labels'
                        assert np.unique(l, axis=0).shape[0] == l.shape[0], 'duplicate labels'
                    else:
                        ne += 1  # label empty
                        l = np.zeros((0, 5), dtype=np.float32)
                else:
                    nm += 1  # label missing
                    l = np.zeros((0, 5), dtype=np.float32)
                x[im_file] = [l, shape, segments]
            except Exception as e:
                nc += 1
                print(f'{prefix}WARNING: Ignoring corrupted image and/or label {im_file}: {e}')

            pbar.desc = f"{prefix}Scanning '{path.parent / path.stem}' images and labels... " \
                        f"{nf} found, {nm} missing, {ne} empty, {nc} corrupted"
        pbar.close()

        if nf == 0:
            print(f'{prefix}WARNING: No labels found in {path}. See {help_url}')

        x['hash'] = get_hash(self.label_files + self.img_files)
        x['results'] = nf, nm, ne, nc, i + 1
        x['version'] = 0.1  # cache version
        torch.save(x, path)  # save for next time
        logging.info(f'{prefix}New cache created: {path}')
        return x
```
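Each label file holds one `cls cx cy w h` row per object with coordinates normalized to 0-1; if any row has more than 8 values, every row is treated as a polygon and converted to its bounding box. A dependency-free sketch of that parsing and of the four sanity checks in `cache_labels` (hypothetical helper names; like the original it relies on `assert`, so the checks disappear under `python -O`):

```python
def segment_to_box(coords):
    """Polygon [x1, y1, x2, y2, ...] -> (cx, cy, w, h), like segments2boxes for one segment."""
    xs, ys = coords[0::2], coords[1::2]
    x1, y1, x2, y2 = min(xs), min(ys), max(xs), max(ys)
    return [(x1 + x2) / 2, (y1 + y2) / 2, x2 - x1, y2 - y1]


def parse_label_text(text):
    """Parse the text of one YOLO label file and apply the same checks as cache_labels."""
    rows = [[float(v) for v in line.split()] for line in text.strip().splitlines()]
    if any(len(r) > 8 for r in rows):  # polygon labels: convert every row to a box
        rows = [[r[0]] + segment_to_box(r[1:]) for r in rows]
    for r in rows:
        assert len(r) == 5, 'labels require 5 columns each'
        assert all(v >= 0 for v in r), 'negative labels'
        assert all(v <= 1 for v in r[1:]), 'non-normalized or out of bounds coordinate labels'
    assert len({tuple(r) for r in rows}) == len(rows), 'duplicate labels'
    return rows  # empty list for an empty label file


print(parse_label_text('0 0.5 0.5 0.2 0.4\n17 0.1 0.2 0.05 0.05'))
print(parse_label_text('3 0.1 0.1 0.3 0.1 0.3 0.5 0.1 0.5'))  # one polygon -> one box
```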

```python
    def __len__(self):
        return len(self.img_files)

    def __getitem__(self, index):
        index = self.indices[index]  # linear, shuffled, or image_weights

        hyp = self.hyp
        mosaic = self.mosaic and random.random() < hyp['mosaic']
        if mosaic:
            # Load mosaic
            if random.random() < 0.8:
                img, labels = load_mosaic(self, index)
            else:
                img, labels = load_mosaic9(self, index)
            shapes = None

            # MixUp https://arxiv.org/pdf/1710.09412.pdf
            if random.random() < hyp['mixup']:
                if random.random() < 0.8:
                    img2, labels2 = load_mosaic(self, random.randint(0, len(self.labels) - 1))
                else:
                    img2, labels2 = load_mosaic9(self, random.randint(0, len(self.labels) - 1))
                r = np.random.beta(8.0, 8.0)  # mixup ratio, alpha=beta=8.0
                img = (img * r + img2 * (1 - r)).astype(np.uint8)
                labels = np.concatenate((labels, labels2), 0)

        else:
            # Load image
            img, (h0, w0), (h, w) = load_image(self, index)

            # Letterbox
            shape = self.batch_shapes[self.batch[index]] if self.rect else self.img_size  # final letterboxed shape
            img, ratio, pad = letterbox(img, shape, auto=False, scaleup=self.augment)
            shapes = (h0, w0), ((h / h0, w / w0), pad)  # for COCO mAP rescaling

            labels = self.labels[index].copy()
            if labels.size:  # normalized xywh to pixel xyxy format
                labels[:, 1:] = xywhn2xyxy(labels[:, 1:], ratio[0] * w, ratio[1] * h, padw=pad[0], padh=pad[1])

        if self.augment:
            # Augment imagespace
            if not mosaic:
                img, labels = random_perspective(img, labels,
                                                 degrees=hyp['degrees'],
                                                 translate=hyp['translate'],
                                                 scale=hyp['scale'],
                                                 shear=hyp['shear'],
                                                 perspective=hyp['perspective'])

            # Augment colorspace
            augment_hsv(img, hgain=hyp['hsv_h'], sgain=hyp['hsv_s'], vgain=hyp['hsv_v'])

            if random.random() < hyp['paste_in']:
                sample_labels, sample_images, sample_masks = [], [], []
                while len(sample_labels) < 30:
                    sample_labels_, sample_images_, sample_masks_ = load_samples(self, random.randint(0, len(self.labels) - 1))
                    sample_labels += sample_labels_
                    sample_images += sample_images_
                    sample_masks += sample_masks_
                    if len(sample_labels) == 0:
                        break
                labels = pastein(img, labels, sample_labels, sample_images, sample_masks)

        nL = len(labels)  # number of labels
        if nL:
            labels[:, 1:5] = xyxy2xywh(labels[:, 1:5])  # convert xyxy to xywh
            labels[:, [2, 4]] /= img.shape[0]  # normalized height 0-1
            labels[:, [1, 3]] /= img.shape[1]  # normalized width 0-1

        if self.augment:
            # flip up-down
            if random.random() < hyp['flipud']:
                img = np.flipud(img)
                if nL:
                    labels[:, 2] = 1 - labels[:, 2]

            # flip left-right
            if random.random() < hyp['fliplr']:
                img = np.fliplr(img)
                if nL:
                    labels[:, 1] = 1 - labels[:, 1]

        labels_out = torch.zeros((nL, 6))
        if nL:
            labels_out[:, 1:] = torch.from_numpy(labels)  # [0, cls, cx, cy, w, h]

        # Convert
        img = img[:, :, ::-1].transpose(2, 0, 1)  # BGR to RGB, to 3x416x416
        img = np.ascontiguousarray(img)

        return torch.from_numpy(img), labels_out, self.img_files[index], shapes
```
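In test mode, `load_image` has already resized each image so that its long side equals `img_size`, and `letterbox` is called with `auto=False` and `scaleup=self.augment` (False), so an image is only padded, never enlarged, to its batch shape. The sketch below mirrors the ratio and padding arithmetic of `letterbox` for that case together with the per-box formula of `xywhn2xyxy`. It works on plain numbers instead of images, and the function names are hypothetical.

```python
def letterbox_params(h, w, new_shape, scaleup=False):
    """Scale ratio and per-side padding of letterbox(img, new_shape, auto=False, scaleup=...)."""
    r = min(new_shape[0] / h, new_shape[1] / w)  # new_shape is (height, width)
    if not scaleup:  # only scale down (test mode)
        r = min(r, 1.0)
    new_w, new_h = int(round(w * r)), int(round(h * r))
    dw, dh = (new_shape[1] - new_w) / 2, (new_shape[0] - new_h) / 2  # padding on each side
    return r, (dw, dh)


def xywhn_to_xyxy(box, w, h, padw=0.0, padh=0.0):
    """Normalized (cx, cy, bw, bh) -> pixel (x1, y1, x2, y2) in the padded image."""
    cx, cy, bw, bh = box
    return [w * (cx - bw / 2) + padw, h * (cy - bh / 2) + padh,
            w * (cx + bw / 2) + padw, h * (cy + bh / 2) + padh]


# A 640x480 image letterboxed into a rectangular batch shape of (512, 672)
r, (dw, dh) = letterbox_params(480, 640, (512, 672))
print(r, dw, dh)
print(xywhn_to_xyxy([0.5, 0.5, 0.25, 0.5], r * 640, r * 480, dw, dh))
```

The tuple `shapes = (h0, w0), ((h / h0, w / w0), pad)` stores these numbers so that `scale_coords` can undo the padding and scaling when the metrics are computed in native image space.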

```python
    @staticmethod
    def collate_fn(batch):
        img, label, path, shapes = zip(*batch)  # transposed
        for i, l in enumerate(label):
            l[:, 0] = i  # add target image index for build_targets()
        return torch.stack(img, 0), torch.cat(label, 0), path, shapes

    @staticmethod
    def collate_fn4(batch):
        img, label, path, shapes = zip(*batch)  # transposed
        n = len(shapes) // 4
        img4, label4, path4, shapes4 = [], [], path[:n], shapes[:n]

        ho = torch.tensor([[0., 0, 0, 1, 0, 0]])
        wo = torch.tensor([[0., 0, 1, 0, 0, 0]])
        s = torch.tensor([[1, 1, .5, .5, .5, .5]])  # scale
        for i in range(n):  # zidane torch.zeros(16,3,720,1280)  # BCHW
            i *= 4
            if random.random() < 0.5:
                im = F.interpolate(img[i].unsqueeze(0).float(), scale_factor=2., mode='bilinear', align_corners=False)[
                    0].type(img[i].type())
                l = label[i]
            else:
                im = torch.cat((torch.cat((img[i], img[i + 1]), 1), torch.cat((img[i + 2], img[i + 3]), 1)), 2)
                l = torch.cat((label[i], label[i + 1] + ho, label[i + 2] + wo, label[i + 3] + ho + wo), 0) * s
            img4.append(im)
            label4.append(l)

        for i, l in enumerate(label4):
            l[:, 0] = i  # add target image index for build_targets()

        return torch.stack(img4, 0), torch.cat(label4, 0), path4, shapes4
```

Each label row has the format (image, class, x, y, w, h): `image` is the index of the image within the batch (filled in by `collate_fn`), and x, y, w, h are the normalized center coordinates, width and height of the bounding box.
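Because every image in a batch can have a different number of boxes, `collate_fn` concatenates all label rows into one tensor and uses column 0 to remember which image each row belongs to; the metric loop later selects one image's rows with `targets[targets[:, 0] == si, 1:]`. A plain-list sketch of both steps (hypothetical helper names, no torch; it builds new lists instead of modifying tensors in place):

```python
def collate_labels(per_image_labels):
    """Label part of collate_fn: put each image's batch index into column 0 and
    concatenate all rows. Input rows are [0, cls, x, y, w, h]."""
    out = []
    for i, rows in enumerate(per_image_labels):
        for row in rows:
            out.append([float(i)] + list(row[1:]))
    return out


def labels_for_image(targets, si):
    """Equivalent of targets[targets[:, 0] == si, 1:] in the metric loop."""
    return [row[1:] for row in targets if row[0] == si]


batch = [
    [[0, 17, 0.50, 0.50, 0.20, 0.20]],                                  # image 0: one box
    [],                                                                 # image 1: no boxes
    [[0, 0, 0.10, 0.10, 0.10, 0.10], [0, 2, 0.30, 0.30, 0.10, 0.10]],  # image 2: two boxes
]
targets = collate_labels(batch)
print(targets)
print(labels_for_image(targets, 2))
```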

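Image preprocessing

The image tensor is first converted from uint8 to float16 (half precision; float32 when half precision is off) and then normalized to the range [0, 1]: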

```python
img = img.half() if half else img.float()  # uint8 to fp16/32
img /= 255.0  # 0 - 255 to 0.0 - 1.0
```

Model inference

```python
out, train_out = model(img, augment=augment)  # inference and training outputs
```

Post-processing (NMS)

```python
# Run NMS
targets[:, 2:] *= torch.Tensor([width, height, width, height]).to(device)  # to pixels
lb = [targets[targets[:, 0] == i, 1:] for i in range(nb)] if save_hybrid else []  # for autolabelling
out = non_max_suppression(out, conf_thres=conf_thres, iou_thres=iou_thres, labels=lb, multi_label=True)
```

Computing performance metrics

```python
# Statistics per image
for si, pred in enumerate(out):
    labels = targets[targets[:, 0] == si, 1:]
    nl = len(labels)
    tcls = labels[:, 0].tolist() if nl else []  # target class
    path = Path(paths[si])
    seen += 1

    if len(pred) == 0:
        if nl:
            stats.append((torch.zeros(0, niou, dtype=torch.bool), torch.Tensor(), torch.Tensor(), tcls))
        continue

    # Predictions
    predn = pred.clone()
    scale_coords(img[si].shape[1:], predn[:, :4], shapes[si][0], shapes[si][1])  # native-space pred

    # Append to text file
    if save_txt:
        gn = torch.tensor(shapes[si][0])[[1, 0, 1, 0]]  # normalization gain whwh
        for *xyxy, conf, cls in predn.tolist():
            xywh = (xyxy2xywh(torch.tensor(xyxy).view(1, 4)) / gn).view(-1).tolist()  # normalized xywh
            line = (cls, *xywh, conf) if save_conf else (cls, *xywh)  # label format
            with open(save_dir / 'labels' / (path.stem + '.txt'), 'a') as f:
                f.write(('%g ' * len(line)).rstrip() % line + '\n')

    # W&B logging - Media Panel Plots
    if len(wandb_images) < log_imgs and wandb_logger.current_epoch > 0:  # Check for test operation
        if wandb_logger.current_epoch % wandb_logger.bbox_interval == 0:
            box_data = [{"position": {"minX": xyxy[0], "minY": xyxy[1], "maxX": xyxy[2], "maxY": xyxy[3]},
                         "class_id": int(cls),
                         "box_caption": "%s %.3f" % (names[cls], conf),
                         "scores": {"class_score": conf},
                         "domain": "pixel"} for *xyxy, conf, cls in pred.tolist()]
            boxes = {"predictions": {"box_data": box_data, "class_labels": names}}  # inference-space
            wandb_images.append(wandb_logger.wandb.Image(img[si], boxes=boxes, caption=path.name))
    wandb_logger.log_training_progress(predn, path, names) if wandb_logger and wandb_logger.wandb_run else None

    # Append to pycocotools JSON dictionary
    if save_json:
        # [{"image_id": 42, "category_id": 18, "bbox": [258.15, 41.29, 348.26, 243.78], "score": 0.236}, ...
        image_id = int(path.stem) if path.stem.isnumeric() else path.stem
        box = xyxy2xywh(predn[:, :4])  # xywh
        box[:, :2] -= box[:, 2:] / 2  # xy center to top-left corner
        for p, b in zip(pred.tolist(), box.tolist()):
            jdict.append({'image_id': image_id,
                          'category_id': coco91class[int(p[5])] if is_coco else int(p[5]),
                          'bbox': [round(x, 3) for x in b],
                          'score': round(p[4], 5)})
```
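COCO's results format expects `bbox` as `[x_min, y_min, width, height]`, while the predictions are corner boxes `(x1, y1, x2, y2)`; the JSON branch therefore converts to center format with `xyxy2xywh` and then shifts the center to the top-left corner. A pure-Python sketch of one entry (hypothetical helper names; `category_map` stands in for `coco91class`, which maps the 80 training classes to COCO category ids, for example class 0 (person) to id 1):

```python
def xyxy_to_coco_bbox(x1, y1, x2, y2):
    """(x1, y1, x2, y2) -> COCO [x_min, y_min, width, height], via the center like test.py."""
    w, h = x2 - x1, y2 - y1
    cx, cy = x1 + w / 2, y1 + h / 2                                  # what xyxy2xywh returns
    return [round(v, 3) for v in (cx - w / 2, cy - h / 2, w, h)]     # center -> top-left corner


def to_json_entry(image_stem, pred, category_map=None):
    """pred is one NMS row (x1, y1, x2, y2, conf, cls) already in native image pixels."""
    image_id = int(image_stem) if image_stem.isnumeric() else image_stem
    *xyxy, conf, cls = pred
    category_id = category_map[int(cls)] if category_map else int(cls)
    return {'image_id': image_id, 'category_id': category_id,
            'bbox': xyxy_to_coco_bbox(*xyxy), 'score': round(conf, 5)}


print(to_json_entry('000000000139', [100.0, 50.0, 300.0, 250.0, 0.87654321, 0.0], {0: 1}))
```

In test.py the box comes from `predn` (native-space) while score and class come from `pred`; the sketch assumes a single row that is already in native space.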

```python
    # (continued: still inside "for si, pred in enumerate(out):")
    # Assign all predictions as incorrect
    correct = torch.zeros(pred.shape[0], niou, dtype=torch.bool, device=device)
    if nl:
        detected = []  # target indices
        tcls_tensor = labels[:, 0]

        # target boxes
        tbox = xywh2xyxy(labels[:, 1:5])
        scale_coords(img[si].shape[1:], tbox, shapes[si][0], shapes[si][1])  # native-space labels
        if plots:
            confusion_matrix.process_batch(predn, torch.cat((labels[:, 0:1], tbox), 1))

        # Per target class
        for cls in torch.unique(tcls_tensor):
            ti = (cls == tcls_tensor).nonzero(as_tuple=False).view(-1)  # target indices
            pi = (cls == pred[:, 5]).nonzero(as_tuple=False).view(-1)  # prediction indices

            # Search for detections
            if pi.shape[0]:
                # Prediction to target ious
                ious, i = box_iou(predn[pi, :4], tbox[ti]).max(1)  # best ious, indices

                # Append detections
                detected_set = set()
                for j in (ious > iouv[0]).nonzero(as_tuple=False):
                    d = ti[i[j]]  # detected target
                    if d.item() not in detected_set:
                        detected_set.add(d.item())
                        detected.append(d)
                        correct[pi[j]] = ious[j] > iouv  # iou_thres is 1xn
                        if len(detected) == nl:  # all targets already located in image
                            break

    # Append statistics (correct, conf, pcls, tcls)
    stats.append((correct.cpu(), pred[:, 4].cpu(), pred[:, 5].cpu(), tcls))
```

If you don't want to use wandb, uninstall it:

```shell
pip uninstall wandb
```
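Returning to the statistics loop: the per-class block is what turns raw detections into the `correct` matrix used for mAP@0.5:0.95. Each prediction (in NMS output order, i.e. highest confidence first) is paired with its best-IoU ground-truth box of the same class; if that IoU exceeds 0.5 and the box has not been claimed yet, the prediction's row records, for each of the ten `iouv` thresholds, whether the IoU exceeds it. A prediction whose best box is already taken is not re-matched to another box. The dependency-free sketch below follows that logic with plain tuples and hypothetical names; it omits the original's early `break`, which only skips work and does not change the result.

```python
def box_iou(a, b):
    """IoU of two (x1, y1, x2, y2) boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union


IOUV = [round(0.5 + 0.05 * k, 2) for k in range(10)]  # 0.50, 0.55, ..., 0.95


def match_predictions(preds, targets):
    """preds: (x1, y1, x2, y2, conf, cls) rows sorted by descending conf (NMS order).
    targets: (cls, x1, y1, x2, y2) rows. Returns one list of len(IOUV) booleans per prediction."""
    correct = [[False] * len(IOUV) for _ in preds]  # assume every prediction is wrong
    for cls in sorted({t[0] for t in targets}):
        ti = [k for k, t in enumerate(targets) if t[0] == cls]  # target indices
        pi = [k for k, p in enumerate(preds) if p[5] == cls]    # prediction indices
        detected = set()
        for p in pi:
            ious = [box_iou(preds[p][:4], targets[t][1:]) for t in ti]
            best = max(range(len(ti)), key=ious.__getitem__)    # best-IoU target of this class
            if ious[best] > IOUV[0] and ti[best] not in detected:
                detected.add(ti[best])                           # each target matches at most once
                correct[p] = [ious[best] > thr for thr in IOUV]
    return correct


preds = [(0, 0, 10, 7.8, 0.9, 0),   # IoU 0.78 with the target
         (0, 0, 10, 10, 0.8, 0),    # perfect box, but the target is already taken
         (0, 0, 10, 10, 0.7, 1)]    # class 1 has no ground truth
targets = [(0, 0, 0, 10, 10)]
for row in match_predictions(preds, targets):
    print(row)
```

The second prediction shows why confidence order matters: the lower-scored but perfect box counts as a false positive at every threshold because the higher-scored box claimed the target first.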
