1. 问题背景

用spark-sql,insert overwrite分区表时发现两个比较麻烦的问题:

- 从目标表select出来再insert overwrite目标表时报错:Error in query: Cannot overwrite a path that is also being read from.

- 从其他表select出来再insert overwrite目标表时,其他分区都被删除了.

2. 问题描述

2.1 代码示例

drop table pt_table_test1;

create table pt_table_test1 (

id int,

region string,

dt string

) using parquet

partitioned by (region, dt)

;

drop table pt_table_test2;

create table pt_table_test2 (

id int,

region string,

dt string

) using parquet

partitioned by (region, dt)

;

set hive.exec.dynamic.partition =true;

set hive.exec.dynamic.partition.mode = nonstrict;

truncate table pt_table_test1;

insert into table pt_table_test1 values (1,'id', '2022-10-01'),(2,'id', '2022-10-02'),(3,'ph', '2022-10-03'),(1,'sg', '2022-10-01'),(2,'sg', '2022-10-02'),(3,'ph', '2022-10-03');

select * from pt_table_test1;

insert overwrite table pt_table_test1 select * from pt_table_test1 where dt = '2022-10-01';

select * from pt_table_test1;

truncate table pt_table_test2;

insert into table pt_table_test2 values (2,'id', '2022-10-01'),(2,'id', '2022-10-02'),(2,'sg', '2022-10-01'),(2,'sg', '2022-10-02');

insert overwrite table pt_table_test1 select * from pt_table_test2 where id = 2;

select * from pt_table_test1;2.2 错误演示

3. 解决方法

印象中这两个问题也出现过,但凭经验和感觉,应该可以解决。找到以前正常运行的表,对比分析了下,发现是建表方式不一致问题:

- 错误建表,指定表的文件格式:using parquet

- 正确姿势,指定表的文件格式:stored as parquet

3.1 示例代码

drop table pt_table_test1;

create table pt_table_test1 (

id int,

region string,

dt string

) stored as parquet

partitioned by (region, dt)

;

drop table pt_table_test2;

create table pt_table_test2 (

id int,

region string,

dt string

) stored as parquet

partitioned by (region, dt)

;

set hive.exec.dynamic.partition =true;

set hive.exec.dynamic.partition.mode = nonstrict;

truncate table pt_table_test1;

insert into table pt_table_test1 values (1,'id', '2022-10-01'),(1,'id', '2022-10-02'),(1,'ph', '2022-10-03'),(1,'sg', '2022-10-01'),(1,'sg', '2022-10-02'),(1,'ph', '2022-10-03');

select * from pt_table_test1;

insert overwrite table pt_table_test1 select * from pt_table_test1 where dt = '2022-10-01';

select * from pt_table_test1;

truncate table pt_table_test2;

insert into table pt_table_test2 values (2,'id', '2022-10-01'),(2,'id', '2022-10-02'),(2,'sg', '2022-10-01'),(2,'sg', '2022-10-02');

insert overwrite table pt_table_test1 select * from pt_table_test2 where id = 2;

select * from pt_table_test1;3.2 正确演示

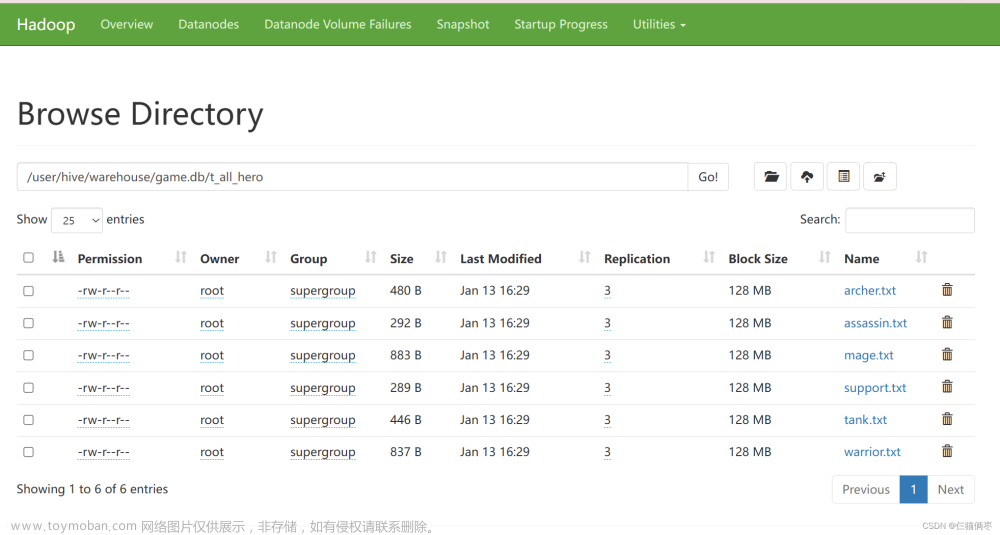

4. using parqnet和stored as parquet

对比两种建表:文章来源:https://www.toymoban.com/news/detail-510028.html

文章来源地址https://www.toymoban.com/news/detail-510028.html

文章来源地址https://www.toymoban.com/news/detail-510028.html

- 建表无论是using parquet还是stored as parquet,执行show create table都显示: USING parquet。

- stored as parquet时,执行show create table,新增了TBLPROPERTIES属性。

到了这里,关于spark-sql: insert overwrite分区表问题的文章就介绍完了。如果您还想了解更多内容,请在右上角搜索TOY模板网以前的文章或继续浏览下面的相关文章,希望大家以后多多支持TOY模板网!