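Contents

Environment Setup

Black-and-White Photo Colorization

Text-to-Image: Stable Diffusion

Text-to-Image: DreamBooth

Image-to-Image: ControlNet Canny

Image-to-Image: ControlNet Pose

Image-to-Image: ControlNet Animation

Training Your Own ControlNet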

Environment Setup

mim install mmagic
pip install opencv-python pillow matplotlib seaborn tqdm -i https://pypi.tuna.tsinghua.edu.cn/simple
pip install clip transformers gradio 'httpx[socks]' diffusers==0.14.0 -i https://pypi.tuna.tsinghua.edu.cn/simple
mim install 'mmdet>=3.0.0'

# Check PyTorch

import torch, torchvision
print('PyTorch version:', torch.__version__)
print('CUDA available:', torch.cuda.is_available())

# Check MMCV
import mmcv
from mmcv.ops import get_compiling_cuda_version, get_compiler_version
print('MMCV version:', mmcv.__version__)
print('CUDA version:', get_compiling_cuda_version())
print('Compiler version:', get_compiler_version())
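The import-based checks in this section stop at the first package that is missing. As a complementary sketch, the standard library's `importlib.metadata` can report every version in one pass without importing anything. The helper name `report_versions` is hypothetical, and the distribution names in the list are assumptions taken from the install commands in this section.

```python
from importlib import metadata


def report_versions(packages):
    """Map each distribution name to its installed version, or None if absent."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


if __name__ == '__main__':
    # Assumed distribution names; adjust them if your environment differs.
    wanted = ['torch', 'torchvision', 'mmcv', 'mmagic', 'mmdet', 'diffusers']
    for name, version in report_versions(wanted).items():
        print(f'{name}: {version or "not installed"}')
```

This only reads installed package metadata, so it cannot tell you whether CUDA is usable; the `torch.cuda.is_available()` check is still needed for that.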

# Check MMagic
import mmagic
print('MMagic version:', mmagic.__version__)

Black-and-White Photo Colorization

Download the sample image.

python demo/mmagic_inference_demo.py --model-name inst_colorization --img data/test_colorization.jpg --result-out-dir outputs/out_colorization.png

Test result:

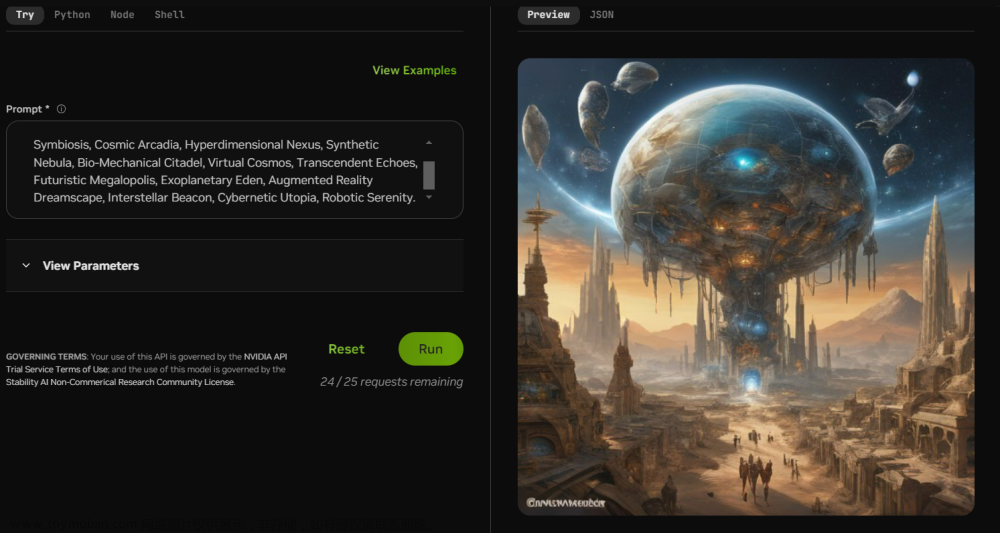
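The same colorization run can also be driven from Python rather than the demo script. The sketch below assumes that `MMagicInferencer.infer` accepts `img` and `result_out_dir` keyword arguments matching the `--img` and `--result-out-dir` flags of the command-line demo. The helper names `colorization_kwargs` and `colorize` are hypothetical.

```python
from pathlib import Path


def colorization_kwargs(img, out_dir='outputs'):
    """Build keyword arguments mirroring --img and --result-out-dir."""
    return {
        'img': str(img),
        'result_out_dir': str(Path(out_dir) / 'out_colorization.png'),
    }


def colorize(img, out_dir='outputs'):
    """Run instance-aware colorization on one image (requires MMagic)."""
    # Imported lazily so colorization_kwargs works without MMagic installed.
    from mmagic.apis import MMagicInferencer

    inferencer = MMagicInferencer(model_name='inst_colorization')
    return inferencer.infer(**colorization_kwargs(img, out_dir))
```

Calling `colorize('data/test_colorization.jpg')` should then be roughly equivalent to the shell command shown earlier.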

Text-to-Image: Stable Diffusion

from mmagic.apis import MMagicInferencer

# Load the Stable Diffusion model
sd_inferencer = MMagicInferencer(model_name='stable_diffusion')

# Specify the text prompt (the second assignment overrides the first; keep the one you want)
text_prompts = 'A panda is having dinner at KFC'
text_prompts = 'A Persian cat walking in the streets of New York'

# Run inference
sd_inferencer.infer(text=text_prompts, result_out_dir='outputs/sd_res.png')
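The Stable Diffusion snippet assigns `text_prompts` twice, so only the last prompt is rendered, and a fixed output path would be overwritten on every run. A minimal sketch for rendering several prompts to separate files follows. The helpers `prompt_to_path` and `generate_all` are hypothetical, and the file-naming scheme is just one possible choice.

```python
import re
from pathlib import Path


def prompt_to_path(prompt, out_dir='outputs'):
    """Derive a distinct output file name from the prompt text."""
    # Lowercase, collapse non-alphanumeric runs to '_', and cap the length.
    slug = re.sub(r'[^a-z0-9]+', '_', prompt.lower())[:40].strip('_')
    return Path(out_dir) / f'sd_{slug}.png'


def generate_all(prompts, out_dir='outputs'):
    """Render every prompt with one loaded model (requires MMagic)."""
    # Imported lazily so prompt_to_path works without MMagic installed.
    from mmagic.apis import MMagicInferencer

    sd_inferencer = MMagicInferencer(model_name='stable_diffusion')
    for prompt in prompts:
        out_path = prompt_to_path(prompt, out_dir)
        sd_inferencer.infer(text=prompt, result_out_dir=str(out_path))
```

Loading the model once and looping over the prompts avoids paying the model start-up cost for each image.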

Text-to-Image: DreamBooth

Train DreamBooth on a dataset (dataset download link):

python .\tools\train.py .\configs\dreambooth\dreambooth-lora.py

Run inference with the trained model:

import torch
from mmengine import Config

from mmagic.registry import MODELS
from mmagic.utils import register_all_modules

register_all_modules()

cfg = Config.fromfile('configs/dreambooth/dreambooth-lora.py')
dreambooth_lora = MODELS.build(cfg.model)
state = torch.load('work_dirs/dreambooth-lora/iter_1000.pth')['state_dict']

def convert_state_dict(state):
    # Rename checkpoint keys so that they match the freshly built model
    state_dict_new = {}
    for k, v in state.items():
        # Strip the '.module' wrapper segment from the key
        if '.module' in k:
            k_new = k.replace('.module', '')
        else:
            k_new = k
        # Rename the VAE attention projections
        if 'vae' in k:
            if 'to_q' in k:
                k_new = k.replace('to_q', 'query')
            elif 'to_k' in k:
                k_new = k.replace('to_k', 'key')
            elif 'to_v' in k:
                k_new = k.replace('to_v', 'value')
            elif 'to_out' in k:
                k_new = k.replace('to_out.0', 'proj_attn')
        state_dict_new[k_new] = v
    return state_dict_new
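To see what `convert_state_dict` does to checkpoint keys, the sketch below runs a copy of the function on a toy dictionary. The key names and string values are made up for illustration and are not taken from a real checkpoint; a real state dict holds tensors. The renaming of the VAE projections presumably matches the attention-layer names used by the model built under the pinned `diffusers` version, but that is an assumption.

```python
def convert_state_dict(state):
    """Copy of the tutorial's key-renaming function."""
    state_dict_new = {}
    for k, v in state.items():
        if '.module' in k:
            k_new = k.replace('.module', '')
        else:
            k_new = k
        if 'vae' in k:
            if 'to_q' in k:
                k_new = k.replace('to_q', 'query')
            elif 'to_k' in k:
                k_new = k.replace('to_k', 'key')
            elif 'to_v' in k:
                k_new = k.replace('to_v', 'value')
            elif 'to_out' in k:
                k_new = k.replace('to_out.0', 'proj_attn')
        state_dict_new[k_new] = v
    return state_dict_new


# Hypothetical keys; placeholder strings stand in for tensors.
toy_state = {
    'unet.module.conv_in.weight': 'w0',
    'unet.down.attn.to_q.weight': 'w1',
    'vae.mid.attn.to_q.weight': 'w2',
    'vae.mid.attn.to_out.0.bias': 'w3',
}

for old, new in zip(toy_state, convert_state_dict(toy_state)):
    print(f'{old} -> {new}')
```

Only keys containing `vae` have their attention projections renamed, so the UNet `to_q` key passes through unchanged.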

dreambooth_lora.load_state_dict(convert_state_dict(state))
dreambooth_lora = dreambooth_lora.cuda()

samples = dreambooth_lora.infer('side view of sks dog', guidance_scale=5)
samples = dreambooth_lora.infer('ear close-up of sks dog', guidance_scale=5)

Image-to-Image: ControlNet Canny

import cv2
import numpy as np
import mmcv
from mmengine import Config
from PIL import Image

from mmagic.registry import MODELS
from mmagic.utils import register_all_modules

register_all_modules()

# Load the ControlNet model
cfg = Config.fromfile('configs/controlnet/controlnet-canny.py')
controlnet = MODELS.build(cfg.model).cuda()

# Build the Canny edge map used as the control input
control_url = 'https://user-images.githubusercontent.com/28132635/230288866-99603172-04cb-47b3-8adb-d1aa532d1d2c.jpg'
control_img = mmcv.imread(control_url)
control = cv2.Canny(control_img, 100, 200)
control = control[:, :, None]
control = np.concatenate([control] * 3, axis=2)
control = Image.fromarray(control)
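The two NumPy lines that follow `cv2.Canny` turn a single-channel edge map into a three-channel image before it is wrapped in a PIL image. The sketch below shows just that shape change on a small synthetic array, which stands in for a real Canny output (an assumption made so that the example runs without OpenCV or a downloaded image).

```python
import numpy as np

# Stand-in for cv2.Canny output: a 2-D uint8 map, 0 = no edge, 255 = edge.
edges = np.zeros((4, 6), dtype=np.uint8)
edges[1, 2:5] = 255

# (H, W) -> (H, W, 1): add a trailing channel axis.
control = edges[:, :, None]

# (H, W, 1) -> (H, W, 3): repeat the same map in all three channels.
control = np.concatenate([control] * 3, axis=2)

print(edges.shape, '->', control.shape)
```

All three channels carry identical values, so the result is still a grayscale edge image, only stored in an RGB-shaped array.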

# Prompt
prompt = 'Room with blue walls and a yellow ceiling.'

# Run inference
output_dict = controlnet.infer(prompt, control=control)

samples = output_dict['samples']
for idx, sample in enumerate(samples):
    sample.save(f'sample_{idx}.png')

controls = output_dict['controls']
for idx, control in enumerate(controls):
    control.save(f'control_{idx}.png')

Image-to-Image: ControlNet Pose

import mmcv
from mmengine import Config
from PIL import Image

from mmagic.registry import MODELS
from mmagic.utils import register_all_modules

register_all_modules()

# Load the ControlNet model
cfg = Config.fromfile('configs/controlnet/controlnet-pose.py')

# Convert ControlNet's weights from SD-v1.5 to Counterfeit-v2.5
cfg.model.unet.from_pretrained = 'gsdf/Counterfeit-V2.5'
cfg.model.vae.from_pretrained = 'gsdf/Counterfeit-V2.5'
cfg.model.init_cfg['type'] = 'convert_from_unet'

controlnet = MODELS.build(cfg.model).cuda()

# Call init_weights manually to convert the weights
controlnet.init_weights()

# Prompt
prompt = 'masterpiece, best quality, sky, black hair, skirt, sailor collar, looking at viewer, short hair, building, bangs, neckerchief, long sleeves, cloudy sky, power lines, shirt, cityscape, pleated skirt, scenery, blunt bangs, city, night, black sailor collar, closed mouth'

# Input pose image
control_url = 'https://user-images.githubusercontent.com/28132635/230380893-2eae68af-d610-4f7f-aa68-c2f22c2abf7e.png'
control_img = mmcv.imread(control_url)
control = Image.fromarray(control_img)
control.save('control.png')

# Run inference
output_dict = controlnet.infer(prompt, control=control, width=512, height=512, guidance_scale=7.5)

samples = output_dict['samples']
for idx, sample in enumerate(samples):
    sample.save(f'sample_{idx}.png')

controls = output_dict['controls']
for idx, control in enumerate(controls):
    control.save(f'control_{idx}.png')

Image-to-Image: ControlNet Animation

Option 1: Gradio from the command line

python .\demo\gradio_controlnet_animation.py

Option 2: MMagic API

# Import the inference API
from mmagic.apis import MMagicInferencer

# Create an MMagicInferencer instance
editor = MMagicInferencer(model_name='controlnet_animation')

# Specify the prompt and the negative prompt
prompt = 'a girl, black hair, T-shirt, smoking, best quality, extremely detailed'
negative_prompt = 'longbody, lowres, bad anatomy, bad hands, missing fingers, extra digit, fewer digits, cropped, worst quality, low quality'

# Input video
# https://user-images.githubusercontent.com/12782558/227418400-80ad9123-7f8e-4c1a-8e19-0892ebad2a4f.mp4
video = '../run_forrest_frames_rename_resized.mp4'
save_path = '../output_video.mp4'

# Run inference
editor.infer(video=video, prompt=prompt, image_width=512, image_height=512, negative_prompt=negative_prompt, save_path=save_path)

Training Your Own ControlNet

Download the dataset.

python .\tools\train.py .\configs\controlnet\controlnet-1xb1-fill50k.py
