1. Install ZooKeeper with Docker

1. Pull the image

docker pull zookeeper

2. Run the container

docker run -d -e TZ="Asia/Shanghai" -p 2181:2181 -v /home/sunyuhua/docker/zookeeper:/data --name zookeeper --restart always zookeeper

3. Check that the container is running by opening a shell in it and starting the ZooKeeper CLI

docker exec -it zookeeper bash

./bin/zkCli.sh
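The same check can be scripted from the host without entering the container. The sketch below is one possible approach, not part of the original setup: it sends ZooKeeper's `srvr` four-letter command over a plain TCP socket and returns the reply. It assumes `srvr` is enabled on the server (recent ZooKeeper releases whitelist it by default, but verify this for your version); the function name `zk_four_letter` is made up for illustration.

```python
import socket


def zk_four_letter(host: str, port: int, command: bytes = b"srvr",
                   timeout: float = 3.0) -> str:
    """Send a ZooKeeper four-letter command and return the raw text reply.

    Assumption: the command is whitelisted on the server; otherwise the
    reply is an error message rather than server statistics.
    """
    with socket.create_connection((host, port), timeout=timeout) as sock:
        sock.sendall(command)
        chunks = []
        while True:
            data = sock.recv(4096)
            if not data:  # the server closes the connection after replying
                break
            chunks.append(data)
    return b"".join(chunks).decode("utf-8", errors="replace")
```

With the `-p 2181:2181` mapping used above, `print(zk_four_letter("127.0.0.1", 2181))` should show the server version and a `Mode:` line when ZooKeeper is healthy, and raise `OSError` when the port is unreachable.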

2. Install shardingsphere-proxy with Docker

1. Copy the default configuration out of the image

docker run -d --name tmp --entrypoint=bash apache/shardingsphere-proxy

docker cp tmp:/opt/shardingsphere-proxy/conf /home/sunyuhua/docker/shardingsphere-proxy/conf

docker rm tmp

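2. Start shardingsphere-proxy

The command below attaches the container to a user-defined network named sharding-network, which must exist before the container starts. Create it first if it does not exist yet:

docker network create sharding-network

docker run -d -v /home/sunyuhua/docker/shardingsphere-proxy/conf:/opt/shardingsphere-proxy/conf -v /home/sunyuhua/docker/shardingsphere-proxy/ext-lib:/opt/shardingsphere-proxy/ext-lib -v /home/sunyuhua/docker/shardingsphere-proxy/logs:/opt/shardingsphere-proxy/logs -p 3307:3307 --name sharding-proxy --restart always --network sharding-network apache/shardingsphere-proxy

3. Check that shardingsphere-proxy started successfully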

docker logs sharding-proxy

3. Install PostgreSQL 13 with Docker

1. Pull the image

docker pull postgres:13

2. Run the container

docker run -d --name postgres -e POSTGRES_USER=postgres -e POSTGRES_PASSWORD=postgres -v /home/sunyuhua/docker/postgresql/data:/var/lib/postgresql/data -v /etc/localtime:/etc/localtime --restart=always -p 5432:5432 postgres:13

3. Open a shell in the container to check that it is running

docker exec -it postgres /bin/bash

4. Create a query user and grant it privileges

psql -U postgres

CREATE ROLE sa WITH SUPERUSER LOGIN PASSWORD 'boKYLXIERWnzB0gX';

grant all PRIVILEGES on database postgres to sa;

grant all PRIVILEGES on all tables in schema public TO sa;

ALTER ROLE sa CREATEROLE SUPERUSER;

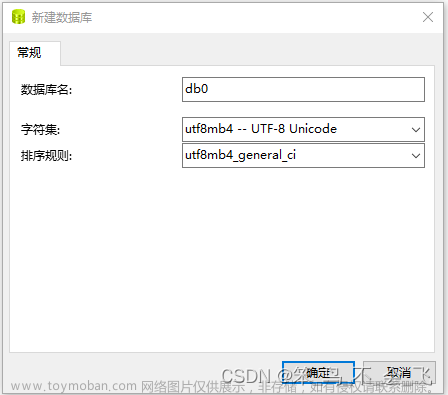

4. Prepare the database table structures

PostgreSQL requires index names to be unique within a schema, so each index name below carries enough of its table name to stay distinct. Both databases get the same tables.

CREATE DATABASE demo_ds_0;

\c demo_ds_0;

CREATE TABLE t_order_0 (
    order_id BIGINT NOT NULL,
    user_id BIGINT NOT NULL,
    total_money INT NOT NULL,
    PRIMARY KEY (order_id)
);

CREATE INDEX idx_user_id_0 ON t_order_0 (user_id);

CREATE TABLE t_order_1 (
    order_id BIGINT NOT NULL,
    user_id BIGINT NOT NULL,
    total_money INT NOT NULL,
    PRIMARY KEY (order_id)
);

CREATE INDEX idx_user_id_1 ON t_order_1 (user_id);

CREATE TABLE t_order_item_0 (
    order_item_id BIGINT NOT NULL,
    order_id BIGINT NOT NULL,
    user_id BIGINT NOT NULL,
    money INT NOT NULL,
    PRIMARY KEY (order_item_id)
);

CREATE INDEX idx_order_id_0 ON t_order_item_0 (order_id);

CREATE INDEX idx_item_user_id_0 ON t_order_item_0 (user_id);

CREATE TABLE t_order_item_1 (
    order_item_id BIGINT NOT NULL,
    order_id BIGINT NOT NULL,
    user_id BIGINT NOT NULL,
    money INT NOT NULL,
    PRIMARY KEY (order_item_id)
);

CREATE INDEX idx_order_id_1 ON t_order_item_1 (order_id);

CREATE INDEX idx_item_user_id_1 ON t_order_item_1 (user_id);

CREATE DATABASE demo_ds_1;

\c demo_ds_1;

CREATE TABLE t_order_0 (
    order_id BIGINT NOT NULL,
    user_id BIGINT NOT NULL,
    total_money INT NOT NULL,
    PRIMARY KEY (order_id)
);

CREATE INDEX idx_user_id_0 ON t_order_0 (user_id);

CREATE TABLE t_order_1 (
    order_id BIGINT NOT NULL,
    user_id BIGINT NOT NULL,
    total_money INT NOT NULL,
    PRIMARY KEY (order_id)
);

CREATE INDEX idx_user_id_1 ON t_order_1 (user_id);

CREATE TABLE t_order_item_0 (
    order_item_id BIGINT NOT NULL,
    order_id BIGINT NOT NULL,
    user_id BIGINT NOT NULL,
    money INT NOT NULL,
    PRIMARY KEY (order_item_id)
);

CREATE INDEX idx_order_id_0 ON t_order_item_0 (order_id);

CREATE INDEX idx_item_user_id_0 ON t_order_item_0 (user_id);

CREATE TABLE t_order_item_1 (
    order_item_id BIGINT NOT NULL,
    order_id BIGINT NOT NULL,
    user_id BIGINT NOT NULL,
    money INT NOT NULL,
    PRIMARY KEY (order_item_id)
);

CREATE INDEX idx_order_id_1 ON t_order_item_1 (order_id);

CREATE INDEX idx_item_user_id_1 ON t_order_item_1 (user_id);
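Because every physical database holds the same set of tables, the statements can also be generated rather than written by hand. The following is only a sketch of that idea: the function name `shard_ddl` and the index naming scheme (`idx_<table>_<column>`) are assumptions chosen so that no two indexes in one schema share a name, which PostgreSQL does not allow.

```python
from typing import List

# Column definitions copied from the tables in this section.
ORDER_COLUMNS = (
    "    order_id BIGINT NOT NULL,\n"
    "    user_id BIGINT NOT NULL,\n"
    "    total_money INT NOT NULL,\n"
    "    PRIMARY KEY (order_id)\n"
)
ORDER_ITEM_COLUMNS = (
    "    order_item_id BIGINT NOT NULL,\n"
    "    order_id BIGINT NOT NULL,\n"
    "    user_id BIGINT NOT NULL,\n"
    "    money INT NOT NULL,\n"
    "    PRIMARY KEY (order_item_id)\n"
)


def shard_ddl(table_shards: int = 2) -> List[str]:
    """Return the DDL statements for ONE physical database.

    Index names embed the full table name (hypothetical naming scheme),
    so they cannot collide inside the public schema.
    """
    statements = []
    for n in range(table_shards):
        order, item = f"t_order_{n}", f"t_order_item_{n}"
        statements.append(f"CREATE TABLE {order} (\n{ORDER_COLUMNS});")
        statements.append(f"CREATE INDEX idx_{order}_user_id ON {order} (user_id);")
        statements.append(f"CREATE TABLE {item} (\n{ORDER_ITEM_COLUMNS});")
        statements.append(f"CREATE INDEX idx_{item}_order_id ON {item} (order_id);")
        statements.append(f"CREATE INDEX idx_{item}_user_id ON {item} (user_id);")
    return statements
```

Printing `"\n\n".join(shard_ddl())` gives a script that can be pasted into psql after connecting to demo_ds_0 and again after connecting to demo_ds_1.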

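5. Configure the sharding strategy for shardingsphere-proxy

Edit server.yaml, located in /home/sunyuhua/docker/shardingsphere-proxy/conf. The configuration reaches ZooKeeper through host.docker.internal, which Docker Desktop resolves automatically; on a plain Linux Docker Engine the proxy container may need to be started with --add-host=host.docker.internal:host-gateway.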

mode:
  type: Cluster
  repository:
    type: ZooKeeper
    props:
      namespace: governance_ds
      server-lists: host.docker.internal:2181
      retryIntervalMilliseconds: 500
      timeToLiveSeconds: 60
      maxRetries: 3
      operationTimeoutMilliseconds: 500
#  overwrite: false
#
rules:
  - !AUTHORITY
    users:
      - root@%:root
      - sharding@:sharding
    provider:
      type: ALL_PRIVILEGES_PERMITTED
#  - !TRANSACTION
#    defaultType: XA
#    providerType: Atomikos

props:
#  max-connections-size-per-query: 1
#  kernel-executor-size: 16  # Infinite by default.
#  proxy-frontend-flush-threshold: 128  # The default value is 128.
#  proxy-opentracing-enabled: false
#  proxy-hint-enabled: false
  sql-show: true
#  check-table-metadata-enabled: false
#  show-process-list-enabled: false
#    # Proxy backend query fetch size. A larger value may increase the memory usage of ShardingSphere Proxy.
#    # The default value is -1, which means set the minimum value for different JDBC drivers.
#  proxy-backend-query-fetch-size: -1
#  check-duplicate-table-enabled: false
#  sql-comment-parse-enabled: false
#  proxy-frontend-executor-size: 0 # Proxy frontend executor size. The default value is 0, which means let Netty decide.
#    # Available options of proxy backend executor suitable: OLAP(default), OLTP. The OLTP option may reduce time cost of writing packets to client, but it may increase the latency of SQL execution
#    # if client connections are more than proxy-frontend-netty-executor-size, especially executing slow SQL.
#  proxy-backend-executor-suitable: OLAP
#  proxy-frontend-max-connections: 0 # Less than or equal to 0 means no limitation.
#  sql-federation-enabled: false

Edit config-sharding.yaml, located in /home/sunyuhua/docker/shardingsphere-proxy/conf

schemaName: sharding_db_postgres

dataSources:
  ds_0:
    url: jdbc:postgresql://host.docker.internal:5432/demo_ds_0
    username: sa
    password: boKYLXIERWnzB0gX
    connectionTimeoutMilliseconds: 30000
    idleTimeoutMilliseconds: 60000
    maxLifetimeMilliseconds: 1800000
    maxPoolSize: 50
    minPoolSize: 1
  ds_1:
    url: jdbc:postgresql://host.docker.internal:5432/demo_ds_1
    username: sa
    password: boKYLXIERWnzB0gX
    connectionTimeoutMilliseconds: 30000
    idleTimeoutMilliseconds: 60000
    maxLifetimeMilliseconds: 1800000
    maxPoolSize: 50
    minPoolSize: 1

rules:
- !SHARDING
  tables:
    t_order:
      actualDataNodes: ds_${0..1}.t_order_${0..1}
      tableStrategy:
        standard:
          shardingColumn: order_id
          shardingAlgorithmName: t_order_inline
      keyGenerateStrategy:
        column: order_id
        keyGeneratorName: snowflake
    t_order_item:
      actualDataNodes: ds_${0..1}.t_order_item_${0..1}
      tableStrategy:
        standard:
          shardingColumn: order_id
          shardingAlgorithmName: t_order_item_inline
      keyGenerateStrategy:
        column: order_item_id
        keyGeneratorName: snowflake
  bindingTables:
    - t_order,t_order_item
  defaultDatabaseStrategy:
    standard:
      shardingColumn: user_id
      shardingAlgorithmName: database_inline
  defaultTableStrategy:
    none:

  shardingAlgorithms:
    database_inline:
      type: INLINE
      props:
        algorithm-expression: ds_${user_id % 2}
    t_order_inline:
      type: INLINE
      props:
        algorithm-expression: t_order_${order_id % 2}
    t_order_item_inline:
      type: INLINE
      props:
        algorithm-expression: t_order_item_${order_id % 2}

  keyGenerators:
    snowflake:
      type: SNOWFLAKE
      props:
        worker-id: 123
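The actualDataNodes entries are compact expressions that stand for a list of physical tables, and every table in that list has to exist in the databases prepared in step 4. To make the list explicit, here is a minimal sketch that expands such an expression. It handles only the numeric `${a..b}` range form used in this configuration, not the full inline-expression syntax that ShardingSphere supports, and the function name `expand_data_nodes` is invented for illustration.

```python
import itertools
import re
from typing import List

# Matches an inclusive numeric range such as ${0..1}.
RANGE = re.compile(r"\$\{(\d+)\.\.(\d+)\}")


def expand_data_nodes(expression: str) -> List[str]:
    """Expand every ${a..b} range in the expression into concrete names."""
    # re.split with two capture groups yields: literal, lo, hi, literal, ...
    parts = RANGE.split(expression)
    literals = parts[0::3]
    ranges = [range(int(lo), int(hi) + 1)
              for lo, hi in zip(parts[1::3], parts[2::3])]
    nodes = []
    for combo in itertools.product(*ranges):
        node = literals[0]
        for value, literal in zip(combo, literals[1:]):
            node += f"{value}{literal}"
        nodes.append(node)
    return nodes


for node in expand_data_nodes("ds_${0..1}.t_order_${0..1}"):
    print(node)
# prints:
# ds_0.t_order_0
# ds_0.t_order_1
# ds_1.t_order_0
# ds_1.t_order_1
```

The four names printed for t_order, plus the four that `ds_${0..1}.t_order_item_${0..1}` expands to, are exactly the eight tables created earlier.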

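Restart shardingsphere-proxy

docker restart sharding-proxy

Check that shardingsphere-proxy has started

docker logs sharding-proxy

Or read the log file inside the container (the logs directory is mounted on the host at /home/sunyuhua/docker/shardingsphere-proxy/logs)

/opt/shardingsphere-proxy/logs/stdout.log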

[INFO ] 2023-06-28 05:46:54.015 [main-SendThread(host.docker.internal:2181)] org.apache.zookeeper.ClientCnxn - Session establishment complete on server host.docker.internal/192.168.65.2:2181, session id = 0x100000045ca0001, negotiated timeout = 40000

[INFO ] 2023-06-28 05:46:54.024 [main-EventThread] o.a.c.f.state.ConnectionStateManager - State change: CONNECTED

[INFO ] 2023-06-28 05:46:54.052 [main-EventThread] o.a.c.framework.imps.EnsembleTracker - New config event received: {}

[INFO ] 2023-06-28 05:46:54.052 [main-EventThread] o.a.c.framework.imps.EnsembleTracker - New config event received: {}

Thanks for using Atomikos! Evaluate http://www.atomikos.com/Main/ExtremeTransactions for advanced features and professional support

or register at http://www.atomikos.com/Main/RegisterYourDownload to disable this message and receive FREE tips & advice

[INFO ] 2023-06-28 05:46:56.075 [main] o.apache.curator.utils.Compatibility - Using org.apache.zookeeper.server.quorum.MultipleAddresses

[INFO ] 2023-06-28 05:46:56.093 [main] o.a.s.p.i.BootstrapInitializer - Database name is `MySQL`, version is `5.7.42-log`

[INFO ] 2023-06-28 05:46:56.283 [main] o.a.s.p.frontend.ShardingSphereProxy - ShardingSphere-Proxy start success

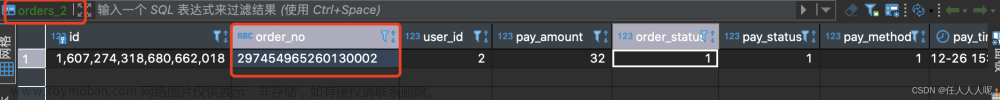
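Reading the log by eye works, but the check is easy to script. The sketch below looks for the "ShardingSphere-Proxy start success" line shown in the log above and collects any lines that start with `[ERROR]`. The function names are made up for illustration, and `read_container_logs` assumes the docker CLI is on the PATH and the container is named sharding-proxy as in this article.

```python
import subprocess
from typing import List

# Marker text taken from the startup log shown above.
SUCCESS_MARKER = "ShardingSphere-Proxy start success"


def proxy_started(log_text: str) -> bool:
    """True if the log contains the proxy's startup success line."""
    return any(SUCCESS_MARKER in line for line in log_text.splitlines())


def error_lines(log_text: str) -> List[str]:
    """Return the log lines that begin with the [ERROR] level tag."""
    return [line for line in log_text.splitlines() if line.startswith("[ERROR]")]


def read_container_logs(container: str = "sharding-proxy") -> str:
    """Fetch container logs via the docker CLI (assumed to be installed)."""
    result = subprocess.run(["docker", "logs", container],
                            capture_output=True, text=True, check=True)
    # docker logs relays the container's stdout and stderr separately.
    return result.stdout + result.stderr
```

A typical call would be `proxy_started(read_container_logs())`, followed by `error_lines(...)` on the same text if the result is False.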

6. Insert and query data to check that the sharding strategy takes effect

7. Check that the rows were written to the physical databases
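Both steps come down to predicting where a row should land and then looking for it there. The sketch below mirrors the three INLINE expressions from config-sharding.yaml: the database is chosen by `user_id % 2` and the table by `order_id % 2`. It assumes non-negative ids; the function name `route` and the sample ids are illustrative and not part of ShardingSphere.

```python
from typing import Tuple


def route(logic_table: str, user_id: int, order_id: int) -> Tuple[str, str]:
    """Predict the physical data source and table for one row.

    Mirrors ds_${user_id % 2} and <logic_table>_${order_id % 2}
    from the sharding rule; assumes non-negative ids.
    """
    data_source = f"ds_{user_id % 2}"
    actual_table = f"{logic_table}_{order_id % 2}"
    return data_source, actual_table


# Sample (user_id, order_id) pairs, chosen only for illustration.
for user_id, order_id in [(1, 1000), (2, 1001), (3, 1003)]:
    ds, table = route("t_order", user_id, order_id)
    print(f"user_id={user_id} order_id={order_id} -> {ds}.{table}")
# prints:
# user_id=1 order_id=1000 -> ds_1.t_order_0
# user_id=2 order_id=1001 -> ds_0.t_order_1
# user_id=3 order_id=1003 -> ds_1.t_order_1
```

After inserting a row through the proxy on port 3307, connect directly to PostgreSQL on port 5432, switch to the predicted database (demo_ds_0 for ds_0, demo_ds_1 for ds_1) and select from the predicted table. Because t_order and t_order_item are binding tables sharded on the same columns, an order and its items should end up under the same suffixes.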

Common errors

1. The PostgreSQL client DBeaver reports an error while establishing the SSL connection. Solution: downgrade shardingsphere-proxy to version 5.2.1.

[ERROR] 2023-06-30 07:28:39.479 [epollEventLoopGroup-3-3] o.a.s.p.f.n.FrontendChannelInboundHandler - Exception occur:

java.lang.IndexOutOfBoundsException: readerIndex(1) + length(4) exceeds writerIndex(1): PooledSlicedByteBuf(ridx: 1, widx: 1, cap: 1/1, unwrapped: PooledUnsafeDirectByteBuf(ridx: 4, widx: 8, cap: 2048))

at io.netty.buffer.AbstractByteBuf.checkReadableBytes0(AbstractByteBuf.java:1442)

at io.netty.buffer.AbstractByteBuf.readIntLE(AbstractByteBuf.java:817)

at org.apache.shardingsphere.db.protocol.mysql.payload.MySQLPacketPayload.readInt4(MySQLPacketPayload.java:115)

at org.apache.shardingsphere.db.protocol.mysql.packet.handshake.MySQLHandshakeResponse41Packet.<init>(MySQLHandshakeResponse41Packet.java:56)

at org.apache.shardingsphere.proxy.frontend.mysql.authentication.MySQLAuthenticationEngine.authPhaseFastPath(MySQLAuthenticationEngine.java:86)

at org.apache.shardingsphere.proxy.frontend.mysql.authentication.MySQLAuthenticationEngine.authenticate(MySQLAuthenticationEngine.java:73)

at org.apache.shardingsphere.proxy.frontend.netty.FrontendChannelInboundHandler.authenticate(FrontendChannelInboundHandler.java:80)

at org.apache.shardingsphere.proxy.frontend.netty.FrontendChannelInboundHandler.channelRead(FrontendChannelInboundHandler.java:72)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:379)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:365)

at io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:357)

at io.netty.handler.codec.ByteToMessageDecoder.fireChannelRead(ByteToMessageDecoder.java:324)

at io.netty.handler.codec.ByteToMessageDecoder.fireChannelRead(ByteToMessageDecoder.java:311)

at io.netty.handler.codec.ByteToMessageDecoder.callDecode(ByteToMessageDecoder.java:432)

at io.netty.handler.codec.ByteToMessageDecoder.channelRead(ByteToMessageDecoder.java:276)

at io.netty.handler.codec.ByteToMessageCodec.channelRead(ByteToMessageCodec.java:103)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:379)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:365)

at io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:357)

at io.netty.channel.DefaultChannelPipeline$HeadContext.channelRead(DefaultChannelPipeline.java:1410)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:379)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:365)

at io.netty.channel.DefaultChannelPipeline.fireChannelRead(DefaultChannelPipeline.java:919)

at io.netty.channel.epoll.AbstractEpollStreamChannel$EpollStreamUnsafe.epollInReady(AbstractEpollStreamChannel.java:795)

at io.netty.channel.epoll.EpollEventLoop.processReady(EpollEventLoop.java:480)

at io.netty.channel.epoll.EpollEventLoop.run(EpollEventLoop.java:378)

at io.netty.util.concurrent.SingleThreadEventExecutor$4.run(SingleThreadEventExecutor.java:986)

at io.netty.util.internal.ThreadExecutorMap$2.run(ThreadExecutorMap.java:74)

at io.netty.util.concurrent.FastThreadLocalRunnable.run(FastThreadLocalRunnable.java:30)

at java.lang.Thread.run(Thread.java:748)
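One detail in the trace above is worth noting: the failing frames belong to the proxy's MySQL frontend classes (MySQLAuthenticationEngine, MySQLHandshakeResponse41Packet), which suggests the proxy was answering the PostgreSQL client with the MySQL protocol. That is an inference from the trace, not something the logs state outright. A small sketch for spotting this in any proxy stack trace follows; the function name `frontend_protocols` is hypothetical, and it only recognises the two frontend packages that appear in this article's traces.

```python
import re
from typing import Set

# Frontend packages seen in the stack traces of this article.
FRONTEND = re.compile(
    r"org\.apache\.shardingsphere\.proxy\.frontend\.(mysql|postgresql)\."
)


def frontend_protocols(stack_trace: str) -> Set[str]:
    """Return the protocol-specific frontend packages named in a stack trace."""
    return set(FRONTEND.findall(stack_trace))


frame = ("at org.apache.shardingsphere.proxy.frontend.mysql.authentication."
         "MySQLAuthenticationEngine.authenticate(MySQLAuthenticationEngine.java:73)")
print(frontend_protocols(frame))  # prints {'mysql'}
```

If a PostgreSQL client triggers a trace for which this returns `{'mysql'}`, the client and the proxy are likely speaking different wire protocols.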

2. DBeaver reports SQL error [22023]: ERROR: No value specified for parameter 1. Solution: switch to Navicat for PostgreSQL.

[ERROR] 2023-06-30 08:47:23.424 [Connection-23-ThreadExecutor] o.a.s.p.f.c.CommandExecutorTask - Exception occur:

org.postgresql.util.PSQLException: No value specified for parameter 1.

at org.postgresql.core.v3.SimpleParameterList.checkAllParametersSet(SimpleParameterList.java:284)

at org.postgresql.core.v3.QueryExecutorImpl.execute(QueryExecutorImpl.java:340)

at org.postgresql.jdbc.PgStatement.executeInternal(PgStatement.java:490)

at org.postgresql.jdbc.PgStatement.execute(PgStatement.java:408)

at org.postgresql.jdbc.PgPreparedStatement.executeWithFlags(PgPreparedStatement.java:181)

at org.postgresql.jdbc.PgPreparedStatement.executeQuery(PgPreparedStatement.java:133)

at com.zaxxer.hikari.pool.ProxyPreparedStatement.executeQuery(ProxyPreparedStatement.java:52)

at com.zaxxer.hikari.pool.HikariProxyPreparedStatement.executeQuery(HikariProxyPreparedStatement.java)

at org.apache.shardingsphere.proxy.backend.handler.admin.executor.AbstractDatabaseMetadataExecutor$DefaultDatabaseMetadataExecutor.getSourceData(AbstractDatabaseMetadataExecutor.java:216)

at org.apache.shardingsphere.proxy.backend.handler.admin.executor.AbstractDatabaseMetadataExecutor.execute(AbstractDatabaseMetadataExecutor.java:76)

at org.apache.shardingsphere.proxy.backend.handler.admin.DatabaseAdminQueryBackendHandler.execute(DatabaseAdminQueryBackendHandler.java:56)

at org.apache.shardingsphere.proxy.frontend.postgresql.command.query.extended.JDBCPortal.bind(JDBCPortal.java:113)

at org.apache.shardingsphere.proxy.frontend.postgresql.command.query.extended.bind.PostgreSQLComBindExecutor.execute(PostgreSQLComBindExecutor.java:53)

at org.apache.shardingsphere.proxy.frontend.postgresql.command.query.extended.PostgreSQLAggregatedCommandExecutor.execute(PostgreSQLAggregatedCommandExecutor.java:41)

at org.apache.shardingsphere.proxy.frontend.command.CommandExecutorTask.executeCommand(CommandExecutorTask.java:111)

at org.apache.shardingsphere.proxy.frontend.command.CommandExecutorTask.run(CommandExecutorTask.java:78)

at java.base/java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1136)

at java.base/java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:635)

at java.base/java.lang.Thread.run(Thread.java:833)
