Permission issues with the DataGrip and Beeline clients in Hive

I configured Hadoop and Hive with Ranger and Kerberos, and today I wanted to test the permissions.

Testing the xwq user:

1. First, create the xwq user and its credentials with the following commands:

useradd xwq -G hadoop

echo xwq | passwd --stdin xwq

echo 'xwq ALL=(ALL) NOPASSWD: ALL' >> /etc/sudoers

kadmin -padmin/admin -wNTVfPQY9kNs6 -q"addprinc -randkey xwq"

kadmin -padmin/admin -wNTVfPQY9kNs6 -q"xst -k /etc/security/keytab/xwq.keytab xwq"

chown xwq:hadoop /etc/security/keytab/xwq.keytab

chmod 660 /etc/security/keytab/xwq.keytab
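Before authenticating, you can confirm that the keytab ended up with the owner and mode set above. This is a minimal sketch that assumes a Linux host with GNU coreutils (`stat -c`). `check_keytab_perms` is a hypothetical helper name and is not part of any Hadoop or Kerberos tool.

```shell
#!/usr/bin/env bash
# Hypothetical helper: check that a keytab has the expected owner and octal mode.
# Assumes GNU coreutils stat (Linux). Returns 0 if ok, 1 if wrong, 2 if missing.
check_keytab_perms() {
  local keytab="$1" expected_owner="$2" expected_mode="${3:-660}"
  local owner mode
  if [ ! -f "$keytab" ]; then
    echo "missing: $keytab" >&2
    return 2
  fi
  owner=$(stat -c '%U' "$keytab")   # owning user name
  mode=$(stat -c '%a' "$keytab")    # permission bits in octal, e.g. 660
  if [ "$owner" != "$expected_owner" ] || [ "$mode" != "$expected_mode" ]; then
    echo "bad: $keytab owner=$owner mode=$mode" >&2
    return 1
  fi
  echo "ok: $keytab owner=$owner mode=$mode"
}
```

For example, on hadoop102 `check_keytab_perms /etc/security/keytab/xwq.keytab xwq` should report owner xwq and mode 660.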

2. Authenticate:

[root@hadoop102 keytab]# kinit xwq

Password for xwq@EXAMPLE.COM:

[root@hadoop102 keytab]# klist

Ticket cache: FILE:/tmp/krb5cc_0

Default principal: xwq@EXAMPLE.COM

Valid starting Expires Service principal

07/01/2023 10:09:21 07/02/2023 10:09:21 krbtgt/EXAMPLE.COM@EXAMPLE.COM

renew until 07/08/2023 10:09:21
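`klist` shows which principal the ticket cache belongs to. When you script this step, you can extract that principal and compare it with the expected user. This is a minimal sketch. `default_principal` is a hypothetical helper that reads `klist`-formatted text on stdin.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the "Default principal" from klist output on stdin.
# Prints nothing if the line is absent (e.g. no ticket cache).
default_principal() {
  awk -F': ' '/^Default principal:/ { print $2; exit }'
}
```

Here `klist | default_principal` should print `xwq@EXAMPLE.COM`. A non-interactive script can authenticate with the keytab exported in step 1 instead of a password, via `kinit -kt /etc/security/keytab/xwq.keytab xwq`.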

3. Connect with the Beeline client:

[root@hadoop102 ~]# beeline

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/opt/module/hive/lib/log4j-slf4j-impl-2.10.0.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/opt/ha/hadoop/share/hadoop/common/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Beeline version 3.1.2 by Apache Hive

beeline> !connect jdbc:hive2://hadoop102:10000/;principal=hive/hadoop102@EXAMPLE.COM

Connecting to jdbc:hive2://hadoop102:10000/;principal=hive/hadoop102@EXAMPLE.COM

Connected to: Apache Hive (version 3.1.2)

Driver: Hive JDBC (version 3.1.2)

Transaction isolation: TRANSACTION_REPEATABLE_READ

0: jdbc:hive2://hadoop102:10000/> select current_user();

INFO : Compiling command(queryId=hive_20230701095227_419c1fe7-2f6b-47af-828c-bcf67fd6043a): select current_user()

INFO : Concurrency mode is disabled, not creating a lock manager

INFO : Semantic Analysis Completed (retrial = false)

INFO : Returning Hive schema: Schema(fieldSchemas:[FieldSchema(name:_c0, type:string, comment:null)], properties:null)

INFO : Completed compiling command(queryId=hive_20230701095227_419c1fe7-2f6b-47af-828c-bcf67fd6043a); Time taken: 0.212 seconds

INFO : Concurrency mode is disabled, not creating a lock manager

INFO : Executing command(queryId=hive_20230701095227_419c1fe7-2f6b-47af-828c-bcf67fd6043a): select current_user()

INFO : Completed executing command(queryId=hive_20230701095227_419c1fe7-2f6b-47af-828c-bcf67fd6043a); Time taken: 0.0 seconds

INFO : OK

INFO : Concurrency mode is disabled, not creating a lock manager

+------+

| _c0 |

+------+

| xwq |

+------+

1 row selected (0.301 seconds)
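The `!connect` URL combines the HiveServer2 host and port with the Kerberos principal of the HiveServer2 service. When you repeat this identity check for several users, it is easier to run it non-interactively. This is a minimal sketch. `hive_jdbc_url` is a hypothetical helper, and its defaults (port 10000, realm EXAMPLE.COM) are taken from this post.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build the Kerberos HiveServer2 JDBC URL used with !connect.
# Args: host [port, default 10000] [realm, default EXAMPLE.COM]
hive_jdbc_url() {
  local host="$1" port="${2:-10000}" realm="${3:-EXAMPLE.COM}"
  printf 'jdbc:hive2://%s:%s/;principal=hive/%s@%s\n' "$host" "$port" "$host" "$realm"
}
```

For example, `beeline -u "$(hive_jdbc_url hadoop102)" -e 'select current_user();'` runs the same check as above without an interactive session. It uses whatever ticket `kinit` obtained.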

4. Run an INSERT statement:

0: jdbc:hive2://hadoop102:10000/> insert into student values(2,'1');

INFO : Compiling command(queryId=hive_20230701095229_d7d5807d-ff37-4aef-81d5-bc10fd929ebf): insert into student values(2,'1')

INFO : Concurrency mode is disabled, not creating a lock manager

INFO : Semantic Analysis Completed (retrial = false)

INFO : Returning Hive schema: Schema(fieldSchemas:[FieldSchema(name:col1, type:int, comment:null), FieldSchema(name:col2, type:string, comment:null)], properties:null)

INFO : Completed compiling command(queryId=hive_20230701095229_d7d5807d-ff37-4aef-81d5-bc10fd929ebf); Time taken: 0.318 seconds

INFO : Concurrency mode is disabled, not creating a lock manager

INFO : Executing command(queryId=hive_20230701095229_d7d5807d-ff37-4aef-81d5-bc10fd929ebf): insert into student values(2,'1')

INFO : Query ID = hive_20230701095229_d7d5807d-ff37-4aef-81d5-bc10fd929ebf

INFO : Total jobs = 1

INFO : Launching Job 1 out of 1

INFO : Starting task [Stage-1:MAPRED] in serial mode

ERROR : Job hasn't been submitted after 61s. Aborting it.

Possible reasons include network issues, errors in remote driver or the cluster has no available resources, etc.

Please check YARN or Spark driver's logs for further information.

The timeout is controlled by hive.spark.job.monitor.timeout.

ERROR : FAILED: Execution Error, return code 2 from org.apache.hadoop.hive.ql.exec.spark.SparkTask. Spark job failed during runtime. Please check stacktrace for the root cause.

INFO : Completed executing command(queryId=hive_20230701095229_d7d5807d-ff37-4aef-81d5-bc10fd929ebf); Time taken: 216.921 seconds

INFO : Concurrency mode is disabled, not creating a lock manager

Error: Error while processing statement: FAILED: Execution Error, return code 2 from org.apache.hadoop.hive.ql.exec.spark.SparkTask. Spark job failed during runtime. Please check stacktrace for the root cause. (state=42000,code=2)

0: jdbc:hive2://hadoop102:10000/> insert into student values(2,'1');

INFO : Compiling command(queryId=hive_20230701095708_a92293d5-eb6e-448c-b623-c5c49660ae66): insert into student values(2,'1')

INFO : Concurrency mode is disabled, not creating a lock manager

INFO : Semantic Analysis Completed (retrial = false)

INFO : Returning Hive schema: Schema(fieldSchemas:[FieldSchema(name:col1, type:int, comment:null), FieldSchema(name:col2, type:string, comment:null)], properties:null)

INFO : Completed compiling command(queryId=hive_20230701095708_a92293d5-eb6e-448c-b623-c5c49660ae66); Time taken: 0.28 seconds

INFO : Concurrency mode is disabled, not creating a lock manager

INFO : Executing command(queryId=hive_20230701095708_a92293d5-eb6e-448c-b623-c5c49660ae66): insert into student values(2,'1')

INFO : Query ID = hive_20230701095708_a92293d5-eb6e-448c-b623-c5c49660ae66

INFO : Total jobs = 1

INFO : Launching Job 1 out of 1

INFO : Starting task [Stage-1:MAPRED] in serial mode

ERROR : Job hasn't been submitted after 61s. Aborting it.

Possible reasons include network issues, errors in remote driver or the cluster has no available resources, etc.

Please check YARN or Spark driver's logs for further information.

The timeout is controlled by hive.spark.job.monitor.timeout.

ERROR : FAILED: Execution Error, return code 2 from org.apache.hadoop.hive.ql.exec.spark.SparkTask. Spark job failed during runtime. Please check stacktrace for the root cause.

INFO : Completed executing command(queryId=hive_20230701095708_a92293d5-eb6e-448c-b623-c5c49660ae66); Time taken: 181.098 seconds

INFO : Concurrency mode is disabled, not creating a lock manager

Error: Error while processing statement: FAILED: Execution Error, return code 2 from org.apache.hadoop.hive.ql.exec.spark.SparkTask. Spark job failed during runtime. Please check stacktrace for the root cause. (state=42000,code=2)

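The job failed. I then checked the YARN web UI and saw that the job had been submitted to YARN successfully and had gone from ACCEPTED to RUNNING, but it still failed in the end. The error in the HiveServer2 log was: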

2023-07-01T10:16:07,513 INFO [44a5e8c7-dc6f-43f7-8a98-1037e8deffa3 HiveServer2-Handler-Pool: Thread-85] session.SessionState: Resetting thread name to HiveServer2-Handler-Pool: Thread-85

2023-07-01T10:16:30,717 ERROR [HiveServer2-Background-Pool: Thread-158] client.SparkClientImpl: Timed out waiting for client to connect.

Possible reasons include network issues, errors in remote driver or the cluster has no available resources, etc.

Please check YARN or Spark driver's logs for further information.

java.util.concurrent.ExecutionException: java.util.concurrent.TimeoutException: Timed out waiting for client connection.

at io.netty.util.concurrent.AbstractFuture.get(AbstractFuture.java:41) ~[netty-all-4.1.17.Final.jar:4.1.17.Final]

at org.apache.hive.spark.client.SparkClientImpl.<init>(SparkClientImpl.java:106) ~[hive-exec-3.1.2.jar:3.1.2]

at org.apache.hive.spark.client.SparkClientFactory.createClient(SparkClientFactory.java:88) ~[hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.exec.spark.RemoteHiveSparkClient.createRemoteClient(RemoteHiveSparkClient.java:105) ~[hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.exec.spark.RemoteHiveSparkClient.<init>(RemoteHiveSparkClient.java:101) ~[hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.exec.spark.HiveSparkClientFactory.createHiveSparkClient(HiveSparkClientFactory.java:76) ~[hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.exec.spark.session.SparkSessionImpl.open(SparkSessionImpl.java:87) ~[hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.exec.spark.session.SparkSessionManagerImpl.getSession(SparkSessionManagerImpl.java:115) ~[hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.exec.spark.SparkUtilities.getSparkSession(SparkUtilities.java:136) ~[hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.exec.spark.SparkTask.execute(SparkTask.java:115) ~[hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.exec.Task.executeTask(Task.java:205) ~[hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.exec.TaskRunner.runSequential(TaskRunner.java:97) ~[hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.Driver.launchTask(Driver.java:2664) ~[hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.Driver.execute(Driver.java:2335) ~[hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.Driver.runInternal(Driver.java:2011) ~[hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.Driver.run(Driver.java:1709) ~[hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.Driver.run(Driver.java:1703) ~[hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.reexec.ReExecDriver.run(ReExecDriver.java:157) ~[hive-exec-3.1.2.jar:3.1.2]

at org.apache.hive.service.cli.operation.SQLOperation.runQuery(SQLOperation.java:224) ~[hive-service-3.1.2.jar:3.1.2]

at org.apache.hive.service.cli.operation.SQLOperation.access$700(SQLOperation.java:87) ~[hive-service-3.1.2.jar:3.1.2]

at org.apache.hive.service.cli.operation.SQLOperation$BackgroundWork$1.run(SQLOperation.java:316) ~[hive-service-3.1.2.jar:3.1.2]

at java.security.AccessController.doPrivileged(Native Method) ~[?:1.8.0_361]

at javax.security.auth.Subject.doAs(Subject.java:422) ~[?:1.8.0_361]

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1729) ~[hadoop-common-3.1.3.jar:?]

at org.apache.hive.service.cli.operation.SQLOperation$BackgroundWork.run(SQLOperation.java:329) ~[hive-service-3.1.2.jar:3.1.2]

at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511) ~[?:1.8.0_361]

at java.util.concurrent.FutureTask.run(FutureTask.java:266) ~[?:1.8.0_361]

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149) ~[?:1.8.0_361]

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624) ~[?:1.8.0_361]

at java.lang.Thread.run(Thread.java:750) [?:1.8.0_361]

Caused by: java.util.concurrent.TimeoutException: Timed out waiting for client connection.

at org.apache.hive.spark.client.rpc.RpcServer$2.run(RpcServer.java:172) ~[hive-exec-3.1.2.jar:3.1.2]

at io.netty.util.concurrent.PromiseTask$RunnableAdapter.call(PromiseTask.java:38) ~[netty-all-4.1.17.Final.jar:4.1.17.Final]

at io.netty.util.concurrent.ScheduledFutureTask.run(ScheduledFutureTask.java:120) ~[netty-all-4.1.17.Final.jar:4.1.17.Final]

at io.netty.util.concurrent.AbstractEventExecutor.safeExecute(AbstractEventExecutor.java:163) ~[netty-all-4.1.17.Final.jar:4.1.17.Final]

at io.netty.util.concurrent.SingleThreadEventExecutor.runAllTasks(SingleThreadEventExecutor.java:403) ~[netty-all-4.1.17.Final.jar:4.1.17.Final]

at io.netty.channel.nio.NioEventLoop.run(NioEventLoop.java:463) ~[netty-all-4.1.17.Final.jar:4.1.17.Final]

at io.netty.util.concurrent.SingleThreadEventExecutor$5.run(SingleThreadEventExecutor.java:858) ~[netty-all-4.1.17.Final.jar:4.1.17.Final]

... 1 more

2023-07-01T10:16:30,741 ERROR [HiveServer2-Background-Pool: Thread-158] spark.SparkTask: Failed to execute spark task, with exception 'org.apache.hadoop.hive.ql.metadata.HiveException(Failed to create Spark client for Spark session 2c551365-6d3c-458d-8d7c-3c8566d3c802)'

org.apache.hadoop.hive.ql.metadata.HiveException: Failed to create Spark client for Spark session 2c551365-6d3c-458d-8d7c-3c8566d3c802

at org.apache.hadoop.hive.ql.exec.spark.session.SparkSessionImpl.getHiveException(SparkSessionImpl.java:215)

at org.apache.hadoop.hive.ql.exec.spark.session.SparkSessionImpl.open(SparkSessionImpl.java:92)

at org.apache.hadoop.hive.ql.exec.spark.session.SparkSessionManagerImpl.getSession(SparkSessionManagerImpl.java:115)

at org.apache.hadoop.hive.ql.exec.spark.SparkUtilities.getSparkSession(SparkUtilities.java:136)

at org.apache.hadoop.hive.ql.exec.spark.SparkTask.execute(SparkTask.java:115)

at org.apache.hadoop.hive.ql.exec.Task.executeTask(Task.java:205)

at org.apache.hadoop.hive.ql.exec.TaskRunner.runSequential(TaskRunner.java:97)

at org.apache.hadoop.hive.ql.Driver.launchTask(Driver.java:2664)

at org.apache.hadoop.hive.ql.Driver.execute(Driver.java:2335)

at org.apache.hadoop.hive.ql.Driver.runInternal(Driver.java:2011)

at org.apache.hadoop.hive.ql.Driver.run(Driver.java:1709)

at org.apache.hadoop.hive.ql.Driver.run(Driver.java:1703)

at org.apache.hadoop.hive.ql.reexec.ReExecDriver.run(ReExecDriver.java:157)

at org.apache.hive.service.cli.operation.SQLOperation.runQuery(SQLOperation.java:224)

at org.apache.hive.service.cli.operation.SQLOperation.access$700(SQLOperation.java:87)

at org.apache.hive.service.cli.operation.SQLOperation$BackgroundWork$1.run(SQLOperation.java:316)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1729)

at org.apache.hive.service.cli.operation.SQLOperation$BackgroundWork.run(SQLOperation.java:329)

at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:750)

I checked the resources allocated to Spark and the resources available in YARN. Both were sufficient, so the cause was still unclear.
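When the HiveServer2 log is inconclusive like this, you can also find the failed YARN applications from the command line: `yarn application -list -appStates FAILED` lists them. This is a minimal sketch for pulling the application IDs out of that listing. `failed_app_ids` is a hypothetical helper that reads the listing on stdin.

```shell
#!/usr/bin/env bash
# Hypothetical helper: extract unique YARN application IDs
# (application_<clusterTimestamp>_<sequence>) from text on stdin.
failed_app_ids() {
  grep -o 'application_[0-9]\{1,\}_[0-9]\{1,\}' | sort -u
}
```

Usage: `yarn application -list -appStates FAILED | failed_app_ids` prints one ID per line. Pass each ID to `yarn application -status <id>` to see its application report, including the diagnostics.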

Next I tested with the sarah user, and the job succeeded:

INFO : Concurrency mode is disabled, not creating a lock manager

+--------+

| _c0 |

+--------+

| sarah |

+--------+

1 row selected (0.399 seconds)

0: jdbc:hive2://hadoop102:10000/> insert into student values(2,'1');

INFO : Compiling command(queryId=hive_20230701095037_eb26098a-e4e9-438b-a33b-9bf8b6205d1f): insert into student values(2,'1')

INFO : Concurrency mode is disabled, not creating a lock manager

INFO : Semantic Analysis Completed (retrial = false)

INFO : Returning Hive schema: Schema(fieldSchemas:[FieldSchema(name:col1, type:int, comment:null), FieldSchema(name:col2, type:string, comment:null)], properties:null)

INFO : Completed compiling command(queryId=hive_20230701095037_eb26098a-e4e9-438b-a33b-9bf8b6205d1f); Time taken: 0.281 seconds

INFO : Concurrency mode is disabled, not creating a lock manager

INFO : Executing command(queryId=hive_20230701095037_eb26098a-e4e9-438b-a33b-9bf8b6205d1f): insert into student values(2,'1')

INFO : Query ID = hive_20230701095037_eb26098a-e4e9-438b-a33b-9bf8b6205d1f

INFO : Total jobs = 1

INFO : Launching Job 1 out of 1

INFO : Starting task [Stage-1:MAPRED] in serial mode

INFO : Running with YARN Application = application_1688108003994_0006

INFO : Kill Command = /opt/ha/hadoop/bin/yarn application -kill application_1688108003994_0006

INFO : Hive on Spark Session Web UI URL: http://hadoop102:32853

INFO :

Query Hive on Spark job[0] stages: [0, 1]

INFO : Spark job[0] status = RUNNING

INFO : Job Progress Format

CurrentTime StageId_StageAttemptId: SucceededTasksCount(+RunningTasksCount-FailedTasksCount)/TotalTasksCount

INFO : 2023-07-01 09:51:30,101 Stage-0_0: 0(+1)/1 Stage-1_0: 0/1

INFO : 2023-07-01 09:51:33,126 Stage-0_0: 0(+1)/1 Stage-1_0: 0/1

INFO : 2023-07-01 09:51:34,131 Stage-0_0: 1/1 Finished Stage-1_0: 0/1

INFO : 2023-07-01 09:51:35,139 Stage-0_0: 1/1 Finished Stage-1_0: 1/1 Finished

INFO : Spark job[0] finished successfully in 8.11 second(s)

INFO : Starting task [Stage-0:MOVE] in serial mode

INFO : Loading data to table default.student from hdfs://mycluster/user/hive/warehouse/student/.hive-staging_hive_2023-07-01_09-50-37_765_7507751690563815963-7/-ext-10000

INFO : Starting task [Stage-2:STATS] in serial mode

INFO : Completed executing command(queryId=hive_20230701095037_eb26098a-e4e9-438b-a33b-9bf8b6205d1f); Time taken: 57.47 seconds

INFO : OK

INFO : Concurrency mode is disabled, not creating a lock manager

No rows affected (57.758 seconds)

It was not clear why sarah succeeded while xwq failed. I then also tested with the hdfs user, which failed just like xwq.

Result:

INFO : Concurrency mode is disabled, not creating a lock manager

+-------+

| _c0 |

+-------+

| hdfs |

+-------+

1 row selected (0.376 seconds)

0: jdbc:hive2://hadoop102:10000/> insert into student values(2,'1');

INFO : Compiling command(queryId=hive_20230630172135_4dc05a0d-7783-48ec-a6d7-8b11f81f8f85): insert into student values(2,'1')

INFO : Concurrency mode is disabled, not creating a lock manager

INFO : Semantic Analysis Completed (retrial = false)

INFO : Returning Hive schema: Schema(fieldSchemas:[FieldSchema(name:col1, type:int, comment:null), FieldSchema(name:col2, type:string, comment:null)], properties:null)

INFO : Completed compiling command(queryId=hive_20230630172135_4dc05a0d-7783-48ec-a6d7-8b11f81f8f85); Time taken: 0.841 seconds

INFO : Concurrency mode is disabled, not creating a lock manager

INFO : Executing command(queryId=hive_20230630172135_4dc05a0d-7783-48ec-a6d7-8b11f81f8f85): insert into student values(2,'1')

INFO : Query ID = hive_20230630172135_4dc05a0d-7783-48ec-a6d7-8b11f81f8f85

INFO : Total jobs = 1

INFO : Launching Job 1 out of 1

INFO : Starting task [Stage-1:MAPRED] in serial mode

ERROR : FAILED: Execution Error, return code 30041 from org.apache.hadoop.hive.ql.exec.spark.SparkTask. Failed to create Spark client for Spark session 98d08d85-f20b-4ce9-8e88-aed333485cb5

INFO : Completed executing command(queryId=hive_20230630172135_4dc05a0d-7783-48ec-a6d7-8b11f81f8f85); Time taken: 300.171 seconds

INFO : Concurrency mode is disabled, not creating a lock manager

Error: Error while processing statement: FAILED: Execution Error, return code 30041 from org.apache.hadoop.hive.ql.exec.spark.SparkTask. Failed to create Spark client for Spark session 98d

0: jdbc:hive2://hadoop102:10000/> !quit

Closing: 0: jdbc:hive2://hadoop102:10000/;principal=hive/hadoop102@EXAMPLE.COM

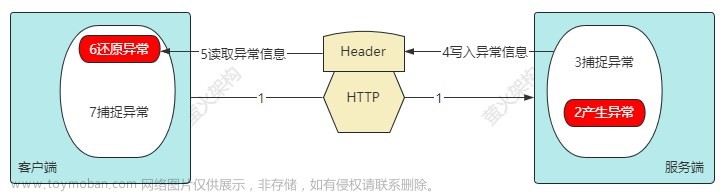

Analysis:

The logs did not reveal anything useful. But when I looked at the YARN web UI again, I noticed a fairly obvious error.

The full diagnostics:

Diagnostics:

Application application_1688108003994_0009 failed 1 times (global limit =2; local limit is =1) due to AM Container for appattempt_1688108003994_0009_000001 exited with exitCode: -1000

Failing this attempt.Diagnostics: [2023-07-01 10:10:53.661]Application application_1688108003994_0009 initialization failed (exitCode=255) with output: main : command provided 0

main : run as user is xwq

main : requested yarn user is xwq

User xwq not found

For more detailed output, check the application tracking page: http://hadoop103:8088/cluster/app/application_1688108003994_0009 Then click on links to logs of each attempt.

. Failing the application.
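To spot this kind of failure without reading the whole report, you can extract the `User ... not found` line from the diagnostics text. This is a minimal sketch. `missing_users` is a hypothetical helper that reads the diagnostics on stdin and prints each missing user once.

```shell
#!/usr/bin/env bash
# Hypothetical helper: find "User <name> not found" lines in YARN diagnostics
# text on stdin and print each reported user name once.
missing_users() {
  sed -n 's/.*User \([^ ]*\) not found.*/\1/p' | sort -u
}
```

For example, `yarn application -status application_1688108003994_0009 | missing_users` should print `xwq`. This assumes the report reproduces the diagnostics lines as shown above.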

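As soon as I saw this error, I knew the cause. I had hit the same error before, when a MapReduce job failed. On a Kerberized cluster, the NodeManager's container-executor launches each container as the submitting user. That user must therefore exist as an OS account on every NodeManager node, but xwq had only been created on hadoop102.

Solution

Run the following on the other nodes as well: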

useradd xwq -G hadoop

echo xwq | passwd --stdin xwq

echo 'xwq ALL=(ALL) NOPASSWD: ALL' >> /etc/sudoers

kadmin -padmin/admin -wNTVfPQY9kNs6 -q"addprinc -randkey xwq"
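Repeating the user creation by hand on each node is error-prone. This is a minimal sketch that does it over SSH. It assumes passwordless root SSH, an existing `hadoop` group on each node, and hadoop103 and hadoop104 as the remaining nodes (the hosts that appear in this post). `provision_user` is a hypothetical helper, and `DRY_RUN=1` only prints the commands.

```shell
#!/usr/bin/env bash
# Hypothetical helper: create an OS user (in group hadoop) on each given host.
# Skips hosts where the user already exists. DRY_RUN=1 prints instead of running.
provision_user() {
  local user="$1"; shift
  local host cmd
  cmd="id -u $user >/dev/null 2>&1 || useradd $user -G hadoop"
  for host in "$@"; do
    if [ "${DRY_RUN:-0}" = 1 ]; then
      echo "[$host] $cmd"
    else
      ssh "$host" "$cmd"   # assumes root SSH access to $host
    fi
  done
}
```

Run `DRY_RUN=1 provision_user xwq hadoop103 hadoop104` to preview the commands, then drop `DRY_RUN` to run them.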

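Then run the job again. (On the other nodes, only the OS user is really needed. The Kerberos principal lives in the KDC and already exists, so the repeated addprinc should just report that it already exists.)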

INFO : Concurrency mode is disabled, not creating a lock manager

+------+

| _c0 |

+------+

| xwq |

+------+

1 row selected (0.316 seconds)

0: jdbc:hive2://hadoop102:10000/> insert into student values(2,'1');

INFO : Compiling command(queryId=hive_20230701104001_8b825171-b12d-416a-9044-14e40ce66b4e): insert into student values(2,'1')

INFO : Concurrency mode is disabled, not creating a lock manager

INFO : Semantic Analysis Completed (retrial = false)

INFO : Returning Hive schema: Schema(fieldSchemas:[FieldSchema(name:col1, type:int, comment:null), FieldSchema(name:col2, type:string, comment:null)], properties:null)

INFO : Completed compiling command(queryId=hive_20230701104001_8b825171-b12d-416a-9044-14e40ce66b4e); Time taken: 0.274 seconds

INFO : Concurrency mode is disabled, not creating a lock manager

INFO : Executing command(queryId=hive_20230701104001_8b825171-b12d-416a-9044-14e40ce66b4e): insert into student values(2,'1')

INFO : Query ID = hive_20230701104001_8b825171-b12d-416a-9044-14e40ce66b4e

INFO : Total jobs = 1

INFO : Launching Job 1 out of 1

INFO : Starting task [Stage-1:MAPRED] in serial mode

INFO : Running with YARN Application = application_1688108003994_0011

INFO : Kill Command = /opt/ha/hadoop/bin/yarn application -kill application_1688108003994_0011

INFO : Hive on Spark Session Web UI URL: http://hadoop104:38576

INFO :

Query Hive on Spark job[0] stages: [0, 1]

INFO : Spark job[0] status = RUNNING

INFO : Job Progress Format

CurrentTime StageId_StageAttemptId: SucceededTasksCount(+RunningTasksCount-FailedTasksCount)/TotalTasksCount

INFO : 2023-07-01 10:40:47,412 Stage-0_0: 0(+1)/1 Stage-1_0: 0/1

INFO : 2023-07-01 10:40:50,428 Stage-0_0: 0(+1)/1 Stage-1_0: 0/1

INFO : 2023-07-01 10:40:51,432 Stage-0_0: 1/1 Finished Stage-1_0: 1/1 Finished

INFO : Spark job[0] finished successfully in 7.05 second(s)

INFO : Starting task [Stage-0:MOVE] in serial mode

INFO : Loading data to table default.student from hdfs://mycluster/user/hive/warehouse/student/.hive-staging_hive_2023-07-01_10-40-01_182_6190336792685741127-8/-ext-10000

INFO : Starting task [Stage-2:STATS] in serial mode

INFO : Completed executing command(queryId=hive_20230701104001_8b825171-b12d-416a-9044-14e40ce66b4e); Time taken: 50.419 seconds

INFO : OK

INFO : Concurrency mode is disabled, not creating a lock manager

No rows affected (50.703 seconds)

0: jdbc:hive2://hadoop102:10000/>

The job succeeded.

As for why the hdfs user failed: YARN bans the hdfs user from running jobs.
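On a Kerberized cluster, YARN runs containers through the LinuxContainerExecutor. Its `container-executor.cfg` has a `banned.users` setting, and when that setting is absent the executor falls back to a built-in list that includes hdfs. This is a minimal sketch for checking a user against that setting. `is_banned_user` is a hypothetical helper. The default path `/opt/ha/hadoop/etc/hadoop/container-executor.cfg` is an assumption based on the Hadoop directory seen in this post, so adjust it for your install.

```shell
#!/usr/bin/env bash
# Hypothetical helper: return 0 if a user is in banned.users of container-executor.cfg.
# Falls back to the built-in default list (yarn, mapred, hdfs, bin) when unset.
# Note: min.user.id can also reject system users; that is not checked here.
is_banned_user() {
  local user="$1"
  local cfg="${2:-/opt/ha/hadoop/etc/hadoop/container-executor.cfg}"
  local list
  list=$(sed -n 's/^[[:space:]]*banned\.users[[:space:]]*=[[:space:]]*//p' "$cfg" 2>/dev/null | tail -n1)
  [ -n "$list" ] || list="yarn,mapred,hdfs,bin"
  case ",${list// /}," in
    *",$user,"*) return 0 ;;   # banned
    *) return 1 ;;             # not banned
  esac
}
```

On a stock configuration, `is_banned_user hdfs && echo banned` prints `banned`, which is consistent with the hdfs test failing above.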

So when debugging a failed Hive job, check not only the logs but also the Diagnostics section of the YARN web UI.
