References

[1] Solving PyTorch's "CUDA: Out Of Memory" errors caused by GPU memory fragmentation by setting max_split_size_mb in PYTORCH_CUDA_ALLOC_CONF

https://blog.csdn.net/MirageTanker/article/details/127998036

[2] Notes on shell environment variables

https://blog.csdn.net/JOJOY_tester/article/details/90738717

Solution steps

The error message was:

RuntimeError: CUDA out of memory. Tried to allocate 6.18 GiB (GPU 0; 24.00 GiB total capacity; 11.39 GiB already allocated; 3.43 GiB free; 17.62 GiB reserved in total by PyTorch) If reserved memory is >> allocated memory try setting max_split_size_mb to avoid fragmentation. See documentation for Memory Management and PYTORCH_CUDA_ALLOC_CONF

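Compute reserved - allocated = 17.62 - 11.39 = 6.23 GiB, which is more than the 6.18 GiB PyTorch tried to allocate. (Don't worry for now about where this rule comes from; reference [1] explains it in more detail.)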
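The comparison above can be reproduced in the shell. This is only a sketch: the three numbers are copied by hand from the error message, the variable names are made up for illustration, and `awk` is assumed to be available because POSIX shell arithmetic is integer-only.

```shell
# Figures copied from the error message (all in GiB).
reserved=17.62
allocated=11.39
requested=6.18

# Memory PyTorch has reserved but is not currently using.
gap=$(awk -v r="$reserved" -v a="$allocated" 'BEGIN { printf "%.2f", r - a }')
echo "reserved - allocated = ${gap} GiB"

# awk exits with status 0 when the gap is larger than the failed request.
if awk -v g="$gap" -v q="$requested" 'BEGIN { exit !(g > q) }'; then
    verdict="gap exceeds request"
else
    verdict="gap below request"
fi
echo "$verdict"
```

When the gap exceeds the request, the reserved memory would have been enough if it were contiguous, which is the situation the error message's max_split_size_mb hint is aimed at.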

Check the environment variable that configures PyTorch's CUDA caching allocator:

echo $PYTORCH_CUDA_ALLOC_CONF

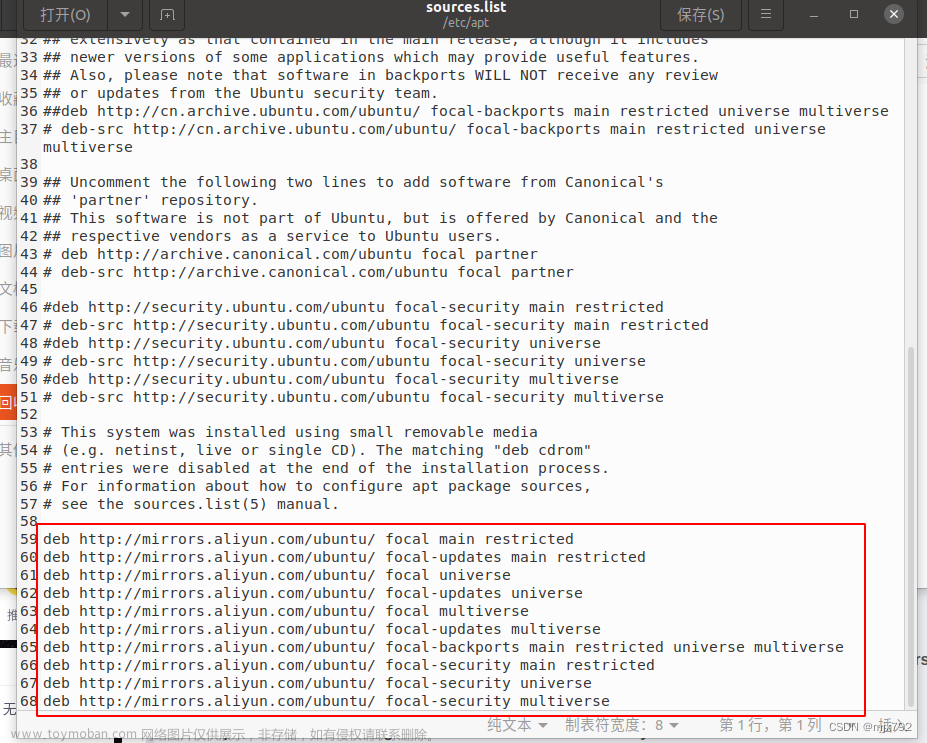
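If the variable has never been set, `echo` prints only an empty line, which is easy to misread. A small sketch that makes the unset case explicit (the `<not set>` placeholder text and the `current` variable name are arbitrary choices for illustration):

```shell
# ${VAR:-fallback} expands to the fallback when VAR is unset or empty.
current="${PYTORCH_CUDA_ALLOC_CONF:-<not set>}"
echo "PYTORCH_CUDA_ALLOC_CONF = ${current}"
```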

Set the environment variable. (This is where the 6.18 figure comes in: put simply, it is the 6.18 GiB of memory that PyTorch tried to allocate.)

export PYTORCH_CUDA_ALLOC_CONF=max_split_size_mb:6110

(The 6110 simply means 6110 MB. The idea is to pick the largest value that is still below 6.18 GB; I recommend just using roughly 6.1 * 1000 MB.)
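The rule of thumb can be scripted as a rough sketch. The rounding used here (truncate the request to one decimal place, then multiply by 1000) is one assumed reading of the "6.1 * 1000" advice and yields 6100 rather than the 6110 used in the export command; both values sit just below the failed request. The names `requested_gb`, `split_mb`, and `seen` are illustrative.

```shell
# Size of the failed allocation, copied from the error message.
requested_gb=6.18

# Truncate to one decimal place (6.18 -> 6.1), then convert to MB.
split_mb=$(awk -v q="$requested_gb" 'BEGIN { printf "%d", int(q * 10) * 100 }')
echo "max_split_size_mb candidate: ${split_mb}"

# export makes the setting visible to programs started from this shell.
export PYTORCH_CUDA_ALLOC_CONF="max_split_size_mb:${split_mb}"

# Confirm that a child process really inherits the value.
seen=$(sh -c 'printf "%s" "$PYTORCH_CUDA_ALLOC_CONF"')
echo "child process sees: ${seen}"
```

An `export` only lasts for the current shell session, and the variable has to be in the environment of the Python process that runs PyTorch. To apply it to a single run instead, the assignment can be prefixed to the command, for example `PYTORCH_CUDA_ALLOC_CONF=max_split_size_mb:6110 python train.py`, where `train.py` is a hypothetical script name.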

With that, the problem was solved. Hooray!
