Custom software development

heguannan @ 163.com

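I. Project Background

Because of the firewall, video platforms inside and outside mainland China are not connected, and a video "reposting" business has grown up around that gap: domestic videos are moved to overseas platforms, or overseas videos are moved to domestic ones, in order to earn payouts from the platforms.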

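II. Technical Approach

One could, of course, use a mature API that goes directly from Chinese speech to English speech and back. However, most of these APIs charge fees, and they are not cheap. Google and Microsoft allow only 5 audio clips per month on trial, which is not enough for reposting work.

This article instead combines the following tools: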

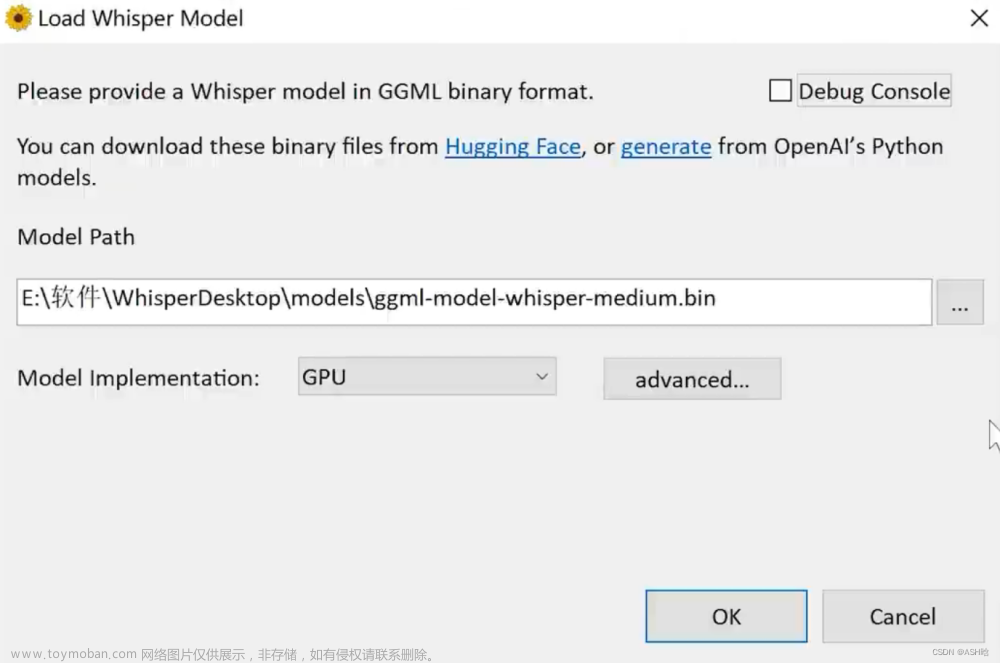

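1. The open-source offline model whisper extracts the subtitles from the video, giving each subtitle's start time, end time, and text.

2. opencc converts between Simplified and Traditional Chinese.

3. The Baidu Translate API (1 million free characters per month, enough for this use) translates between Chinese and English.

4. edge_tts uses Microsoft's free API for text-to-speech dubbing.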
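As a rough illustration of step 1, Whisper's `transcribe` returns a dictionary whose `segments` list carries `start`, `end` and `text` for each subtitle line. The sketch below does not call the model; it uses a hand-written sample in that shape, and the sample values and the helper name `to_records` are made up for illustration.

```python
# Hand-written sample mimicking the shape of whisper's transcribe() result.
# The timings and text are invented for illustration only.
sample_result = {
    "text": "大家好今天天氣不錯",
    "segments": [
        {"id": 0, "start": 0.0, "end": 2.4, "text": "大家好"},
        {"id": 1, "start": 2.4, "end": 5.1, "text": "今天天氣不錯"},
    ],
}


def to_records(result):
    """Hypothetical helper: reduce a result to (start, end, text) tuples."""
    return [(seg["start"], seg["end"], seg["text"]) for seg in result["segments"]]


records = to_records(sample_result)
for start, end, text in records:
    print(f"{start:.1f}s -> {end:.1f}s: {text}")
```

The sample text is deliberately in Traditional characters, since that is the case the opencc step is meant to handle.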

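III. Code

The program is split into two scripts, S1 and S2.

S1 extracts a Chinese subtitle file from the Chinese video. Because the recognized subtitles may contain errors, the file can be corrected by hand after it is generated.

Once the corrections are done, run S2 to produce the English dubbing.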
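The hand-off between S1 and S2 is a plain text file with one `start,end,text` record per line. A minimal sketch of that round trip is shown here, assuming the text itself may contain commas (hence the limited split). The helper names `format_record` and `parse_record`, the temporary file, and the explicit UTF-8 encoding are choices made for this sketch, not part of the original scripts.

```python
import os
import tempfile


def format_record(start, end, text):
    """Serialise one subtitle as 'start,end,text'."""
    return f"{start},{end},{text}"


def parse_record(line):
    """Parse one line back; split at most twice so commas in the text survive."""
    start, end, text = line.split(",", 2)
    return float(start), float(end), text


subtitles = [(0.0, 2.4, "大家好"), (2.4, 5.1, "Hello, world")]

# Round trip through a temporary file, standing in for ./Temp/<name>.txt
path = os.path.join(tempfile.mkdtemp(), "demo.txt")
with open(path, "w", encoding="utf-8") as f:
    for sub in subtitles:
        f.write(format_record(*sub) + "\n")

with open(path, "r", encoding="utf-8") as f:
    parsed = [parse_record(line) for line in f.read().split("\n") if line]

print(parsed)
```

Whatever encoding is used, the writer and the reader need to agree on it, because the file is edited by hand in between.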

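# -*- coding: utf-8 -*-
"""
Created on Sun Jun 18 16:17:41 2023

@author: hegua

Script 1:
1_EA: extract the audio track and a silent copy of the video; the audio is saved in the Temp folder and the silent video in the Res folder.
2_TS: transcribe the audio into Chinese subtitles (possibly Traditional) and convert them to Simplified Chinese
3_SS: save the intermediate file
"""
# moviepy 1.x import path; newer releases may expose VideoFileClip from `moviepy` directly
from moviepy.editor import VideoFileClip
import whisper
import opencc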

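## 1_EA
# The Ori, Temp and Res folders are assumed to exist next to the script
video_name = "0618"
video_path = "./Ori/" + video_name + ".mp4"

# Load the source video
video = VideoFileClip(video_path)

# Split it into the audio track and a silent copy of the video
audio = video.audio
video = video.without_audio()

temp_path = "./Temp/" + video_name
vwa_path = "./Res/" + video_name

# Save the silent video to Res and the audio to Temp
video.write_videofile(vwa_path + ".mp4")
audio.write_audiofile(temp_path + ".mp3")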

## 2_TS
whisper_path = temp_path + ".mp3"
model = whisper.load_model("medium")
whisper_result = model.transcribe(audio=whisper_path, language="Chinese")

# Whisper may emit Traditional Chinese; convert every segment to Simplified
converter = opencc.OpenCC("t2s.json")
for segment in whisper_result['segments']:
    segment['text'] = converter.convert(segment['text'])

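## 3_SS
# Collect (start time, end time, text) for every subtitle segment
subs = whisper_result['segments']
tup_list = []
for sub in subs:
    tup1 = (sub['start'], sub['end'], sub['text'])
    tup_list.append(tup1)

# Write one "start,end,text" record per line.
# No encoding is given, so the platform default is used; S2 must read the file the same way.
srt_path = temp_path + '.txt'
with open(srt_path, 'w') as f:
    for start, end, text in tup_list:
        f.write(str(start) + "," + str(end) + "," + text + '\n')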
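The second script sends each subtitle to the Baidu translation endpoint with a signed query string. As a minimal sketch of that signing step in isolation, the block below mirrors the concatenation used in `BaiduTrans` (appid + text + salt + secret key, hashed with MD5). The function name `build_query` and the credential values are placeholders for illustration, not real keys, and nothing is sent over the network.

```python
import hashlib
import urllib.parse


def build_query(q, appid, secret_key, salt, from_lang="zh", to_lang="en"):
    """Hypothetical helper: build the signed request path for one text."""
    # Signature: MD5 hex digest of appid + q + salt + secret key
    raw = appid + q + str(salt) + secret_key
    sign = hashlib.md5(raw.encode("utf-8")).hexdigest()
    params = {
        "appid": appid,
        "q": q,
        "from": from_lang,
        "to": to_lang,
        "salt": str(salt),
        "sign": sign,
    }
    # urlencode percent-escapes the Chinese text (and encodes spaces as '+')
    return "/api/trans/vip/translate?" + urllib.parse.urlencode(params)


# Placeholder credentials and a fixed salt so the output is reproducible
path = build_query("你好", appid="demo_appid", secret_key="demo_secret", salt=40000)
print(path)
```

Keeping the signing logic in a pure function like this makes it possible to check the query string without spending any of the free character quota.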

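# -*- coding: utf-8 -*-
"""
Created on Sun Jun 18 17:49:47 2023

@author: hegua

## 1_Read the corrected subtitle text file
## 2_Translate
## 3_Dub
## 4_Generate the audio file
"""
import http.client
import hashlib
import urllib.parse
import random
import json
import time

## Speech synthesis through Microsoft's TTS service
import edge_tts
import asyncio
import nest_asyncio
# Allow asyncio.run() inside an already running event loop (e.g. Spyder or Jupyter)
nest_asyncio.apply()

## Audio concatenation
from pydub import AudioSegment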

async def TTS(TEXT, output, rate):
    voice = 'en-US-EricNeural'
    volume = '+30%'
    tts = edge_tts.Communicate(text=TEXT, voice=voice, rate=rate, volume=volume)
    await tts.save(output)
    # Report success only after the file has actually been written
    print(output + ' TTS saved successfully')

def BaiduTrans(q, idx, fromLang='zh', toLang='en'):
    appid = ''  # fill in your appid
    secretKey = ''  # fill in your secret key
    httpClient = None
    result = None  # stays None if the request fails
    myurl = '/api/trans/vip/translate'
    salt = random.randint(32768, 65536)
    # Signature: MD5 of appid + query text + salt + secret key
    sign = appid + q + str(salt) + secretKey
    sign = hashlib.md5(sign.encode()).hexdigest()
    myurl = (myurl + '?appid=' + appid + '&q=' + urllib.parse.quote(q)
             + '&from=' + fromLang + '&to=' + toLang
             + '&salt=' + str(salt) + '&sign=' + sign)
    try:
        httpClient = http.client.HTTPConnection('api.fanyi.baidu.com')
        httpClient.request('GET', myurl)
        # response is an HTTPResponse object
        response = httpClient.getresponse()
        result_all = response.read().decode("utf-8")
        result = json.loads(result_all)
        if 'trans_result' in result:
            print(str(idx) + " translated successfully")
        else:
            print(str(idx) + " translation failed: " + str(result))
    except Exception as e:
        print(e)
    finally:
        if httpClient:
            httpClient.close()
    return result

video_name = '0618'
file_path = "./Temp/" + video_name + '.txt'
dub_path = "./Temp/" + video_name
audio_segments = []

with open(file_path, 'r') as file:
    content = file.read()

# Split the content into one subtitle record per line
records = content.split('\n')

for idx, record in enumerate(records):
    if not record:
        continue  # skip blank lines
    # Each record is "start,end,text"; split at most twice so commas in the text survive
    s, e, q = record.split(',', 2)
    t = BaiduTrans(q, idx)
    text = t['trans_result'][0]['dst']
    start = float(s)
    end = float(e)

    # `text` is the translated subtitle; dub it with edge_tts
    output = dub_path + '_' + str(idx) + ".mp3"
    asyncio.run(TTS(text, output, '+5%'))

    ## Read the clip and measure its length (disabled: re-synthesise faster if it overruns its slot)
    # audio = AudioSegment.from_file(output)
    # duration = len(audio) / 1000  # milliseconds to seconds
    # if duration > end - start:
    #     rate = int(duration / (end - start) * 100 + 10)
    #     rate = '+' + str(rate) + '%'
    #     asyncio.run(TTS(text, output, rate))

    # Record the clip file together with its subtitle timing
    audio_temp = {"file": output, "start": start, "end": end}
    audio_segments.append(audio_temp)
    # time.sleep(0.5)

combined_audio = AudioSegment.silent(duration=0)
duration_all = 0  # running length of the combined track, in seconds

for segment in audio_segments:
    audio = AudioSegment.from_file(segment["file"])
    duration = len(audio) / 1000
    duration_all = duration + duration_all
    end = segment["end"]
    # If the track is still shorter than this subtitle's end time, pad it with silence
    if duration_all < end:
        audio = audio + AudioSegment.silent(duration=(end - duration_all) * 1000)
        duration_all = end
    combined_audio += audio

combined_audio_path = "./Res/" + video_name + '.wav'
combined_audio.export(combined_audio_path, format="wav")
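The timing logic in S2 can be studied without any audio files. The sketch below covers two pieces under stated assumptions: `padding_plan` mirrors the concatenation loop (silence is appended only when the running track is shorter than a subtitle's end time), and `speedup_rate` is one possible way to derive an edge_tts-style rate string when a clip overruns its slot. Both helper names are hypothetical, and `speedup_rate` assumes that clip length scales inversely with speaking speed, which real TTS output only approximates.

```python
import math


def padding_plan(clips):
    """For (clip_seconds, subtitle_end) pairs, return the seconds of silence
    appended after each clip, following the same rule as the S2 loop."""
    timeline = 0.0
    pads = []
    for duration, end in clips:
        timeline += duration
        pad = max(0.0, end - timeline)
        pads.append(pad)
        timeline = max(timeline, end)
    return pads


def speedup_rate(clip_seconds, start, end, base_percent=5):
    """Rate string that would roughly fit a clip, synthesised at
    +base_percent%, into the slot [start, end]."""
    slot = end - start
    if slot <= 0 or clip_seconds <= slot:
        return f"+{base_percent}%"
    # Scale the original speed by the overrun ratio, then round up
    needed = (1 + base_percent / 100) * (clip_seconds / slot)
    return f"+{math.ceil((needed - 1) * 100)}%"


# Made-up durations: the second clip overruns its slot, so it gets no padding
pads = padding_plan([(1.5, 2.0), (3.0, 4.0), (1.0, 7.0)])
print(pads)
print(speedup_rate(3.0, 0.0, 2.0))
```

Note that padding can only fill gaps: a clip that overruns pushes every later clip back until a long enough pause absorbs the delay, which is the situation a faster speaking rate is meant to avoid.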