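Overall steps for switching the 19C cluster public network from a single NIC to BOND2:

1 Use oifcfg to delete the old configuration and add the required one.

2 Stop the cluster.

3 Configure the bond and bring it up.

4 The VIP configuration has not been adjusted yet, so the VIP and the resources that depend on it fail to start. Adjust the VIP and network resources.

5 Restart the cluster.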
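
The five public-network steps can be sketched as a dry-run shell script. This is a minimal sketch, not the author's script. It makes the following assumptions:

- It runs as root from the Grid home's `bin` directory.
- It reuses this lab's (masked) values: `eno3`, `bond2` and the two public subnets.
- `crsctl stop cluster -all` / `crsctl start cluster -all` is the way to "stop the cluster".

The helper names `step` and `public_to_bond2_plan` are hypothetical. By default the script only prints the commands. `RUN=1` executes them and pauses while the bond is configured.

```bash
#!/usr/bin/env bash
# Dry-run sketch of the public-network move from eno3 to bond2 (values from this lab).
# RUN=1 executes the commands; any other value only prints them.
RUN=${RUN:-0}

step() {
  echo "+ $*"
  if [ "$RUN" = "1" ]; then "$@"; fi
}

public_to_bond2_plan() {
  local old_if=eno3 new_if=bond2
  local v4_subnet=10.369.224.0
  local v6_subnet=2109:8102:5B06:0120:0010:0000:0002:D000
  # 1. Re-register the public interface in the OCR (clusterware must be up).
  step ./oifcfg delif -global "$old_if"
  step ./oifcfg setif -global "$new_if/$v4_subnet:public"
  step ./oifcfg setif -global "$new_if/$v6_subnet:public"
  # 2. Stop the clusterware on all nodes (assumed command for "stop the cluster").
  step ./crsctl stop cluster -all
  # 3. Bond configuration is manual OS work on every node; pause only on a real run.
  if [ "$RUN" = "1" ]; then
    read -r -p "Configure and start bond2 on every node, then press Enter: " _
  fi
  # 4. Start the stack again and point network 1 at bond2 (VIPs are rebuilt separately).
  step ./crsctl start cluster -all
  step ./srvctl modify network -subnet "$v4_subnet/255.255.255.192/$new_if"
  # 5. Restart the cluster.
  step ./crsctl stop cluster -all
  step ./crsctl start cluster -all
}
```

Calling `public_to_bond2_plan` with the default `RUN=0` prints the eight commands in order. This is a cheap way to review the plan before touching a production cluster. The VIP rebuild is left out here because it is driven by per-node addresses.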

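Overall steps for switching the 19C cluster private network from a single NIC to BOND1:

1 Use oifcfg to delete the old configuration and add the required one.

2 Stop the database cluster.

3 Configure the bond and bring it up.

4 Start the database cluster.

5 Check the GIPC logs and NIC logs for errors.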
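
A similar dry-run sketch for the private side. It makes these assumptions:

- The private subnet is `10.2.0.0` and carries both `cluster_interconnect` and `asm`, as in this lab's `oifcfg getif` output.
- The article does not name the old private NIC, so `OLD_PRIV_IF` (default `old_priv_nic`) is a hypothetical placeholder.
- Stopping and starting use `crsctl stop|start cluster -all`.

Unlike the summary, the sketch registers bond1 before deleting the old entry. This ordering choice means the OCR never lacks an interconnect definition in between.

```bash
#!/usr/bin/env bash
# Dry-run sketch of moving the private interconnect (and ASM network) to bond1.
# OLD_PRIV_IF is a placeholder: the article does not name the old private NIC.
RUN=${RUN:-0}

step() {
  echo "+ $*"
  if [ "$RUN" = "1" ]; then "$@"; fi
}

private_to_bond1_plan() {
  local old_if=${OLD_PRIV_IF:-old_priv_nic} new_if=bond1 subnet=10.2.0.0
  # 1. Register bond1 for interconnect + ASM, then drop the old entry and review.
  step ./oifcfg setif -global "$new_if/$subnet:cluster_interconnect,asm"
  step ./oifcfg delif -global "$old_if/$subnet"
  step ./oifcfg getif
  # 2. Stop the clusterware on all nodes (assumed command).
  step ./crsctl stop cluster -all
  # 3. Configure and start bond1 on every node at the OS level (manual, not scripted).
  if [ "$RUN" = "1" ]; then
    read -r -p "Configure and start bond1 on every node, then press Enter: " _
  fi
  # 4. Start the clusterware again.
  step ./crsctl start cluster -all
  # 5. Afterwards, review the CSSD/GIPC traces for interconnect errors.
}
```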

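Lab summary

1 An Oracle 19C cluster has three kinds of network: the public network, the private interconnect, and the ASM network.

2 The cluster already runs the IPv4/IPv6 dual stack, so the IPv6 public IP and VIPs can be adjusted directly alongside the IPv4 ones.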

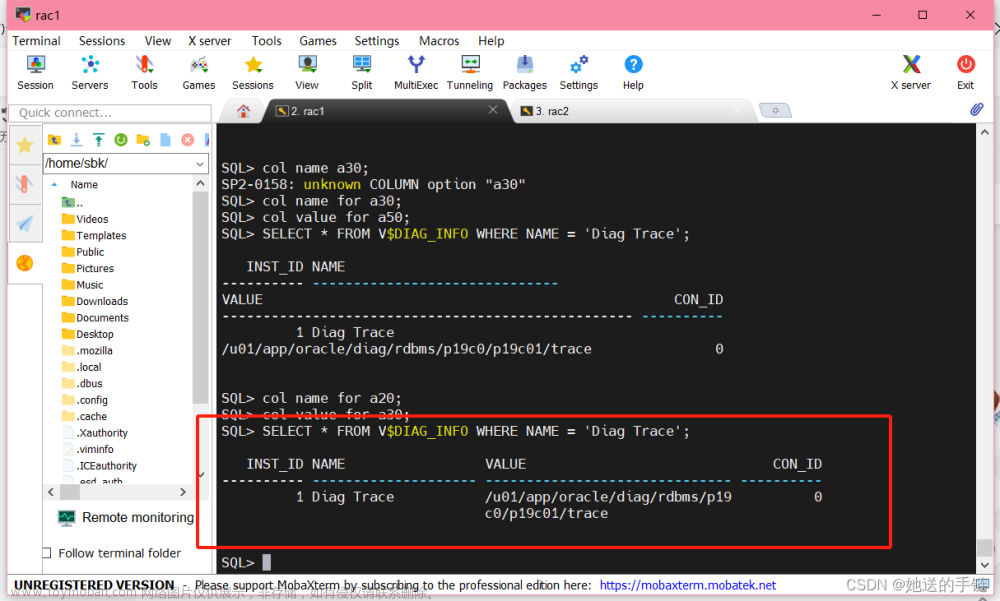

1 NETWORK: the public network, identified by its netnum

srvctl config network

srvctl config vip -n orcldb1

srvctl config vip -n orcldb2

2 Private interfaces: the ASM network and the private interconnect

oifcfg getif

3 asmnet: the ASM network usually shares the private interconnect.

1 Procedure

View the current configuration

[root@orcldb1 bin]# ./oifcfg getif (shows what each interface is used for)

eno3 10.369.224.0 global public

eno3 2109:8102:5B06:0120:0010:0000:0002:D000 global public

bond1 10.2.0.0 global cluster_interconnect,asm
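
Each `oifcfg getif` line has the form `interface subnet scope roles`. The roles field can hold several comma-separated values, such as `cluster_interconnect,asm` on bond1. Below is a minimal sketch that regroups the output by role. It assumes only that four-column layout, and `getif_by_role` is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Group `oifcfg getif` output (read on stdin) by role.
# Prints one "role interface subnet" line per role, sorted.
getif_by_role() {
  awk 'NF >= 4 {
         n = split($4, roles, ",")          # field 4: comma-separated roles
         for (i = 1; i <= n; i++) print roles[i], $1, $2
       }' | sort
}
```

Usage: `./oifcfg getif | getif_by_role`.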

Delete the current public interface entries

[root@orcldb1 bin]# ./oifcfg delif -global eno3

[root@orcldb1 bin]# ./oifcfg getif

bond1 10.2.0.0 global cluster_interconnect,asm

[root@orcldb1 bin]#


Configure the new interface entries

oifcfg setif -global bond2/10.369.224.0:public

oifcfg setif -global bond2/2109:8102:5B06:0120:0010:0000:0002:D000:public

[root@orcldb1 bin]# ./oifcfg getif

bond1 10.2.0.0 global cluster_interconnect,asm

bond2 10.369.224.0 global public

bond2 2109:8102:5B06:0120:0010:0000:0002:D000 global public --- this oifcfg output is the result we want.
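
To confirm the change mechanically rather than by eye, a small check can assert two things: the old interface is gone, and the new one is `public` for both an IPv4 and an IPv6 subnet. This sketch assumes the same four-column `getif` layout. Treating any subnet that contains `:` as IPv6 is a simplification, and `check_public_moved` is hypothetical.

```bash
#!/usr/bin/env bash
# Check `oifcfg getif` output (stdin): fail if OLD still appears, or if NEW is
# not registered as public for both an IPv4 and an IPv6 subnet.
check_public_moved() {
  local old=$1 new=$2
  awk -v oldif="$old" -v newif="$new" '
    $1 == oldif { stale++ }
    $1 == newif && $4 ~ /(^|,)public(,|$)/ {
      if ($2 ~ /:/) v6++; else v4++      # IPv6 subnets contain colons
    }
    END {
      if (stale)      { print "stale entries for " oldif; exit 1 }
      if (!v4 || !v6) { print newif " is missing an IPv4 or IPv6 public entry"; exit 1 }
      print "ok"
    }'
}
```

Usage: `./oifcfg getif | check_public_moved eno3 bond2` prints `ok` and returns 0 for the output above.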

[root@orcldb1 bin]#

Configure BOND2 and test the bond's high availability.
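
The article does not show the bond configuration itself. The `/proc/net/bonding/bond2` output reports `fault-tolerance (active-backup)` with a 100 ms MII polling interval. An ifcfg setup consistent with that might look like the following. This is a hypothetical sketch for RHEL/Oracle Linux network-scripts (ifcfg) style configuration. The helper name, the documentation-range addresses in the usage example and the absence of gateway lines are all assumptions. Review the generated files before copying them to `/etc/sysconfig/network-scripts`.

```bash
#!/usr/bin/env bash
# Hypothetical helper: write RHEL/OL-style ifcfg files for an active-backup bond.
# Usage: write_bond_ifcfg DIR BOND IPV4 PREFIX IPV6/PLEN SLAVE...
write_bond_ifcfg() {
  local dir=$1 bond=$2 ipaddr=$3 prefix=$4 ipv6=$5
  shift 5
  # Bond master: active-backup with 100 ms MII polling, matching /proc/net/bonding.
  cat > "$dir/ifcfg-$bond" <<EOF
DEVICE=$bond
TYPE=Bond
BONDING_MASTER=yes
BOOTPROTO=none
ONBOOT=yes
IPADDR=$ipaddr
PREFIX=$prefix
IPV6INIT=yes
IPV6ADDR=$ipv6
BONDING_OPTS="mode=active-backup miimon=100"
EOF
  # One file per slave NIC, enslaved to the bond.
  local slave
  for slave in "$@"; do
    cat > "$dir/ifcfg-$slave" <<EOF
DEVICE=$slave
TYPE=Ethernet
BOOTPROTO=none
ONBOOT=yes
MASTER=$bond
SLAVE=yes
EOF
  done
}
```

For example, `mkdir -p /tmp/bond2-cfg && write_bond_ifcfg /tmp/bond2-cfg bond2 192.0.2.5 26 2001:db8::5/116 eno3 eno4` writes three files. The `26` corresponds to the `255.255.255.192` netmask, and `116` is the IPv6 prefix length used in this lab.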

[root@orcldb1 ~]# more /proc/net/bonding/bond2

Ethernet Channel Bonding Driver: v3.7.1 (April 27, 2011)

Bonding Mode: fault-tolerance (active-backup)

Primary Slave: None

Currently Active Slave: eno4

MII Status: up

MII Polling Interval (ms): 100

Up Delay (ms): 0

Down Delay (ms): 0

Slave Interface: eno3

MII Status: up

Speed: 10000 Mbps

Duplex: full

Link Failure Count: 1

Permanent HW addr: 2c:97:b1:e5:a6:e7

Slave queue ID: 0

Slave Interface: eno4

MII Status: up

Speed: 10000 Mbps

Duplex: full

Link Failure Count: 0

Permanent HW addr: 2c:97:b1:e5:a6:e6

Slave queue ID: 0
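
Reading the bonding status by eye works for two slaves. A short awk filter can also pull out the fields that matter for an HA test. This sketch assumes the `Key: value` layout shown above, and `bond_summary` is a hypothetical name. On orcldb1, eno4 is active and eno3 shows a non-zero `Link Failure Count`, which is consistent with a failover having been exercised.

```bash
#!/usr/bin/env bash
# Summarise /proc/net/bonding/<bond> (text on stdin): mode, active slave,
# and per-slave MII status and link failure count.
bond_summary() {
  awk -F': ' '
    /^Bonding Mode/                      { print "mode=" $2 }
    /^Currently Active Slave/            { print "active=" $2 }
    /^Slave Interface/                   { slave = $2 }
    # The bond-level "MII Status" comes before any slave, so it is skipped.
    /^MII Status/ && slave != ""         { mii[slave] = $2 }
    /^Link Failure Count/ && slave != "" { print slave " mii=" mii[slave] " failures=" $2 }
  '
}
```

Usage: `bond_summary < /proc/net/bonding/bond2`.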

[root@orcldb1 ~]# ping 10.369.224.7

PING 10.369.224.7 (10.369.224.7) 56(84) bytes of data.

64 bytes from 10.369.224.7: icmp_seq=1 ttl=64 time=0.080 ms

64 bytes from 10.369.224.7: icmp_seq=2 ttl=64 time=0.040 ms

64 bytes from 10.369.224.7: icmp_seq=3 ttl=64 time=0.040 ms

^C

--- 10.369.224.7 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 1999ms

rtt min/avg/max/mdev = 0.040/0.053/0.080/0.019 ms

[root@orcldb1 ~]#


[root@orcldb2 bin]# cat /proc/net/bonding/bond2

Ethernet Channel Bonding Driver: v3.7.1 (April 27, 2011)

Bonding Mode: fault-tolerance (active-backup)

Primary Slave: None

Currently Active Slave: eno3

MII Status: up

MII Polling Interval (ms): 100

Up Delay (ms): 0

Down Delay (ms): 0

Slave Interface: eno3

MII Status: up

Speed: 10000 Mbps

Duplex: full

Link Failure Count: 0

Permanent HW addr: c4:b8:b4:2e:b7:0f

Slave queue ID: 0

Slave Interface: eno4

MII Status: up

Speed: 10000 Mbps

Duplex: full

Link Failure Count: 0

Permanent HW addr: c4:b8:b4:2e:b7:0e

Slave queue ID: 0

[root@orcldb2 bin]# ping 10.369.224.5

PING 10.369.224.5 (10.369.224.5) 56(84) bytes of data.

64 bytes from 10.369.224.5: icmp_seq=1 ttl=64 time=0.070 ms

64 bytes from 10.369.224.5: icmp_seq=2 ttl=64 time=0.043 ms

64 bytes from 10.369.224.5: icmp_seq=3 ttl=64 time=0.044 ms

64 bytes from 10.369.224.5: icmp_seq=4 ttl=64 time=0.037 ms

^C

--- 10.369.224.5 ping statistics ---

4 packets transmitted, 4 received, 0% packet loss, time 2999ms

rtt min/avg/max/mdev = 0.037/0.048/0.070/0.014 ms

[root@orcldb2 bin]#
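
During a failover test, the useful signal is the loss figure in ping's summary line. One way to test is to disconnect the active slave while pinging the other node. Below is a minimal sketch that fails unless that figure is 0%. It assumes the Linux iputils summary format shown above, and `ping_ok` is hypothetical.

```bash
#!/usr/bin/env bash
# Read ping output on stdin; succeed only if the summary reports 0% packet loss.
ping_ok() {
  awk '/packet loss/ {
         found = 1
         for (i = 1; i <= NF; i++)
           if ($i ~ /%$/) { loss = $i; sub(/%$/, "", loss) }   # "0%" -> "0"
       }
       END {
         if (!found) { print "no ping summary found"; exit 2 }
         print "loss=" loss "%"
         if (loss + 0 > 0) exit 1
       }'
}
```

Usage: `ping -c 20 10.369.224.5 | ping_ok`, run while the active slave is taken down (the address is this lab's masked value).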

Restart the cluster.

Modify the VIPs

This time the VIPs were changed by removing them first and then adding them back.

./srvctl remove vip -vip orcldb1-vip

Please confirm that you intend to remove the VIPs orcldb1-vip (y/[n]) y

./srvctl remove vip -vip orcldb2-vip

Please confirm that you intend to remove the VIPs orcldb2-vip (y/[n]) y

./srvctl config vip -n orcldb1

[root@orcldb1 bin]# ./srvctl add vip -node orcldb1 -netnum 1 -address 10.369.224.16/255.255.255.192/bond2

PRCN-3005 : Failed to add or use registered network 1 with network interfaces 'bond2' because the network was already registered for network interfaces 'eno3'

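Puzzling: where was eno3 still recorded?

Check the network resource:

./srvctl config network

It still referenced eno3, so reconfigure it:

./srvctl modify network -subnet 10.369.224.0/255.255.255.192/bond2 (changes the interface name bound to the network resource)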

[root@orcldb1 bin]# ./srvctl config network

Network 1 exists

Subnet IPv4: 10.369.224.0/255.255.255.192/bond2, static

Subnet IPv6: 2109:8102:5B06:120:10:0:2:d000/116/bond2, static

Ping Targets:

Network is enabled

Network is individually enabled on nodes:

Network is individually disabled on nodes:

[root@orcldb1 bin]#
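
The PRCN-3005 error shows that two places store interface information separately. One is the interface registration made with `oifcfg`. The other is the subnet/interface stored in the network resource (network number 1). Above, `oifcfg getif` already listed bond2 while `srvctl config network` still showed eno3. Below is a sketch that flags such leftovers before running `srvctl add vip`. It assumes the `Subnet IPvN: address/mask/interface, type` format shown above, and `network_iface_mismatches` is hypothetical.

```bash
#!/usr/bin/env bash
# Read `srvctl config network` output on stdin; print "IPvN: iface" for every
# subnet not bound to the expected interface, and return 1 if any were found.
network_iface_mismatches() {
  local want=$1
  awk -v want="$want" '
    /^Subnet IPv[46]:/ {
      spec = $3; sub(/,$/, "", spec)     # e.g. 10.369.224.0/255.255.255.192/eno3
      n = split(spec, parts, "/")
      if (parts[n] != want) { print $2 " " parts[n]; bad = 1 }
    }
    END { if (bad) exit 1 }'
}
```

Usage: `./srvctl config network | network_iface_mismatches bond2`. Before the fix, this prints one line for each family, both still on eno3.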

./srvctl add vip -node orcldb1 -netnum 1 -address 10.369.224.16/255.255.255.192/bond2

./srvctl add vip -node orcldb2 -netnum 1 -address 10.369.224.17/255.255.255.192/bond2

./srvctl modify vip -node orcldb1 -netnum 1 -address 2109:8102:5B06:0120:0010:0000:0002:D010/116/bond2

./srvctl modify vip -node orcldb2 -netnum 1 -address 2109:8102:5B06:0120:0010:0000:0002:D011/116/bond2
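
The per-node commands above can be driven from a small table. This is a dry-run sketch under these assumptions:

- Node names, the `<node>-vip` naming and the addresses are this lab's masked values.
- The network resource already points at bond2.
- Removal and re-adding happen node by node rather than removing both VIPs first.

`rebuild_vips` and `step` are hypothetical names. As in the lab, the IPv4 VIP is created with `srvctl add vip` and the IPv6 address is added with `srvctl modify vip`.

```bash
#!/usr/bin/env bash
# Dry-run sketch of the VIP rebuild, driven by a node/address table (lab values).
# RUN=1 executes; otherwise commands are only printed.
RUN=${RUN:-0}

step() {
  echo "+ $*"
  if [ "$RUN" = "1" ]; then "$@"; fi
}

rebuild_vips() {
  local iface=bond2 v4mask=255.255.255.192 v6plen=116
  local node v4 v6
  # The table is read on fd 3 so the srvctl confirmation prompt still reads the terminal.
  while read -r node v4 v6 <&3; do
    [ -z "$node" ] && continue
    step ./srvctl remove vip -vip "$node-vip"        # asks for y/[n] confirmation
    step ./srvctl add vip -node "$node" -netnum 1 -address "$v4/$v4mask/$iface"
    step ./srvctl modify vip -node "$node" -netnum 1 -address "$v6/$v6plen/$iface"
  done 3<<'EOF'
orcldb1 10.369.224.16 2109:8102:5B06:0120:0010:0000:0002:D010
orcldb2 10.369.224.17 2109:8102:5B06:0120:0010:0000:0002:D011
EOF
}
```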

The full session log:

[root@orcldb1 bin]# ./srvctl config network

Network 1 exists

Subnet IPv4: 10.369.224.0/255.255.255.192/eno3, static

Subnet IPv6: 2109:8102:5B06:120:10:0:2:d000/116/eno3, static

Ping Targets:

Network is enabled

Network is individually enabled on nodes:

Network is individually disabled on nodes:

[root@orcldb1 bin]# ./oifcfg getif

bond1 10.2.0.0 global cluster_interconnect,asm

bond2 10.369.224.0 global public

bond2 2109:8102:5B06:0120:0010:0000:0002:D000 global public

[root@orcldb1 bin]# ./srvctl modify network -subnet 10.369.224.0/255.255.255.192/bond2

[root@orcldb1 bin]# ./srvctl config network

Network 1 exists

Subnet IPv4: 10.369.224.0/255.255.255.192/bond2, static

Subnet IPv6: 2109:8102:5B06:120:10:0:2:d000/116/bond2, static

Ping Targets:

Network is enabled

Network is individually enabled on nodes:

Network is individually disabled on nodes:

[root@orcldb1 bin]# ./srvctl add vip -node orcldb1 -netnum 1 -address 10.369.224.16/255.255.255.192/bond2

[root@orcldb1 bin]# ./srvctl add vip -node orcldb2 -netnum 1 -address 10.369.224.17/255.255.255.192/bond2

[root@orcldb1 bin]# ./srvctl config vip -n orcldb1

VIP exists: network number 1, hosting node orcldb1

VIP IPv4 Address: 10.369.224.16

VIP IPv6 Address:

VIP is enabled.

VIP is individually enabled on nodes:

VIP is individually disabled on nodes:

[root@orcldb1 bin]# ./srvctl modify vip -node orcldb1 -netnum 1 -address 2109:8102:5B06:0120:0010:0000:0002:D010/116/bond2

[root@orcldb1 bin]# ./srvctl modify vip -node orcldb2 -netnum 1 -address 2109:8102:5B06:0120:0010:0000:0002:D011/116/bond2

[root@orcldb1 bin]#

[root@orcldb1 bin]# ./srvctl config vip -n orcldb1

VIP exists: network number 1, hosting node orcldb1

VIP IPv4 Address: 10.369.224.16

VIP IPv6 Address: 2109:8102:5B06:120:10:0:2:d010

VIP is enabled.

VIP is individually enabled on nodes:

VIP is individually disabled on nodes:

[root@orcldb1 bin]# ./srvctl config vip -n orcldb2

VIP exists: network number 1, hosting node orcldb2

VIP IPv4 Address: 10.369.224.17

VIP IPv6 Address: 2109:8102:5B06:120:10:0:2:d011

VIP is enabled.

VIP is individually enabled on nodes:

VIP is individually disabled on nodes:

[root@orcldb1 bin]#
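
The first `srvctl config vip` above shows an empty `VIP IPv6 Address`, because `srvctl add vip` was given only the IPv4 address. The IPv6 address arrived with `srvctl modify vip`. Below is a sketch of a check that both families are present. It assumes the output format shown, and `vip_is_dual_stack` is hypothetical. The value is taken from everything after the label, because IPv6 addresses themselves contain colons.

```bash
#!/usr/bin/env bash
# Read `srvctl config vip -n <node>` output on stdin; print both addresses and
# succeed only when an IPv4 and an IPv6 VIP address are both present.
vip_is_dual_stack() {
  awk '
    /^VIP IPv[46] Address:/ {
      val = $0
      sub(/^VIP IPv[46] Address: */, "", val)   # keep everything after the label
      if ($2 == "IPv4") v4 = val; else v6 = val
    }
    END {
      print "ipv4=" v4 " ipv6=" v6
      if (v4 == "" || v6 == "") exit 1
    }'
}
```

Usage: `for n in orcldb1 orcldb2; do ./srvctl config vip -n "$n" | vip_is_dual_stack || echo "$n: incomplete"; done`.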

Issue 1: after the cluster restart, the private network could not connect.

2023-06-30 11:59:28.999 :GIPCXCPT:3374573312: gipcConnectSyncF [clscrsconGipcConnect : clscrscon.c : 700]: EXCEPTION[ ret gipcretConnectionRefused (29) ] failed sync connect endp 0x7f05a0154ba0 [0000000000005c37] { gipcEndpoint : localAddr 'clsc://(ADDRESS=(PROTOCOL=ipc)(KEY=)(GIPCID=00000000-00000000-0))', remoteAddr 'clsc://(ADDRESS=(PROTOCOL=ipc)(KEY=CRSD_UI_SOCKET)(GIPCID=00000000-00000000-0))', numPend 0, numReady 0, numDone 0, numDead 1, numTransfer 0, objFlags 0x0, pidPeer 0, readyRef (nil), ready 1, wobj 0x7f05a015fc70, sendp 0x7f05a015fa90 status 13flags 0xa108071a, flags-2 0x0, usrFlags 0x0 }, addr 0x7f05a015e690 [0000000000005c3e] { gipcAddress : name 'clsc://(ADDRESS=(PROTOCOL=ipc)(KEY=CRSD_UI_SOCKET)(GIPCID=00000000-00000000-0))', objFlags 0x0, addrFlags 0x4 }, flags 0x0

2023-06-30 11:59:28.999 : CSSDGNS:3374573312: clssgnsCrsQuery: CRS is not ready. Cannot query GNS resource state.

2023-06-30 11:59:29.220 : CSSD:3366692608: [ INFO] clssscWaitOnEventValue: after CmInfo State val 3, eval 1 waited 1000 with cvtimewait status 4294967186

2023-06-30 11:59:29.469 : CSSD:2052392704: [ INFO] clssnmvDHBValidateNCopy: node 2, orcldb2, has a disk HB, but no network HB, DHB has rcfg 583591829, wrtcnt, 244854213, LATS 334474, lastSeqNo 244854210, uniqueness 1688097018, timestamp 1688097570/344904

2023-06-30 11:59:29.543 : CSSD:2047661824: [ INFO] clssnmvDHBValidateNCopy: node 2, orcldb2, has a disk HB, but no network HB, DHB has rcfg 583591829, wrtcnt, 244854214, LATS 334554, lastSeqNo 244854208, uniqueness 1688097018, timestamp 1688097570/344914

2023-06-30 11:59:29.984 : CSSD:3380881152: clsssc_CLSFAInit_CB: System not ready for CLSFA initialization

2023-06-30 11:59:30.220 : CSSD:3366692608: [ INFO] clssscWaitOnEventValue: after CmInfo State val 3, eval 1 waited 1000 with cvtimewait status 4294967186

2023-06-30 11:59:30.368 : CSSD:2036623104: [ INFO] clssnmRcfgMgrThread: Local Join

2023-06-30 11:59:30.368 : CSSD:2036623104: [ INFO] clssnmLocalJoinEvent: begin on node(1), waittime 193000

2023-06-30 11:59:30.368 : CSSD:2036623104: [ INFO] clssnmLocalJoinEvent: set curtime (335374) for my node

2023-06-30 11:59:30.368 : CSSD:2036623104: [ INFO] clssnmLocalJoinEvent: scanning 32 nodes

2023-06-30 11:59:30.368 : CSSD:2036623104: [ INFO] clssnmLocalJoinEvent: Node orcldb2, number 2, is in an existing cluster with disk state 3

2023-06-30 11:59:30.368 : CSSD:2036623104: [ WARNING] clssnmLocalJoinEvent: takeover aborted due to cluster member node found on disk

2023-06-30 11:59:30.469 : CSSD:2052392704: [ INFO] clssnmvDHBValidateNCopy: node 2, orcldb2, has a disk HB, but no network HB, DHB has rcfg 583591829, wrtcnt, 244854216, LATS 335474, lastSeqNo 244854213, uniqueness 1688097018, timestamp 1688097571/345904

2023-06-30 11:59:30.544 : CSSD:2042930944: [ INFO] clssnmvDHBValidateNCopy: node 2, orcldb2, has a disk HB, but no network HB, DHB has rcfg 583591829, wrtcnt, 244854217, LATS 335554, lastSeqNo 244854211, uniqueness 1688097018, timestamp 1688097571/345914

2023-06-30 11:59:30.984 : CSSD:3380881152: clsssc_CLSFAInit_CB: System not ready for CLSFA initialization

2023-06-30 11:59:31.220 : CSSD:3366692608: [ INFO] clssscWaitOnEventValue: after CmInfo State val 3, eval 1 waited 1000 with cvtimewait status 4294967186

2023-06-30 11:59:31.390 :GIPCHDEM:2068125440: gipchaDaemonCreateResolveResponse: creating resolveResponse for host:orcldb2, port:nm2_orcldb, haname:, ret:1

2023-06-30 11:59:31.390 :GIPCHTHR:2069702400: gipchaWorkerProcessClientResolveResponse: resolve from connect FAILED for host 'orcldb2', port 'nm2_orcldb' with ret:gipcretFail (1)

2023-06-30 11:59:31.390 :GIPCGMOD:2035046144: gipcmodGipcCompleteRequest: [gipc] completing req 0x7f05200436c0 [0000000000005942] { gipcConnectRequest : addr 'gipcha://orcldb2:nm2_orcldb', parentEndp 0x7f052003b8e0, ret gipcretFail (1), objFlags 0x0, reqFlags 0x2 }

2023-06-30 11:59:31.390 :GIPCGMOD:2035046144: gipcmodGipcCompleteConnect: [gipc] completed connect on endp 0x7f052003b8e0 [00000000000058de] { gipcEndpoint : localAddr 'gipcha://orcldb1:4-0000-0000-0000-0015', remoteAddr 'gipcha://orcldb2:nm2_orcldb', numPend 1, numReady 1, numDone 0, numDead 0, numTransfer 0, objFlags 0x0, pidPeer 0, readyRef 0x55e3f83e1a90, ready 0, wobj 0x7f0520045620, sendp (nil) status 13flags 0x200b8602, flags-2 0x10, usrFlags 0x0 }

2023-06-30 11:59:31.390 : GIPCTLS:2035046144: gipcmodTlsDisconnect: [tls] disconnect issued on endp 0x7f052003b8e0 [00000000000058de] { gipcEndpoint : localAddr 'gipcha://orcldb1:4-0000-0000-0000-0015', remoteAddr 'gipcha://orcldb2:nm2_orcldb', numPend 1, numReady 0, numDone 1, numDead 0, numTransfer 0, objFlags 0x0, pidPeer 0, readyRef 0x55e3f83e1a90, ready 1, wobj 0x7f0520045620, sendp (nil) status 13flags 0x200b8602, flags-2 0x10, usrFlags 0x0 }

2023-06-30 11:59:31.390 :GIPCGMOD:2035046144: gipcmodGipcDisconnect: [gipc] Issued endpoint close for endp 0x7f052003b8e0 [00000000000058de] { gipcEndpoint : localAddr 'gipcha://orcldb1:4-0000-0000-0000-0015', remoteAddr 'gipcha://orcldb2:nm2_orcldb', numPend 1, numReady 0, numDone 1, numDead 0, numTransfer 0, objFlags 0x0, pidPeer 0, readyRef 0x55e3f83e1a90, ready 1, wobj 0x7f0520045620, sendp (nil) status 13flags 0x200b8602, flags-2 0x10, usrFlags 0x0 }

2023-06-30 11:59:31.390 : CSSD:2035046144: [ INFO] clssscSelect: conn complete ctx 0x55e3f83e3b70 endp 0x58de

2023-06-30 11:59:31.390 : CSSD:2035046144: clsde_evtdata_init: mod [CSSD], comment [GIPC operation 2 failed for gipc-oid 22750 with ret gipcretFail(1)]

2023-06-30 11:59:31.390 : CSSD:2035046144: clsde_evtdata_init: evtdata [0x7f0520038a10], subtype [NETWORK], mod [CSSD], time [2023-06-30 11:59:31.390], loc [orcldb1], comment [GIPC operation 2 failed for gipc-oid 22750 with ret gipcretFail(1)]

2023-06-30 11:59:31.390 : CSSD:2035046144: [ INFO] clssnmeventhndlr: node(2), endp(0x58de) failed, probe(0x7f05000058de) ninf->endp (0x55e300000000) CONNCOMPLETE

2023-06-30 11:59:31.390 : CSSD:2035046144: [ INFO] clssnmDiscHelper: orcldb2, node(2) connection failed, endp (0x58de), probe(0x55e3000058de), ninf->endp 0x7f0500000000

2023-06-30 11:59:31.390 : CSSD:2035046144: [ INFO] clssscSelect: gipcwait returned with status gipcretPosted (17)

2023-06-30 11:59:31.390 : CSSD:3379304192: clsde_clsceevt_publish: Clusterwide event

2023-06-30 11:59:31.391 : GIPCTLS:2035046144: gipcmodTlsDisconnect: [tls] disconnect issued on endp 0x7f052003b8e0 [00000000000058de] { gipcEndpoint : localAddr 'gipcha://orcldb1:4-0000-0000-0000-0015', remoteAddr 'gipcha://orcldb2:nm2_orcldb', numPend 1, numReady 0, numDone 0, numDead 0, numTransfer 0, objFlags 0x0, pidPeer 0, readyRef 0x55e3f83c14b0, ready 1, wobj 0x7f0520045620, sendp (nil) status 13flags 0x260b860a, flags-2 0x10, usrFlags 0x0 }

2023-06-30 11:59:31.391 : CSSD:2035046144: [ INFO] clssnmDiscEndp: gipcDestroy 0x58de

2023-06-30 11:59:31.391 : CSSD:2035046144: [ INFO] clssscSelect: gipcwait returned with status gipcretPosted (17)

2023-06-30 11:59:31.391 : CSSD:3379304192: clsde_evtdata_destroy: pdata [0x7f0520038a10], subtype [NETWORK] mod [CSSD], time [2023-06-30 11:59:31.390], loc [orcldb1], comment [GIPC operation 2 failed for gipc-oid 22750 with ret gipcretFail(1)]

2023-06-30 11:59:31.470 : CSSD:2052392704: [ INFO] clssnmvDHBValidateNCopy: node 2, orcldb2, has a disk HB, but no network HB, DHB has rcfg 583591829, wrtcnt, 244854219, LATS 336474, lastSeqNo 244854216, uniqueness 1688097018, timestamp 1688097572/346904

2023-06-30 11:59:31.470 : CSSD:2035046144: [ INFO] clssscSelect: gipcwait returned with status gipcretPosted (17)

2023-06-30 11:59:31.474 :GIPCHDEM:2068125440: gipchaDaemonProcessClientReq: processing req 0x7f052004f9b0 type gipchaClientReqTypePublish (1)

2023-06-30 11:59:31.475 :GIPCGMOD:2069702400: gipcmodGipcCallbackEndpClosed: [gipc] Endpoint close for endp 0x7f052003b8e0 [00000000000058de] { gipcEndpoint : localAddr '(dying)', remoteAddr '(dying)', numPend 0, numReady 1, numDone 0, numDead 0, numTransfer 0, objFlags 0x2, pidPeer 0, readyRef 0x55e3f83c14b0, ready 1, wobj 0x7f0520045620, sendp (nil) status 13flags 0x2e0b860a, flags-2 0x10, usrFlags 0x0 }

2023-06-30 11:59:31.475 :GIPCHDEM:2068125440: gipchaDaemonProcessClientReq: processing req 0x7f05540a2820 type gipchaClientReqTypeDeleteName (12)

2023-06-30 11:59:31.475 :GIPCHTHR:2069702400: gipchaWorkerProcessClientConnect: starting resolve from connect for host:orcldb2, port:nm2_orcldb, cookie:0x7f052004f9b0

2023-06-30 11:59:31.475 : CSSD:2035046144: [ INFO] clssscConnect: endp 0x5cba - cookie 0x55e3f83e3b70 - addr gipcha://orcldb2:nm2_orcldb

2023-06-30 11:59:31.475 : CSSD:2035046144: [ INFO] clssnmRetryConnections: Probing node orcldb2 (2), probendp(0x7f0500005cba)

2023-06-30 11:59:31.475 :GIPCHDEM:2068125440: gipchaDaemonProcessClientReq: processing req 0x7f0554083600 type gipchaClientReqTypeResolve (4)

2023-06-30 11:59:31.544 : CSSD:2047661824: [ INFO] clssnmvDHBValidateNCopy: node 2, orcldb2, has a disk HB, but no network HB, DHB has rcfg 583591829, wrtcnt, 244854221, LATS 336554, lastSeqNo 244854214, uniqueness 1688097018, timestamp 1688097572/346914

2023-06-30 11:59:31.984 : CSSD:3380881152: clsssc_CLSFAInit_CB: System not ready for CLSFA initialization

^C

[grid@orcldb1 trace]$
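
In the trace above, node 1 keeps seeing node 2's disk heartbeat but no network heartbeat. The GIPC connect/resolve to `orcldb2` also fails with `gipcretFail`. Together these point at the interconnect rather than the voting disks. Below is a sketch that counts those two patterns so the trace can be rechecked after a fix. The patterns are copied from the log above. `scan_cssd_trace` is hypothetical, and the trace path in the usage line is the usual 19c location, stated as an assumption.

```bash
#!/usr/bin/env bash
# Count interconnect warning signs in a CSSD trace read on stdin:
#  - "has a disk HB, but no network HB": peer alive on the voting disk, not on the network
#  - "gipcretFail": failed GIPC connect/resolve to the peer
scan_cssd_trace() {
  awk '
    /has a disk HB, but no network HB/ { nohb++ }
    /gipcretFail/                      { gipcfail++ }
    END { printf "no_network_hb=%d gipc_fail=%d\n", nohb, gipcfail }'
}
```

Usage (path assumed): `scan_cssd_trace < "$ORACLE_BASE/diag/crs/$(hostname -s)/crs/trace/ocssd.trc"`.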

Node 1 returned to normal after its network interfaces were restarted. Article source: https://www.toymoban.com/news/detail-518802.html

crsctl stop has -f

crsctl start has

This concludes the article "Oracle 19.18 Cluster Network Management: Converting a Single NIC (IPv4/IPv6 Dual Stack) to Bond Mode in Practice".