Django Celery notes

dvadmin-celery

Django + Django-Celery + Celery integration in practice

https://cloud.tencent.com/developer/article/1445252

https://blog.csdn.net/wowocpp/article/details/131475484

https://docs.celeryq.dev/en/latest/django/first-steps-with-django.html

https://docs.celeryq.dev/en/latest/django/index.html

http://docs.celeryproject.org/en/latest/

https://github.com/pylixm/celery-examples

https://pylixm.cc/posts/2015-12-03-Django-celery.html

Chinese documentation

https://www.celerycn.io/ru-men/celery-chu-ci-shi-yong

Chinese documentation

https://docs.jinkan.org/docs/celery/

Building a simple CMDB with Python Django

https://blog.51cto.com/u_15352934/3819825

A simple but not simplistic illustrated Django tutorial for beginners

https://zhuanlan.zhihu.com/p/393724439

The awesome Celery

https://www.cnblogs.com/pyedu/p/12461819.html

example

https://github.com/celery/celery

Backends and Brokers

Brokers: message middleware that forwards messages

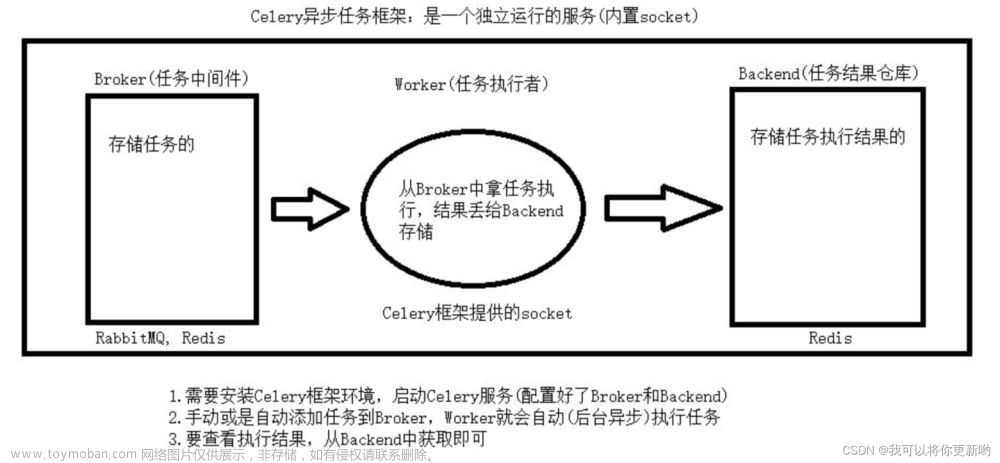

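Celery's architecture has three parts: a message broker, task execution units (workers), and a task result store.

Message broker

Celery does not provide a messaging service itself, but it integrates easily with third-party message brokers such as RabbitMQ and Redis.

Task execution unit

The worker is the unit Celery provides for executing tasks. Workers run concurrently on the nodes of a distributed system.

Task result store

The task result store holds the results of tasks executed by workers. Celery supports several ways of storing results, including AMQP and Redis.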
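The three parts can be imitated with the standard library alone. This is only a rough sketch, not how Celery is implemented: queue.Queue stands in for the broker, a thread for the worker, and a dict for the result store. The names TASKS, send_task and worker are made up for the illustration.

```python
import queue
import threading

broker = queue.Queue()                 # stands in for the message broker (e.g. Redis)
results = {}                           # stands in for the task result store
TASKS = {"add": lambda x, y: x + y}    # hypothetical registry of known tasks


def worker():
    """Consume messages from the broker until a None sentinel arrives."""
    while True:
        message = broker.get()
        if message is None:
            break
        task_id, name, args = message
        results[task_id] = TASKS[name](*args)   # run the task, store its result


def send_task(task_id, name, *args):
    """Producer side: publish a message and return without waiting."""
    broker.put((task_id, name, args))
    return task_id


t = threading.Thread(target=worker)
t.start()
task_id = send_task("task-1", "add", 2, 3)
broker.put(None)                       # tell the worker to stop
t.join()
print(results[task_id])                # 5
```

The producer never calls the task function itself. It only puts a message on the queue, which is the same separation that lets Celery workers run on other machines.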

Celery also supports several concurrency models and serialization options.

Concurrency: prefork, Eventlet, gevent, threads/single threaded

Serialization: pickle, json, yaml, msgpack, with zlib or bzip2 compression and cryptographic message signing.
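To get a feel for what the serializer choice means, here is a small standard-library sketch. The message dict is a simplified, made-up stand-in for a task message, not Celery's actual wire format.

```python
import json
import pickle
import zlib

# Hypothetical, simplified task message (not Celery's real protocol).
message = {"task": "tasks.add", "args": [2, 2], "kwargs": {}}

as_json = json.dumps(message).encode("utf-8")
as_pickle = pickle.dumps(message)

# Both serializers round-trip plain data.
assert json.loads(as_json) == message
assert pickle.loads(as_pickle) == message

# JSON only handles basic types; a set argument is rejected.
try:
    json.dumps({"args": [{1, 2}]})
    json_ok = True
except TypeError:
    json_ok = False

# pickle accepts arbitrary Python objects, but unpickling untrusted
# data can execute code, so it is only safe between trusted parties.
pickle_roundtrip = pickle.loads(pickle.dumps({"args": [{1, 2}]}))

# Compression is applied to the serialized bytes.
compressed = zlib.compress(as_json)
assert zlib.decompress(compressed) == as_json

print(json_ok, pickle_roundtrip)
```

With the json serializer, task arguments and return values have to be plain data (numbers, strings, lists, dicts).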

First example:

python3 -m venv demo1_venv

source demo1_venv/bin/activate

mkdir work

cd work/

pip install -U Celery

pip install redis

mkdir CeleryTask

cd CeleryTask

vi celery_task.py

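import time

import celery

# Redis database 1 stores task results; database 2 carries task messages.
backend = 'redis://127.0.0.1:6379/1'
broker = 'redis://127.0.0.1:6379/2'

cel = celery.Celery('test', backend=backend, broker=broker)

@cel.task
def send_email(name):
    print("Sending email to %s..." % name)
    time.sleep(5)  # simulate a slow operation
    print("Finished sending email to %s" % name)
    return "ok"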
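The backend and broker URLs differ only in the trailing number, which selects the Redis database. A quick way to see the parts, using only the standard library (the describe helper is a made-up name for this sketch):

```python
from urllib.parse import urlparse


def describe(url):
    """Split a redis:// URL into host, port and database number."""
    parts = urlparse(url)
    return {
        "scheme": parts.scheme,
        "host": parts.hostname,
        "port": parts.port,
        "db": int(parts.path.lstrip("/") or 0),  # no path means database 0
    }


backend_info = describe("redis://127.0.0.1:6379/1")
broker_info = describe("redis://127.0.0.1:6379/2")
print(backend_info["db"], broker_info["db"])  # 1 2
```

Keeping results and messages in different databases on the same Redis server makes it easier to inspect or flush one without touching the other.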

celery -A celery_task worker -l info

(demo1_venv) mike@ubuntu:~/work/CeleryTask$ celery -A celery_task worker -l info

-------------- celery@ubuntu v5.3.1 (emerald-rush)

--- ***** -----

-- ******* ---- Linux-5.15.0-76-generic-x86_64-with-glibc2.29 2023-07-03 02:40:28

- *** --- * ---

- ** ---------- [config]

- ** ---------- .> app: test:0x7f985fa979a0

- ** ---------- .> transport: redis://127.0.0.1:6379/2

- ** ---------- .> results: redis://127.0.0.1:6379/1

- *** --- * --- .> concurrency: 1 (prefork)

-- ******* ---- .> task events: OFF (enable -E to monitor tasks in this worker)

--- ***** -----

-------------- [queues]

.> celery exchange=celery(direct) key=celery

[tasks]

. celery_task.send_email

[2023-07-03 02:40:28,783: WARNING/MainProcess] /home/mike/demo1_venv/lib/python3.8/site-packages/celery/worker/consumer/consumer.py:498: CPendingDeprecationWarning: The broker_connection_retry configuration setting will no longer determine

whether broker connection retries are made during startup in Celery 6.0 and above.

If you wish to retain the existing behavior for retrying connections on startup,

you should set broker_connection_retry_on_startup to True.

warnings.warn(

[2023-07-03 02:40:28,806: INFO/MainProcess] Connected to redis://127.0.0.1:6379/2

[2023-07-03 02:40:28,808: WARNING/MainProcess] /home/mike/demo1_venv/lib/python3.8/site-packages/celery/worker/consumer/consumer.py:498: CPendingDeprecationWarning: The broker_connection_retry configuration setting will no longer determine

whether broker connection retries are made during startup in Celery 6.0 and above.

If you wish to retain the existing behavior for retrying connections on startup,

you should set broker_connection_retry_on_startup to True.

warnings.warn(

[2023-07-03 02:40:28,811: INFO/MainProcess] mingle: searching for neighbors

[2023-07-03 02:40:29,827: INFO/MainProcess] mingle: all alone

[2023-07-03 02:40:29,870: INFO/MainProcess] celery@ubuntu ready.

Second example

mkdir app

cd app

vi tasks.py

from celery import Celery

backend = 'redis://127.0.0.1:6379/3'
broker = 'redis://127.0.0.1:6379/4'

app = Celery('tasks', broker=broker, backend=backend)

@app.task
def add(x, y):
    return x + y

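Start the Celery worker for the example task (the consumer):

celery -A tasks worker --loglevel=info

The worker waits for a message to arrive in Redis and then executes the task.

Call the example task (the producer):

This sends a message to the message queue (Redis).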

Worker options can be listed with:

celery worker --help

Usage: celery worker [OPTIONS]

Start worker instance.

Examples

--------

$ celery --app=proj worker -l INFO

$ celery -A proj worker -l INFO -Q hipri,lopri

$ celery -A proj worker --concurrency=4

$ celery -A proj worker --concurrency=1000 -P eventlet

$ celery worker --autoscale=10,0

Worker Options:

-n, --hostname HOSTNAME Set custom hostname (e.g., 'w1@%%h').

Expands: %%h (hostname), %%n (name) and %%d,

(domain).

-D, --detach Start worker as a background process.

-S, --statedb PATH Path to the state database. The extension

'.db' may be appended to the filename.

-l, --loglevel [DEBUG|INFO|WARNING|ERROR|CRITICAL|FATAL]

Logging level.

-O, --optimization [default|fair]

Apply optimization profile.

--prefetch-multiplier <prefetch multiplier>

Set custom prefetch multiplier value for

this worker instance.

Pool Options:

-c, --concurrency <concurrency>

Number of child processes processing the

queue. The default is the number of CPUs

available on your system.

-P, --pool [prefork|eventlet|gevent|solo|processes|threads|custom]

Pool implementation.

-E, --task-events, --events Send task-related events that can be

captured by monitors like celery events,

celerymon, and others.

--time-limit FLOAT Enables a hard time limit (in seconds

int/float) for tasks.

--soft-time-limit FLOAT Enables a soft time limit (in seconds

int/float) for tasks.

--max-tasks-per-child INTEGER Maximum number of tasks a pool worker can

execute before it's terminated and replaced

by a new worker.

--max-memory-per-child INTEGER Maximum amount of resident memory, in KiB,

that may be consumed by a child process

before it will be replaced by a new one. If

a single task causes a child process to

exceed this limit, the task will be

completed and the child process will be

replaced afterwards. Default: no limit.

Queue Options:

--purge, --discard

-Q, --queues COMMA SEPARATED LIST

-X, --exclude-queues COMMA SEPARATED LIST

-I, --include COMMA SEPARATED LIST

Features:

--without-gossip

--without-mingle

--without-heartbeat

--heartbeat-interval INTEGER

--autoscale <MIN WORKERS>, <MAX WORKERS>

Embedded Beat Options:

-B, --beat

-s, --schedule-filename, --schedule TEXT

--scheduler TEXT

Daemonization Options:

-f, --logfile TEXT Log destination; defaults to stderr

--pidfile TEXT

--uid TEXT

--gid TEXT

--umask TEXT

--executable TEXT

Options:

--help Show this message and exit.

celery --help

Usage: celery [OPTIONS] COMMAND [ARGS]...

Celery command entrypoint.

Options:

-A, --app APPLICATION

-b, --broker TEXT

--result-backend TEXT

--loader TEXT

--config TEXT

--workdir PATH

-C, --no-color

-q, --quiet

--version

--skip-checks Skip Django core checks on startup.

--help Show this message and exit.

Commands:

amqp AMQP Administration Shell.

beat Start the beat periodic task scheduler.

call Call a task by name.

control Workers remote control.

events Event-stream utilities.

graph The ``celery graph`` command.

inspect Inspect the worker at runtime.

list Get info from broker.

logtool The ``celery logtool`` command.

migrate Migrate tasks from one broker to another.

multi Start multiple worker instances.

purge Erase all messages from all known task queues.

report Shows information useful to include in bug-reports.

result Print the return value for a given task id.

shell Start shell session with convenient access to celery symbols.

status Show list of workers that are online.

upgrade Perform upgrade between versions.

worker Start worker instance.

backend: the task result backend

Advanced Celery usage

https://www.celerycn.io/ru-men/celery-jin-jie-shi-yong

Create a proj directory under the work directory.

celery.py

from __future__ import absolute_import, unicode_literals

from celery import Celery

backend = 'redis://127.0.0.1:6379/5'
broker = 'redis://127.0.0.1:6379/6'

app = Celery('proj',
             broker=broker,
             backend=backend,
             include=['proj.tasks'])

# Optional configuration, see the application user guide.
app.conf.update(
    result_expires=3600,
)

if __name__ == '__main__':
    print("hello")
    app.start()

vi tasks.py

from __future__ import absolute_import, unicode_literals

from .celery import app

@app.task
def add(x, y):
    return x + y

@app.task
def mul(x, y):
    return x * y

@app.task
def xsum(numbers):
    return sum(numbers)

To test:

From the work directory, run:

celery -A proj worker -l info

Then, also from the work directory, run:

python3

>>> from proj.tasks import add

>>> add.delay(2,2)

<AsyncResult: 32a194e0-1e91-49bf-8436-b215beb96e16>
